A Compact High-Speed Image-Based Method for Measuring the Longitudinal Motion of Living Tissues

Abstract

1. Introduction

2. Materials and Methods

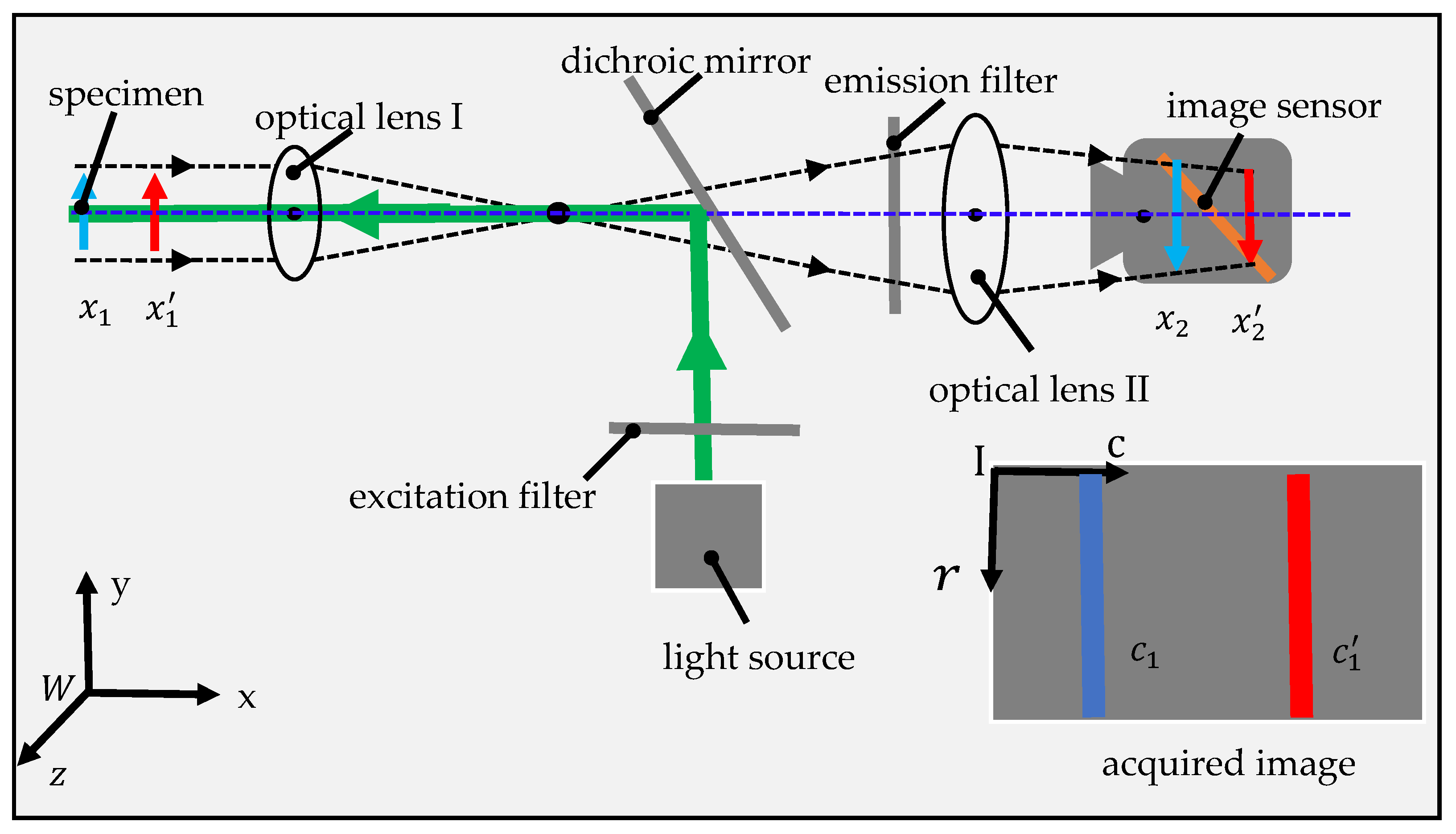

2.1. System Design and Implementation

2.2. Mathematical Model of Prototype

2.3. Image Preprocessing

2.4. Method for Estimating the Position of Stripe

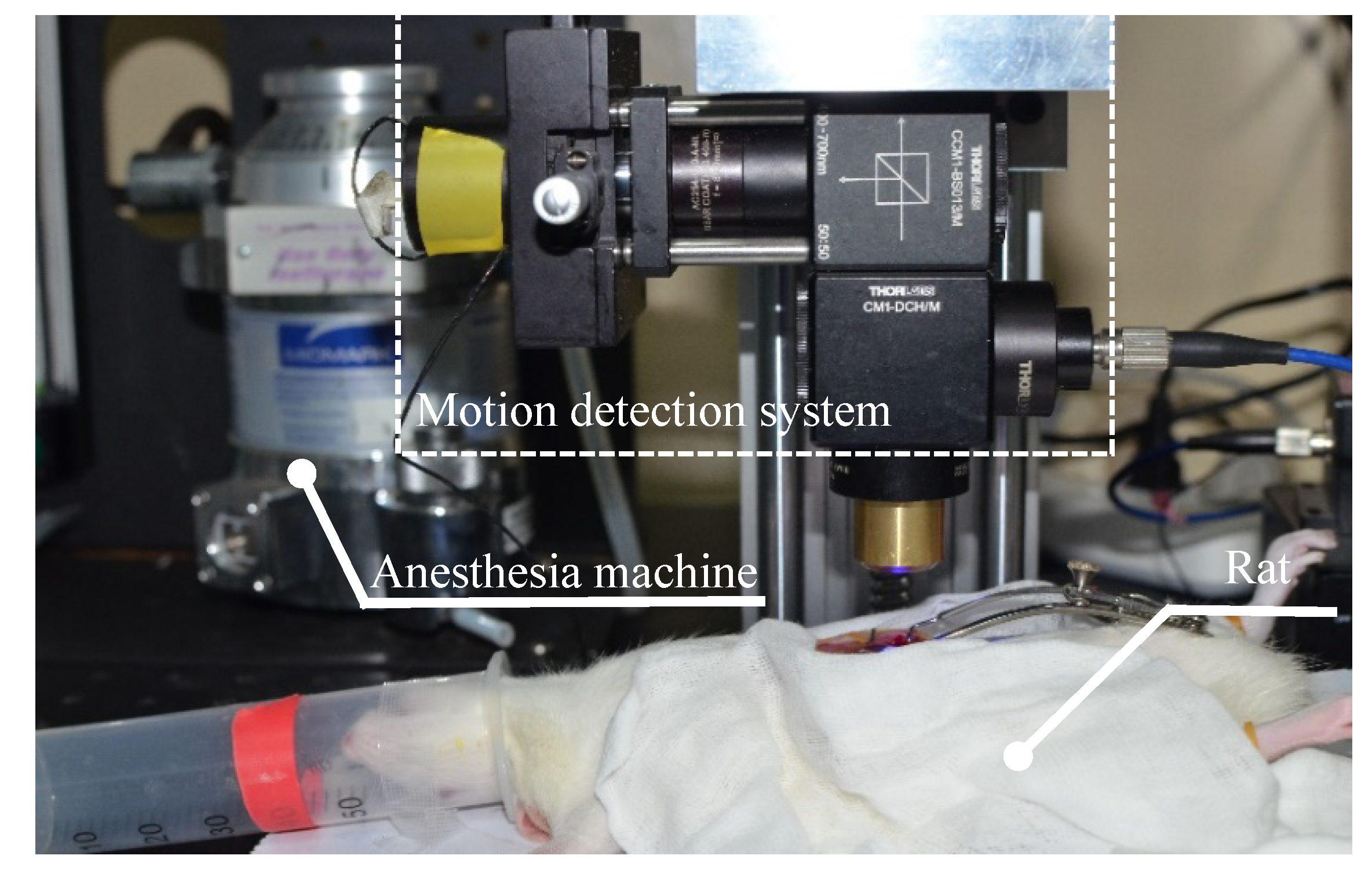

2.5. Image Acquisition and Processing

2.6. Functional Test Experiment

2.7. Calibration Experiment

2.8. Animal Experiment

2.9. Data Analysis and Processing

3. Results

3.1. Simulation

3.2. Functional Test

3.3. Calibration Experiment

3.4. Animal Experiment

4. Discussions

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, R.; Liao, H.; Ma, W.; Li, J.; Wang, S. A Compact High-Speed Image-Based Method for Measuring the Longitudinal Motion of Living Tissues. Sensors 2020, 20, 4573. https://doi.org/10.3390/s20164573
