Fusion of Environmental Sensing on PM2.5 and Deep Learning on Vehicle Detecting for Acquiring Roadside PM2.5 Concentration Increments

Abstract

1. Introduction

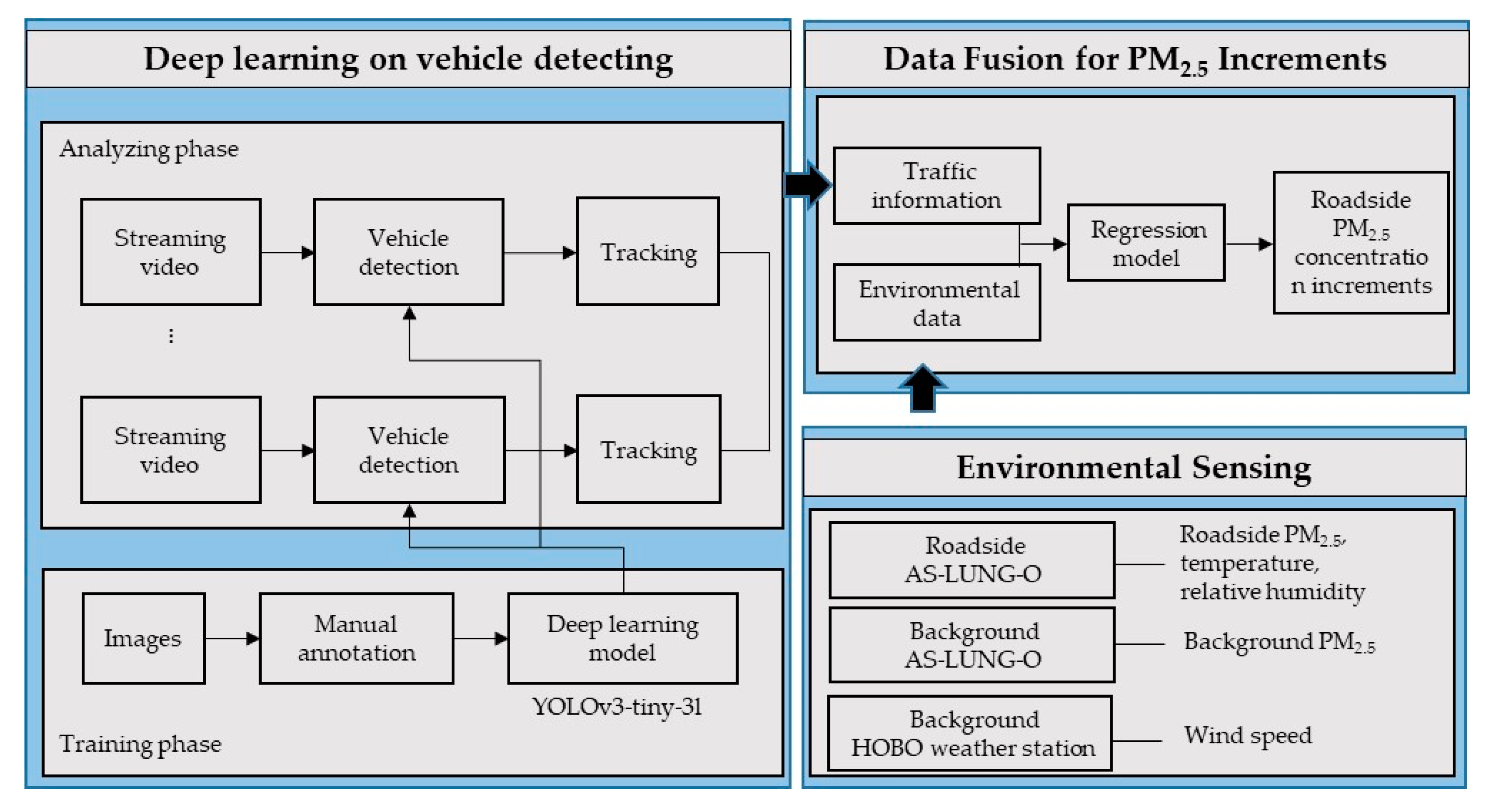

2. Materials and Methods

2.1. Vehicle Classification and Counting Method

2.2. Performance Evaluation of YOLOv3-Tiny-3l

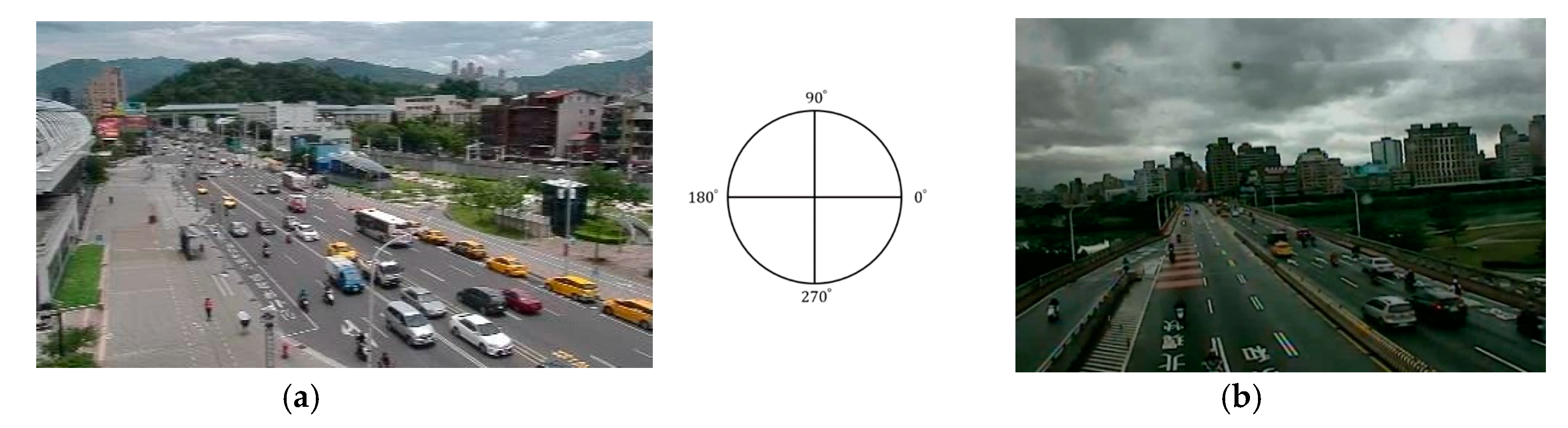

2.3. PM2.5 Sensing and Vehicle Counting in Fieldwork

2.4. Data Fusion for PM2.5 Concentration Increments

3. Results

3.1. Evaluation of Traffic Analysis System

3.2. PM2.5 Sensing and Vehicle Counting

3.3. Incremental PM2.5 Concentration Increase due to Vehicles

4. Discussion

4.1. Vehicle Classification/Counting System

4.2. Incremental Contribution of Vehicles to Roadside PM2.5 Levels

4.3. Limitation of This Work

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Detector | Category | Sedan | Motorcycle | Bus | Truck | Trailer | Precision | Recall |
|---|---|---|---|---|---|---|---|---|
| YOLOv3-tiny-3l (608 × 608) | True positive (TP) | 2233 | 1335 | 151 | 143 | 25 | 87% | 84% |
| | False positive (FP) | 259 | 236 | 14 | 47 | 4 | | |
| | False negative (FN) | 262 | 358 | 27 | 70 | 9 | | |
| YOLOv4 (416 × 416) | TP | 2332 | 1455 | 165 | 175 | 27 | 88% | 90% |
| | FP | 249 | 259 | 16 | 37 | 6 | | |
| | FN | 163 | 238 | 13 | 38 | 7 | | |
| YOLOv4 (512 × 512) | TP | 2337 | 1483 | 166 | 169 | 28 | 88% | 91% |
| | FP | 255 | 241 | 14 | 27 | 7 | | |
| | FN | 158 | 210 | 12 | 44 | 6 | | |
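The Precision and Recall columns can be checked directly from the counts. A minimal sketch, assuming the reported values are micro-averaged, i.e. TP, FP and FN are pooled over the five vehicle classes before computing precision = TP / (TP + FP) and recall = TP / (TP + FN). The dictionary layout and the helper name `micro_precision_recall` are illustrative, not taken from the paper.

```python
# Counts transcribed from the detector-comparison table.
# Per-class order: sedan, motorcycle, bus, truck, trailer.
COUNTS = {
    "YOLOv3-tiny-3l (608x608)": {
        "tp": [2233, 1335, 151, 143, 25],
        "fp": [259, 236, 14, 47, 4],
        "fn": [262, 358, 27, 70, 9],
    },
    "YOLOv4 (416x416)": {
        "tp": [2332, 1455, 165, 175, 27],
        "fp": [249, 259, 16, 37, 6],
        "fn": [163, 238, 13, 38, 7],
    },
    "YOLOv4 (512x512)": {
        "tp": [2337, 1483, 166, 169, 28],
        "fp": [255, 241, 14, 27, 7],
        "fn": [158, 210, 12, 44, 6],
    },
}


def micro_precision_recall(tp, fp, fn):
    """Pool counts over all classes (micro-average), then compute P and R."""
    total_tp, total_fp, total_fn = sum(tp), sum(fp), sum(fn)
    precision = total_tp / (total_tp + total_fp)
    recall = total_tp / (total_tp + total_fn)
    return precision, recall


for name, c in COUNTS.items():
    p, r = micro_precision_recall(c["tp"], c["fp"], c["fn"])
    # The '%' format multiplies by 100 and rounds to whole percent.
    print(f"{name}: precision={p:.0%}, recall={r:.0%}")
```

Pooling reproduces 87%/84%, 88%/90% and 88%/91% after rounding, so the table is consistent with micro-averaging; a per-class (macro) average could give different numbers. TP + FN also sums to 4613 labeled vehicles for every detector, as expected when all three detectors are scored against the same annotated ground truth.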

| Variable | Location A (n = 33,922): Mean | Location A: SD | Location B (n = 26,729): Mean | Location B: SD |
|---|---|---|---|---|
| Roadside PM2.5 (µg/m³) | 17.6 | 9.2 | 16.5 | 6.8 |
| Temperature (°C) | 28.5 | 5.1 | 28.1 | 4.52 |
| RH (%) | 71.9 | 12.2 | 59.1 | 11.0 |
| Wind speed (m/s) | 0.73 | 0.73 | 2.55 | 1.05 |
| Background PM2.5 (µg/m³) | 17.2 | 9.1 | 10.8 | 3.9 |
| Sedan_near | 10.4 | 8.5 | 45.4 | 31.4 |
| Motorcycle_near | NA | NA | 7.9 | 9.1 |
| Bus_near | 0.04 | 0.20 | 1.1 | 1.4 |
| Truck_near | 0.50 | 0.87 | 2.1 | 2.5 |
| Trailer_near | 0.00 | 0.00 | 0.00 | 0.03 |
| Speed_near (km/h) | 33.2 (n = 42,243) | 11.9 | 43.8 (n = 25,710) | 14.3 |
| Sedan_far | 10.2 | 8.01 | 43.2 | 27.6 |
| Motorcycle_far | NA | NA | 9.1 | 9.3 |
| Bus_far | 0.04 | 0.25 | 2.0 | 1.9 |
| Truck_far | 0.58 | 1.1 | 2.3 | 2.7 |
| Trailer_far | 0.00 | 0.01 | 0.00 | 0.03 |
| Speed_far (km/h) | 52.7 (n = 39,546) | 19.7 | 35.0 (n = 26,242) | 12.0 |
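As a first descriptive check, the gap between mean roadside and mean background PM2.5 can be read straight from the table. The sketch below is only a crude difference of period means, not the study's traffic attribution: it ignores meteorology and vehicle counts, which the regression table accounts for. The helper name `mean_difference` is illustrative.

```python
# Period means transcribed from the descriptive-statistics table (µg/m³).
MEANS = {
    "Location A": {"roadside": 17.6, "background": 17.2},
    "Location B": {"roadside": 16.5, "background": 10.8},
}


def mean_difference(roadside: float, background: float) -> float:
    """Roadside mean minus background mean; a descriptive contrast only."""
    return roadside - background


for site, m in MEANS.items():
    diff = mean_difference(m["roadside"], m["background"])
    print(f"{site}: roadside - background = {diff:.1f} µg/m³")
```

This prints a gap of 0.4 µg/m³ at Location A and 5.7 µg/m³ at Location B.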

| Variable | Location A: Coefficient | Location A: SE | Location B: Coefficient | Location B: SE |
|---|---|---|---|---|
| Intercept | 4.15 * | 0.096 | 2.54 * | 0.438 |
| Background PM2.5 (µg/m³) | 0.99 * | 0.001 | 1.16 * | 0.007 |
| Temperature (°C) | −0.13 * | 0.002 | −0.186 * | 0.009 |
| RH (%) | 0.0004 | 0.001 | 0.147 * | 0.004 |
| Wind speed (m/s) | 0.0095 | 0.01 | −0.60 * | 0.026 |
| Sedan (count) | 0.0027 * | 0.001 | 0.0050 * | 0.001 |
| Others (count) | NA | NA | −0.039 * | 0.002 |
| R² | 0.983 | | 0.612 | |
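The coefficient table can be read as a per-site linear model of roadside PM2.5. A minimal sketch, assuming an ordinary additive linear regression on exactly the covariates listed; this excerpt does not state the counting interval, nor whether "Sedan (count)" is the near-lane, far-lane or combined count, so the counts below are hypothetical inputs. Because the model is linear, the vehicle increment is simply coefficient × count: the background and meteorological terms cancel when predictions with and without vehicles are subtracted. The names `predict_roadside_pm25` and `vehicle_increment` are illustrative, not from the paper.

```python
# Coefficients transcribed from the regression table.
# Assumption: linear additive model; "Others" is NA at Location A and is
# treated as 0 here.
COEFS = {
    "A": {"intercept": 4.15, "background": 0.99, "temperature": -0.13,
          "rh": 0.0004, "wind_speed": 0.0095, "sedan": 0.0027, "others": 0.0},
    "B": {"intercept": 2.54, "background": 1.16, "temperature": -0.186,
          "rh": 0.147, "wind_speed": -0.60, "sedan": 0.0050, "others": -0.039},
}


def predict_roadside_pm25(site, background, temperature, rh, wind_speed,
                          sedan=0, others=0):
    """Predicted roadside PM2.5 (µg/m³) from the site's linear model."""
    c = COEFS[site]
    return (c["intercept"]
            + c["background"] * background
            + c["temperature"] * temperature
            + c["rh"] * rh
            + c["wind_speed"] * wind_speed
            + c["sedan"] * sedan
            + c["others"] * others)


def vehicle_increment(site, sedan, others=0):
    """Traffic terms only: prediction with vehicles minus without."""
    c = COEFS[site]
    return c["sedan"] * sedan + c["others"] * others


# Hypothetical input: 100 sedans counted in one (unspecified) interval.
for site in ("A", "B"):
    print(site, f"{vehicle_increment(site, sedan=100):.2f} µg/m³")
```

With 100 sedans this prints 0.27 µg/m³ for Location A and 0.50 µg/m³ for Location B. Note that the negative "Others" coefficient at Location B would lower the modeled increment if non-sedan counts were supplied.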

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, W.-C.V.; Lin, T.-H.; Liu, C.-H.; Su, C.-W.; Lung, S.-C.C. Fusion of Environmental Sensing on PM2.5 and Deep Learning on Vehicle Detecting for Acquiring Roadside PM2.5 Concentration Increments. Sensors 2020, 20, 4679. https://doi.org/10.3390/s20174679
