Recognition and Repetition Counting for Local Muscular Endurance Exercises in Exercise-Based Rehabilitation: A Comparative Study Using Artificial Intelligence Models

Abstract

1. Introduction

- To compare several traditional supervised machine learning (ML) algorithms with a deep convolutional neural network (CNN) based on the AlexNet architecture, and to identify the best-performing model for exercise recognition.

- To compare a traditional signal processing approach (peak detection) with a single deep CNN model based on the AlexNet architecture, and to identify the best-performing model for exercise repetition counting.

2. Materials and Methods

2.1. Data Acquisition (Sensors and Exercises)

2.1.1. Sensor Calibration

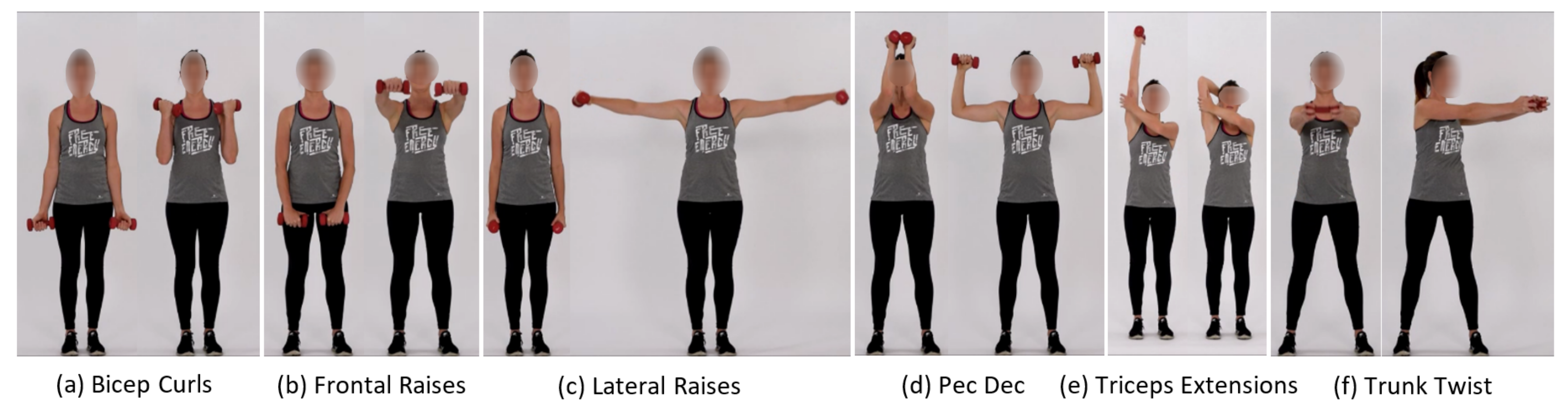

2.1.2. LME Exercise Set and Experimental Protocol

2.2. Data Collection for the Insight-LME Data Set

2.3. The Framework of Different Models

2.3.1. Exercise Recognition with Supervised ML Models

Data Segmentation

Feature Extraction

Feature Reduction Using PCA

Classifiers for Exercise Recognition

2.3.2. Exercise Recognition with a Deep CNN Using AlexNet Architecture

Data Segmentation and Processing

CNN_Model for the Exercise Recognition Task

2.3.3. Exercise Repetition Counting with Peak Detection Method

Data Processing and Filtering

Peak Detection and Repetition Counting

2.3.4. Exercise Repetition Counting with a Deep CNN Using AlexNet Architecture

Data Segmentation and Processing

CNN_Model as a Repetition Counter

3. Results

3.1. Results of Data Sampling

Summary of Data Sampling

3.2. Results for the Exercise Recognition Task

3.2.1. Experimental Results of Exercise Recognition with Supervised ML Models

3.2.2. Experimental Results of CNN_Model

Summary of Comparative Study of Models for the Exercise Recognition Task

3.3. Results for the Exercise Repetition Counting Task

3.3.1. Experimental Results of Repetition Counting Using Peak Detectors

3.3.2. Experimental Results of Repetition Counting Using CNN_Model

Summary of Comparative Study of Models for the Exercise Repetition Counting Task

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Representative Postures for the LME Exercises Used and MATLAB–GUI Module Used in the Data Capture Process

Appendix B. Representation of PCA Computation of Time-Frequency Feature Vectors

Appendix C. Receiver Operating Characteristic of the SVM Model (AUC-ROC Plot)

Appendix D. Model Architecture for CNN_Model

| Layer | Value | Parameters |
|---|---|---|
| Input Layer | 227 × 227 × 3 | 0 |
| Convolution Filters CL1 | 96 | 34,944 |
| Kernel Size CL1 | (11, 11) | - |
| Strides CL1 | (4, 4) | - |
| Pooling PL1 | (3, 3) | 0 |
| Strides PL1 | (2, 2) | - |
| Convolution Filters CL2 | 256 | 614,656 |
| Kernel Size CL2 | (5, 5) | - |
| Strides CL2 | (1, 1) | - |
| Pooling PL2 | (3, 3) | 0 |
| Strides PL2 | (2, 2) | - |
| Convolution Filters CL3 | 384 | 885,120 |
| Kernel Size CL3 | (3, 3) | - |
| Strides CL3 | (1, 1) | - |
| Convolution Filters CL4 | 384 | 1,327,488 |
| Kernel Size CL4 | (3, 3) | - |
| Strides CL4 | (1, 1) | - |
| Convolution Filters CL5 | 256 | 884,992 |
| Kernel Size CL5 | (3, 3) | - |
| Strides CL5 | (1, 1) | - |
| Pooling PL3 | (2, 2) | 0 |
| Strides PL3 | (2, 2) | - |
| Dense DL1 | 4096 | 4,198,400 |
| Dropout DL1 | 0.4 | 0 |
| Dense DL2 | 4096 | 16,781,312 |
| Dropout DL2 | 0.4 | 0 |
| Dense DL3 | 1000 | 4,097,000 |
| Dropout DL3 | 0.4 | 0 |
| Batch Normalization CL1, CL2, CL3, CL4, CL5, DL1, DL2, DL3 | Yes | 384 + 1024 + 1536 + 1536 + 1024 + 16,384 + 16,384 + 4000 = 42,272 |
| Activation function CL1, CL2, CL3, CL4, CL5, DL1, DL2, DL3 | ReLU | 0 |
| Dense output layer | 11 | 11,011 |
| Activation function (output layer) | SoftMax | 0 |
| Total Parameters | - | 28,877,195 |
| Trainable Parameters | - | 28,856,059 |
| Non-trainable Parameters | - | 21,136 |
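The parameter counts in the table can be recomputed from the layer shapes as a consistency check. The sketch below is plain Python arithmetic, not the authors' Keras code, and it rests on three assumptions: "valid" (no) padding in every convolution and pooling layer, which makes the flattened output of PL3 equal to 2 × 2 × 256 = 1024 values and reproduces the 4,198,400 parameters of DL1; batch normalization storing four values per channel (trainable gamma and beta, non-trainable moving mean and variance), which reproduces the 21,136 non-trainable parameters; and a final dense softmax layer with 11 units (the 10 exercises plus OTH), which accounts for the 11,011 parameters (1000 × 11 weights plus 11 biases) left over once the convolution, dense and batch-normalization rows are summed.

```python
# Recompute CNN_Model parameter counts from layer shapes.
# Assumptions (not stated in the table): 'valid' padding everywhere,
# 4 batch-norm values per channel (gamma/beta trainable, moving mean/var not),
# and an 11-unit softmax output layer after DL3.

def out_size(size, kernel, stride):
    """Spatial output size of a conv/pool layer with 'valid' padding."""
    return (size - kernel) // stride + 1

def conv_params(kernel, c_in, filters):
    """kernel*kernel*c_in weights per filter plus one bias per filter."""
    return kernel * kernel * c_in * filters + filters

def dense_params(n_in, n_out):
    return n_in * n_out + n_out

size, channels = 227, 3
trainable = non_trainable = 0

# (kernel, stride, filters, pool_kernel, pool_stride); None means no pooling
conv_stack = [
    (11, 4, 96, 3, 2),         # CL1 + PL1
    (5, 1, 256, 3, 2),         # CL2 + PL2
    (3, 1, 384, None, None),   # CL3
    (3, 1, 384, None, None),   # CL4
    (3, 1, 256, 2, 2),         # CL5 + PL3
]
for k, s, f, pk, ps in conv_stack:
    trainable += conv_params(k, channels, f) + 2 * f  # conv + BN gamma/beta
    non_trainable += 2 * f                            # BN moving mean/var
    size, channels = out_size(size, k, s), f
    if pk is not None:
        size = out_size(size, pk, ps)

flat_features = size * size * channels  # input width of DL1
n_in = flat_features
for units in (4096, 4096, 1000):        # DL1, DL2, DL3, each followed by BN
    trainable += dense_params(n_in, units) + 2 * units
    non_trainable += 2 * units
    n_in = units

trainable += dense_params(n_in, 11)     # assumed softmax output layer

print(flat_features, trainable, non_trainable, trainable + non_trainable)
# prints: 1024 28856059 21136 28877195
```

All three totals in the table are reproduced under these assumptions, which suggests the rows are internally consistent.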

Appendix E. 3D Accelerometer Raw Data Signal Plots for All Exercises

Appendix F. 3D Gyroscope Raw Data Signal Plots for All Exercises


| Number of Features | Feature Description (Accelerometer and Gyroscope) |
|---|---|
| 12 | Minimum and maximum of each axis |
| 12 | Mean and standard deviation of each axis |
| 6 | RMS value of each axis |
| 6 | Entropy of each axis |
| 6 | Energy of the FFT coefficients of each axis |
| 6 | Pearson correlation coefficients between pairs of axes |
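A minimal sketch of how the 48 features in the table could be computed for one window of 6-axis data (three accelerometer and three gyroscope axes), using only the Python standard library. The table does not define the entropy or the FFT energy precisely, so both are assumptions here: entropy is taken as the Shannon entropy of a 10-bin amplitude histogram, and FFT energy as the sum of squared DFT magnitudes excluding the DC term, divided by the window length (a common convention in accelerometer-based activity recognition). `extract_features` and its helpers are hypothetical names, the ordering of the 48 values is arbitrary, and the naive O(N²) DFT is only meant for short windows.

```python
import cmath
import math
from itertools import combinations

def _mean(v):
    return sum(v) / len(v)

def _std(v):
    m = _mean(v)
    return math.sqrt(sum((x - m) ** 2 for x in v) / len(v))  # population std

def _rms(v):
    return math.sqrt(sum(x * x for x in v) / len(v))

def _entropy(v, bins=10):
    # Assumption: Shannon entropy (bits) of an equal-width amplitude histogram.
    lo, hi = min(v), max(v)
    if hi == lo:
        return 0.0
    counts = [0] * bins
    for x in v:
        counts[min(int((x - lo) / (hi - lo) * bins), bins - 1)] += 1
    n = len(v)
    return -sum(c / n * math.log2(c / n) for c in counts if c)

def _fft_energy(v):
    # Assumption: sum of squared DFT magnitudes, DC term excluded, divided by N.
    n = len(v)
    energy = 0.0
    for k in range(1, n):
        coeff = sum(v[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        energy += abs(coeff) ** 2
    return energy / n

def _pearson(a, b):
    ma, mb = _mean(a), _mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return cov / den if den else 0.0  # constant axis: correlation undefined, use 0

def extract_features(acc, gyro):
    """acc, gyro: three equal-length axis signals (x, y, z) each, for one window."""
    axes = list(acc) + list(gyro)                         # 6 signals
    feats = [f(v) for v in axes for f in (min, max)]      # 12
    feats += [f(v) for v in axes for f in (_mean, _std)]  # 12
    feats += [_rms(v) for v in axes]                      # 6
    feats += [_entropy(v) for v in axes]                  # 6
    feats += [_fft_energy(v) for v in axes]               # 6
    for sensor in (acc, gyro):                            # 3 axis pairs x 2 sensors = 6
        feats += [_pearson(a, b) for a, b in combinations(sensor, 2)]
    return feats

# Synthetic 2 s window at an assumed 50 Hz (100 samples); not study data.
t = [n / 50 for n in range(100)]
acc = [[math.sin(2 * math.pi * x) for x in t],
       [math.cos(2 * math.pi * x) for x in t],
       [0.5 * x for x in t]]
gyro = [[2 * math.sin(math.pi * x) for x in t],
        [x * x for x in t],
        [1.0 for _ in t]]
features = extract_features(acc, gyro)
print(len(features))  # 48
```

Because the DC term is excluded, the FFT energy of a zero-mean axis equals the sum of its squared samples (Parseval's theorem), so for a sine sampled over whole periods it is closely tied to the RMS feature; other energy definitions would change that relationship.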

| Exercise Type | Exercise | Acronym | Sensor Used & Dominant Axis |
|---|---|---|---|
| Upper-Body LME Exercises | Bicep Curls | BC | Accelerometer: X-Axis |
| | Frontal Raises | FR | Accelerometer: X-Axis |
| | Lateral Raises | LR | Accelerometer: X-Axis |
| | Triceps Extension Right | TER | Accelerometer: X-Axis |
| | Pec Dec | PD | Gyroscope: X-Axis |
| | Trunk Twist | TT | Gyroscope: Y-Axis |
| Lower-Body LME Exercises | Standing Bicycle Crunch | SBC | Gyroscope: X-Axis |
| | Squats | SQ | Accelerometer: X-Axis |
| | Leg Lateral Raise | LLR | Accelerometer: Y-Axis |
| | Lunges | L | Accelerometer: X-Axis |
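The dominant axis listed for each exercise is the signal a peak-based repetition counter would operate on. The sketch below is a hypothetical stand-in, not the authors' implementation: it smooths the dominant-axis signal with a centred moving average (a simple substitute for the filtering stage), then counts local maxima that reach a height threshold and lie at least a minimum number of samples after the previously accepted peak. The names `count_repetitions`, `min_height` and `min_distance`, their values, and the 50 Hz sampling rate are assumptions. In practice, `scipy.signal.savgol_filter` and `scipy.signal.find_peaks` (with its `height` and `distance` arguments) are the usual library equivalents.

```python
import math

def moving_average(signal, width=5):
    """Centred moving average; the window shrinks at the signal edges."""
    half = width // 2
    smoothed = []
    for i in range(len(signal)):
        window = signal[max(0, i - half): i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed

def count_repetitions(signal, min_height, min_distance, smooth_width=5):
    """Count repetitions as peaks on the dominant axis: local maxima of the
    smoothed signal that reach min_height and lie at least min_distance
    samples after the previously accepted peak."""
    x = moving_average(signal, smooth_width)
    peaks = []
    for i in range(1, len(x) - 1):
        is_local_max = x[i] > x[i - 1] and x[i] >= x[i + 1]
        if is_local_max and x[i] >= min_height:
            if not peaks or i - peaks[-1] >= min_distance:
                peaks.append(i)
    return len(peaks), peaks

# Synthetic dominant-axis signal (not study data): 10 repetitions at 0.5 Hz,
# sampled at an assumed 50 Hz, with a small 8 Hz ripple standing in for noise.
fs = 50
signal = [math.sin(2 * math.pi * 0.5 * n / fs)
          + 0.05 * math.sin(2 * math.pi * 8 * n / fs) for n in range(20 * fs)]
reps, peak_idx = count_repetitions(signal, min_height=0.5, min_distance=fs)
print(reps)  # 10
```

The minimum-distance rule is what keeps ripple near the top of each movement from being counted as extra repetitions; the height threshold and distance would need tuning per exercise, since movement speed and amplitude differ between them.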

| Exercise Type | Exercise Acronym | Participants (Constrained Set) | Participants (Unconstrained Set) |
|---|---|---|---|
| Upper-Body LME Exercises | BC | 76 | 75 |
| | FR | 76 | 75 |
| | LR | 76 | 74 |
| | TER | 76 | 75 |
| | PD | 75 | 74 |
| | TT | 76 | 75 |
| Lower-Body LME Exercises | SBC | 75 | 74 |
| | SQ | 73 | 73 |
| | LLR | 75 | 74 |
| | L | 73 | 75 |
| Others | OTH | 76 | 75 |

| Window Length | Classifier | Training (without PCA) | Validation (without PCA) | Test (without PCA) | Training (with PCA) | Validation (with PCA) | Test (with PCA) |
|---|---|---|---|---|---|---|---|
| 1 s | SVM | 0.9735 | 0.8559 | Models Not Selected | 0.9674 | 0.8525 | Models Not Selected |
| | MLP | 0.9232 | 0.8190 | Models Not Selected | 0.9041 | 0.8041 | Models Not Selected |
| | kNN | 0.9390 | 0.8248 | Models Not Selected | 0.9307 | 0.8227 | Models Not Selected |
| | RF | 0.9925 | 0.8165 | Models Not Selected | 0.9898 | 0.8179 | Models Not Selected |
| 2 s | SVM | 0.9907 | 0.8906 | Models Not Selected | 0.9875 | 0.8816 | Models Not Selected |
| | MLP | 0.9690 | 0.8615 | Models Not Selected | 0.9568 | 0.8475 | Models Not Selected |
| | kNN | 0.9715 | 0.8571 | Models Not Selected | 0.9613 | 0.8520 | Models Not Selected |
| | RF | 0.9956 | 0.8607 | Models Not Selected | 0.9850 | 0.8439 | Models Not Selected |
| 4 s | SVM | 0.9974 | 0.9171 | 0.9607 | 0.9965 | 0.9089 | 0.9596 |
| | MLP | 0.9961 | 0.8709 | 0.9328 | 0.9939 | 0.8709 | 0.9347 |
| | kNN | 0.9944 | 0.8848 | 0.9415 | 0.9845 | 0.8828 | 0.9388 |
| | RF | 0.9995 | 0.8905 | 0.9467 | 0.9994 | 0.8670 | 0.9333 |
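The table compares 1 s, 2 s and 4 s windows. A minimal sketch of fixed-length windowing follows; the 50 Hz sampling rate and the 50% overlap are assumptions for illustration rather than values stated in this table, and `segment` is a hypothetical helper.

```python
def segment(samples, fs, window_s, overlap=0.5):
    """Split a sequence of per-sample readings into fixed-length windows.

    samples  : list of readings (e.g. one 6-axis tuple per sample)
    fs       : sampling rate in Hz (assumed)
    window_s : window length in seconds (1, 2 or 4 in the table)
    overlap  : fraction of each window shared with the next one (assumed)
    Incomplete windows at the end of the recording are dropped.
    """
    size = int(round(window_s * fs))
    step = max(1, int(round(size * (1 - overlap))))
    return [samples[start:start + size]
            for start in range(0, len(samples) - size + 1, step)]

# A 10 s recording at an assumed 50 Hz: longer windows yield fewer segments.
recording = list(range(500))
counts = {w: len(segment(recording, fs=50, window_s=w)) for w in (1, 2, 4)}
print(counts)  # {1: 19, 2: 9, 4: 4}
```

In the table, validation scores increase with window length for every classifier, both with and without PCA; the example shows the accompanying cost, since the same recording produces fewer (but longer) training segments.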

| Exercise Type | Exercise | Acronym | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Upper-Body LME Exercises | Bicep Curls | BC | 1 | 0.9970 | 0.9985 |
| | Frontal Raise | FR | 0.9142 | 0.9364 | 0.9252 |
| | Lateral Raise | LR | 0.9194 | 0.9333 | 0.9263 |
| | Triceps Extension | TER | 1 | 1 | 1 |
| | Pec Dec | PD | 0.9599 | 0.9424 | 0.9511 |
| | Trunk Twist | TT | 0.9910 | 0.9970 | 0.9940 |
| Lower-Body LME Exercises | Standing Bicycle Crunches | SBC | 0.9419 | 0.9333 | 0.9376 |
| | Squats | SQ | 0.9907 | 0.9727 | 0.9817 |
| | Leg Lateral Raise | LLR | 0.9760 | 0.9849 | 0.9804 |
| | Lunges | L | 0.9296 | 0.9606 | 0.9449 |
| Common Movements | Others | OTH | 0.9481 | 0.9139 | 0.9307 |
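Each F1-score is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). The short check below recomputes a few rows from the four-decimal values listed in the table above. Recomputing from rounded values can differ in the last digit (for Lunges it gives 0.9448 against the reported 0.9449), which is consistent with the reported scores having been computed before rounding.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (acronym, precision, recall, reported F1) copied from the table above
rows = [
    ("BC", 1.0, 0.9970, 0.9985),
    ("FR", 0.9142, 0.9364, 0.9252),
    ("LR", 0.9194, 0.9333, 0.9263),
    ("PD", 0.9599, 0.9424, 0.9511),
    ("OTH", 0.9481, 0.9139, 0.9307),
]
for name, p, r, reported in rows:
    print(f"{name}: recomputed {f1_score(p, r):.4f}, reported {reported:.4f}")
```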

| Exercise Type | Exercise | Acronym | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Upper-Body LME Exercises | Bicep Curls | BC | 1 | 1 | 1 |
| | Frontal Raise | FR | 0.9052 | 0.9552 | 0.9296 |
| | Lateral Raise | LR | 0.9273 | 0.9105 | 0.9188 |
| | Triceps Extension | TER | 0.9962 | 1 | 0.9981 |
| | Pec Dec | PD | 0.9850 | 0.9990 | 0.9920 |
| | Trunk Twist | TT | 0.9962 | 0.9990 | 0.9976 |
| Lower-Body LME Exercises | Standing Bicycle Crunches | SBC | 0.9921 | 0.9600 | 0.9758 |
| | Squats | SQ | 0.9814 | 0.9552 | 0.9681 |
| | Leg Lateral Raise | LLR | 0.9209 | 0.9867 | 0.9526 |
| | Lunges | L | 0.9748 | 0.9952 | 0.9849 |
| Common Movements | Others | OTH | 0.9868 | 0.8991 | 0.9409 |

| Exercise Type | Exercise | Acronym | Total Subjects | Error \|e\| = 0 | Error \|e\| = 1 | Error \|e\| = 2 | Error \|e\| > 2 |
|---|---|---|---|---|---|---|---|
| Upper-Body LME Exercises | Bicep Curls | BC | 151 | 144 | 7 | 0 | 0 |
| | Frontal Raises | FR | 151 | 140 | 11 | 0 | 0 |
| | Lateral Raises | LR | 150 | 141 | 9 | 0 | 0 |
| | Triceps Extension Right | TER | 152 | 143 | 9 | 0 | 0 |
| | Pec Dec | PD | 149 | 120 | 8 | 3 | 18 |
| | Trunk Twist | TT | 151 | 128 | 14 | 5 | 4 |
| Lower-Body LME Exercises | Standing Bicycle Crunch | SBC | 149 | 132 | 8 | 4 | 5 |
| | Squats | SQ | 146 | 63 | 11 | 6 | 66 |
| | Leg Lateral Raise | LLR | 149 | 73 | 10 | 18 | 48 |
| | Lunges | L | 147 | 11 | 9 | 13 | 114 |
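The error-count columns group subjects by the absolute difference |e| between the counted and the actual number of repetitions. A small sketch of that bookkeeping follows; `bucket_errors` is a hypothetical helper, and the predicted and actual counts in the first example are made up for illustration, not study data. The second part derives the share of exactly counted subjects from three rows of the table above.

```python
from collections import Counter

def bucket_errors(predicted, actual):
    """Number of subjects with |e| = 0, 1, 2 and > 2, where
    e = predicted - actual repetitions for each subject."""
    buckets = Counter()
    for p, a in zip(predicted, actual):
        e = abs(p - a)
        buckets[e if e <= 2 else ">2"] += 1
    return [buckets[0], buckets[1], buckets[2], buckets[">2"]]

# Made-up counts for five hypothetical subjects (not study data).
print(bucket_errors(predicted=[10, 9, 12, 10, 15], actual=[10] * 5))  # [2, 1, 1, 1]

# Share of subjects counted exactly (|e| = 0), from rows of the table above.
table = {"BC": (144, 7, 0, 0), "SQ": (63, 11, 6, 66), "L": (11, 9, 13, 114)}
exact_rate = {ex: row[0] / sum(row) for ex, row in table.items()}
print({ex: round(rate, 3) for ex, rate in exact_rate.items()})
```

On these rows the exact-count share falls from about 95% for Bicep Curls to about 7% for Lunges, which makes the spread in peak-detection performance across exercises easier to compare than raw counts.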

| Exercise Type | Exercise | Acronym | Total Subjects | Error \|e\| = 0 | Error \|e\| = 1 | Error \|e\| = 2 | Error \|e\| > 2 |
|---|---|---|---|---|---|---|---|
| Upper-Body LME Exercises | Bicep Curls | BC | 30 | 29 | 1 | 0 | 0 |
| | Frontal Raises | FR | 30 | 30 | 0 | 0 | 0 |
| | Lateral Raises | LR | 30 | 30 | 0 | 0 | 0 |
| | Triceps Extension Right | TER | 30 | 29 | 0 | 0 | 1 |
| | Pec Dec | PD | 30 | 29 | 0 | 0 | 1 |
| | Trunk Twist | TT | 30 | 19 | 5 | 3 | 3 |
| Lower-Body LME Exercises | Standing Bicycle Crunch | SBC | 30 | 18 | 9 | 1 | 2 |
| | Squats | SQ | 30 | 19 | 10 | 0 | 1 |
| | Leg Lateral Raise | LLR | 30 | 24 | 3 | 1 | 2 |
| | Lunges | L | 30 | 3 | 6 | 11 | 10 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Prabhu, G.; O’Connor, N.E.; Moran, K. Recognition and Repetition Counting for Local Muscular Endurance Exercises in Exercise-Based Rehabilitation: A Comparative Study Using Artificial Intelligence Models. Sensors 2020, 20, 4791. https://doi.org/10.3390/s20174791
