1. Introduction

Many people now spend a significant amount of time viewing electronic displays. With the development of computers and electronic display devices, such displays can be seen everywhere, and through continuous development they are becoming clearer and more realistic. However, as screen sizes and resolutions increase, so do the amount of visual information reaching the eyes and the amount of eye movement required, which contributes to the prevalence of eye fatigue. Digital eye fatigue, which accompanies computer vision syndrome, is associated with the use of computers (desktops, laptops, and tablets) and other electronic displays (smartphones and other electronic devices) and is experienced by over 40% of adults and over 80% of teens. Previous studies have shown that preschoolers spend up to 2.4 h a day watching electronic screens, an indication of how ubiquitous electronic display devices have become. Accordingly, eye fatigue problems are increasing and have become a central problem in the electronic display field [1].

When eye strain is mild, it can be resolved simply by closing the eyes for a period of time. However, when fatigue accumulates, users may experience pain around the eyes, blurred vision, headaches, dizziness, and other symptoms. The problem is that the accumulation of eye fatigue is difficult to notice while working with an electronic display. In addition, preschoolers may deliberately ignore fatigue in order to continue viewing. Therefore, it is important to relieve eye fatigue at the appropriate time, which highlights the need for objective and quantitative criteria for measuring eye fatigue [2]. To address these problems, several studies have attempted to measure eye fatigue.

In previous research, eye fatigue was measured through questionnaires [3,4,5]. However, because questionnaires rely on the subjective judgment of the user, their reliability is difficult to guarantee. Further studies have used biosignals to measure eye fatigue objectively [6,7,8,9]. Biosignal measurement methods such as galvanic skin response, photoplethysmography, and skin temperature are objective; however, their results may vary with the surrounding environment, humidity, temperature, and subject movement. In addition, the sensors must be attached to the body, which can make the subject uncomfortable. To solve these problems, some studies have measured eye fatigue based on images. In one such study, eye fatigue was measured from pupil size values obtained with a device commonly used in ophthalmology [10]. A subsequent study measured eye fatigue using the subject's pupil size and the time taken to read an article, recorded with an eye tracker [11]. However, these methods have key disadvantages: expensive equipment is required, and pupil size is influenced by various factors, such as the brightness of the surrounding environment. Another study measured the eye fatigue caused by watching a liquid crystal display (LCD) TV by measuring the pupil accommodation speed [12]. In that study, the pupil size difference between adjacent frames was used to calculate the pupil accommodation speed. At 30 frames per second, adjacent frames are only about 33 ms apart, and it is difficult to determine whether a difference occurring over such a short period is meaningful. In the present study, the points at which the pupil size switches between increasing and decreasing are designated as inflection points, and the rate of change between inflection points is calculated so that only meaningful pupil size differences are used in the analysis. One study that measured visual fatigue through eye behavior concluded that blinking frequency is an appropriate metric for visual fatigue, but it is premature to draw conclusions from a single metric [13]. Therefore, to establish an objective and reliable visual fatigue measurement scale, it is necessary to observe multiple features in various environments. In our study, the pupil region of the subject was extracted and multiple features of the eye were observed. These features are defined on the basis of accurate pupil region detection.

Among pupil region detection methods, those that detect dark and bright pupils are robust to changes in external light, but because they use two consecutive frames, they have difficulty detecting the pupil when there is rapid motion [14]. The method using the circular Hough transform is robust to noise, but its detection accuracy is poor when the subject rotates the head or the pupil is not a clean circle [15]. Circular edge detection methods based on an ellipse template or on differences in pixel values at the pupil boundary can detect the pupil region accurately, but their computational complexity is very high because templates of various circular sizes are applied to the entire image [16]. In a gaze tracking study in a visible light environment, the pupil region was detected by performing a logical AND operation on two binarized images [17]. This method is capable of real-time detection, but it is highly affected by external light. Finally, the method using binarization, boundary segmentation, and ellipse fitting can obtain optimal results quickly on high-resolution eye images, but its detection results vary depending on the location of the device [18]. To solve these problems, we adopt a deep learning method for pupil region detection that can operate in real time.

In recent research, studies have been conducted to quantitatively measure eye fatigue using an eye tracker that provides infrared eye images suitable for detecting the pupil region [19,20]. Our study is similar in that it uses infrared eye imaging, and this paper attempts to verify a more suitable quantitative metric for measuring visual fatigue.

Our study has three contributions. First, a method based on noise removal and inflection point detection was used to calculate the exact change in pupil size. Second, a deep learning-based pupil region detection method was used for accurate pupil size calculation. Third, in order to verify the proposed method, experiments were conducted in various environments (gamma conversion, color temperature conversion, and brightness conversion). In addition, the influence of three features (pupil accommodation speed, blink frequency, and eye-closed duration) on the objective and quantitative measurement of eye fatigue was experimentally verified.

The remainder of this paper is organized as follows. Section 2 presents the apparatus and methods for measuring eye fatigue, including the overall flow of the experiment and the eye imaging equipment; it also briefly describes the deep learning-based eye region detection method and discusses the eye fatigue measurement scale used in the experiment. Section 3 presents the accuracy of the eye region detection and the results of the eye fatigue measurements. Section 4 and Section 5 conclude the paper with the final analysis and interpretation of the experimental results.

2. Materials and Methods

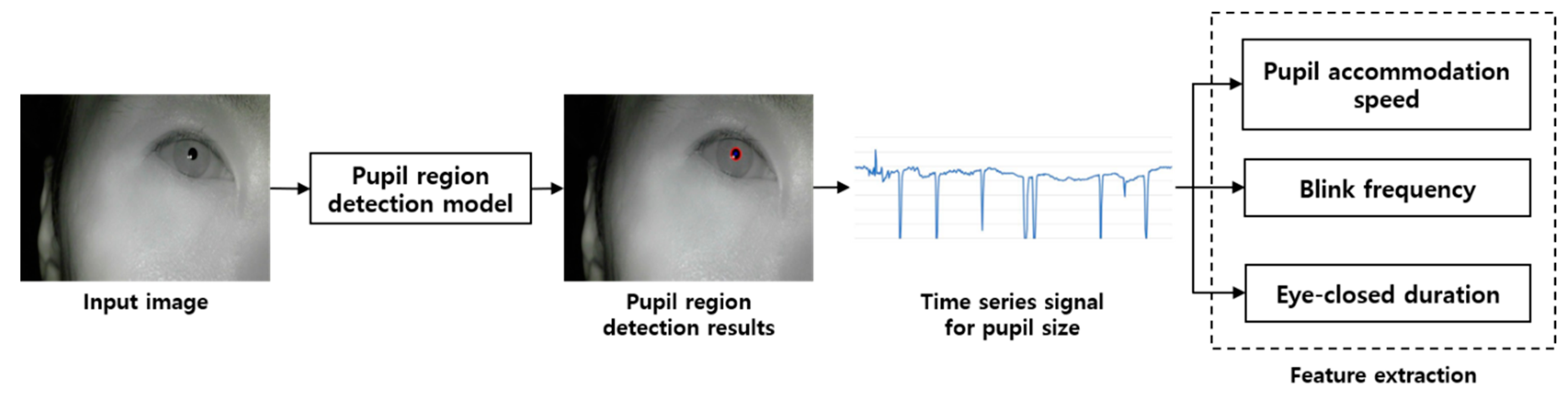

This section describes the devices used in the experiment and the pupil detection algorithm (Algorithm 1). The overall flow of the experiment is shown in Figure 1. First, the subject wore a head-mounted device equipped with an infrared camera module, and images of the eye were captured while the subject watched a video intended to induce visual stimulation. Next, convolutional neural network (CNN)-based semantic segmentation was performed, and labeling and size filtering were applied to its output to obtain the pupil size value. Based on the obtained pupil size values, the pupil accommodation speed, blink frequency, and eye-closed duration were calculated, and changes in these three features were analyzed over time.

2.1. Equipment Used in the Experiment

The equipment for measuring eye fatigue is shown in Figure 2. An infrared camera module was attached to the end of a support connected to the headband. The infrared camera was connected to a computer via universal serial bus (USB), and video was transmitted at 30 frames per second (fps). In addition, an infrared (IR) pass filter (visible light cutoff) was attached to the camera lens to pass IR light with wavelengths greater than 750 nm, and near-infrared illumination was used to illuminate the pupil area, which the filtered camera cannot capture under visible light. The IR illumination has a wavelength of 850 nm, which is invisible to the eye, so it does not dazzle the subject.

Figure 3 shows an example of the input image used in the experiment. Because the subject's right eye was captured by the head-mounted camera shown in Figure 2, the image capturing angle is not affected by facial rotation and translation. Next, we describe the method for separating the eye region from the rest of the input image.

2.2. Pupil Region Detection Method

Accurate pupil region detection is required to measure the pupil accommodation speed, blink frequency, and eye-closed duration. Several studies have detected pupils in facial images containing the eyes using filtering and image processing techniques. These existing methods perform well on images that are pre-cropped to the eye area, as shown in Figure 4. However, when the eye occupies a small area and the background is wide, as shown in Figure 3, their detection accuracy is poor. Another study located the pupil center point in an image of the entire face for gaze tracking; however, because it uses an RGB camera, minute changes in pupil size cannot be measured [21]. A new pupil region detection method is therefore required.

We use the pupil size to extract three features for eye fatigue measurement: the pupil accommodation speed, blink frequency, and eye-closed duration. If the pupil region is frequently segmented inaccurately, the errors strongly affect the feature extraction and analysis steps; thus, the pupil region must be detected accurately.

The flow chart of our pupil detection algorithm is shown in Figure 5. First, an image is input into the CNN-based semantic segmentation model to obtain pupil candidate pixels. For semantic segmentation, we use the DeepLab V3+ model published by Google [22]. A contour detection algorithm is then applied to the pixels predicted to belong to the pupil region, and adjacent pixels are grouped into candidate groups. Because candidate groups are sometimes generated outside the real pupil region, the incorrectly predicted groups must be removed. Since the pupil is almost circular, a candidate group is removed if its width-to-height aspect ratio does not exceed a preset threshold of 0.4. Second, the remaining candidate groups are filtered by their number of pixels, and groups that do not exceed the threshold are removed; in this study, the pixel-count threshold for the pupil region is set to 300 pixels. These thresholds were chosen experimentally to obtain the highest Intersection over Union (IoU) results, which are discussed in Section 3.2. As shown in Figure 3, the pupil size in the image does not differ significantly between subjects. Therefore, candidate groups with unusually large or small numbers of pixels are removed. Finally, if no candidate groups remain, the pupil size of the corresponding frame is set to 0; if candidate groups remain, the largest group is taken as the pupil region.
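The candidate filtering described above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: it assumes the segmentation output is already available as a binary mask (a list of rows of 0/1 values), uses 4-connected component labeling in place of the contour detection step, and returns the pixel count of the largest surviving group instead of a fitted ellipse area. The thresholds `CA_THR = 300` and `AS_THR = 0.4` come from the text and Algorithm 1; the function names `label_components` and `select_pupil` are hypothetical.

```python
from collections import deque
from typing import List, Sequence, Tuple

CA_THR = 300  # minimum pixel count of a pupil candidate (from the text)
AS_THR = 0.4  # minimum width/height ratio (from Algorithm 1)

Pixel = Tuple[int, int]


def label_components(mask: Sequence[Sequence[int]]) -> List[List[Pixel]]:
    """Group foreground pixels into 4-connected components (breadth-first search)."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    components: List[List[Pixel]] = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            pixels: List[Pixel] = []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(pixels)
    return components


def select_pupil(mask: Sequence[Sequence[int]], ca_thr: int = CA_THR, as_thr: float = AS_THR) -> int:
    """Pixel count of the largest candidate passing both filters, or 0 if none remain."""
    best = 0
    for pixels in label_components(mask):
        if len(pixels) <= ca_thr:  # too small to be the pupil
            continue
        ys = [p[0] for p in pixels]
        xs = [p[1] for p in pixels]
        w = max(xs) - min(xs) + 1
        h = max(ys) - min(ys) + 1
        if w / h <= as_thr:  # too elongated to be an almost-circular pupil
            continue
        best = max(best, len(pixels))
    return best


mask = [[0] * 40 for _ in range(40)]
for y in range(5, 25):   # 20 x 20 block: 400 pixels, ratio 1.0, kept
    for x in range(5, 25):
        mask[y][x] = 1
for y in range(0, 39):   # 8 x 39 strip: 312 pixels, ratio about 0.21, removed
    for x in range(30, 38):
        mask[y][x] = 1
for y in range(30, 40):  # 10 x 10 block: 100 pixels, removed
    for x in range(5, 15):
        mask[y][x] = 1
print(select_pupil(mask))  # → 400
```

Returning 0 when nothing survives mirrors the rule that a frame without a remaining candidate is assigned a pupil size of 0, which the later blink features rely on.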

| Algorithm 1: Proposed algorithm for pupil detection in a video frame. |

- Input: video frame F
- Output: area of the ellipse fitted to the pupil contour (0 if no pupil is detected)
- 1: Begin
- 2: CA_THR = 300 (minimum contour area in pixels)
- 3: AS_THR = 0.4 (minimum width-to-height ratio)
- 4: Input F to the CNN-based semantic segmentation model and output SF (segmented F)
- 5: Detect contours in SF
- 6: C_best = none
- 7: for each contour in contours do
- 8: Calculate the area value CA of the contour
- 9: if CA > CA_THR then
- 10: Calculate the width (W) and height (H) of the contour
- 11: if W / H > AS_THR and (C_best is none or CA is larger than the area of C_best) then C_best = contour
- 12: end if
- 13: end for
- 14: if C_best is none then return 0
- 15: C_EF = ellipse fitted to C_best
- 16: return the area of C_EF
- 17: End

After the algorithm in Figure 5 is applied, ellipse fitting is performed on the detected pupil area, and the area of the fitted ellipse is calculated and used for feature extraction. The calculated area value in each frame over time is plotted in Figure 6. The graph in Figure 6 was obtained from an eye image sequence captured for 10 s at 30 fps; the x-axis is the frame number and the y-axis is the pupil size. If a frame does not contain a pupil, its pupil size value is set to 0.
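Turning a fitted ellipse into the per-frame pupil size plotted in Figure 6 only requires the ellipse area formula. The sketch below assumes each frame's ellipse is given by its full axis lengths (width, height), which is how OpenCV's `cv2.fitEllipse` reports the size of the fitted rotated rectangle; the text does not say which library was used. Frames without a pupil are represented by `None` and mapped to 0, as stated above. The function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple


def ellipse_area(width: float, height: float) -> float:
    """Area of an ellipse from its full axis lengths: pi * (w / 2) * (h / 2)."""
    return math.pi * (width / 2.0) * (height / 2.0)


def pupil_size_series(fitted: Sequence[Optional[Tuple[float, float]]]) -> List[float]:
    """Map per-frame fitted axes to pupil sizes; frames with no pupil become 0."""
    return [0.0 if axes is None else ellipse_area(*axes) for axes in fitted]


# Three frames, the second one without a detected pupil (a blink).
sizes = pupil_size_series([(20.0, 18.0), None, (22.0, 20.0)])
print([round(s, 1) for s in sizes])  # → [282.7, 0.0, 345.6]
```

Dividing the full axes by two before multiplying is the detail most easily missed: using the full lengths directly would overstate the area by a factor of four.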

2.3. Eye Fatigue Measurement Scale

Next, we calculated the pupil accommodation speed from the pupil size data. In our previously published paper, the pupil accommodation speed was calculated using the size difference between adjacent frames [12]. However, because pupil contraction and expansion proceed over several seconds, a speed computed from the difference between adjacent frames is not appropriate. In addition, using a fixed interval may produce different results depending on changes in the surrounding environment. Thus, to calculate the pupil accommodation speed accurately, we designated the points at which the pupil switched between contraction and expansion as inflection points and calculated the pupil accommodation speed from these points.

Figure 7 displays the inflection points and the points where the pupil size is zero, taken from the graph in Figure 6. The size values change continuously, but not all changes are significant. Therefore, appropriate inflection points, such as those indicated by the red dots in Figure 7, must be found. To do so, all inflection points present in the data are first found; these are called inflection point candidates. An inflection point candidate is selected as an inflection point if two conditions are satisfied: (1) the sign of its difference from the previous inflection point differs from the sign of the previously calculated difference, and (2) the magnitude of its difference from the previous inflection point exceeds a threshold.
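The two selection conditions can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: inflection point candidates are taken to be frames where the frame-to-frame difference changes sign (strict local maxima and minima), the first candidate is accepted as the starting point, condition (1) is read as requiring the direction of change to reverse relative to the last accepted segment, and the threshold is a free parameter because the text does not state its value. Zero-valued (blink) frames are not treated specially here; the function names are hypothetical.

```python
from typing import List, Sequence


def inflection_candidates(sizes: Sequence[float]) -> List[int]:
    """Frames where the frame-to-frame difference changes sign (strict peaks and troughs)."""
    candidates = []
    for i in range(1, len(sizes) - 1):
        d_prev = sizes[i] - sizes[i - 1]
        d_next = sizes[i + 1] - sizes[i]
        if d_prev * d_next < 0:
            candidates.append(i)
    return candidates


def select_inflection_points(sizes: Sequence[float], threshold: float) -> List[int]:
    """Keep candidates that reverse direction (condition 1) by more than threshold (condition 2)."""
    candidates = inflection_candidates(sizes)
    if not candidates:
        return []
    selected = [candidates[0]]  # ASSUMPTION: the first candidate starts the sequence
    prev_sign = 0               # no direction established yet
    for i in candidates[1:]:
        diff = sizes[i] - sizes[selected[-1]]
        sign = (diff > 0) - (diff < 0)
        if sign != 0 and sign != prev_sign and abs(diff) > threshold:
            selected.append(i)
            prev_sign = sign
    return selected


sizes = [10, 12, 11, 11.5, 10.9, 15, 14, 8, 9]
print(inflection_candidates(sizes))          # → [1, 2, 3, 4, 5, 7]
print(select_inflection_points(sizes, 2.0))  # → [1, 5, 7]
```

In this example the small fluctuations at frames 2 to 4 never move more than 2 units away from frame 1, so they are rejected, while frame 5 (a rise of 3) and frame 7 (a fall of 7) reverse direction by more than the threshold and are kept.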

Three features are used to measure visual fatigue:

- Average pupil accommodation speed
- Blink frequency per unit time
- Average eye-closed duration per unit time

The pupil accommodation speed was calculated using Equation (1), where $N$ is the total number of inflection points minus one, $S_n$ and $S_{n+1}$ are the size values of the $n$th and $(n+1)$th inflection points, and $F_n$ and $F_{n+1}$ are the frame numbers of the $n$th and $(n+1)$th inflection points. Blink frequency is calculated as the number of times the pupil size value drops to zero, as shown by the yellow bars in Figure 7. In this example, the pupil size value dropped to zero five times in 10 s, so the eye blinked 0.5 times per second. The eye-closed duration is calculated from the number of frames in which the size value is zero; these frames are also marked by the yellow bars in Figure 7. For example, because the runs of zero-valued frames were 6, 1, 5, 8, and 6 frames long, the eyes were closed for 26 frames within 10 s. At 30 fps, this corresponds to approximately 0.87 s, so the average eye-closed time over the 10 s period is approximately 0.087 s per second.
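The three features can be computed from a per-frame pupil size series as sketched below. Because Equation (1) is not reproduced in this section, the accommodation speed function assumes one reading consistent with the definitions above: the mean over the $N$ segments of $|S_{n+1} - S_n| / (F_{n+1} - F_n)$, in size units per frame. The use of absolute differences is an assumption. Blinks are counted as runs of consecutive zero-valued frames, and the 30 fps frame rate comes from Section 2.1. The function names are hypothetical; the example rebuilds the worked numbers from the text.

```python
from typing import List, Sequence, Tuple

FPS = 30  # camera frame rate from Section 2.1


def accommodation_speed(sizes: Sequence[float], frames: Sequence[int]) -> float:
    """Mean of |S_{n+1} - S_n| / (F_{n+1} - F_n) over N = len(sizes) - 1 segments.

    ASSUMPTION: absolute size differences; the result is in size units per frame.
    """
    n_segments = len(sizes) - 1
    if n_segments < 1:
        return 0.0
    total = sum(
        abs(sizes[n + 1] - sizes[n]) / (frames[n + 1] - frames[n])
        for n in range(n_segments)
    )
    return total / n_segments


def zero_runs(series: Sequence[float]) -> List[int]:
    """Lengths of consecutive runs of zero pupil size (eye closed)."""
    runs: List[int] = []
    current = 0
    for value in series:
        if value == 0:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:  # the series ended while the eye was closed
        runs.append(current)
    return runs


def blink_features(series: Sequence[float], fps: int = FPS) -> Tuple[float, float, float]:
    """Return (blinks per second, total closed time in s, closed seconds per second)."""
    runs = zero_runs(series)
    duration_s = len(series) / fps
    closed_s = sum(runs) / fps
    return len(runs) / duration_s, closed_s, closed_s / duration_s


# Worked example: 10 s at 30 fps with closed runs of 6, 1, 5, 8, and 6 frames.
series: List[float] = []
for run in (6, 1, 5, 8, 6):
    series += [100.0] * 20 + [0.0] * run
series += [100.0] * (300 - len(series))
freq, closed, closed_per_s = blink_features(series)
print(freq, round(closed, 3), round(closed_per_s, 4))  # → 0.5 0.867 0.0867
```

Dividing by each segment's own frame span, rather than by a fixed interval, is what lets the speed reflect changes between inflection points instead of between adjacent frames.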

The extracted features were selected based on three hypotheses. First, the pupil accommodation speed decreases over time as the muscles that control the iris become fatigued. Second, the blink frequency increases over time as fatigue and dryness increase. Third, when tiredness or dryness is felt, the eyes remain closed for longer periods.

4. Discussion

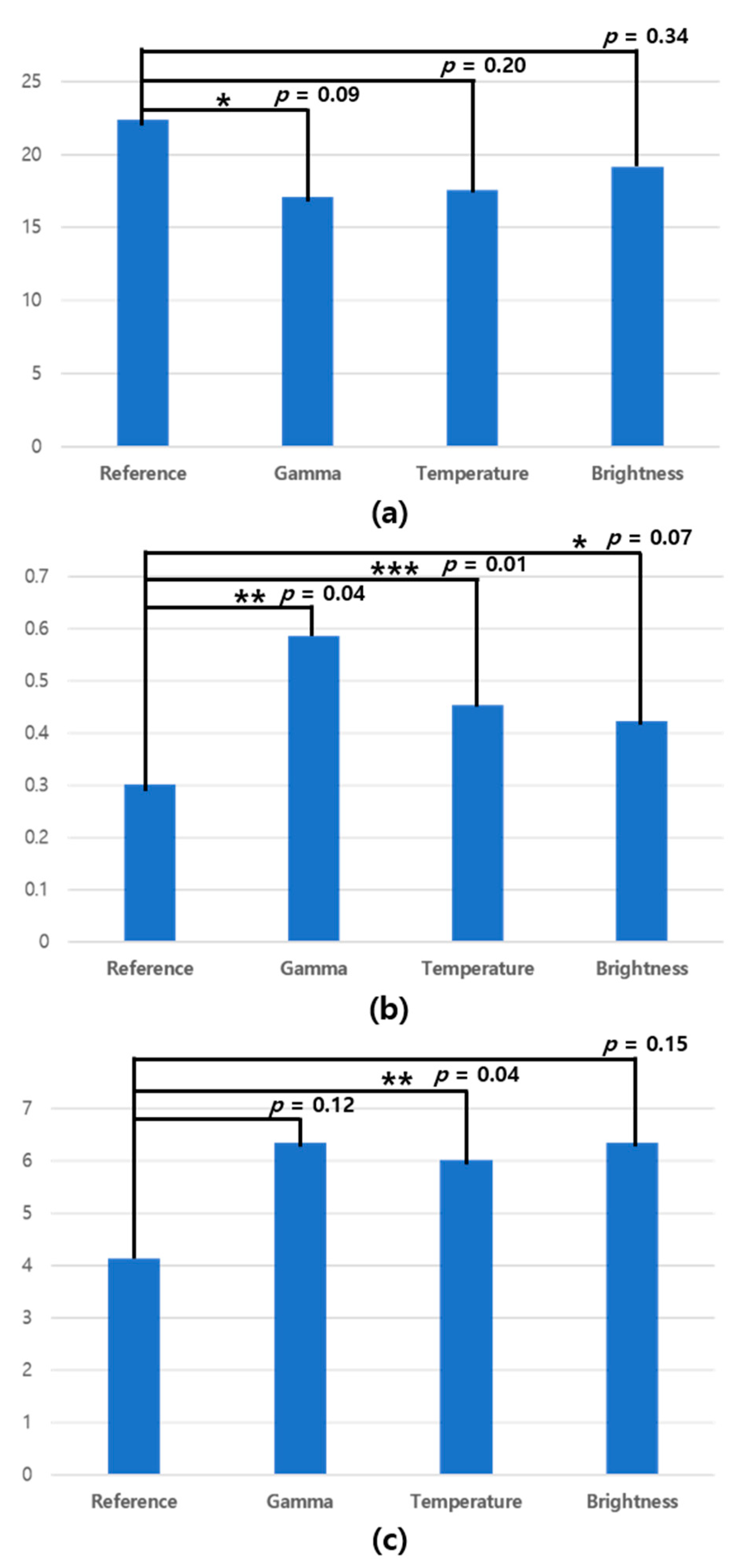

The results of our experiments confirmed that the pupil accommodation speed decreased in all environments. We also confirmed that, in the tablet viewing environment, the results for all three features matched our hypotheses. As shown in Figure 10b, the p-value for the blink frequency was less than 0.05 in the gamma and color temperature change environments. In addition, Figure 10c shows a significant difference in the eye-closed duration in the color temperature change environment, with a p-value less than 0.05.

Previous studies have shown that visual fatigue increases under different environmental conditions [12]. In this study, significant changes were identified in each of the modified environments (gamma, color temperature, and brightness). Previous research that examined how the resolution of a visual display terminal (VDT) affects visual fatigue used the pupil accommodation speed as a visual fatigue evaluation scale and confirmed a significant difference between two resolutions [23,24]. In the experimental environments of this study, the blink frequency increased with time. In previous research, the visual fatigue of motorists was measured by calculating the eye-closed duration; however, that research did not determine whether the eye-closed duration is suitable for measuring visual fatigue. To address this gap, our study experimentally verified that the eye-closed duration is suitable for measuring visual fatigue. It has also been argued that visual fatigue occurs when the pupil size changes continuously due to uneven stimulation [24]. In addition, studies have shown that pupil size is an appropriate metric for visual fatigue [25,26], and one study showed that the pupil accommodation speed decreases when visual fatigue is felt [12]. Based on previous studies and our experimental results, we conclude that the pupil accommodation speed, the blink frequency, and the eye-closed duration are all appropriate metrics for visual fatigue.