A Hybrid Approach for Turning Intention Prediction Based on Time Series Forecasting and Deep Learning

Abstract

1. Introduction

- The purpose of this research is to predict a vehicle's turning intention before the steering maneuver begins, and to propose a framework that combines time series prediction and deep learning methods for application in new-generation ADAS or future autonomous vehicles. The framework uses an online prediction algorithm to forecast the vehicle's kinematic variables so that the driving intention is reflected within the prediction window. It then uses a bidirectional long short-term memory (Bi-LSTM) network to model the vehicle kinematics that indicate steering behavior, achieving high recognition accuracy.

- Because the variables that represent driving behavior are time series data, a novel vehicle behavior prediction method is proposed that combines the autoregressive integrated moving average (ARIMA) model with an online gradient descent (OGD) optimizer. This method allows the driving intention to be predicted without reducing the recognition rate.

2. Framework for Turning Behavior Recognition

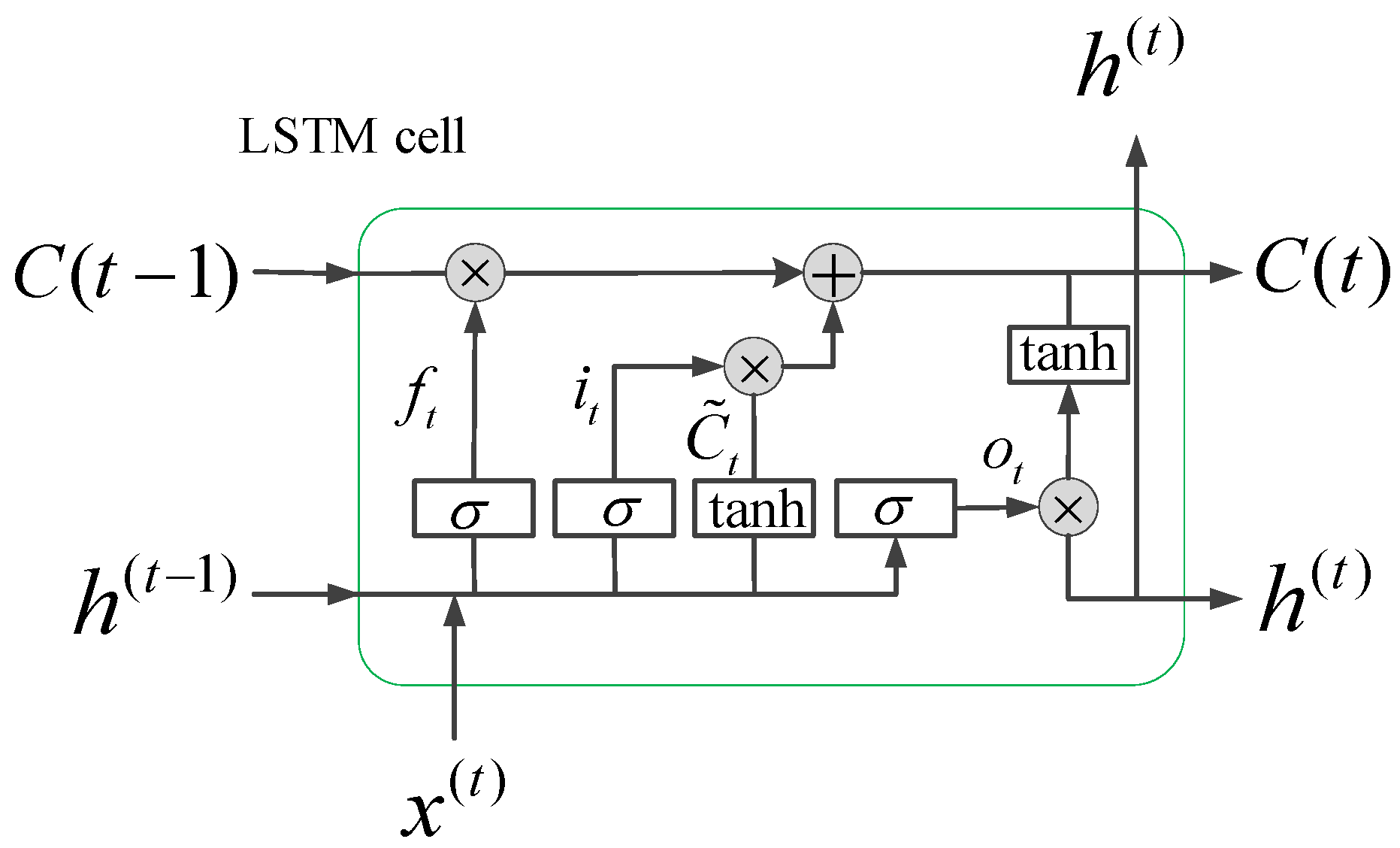

2.1. Bi-LSTM

2.2. Online ARIMA
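The introduction describes combining ARIMA with an online gradient descent (OGD) optimizer. The sketch below shows one way such an online update can work, assuming the ARIMA-OGD formulation of Liu, Hoi, Zhao, and Sun (2016). That formulation approximates ARIMA(k, d, q) by an autoregressive model of order k + m (here `p`) on the d-th differenced series and takes one projected gradient step on the squared error for each new observation. The class name `OnlineARIMAOGD`, the box bound `c`, the step size `lr / sqrt(t)`, and the example signal are illustrative assumptions, not the authors' implementation or settings.

```python
from collections import deque
from math import sqrt


def difference(values, order):
    """Apply the difference operator `order` times (order 0 returns a copy)."""
    out = list(values)
    for _ in range(order):
        out = [b - a for a, b in zip(out, out[1:])]
    return out


class OnlineARIMAOGD:
    """Hypothetical online ARIMA(p, d, 0) predictor trained by projected OGD.

    p plays the role of k + m lagged d-th differences; gamma holds their
    coefficients.
    """

    def __init__(self, p=5, d=1, lr=0.1, c=1.0):
        self.p, self.d, self.lr, self.c = p, d, lr, c
        self.gamma = [0.0] * p
        # p + d raw samples are enough to form p lagged d-th differences
        self.history = deque(maxlen=p + d)
        self.t = 0

    def ready(self):
        return len(self.history) == self.history.maxlen

    def predict(self):
        """One-step forecast of X_t from the stored samples up to X_{t-1}."""
        hist = list(self.history)
        lags = difference(hist, self.d)[::-1]  # newest first: ∇^d X_{t-1}, ...
        ar_part = sum(g * x for g, x in zip(self.gamma, lags))
        # undo the differencing: add sum_{i=0}^{d-1} ∇^i X_{t-1}
        integ = sum(difference(hist, i)[-1] for i in range(self.d))
        return ar_part + integ, lags

    def update(self, x_t):
        """Observe X_t, take one projected gradient step, then store X_t."""
        if self.ready():
            pred, lags = self.predict()
            err = x_t - pred
            self.t += 1
            eta = self.lr / sqrt(self.t)  # decaying step size (assumption)
            for i, lag in enumerate(lags):
                grad = -2.0 * err * lag  # d/d gamma_i of (x_t - pred) ** 2
                step = self.gamma[i] - eta * grad
                # project back onto the box |gamma_i| <= c (assumed bound)
                self.gamma[i] = max(-self.c, min(self.c, step))
        self.history.append(x_t)

    def forecast(self, steps):
        """Recursive multi-step forecast; coefficients are left unchanged."""
        saved = deque(self.history, maxlen=self.history.maxlen)
        out = []
        for _ in range(steps):
            pred, _ = self.predict()
            out.append(pred)
            self.history.append(pred)
        self.history = saved
        return out


if __name__ == "__main__":
    model = OnlineARIMAOGD(p=5, d=1, lr=0.1)
    for i in range(1000):
        model.update(0.5 * i)  # a steadily increasing signal
    print(model.forecast(3))   # close to [500.0, 500.5, 501.0]
```

With zero initial coefficients and d = 1, the first forecasts equal the last observed value (a persistence forecast). The coefficients then adapt as each new sample of a kinematic signal arrives. The `forecast` method feeds its own predictions back in recursively, which is one common way to fill a multi-step prediction window.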

3. Experimental Data

3.1. Data Description

3.2. Data Extraction

- Identify the IDs of vehicles that turn left (TL) or turn right (TR);

- Calculate each vehicle's heading angle from its trajectory;

- Search for the starting time ts at which the vehicle begins to turn, and mark it;

- Using ts as the reference, extract an 11 s segment from the time series of the entire turning process, consisting of the 10 s before ts and the 1 s after ts.
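Steps 2 to 4 of the extraction procedure above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure. The heading angle is taken as the direction of travel between consecutive trajectory points. The turn start ts is taken as the first sample whose heading departs from the initial heading by more than a hypothetical 5° threshold. The trajectories are assumed to be sampled at 10 Hz, so the 11 s segment spans 100 samples before ts and 10 samples from ts onward. Step 1 depends on the dataset's movement labels and is not shown. The function names are illustrative.

```python
import math


def heading_angles(xs, ys):
    """Heading (rad) of each segment between consecutive trajectory points."""
    return [math.atan2(y1 - y0, x1 - x0)
            for x0, y0, x1, y1 in zip(xs, ys, xs[1:], ys[1:])]


def wrap(angle):
    """Wrap an angle difference into [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def turn_start(headings, threshold_deg=5.0):
    """Index of the first heading that deviates from the initial heading by
    more than `threshold_deg` (a hypothetical value), or None if none does."""
    ref = headings[0]
    limit = math.radians(threshold_deg)
    for i, h in enumerate(headings):
        if abs(wrap(h - ref)) > limit:
            return i
    return None


def extract_window(series, ts, hz=10, before_s=10, after_s=1):
    """Samples from 10 s before ts up to 1 s after ts (11 s in total), or None
    if the record is too short on either side. hz=10 is an assumed rate."""
    start, end = ts - before_s * hz, ts + after_s * hz
    if start < 0 or end > len(series):
        return None
    return series[start:end]


if __name__ == "__main__":
    # Synthetic trajectory: 15 s straight along x, then a left turn of 0.1 rad per sample
    xs, ys = [float(k) for k in range(150)], [0.0] * 150
    theta = 0.0
    for _ in range(30):
        theta += 0.1
        xs.append(xs[-1] + math.cos(theta))
        ys.append(ys[-1] + math.sin(theta))
    ts = turn_start(heading_angles(xs, ys))
    print(ts, len(extract_window(list(range(len(xs))), ts)))  # 149 110
```

On measured trajectories, the positions would normally be smoothed before differencing, because atan2 of small, noisy displacements is unstable; that step is omitted here.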

3.3. Input and Output Variable

3.4. Data Analysis

3.5. Training and Test Procedure

3.5.1. Evaluation Index for the Online Prediction Algorithm
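The prediction-accuracy table for the online prediction algorithm reports RMSE and MAPE for each kinematic variable. The sketch below implements the conventional definitions: RMSE = sqrt((1/n) Σ (y_i - ŷ_i)²), in the units of the variable, and MAPE = (100/n) Σ |(y_i - ŷ_i) / y_i|, in percent. The section does not say how zero-valued targets are handled (acceleration, for instance, can be exactly zero), so skipping them in MAPE is an assumption of this sketch.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error, in the same units as the variable."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error (%); pairs with actual == 0 are skipped
    (an assumption, since MAPE is undefined there)."""
    if len(actual) != len(predicted):
        raise ValueError("inputs must be of equal length")
    terms = [abs((a - p) / a) for a, p in zip(actual, predicted) if a != 0]
    if not terms:
        raise ValueError("MAPE is undefined when every actual value is zero")
    return 100.0 * sum(terms) / len(terms)


if __name__ == "__main__":
    y_true = [10.0, 12.0, 15.0]
    y_pred = [10.5, 11.5, 15.0]
    print(rmse(y_true, y_pred), mape(y_true, y_pred))
```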

3.5.2. Training of the Behavior Recognition Model

4. Results and Discussion

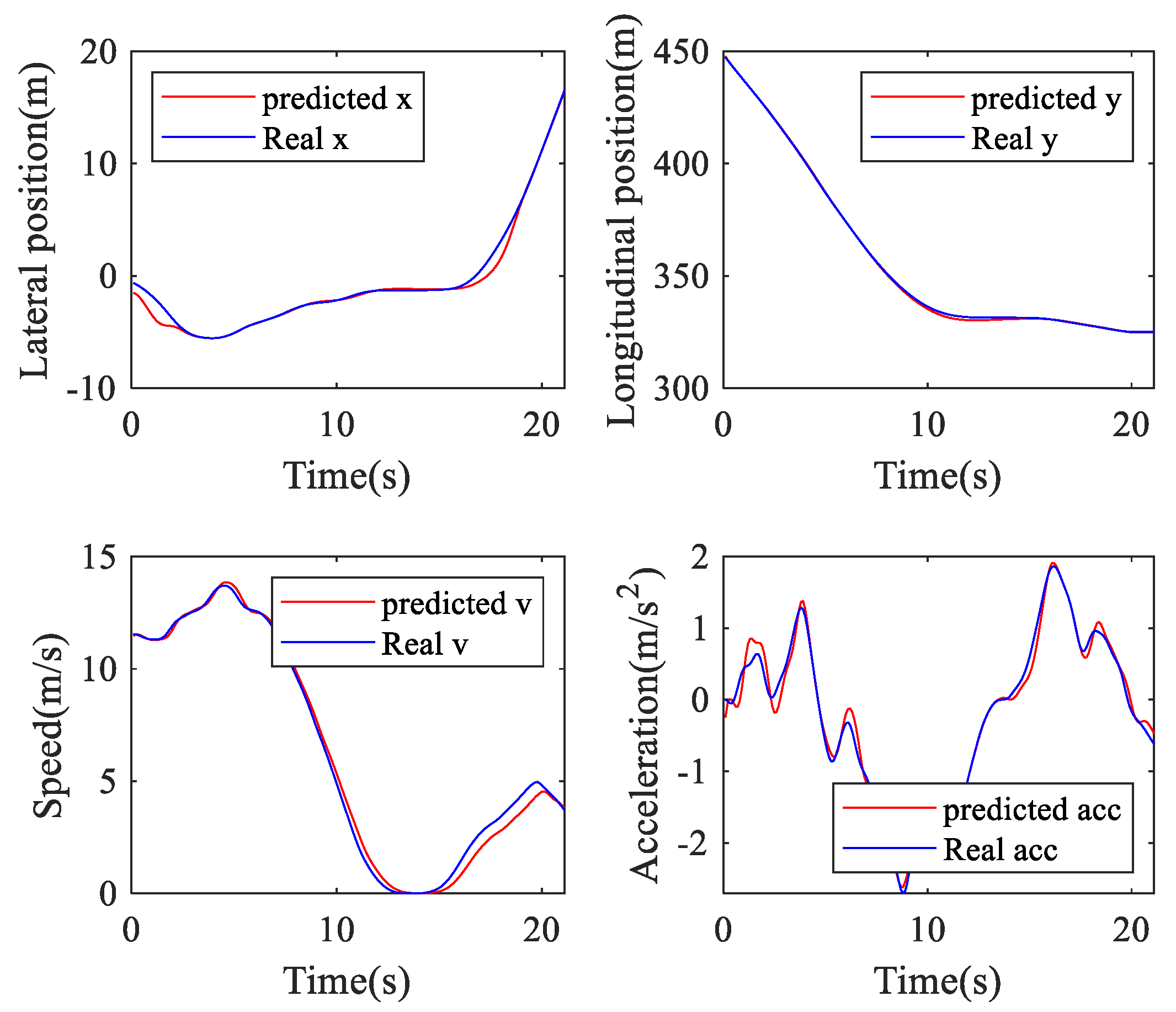

4.1. Performance of the Online Prediction Algorithm

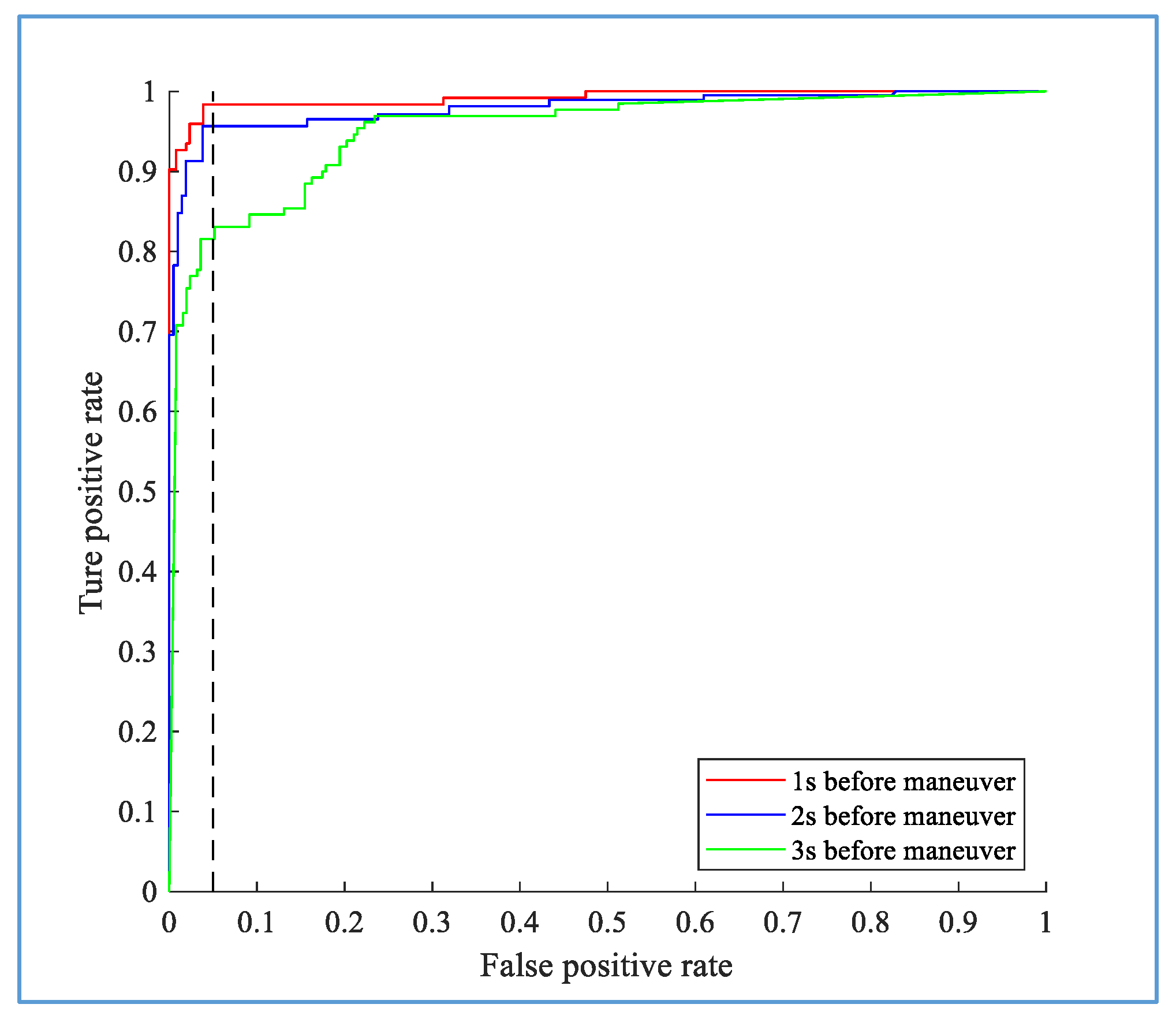

4.2. Performance of the Hybrid Method for Turning Behavior Recognition

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Dataset | Time Period | Going Straight | Left-Turn | Right-Turn | Total |
|---|---|---|---|---|---|
| Lankershim | 8:30–8:45 a.m. | 341 | 265 | 315 | 921 |
| Lankershim | 8:45–9:00 a.m. | 341 | 302 | 339 | 982 |
| Peachtree | 12:45–1:00 p.m. | 151 | 218 | 173 | 542 |
| Peachtree | 4:00–4:15 p.m. | 143 | 254 | 151 | 548 |
| Total | 1 h | 976 | 1039 | 978 | 2993 |

| Scenario | Lateral Position RMSE (m) | Lateral Position MAPE (%) | Longitudinal Position RMSE (m) | Longitudinal Position MAPE (%) | Speed RMSE (m/s) | Speed MAPE (%) | Acceleration RMSE (m/s²) | Acceleration MAPE (%) |
|---|---|---|---|---|---|---|---|---|
| GS | 0.0932 | 1.119 | 0.1093 | 1.028 | 0.1635 | 1.227 | 0.2381 | 0.043 |
| TL | 0.2719 | 0.162 | 0.1592 | 0.258 | 0.3674 | 0.184 | 0.1218 | 0.023 |
| TR | 0.1168 | 0.026 | 0.3954 | 0.200 | 0.1350 | 0.213 | 0.4007 | 0.058 |

| Model | Online Prediction (s) | Bi-LSTM (s) | Total (s) |
|---|---|---|---|
| Average time | 0.0013 | 0.0150 | 0.0163 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, H.; Fu, R. A Hybrid Approach for Turning Intention Prediction Based on Time Series Forecasting and Deep Learning. Sensors 2020, 20, 4887. https://doi.org/10.3390/s20174887
