Figure 1.

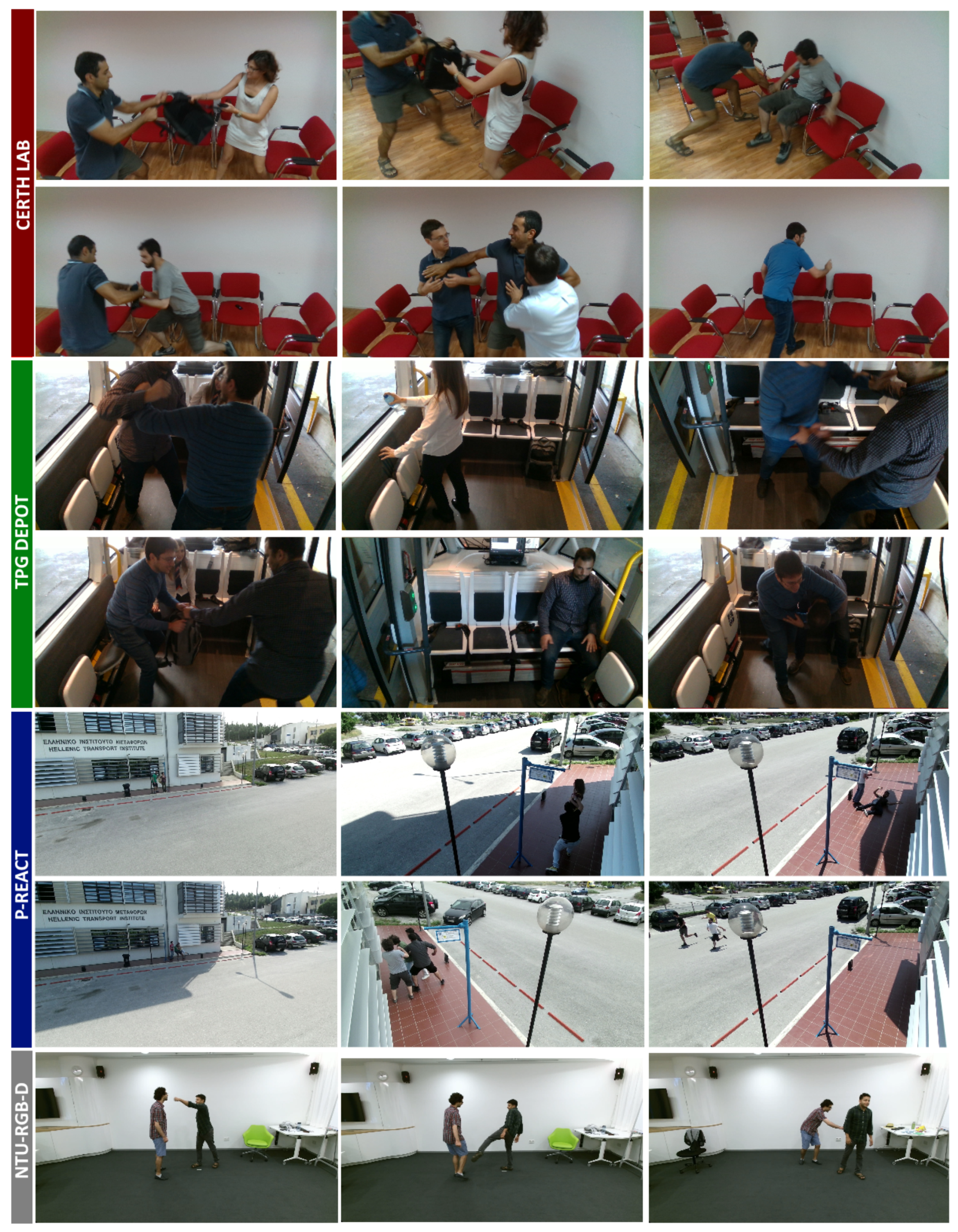

Dataset samples with abnormal events illustrating the use cases: fighting, aggression, bag-snatching, and vandalism. The red section contains data simulated in the lab, the green section depicts data captured in TPG shuttles, the blue section shows scenarios from the P-REACT dataset, and the gray section indicates additional data imported from the NTU-RGB dataset.

Figure 2.

VLabel: Pose labeling using mouse clicks on the skeleton’s circle and keyboard navigation.

Figure 3.

Pipeline of the pose classification.

Figure 4.

Skeleton matching across two subsequent frames (blended). Notice that the passenger ID, highlighted in green at the left of each bounding box, is the same across the frames.
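
Figure 4 shows passenger IDs persisting across frames. The caption does not state the matching criterion, so the following is only a minimal sketch under assumptions: each skeleton is reduced to the centroid of its detected joints, and IDs are carried over by greedy nearest-centroid matching within a hypothetical distance gate `max_dist`. The function names are illustrative, not the authors' code.

```python
import numpy as np

def centroid(skeleton):
    """Mean (x, y) of the detected joints; NaN rows (missing joints) are ignored."""
    return np.nanmean(skeleton, axis=0)

def match_ids(prev, curr, next_id, max_dist=50.0):
    """Carry passenger IDs from the previous frame to the current one.

    prev: dict {id: (J, 2) skeleton} from the previous frame.
    curr: list of (J, 2) skeletons detected in the current frame.
    max_dist: assumed pixel gate; farther pairs are never matched.
    Returns ({id: skeleton}, updated next_id).
    """
    pairs = []
    for pid, p_skel in prev.items():
        for ci, c_skel in enumerate(curr):
            d = np.linalg.norm(centroid(p_skel) - centroid(c_skel))
            if d <= max_dist:
                pairs.append((d, pid, ci))
    pairs.sort()  # greedy: closest pairs are assigned first
    assigned, used_prev, used_curr = {}, set(), set()
    for d, pid, ci in pairs:
        if pid in used_prev or ci in used_curr:
            continue
        assigned[pid] = curr[ci]
        used_prev.add(pid)
        used_curr.add(ci)
    # Detections left unmatched are treated as new passengers.
    for ci, c_skel in enumerate(curr):
        if ci not in used_curr:
            assigned[next_id] = c_skel
            next_id += 1
    return assigned, next_id
```

A globally optimal assignment (for example with `scipy.optimize.linear_sum_assignment`) could replace the greedy loop; greedy matching is used here only to keep the sketch dependency-free.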

Figure 5.

Examples indicating the restricted field of view of the camera sensor.

Figure 6.

Despite the occlusion, the girl's two leg joints are approximately reconstructed (b) from their relative location in the previous frame (a).
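
Figure 6 fills occluded joints from their relative location in the previous frame. A minimal sketch of that idea, assuming joints are stored as a (J, 2) array with NaN for undetected joints and that "relative location" means the offset from a reference joint visible in both frames; the reference index `NECK = 1` (the neck in the OpenPose COCO layout) is an assumption.

```python
import numpy as np

NECK = 1  # assumed reference-joint index (neck in the OpenPose COCO layout)

def fill_occluded(prev, curr, ref=NECK):
    """Reconstruct joints missing in `curr` from their offset to `ref` in `prev`.

    prev, curr: (J, 2) joint coordinates; NaN marks a missing joint.
    A joint missing in `curr` but present in `prev` is placed at
    curr[ref] + (prev[j] - prev[ref]). If the reference joint is missing
    in either frame, a copy of `curr` is returned unchanged.
    """
    out = curr.copy()
    if np.isnan(prev[ref]).any() or np.isnan(curr[ref]).any():
        return out
    missing = np.isnan(curr).any(axis=1) & ~np.isnan(prev).any(axis=1)
    out[missing] = curr[ref] + (prev[missing] - prev[ref])
    return out
```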

Figure 7.

Representation of the extracted features. Joint positions (normalized), velocity, and body velocity are used as features for the classification.

Figure 8.

Performance evaluation across different buffer sizes. A buffer size of 5 frames achieved the best accuracy in the evaluation.
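
Figure 8 compares buffer sizes for the classifier input. A minimal sketch of a fixed-size frame buffer, assuming the classifier consumes the N most recent per-frame skeletons once the buffer is full (N = 5, following the figure); the class name `FrameBuffer` is hypothetical.

```python
from collections import deque

class FrameBuffer:
    """Keep the `size` most recent frames; older frames are dropped automatically."""

    def __init__(self, size=5):
        self.frames = deque(maxlen=size)

    def push(self, frame):
        """Add a frame; return the window (oldest first) once full, else None."""
        self.frames.append(frame)
        if len(self.frames) == self.frames.maxlen:
            return list(self.frames)
        return None
```

Using `deque(maxlen=...)` makes the buffer slide by one frame per push without manual index bookkeeping.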

Figure 9.

Model performance evaluation. (a) Train/test accuracy and (b) train/test loss metrics.

Figure 10.

Model architecture of the autoencoder. The first two convolutional layers are spatial encoders, followed by a temporal encoder and decoder. Between them, a ConvLSTM with fewer filters serves as a bottleneck that eliminates non-useful information. The last two layers perform spatial decoding, reconstructing the input image in the same format.

Figure 11.

Preprocessing using a sliding window of 10 frames and training pipeline. Euclidean loss is used to learn regularity.
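
The windowing step in Figure 11 can be sketched as follows. This assumes the preprocessed frames are stacked in a NumPy array of shape (T, H, W); the stride parameter (default 1) and the function name are assumptions, since the caption only gives the window length of 10 frames.

```python
import numpy as np

def sliding_windows(frames, length=10, stride=1):
    """Cut a (T, H, W) frame stack into overlapping clips of `length` frames.

    Returns an array of shape (num_clips, length, H, W) with
    num_clips = (T - length) // stride + 1, or zero clips if T < length.
    """
    T = frames.shape[0]
    if T < length:
        return np.empty((0, length) + frames.shape[1:], dtype=frames.dtype)
    starts = range(0, T - length + 1, stride)
    return np.stack([frames[s:s + length] for s in starts])
```

Each clip would then be passed to the autoencoder, whose training objective compares the clip with its reconstruction.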

Figure 12.

Decision flow: A high reconstruction cost between OF and RF indicates an abnormal event.
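
The decision flow in Figure 12 turns a reconstruction cost into a regularity score. A minimal sketch, assuming the per-frame cost is the Euclidean distance between an input frame and its reconstruction, and that costs are min-max normalized over a sequence so that regularity = 1 − normalized cost. This is a common convention for regularity-learning autoencoders, not necessarily the exact formula used here.

```python
import numpy as np

def reconstruction_cost(original, reconstructed):
    """Per-frame Euclidean distance between frames and their reconstructions.

    original, reconstructed: arrays of shape (T, H, W).
    """
    diff = (original - reconstructed).reshape(len(original), -1)
    return np.linalg.norm(diff, axis=1)

def regularity_scores(costs):
    """Min-max normalize costs to [0, 1]; regularity = 1 - normalized cost."""
    costs = np.asarray(costs, dtype=float)
    span = costs.max() - costs.min()
    if span == 0:
        return np.ones_like(costs)  # no variation: treat every frame as regular
    return 1.0 - (costs - costs.min()) / span
```

A frame the model did not learn to reconstruct gets a high cost and therefore a low regularity score.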

Figure 13.

Outdoor group fighting scenario at a simulated bus stop. The green metrics at the top-left indicate the current and the average regularity scores. A lower regularity score indicates that the reconstruction is inaccurate, since the model did not learn such an event. Note that the current score is much lower than the average, which triggers an abnormal-event notification.
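
The trigger in Figure 13 compares the current regularity score with the average. A minimal sketch of such a rule, assuming an exponential moving average of past scores and a hypothetical ratio threshold; the values `ratio=0.8` and `alpha=0.05` are illustrative and not taken from the paper.

```python
class RegularityMonitor:
    """Flag frames whose regularity score drops far below the running average.

    `ratio` and `alpha` are illustrative assumptions, not values from the paper.
    """

    def __init__(self, ratio=0.8, alpha=0.05):
        self.ratio = ratio    # alarm if score < ratio * average
        self.alpha = alpha    # smoothing factor of the exponential moving average
        self.average = None

    def update(self, score):
        """Return True if `score` is abnormal relative to the average seen so far."""
        if self.average is None:
            self.average = score
            return False
        abnormal = score < self.ratio * self.average
        self.average = (1 - self.alpha) * self.average + self.alpha * score
        return abnormal
```

The average is checked before it is updated, so a sudden drop is judged against the history that preceded it.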

Figure 14.

Example of a prediction with a lower regularity threshold.

Figure 15.

Model architecture of the hybrid model. The red container holds the components of the previous autoencoder approach. The green components form the new hybrid model, which acts as a classifier.

Figure 16.

The three-stage pipeline of the hybrid training procedure consists of an initial unsupervised training stage, followed by transfer learning and retraining of the stacked LSTM classifier.

Figure 17.

Train/Val loss and accuracy of the hybrid classifier, over 20 epochs.

Figure 18.

Evaluation on test data: (a–c) Abnormal event detection (violence/passengers are fighting) using different camera angles from the NTU-RGB dataset. (d,e) Detection of fighting/bag-snatch real-world scenarios inside the shuttle.

Figure 19.

Evaluation on multiple camera angles, excessive occlusion, and partial presence.

Figure 20.

Evaluation across various scenarios (left to right): Bag-snatch, fighting, and vandalism.

Figure 21.

Additional evaluation on the NTU-RGB dataset. Metrics at the top-left depict the prediction scores for P1.

Figure 22.

Regularity curves (top-left) for a bag-snatching scenario using three preprocessing methods: (a) MOG2 background subtraction, (b) frame subtraction (absdiff), and (c) Farneback optical flow. All methods detected the abnormal events at the 80th and 250th frames. MOG2 performed better in terms of stability and produced more consistent results during passenger boarding and disembarking.
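
Of the three preprocessing methods in Figure 22, frame subtraction is the simplest to illustrate. The sketch below reproduces what `cv2.absdiff` does on consecutive grayscale frames using plain NumPy, followed by a binarization step whose `threshold=25` is an assumed value. MOG2 and Farneback flow are provided by OpenCV as `cv2.createBackgroundSubtractorMOG2` and `cv2.calcOpticalFlowFarneback` and are not reimplemented here.

```python
import numpy as np

def frame_difference(frames, threshold=25):
    """Absolute difference of consecutive uint8 grayscale frames (like cv2.absdiff).

    frames: (T, H, W) uint8 array. Returns a (T-1, H, W) uint8 mask that is
    255 where the change exceeds `threshold` (assumed value) and 0 elsewhere.
    """
    f = frames.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    diff = np.abs(f[1:] - f[:-1])
    return np.where(diff > threshold, 255, 0).astype(np.uint8)
```

Casting to a signed type before subtracting matters: subtracting `uint8` arrays directly would wrap negative differences around to large positive values.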

Figure 23.

Experimental outdoor evaluation with a fighting scenario on a simulated bus stop. Red regions represent abnormalities in the frame.

Figure 24.

Confusion matrix of the hybrid classifier on the test data.

Figure 25.

NVIDIA Tesla K40m GPU energy consumption at full usage (avg. 13 fps).

Table 1.

Methods comparison.

| Method | Strengths | Drawbacks |
|---|---|---|
| [13] | crowded scenes | offline, low accuracy |
| [14] | high accuracy | requires data from wearable sensors |
| [15] | robust, high accuracy | requires data from additional sensors |
| [16] | supervised/unsupervised learning | multiple camera types, angle support |
| [19] | high performance | low accuracy, events of occlusion |
| [20] | robust, high accuracy | requires depth, acceleration data |
| proposed | flexible, high accuracy, multiple FoV, positions, angles | requires a lot of data, fine tuning |

Table 2.

A description of the features generated in the feature extraction stage for the action classification.

| Feature | Description |
|---|---|
| Xs | A direct concatenation of the joint positions of N frames. |
| H | Average height (neck-to-thigh length) over N frames. Used for normalization. |
| X | Normalized joint positions [Xs − mean(Xs)]/H |
| Vj | Velocities of the joints {X[t] − X[t − 1]} |
| Vc | Velocity of the center {sum(Xc[t] − Xc[t − 1])} (10× weight) |
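
The features in Table 2 can be sketched with NumPy as below. Several details are assumptions rather than facts from the table: each frame is a (J, 2) array of joint coordinates; the neck and thigh joints use the OpenPose COCO indices (neck = 1, hips = 8 and 11), with the thigh point taken as the hip midpoint; mean(Xs) is taken per coordinate axis over all joints and frames; and the body center Xc is the mean of the normalized joints. How the original aggregates Vc with sum(·) is not specified, so Vc is computed here as the frame-to-frame displacement of that center, scaled by 10.

```python
import numpy as np

NECK, R_HIP, L_HIP = 1, 8, 11  # assumed OpenPose COCO joint indices
CENTER_WEIGHT = 10.0           # the "10x weight" applied to Vc in Table 2

def extract_features(frames):
    """Build [X, Vj, Vc] from N frames of (J, 2) joint positions as one flat vector."""
    Xs = np.stack(frames).astype(float)              # (N, J, 2): concatenated positions
    # H: average neck-to-thigh length over the N frames (used for normalization).
    thigh = Xs[:, [R_HIP, L_HIP]].mean(axis=1)        # (N, 2): midpoint of the hips
    H = np.linalg.norm(Xs[:, NECK] - thigh, axis=1).mean()
    # X: joint positions centered on their mean and divided by H.
    X = (Xs - Xs.mean(axis=(0, 1))) / H
    # Vj: joint velocities between consecutive frames.
    Vj = X[1:] - X[:-1]                                # (N-1, J, 2)
    # Vc: displacement of the body center (mean of the joints), weighted by 10.
    Xc = X.mean(axis=1)                                # (N, 2)
    Vc = CENTER_WEIGHT * (Xc[1:] - Xc[:-1])            # (N-1, 2)
    return np.concatenate([X.ravel(), Vj.ravel(), Vc.ravel()])
```

Because positions are centered and divided by H, the resulting vector does not change when the whole skeleton sequence is shifted or uniformly scaled, which is the point of the normalization step.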

Table 3.

Technical specifications of the equipment used in the experimental environment.

| Solution | Pose Classification | Regularity Learning | Hybrid Classification |
|---|---|---|---|
| Camera | oCam 5MP sensor | AXIS M3046-V | AXIS M3046-V |
| Camera power supply | USB 3.0 | PoE | PoE |
| Host | In-shuttle | In-shuttle | In-shuttle |
| Host power supply | 500 W (max) | 500 W (max) | 500 W (max) |

Table 4.

Precision, recall, and F1-Score metrics for the two classes.

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Normal | 0.99 | 0.99 | 0.99 | 6040 |
| Abnormal | 0.93 | 0.95 | 0.94 | 1335 |
| Accuracy | | | 0.99 | 7375 |
| Macro avg | 0.96 | 0.97 | 0.96 | 7375 |
| Weighted avg | 0.99 | 0.99 | 0.99 | 7375 |
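
The per-class metrics in Table 4 follow the standard definitions. A small standard-library sketch that computes them from label lists in the same layout (per-class rows, macro average, and support-weighted average); the function name is hypothetical and the check below uses toy labels, not the paper's data.

```python
def per_class_metrics(y_true, y_pred, labels):
    """Per-class (precision, recall, F1, support) plus macro and weighted averages."""
    rows = {}
    for c in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        rows[c] = (precision, recall, f1, tp + fn)  # support = true instances of c
    total = sum(r[3] for r in rows.values())
    # Macro: unweighted mean over classes; weighted: mean weighted by support.
    macro = tuple(sum(r[i] for r in rows.values()) / len(rows) for i in range(3))
    weighted = tuple(sum(r[i] * r[3] for r in rows.values()) / total for i in range(3))
    return rows, macro, weighted
```

The macro average treats both classes equally, which is why it sits below the weighted average when the smaller Abnormal class scores lower. The supports also sum to the total (6040 + 1335 = 7375).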

Table 5.

Experimental comparison with human action recognition methods based on the recent survey by Zhang et al. [32]. The proposed method does not utilize depth information from the NTU-RGB-D dataset.

| Method | Year | NTU-RGB-D | Custom Dataset |
|---|---|---|---|
| [33] | 2020 | 91.5% | |
| [34] | 2020 | 90.3% | |
| [35] | 2018 | 73.4% | |
| [12] | 2018 | 30.7% | |
| [26] | 2016 | 62.93% | |
| [36] | 2016 | 69.2% | |
| [37] | 2014 | 31.82% | |
| Proposed | 2020 | 71.4% | 99.6% |

Table 6.

Comparison of the area under the ROC curve (AUC) and the equal error rate (EER) of different methods on the UCSD-Ped1 dataset [13]. Higher AUC and lower EER are better.

| Method | Area under ROC Curve (AUC) | Equal Error Rate (EER) |
|---|---|---|
| [29] | 81.0 | 27.9 |
| [38] | 89.9 | 12.5 |
| [39] | 72.7 | 33.1 |
| [40] | 77.1 | 38.0 |
| [13] | 66.8 | 40.0 |
| [41] | 67.5 | 31.0 |
| Proposed | 88.2 | 13.1 |
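
AUC and EER are both read off the ROC curve of frame-level abnormality scores. A minimal NumPy sketch, assuming higher scores mean "more abnormal" and approximating the EER as the ROC point where the false positive rate and the false negative rate (1 − TPR) are closest; it is not the evaluation code behind the table.

```python
import numpy as np

def roc_points(scores, labels):
    """False/true positive rates at every distinct score threshold.

    scores: abnormality scores (higher = more abnormal).
    labels: 1 for abnormal frames, 0 for normal frames.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    # Start above the maximum score so the curve begins at (0, 0).
    thresholds = np.concatenate([[np.inf], np.unique(scores)[::-1]])
    pos = (labels == 1).sum()
    neg = (labels == 0).sum()
    tpr = np.array([((scores >= t) & (labels == 1)).sum() / pos for t in thresholds])
    fpr = np.array([((scores >= t) & (labels == 0)).sum() / neg for t in thresholds])
    return fpr, tpr

def auc_and_eer(scores, labels):
    """Area under the ROC curve (trapezoidal rule) and approximate equal error rate."""
    fpr, tpr = roc_points(scores, labels)
    auc = float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))
    fnr = 1.0 - tpr
    i = int(np.argmin(np.abs(fpr - fnr)))  # ROC point where FPR and FNR are closest
    eer = float((fpr[i] + fnr[i]) / 2)
    return auc, eer
```

Table 6 lists both quantities as percentages, so the returned fractions would be multiplied by 100 for comparison.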

Table 7.

Precision, Recall, and F1-Score metrics on the test set of the hybrid classifier.

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Normal | 0.98 | 0.99 | 0.99 | 640 |
| Abnormal | 0.98 | 0.96 | 0.97 | 252 |
| Accuracy | - | - | 0.98 | 892 |
| Macro avg | 0.98 | 0.97 | 0.98 | 892 |
| Weighted avg | 0.98 | 0.98 | 0.98 | 892 |