A Novel Change Detection Method for Natural Disaster Detection and Segmentation from Video Sequence

Abstract

1. Introduction

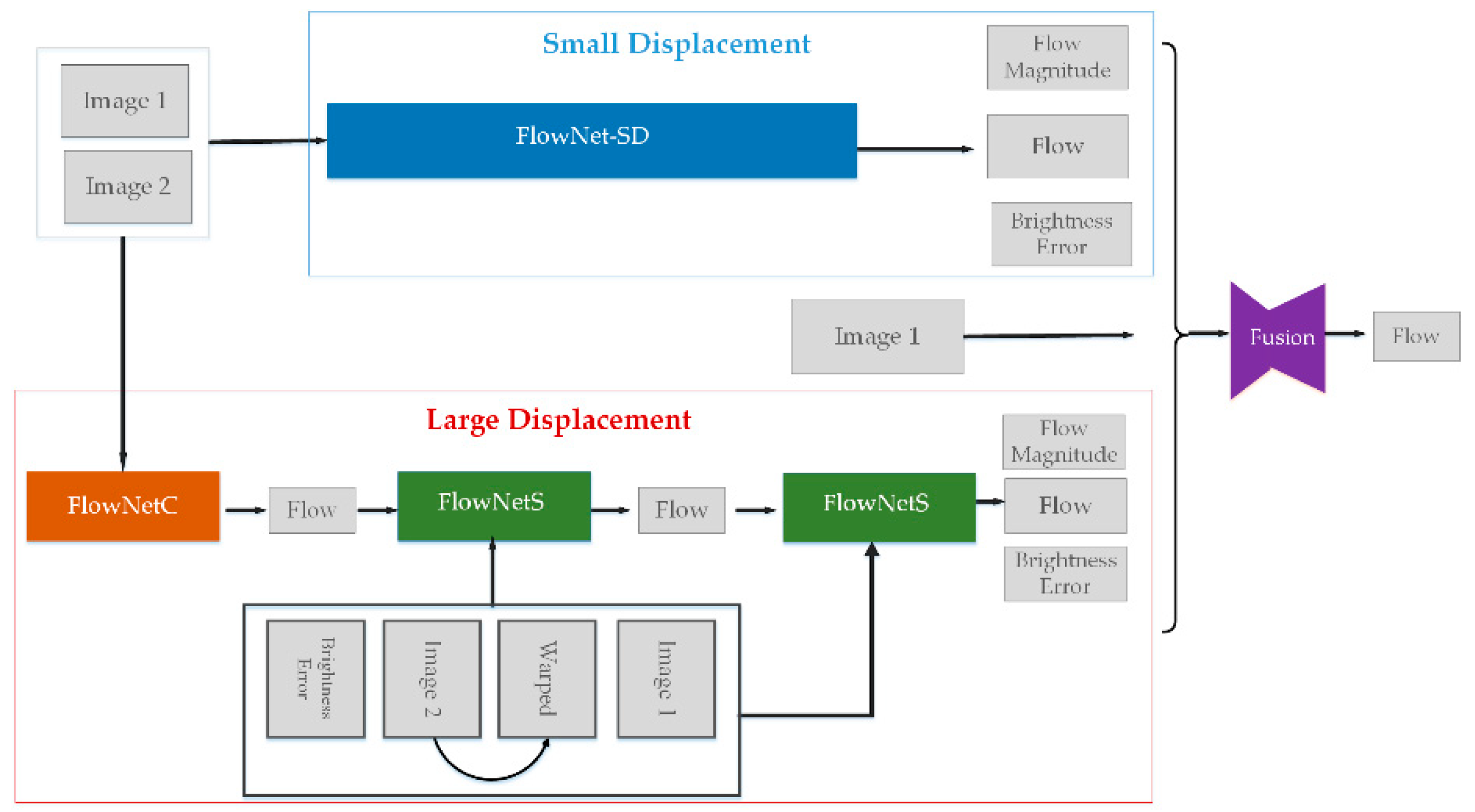

2. Optical Flow Estimation Methods

3. Proposed OFATS Method

3.1. Motion Detection

3.2. Change Boundary Extraction

3.2.1. The Objective Function

3.2.2. Optimizing Strategies for Threshold Selection

3.3. The Proposed CD Method

| Algorithm 1. The proposed OFATS for change detection in natural disasters |
|---|
| Input: The two frames extracted from the video sequence. |
| Output: The change detection result. |
| 1: Input the two frames and calculate the motion in the horizontal and vertical directions with FlowNet 2.0; |
| 2: Calculate the displacements, retaining the decimal fraction, based on Equation (1); |
| 3: Generate the initial global best fitness value gbest and the iteration counter i; |
| 4: while the termination condition is not reached do |
| 5: i = i + 1; |
| 6: Use threshold w_i to divide the displacements into the unchanged class C1 and the changed class C2; normalize the displacements in C2 that are larger than 1 according to Equations (12) and (13), and use the results in Equations (5)–(10); |
| 7: Calculate the between-class and within-class variances using Equations (3) and (4); |
| 8: Calculate the fitness value using Equation (2); |
| 9: if the solution is better then |
| 10: Replace the current individual; |
| 11: else |
| 12: Keep the current individual unchanged; |
| 13: end if |
| 14: Find the current global best agent; |
| 15: end while |
| 16: return the optimal threshold; |
| 17: Divide the image into two parts with the optimal threshold using Equation (11). |
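The loop in Algorithm 1 can be illustrated with the following Python sketch. It is not the authors' implementation and rests on several assumptions: FlowNet 2.0 is not run (the horizontal and vertical flow components u and v are taken as given arrays), Equation (1) is assumed to be the Euclidean norm of (u, v), the fitness of Equations (2)–(4) is assumed to be the ratio of between-class to within-class variance, the normalization of Equations (12) and (13) is omitted, and the candidate-update rule (a random step pulled toward gbest) is hypothetical. The function names are likewise illustrative.

```python
import numpy as np


def displacement_magnitude(u, v):
    """Steps 1-2: per-pixel displacement from horizontal (u) and vertical (v) flow.
    Assumption: Equation (1) is the Euclidean norm; floats keep the decimal part."""
    return np.hypot(u, v)


def fitness(d, w):
    """Hypothetical stand-in for Equations (2)-(4): between-class variance divided
    by within-class variance for C1 (d <= w, unchanged) and C2 (d > w, changed).
    The C2 normalization of Equations (12)-(13) is NOT modelled here."""
    c1, c2 = d[d <= w], d[d > w]
    if c1.size == 0 or c2.size == 0:
        return 0.0  # a threshold that empties one class is useless
    p1, p2 = c1.size / d.size, c2.size / d.size
    between = p1 * p2 * (c1.mean() - c2.mean()) ** 2
    within = p1 * c1.var() + p2 * c2.var()
    return between / (within + 1e-12)  # epsilon guards against zero variance


def search_threshold(d, pop_size=10, iters=50, seed=0):
    """Steps 3-16: population search with greedy replacement and a global best.
    Assumption: the update rule below is illustrative, not the paper's."""
    rng = np.random.default_rng(seed)
    lo, hi = float(d.min()), float(d.max())
    pop = rng.uniform(lo, hi, pop_size)              # candidate thresholds w_i
    fit = np.array([fitness(d, w) for w in pop])
    gbest = pop[fit.argmax()]                        # step 3: initial gbest
    for _ in range(iters):                           # steps 4-15
        trial = pop + rng.normal(0.0, 0.05 * (hi - lo), pop_size)
        trial += rng.random(pop_size) * (gbest - pop)  # pull toward gbest
        trial = np.clip(trial, lo, hi)
        trial_fit = np.array([fitness(d, w) for w in trial])
        better = trial_fit > fit                     # step 9
        pop[better] = trial[better]                  # step 10 (else: keep, step 12)
        fit[better] = trial_fit[better]
        gbest = pop[fit.argmax()]                    # step 14
    return float(gbest)                              # step 16


def change_mask(u, v, **kwargs):
    """Step 17: mark pixels whose displacement exceeds the optimal threshold."""
    d = displacement_magnitude(u, v)
    t = search_threshold(d, **kwargs)
    return d > t, t
```

Calling `mask, t = change_mask(u, v)` returns the binary change map and the selected threshold. Because a candidate is replaced only by a strictly better one, the best fitness never decreases between iterations; the fixed iteration count `iters` plays the role of the termination condition in step 4.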

4. Data and Experiments

4.1. Study Datasets

4.2. Evaluation of the Proposed Threshold Selection Method

4.3. Comparing the Proposed Method with Other CD Methods

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Milly, P.C.D.; Wetherald, R.T.; Dunne, K.A.; Delworth, T.L. Increasing risk of great floods in a changing climate. Nature 2002, 415, 514.

- Sublime, J.; Kalinicheva, E. Automatic post-disaster damage mapping using deep-learning techniques for change detection: Case study of the Tohoku Tsunami. Remote Sens. 2019, 11, 1123.

- Crooks, A.T.; Wise, S. GIS and agent-based models for humanitarian assistance. Comput. Environ. Urban Syst. 2013, 41, 100–111.

- Lu, C.; Ying, K.; Chen, H. Real-time relief distribution in the aftermath of disasters—A rolling horizon approach. Transp. Res. Part E Logist. Transp. Rev. 2016, 93, 1–20.

- Asokan, A.; Anitha, J. Change detection techniques for remote sensing applications: A survey. Earth Sci. Inform. 2019, 12, 143–160.

- Klomp, J. Economic development and natural disasters: A satellite data analysis. Global Environ. Chang. 2016, 36, 67–88.

- Yu, H.; Wen, Y.; Guang, H.; Ru, H.; Huang, P. Change detection using high resolution remote sensing images based on active learning and Markov random fields. Remote Sens. 2017, 9, 1233.

- Pulvirenti, L.; Chini, M.; Pierdicca, N.; Guerriero, L.; Ferrazzoli, P. Flood monitoring using multi-temporal COSMO-SkyMed data: Image segmentation and signature interpretation. Remote Sens. Environ. 2011, 115, 990–1002.

- Lacroix, P.; Bièvre, G.; Pathier, E.; Kniess, U.; Jongmans, D. Use of Sentinel-2 images for the detection of precursory motions before landslide failures. Remote Sens. Environ. 2018, 215, 507–516.

- Cai, J.; Wang, C.; Mao, X.; Wang, Q. An adaptive offset tracking method with SAR images for landslide displacement monitoring. Remote Sens. 2017, 9, 830.

- Gautam, D.; Dong, Y. Multi-hazard vulnerability of structures and lifelines due to the 2015 Gorkha earthquake and 2017 central Nepal flash flood. J. Build. Eng. 2018, 17, 196–201.

- Alizadeh, M.; Ngah, I.; Hashim, M.; Pradhan, B.; Pour, A. A hybrid analytic network process and artificial neural network (ANP-ANN) model for urban earthquake vulnerability assessment. Remote Sens. 2018, 10, 975.

- Carlotto, M.J. Detection and analysis of change in remotely sensed imagery with application to wide area surveillance. IEEE T. Image Process. 1997, 6, 189–202.

- Bejiga, M.; Zeggada, A.; Nouffidj, A.; Melgani, F. A convolutional neural network approach for assisting avalanche search and rescue operations with UAV imagery. Remote Sens. 2017, 9, 100.

- Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change detection based on artificial intelligence state-of-the-art and challenges. Remote Sens. 2020, 12, 1688.

- Hall, O.; Hay, G.J. A multiscale object-specific approach to digital change detection. Int. J. Appl. Earth Obs. 2003, 4, 311–327.

- Matsuoka, M.; Yamazaki, F. Building damage mapping of the 2003 Bam, Iran, earthquake using Envisat/ASAR intensity imagery. Earthq. Spectra 2005, 21, 285–294.

- Sharma, K.; Saraf, A.K.; Das, J.; Baral, S.S.; Borgohain, S.; Singh, G. Mapping and change detection study of Nepal-2015 earthquake induced landslides. J. Indian Soc. Remote 2018, 46, 605–615.

- Alizadeh, M.; Shirzadi, A.; Khosravi, K.; Melesse, A.M.; Yekrangnia, M.; Rezaie, F.; et al. SEVUCAS: A novel GIS-based machine learning software for seismic vulnerability assessment. Appl. Sci. 2019, 9, 3495.

- ElGharbawi, T.; Tamura, M. Coseismic and postseismic deformation estimation of the 2011 Tohoku earthquake in Kanto Region, Japan, using InSAR time series analysis and GPS. Remote Sens. Environ. 2015, 168, 374–387.

- Du, S.; Zhang, Y.; Qin, R.; Yang, Z.; Zou, Z.; Tang, Y.; Fan, C. Building change detection using old aerial images and new LiDAR data. Remote Sens. 2016, 8, 1030.

- Saha, S.; Bovolo, F.; Bruzzone, L. Destroyed-buildings detection from VHR SAR images using deep features. In Proceedings of the Image and Signal Processing for Remote Sensing XXIV, Berlin, Germany, 10–12 September 2018.

- Ji, M.; Liu, L.; Du, R.; Buchroithner, M.F. A comparative study of texture and convolutional neural network features for detecting collapsed buildings after earthquakes using pre- and post-event satellite imagery. Remote Sens. 2019, 11, 1202.

- Ci, T.; Liu, Z.; Wang, Y. Assessment of the degree of building damage caused by disaster using convolutional neural networks in combination with ordinal regression. Remote Sens. 2019, 11, 2858.

- Peng, D.; Zhang, Y.; Guan, H. End-to-end change detection for high resolution satellite images using improved UNet++. Remote Sens. 2019, 11, 1382.

- Yavariabdi, A.; Kusetogullari, H. Change detection in multispectral landsat images using multiobjective evolutionary algorithm. IEEE Geosci. Remote Sens. 2017, 14, 414–418.

- Ghaffarian, S.; Kerle, N.; Pasolli, E.; Jokar Arsanjani, J. Post-disaster building database updating using automated deep learning: An integration of pre-disaster OpenStreetMap and multi-temporal satellite data. Remote Sens. 2019, 11, 2427.

- Pi, Y.; Nath, N.D.; Behzadan, A.H. Convolutional neural networks for object detection in aerial imagery for disaster response and recovery. Adv. Eng. Inform. 2020, 43, 101009.

- Kung, H.; Hsieh, C.; Ho, C.; Tsai, Y.; Chan, H.; Tsai, M. Data-augmented hybrid named entity recognition for disaster management by transfer learning. Appl. Sci. 2020, 10, 4234.

- Li, M.; Li, M.; Zhang, P.; Wu, Y.; Song, W.; An, L. SAR image change detection using PCANet guided by saliency detection. IEEE Geosci. Remote Sens. 2018, 16, 402–406.

- Curtis, A.; Mills, J.W. Spatial video data collection in a post-disaster landscape: The Tuscaloosa Tornado of 27 April 2011. Appl. Geogr. 2012, 32, 393–400.

- Curtis, A.J.; Mills, J.W.; McCarthy, T.; Fotheringham, A.S.; Fagan, W.F. Space and Time Changes in Neighborhood Recovery after a Disaster Using a Spatial Video Acquisition System; Springer: Berlin, Germany, 2009; pp. 373–392.

- Tu, Z.; Xie, W.; Zhang, D.; Poppe, R.; Veltkamp, R.C.; Li, B.; Yuan, J. A survey of variational and CNN-based optical flow techniques. Signal Process. Image Commun. 2019, 72, 9–24.

- Guo, Y.; Zhang, Z.; He, D.; Niu, J.; Tan, Y. Detection of cow mounting behavior using region geometry and optical flow characteristics. Comput. Electron. Agric. 2019, 163, 104828.

- Gronskyte, R.; Clemmensen, L.H.; Hviid, M.S.; Kulahci, M. Monitoring pig movement at the slaughterhouse using optical flow and modified angular histograms. Biosyst. Eng. 2016, 141, 19–30.

- Yan, W.; Wang, Y.; van der Geest, R.J.; Tao, Q. Cine MRI analysis by deep learning of optical flow: Adding the temporal dimension. Comput. Biol. Med. 2019, 111, 103356.

- Wang, L.; Clarysse, P.; Liu, Z.; Gao, B.; Liu, W.; Croisille, P.; Delachartre, P. A gradient-based optical-flow cardiac motion estimation method for cine and tagged MR images. Med. Image Anal. 2019, 57, 136–148.

- Cao, Y.; Renfrew, A.; Cook, P. Comprehensive vehicle motion analysis using optical flow optimization based on pulse-coupled neural network. IFAC Proc. Vol. 2008, 41, 158–163.

- Tchernykh, V.; Beck, M.; Janschek, K. Optical flow navigation for an outdoor UAV using a wide angle mono camera and DEM matching. IFAC Proc. Vol. 2006, 39, 590–595.

- Liu, Y.; Xi, D.; Li, Z.; Hong, Y. A new methodology for pixel-quantitative precipitation nowcasting using a pyramid Lucas Kanade optical flow approach. J. Hydrol. 2015, 529, 354–364.

- Zhao, R.; Sun, P. Deformation-phase measurement by optical flow method. Opt. Commun. 2016, 371, 144–149.

- Osman, A.B.; Ovinis, M. A review of in-situ optical flow measurement techniques in the Deepwater Horizon oil spill. Measurement 2020, 153, 107396.

- Yuan, W.; Yuan, X.; Xu, S.; Gong, J.; Shibasaki, R. Dense Image-Matching via Optical Flow Field Estimation and Fast-Guided Filter Refinement. Remote Sens. 2019, 11, 2410.

- Sun, D.; Roth, S.; Black, M.J. Secrets of optical flow estimation and their principles. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2432–2439.

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Prajapati, D.; Galiyawala, H.J. A Review on Moving Object Detection and Tracking; Department of Electronics and Communication Engineering, UKA Tarsadia University: Bardoli, India, 2015.

- Wei, S.; Yang, L.; Chen, Z.; Liu, Z. Motion detection based on optical flow and self-adaptive threshold segmentation. Procedia Eng. 2011, 15, 3471–3476.

- Hou, B.; Wang, Y.; Liu, Q. Change detection based on deep features and low rank. IEEE Geosci. Remote Sens. 2017, 14, 2418–2422.

- Yuan, Q.; Shen, H.; Li, T.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J.; et al. Deep learning in environmental remote sensing: Achievements and challenges. Remote Sens. Environ. 2020, 241, 111716.

- Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; Van Der Smagt, P.; Cremers, D.; Brox, T. FlowNet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2758–2766.

- Hui, T.W.; Tang, X. LiteFlowNet: A lightweight convolutional neural network for optical flow estimation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8981–8989.

- Ilg, E.; Mayer, N.; Saikia, T.; Keuper, M.; Dosovitskiy, A.; Brox, T. FlowNet 2.0: Evolution of optical flow estimation with deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2462–2470.

- Baker, S.; Scharstein, D.; Lewis, J.P.; Roth, S.; Black, M.J.; Szeliski, R. A Database and evaluation methodology for optical flow. Int. J. Comput. Vis. 2011, 92, 1–31.

- Vala, H.J.; Baxi, A. A review on Otsu image segmentation algorithm. Int. J. Adv. Res. Comput. Eng. Technol. 2013, 2, 387–389.

- Pal, N.R.; Pal, S.K. A review on image segmentation techniques. Pattern Recogn. 1993, 26, 1277–1294.

- Waseem Khan, M. A Survey: Image segmentation techniques. Int. J. Future Comput. Commun. 2014, 3, 89–93.

- Digital Globe Data in Indonesia Earthquake. Available online: https://www.youtube.com/watch?v=-41ENJF0wVwx (accessed on 24 October 2018).

- Slow-Moving Landslide Des Caught on Camera 2. Available online: https://www.youtube.com/watch?v=PmLHg-mLrMU (accessed on 10 July 2019).

- Qiao, H.J.; Wan, X.; Xu, J.Z.; Li, S.Y.; He, P.P. Deep learning based optical flow estimation for change detection: A case study in Indonesia earthquake. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 3, 317–322.

| Parameter Name | Formula | Explanation of Abbreviations |
|---|---|---|
| | | TP (true positive): detections that are correctly identified as changed; TN (true negative): detections that are correctly identified as unchanged; FP (false positive): detections that are falsely identified as changed; FN (false negative): detections that are falsely identified as unchanged |
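The scores reported in the comparison tables can be computed from the four counts defined above. The sketch below uses the standard definitions of overall accuracy (OA), F1, Cohen's kappa (K), user's accuracy (UA) and producer's accuracy (PA); because the Formula column is not recoverable here, these definitions are an assumption rather than a transcription of the paper's equations, and `cd_metrics` is a hypothetical helper name.

```python
def cd_metrics(tp, fp, fn, tn):
    """Standard binary change-detection scores from confusion-matrix counts.

    tp: changed detected as changed      fp: unchanged detected as changed
    fn: changed detected as unchanged    tn: unchanged detected as unchanged
    """
    n = tp + fp + fn + tn
    oa = (tp + tn) / n                       # overall accuracy
    precision = tp / (tp + fp)               # equals UA of the changed class
    recall = tp / (tp + fn)                  # equals PA of the changed class
    f1 = 2 * precision * recall / (precision + recall)
    # Cohen's kappa: observed agreement corrected for chance agreement pe
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return {
        "OA": oa, "F1": f1, "K": kappa,
        "UA_C": precision, "UA_U": tn / (tn + fn),
        "PA_C": recall, "PA_U": tn / (tn + fp),
    }
```

As a consistency check, the image-differencing counts from the first confusion-matrix table (TP = 507,934, FP = 37,899, FN = 237,043, TN = 1,183,204) give OA ≈ 0.860, F1 ≈ 0.787 and K ≈ 0.687, which agree with the reported 86.0%, 0.79 and 0.69 after rounding.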

| Study Data | Experimental Frame | Optimum Threshold (adaptive selection in OFATS) | F1 (adaptive selection in OFATS) | Optimum Threshold (Otsu) | F1 (Otsu) |
|---|---|---|---|---|---|
| Tsunami data | Frame 160–165 | 0.3 | 0.98 | 0.4 | 0.97 |
| | Frame 162–163 | 0.2 | 0.97 | 0.38 | 0.90 |
| | Frame 175–180 | 0.3 | 0.99 | 0.48 | 0.96 |
| Landslide data | Frame 4960–4970 | 0.4 | 0.94 | 0.4 | 0.94 |
| | Frame 4970–4980 | 0.3 | 0.92 | 0.5 | 0.91 |
| | Frame 4980–4985 | 0.3 | 0.92 | 0.5 | 0.91 |
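For reference, the Otsu baseline in the table above selects the threshold that maximizes the between-class variance of the displacement histogram. A minimal NumPy sketch of the classical method follows, assuming the displacement magnitudes are available as an array and using 256 histogram bins (the bin count is an assumption; the setting used in the experiments is not stated here). `otsu_threshold` is a hypothetical name.

```python
import numpy as np


def otsu_threshold(d, bins=256):
    """Classical Otsu threshold for an array of displacement magnitudes.

    Pixels with d > threshold are treated as changed (class C2).
    """
    hist, edges = np.histogram(np.ravel(d), bins=bins)
    p = hist / hist.sum()                        # bin probabilities
    centers = 0.5 * (edges[:-1] + edges[1:])
    omega = np.cumsum(p)                         # P(C1) when C1 = bins 0..k
    mu = np.cumsum(p * centers)                  # first moment up to bin k
    mu_t = mu[-1]                                # global mean
    # Between-class variance: (mu_t * omega - mu)^2 / (omega * (1 - omega))
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_t * omega - mu) ** 2 / (omega * (1.0 - omega))
    # Splits that leave a class empty (omega = 0 or 1) give nan/inf: discard them.
    sigma_b = np.nan_to_num(sigma_b, nan=0.0, posinf=0.0, neginf=0.0)
    k = int(np.argmax(sigma_b))
    return float(edges[k + 1])                   # upper edge of the last C1 bin
```

Unlike the iterative search of Algorithm 1, Otsu's criterion for a one-dimensional histogram can be evaluated exhaustively over all bins in a single vectorized pass, so this baseline needs no optimizer.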

| Method | Detected as | Ground Truth C | Ground Truth U | UA (%) | F1 | K | OA (%) |
|---|---|---|---|---|---|---|---|
| Image differencing | C | 507,934 | 37,899 | 93.0 | 0.79 | 0.69 | 86.0 |
| | U | 237,043 | 1,183,204 | 83.3 | | | |
| | PA (%) | 68.2 | 96.7 | | | | |
| Image ratioing | C | 624,451 | 841,520 | 42.6 | 0.57 | 0.13 | 51.1 |
| | U | 120,526 | 379,583 | 76.0 | | | |
| | PA (%) | 83.8 | 31.1 | | | | |
| CVA | C | 420,863 | 229,199 | 64.7 | 0.60 | 0.39 | 71.9 |
| | U | 324,114 | 991,904 | 75.4 | | | |
| | PA (%) | 56.5 | 81.2 | | | | |
| PCC | C | 294,325 | 401,789 | 42.2 | 0.41 | 0.07 | 56.6 |
| | U | 450,652 | 819,314 | 64.5 | | | |
| | PA (%) | 39.5 | 67.1 | | | | |
| KL | C | 548,695 | 750,222 | 42.2 | 0.54 | 0.11 | 51.9 |
| | U | 196,282 | 470,881 | 70.6 | | | |
| | PA (%) | 73.7 | 38.6 | | | | |
| COF | C | 688,338 | 36,873 | 94.9 | 0.94 | 0.90 | 95.2 |
| | U | 56,639 | 1,184,230 | 95.4 | | | |
| | PA (%) | 92.4 | 97.0 | | | | |
| OFATS | C | 737,237 | 21,164 | 97.2 | 0.98 | 0.97 | 98.5 |
| | U | 7,740 | 1,199,939 | 99.3 | | | |
| | PA (%) | 98.9 | 98.2 | | | | |

| Method | Detected as | Ground Truth C | Ground Truth U | UA (%) | F1 | K | OA (%) |
|---|---|---|---|---|---|---|---|
| Image differencing | C | 512,244 | 218,623 | 70.1 | 0.76 | 0.64 | 83.8 |
| | U | 100,309 | 1,134,904 | 91.9 | | | |
| | PA (%) | 83.6 | 83.8 | | | | |
| Image ratioing | C | 540,840 | 205,471 | 72.5 | 0.80 | 0.69 | 85.9 |
| | U | 71,713 | 1,148,056 | 94.1 | | | |
| | PA (%) | 88.3 | 84.8 | | | | |
| CVA | C | 577,780 | 90,087 | 86.5 | 0.90 | 0.86 | 93.6 |
| | U | 34,773 | 1,263,440 | 97.3 | | | |
| | PA (%) | 94.3 | 93.3 | | | | |
| PCC | C | 380,536 | 613,376 | 38.3 | 0.47 | 0.14 | 57.0 |
| | U | 232,017 | 740,151 | 76.1 | | | |
| | PA (%) | 62.1 | 54.7 | | | | |
| KL | C | 525,254 | 208,691 | 71.6 | 0.78 | 0.67 | 84.9 |
| | U | 87,299 | 1,144,836 | 92.9 | | | |
| | PA (%) | 85.7 | 84.6 | | | | |
| COF | C | 611,643 | 140,762 | 81.3 | 0.90 | 0.84 | 92.8 |
| | U | 910 | 1,212,765 | 99.9 | | | |
| | PA (%) | 99.8 | 89.6 | | | | |
| OFATS | C | 584,927 | 44,777 | 92.9 | 0.94 | 0.91 | 96.3 |
| | U | 27,626 | 1,308,750 | 97.9 | | | |
| | PA (%) | 95.4 | 96.7 | | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Qiao, H.; Wan, X.; Wan, Y.; Li, S.; Zhang, W. A Novel Change Detection Method for Natural Disaster Detection and Segmentation from Video Sequence. Sensors 2020, 20, 5076. https://doi.org/10.3390/s20185076

Qiao H, Wan X, Wan Y, Li S, Zhang W. A Novel Change Detection Method for Natural Disaster Detection and Segmentation from Video Sequence. Sensors. 2020; 20(18):5076. https://doi.org/10.3390/s20185076

Chicago/Turabian Style: Qiao, Huijiao, Xue Wan, Youchuan Wan, Shengyang Li, and Wanfeng Zhang. 2020. "A Novel Change Detection Method for Natural Disaster Detection and Segmentation from Video Sequence" Sensors 20, no. 18: 5076. https://doi.org/10.3390/s20185076