A New Roadway Eventual Obstacle Detection System Based on Computer Vision

Abstract

1. Introduction

2. Materials and Methods

2.1. Hardware Architecture

2.1.1. Vision System

2.1.2. Control System

2.1.3. Power System

2.1.4. Alert System

2.2. Software

- Read and decode each image received from the camera, and set the region of interest (ROI) according to the parameters defined in the config file.

- Detect motion using background subtraction techniques (Section 2.2.1).

- Separate the different moving elements within the ROI (also described in Section 2.2.1).

- Assign and manage one child thread to track each detected moving element, ensuring that the same element is not tracked twice. The algorithm starts a child thread for each tracking loop and cancels it when the tracked element is lost. The tracking process carried out by each child thread is described in Section 2.2.2.

- Process the results returned by the child threads and control the state of the driver warning system. The warning is switched on when any element is classified as an obstacle; when the obstacle disappears, the warning remains active for an ‘extra warning time’ (set in the config file) to cover unwanted losses of tracked elements (e.g., caused by a sudden turn of the animal). In any case, false positives are preferred over false negatives.
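The warning logic of the last step behaves like a hold-off timer. The sketch below is a minimal Python illustration, assuming that at each cycle the main loop knows whether any child thread currently reports an obstacle; the class and field names (`WarningController`, `extra_warning_time`) are hypothetical stand-ins for the config-file value, not the authors' code.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WarningController:
    """Hypothetical sketch of the warning-state logic of the main loop.

    The warning is on while any element is classified as an obstacle and stays
    on for `extra_warning_time` seconds after the last obstacle report, so that
    short tracking losses do not switch the signal off.
    """

    extra_warning_time: float  # seconds; assumed to be read from the config file
    _last_obstacle_time: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, now: float, obstacle_present: bool) -> bool:
        """Return True if the warning signal should be on at time `now` (seconds)."""
        if obstacle_present:
            self._last_obstacle_time = now
            return True
        if self._last_obstacle_time is None:
            return False  # no obstacle has been reported yet
        # Hold the warning during the extra time to bridge lost tracks.
        return now - self._last_obstacle_time <= self.extra_warning_time


if __name__ == "__main__":
    ctrl = WarningController(extra_warning_time=5.0)
    # (time in s, whether any child thread reports an obstacle)
    for t, seen in [(0.0, False), (1.0, True), (2.0, False), (5.9, False), (6.5, False)]:
        print(f"t={t:.1f}s obstacle={seen} warning={ctrl.update(t, seen)}")
```

With a 5 s extra time, the warning switches on at t = 1 s, stays on at t = 5.9 s although no obstacle is reported, and switches off at t = 6.5 s. Biasing the timer toward staying on reflects the stated preference for false positives over false negatives.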

2.2.1. Element Detection

- 1-a. Background subtraction: this step creates a binary image that indicates whether each pixel belongs to the background or not. We considered and tested several background subtraction methods: (a) mixture of Gaussians (MOG) [44,45], based on Gaussian mixture probability density functions; (b) MOG2 [46], also based on Gaussian mixture models but adapting the number of components for each pixel; (c) k-nearest neighbours (KNN) [47], which uses recursive equations to constantly update the parameters of a Gaussian mixture model and to simultaneously select the appropriate number of components for each pixel, following a nearest-neighbour approach; and (d) Godbehere-Matsukawa-Goldberg (GMG) [48], which combines a static background model (built from the first 120 images) with a probability estimate to detect foreground objects using Bayes' theorem. Although any of these methods can be selected in the config file, we used MOG2 because it gives very good results and is fast enough for real-time applications. A comparative analysis of these methods can be found in [25].

- 1-b. Filtering and correction of the background subtraction: this step has two parts. The first joins fragmented segments of the same moving element by means of a dilation operator; the second removes small detections by means of an erosion operator. In particular, the erosion removes the motion of background elements such as leaves, specks of dust, snow or water drops. The amplitude of each transformation in this step is defined in the config file.

- 2-a. Segmentation: this step separates the different detections and returns the contour of each one. This makes it possible to track each element separately, using parallel child loops running in different threads.

- 2-b. Segmentation filtering: a routine calculates the perimeter of each detection and discards those whose perimeter lies outside a range defined in the config file. This removes, for instance, wrong detections of trees or branches, or even of small elements such as leaves, rain or snow that have ended up forming large, irregular shapes.
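Steps 1-b to 2-b operate on the binary mask produced by the background subtractor. The pure-Python sketch below illustrates them on a small mask, assuming a square structuring element and 8-connected segmentation; the radii and perimeter limits are hypothetical stand-ins for the config-file values, and the perimeter is approximated by counting boundary pixels rather than measuring contour length. An OpenCV implementation would more typically apply `cv2.dilate`, `cv2.erode`, `cv2.findContours` and `cv2.arcLength` to the mask returned by a subtractor such as `cv2.createBackgroundSubtractorMOG2`.

```python
from collections import deque
from typing import List, Set, Tuple

Mask = List[List[int]]  # binary image: 1 = moving (foreground), 0 = background
Blob = Set[Tuple[int, int]]  # (row, col) pixels of one detection


def morph(mask: Mask, radius: int, dilate: bool) -> Mask:
    """Dilate (any pixel set in the square window) or erode (all pixels set)."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                mask[yy][xx] if 0 <= yy < h and 0 <= xx < w else 0
                for yy in range(y - radius, y + radius + 1)
                for xx in range(x - radius, x + radius + 1)
            ]
            out[y][x] = int(any(window)) if dilate else int(all(window))
    return out


def segment(mask: Mask) -> List[Blob]:
    """Split the mask into 8-connected components, one per detection."""
    h, w = len(mask), len(mask[0])
    seen: Set[Tuple[int, int]] = set()
    blobs: List[Blob] = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or (y, x) in seen:
                continue
            blob: Blob = set()
            queue = deque([(y, x)])
            seen.add((y, x))
            while queue:  # breadth-first flood fill
                cy, cx = queue.popleft()
                blob.add((cy, cx))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and (ny, nx) not in seen:
                            seen.add((ny, nx))
                            queue.append((ny, nx))
            blobs.append(blob)
    return blobs


def perimeter(blob: Blob) -> int:
    """Approximate perimeter: pixels with at least one 4-neighbour outside the blob."""
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return sum(1 for (y, x) in blob if any((y + dy, x + dx) not in blob for dy, dx in steps))


def detect(mask: Mask, dilate_radius: int, erode_radius: int,
           min_perimeter: int, max_perimeter: int) -> List[Blob]:
    """1-b: dilate then erode; 2-a: segment; 2-b: keep blobs within the perimeter range."""
    cleaned = morph(morph(mask, dilate_radius, dilate=True), erode_radius, dilate=False)
    return [b for b in segment(cleaned) if min_perimeter <= perimeter(b) <= max_perimeter]


if __name__ == "__main__":
    mask = [[0] * 12 for _ in range(12)]
    for y in range(2, 7):  # one element split in two by a missing column
        for x in (2, 3, 5, 6):
            mask[y][x] = 1
    mask[10][10] = 1  # isolated speck (e.g., a raindrop)
    print(len(segment(mask)))  # 3 raw detections
    found = detect(mask, dilate_radius=1, erode_radius=1, min_perimeter=4, max_perimeter=100)
    print(len(found), [perimeter(b) for b in found])
```

Here the dilation merges the two halves of the split element into one 5 × 5 detection. The single-pixel speck survives the equal-radius erosion but is removed by the perimeter range; an erosion radius larger than the dilation radius would remove it already in step 1-b, at the cost of shrinking real detections.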

2.2.2. Motion Analysis: Element Tracking and Classification

Validation of Feature Points

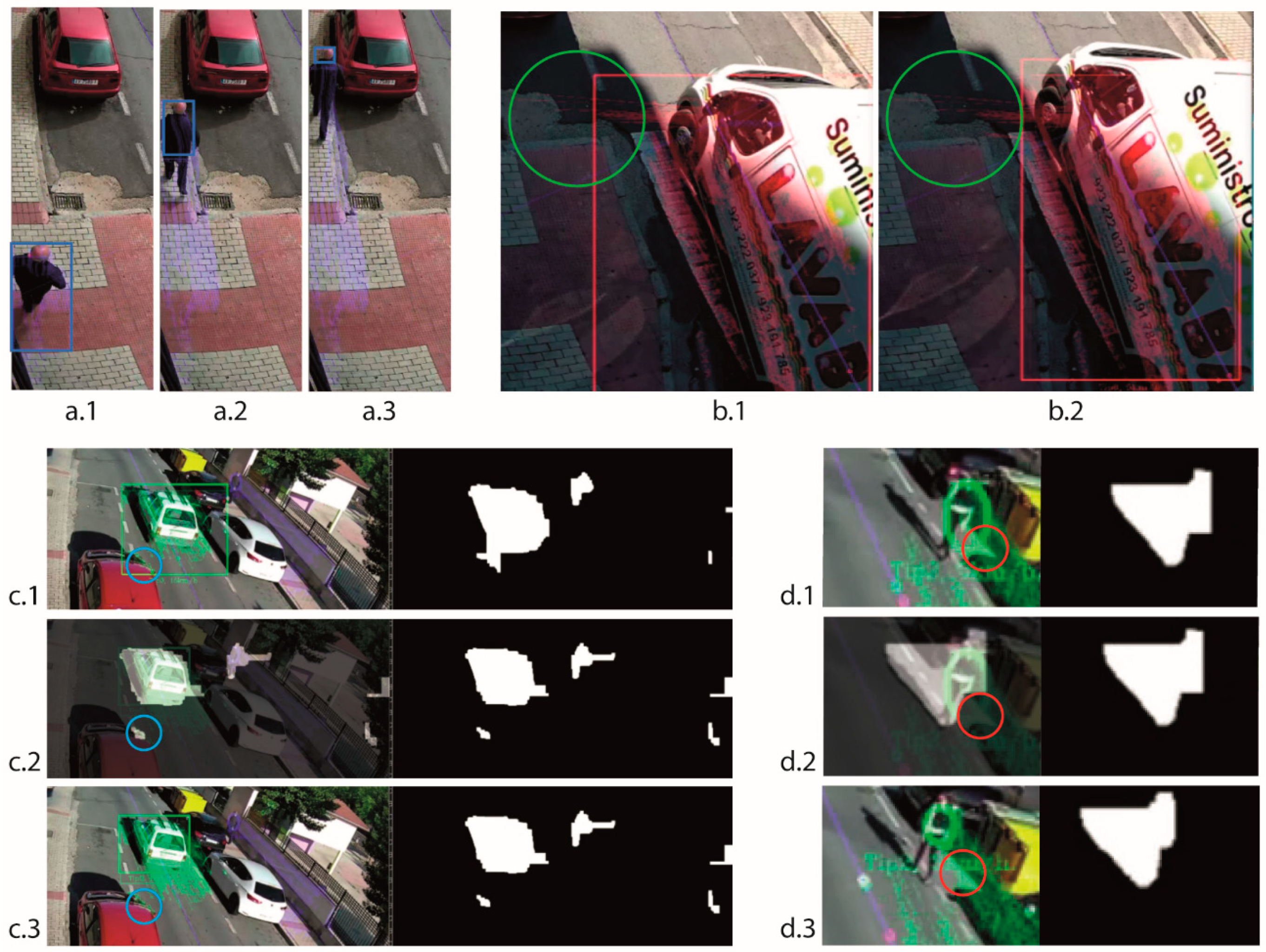

Tracked feature points are discarded in the following cases:

- The LK algorithm returns a status value indicating that the point could not be reliably matched in the new image. For instance, Figure 4a outlines a sequence of images in which LK loses tracking points because the perspective view of the element changes; the bounding rectangle (in blue) shrinks accordingly.

- The displacement of a matched point differs markedly from the typical displacement of the element's feature points as a whole. For instance, Figure 4b shows two consecutive images in which the LK matching of some tracking points (surrounded by a green circle) produces an abnormal jump, so these points are discarded. Figure 4c shows how some tracking points are discarded because they are caused by a reflection: they do not follow the moving element, even though they lie in a motion zone that has detached from the moving element's zone.

- The feature point lies outside the motion zone defined by the motion detection loop, so it does not follow the element contour defined by the main loop (Section 2.2.1). For instance, Figure 4d outlines a sequence of images in which some tracking points (surrounded by the red circle) are discarded because they fall outside the motion zone (Figure 4(d.2)).
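The three rejection criteria can be sketched as a single filter. This minimal Python illustration assumes that the tracker supplies, for each point, its previous and new position and a status flag (as `cv2.calcOpticalFlowPyrLK` does, where 1 means the flow was found), and that the motion zone is available as a set of pixel coordinates. The text does not specify how an "unusual" displacement is measured; the median-based rule and the parameters `k` and `min_dev_px` are assumptions.

```python
import math
from statistics import median
from typing import List, Sequence, Set, Tuple

Point = Tuple[float, float]  # (x, y) in pixels


def validate_points(
    old_pts: Sequence[Point],
    new_pts: Sequence[Point],
    status: Sequence[int],
    motion_zone: Set[Tuple[int, int]],
    k: float = 3.0,
    min_dev_px: float = 1.5,
) -> List[int]:
    """Return the indices of tracked points that pass all three checks."""
    # Check 1: drop points whose LK status reports a failed match.
    kept = [i for i, s in enumerate(status) if s == 1]
    if not kept:
        return []

    # Check 2: drop points whose displacement deviates from the element's median
    # displacement by more than k times the median deviation (with a floor of
    # min_dev_px, so that a rigidly moving element does not reject everything).
    disp = {i: (new_pts[i][0] - old_pts[i][0], new_pts[i][1] - old_pts[i][1]) for i in kept}
    mdx = median(dx for dx, _ in disp.values())
    mdy = median(dy for _, dy in disp.values())
    dev = {i: math.hypot(dx - mdx, dy - mdy) for i, (dx, dy) in disp.items()}
    limit = max(min_dev_px, k * median(dev.values()))
    kept = [i for i in kept if dev[i] <= limit]

    # Check 3: drop points that land outside the current motion zone.
    return [i for i in kept if (round(new_pts[i][0]), round(new_pts[i][1])) in motion_zone]


if __name__ == "__main__":
    old = [(10, 10), (11, 10), (12, 10), (10, 11), (11, 11), (12, 11), (13, 11)]
    new = [(12, 10), (13, 10), (14, 10), (12, 11), (31, 26), (14, 11), (15, 11)]
    status = [1, 1, 1, 0, 1, 1, 1]  # point 3 lost by LK
    zone = {(x, y) for x in range(11, 17) for y in range(9, 13)} - {(14, 11)}
    print(validate_points(old, new, status, zone))
```

In the example, point 3 fails the status check, point 4 (a jump of about 23 px against a 2 px median motion) fails the displacement check, and point 5 lands on a pixel excluded from the motion zone, leaving points 0, 1, 2 and 6.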

Element Classification

- Animals: obstacles that follow an irregular path or are stopped. They appear as elements with a very low speed along the road axis that can move in any direction through the ROI. Their trajectory shows a net displacement in some direction, unlike the reciprocating movements typical of branches moving in the wind. They can also be elements with a fixed position in the ROI and small motions around it, provided that they were previously detected following a path into the ROI (e.g., animals stopped on the road or feeding on the roadside).

- Pedestrians: moving obstacles with a low speed along the road direction, normally pedestrians. They could also be an animal or a broken-down vehicle, which represent an obstacle on the road, or even a broken branch or similar element dragged by the wind along the road.

- Low-speed vehicles: cyclists, agricultural tractors, harvesters, backhoes, etc. Elements whose speed along the road axis is too high for an animal or pedestrian, but which can still increase the risk of a collision. The speed range for this type of element is also set in the config file.

- Normal traffic: cars, trucks, motorcycles, buses, etc. Elements whose speed along the road axis is very high, i.e., above a threshold defined in the config file (normally 35 or 40 km/h).

- Noise: typically produced by the wind or by changes in illumination. These elements have no displacement in the ROI, although they can move without changing their location, e.g., vegetation stirred by the wind or vibrations of the camera itself. They appear in the ROI during the motion detection step of the main loop but, unlike ‘animals’, they do not show a path by which they reached their location. Even if the tracking loop fails to detect an animal, the animal is unlikely to stay without generating a new path that lets the system detect it again within the ‘extra warning time’ controlled by the main loop. An element classified as noise can be tracked again in subsequent iterations to reduce false negatives.
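The class definitions above can be condensed into a rule-based sketch. The Python code below is only an illustration under assumptions not stated in the text: element positions are already expressed in metres in road coordinates (along and across the road axis), "displacement" is the net distance between the first and last tracked positions, `entered_with_path` stands for an earlier detection of a path into the ROI, and all threshold names and values except the 35 km/h traffic limit quoted above are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

Position = Tuple[float, float]  # (along-road, across-road) position in metres


@dataclass
class ClassifierConfig:
    # Hypothetical config values; only the traffic threshold (35 or 40 km/h)
    # is quoted in the text.
    traffic_min_kmh: float = 35.0
    low_speed_vehicle_min_kmh: float = 10.0
    pedestrian_min_kmh: float = 2.0
    min_net_displacement_m: float = 0.5


def classify(track: Sequence[Position], duration_s: float,
             entered_with_path: bool, cfg: ClassifierConfig) -> str:
    """Assign one of the five classes to a tracked element."""
    (a0, c0), (a1, c1) = track[0], track[-1]
    d_along, d_across = a1 - a0, c1 - c0
    if math.hypot(d_along, d_across) < cfg.min_net_displacement_m:
        # Motion without displacement: a stopped animal if it was previously
        # seen entering the ROI along a path, otherwise noise (wind, vibration).
        return "animal" if entered_with_path else "noise"
    v_along_kmh = abs(d_along) / duration_s * 3.6  # m/s -> km/h along the road axis
    if v_along_kmh >= cfg.traffic_min_kmh:
        return "normal traffic"
    if v_along_kmh >= cfg.low_speed_vehicle_min_kmh:
        return "low speed vehicle"
    if v_along_kmh >= cfg.pedestrian_min_kmh and abs(d_along) >= abs(d_across):
        return "pedestrian"  # slow movement mainly in the road direction
    return "animal"  # very slow along the road, moving in any direction


if __name__ == "__main__":
    cfg = ClassifierConfig()
    print(classify([(0.0, 0.0), (10.0, 0.0)], 0.9, False, cfg))  # about 40 km/h
    print(classify([(0.0, 0.0), (5.0, 0.0)], 1.0, False, cfg))   # 18 km/h
    print(classify([(0.0, 0.0), (1.5, 0.2)], 1.0, False, cfg))   # 5.4 km/h along the road
    print(classify([(0.0, 0.0), (0.2, 1.5)], 1.0, False, cfg))   # crossing the road
    print(classify([(0.0, 0.0), (0.1, 0.1)], 1.0, False, cfg))   # swaying branch
```

In this sketch the absence of net displacement, not speed, separates noise from a stopped animal, which follows the distinction drawn in the text between reciprocating motion and a real path into the ROI.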

3. Results and Discussion

3.1. Experimental Test Bench

3.2. Processing Hardware Validation: Times and CPU Usage

3.2.1. Main Loop

3.2.2. Children Threads

3.3. Motion Detection: Background Subtraction Method

3.4. Motion Analysis

3.4.1. Definition and Tracking of Feature Points

3.4.2. Classification of the Elements

3.5. Analysis of False Negatives

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AVC | animal-vehicle collision |
| CPU | central processing unit |
| CV | computer vision |
| DL | deep learning |
| FAST | features from accelerated segment test |
| FIR | far-infrared |
| FPS | frames per second |
| GFTT | good features to track |
| GMG | Godbehere-Matsukawa-Goldberg |
| GMM | Gaussian mixture models |
| HW | hardware |
| KNN | k-nearest neighbours |
| LK | Lucas-Kanade |
| MIR | mid-infrared |
| ML | machine learning |
| MOG | mixture of Gaussians |
| NIR | near-infrared |
| RADS | roadway animal detection system |
| RGB | red-green-blue |
| ROI | region of interest |
| RT | real time |
| SD | standard deviation |
| SW | software |
| WVC | wildlife-vehicle collision |

References

- Bruinderink, G.G.; Hazebroek, E. Ungulate traffic collisions in Europe. Conserv. Biol. 1996, 10, 1059–1067.

- DGT, E. Accidentes Por Animales en la Carretera. Available online: http://revista.dgt.es/es/noticias/nacional/2015/08AGOSTO/0814-Accidentes-causados-por-animales.shtml#.Xk2JLyhKhaQ (accessed on 19 February 2020).

- Sáenz-de-Santa-María, A.; Tellería, J.L. Wildlife-vehicle collisions in Spain. Eur. J. Wildl. Res. 2015, 61, 399–406.

- Mehdizadeh, A.; Cai, M.; Hu, Q.; Alamdar Yazdi, M.A.; Mohabbati-Kalejahi, N.; Vinel, A.; Rigdon, S.E.; Davis, K.C.; Megahed, F.M. A review of data analytic applications in road traffic safety. Part 1: Descriptive and predictive modeling. Sensors 2020, 20, 1107.

- Hu, Q.; Cai, M.; Mohabbati-Kalejahi, N.; Mehdizadeh, A.; Alamdar Yazdi, M.A.; Vinel, A.; Rigdon, S.E.; Davis, K.C.; Megahed, F.M. A review of data analytic applications in road traffic safety. Part 2: Prescriptive modeling. Sensors 2020, 20, 1096.

- Van der Ree, R.; Smith, D.J.; Grilo, C. (Eds.) Handbook of Road Ecology; Wiley-Blackwell: Hoboken, NJ, USA; Chichester, West Sussex, UK, 2015; ISBN 978-1-118-56816-3.

- Rytwinski, T.; Fahrig, L. The impacts of roads and traffic on terrestrial animal populations. Handb. Road Ecol. 2015, 1, 237–246.

- Wilkins, D.C.; Kockelman, K.M.; Jiang, N. Animal-vehicle collisions in Texas: How to protect travelers and animals on roadways. Accid. Anal. Prev. 2019, 131, 157–170.

- Smith, D.J.; van der Ree, R.; Rosell, C. Wildlife crossing structures: An effective strategy to restore or maintain wildlife connectivity across roads. Handb. Road Ecol. 2015, 172–183.

- Glista, D.J.; DeVault, T.L.; DeWoody, J.A. A review of mitigation measures for reducing wildlife mortality on roadways. Landsc. Urban Plan. 2009, 91, 1–7.

- Huijser, M.P.; Kociolek, A.; McGowen, P.; Hardy, A.; Clevenger, A.P.; Ament, R. Wildlife-Vehicle Collision and Crossing Mitigation Measures: A toolbox for the Montana Department of Transportation. Available online: https://rosap.ntl.bts.gov/view/dot/24837 (accessed on 24 July 2019).

- Huijser, M.P.; Mosler-Berger, C.; Olsson, M.; Strein, M. Wildlife warning signs and animal detection systems aimed at reducing wildlife-vehicle collisions. Handb. Road Ecol. 2015, 198–212.

- Huijser, M.P.; Holland, T.D.; Blank, M.; Greenwood, M.C.; McGowen, P.; Hubbard, B.; Wang, S. The Comparison of Animal Detection Systems in a Test-Bed: A Quantitative Comparison of System Reliability and Experiences with Operation and Maintenance. Available online: https://rosap.ntl.bts.gov/view/dot/24820 (accessed on 24 July 2019).

- Grace, M.K.; Smith, D.J.; Noss, R.F. Testing alternative designs for a roadside animal detection system using a driving simulator. Nat. Conserv. 2015, 11, 61–77.

- Grace, M.; Smith, D.; Noss, R. Reducing the threat of wildlife-vehicle collisions during peak tourism periods using a roadside animal detection system. Accid. Anal. Prev. 2017, 109, 55–61.

- Gagnon, J.W.; Dodd, N.L.; Sprague, S.C.; Ogren, K.S.; Loberger, C.D.; Schweinsburg, R.E. Animal-activated highway crosswalk: Long-term impact on elk-vehicle collisions, vehicle speeds, and motorist braking response. Hum. Dimens. Wildl. 2019, 24, 132–147.

- Zhou, D.; Dillon, M.; Kwon, E. Tracking-based deer vehicle collision detection using thermal imaging. In Proceedings of the 2009 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guilin, China, 19–23 December 2009; pp. 688–693.

- MATLAB—MathWorks. Available online: https://www.mathworks.com/products/matlab.html (accessed on 5 February 2020).

- Forslund, D.; Bjärkefur, J. Night vision animal detection. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 737–742.

- Price Tack, J.L.; West, B.S.; McGowan, C.P.; Ditchkoff, S.S.; Reeves, S.J.; Keever, A.C.; Grand, J.B. AnimalFinder: A semi-automated system for animal detection in time-lapse camera trap images. Ecol. Inform. 2016, 36, 145–151.

- Mammeri, A.; Zhou, D.; Boukerche, A. Animal-vehicle collision mitigation system for automated vehicles. IEEE Trans. Syst. Man Cybern. Syst. 2016, 46, 1287–1299.

- Jaskó, G.; Giosan, I.; Nedevschi, S. Animal detection from traffic scenarios based on monocular color vision. In Proceedings of the 2017 13th IEEE International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 7–9 September 2017; pp. 363–368.

- OpenCV. Available online: https://docs.opencv.org/4.1.1/ (accessed on 23 January 2020).

- Dhulekar, P.A.; Gandhe, S.T.; Bagad, G.R.; Dwivedi, S.S. Vision based technique for animal detection. In Proceedings of the 2018 International Conference On Advances in Communication and Computing Technology (ICACCT), Sangamner, India, 8–9 February 2018; pp. 344–348.

- Trnovszký, T.; Sýkora, P.; Hudec, R. Comparison of background subtraction methods on near infra-red spectrum video sequences. Procedia Eng. 2017, 192, 887–892.

- Benraya, I.; Benblidia, N. Comparison of background subtraction methods. In Proceedings of the 2018 International Conference on Applied Smart Systems (ICASS), Medea, Algeria, 24–25 November 2018; pp. 1–5.

- Sewalkar, P.; Seitz, J. Vehicle-to-pedestrian communication for vulnerable road users: Survey, design considerations, and challenges. Sensors 2019, 19, 358.

- Algabri, R.; Choi, M.-T. Deep-learning-based indoor human following of mobile robot using color feature. Sensors 2020, 20, 2699.

- Qiu, Z.; Zhao, N.; Zhou, L.; Wang, M.; Yang, L.; Fang, H.; He, Y.; Liu, Y. Vision-based moving obstacle detection and tracking in paddy field using improved yolov3 and deep SORT. Sensors 2020, 20, 4082.

- Chang, S.; Zhang, Y.; Zhang, F.; Zhao, X.; Huang, S.; Feng, Z.; Wei, Z. Spatial Attention fusion for obstacle detection using mmwave radar and vision sensor. Sensors 2020, 20, 956.

- Yang, Y.; Li, Q.; Zhang, J.; Xie, Y. Iterative learning-based path and speed profile optimization for an unmanned surface vehicle. Sensors 2020, 20, 439.

- Zeng, J.; Ju, R.; Qin, L.; Hu, Y.; Yin, Q.; Hu, C. Navigation in unknown dynamic environments based on deep reinforcement learning. Sensors 2019, 19, 3837.

- Pinchon, N.; Cassignol, O.; Nicolas, A.; Bernardin, F.; Leduc, P.; Tarel, J.P.; Brémond, R.; Bercier, E.; Brunet, J. All-Weather Vision for Automotive Safety: Which Spectral Band? In Advanced Microsystems for Automotive Applications 2018; Dubbert, J., Müller, B., Meyer, G., Eds.; Springer: Cham, Switzerland, 2019; Volume 21, pp. 3–15.

- FS FS España. Available online: https://www.fs.com/es/ (accessed on 5 March 2020).

- FS-VCBOZ-4M—Datasheet FS—Data Center. Online document. Available online: https://img-en.fs.com/file/datasheet/fs-vcboz-4m-datasheet.pdf (accessed on 5 March 2020).

- Cámaras Matriciales—Visión Artificial—Productos. Available online: https://www.infaimon.com/categoria-producto/camaras/camaras-matriciales (accessed on 21 August 2020).

- Intel® Core™ i7-8550U. Available online: https://ark.intel.com/content/www/es/es/ark/products/122589/intel-core-i7-8550u-processor-8m-cache-up-to-4-00-ghz.html (accessed on 24 June 2020).

- WEIDIAN Industrial PC. Available online: https://www.amazon.es/dp/B07WD2WQF2/ref=cm_sw_em_r_mt_dp_aQEuFb4YQP (accessed on 24 June 2020).

- Gama de PC Compacto y soluciones x86|MONOLITIC. Available online: http://www.monolitic.com/soluciones-y-productos/hardware-industrial/pc-compacto (accessed on 21 August 2020).

- Procesador Intel® Core™ i7-7700T (caché de 8 M, hasta 3,80 GHz) Especificaciones de Productos. Available online: https://ark.intel.com/content/www/es/es/ark/products/97122/intel-core-i7-7700t-processor-8m-cache-up-to-3-80-ghz.html (accessed on 21 August 2020).

- BS-B 501F | Mini PC Box fanless de aluminio para la industria. Available online: https://www.belsatisistemas.com/pc-industrial/bs-b-501f (accessed on 22 August 2020).

- Procesador Intel® Core™ i7-8700T (caché de 12 M, hasta 4,00 GHz) Especificaciones de Productos. Available online: https://ark.intel.com/content/www/es/es/ark/products/129948/intel-core-i7-8700t-processor-12m-cache-up-to-4-00-ghz.html (accessed on 23 August 2020).

- Bradski, G.; Kaehler, A. Learning OpenCV: Computer Vision with the OpenCV Library; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008; ISBN 978-0-596-55404-0.

- Stauffer, C.; Grimson, W.E.L. Adaptive background mixture models for real-time tracking. In Proceedings of the 1999 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No PR00149), Fort Collins, CO, USA, 23–25 June 1999; Volume 2, pp. 246–252.

- KaewTraKulPong, P.; Bowden, R. An improved adaptive background mixture model for real-time tracking with shadow detection. In Video-Based Surveillance Systems: Computer Vision and Distributed Processing; Remagnino, P., Jones, G.A., Paragios, N., Regazzoni, C.S., Eds.; Springer US: Boston, MA, USA, 2002; pp. 135–144. ISBN 978-1-4615-0913-4.

- Zivkovic, Z. Improved adaptive Gaussian mixture model for background subtraction. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 26 August 2004; pp. 28–31.

- Zivkovic, Z.; van der Heijden, F. Efficient adaptive density estimation per image pixel for the task of background subtraction. Pattern Recognit. Lett. 2006, 27, 773–780.

- Godbehere, A.B.; Matsukawa, A.; Goldberg, K. Visual tracking of human visitors under variable-lighting conditions for a responsive audio art installation. In Proceedings of the 2012 American Control Conference (ACC), Montreal, QC, Canada, 27–29 June 2012; pp. 4305–4312.

- Rosten, E.; Drummond, T. Machine Learning for high-speed corner detection. In Proceedings of the Computer Vision—ECCV 2006, Graz, Austria, 7–13 May 2006; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin, Heidelberg, 2006; pp. 430–443.

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the 1994 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence (IJCAI), Vancouver, BC, Canada, 24–28 August 1981; pp. 674–679.

- Zhiwei, H.; Yuanyuan, L.; Xueyi, Y. Models of vehicle speeds measurement with a single camera. In Proceedings of the 2007 International Conference on Computational Intelligence and Security Workshops (CISW 2007), Heilongjiang, China, 15–19 December 2007; pp. 283–286.

- Dehghani, A.; Pourmohammad, A. Single camera vehicles speed measurement. In Proceedings of the 2013 8th Iranian Conference on Machine Vision and Image Processing (MVIP), Zanjan, Iran, 10–12 September 2013; pp. 190–193.

- Tang, Z.; Wang, G.; Xiao, H.; Zheng, A.; Hwang, J.-N. Single-camera and inter-camera vehicle tracking and 3D speed estimation based on fusion of visual and semantic features. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 108–1087.

- Sochor, J.; Juránek, R.; Špaňhel, J.; Maršík, L.; Široký, A.; Herout, A.; Zemčík, P. Comprehensive data set for automatic single camera visual speed measurement. IEEE Trans. Intell. Transp. Syst. 2019, 20, 1633–1643.

| Feature | Value |
|---|---|
| Brand | FS |
| Model | FS-VCBOZ-4M |
| Image sensor | OV4689 |
| Effective pixels | 2688 (H) × 1520 (V) (4 Mpx) |
| Compression | H.264/H.265/JPEG/AVI/MJPEG |
| Video delay | 0.3 s (within the LAN) |
| Focus | Auto |
| Infrared distance | 60 m |
| Communication | TCP/IP, ICMP, HTTP, HTTPS, FTP, DHCP, DNS, DDNS, RTP, RTSP, RTCP, NTP, SMTP |
| Operating temperature | −20 °C to +60 °C, RH 95% max |
| Power source | DC 12 V ± 10%, 1000 mA |

| Feature | Control System | Alternative 1: Control System | Alternative 2: Control System |
|---|---|---|---|
| Brand | WEIDIAN | BOXER | BELSATI |
| Model | WE63 8SJ-227XES | 6640M-A1-1010 | BS-B 500F |
| OS | Windows® 10 Pro | Windows® 10 IoT (64-bit) | Windows® 10 IoT (64-bit) |
| CPU | Intel® Core™ i7-8550U, 4 cores, 8 threads, 1.8 GHz up to 4.0 GHz | Intel® Core™ i7-7700T, 4 cores, 8 threads, 2.9 GHz up to 3.8 GHz | Intel® Core™ i7-8700T, 6 cores, 12 threads, 2.4 GHz up to 4.0 GHz |
| RAM | 4 GB DDR4 | 4 GB DDR4 | 4 GB DDR4 |
| Storage | 128 GB SSD | 128 GB SSD | 256 GB SSD |
| GPU | Intel® UHD Graphics 620 | Intel® HD Graphics 630 | Intel® UHD Graphics 630 |
| Communication | Gb LAN, 802.11ac Wi-Fi, Universal Mobile Telecommunications System (UMTS) | Gb LAN, UMTS, GPIOs | Gb LAN, GPIOs |
| Power | DC 12–19 V / 6 A | DC 9–36 V | DC 9–24 V |
| Operating temperature | −10 °C to 60 °C | −20 °C to 50 °C (according to IEC 68-2-14) | 0 °C to 40 °C |
| Anti-vibration | - | 2 Grms, 5–500 Hz | - |
| Certification | - | CE/FCC class A | IP40 |
| Price | 540 € | 1239 € | 1475 € |

| Feature | Value |
|---|---|
| Power source | Photovoltaic panels |
| Energy storage | AGM or gel batteries |
| Autonomy | 2 days |
| Power | 12 V / 170 Wp |

| Feature | Value |
|---|---|
| Signal type | P-24 |
| Warning | 4 dimmable LEDs and text screen |
| Sensors | Photocell |
| Control | Digital input |
| Power | 12 V, 20 W |

| Times (ms), by maximum number of tracking threads | 1 (Watch) | 1 (CPU) | 3 (Watch) | 3 (CPU) | 8 (Watch) | 8 (CPU) |
|---|---|---|---|---|---|---|
| Image reading and decoding | 24.27 | 24.46 | 23.52 | 24.03 | 24.16 | 24.44 |
| Motion detection and filtering | 27.03 | 27.53 | 27.62 | 28.11 | 26.98 | 27.48 |
| Element segmentation and filtering | 1.17 | 1.55 | 1.10 | 1.49 | 1.18 | 1.53 |
| Tracking thread management | 24.56 | 25.06 | 29.85 | 30.34 | 35.60 | 36.11 |
| Results processing | 0.16 | 0.17 | 0.16 | 0.16 | 0.27 | 0.28 |
| Total program cycle | 77.20 | 78.76 | 82.26 | 84.14 | 88.20 | 89.83 |
| Maximum image rate (FPS) | 12.95 | 12.70 | 12.16 | 11.89 | 11.34 | 11.13 |
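Read this way, the maximum image rate row appears to be the reciprocal of the total program cycle time (1000 ms divided by the cycle time in ms). The short check below assumes that relationship, which is our reading of the table rather than something stated in the text, and reproduces every tabulated rate to within 0.01 FPS.

```python
# Total program cycle times (ms) and reported maximum image rates (FPS) from the
# table, keyed by (maximum number of tracking threads, timer).
CYCLES = {
    (1, "watch"): (77.20, 12.95),
    (1, "cpu"): (78.76, 12.70),
    (3, "watch"): (82.26, 12.16),
    (3, "cpu"): (84.14, 11.89),
    (8, "watch"): (88.20, 11.34),
    (8, "cpu"): (89.83, 11.13),
}


def max_fps(cycle_ms: float) -> float:
    """Maximum frame rate if exactly one image is processed per program cycle."""
    return 1000.0 / cycle_ms


if __name__ == "__main__":
    for (threads, timer), (cycle_ms, reported) in CYCLES.items():
        print(f"{threads} thread(s), {timer}: {max_fps(cycle_ms):.3f} FPS (table: {reported})")
```

Going from 1 to 8 tracking threads adds about 11 ms of watch time per cycle, almost all of it in tracking thread management (24.56 ms to 35.60 ms).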

| Times (ms) * | Watch | CPU |
|---|---|---|
| Definition of feature points | 15.90 | 16.42 |
| Points tracking | 11.49 | 12.00 |
| Motion analysis | 0.94 | 1.12 |
| Loop iteration | 12.43 | 13.11 |

| Times (ms) | GFTT * (Watch) | GFTT * (CPU) | FAST * (Watch) | FAST * (CPU) |
|---|---|---|---|---|
| Definition of feature points | 68.32 | 68.82 | 14.47 | 14.95 |
| Points tracking (LK) | 11.79 | 11.79 | 11.74 | 11.73 |
| Results analysis | 1.58 | 1.58 | 1.61 | 1.61 |
| Loop iteration (tracking + analysis) | 13.36 | 13.36 | 13.35 | 13.35 |

| Feature | Good visibility: GFTT | Good visibility: FAST | Bad visibility: GFTT | Bad visibility: FAST |
|---|---|---|---|---|
| Initial feature points | 22.07 | 560.24 | 8.98 | 26.38 |
| CPU definition time (ms) | 68.82 | 14.95 | 66.16 | 3.60 |
| CPU tracking time (ms) | 11.79 | 11.73 | 12.94 | 12.45 |
| Cases where the tracking ends earlier, with respect to the total number of obstacles * | 4.7% | 84.0% | 22.8% | 69.0% |
| Cases where the elements are classified as obstacles *, with respect to the total number of obstacles * | 26.8% | 95.8% | 57.6% | 91.0% |
| Cases where the detector finds enough points to start the tracking and the other detector does not, with respect to the total number of elements tracked (obstacles, cars and noise) | 2.6% | 0.0% | 40.4% | 0.0% |
| Cases where the elements are classified as obstacles * and the other detector does not find points, with respect to the total number of obstacles * | 0.5% | 0.0% | 10.2% | 0.0% |

| Case | Odometer | Video | Algorithm | Algorithm SD | Error * |
|---|---|---|---|---|---|
| 1 | 23 ± 1 | 22.6 ± 1.7 | 22.4 | 0.7 | 1.6% |
| 2 | 23 ± 1 | 23.4 ± 1.8 | 23.3 | | 0.9% |
| 3 | 23 ± 1 | 22.6 ± 1.7 | 21.8 | | 4.5% |
| 4 | 23 ± 1 | 23.4 ± 1.8 | 23.7 | | 1.9% |
| 5 | 23 ± 1 | 23.9 ± 1.9 | 22.9 | | 2.2% |
| 6 | 18 ± 1 | 18.2 ± 1.1 | 16.4 | 0.9 | 9.1% |
| 7 | 18 ± 1 | 18.2 ± 1.1 | 16.4 | | 9.4% |
| 8 | 18 ± 1 | 18.4 ± 1.1 | 18.2 | | 1.1% |
| 9 | 18 ± 1 | 18.4 ± 1.1 | 16.3 | | 10.5% |
| 10 | 18 ± 1 | 19.0 ± 1.2 | 18.0 | | 2.9% |
| 11 | 13 ± 1 | 12.6 ± 0.5 | 10.9 | 0.9 | 14.6% |
| 12 | 13 ± 1 | 13.7 ± 0.6 | 11.2 | | 16.0% |
| 13 | 13 ± 1 | 13.7 ± 0.6 | 12.4 | | 7.4% |
| 14 | 13 ± 1 | 13.6 ± 0.6 | 13.0 | | 2.1% |
| 15 | 13 ± 1 | 14.5 ± 0.7 | 12.4 | | 9.8% |
| 16 | 6 ± 1 | 6.1 ± 0.1 | 5.8 | 0.5 | 4.6% |
| 17 | 6 ± 1 | 6.3 ± 0.1 | 5.4 | | 12.6% |
| 18 | 6 ± 1 | 6.3 ± 0.1 | 6.3 | | 3.3% |
| 19 | 6 ± 1 | 6.4 ± 0.1 | 5.3 | | 14.6% |
| 20 | 6 ± 1 | 6.4 ± 0.1 | 5.3 | | 14.8% |

| Case | Average Duration of the False Negatives ± SD (s) | Average Duration of the Motion Contour Detection ± SD (s) | Average Time for the Elements Tracked (SD ≈ ±1) (s) | Reason Why an Obstacle Is Not Classified | % of Similar Cases * |
|---|---|---|---|---|---|
| 1 | 9 ± 3 | 9 ± 3 | 2; 1.5; 2 | The tracking loses the element a few times before classifying it. | 33.3% |
| 2 | 8 ± 2 | 8 ± 2 | 1; 1.5; 2; 2 | The tracking loses the element a few times, and the element disappears from the ROI. | 25.9% |
| 3 | 13 ± 3 | 13 ± 3 | 3; 3; 3 | The element is so far away that it is classified as noise before it can be classified as an obstacle. | 18.5% |
| 4 | 38 ± 8 | 24 ± 6 | 1.5; 2; 1; 1.5; 1; 1; 0.5; 0.5 | The element appears together with a lot of noise; the tracking then loses the element a few times and the element disappears from the ROI. | 7.4% |
| 5 | 31 ± 6 | 19 ± 4 | 1; 2; 1.5; 2 | The element appears together with a lot of noise; the tracking then loses the element a few times before classifying it. | 11.1% |
| 6 | 110 ± 20 ** | 56 ± 10 ** | 1; 2; 3; ...; 3 | The tracking loses the element 2 or 3 times, and the element is later classified as noise. | 3.7% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gonzalez-de-Soto, M.; Mora, R.; Martín-Jiménez, J.A.; Gonzalez-Aguilera, D. A New Roadway Eventual Obstacle Detection System Based on Computer Vision. Sensors 2020, 20, 5109. https://doi.org/10.3390/s20185109

