1. Introduction

Activity recognition is an important application that has received considerable attention and is widely used in many research fields, such as industry and healthcare [1]. In general, activity recognition identifies the types of people’s daily behavior from large amounts of human behavior information collected through a variety of channels, including cameras [2], microphones [3], sensors [4,5], and so on. Moreover, research on activity recognition has identified four dominant groups of technologies and two emerging technologies: smartphones, wearables, video, electronic components, Wi-Fi, and assistive robots [6]. Wearable sensors embedded in smart devices are now widely used. It is common to collect data with devices that have embedded sensors, such as smartphones [7], because such devices are convenient to carry and suitable for long-term monitoring.

A variety of wearable sensors are used to collect raw data [8,9,10,11], for example, accelerometers, gyroscopes, magnetometers, barometers, light sensors, proximity sensors, and GPS. Paraschiakos et al. focused on activity recognition from accelerometer data by combining ankle and wrist accelerometers [12]. Elsts et al. also used wearable accelerometers in an energy-efficient activity recognition framework [13]. Additionally, Lawal et al. used accelerometer and gyroscope signals from seven different body parts to generate frequency image sequences for predicting human activity [14]. The accelerometer measures the acceleration of the subject, which is used to analyze human motion states, while the gyroscope measures angular velocity, that is, the rate of rotation, from which the rotation angle can be obtained by integration. Thus, combining the two sensors yields more informative data with more representative features.
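As an illustration of how the two tri-axial sensors can be combined, the following minimal Python/NumPy sketch stacks time-aligned accelerometer and gyroscope readings into one matrix and appends the magnitude of each sensor vector. The function name `fuse_imu`, the magnitude channels, and the sample values are hypothetical choices for illustration, not the fusion scheme of the cited works; the sketch assumes both sensors are sampled synchronously at the same rate.

```python
import numpy as np

def fuse_imu(acc: np.ndarray, gyro: np.ndarray) -> np.ndarray:
    """Stack tri-axial accelerometer and gyroscope samples into one feature matrix.

    acc, gyro: arrays of shape (n_samples, 3) with x/y/z readings, assumed
    time-aligned and sampled at the same rate (hypothetical helper).
    Returns shape (n_samples, 8): 3 acc axes, 3 gyro axes, acc magnitude, gyro magnitude.
    """
    if acc.ndim != 2 or acc.shape != gyro.shape or acc.shape[1] != 3:
        raise ValueError("expected two time-aligned (n, 3) arrays")
    acc_mag = np.linalg.norm(acc, axis=1, keepdims=True)    # orientation-independent acceleration
    gyro_mag = np.linalg.norm(gyro, axis=1, keepdims=True)  # overall rotation rate
    return np.hstack([acc, gyro, acc_mag, gyro_mag])

# Hypothetical readings: acceleration in m/s^2, angular velocity in rad/s
acc = np.array([[0.0, 0.0, 9.81], [0.5, 0.0, 9.7], [3.0, 4.0, 0.0], [0.0, 6.0, 8.0]])
gyro = np.array([[0.0, 0.0, 0.0], [0.0, 0.2, 0.0], [0.0, 0.0, 1.5], [0.3, 0.0, 0.4]])
fused = fuse_imu(acc, gyro)
print(fused.shape)  # (4, 8)
```

The magnitude channels are a common addition because they do not depend on how the device is oriented on the body; whether they improve recognition depends on the dataset.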

Activity recognition aims to identify various types of human daily activities, which can be grouped from different points of view. For example, according to their characteristics, the monitored activities can be classified into static activities, dynamic activities [15], and transitional activities; this classification is also adopted in this paper. Current research has mainly focused on static activities (e.g., standing, sleeping) and dynamic activities (e.g., walking, running) and has achieved significant performance. However, transitional activities (e.g., stand-to-sit, sit-to-stand) are more complicated because of their short duration, which easily degrades recognition performance [16]. Although transitional activities partly overlap with static and dynamic activities, detecting them is indispensable in real life. People usually perform a variety of activities over a period of time, and between them occur transitional activities that mark the end of one activity and the beginning of the next. Transitional activities also play an important role in practical applications. For patients, monitoring transitional periods is highly meaningful because it can help diagnose their physical state in real time [17]. For instance, the Sit-to-Stand Test is already used clinically to assess safety and reliability in older intensive care unit patients at discharge [18]. Thus, transitional activity recognition is worth studying. Meanwhile, because transitional activities are short intermediate transitions between two activities, an experiment usually yields far fewer transitional instances than static and dynamic ones, and this sample imbalance lowers accuracy. Several methods can alleviate the imbalance problem. Research has addressed it by improving the recognition model [19]. Besides modifying the model, the problem can also be alleviated from the data processing perspective; accordingly, the resampling technique [20] is also used to mitigate the imbalance.
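Random oversampling, one of the simplest resampling techniques, duplicates randomly chosen minority-class samples until all classes are as large as the largest one. The sketch below is a minimal NumPy version written under that assumption; `random_oversample` and the toy data are hypothetical, and libraries such as imbalanced-learn offer an equivalent `RandomOverSampler`.

```python
import numpy as np

def random_oversample(X, y, seed=0):
    """Duplicate random minority-class samples until every class matches the largest class."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    X_parts, y_parts = [X], [y]
    for cls, count in zip(classes, counts):
        if count == target:
            continue
        idx = np.flatnonzero(y == cls)
        # Draw (with replacement) the number of extra copies this class needs
        extra = rng.choice(idx, size=target - count, replace=True)
        X_parts.append(X[extra])
        y_parts.append(y[extra])
    X_res = np.concatenate(X_parts)
    y_res = np.concatenate(y_parts)
    order = rng.permutation(len(y_res))  # shuffle so duplicates are not grouped at the end
    return X_res[order], y_res[order]

# Hypothetical imbalanced set: 8 "walking" windows vs 2 "sit-to-stand" windows
X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array(["walking"] * 8 + ["sit-to-stand"] * 2)
X_bal, y_bal = random_oversample(X, y)
print(np.unique(y_bal, return_counts=True))  # both classes now have 8 samples
```

Resampling of this kind is normally applied only to the training split, so that duplicated windows do not leak into the test set and inflate accuracy.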

Activity recognition is often performed with conventional machine learning algorithms, such as KNN [21,22], SVM [23], Random Forest (RF) [24], and K-means [25]. The typical procedure for activity recognition with machine learning algorithms consists of data gathering, data preprocessing, data segmentation, feature extraction, classifier training, and testing. Feature extraction is the core of this procedure and has a significant influence on classification performance; in this step, features are often extracted manually. Even though activity recognition based on machine learning algorithms has reached reasonable performance, manual feature extraction costs considerable time and computing resources. Thus, deep learning methods, which can extract features automatically, have received more widespread attention in human activity recognition [26,27].
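The segmentation and manual feature-extraction steps of this pipeline can be sketched as follows. The window length of 128 samples, the 50% overlap, and the four statistics per channel are assumptions chosen only for illustration, and the function names are hypothetical.

```python
import numpy as np

def sliding_windows(signal, window, step):
    """Split a (n_samples, n_channels) signal into windows of shape (window, n_channels)."""
    n = (len(signal) - window) // step + 1
    if n <= 0:
        return np.empty((0, window, signal.shape[1]))
    return np.stack([signal[i * step: i * step + window] for i in range(n)])

def handcrafted_features(windows):
    """Per-channel mean, standard deviation, min, and max of each window (a simple manual feature set)."""
    return np.concatenate([windows.mean(axis=1), windows.std(axis=1),
                           windows.min(axis=1), windows.max(axis=1)], axis=1)

rng = np.random.default_rng(0)
signal = rng.normal(size=(500, 6))  # hypothetical 6-channel recording (3-axis acc + 3-axis gyro)
windows = sliding_windows(signal, window=128, step=64)  # 50% overlap
features = handcrafted_features(windows)
print(windows.shape, features.shape)  # (6, 128, 6) (6, 24)
```

The resulting feature matrix, one row per window, is what a classifier such as KNN, SVM, or RF would be trained on; each window also needs a label, for example the majority activity within it.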

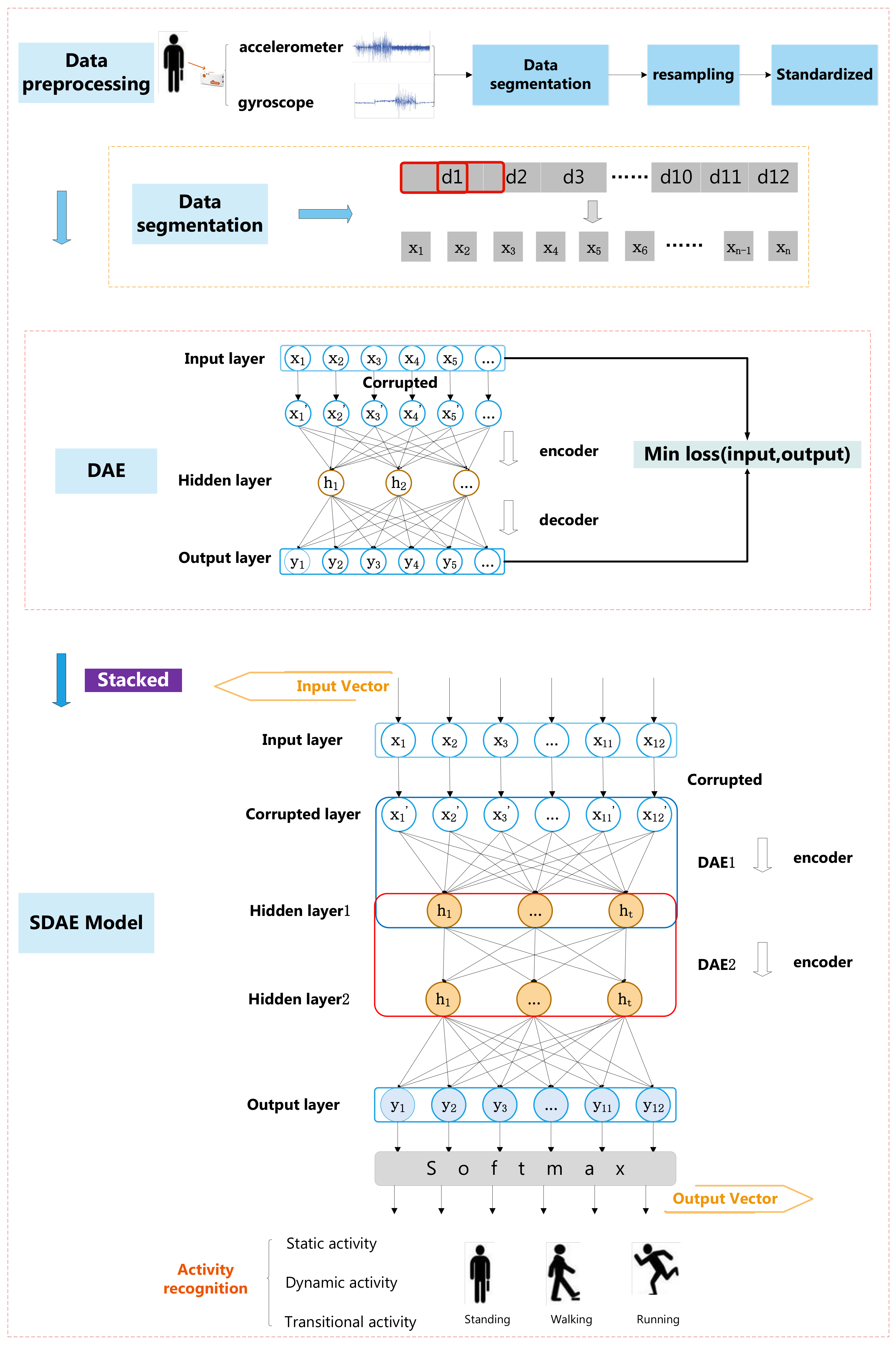

In this paper, the stacked denoising autoencoder (SDAE) [8], a deep learning model, is used to perform activity recognition because of its advantages. Firstly, unlike conventional machine learning methods, it extracts features automatically. Secondly, it reduces data dimensionality to simplify computation while preserving information, thus providing better conditions for learning in the final training stage. Thirdly, it uses an encoder to compress unnecessary data and a decoder to reconstruct the data, which enables it to extract useful higher-level representations [28]. Moreover, our experimental data are collected with wearable sensors consisting of an accelerometer and a gyroscope. Both sensors are tri-axial, so their data are three-dimensional [29] and describe meaningful human movement tendencies in real space. Additionally, we focus not only on static and dynamic activity recognition but also on transitional activity recognition. Thus, the activities in this study comprise three static activities (standing, sleeping, and watching TV), three dynamic activities (walking, running, and sweeping), and six transitional activities (stand-to-sit, sit-to-stand, stand-to-walk, walk-to-stand, lie-to-sit, and sit-to-lie).
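To make the encoder/decoder idea concrete, the sketch below implements a small stacked denoising autoencoder in NumPy with greedy layer-wise pretraining: each layer corrupts its input with masking noise, encodes it, and is trained to reconstruct the clean input, and the codes of one layer become the input of the next. It is a minimal sketch under stated assumptions (sigmoid units, untied decoder weights, squared reconstruction error, full-batch gradient descent, inputs scaled to [0, 1]), not the architecture or hyperparameters used in this paper; the class, function, and data names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class DenoisingAutoencoder:
    """One hypothetical DAE layer: masking noise -> sigmoid encoder -> sigmoid decoder."""

    def __init__(self, n_in, n_hidden, noise=0.2, lr=0.1, seed=0):
        self.rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(n_in)
        self.W = self.rng.normal(0.0, scale, (n_in, n_hidden))   # encoder weights
        self.b = np.zeros(n_hidden)                              # encoder bias
        self.W2 = self.rng.normal(0.0, scale, (n_hidden, n_in))  # decoder weights (untied, assumed)
        self.c = np.zeros(n_in)                                  # decoder bias
        self.noise, self.lr = noise, lr

    def encode(self, X):
        return sigmoid(X @ self.W + self.b)

    def train_step(self, X):
        # Masking noise: zero out a random fraction of the input entries
        X_tilde = X * (self.rng.random(X.shape) >= self.noise)
        H = self.encode(X_tilde)
        X_hat = sigmoid(H @ self.W2 + self.c)              # reconstruction
        loss = np.mean(np.sum((X_hat - X) ** 2, axis=1))   # compared with the CLEAN input
        # Backpropagate the mean squared reconstruction error through both sigmoids
        d_out = 2.0 * (X_hat - X) * X_hat * (1.0 - X_hat) / X.shape[0]
        d_hid = (d_out @ self.W2.T) * H * (1.0 - H)
        self.W2 -= self.lr * H.T @ d_out
        self.c -= self.lr * d_out.sum(axis=0)
        self.W -= self.lr * X_tilde.T @ d_hid
        self.b -= self.lr * d_hid.sum(axis=0)
        return loss

def pretrain_stack(X, layer_sizes, epochs=200):
    """Greedy layer-wise pretraining: each DAE reconstructs the codes of the layer below."""
    layers, inputs, history = [], X, []
    for i, size in enumerate(layer_sizes):
        dae = DenoisingAutoencoder(inputs.shape[1], size, seed=i)
        history.append([dae.train_step(inputs) for _ in range(epochs)])
        layers.append(dae)
        inputs = dae.encode(inputs)  # clean (uncorrupted) codes feed the next layer
    return layers, inputs, history

rng = np.random.default_rng(42)
X = rng.random((200, 3)) @ rng.random((3, 24)) / 3.0  # hypothetical features scaled into [0, 1)
layers, codes, history = pretrain_stack(X, layer_sizes=[12, 6])
print(codes.shape)  # (200, 6)
```

Only the unsupervised pretraining is shown; in a complete SDAE classifier the final codes would feed an output layer (for example softmax) and the whole stack would be fine-tuned with the activity labels.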

The main contributions of this study are as follows:

Besides static and dynamic activity recognition, we use deep learning models to recognize transitional activities, which are more difficult and complicated than the other two types.

We alleviate the sample imbalance caused by the relatively short duration of transitional activities by applying resampling methods, and we compare several resampling methods to find the optimal scenario.

We use a novel framework based on the stacked denoising autoencoder to recognize the three types of activities. It achieves significant performance, and we compare it with other classical methods to verify the effectiveness of the SDAE model for activity recognition, especially for transitional activities.

The rest of this paper is organized as follows:

Section 2 reviews related work on activity recognition and deep learning methods.

Section 3 describes the overall framework and the architecture of the stacked denoising autoencoder model.

Section 4 describes the experimental procedures and the data preprocessing methods, and presents and analyzes the experimental results. Section 5 discusses the results. Finally, Section 6 draws the conclusions.

5. Discussion

The preceding results show the significant performance of the SDAE model on transitional activities and illustrate that accuracy drops when the dataset is unbalanced. The resampling technique provides a feasible way to address this issue and can greatly improve accuracy. We performed multiple sets of comparative experiments covering resampling, hyperparameters, different sensors, multiple methods, and datasets. The resampling results show that resampling balances the sample gap and clearly enhances recognition performance. Additionally, the SDAE model can alleviate overfitting owing to its denoising characteristic. Different sensors have distinct effects on activity recognition, and integrating various wearable sensors can improve performance. It must also be noted that the measurement accuracy of wearable sensors may affect recognition performance. Because we used only one type of accelerometer and one type of gyroscope to collect data in this study, we do not discuss this factor in detail and leave it for future work. Moreover, we analyzed why CNN and LSTM achieve lower performance than SDAE. We assume that these models cannot be applied unchanged to all human daily activities and that their architectures need to be adjusted to the task. Furthermore, the results of SDAE on three public datasets demonstrate the effectiveness and applicability of our framework. From these experimental results, we conclude that SDAE is suitable for recognizing the three types of activities in this paper, especially transitional activities.

In the activity recognition area, transitional activities are as important as other activities, and their recognition can be applied in the healthcare field. For example, it can play a significant role in human fall detection, patient state monitoring, and so on. We believe that more research results on transitional activities will emerge in different fields in the future.

6. Conclusions

In this paper, we used the stacked denoising autoencoder, a deep learning model that automatically extracts useful features and uses an encoder to compress unnecessary data, to obtain more representative features and recognize human daily activities. Besides static and dynamic activities, we also focused on the recognition of transitional activities, which are more difficult to recognize. Furthermore, we used random oversampling to alleviate the sample imbalance caused by the short duration of transitional activities. The experimental results demonstrate that the SDAE model achieves significant performance on transitional activity recognition and outperforms conventional machine learning methods on human daily activity recognition. We also analyzed the influence of each sensor and verified the effectiveness of the SDAE model on three public activity recognition datasets, which indirectly supports the credibility of the results obtained on our dataset.

In the future, we plan to further study the recognition of special human activities that can be applied to specific fields, such as healthcare. We also plan to use more kinds of sensors to collect data in future experiments. Furthermore, given the various channels of data gathering, combining activity recognition with computer vision is also an important research direction.