A Vision-Based Driver Assistance System with Forward Collision and Overtaking Detection †

Abstract

1. Introduction

- A driver assistance system is presented that integrates lane change detection, forward collision warning, and overtaking vehicle identification.

- We propose a new method for front vehicle detection using an adaptive ROI and CDF-based verification.

- The proposed overtaking detection approach uses CNN-based classification to handle the difficult repetitive pattern problem.

- The vision-based system is developed with comprehensive camera calibration procedures and evaluated in real traffic scenes.

2. Related Work

3. Lane Change Detection

3.1. Lane Marking Detection in Daytime

3.2. Image Enhancement for Low Light Scenarios

4. Forward Collision Warning

4.1. Front Vehicle Detection in Daytime

4.2. Front Vehicle Detection in Nighttime

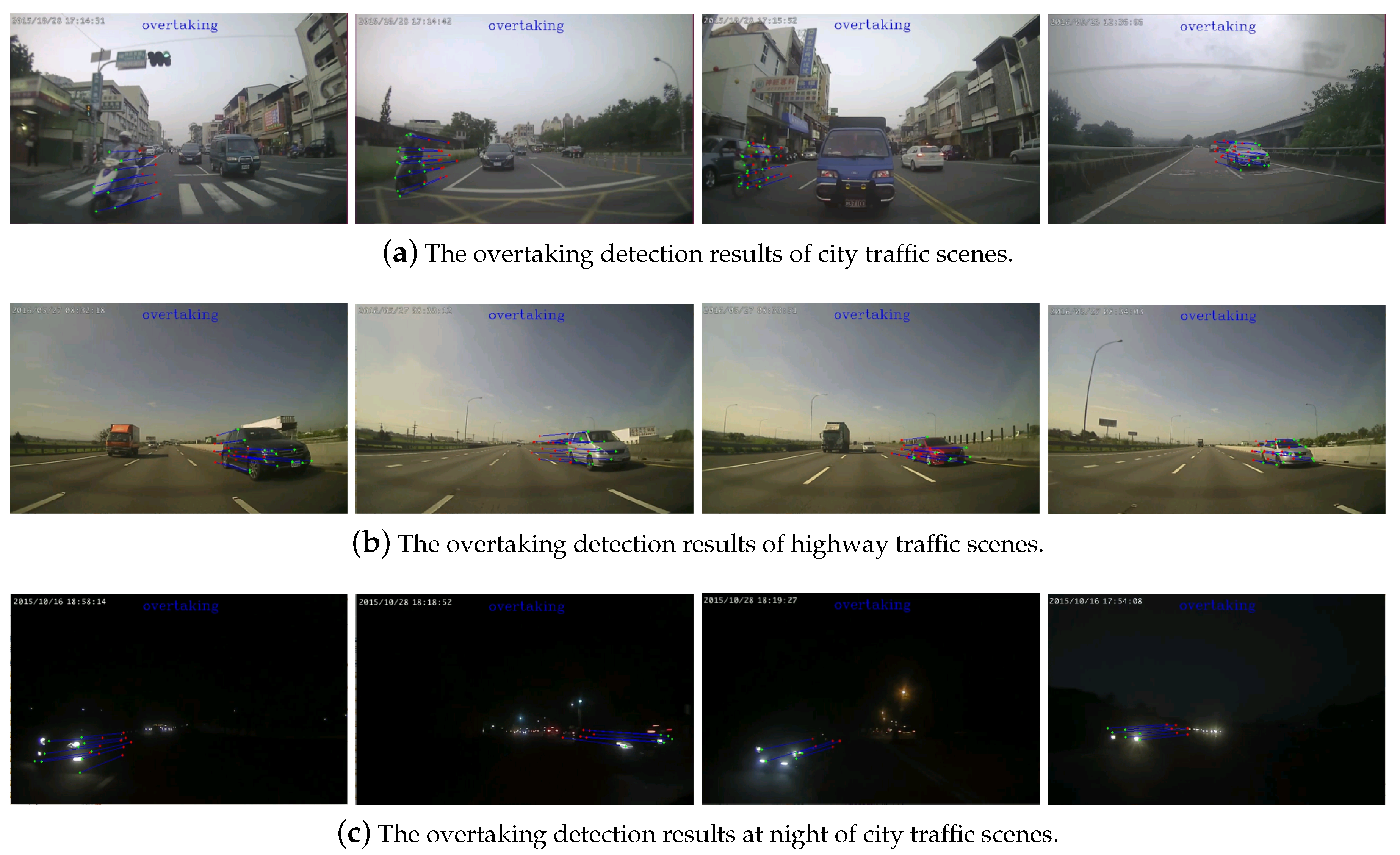

5. Overtaking Vehicle Detection

5.1. Pre-Processing and Segmentation

5.2. Repetitive Pattern Removal Using CNN

6. Implementation and Experiments

6.1. Camera Setting and System Calibration

6.2. Experiments and Evaluation

- The width of the lanes is 3–4 m.

- The vehicle velocity is over 60 km/h.

- The radius of curvature of the lanes is over 250 m.

- The visible range of the camera is over 120 m.

- The toll booth areas are not considered.
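The conditions listed above can be read as a small configuration check. The following is a minimal sketch only: the `SceneConditions` type, its field names, and the `violated_assumptions` helper are hypothetical and not part of the described system, and "over" is interpreted here as a strict inequality.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SceneConditions:
    """Hypothetical description of a driving scene (names are illustrative)."""
    lane_width_m: float
    speed_kmh: float
    curve_radius_m: float
    visible_range_m: float
    in_toll_booth_area: bool


def violated_assumptions(scene: SceneConditions) -> List[str]:
    """Return the listed operating conditions that the scene does not satisfy."""
    problems = []
    if not 3.0 <= scene.lane_width_m <= 4.0:
        problems.append("lane width outside 3-4 m")
    if scene.speed_kmh <= 60.0:
        problems.append("vehicle velocity not over 60 km/h")
    if scene.curve_radius_m <= 250.0:
        problems.append("lane curvature radius not over 250 m")
    if scene.visible_range_m <= 120.0:
        problems.append("camera visible range not over 120 m")
    if scene.in_toll_booth_area:
        problems.append("toll booth areas are not considered")
    return problems


# A highway scene that satisfies every listed condition.
highway = SceneConditions(3.5, 90.0, 400.0, 150.0, False)
print(violated_assumptions(highway))
```

An empty list means the scene falls inside the stated conditions; any returned entry names the condition that fails.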

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Kukkala, V.K.; Tunnell, J.; Pasricha, S.; Bradley, T. Advanced Driver-Assistance Systems: A Path Toward Autonomous Vehicles. IEEE Consum. Electron. Mag. 2018, 7, 18–25.

- MOTC. Taiwan Area National Freeway Bureau; MOTC: Taipei, Taiwan, 2017. Available online: http://www.freeway.gov.tw/ (accessed on 13 July 2020).

- Su, C.; Deng, W.; Sun, H.; Wu, J.; Sun, B.; Yang, S. Forward collision avoidance systems considering driver’s driving behavior recognized by Gaussian Mixture Model. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 535–540.

- Song, W.; Yang, Y.; Fu, M.; Li, Y.; Wang, M. Lane Detection and Classification for Forward Collision Warning System Based on Stereo Vision. IEEE Sens. J. 2018, 18, 5151–5163.

- Butakov, V.A.; Ioannou, P. Personalized Driver/Vehicle Lane Change Models for ADAS. IEEE Trans. Veh. Technol. 2015, 64, 4422–4431.

- Liu, G.; Wang, L.; Zou, S. A radar-based blind spot detection and warning system for driver assistance. In Proceedings of the 2017 IEEE 2nd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 25–26 March 2017; pp. 2204–2208.

- Dai, J.; Wu, L.; Lin, H.; Tai, W. A driving assistance system with vision based vehicle detection techniques. In Proceedings of the 2016 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA), Jeju, Korea, 13–16 December 2016.

- Yeh, T.; Lin, S.; Lin, H.; Chan, S.; Lin, C.; Lin, Y. Traffic Light Detection using Convolutional Neural Networks and Lidar Data. In Proceedings of the 2019 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Taipei, Taiwan, 3–6 December 2019; pp. 1–2.

- Lin, H.Y.; Chang, C.C.; Tran, V.L.; Shi, J.H. Improved traffic sign recognition for in-car cameras. J. Chin. Inst. Eng. 2020, 43, 300–307.

- Khan, S.D.; Ullah, H. A survey of advances in vision-based vehicle re-identification. Comput. Vis. Image Underst. 2019, 182, 50–63.

- Lin, H.Y.; Li, K.J.; Chang, C.H. Vehicle speed detection from a single motion blurred image. Image Vis. Comput. 2008, 26, 1327–1337.

- Brunetti, A.; Buongiorno, D.; Trotta, G.F.; Bevilacqua, V. Computer vision and deep learning techniques for pedestrian detection and tracking: A survey. Neurocomputing 2018, 300, 17–33.

- Braunagel, C.; Rosenstiel, W.; Kasneci, E. Ready for Take-Over? A New Driver Assistance System for an Automated Classification of Driver Take-Over Readiness. IEEE Intell. Transp. Syst. Mag. 2017, 9, 10–22.

- Heimberger, M.; Horgan, J.; Hughes, C.; McDonald, J.; Yogamani, S. Computer vision in automated parking systems: Design, implementation and challenges. Image Vis. Comput. 2017, 68, 88–101.

- Tang, Z.; Wang, G.; Xiao, H.; Zheng, A.; Hwang, J.N. Single-Camera and Inter-Camera Vehicle Tracking and 3D Speed Estimation Based on Fusion of Visual and Semantic Features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake City, UT, USA, 18–22 June 2018.

- Ziebinski, A.; Cupek, R.; Erdogan, H.; Waechter, S. A Survey of ADAS Technologies for the Future Perspective of Sensor Fusion. In Computational Collective Intelligence; Nguyen, N.T., Iliadis, L., Manolopoulos, Y., Trawiński, B., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 135–146.

- Niu, J.; Lu, J.; Xu, M.; Lv, P.; Zhao, X. Robust Lane Detection using Two-stage Feature Extraction with Curve Fitting. Pattern Recognit. 2016, 59, 225–233.

- Lu, Y.; Huang, J.; Chen, Y.; Heisele, B. Monocular localization in urban environments using road markings. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 468–474.

- Xing, Y. Dynamic integration and online evaluation of vision-based lane detection algorithms. IET Intell. Transp. Syst. 2019, 13, 55–62.

- Narote, S.P.; Bhujbal, P.N.; Narote, A.S.; Dhane, D.M. A review of recent advances in lane detection and departure warning system. Pattern Recognit. 2018, 73, 216–234.

- Bruls, T.; Porav, H.; Kunze, L.; Newman, P. The Right (Angled) Perspective: Improving the Understanding of Road Scenes Using Boosted Inverse Perspective Mapping. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 302–309.

- Ying, Z.; Li, G. Robust lane marking detection using boundary-based inverse perspective mapping. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 1921–1925.

- Chen, D.; Tian, Z.; Zhang, X. Lane Detection Algorithm Based on Inverse Perspective Mapping. In Man—Machine—Environment System Engineering; Long, S., Dhillon, B.S., Eds.; Springer: Singapore, 2020; pp. 247–255.

- Kluge, K.; Lakshmanan, S. A deformable-template approach to lane detection. In Proceedings of the Intelligent Vehicles ’95. Symposium, Detroit, MI, USA, 25–26 September 1995; pp. 54–59.

- Deng, Y.; Liang, H.; Wang, Z.; Huang, J. An integrated forward collision warning system based on monocular vision. In Proceedings of the 2014 IEEE International Conference on Robotics and Biomimetics (ROBIO 2014), Bali, Indonesia, 5–10 December 2014; pp. 1219–1223.

- Song, W.; Fu, M.; Yang, Y.; Wang, M.; Wang, X.; Kornhauser, A. Real-time lane detection and forward collision warning system based on stereo vision. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 493–498.

- Di, Z.; He, D. Forward Collision Warning system based on vehicle detection and tracking. In Proceedings of the 2016 International Conference on Optoelectronics and Image Processing (ICOIP), Warsaw, Poland, 10–12 June 2016; pp. 10–14.

- Baek, J.W.; Han, B.; Kang, H.; Chung, Y.; Lee, S. Fast and reliable tracking algorithm for on-road vehicle detection systems. In Proceedings of the 2016 Eighth International Conference on Ubiquitous and Future Networks (ICUFN), Vienna, Austria, 5–8 July 2016; pp. 70–72.

- Moujahid, A.; ElAraki Tantaoui, M.; Hina, M.D.; Soukane, A.; Ortalda, A.; ElKhadimi, A.; Ramdane-Cherif, A. Machine Learning Techniques in ADAS: A Review. In Proceedings of the 2018 International Conference on Advances in Computing and Communication Engineering (ICACCE), Paris, France, 22–23 June 2018; pp. 235–242.

- Fekri, P.; Abedi, V.; Dargahi, J.; Zadeh, M. A Forward Collision Warning System Using Deep Reinforcement Learning; SAE Technical Paper; SAE International: Warrendale, PA, USA, 2020.

- Nur, S.A.; Ibrahim, M.M.; Ali, N.M.; Nur, F.I.Y. Vehicle detection based on underneath vehicle shadow using edge features. In Proceedings of the 2016 6th IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Batu Ferringhi, Malaysia, 25–27 November 2016; pp. 407–412.

- Zebbara, K.; El Ansari, M.; Mazoul, A.; Oudani, H. A Fast Road Obstacle Detection Using Association and Symmetry recognition. In Proceedings of the 2019 International Conference on Wireless Technologies, Embedded and Intelligent Systems (WITS), Fez, Morocco, 3–4 April 2019; pp. 1–5.

- Ma, X.; Sun, X. Detection and segmentation of occluded vehicles based on symmetry analysis. In Proceedings of the 2017 4th International Conference on Systems and Informatics (ICSAI), Hangzhou, China, 11–13 November 2017; pp. 745–749.

- Lim, Y.; Kang, M. Stereo vision-based visual tracking using 3D feature clustering for robust vehicle tracking. In Proceedings of the 2014 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Vienna, Austria, 1–3 September 2014; Volume 2, pp. 788–793.

- Lai, Y.; Huang, Y.; Hwang, C. Front moving object detection for car collision avoidance applications. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 7–11 January 2016; pp. 367–368.

- Chen, D.; Wang, J.; Chen, C.; Chen, Y. Video-based intelligent vehicle contextual information extraction for night conditions. In Proceedings of the 2011 International Conference on Machine Learning and Cybernetics, Guilin, China, 10–13 July 2011; Volume 4, pp. 1550–1554.

- Hultqvist, D.; Roll, J.; Svensson, F.; Dahlin, J.; Schön, T.B. Detecting and positioning overtaking vehicles using 1D optical flow. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 861–866.

- Chen, Y.; Wu, Q. Moving vehicle detection based on optical flow estimation of edge. In Proceedings of the 2015 11th International Conference on Natural Computation (ICNC), Zhangjiajie, China, 15–17 August 2015; pp. 754–758.

- Diaz Alonso, J.; Ros Vidal, E.; Rotter, A.; Muhlenberg, M. Lane-Change Decision Aid System Based on Motion-Driven Vehicle Tracking. IEEE Trans. Veh. Technol. 2008, 57, 2736–2746.

- Wu, B.F.; Kao, C.C.; Li, Y.F.; Tsai, M.Y. A real-time embedded blind spot safety assistance system. Int. J. Veh. Technol. 2012, 2012, 506235.

- Oron, S.; Bar-Hillel, A.; Avidan, S. Extended Lucas-Kanade Tracking. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 142–156.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems 25; Pereira, F., Burges, C.J.C., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; pp. 1097–1105.

- Shi, J. Good features to track. In Proceedings of the 1994 Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Woo, H.; Ji, Y.; Kono, H.; Tamura, Y.; Kuroda, Y.; Sugano, T.; Yamamoto, Y.; Yamashita, A.; Asama, H. Lane-Change Detection Based on Vehicle-Trajectory Prediction. IEEE Robot. Autom. Lett. 2017, 2, 1109–1116.

- Mandalia, H.M.; Salvucci, M.D.D. Using Support Vector Machines for Lane-Change Detection. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2005, 49, 1965–1969.

- Schlechtriemen, J.; Wedel, A.; Hillenbrand, J.; Breuel, G.; Kuhnert, K. A lane change detection approach using feature ranking with maximized predictive power. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 108–114.

- Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.

- Ye, Y.Y.; Hao, X.L.; Chen, H.J. Lane detection method based on lane structural analysis and CNNs. IET Intell. Transp. Syst. 2018, 12, 513–520.

- Shiru, Q.; Xu, L. Research on multi-feature front vehicle detection algorithm based on video image. In Proceedings of the 2016 Chinese Control and Decision Conference (CCDC), Yinchuan, China, 28–30 May 2016; pp. 3831–3835.

- Chen, C.; Chen, T.; Huang, D.; Feng, K. Front Vehicle Detection and Distance Estimation Using Single-Lens Video Camera. In Proceedings of the 2015 Third International Conference on Robot, Vision and Signal Processing (RVSP), Kaohsiung, Taiwan, 18–20 November 2015; pp. 14–17.

- Li, Y.; Wang, F.Y. Vehicle detection based on And–Or Graph and Hybrid Image Templates for complex urban traffic conditions. Transp. Res. Part C Emerg. Technol. 2015, 51, 19–28.

- Yang, B.; Zhang, S.; Tian, Y.; Li, B. Front-Vehicle Detection in Video Images Based on Temporal and Spatial Characteristics. Sensors 2019, 19, 1728.

- Chen, J.; Chen, Y.; Chuang, C. Overtaking Vehicle Detection Based on Deep Learning and Headlight Recognition. In Proceedings of the 2019 IEEE International Conference on Consumer Electronics—Taiwan (ICCE-TW), Yilan, Taiwan, 20–22 May 2019; pp. 1–2.

- Tseng, C.; Liao, C.; Shen, P.; Guo, J. Using C3D to Detect Rear Overtaking Behavior. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 151–154.

| Method | T.P. | F.P. | F.N. | Precision | Recall |
|---|---|---|---|---|---|
| Woo et al. [44] | – | – | – | 96.3% | 100% |
| Mandalia et al. [45] | – | – | – | 80.0% | 80.5% |
| Schlechtriemen et al. [46] | – | – | – | 93.6% | 99.3% |
| Aly et al. [47] | 1955 | 235 | – | 96.4% | – |
| Ye et al. [48] | 1998 | 48 | – | 98.5% | – |
| Proposed method | 2423 | 53 | 80 | 97.9% | 96.8% |

| Method | T.P. | F.P. | F.N. | Precision | Recall |
|---|---|---|---|---|---|
| Qu et al. [49] | – | – | – | 90.3% | 80.5% |
| Chen et al. [50] | – | – | – | 90.8% | 78.8% |
| Li et al. [51] | – | – | – | 90.4% | 79.2% |
| Yang et al. [52] | – | – | – | 91.9% | 83.6% |
| Proposed method | 1510 | 0 | 911 | 100% | 62.4% |
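The precision and recall reported for the "Proposed method" rows of the two comparison tables follow from the listed T.P., F.P., and F.N. counts under the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The short sketch below recomputes them; the function and variable names are illustrative only.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of detections that are correct: TP / (TP + FP)."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Fraction of true instances that are detected: TP / (TP + FN)."""
    return tp / (tp + fn)


# (T.P., F.P., F.N.) taken from the "Proposed method" rows of the two tables.
proposed_rows = {
    "first table": (2423, 53, 80),
    "second table": (1510, 0, 911),
}

for name, (tp, fp, fn) in proposed_rows.items():
    p = 100.0 * precision(tp, fp)
    r = 100.0 * recall(tp, fn)
    print(f"{name}: precision {p:.1f}%, recall {r:.1f}%")
```

In the second table, zero false positives give a precision of 100%, while the 911 missed instances account for the lower recall.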

| | BG | LM | MC | RP | FV | RV |
|---|---|---|---|---|---|---|
| GT | 375 | 252 | 545 | 408 | 480 | 710 |
| BG | 322 | 1 | 2 | 0 | 1 | 11 |
| LM | 2 | 249 | 0 | 2 | 0 | 0 |
| MC | 0 | 0 | 524 | 1 | 0 | 0 |
| RP | 25 | 2 | 0 | 405 | 0 | 3 |
| FV | 13 | 0 | 16 | 0 | 470 | 34 |
| RV | 13 | 0 | 3 | 0 | 9 | 662 |
| Precision | 85.9% | 98.8% | 96.1% | 99.3% | 97.9% | 93.2% |
| Recall | 85.8% | 98.8% | 96.1% | 99.2% | 97.9% | 93.2% |
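As a reading aid for the confusion matrix above, the sketch below checks that each column of counts sums to the corresponding GT entry and recomputes the per-class recall as the diagonal count divided by the column total. Treating columns as ground-truth classes is an assumption made here because the column sums match the GT row; the variable and function names are illustrative.

```python
LABELS = ["BG", "LM", "MC", "RP", "FV", "RV"]

# Counts copied from the table: one inner list per table row (BG..RV),
# one entry per table column (BG..RV).
MATRIX = [
    [322, 1, 2, 0, 1, 11],
    [2, 249, 0, 2, 0, 0],
    [0, 0, 524, 1, 0, 0],
    [25, 2, 0, 405, 0, 3],
    [13, 0, 16, 0, 470, 34],
    [13, 0, 3, 0, 9, 662],
]

# GT row of the table.
GT = [375, 252, 545, 408, 480, 710]


def column_sums(matrix):
    """Sum the counts in each column of a square matrix."""
    return [sum(row[j] for row in matrix) for j in range(len(matrix[0]))]


def per_class_recall(matrix):
    """Diagonal count divided by the column total (columns assumed to be ground truth)."""
    totals = column_sums(matrix)
    return [matrix[j][j] / totals[j] for j in range(len(matrix))]


print(column_sums(MATRIX) == GT)
for label, value in zip(LABELS, per_class_recall(MATRIX)):
    print(f"{label}: {100.0 * value:.2f}%")
```

The recomputed values agree with the Recall row of the table to within rounding in the last digit.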

| Scene | True Overtakes | Detected | Missed | False |
|---|---|---|---|---|
| City traffic | 102 | 102 | 0 | 13 |
| Highway | 79 | 79 | 0 | 1 |
| Night road | 42 | 37 | 5 | 4 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lin, H.-Y.; Dai, J.-M.; Wu, L.-T.; Chen, L.-Q. A Vision-Based Driver Assistance System with Forward Collision and Overtaking Detection. Sensors 2020, 20, 5139. https://doi.org/10.3390/s20185139