1. Introduction

Images or videos taken outdoors usually suffer from an apparent loss of contrast and detail owing to the inevitable adverse effects of bad weather. This spatially varying degradation sharply decreases the performance of computer vision and consumer applications, such as surveillance cameras, autonomous vehicles, traffic sign detection systems, and notably face recognition, which is widely adopted on smartphones and Internet-of-Things devices [1]. Haze removal, also known as dehazing or defogging, addresses this problem by eliminating the undesirable effects of the transmission medium and restoring clear visibility.

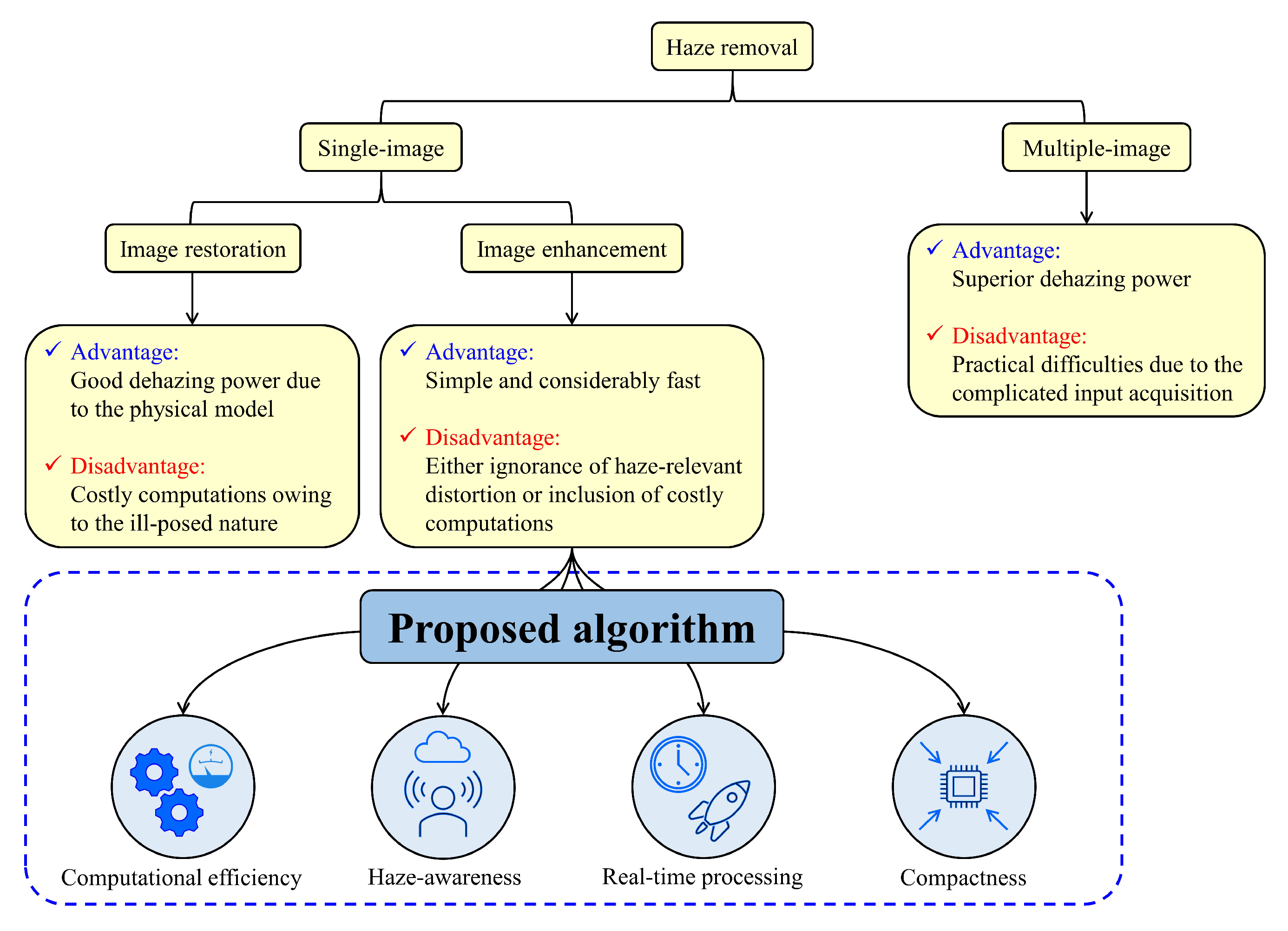

In general, haze removal algorithms fall into two categories: single-image and multiple-image algorithms. Even though the latter no longer attracts much research interest, its superior performance is worthy of attention. According to Rayleigh’s scattering law [2], the intensity of light scattered in the atmosphere is inversely proportional to the fourth power of the wavelength, and therein lies the cause of the wavelength-dependent haze distribution. Thus, the polarizing filter, which allows light waves of a specific polarization to pass through while blocking light waves of other polarizations, was widely used in photography as a first attempt to remove haze. However, polarization filtering alone could not remove the hazy effects because atmospheric scattering occurs across different wavelengths. As a result, Schechner et al. [3] developed a method that took at least two images captured under different polarizations to estimate the airlight and the scaled depth. They then reversed the hazy image formation process to restore the original haze-free scene radiance. On the other hand, Narasimhan and Nayar [4] proposed an approach that could function properly with as few as two images taken in different weather conditions, and Kopf et al. [5] exploited existing georeferenced digital terrain and urban models to remove haze from outdoor images. Notwithstanding their impressive dehazing performance, multiple-image algorithms fell out of favor because of the difficulty of acquiring their inputs, which led to the rapid development of single-image haze removal algorithms.
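Rayleigh’s law can be made concrete with a short calculation. The Python sketch below is illustrative only: it compares how strongly two wavelengths are scattered under the inverse-fourth-power dependence. The 450 nm (blue) and 700 nm (red) values and the function name `relative_rayleigh_scattering` are assumptions chosen for the example, not quantities taken from the cited works.

```python
def relative_rayleigh_scattering(wavelength_nm: float, reference_nm: float) -> float:
    """Scattering strength at `wavelength_nm` relative to `reference_nm`.

    Rayleigh's law: scattered intensity is proportional to 1 / wavelength**4,
    so the ratio for two wavelengths is (reference / wavelength) ** 4.
    """
    if wavelength_nm <= 0 or reference_nm <= 0:
        raise ValueError("wavelengths must be positive")
    return (reference_nm / wavelength_nm) ** 4


# Illustrative (assumed) wavelengths: blue ~450 nm, red ~700 nm.
blue_vs_red = relative_rayleigh_scattering(450.0, 700.0)
print(f"Blue light is scattered about {blue_vs_red:.2f} times more strongly than red light.")
```

With these wavelengths the ratio is (700/450)^4 = 38416/6561, roughly 5.86, so short wavelengths are scattered considerably more strongly than long ones.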

Researchers usually approach single-image haze removal from two perspectives: image restoration and image enhancement. In the former, a physical model, referred to as the Koschmieder atmospheric scattering model [6], describes the formation of hazy images in the atmosphere. The incoming light is not only scattered by microscopic particles such as dust or water droplets, but also attenuated when traversing the transmission medium. Solving the Koschmieder model for the two unknown variables representing these phenomena is therefore an ill-posed problem. He et al. [7] proposed the dark channel prior (DCP), which states that, in non-sky local patches, some pixels have very low intensity in at least one color channel. DCP provided an efficient way to estimate the patch-based extinction coefficients of the transmission medium. The computationally expensive soft-matting [8] was then employed to suppress block artifacts, which made DCP time-consuming. He et al. later proposed a multi-function guided image filter [9], which can replace soft-matting to accelerate DCP at the cost of some degradation in image quality. DCP has also been developed in many directions to overcome its slow processing rate and unpredictable performance in sky regions [10,11,12,13,14,15,16]. Examples include the fast dehazing method proposed by Kim et al. [15] and its 4K-capable intellectual property (IP) presented in Reference [17]. By exploiting a modified hybrid median filter, which has excellent edge-preserving characteristics, to estimate the atmospheric veil, their method eliminated the need for soft-matting refinement, resulting in considerably faster processing at the cost of a slight degradation in image quality.

Based on the observation that scene depth positively correlates with the difference between an image’s brightness and saturation, Zhu et al. [18] developed a linear model known as the color attenuation prior (CAP) and estimated its parameters in a supervised learning manner. Since the transmission of the medium decays exponentially with scene depth, CAP provided a fast way to solve the Koschmieder model. However, according to a detailed investigation conducted by Ngo et al. [19], it suffers from a few shortcomings, such as background noise, color distortion, and reduced dynamic range. Tang et al. [20] utilized another machine learning technique, random forest regression, to calculate the extinction coefficients from a set of multi-scale image features, including DCP, local maximum contrast, hue disparity, and local maximum saturation. Ngo et al. [21] exploited the simplex-based Nelder-Mead optimization to find the optimal transmission map and additionally devised an adaptive atmospheric light to account for the heterogeneity of lightness. Although the dehazing power of the methods proposed by Tang et al. [20] and Ngo et al. [21] is quite impressive, their high computational cost poses practical difficulties.

Recently, deep learning techniques, notably convolutional neural networks (CNNs), have been used to learn the unknown variables of the Koschmieder model from collected data. Cai et al. [22] developed a shallow-but-efficient CNN dubbed DehazeNet, which takes a hazy image and produces its corresponding extinction coefficients. Other studies [23,24,25,26] have attempted to improve performance either by enlarging the receptive field via deeper networks or by developing a more sophisticated loss function as a surrogate for the universally used mean squared error. However, they all share the problem of lacking a real training dataset comprising pairs of hazy and haze-free images, which limits their dehazing power. Interested readers are referred to the comprehensive review provided by Li et al. [27].
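To make the restoration perspective concrete, recall the usual form of the Koschmieder model: I(x) = J(x)t(x) + A[1 − t(x)], where I is the hazy image, J the haze-free scene radiance, A the atmospheric light, and t(x) = exp[−βd(x)] the transmission for extinction coefficient β and scene depth d. The Python/NumPy sketch below follows the dark-channel estimate of He et al. [7] in simplified form, under stated assumptions: the atmospheric light is taken as the mean color of the brightest 0.1% of dark-channel pixels, and the soft-matting or guided-filter refinement [8,9] is omitted, so block artifacts are expected on real images. The defaults (15 x 15 patch, omega = 0.95, t0 = 0.1) and all function names are illustrative choices.

```python
import numpy as np


def min_filter(img2d: np.ndarray, patch: int) -> np.ndarray:
    """Local minimum over a patch x patch window (edge-padded), i.e. min over Omega(x)."""
    r = patch // 2
    h, w = img2d.shape
    padded = np.pad(img2d, r, mode="edge")
    out = np.full((h, w), np.inf)
    for dy in range(patch):
        for dx in range(patch):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Dark channel: minimum over the color channels, then over the local patch."""
    return min_filter(img.min(axis=2), patch)


def estimate_atmospheric_light(img: np.ndarray, dark: np.ndarray, top: float = 0.001) -> np.ndarray:
    """Simplified (assumed) A: mean color of the pixels with the brightest dark-channel values."""
    n = max(1, int(dark.size * top))
    idx = np.argsort(dark.ravel())[-n:]
    return img.reshape(-1, img.shape[2])[idx].mean(axis=0)


def dehaze_dcp(img, patch=15, omega=0.95, t0=0.1, A=None):
    """Invert I = J * t + A * (1 - t) with a DCP transmission estimate (no refinement).

    `img` is an H x W x 3 float array in [0, 1]; returns (J, t, A).
    """
    if A is None:
        A = estimate_atmospheric_light(img, dark_channel(img, patch))
    A = np.asarray(A, dtype=float)
    # DCP assumes dark(J) ~ 0, which gives t = 1 - omega * dark(I / A).
    t = 1.0 - omega * dark_channel(img / np.maximum(A, 1e-6), patch)
    t = np.maximum(t, t0)  # lower bound keeps the division stable in dense haze
    J = (img - A) / t[..., None] + A
    return np.clip(J, 0.0, 1.0), t, A


# Usage on a synthetic scene: every haze-free pixel has one zero channel, as DCP assumes.
rng = np.random.default_rng(0)
clear = rng.uniform(0.0, 1.0, size=(32, 32, 3))
clear[..., 2] = 0.0
hazy = clear * 0.6 + np.array([0.9, 0.9, 0.9]) * 0.4
restored, t_map, A_est = dehaze_dcp(hazy, patch=7)
print("mean transmission:", float(t_map.mean()))
```

When the true A is supplied and omega is set to 1, this inversion recovers a synthetic J exactly; the default omega < 1 deliberately leaves a little haze so that distant objects still look distant.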

Since estimating the two unknown variables in the Koschmieder model is computationally expensive, researchers have also attempted to dehaze images using image enhancement techniques. Instead of relying on a physical model, early methods enhanced low-level features such as contrast, sharpness, and brightness to alleviate the adverse effects of haze. Examples include low-light stretch [28], unsharp masking [29,30,31], and histogram equalization [32,33]. Nonetheless, because these methods did not take the underlying cause of haze-relevant distortion into account, they were suitable only for images obscured by thin haze. With recent efforts to exploit image fusion techniques, this category of haze removal algorithms has become haze-aware. Ancuti et al. [34] developed a method in which the fusion follows a multi-scale scheme, with weight maps derived from luminance, chrominance, and saliency information. Choi et al. [35] investigated haze-relevant features such as contrast energy, image entropy, normalized dispersion, and colorfulness, to name but a few. They then developed a more sophisticated weighting scheme for selectively blending regions with good visibility into the restored image. Galdran [36] also removed hazy effects using multi-scale fusion, but with weight maps comprising only contrast and saturation. Because haze-relevant image features constitute their weight maps, these approaches achieve improved performance and extend their applicability to a wide variety of images with different haze densities. However, the multi-scale fusion process, represented by the Laplacian pyramid, is quite expensive because of the image buffers and line memories required by the up- and down-sampling operations.
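The Laplacian-pyramid fusion used by multi-scale approaches such as [34,36] can be sketched in a few lines of Python/NumPy. This grayscale sketch is a simplification under explicit assumptions: a 2 x 2 box average stands in for the Gaussian low-pass filter, nearest-neighbor repetition stands in for interpolated upsampling, and the function names are hypothetical. It nevertheless shows where the memory cost comes from: every input image and every weight map needs its own stack of intermediate images, one per pyramid level.

```python
import numpy as np


def downsample(img: np.ndarray) -> np.ndarray:
    """Halve resolution with a 2 x 2 box average (stand-in for Gaussian filtering)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def upsample(img: np.ndarray) -> np.ndarray:
    """Double resolution by nearest-neighbor repetition (stand-in for interpolation)."""
    return img.repeat(2, axis=0).repeat(2, axis=1)


def gaussian_pyramid(img: np.ndarray, levels: int) -> list:
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(downsample(pyr[-1]))
    return pyr


def laplacian_pyramid(img: np.ndarray, levels: int) -> list:
    h, w = img.shape
    if h % 2 ** (levels - 1) or w % 2 ** (levels - 1):
        raise ValueError("image size must be divisible by 2**(levels - 1)")
    g = gaussian_pyramid(img, levels)
    # Each band holds the detail lost between adjacent levels; the last band is the coarse residual.
    return [g[i] - upsample(g[i + 1]) for i in range(levels - 1)] + [g[-1]]


def collapse(bands: list) -> np.ndarray:
    """Rebuild an image from its Laplacian bands (exact inverse of laplacian_pyramid)."""
    out = bands[-1]
    for band in reversed(bands[:-1]):
        out = band + upsample(out)
    return out


def multiscale_fusion(images, weights, levels=3):
    """Blend each input's Laplacian bands with the Gaussian pyramid of its normalized weight."""
    w = np.stack(weights).astype(float)
    w /= w.sum(axis=0, keepdims=True) + 1e-12
    fused = None
    for img, wk in zip(images, w):
        bands = laplacian_pyramid(img, levels)
        weight_pyr = gaussian_pyramid(wk, levels)
        contrib = [band * gw for band, gw in zip(bands, weight_pyr)]
        fused = contrib if fused is None else [f + c for f, c in zip(fused, contrib)]
    return collapse(fused)
```

In a streaming hardware implementation, each of these pyramid levels has to be held in buffers, with line memories for the filtering windows, which is the cost pointed out at the end of the paragraph above.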

In this paper, we present a novel and simple image enhancement-based haze removal method capable of producing satisfactory results. Based on the observation that haze often obscures image details and increases brightness, a set of detail-enhanced and under-exposed images derived from a single hazy image is employed as the input to image fusion. The corresponding weight maps are calculated according to DCP, which is well recognized as a good haze indicator. The fusion process then reduces to a simple weighted sum of the images and weight maps. Finally, a post-processing method known as adaptive tone remapping is employed to expand the dynamic range. As a result, the proposed algorithm is computationally efficient and haze-aware, and its compact hardware counterpart is capable of handling videos in real time.
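The overall flow described above can be illustrated with a rough, hypothetical Python/NumPy sketch. It is not the authors’ formulation, which Section 3 presents. Here, as assumptions: the under-exposed images come from gamma correction with made-up exponents, the detail-enhanced image comes from box-blur unsharp masking, each input’s weight is simply one minus its own dark channel (so inputs that look less hazy receive more weight), and adaptive tone remapping is omitted. The sketch only demonstrates that, once DCP-based weights are normalized, single-scale fusion is a per-pixel weighted sum with no pyramid buffers.

```python
import numpy as np


def local_filter(img2d: np.ndarray, patch: int, reduce) -> np.ndarray:
    """Apply `reduce` (e.g. np.min or np.mean) over edge-padded patch x patch windows."""
    r = patch // 2
    h, w = img2d.shape
    padded = np.pad(img2d, r, mode="edge")
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(patch) for dx in range(patch)]
    return reduce(np.stack(windows), axis=0)


def dark_channel(img: np.ndarray, patch: int = 7) -> np.ndarray:
    """Minimum over color channels, then over the local patch."""
    return local_filter(img.min(axis=2), patch, np.min)


def unsharp_mask(img: np.ndarray, amount: float = 1.0, patch: int = 5) -> np.ndarray:
    """Hypothetical detail enhancement: add back the high-pass residual of a box blur."""
    blurred = np.stack([local_filter(img[..., c], patch, np.mean) for c in range(img.shape[2])], axis=-1)
    return np.clip(img + amount * (img - blurred), 0.0, 1.0)


def under_expose(img: np.ndarray, gamma: float) -> np.ndarray:
    """Hypothetical under-exposure via gamma correction (gamma > 1 darkens data in [0, 1])."""
    return img ** gamma


def fuse_single_scale(img: np.ndarray, gammas=(1.5, 2.0, 2.5), patch: int = 7, eps: float = 1e-6) -> np.ndarray:
    """Fuse one detail-enhanced and several under-exposed versions of `img` (H x W x 3, [0, 1])."""
    inputs = [unsharp_mask(img)] + [under_expose(img, g) for g in gammas]
    # Hypothetical DCP-based weights: a lower dark channel suggests less haze, so weight it higher.
    weights = np.stack([1.0 - dark_channel(x, patch) + eps for x in inputs])
    weights /= weights.sum(axis=0, keepdims=True)
    # Single-scale fusion is just a per-pixel weighted sum of the inputs.
    return np.sum(weights[..., None] * np.stack(inputs), axis=0)
```

Because the weights are normalized per pixel, the output is a convex combination of the inputs and stays within [0, 1]; the memory needed is a few small filtering windows rather than full pyramid levels.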

Figure 1 depicts a general classification of haze removal algorithms as a summary of this section.

The remainder of this paper is organized as follows. Section 2 introduces the Koschmieder model and explains the relation between under-exposure and haze removal. Section 3 presents the proposed algorithm by sequentially describing its main computations. Section 4 conducts a comparative evaluation with other state-of-the-art methods. Section 5 describes a hardware architecture for facilitating real-time processing, while Section 6 concludes the paper.