1. Introduction

With advances in technology, obtaining three-dimensional (3D) range images with laser radar (ladar) has become easy and widespread in recent years [1,2]. Recognition of target objects in 3D range images is also widely used in fields such as high-altitude detection, precision guidance, autonomous driving and smart homes, and it retains considerable room for expansion [3,4]. Owing to hardware and cost limitations, the spatial resolution of the acquired 3D range images is typically low. Therefore, improving the spatial resolution of 3D range images through algorithms has become an important research direction in 3D imaging. This raises the question of whether the recognition rate of low-resolution (low-res) range images can be improved by increasing their spatial resolution.

Super-resolution (SR) of images, also called image up-sampling, is a signal processing technique for estimating a high-resolution (high-res) image from a low-res image [5]. SR algorithms for range images are developed on the basis of SR algorithms for intensity images and can be classified into two categories according to their input data. The first uses only single- or multi-frame low-res range images, such as bicubic interpolation (Bicubic) proposed by Hou [6], convex projections proposed by Stark [7], neighbor embedding proposed by Chang [8] and self-guided residual interpolation proposed by Konno [9]. The second uses high-res intensity images of the same scene to guide the SR of range images [10], such as the joint bilateral filter (JBF) proposed by Kopf [11], weighted mode filtering proposed by Min [12], the Markov random field (MRF) proposed by Diebel [13] and total generalized variation (TGV) proposed by Ferstl [14]. Because these algorithms require the high-res intensity image and the low-res range image to be strictly aligned, the two images must first be registered with a registration algorithm. In addition, SR methods based on deep learning have made significant progress in recent years [15,16]. However, current research on the role of SR algorithms in recognition focuses on specific fields such as 2D face recognition [17,18]. To the best of our knowledge, no study has examined the role of SR algorithms in range image recognition.

This work proposes an SR algorithm for range images based on self-guided joint filtering (SGJF). The algorithm adds the interpolation result of the range image to the filter kernel as a guide image, which reduces the influence of intensity image texture on the super-resolved image. In addition, an SR recognition system for range images is constructed and a range image acquisition platform is designed to test the effect of SR algorithms on the recognition of ladar range images. Four SR algorithms are tested: Bicubic, JBF, MRF and the proposed SGJF. The SR recognition system describes the global features of the image with combined moment invariants consisting of seven Hu and three affine moment invariants, and it uses a back propagation neural network (BPNN) to classify the features. The recognition rates of the system under different SR algorithms, SR factors, model library sizes and noise conditions are tested experimentally. The results show that the proposed SGJF algorithm largely avoids the ‘texture transfer’ problem of the JBF algorithm and is robust to noise. All of the tested SR algorithms improve the recognition rate of low-res range images, and a higher SR factor yields a larger improvement. Regardless of the resolution of the model library, a higher resolution of the super-resolved scene yields a higher recognition rate, and using a model library with the same resolution as the super-resolved scene gives the highest recognition rate. Finally, based on the experimental results, suggestions for applying SR algorithms in actual scene recognition are proposed.
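The exact SGJF formulation is given in Section 2. As a rough illustration only, the sketch below assumes that SGJF multiplies the standard joint bilateral up-sampling kernel (a spatial Gaussian on the low-res grid times a Gaussian on the high-res intensity guide) by a third Gaussian computed on the interpolated range image, which is one plausible reading of "adding the interpolation result of the range image as a guide image to the filter kernel". The kernel form, the parameter names (`radius`, `sigma_s`, `sigma_i`, `sigma_d`) and the use of bilinear interpolation for the self-guide are all assumptions, not the authors' implementation; setting `self_guided=False` reduces it to plain joint bilateral up-sampling in the spirit of JBF.

```python
import numpy as np


def bilinear_upsample(img, factor):
    """Up-sample a 2D array by an integer factor with separable linear interpolation."""
    h_lo, w_lo = img.shape
    # Centres of the high-res pixels expressed in low-res pixel coordinates.
    ys = np.clip((np.arange(h_lo * factor) + 0.5) / factor - 0.5, 0, h_lo - 1)
    xs = np.clip((np.arange(w_lo * factor) + 0.5) / factor - 0.5, 0, w_lo - 1)
    rows = np.array([np.interp(xs, np.arange(w_lo), r) for r in img])      # (h_lo, W)
    return np.array([np.interp(ys, np.arange(h_lo), c) for c in rows.T]).T  # (H, W)


def joint_filter_upsample(depth_lo, guide_hi, factor, radius=2, sigma_s=1.0,
                          sigma_i=0.1, sigma_d=0.1, self_guided=True):
    """Joint bilateral up-sampling; self_guided=True adds a range-image term (hypothetical SGJF form)."""
    h_lo, w_lo = depth_lo.shape
    h, w = guide_hi.shape
    assert (h, w) == (h_lo * factor, w_lo * factor)
    depth_interp = bilinear_upsample(depth_lo, factor)  # self-guide image
    out = np.empty((h, w))
    for y in range(h):
        for x in range(w):
            # Position of high-res pixel p on the low-res grid.
            yl, xl = (y + 0.5) / factor - 0.5, (x + 0.5) / factor - 0.5
            cy = int(np.clip(np.floor(yl), 0, h_lo - 1))
            cx = int(np.clip(np.floor(xl), 0, w_lo - 1))
            num = den = 0.0
            for qy in range(max(cy - radius, 0), min(cy + radius + 1, h_lo)):
                for qx in range(max(cx - radius, 0), min(cx + radius + 1, w_lo)):
                    # High-res pixel lying inside low-res pixel q.
                    Qy = min(qy * factor + factor // 2, h - 1)
                    Qx = min(qx * factor + factor // 2, w - 1)
                    wgt = np.exp(-((yl - qy) ** 2 + (xl - qx) ** 2) / (2 * sigma_s ** 2))
                    wgt *= np.exp(-(guide_hi[y, x] - guide_hi[Qy, Qx]) ** 2 / (2 * sigma_i ** 2))
                    if self_guided:
                        wgt *= np.exp(-(depth_interp[y, x] - depth_interp[Qy, Qx]) ** 2
                                      / (2 * sigma_d ** 2))
                    num += wgt * depth_lo[qy, qx]
                    den += wgt
            out[y, x] = num / den if den > 0 else depth_interp[y, x]
    return out


# Toy example: an intensity edge aligned with a range edge, SR factor 2.
guide = np.zeros((16, 16))
guide[:, 8:] = 1.0
depth_lo = np.ones((8, 8))
depth_lo[:, 4:] = 2.0
sgjf = joint_filter_upsample(depth_lo, guide, 2)
jbf = joint_filter_upsample(depth_lo, guide, 2, self_guided=False)
```

In this aligned toy case both variants keep the edge sharp. The extra range term is intended to matter where the intensity image has texture that the range image lacks: there the intensity weight alone would pull in depth values across texture boundaries, whereas the interpolated-range weight suppresses neighbours whose depth differs.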

The remainder of this work is organized as follows.

Section 2 presents the principles of the SR and recognition algorithms we used.

Section 3 presents the experimental process and analyzes the effects of different SR algorithms, SR factors, model library sizes and noise conditions on the recognition rate.

Section 4 provides the conclusions.

3. Experiments and Results

This section is divided into four parts. Section 3.1 introduces the overall experimental steps and the platform used to acquire range images. Sections 3.2, 3.3 and 3.4 present three independent experiments, each described in the order of purpose, organization and result analysis.

3.1. Experimental Steps and Platform

3.1.1. Experimental Steps

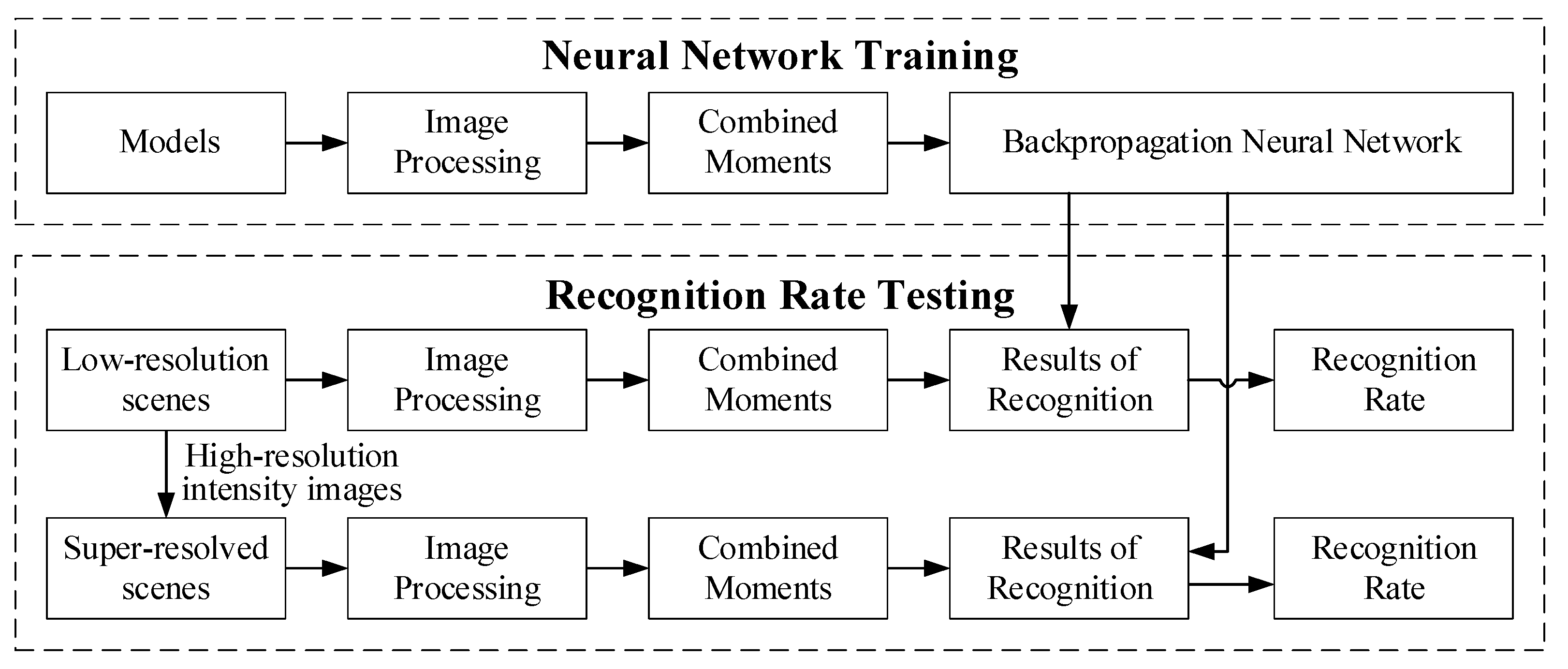

Figure 2 illustrates the operating steps of the designed SR recognition system for range images, which consist of neural network training and recognition rate testing. For neural network training, the models were first denoised through image pre-processing and converted into binary images containing the target shape information. The combined moments were then used as the feature descriptor to calculate the feature vectors of the binary images. Finally, the BPNN was trained on these feature vectors to serve as the feature classifier for scene recognition. For recognition rate testing, feature vectors of the low-res scenes and of their super-resolved images were calculated in the same way. These were then input into the trained BPNN to obtain the classification results, from which the recognition rates were calculated. Finally, the effect of the SR algorithms on recognition was evaluated by comparing the two sets of recognition rates.
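The feature-extraction step can be sketched as follows: a 10-element vector built from the seven Hu moment invariants and three affine moment invariants of the binary image. The text does not say which three affine invariants were used; this sketch assumes the first three Flusser-Suk invariants (I1, I2, I3). Function names are hypothetical, and foreground pixels are assumed to have value 1.

```python
import numpy as np


def central_moments(binary, max_order=3):
    """Central moments mu[(p, q)] of a binary image (foreground pixels = 1)."""
    ys, xs = np.nonzero(binary)
    dx, dy = xs - xs.mean(), ys - ys.mean()
    return {(p, q): float(np.sum(dx ** p * dy ** q))
            for p in range(max_order + 1) for q in range(max_order + 1 - p)}


def hu_invariants(mu):
    """Hu's seven invariants computed from normalized central moments eta_pq."""
    m00 = mu[(0, 0)]
    n = {k: v / m00 ** (1 + (k[0] + k[1]) / 2) for k, v in mu.items()}
    n20, n02, n11 = n[(2, 0)], n[(0, 2)], n[(1, 1)]
    n30, n03, n21, n12 = n[(3, 0)], n[(0, 3)], n[(2, 1)], n[(1, 2)]
    a, b = n30 + n12, n21 + n03
    return np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2) + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2) - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ])


def affine_invariants(mu):
    """First three affine moment invariants (Flusser-Suk), assumed here."""
    m00 = mu[(0, 0)]
    u20, u02, u11 = mu[(2, 0)], mu[(0, 2)], mu[(1, 1)]
    u30, u03, u21, u12 = mu[(3, 0)], mu[(0, 3)], mu[(2, 1)], mu[(1, 2)]
    i1 = (u20 * u02 - u11 ** 2) / m00 ** 4
    i2 = (u30 ** 2 * u03 ** 2 - 6 * u30 * u21 * u12 * u03 + 4 * u30 * u12 ** 3
          + 4 * u21 ** 3 * u03 - 3 * u21 ** 2 * u12 ** 2) / m00 ** 10
    i3 = (u20 * (u21 * u03 - u12 ** 2) - u11 * (u30 * u03 - u21 * u12)
          + u02 * (u30 * u12 - u21 ** 2)) / m00 ** 7
    return np.array([i1, i2, i3])


def combined_features(binary):
    """10-element feature vector: 7 Hu + 3 affine moment invariants."""
    mu = central_moments(binary)
    return np.concatenate([hu_invariants(mu), affine_invariants(mu)])


# An asymmetric L-shaped silhouette as a stand-in for a pre-processed binary image.
shape = np.zeros((20, 20))
shape[3:15, 4:8] = 1
shape[11:15, 8:14] = 1
features = combined_features(shape)
```

Because central moments are computed about the centroid, the vector is unchanged by translation, and both families are unchanged by rotations such as `np.rot90`. This is what lets a model library sampled at discrete azimuth angles match scenes at other orientations.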

3.1.2. Image Acquisition Platform

An image acquisition platform (Figure 3a) was designed to acquire range and intensity images of eight target objects (Figure 3b). The platform was equipped with a Kinect V2, which combines a Time-of-Flight (ToF) camera and an RGB camera, and it could acquire range and intensity images of the target objects at any attitude angle (azimuth angle 0°–360° and view angle 0°–80°). The acquired images were divided into training and test sample sets: the former simulated the model library used to train the BPNN and the latter simulated the scene library used to test the recognition rate. In the experiments, the acquired range image, with a spatial resolution of 320 × 320 pixels, was defined as the original high-res range image (OHRRI), and the intensity image aligned with the OHRRI was defined as the original high-res intensity image (OHRII). In addition, because temperature can strongly affect the reliability of the range data acquired by the Kinect V2 ToF camera, all experimental data were acquired after the Kinect V2 had run for 30 min to avoid temperature effects [30].

Figure 4 shows the range and intensity images of the eight target objects and the binary image obtained after pre-processing.

Table 1 shows the parameters of the SR algorithms. For an objective comparison, the values of the common parameters of JBF and SGJF were set to be the same and the value of

was different according to the SR factor as

. Bicubic used the MATLAB function imresize(). For the BPNN, the input layer had 10 nodes, corresponding to the 10 moment invariants in the feature vector, and the output layer had 8 nodes, corresponding to the 8 target objects. The transfer function of the first layer was ‘Tansig’ and that of the second layer was ‘Purelin’. The number of iterations was set to 500. Because the training sample sets contained different numbers of samples, the number of hidden-layer neurons was adjusted for each set to avoid underfitting or overfitting. All parameter values were chosen as the best results of multiple tests.
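A minimal NumPy sketch of the classifier described above: 10 inputs, a tanh ("tansig") hidden layer, 8 linear ("purelin") outputs and 500 training iterations. The authors used MATLAB, whose feed-forward networks typically default to Levenberg-Marquardt training. For brevity this sketch swaps in plain full-batch gradient descent on the squared error to one-hot targets. The hidden size of 20, the learning rate, the weight initialization, the feature standardization and the class name `BPNN` are hypothetical choices.

```python
import numpy as np


class BPNN:
    """10-H-8 back-propagation network: tanh hidden layer, linear output layer."""

    def __init__(self, n_in=10, n_hidden=20, n_out=8, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, n_out))
        self.b2 = np.zeros(n_out)

    def forward(self, X):
        H = np.tanh(X @ self.W1 + self.b1)   # 'tansig' layer
        return H, H @ self.W2 + self.b2      # 'purelin' layer

    def fit(self, X, labels, epochs=500, lr=0.05):
        """Full-batch gradient descent on the squared error to one-hot targets."""
        T = np.eye(self.W2.shape[1])[labels]
        n = len(X)
        losses = []
        for _ in range(epochs):
            H, Y = self.forward(X)
            E = Y - T
            losses.append(float(np.mean(np.sum(E ** 2, axis=1))))
            dY = 2.0 * E / n                         # gradient of the loss w.r.t. Y
            dH = (dY @ self.W2.T) * (1.0 - H ** 2)   # back-propagate through tanh
            self.W2 -= lr * H.T @ dY
            self.b2 -= lr * dY.sum(axis=0)
            self.W1 -= lr * X.T @ dH
            self.b1 -= lr * dH.sum(axis=0)
        return losses

    def predict(self, X):
        return np.argmax(self.forward(X)[1], axis=1)


def recognition_rate(pred, labels):
    """Fraction of samples assigned to the correct target object."""
    return float(np.mean(np.asarray(pred) == np.asarray(labels)))


# Synthetic stand-in for moment-invariant features: 8 classes x 20 samples.
rng = np.random.default_rng(1)
centers = rng.normal(0.0, 3.0, (8, 10))
labels = np.repeat(np.arange(8), 20)
X = centers[labels] + rng.normal(0.0, 0.3, (160, 10))
X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize features (assumed)
net = BPNN(n_hidden=20)
losses = net.fit(X, labels)
rate = recognition_rate(net.predict(X), labels)
```

Standardizing the inputs matters in practice, because raw Hu and affine invariants can differ by many orders of magnitude and would otherwise dominate the tanh pre-activations unevenly.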

3.2. Arbitrary Attitude Angle Recognition

Without SR, the recognition performance of the system was tested on scenes at arbitrary attitude angles within the acquisition range of the platform, and the influence of the model library size on the recognition rate was analyzed. First, a test sample set simulating a scene library was established. At 10° intervals, range images of each target object were acquired at view angles of 5° to 75° and azimuth angles of 5° to 175°; thus, the test sample set contained 1152 (8 view angles × 18 azimuth angles × 8 objects) range images. Next, training sample sets simulating model libraries were established. Again at 10° intervals, range images of each target object were acquired at view angles of 10° to 80° and azimuth angles of 0° to 180°, plus one image at a view angle of 0°; thus, a total of 153 (8 × 19 + 1) range images of each target object were acquired. Finally, different numbers of these range images were extracted as training samples to establish training sample sets of different sizes. Along the view angle, the images were taken as follows: (1) three at 40° intervals, (2) five at 20° intervals and (3) nine at 10° intervals. Along the azimuth angle, they were taken as follows: (1) three at 90° intervals, (2) four at 60° intervals, (3) seven at 30° intervals, (4) 10 at 20° intervals and (5) 19 at 10° intervals. Combining the view and azimuth angles yielded 15 training sample sets of different sizes.

Table 2 shows the recognition rates of the test sample set obtained by the 15 BPNNs trained on these training sample sets; the number of samples in each training sample set is shown in parentheses.

The table shows that the recognition rate of the test sample set by the BPNN was generally positively related to the training sample set size. However, when few samples were selected along the view angle (or azimuth angle), the recognition rate was low even if many samples were selected along the other direction. Moreover, once the training sample set reached a certain size, the recognition rate increased only slowly or plateaued. To keep the recognition rate above 95%, at least 232 training samples were required; to keep it above 90%, at least 104 training samples were required.
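The sample counts in this section follow from the angular sampling, and the short sketch below reproduces them. It assumes, as the 153-image figure implies, that the 0° view contributes a single image per object regardless of azimuth. The function and variable names are illustrative.

```python
N_OBJECTS = 8


def per_object_count(view_step, az_step):
    """Images per object in a training set sampled at the given angular steps (degrees)."""
    n_views = len(range(0, 81, view_step))     # view angles 0..80 deg, including 0
    n_azimuths = len(range(0, 181, az_step))   # azimuth angles 0..180 deg
    # At a 0 deg view angle the azimuth is irrelevant, so only one image is taken.
    return 1 + (n_views - 1) * n_azimuths


training_sizes = {
    (vs, az): N_OBJECTS * per_object_count(vs, az)
    for vs in (40, 20, 10)
    for az in (90, 60, 30, 20, 10)
}
# Test set: view angles 5..75 deg and azimuths 5..175 deg, both at 10 deg steps.
test_size = N_OBJECTS * len(range(5, 76, 10)) * len(range(5, 176, 10))

for (vs, az), n in sorted(training_sizes.items(), key=lambda kv: kv[1]):
    print(f"view step {vs:2d} deg, azimuth step {az:2d} deg -> {n} samples")
print("test samples:", test_size)
```

Under this counting, the 232-sample threshold corresponds to five view angles (20° steps) combined with seven azimuths (30° steps), and the 104-sample threshold to five view angles combined with three azimuths (90° steps).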

3.3. SR Recognition of Scene-Model with the Same Resolution

3.3.1. Experiment without Noise

The SR recognition of noise-free low-res scenes was tested, with the super-resolved scenes having the same resolution as the models. Two factors are distinguished here: the down-sampling factor, used to simulate low-res scenes, and the SR factor, applied by the SR algorithms. First, OHRRIs and OHRIIs of each target object were acquired (Figure 5a). OHRRIs were then down-sampled by factors of 2, 4 and 8 to simulate low-res scenes (Figure 5b). Finally, the four SR algorithms described above were applied to the low-res scenes, with the SR factor equal to the down-sampling factor (Figure 5c). OHRIIs were used as guide images for the SR algorithms that require intensity image guidance.

The binary images represented the contours of the target objects well, and the quality of the down-sampled images gradually worsened as the factor increased. For the super-resolved images, the differences were small when the factor was small. When the factor was large, the edges of the Bicubic result became blurred, details were hard to discern in the MRF result, the JBF result showed obvious texture transfer, and the SGJF result was less affected by the intensity texture and retained clear details.

To test the recognition rate, the training sample sets were built from OHRRIs and the test sample sets from the down-sampled images and their super-resolved images. Compared with Section 3.2, the attitude angles were simplified: only images at a view angle of 40° were acquired, while the azimuth sampling was unchanged. For the training sample sets, selecting the azimuth angles in the same way as in Section 3.2 yielded five training sample sets of different sizes, containing 24, 32, 56, 80 and 152 range images, respectively. For the test sample sets, 18 images of each target object were first acquired. These were then down-sampled by factors of 2, 4 and 8 to establish three down-sampling test sample sets. Next, the four SR algorithms were applied to the three down-sampling test sample sets, with the SR factor equal to the down-sampling factor, which yielded 12 SR test sample sets (4 algorithms × 3 factors). In total, 15 test sample sets were established, each containing 144 (18 × 8) images.
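The set structure of this experiment can be summarized in a few lines. The sketch assumes that a down-sampling factor d maps the 320 × 320 OHRRI to 320/d pixels per side and that SR with the same factor restores the original size. Names are illustrative.

```python
N_OBJECTS = 8
HIGH_RES = 320
FACTORS = (2, 4, 8)
ALGORITHMS = ("Bicubic", "JBF", "MRF", "SGJF")
AZ_PER_OBJECT = (3, 4, 7, 10, 19)   # azimuth samples per object at the 40 deg view angle

training_sizes = [N_OBJECTS * n for n in AZ_PER_OBJECT]

# Each test set as (name, side length in pixels of its images).
down_sets = [(f"down-sampled x{d}", HIGH_RES // d) for d in FACTORS]
# The SR factor equals the down-sampling factor, so every SR set returns to 320 x 320.
sr_sets = [(f"{alg} x{d}", (HIGH_RES // d) * d) for alg in ALGORITHMS for d in FACTORS]
test_sets = down_sets + sr_sets
images_per_test_set = 18 * N_OBJECTS

for name, res in test_sets:
    print(f"{name:18s} {res} x {res}, {images_per_test_set} images")
```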

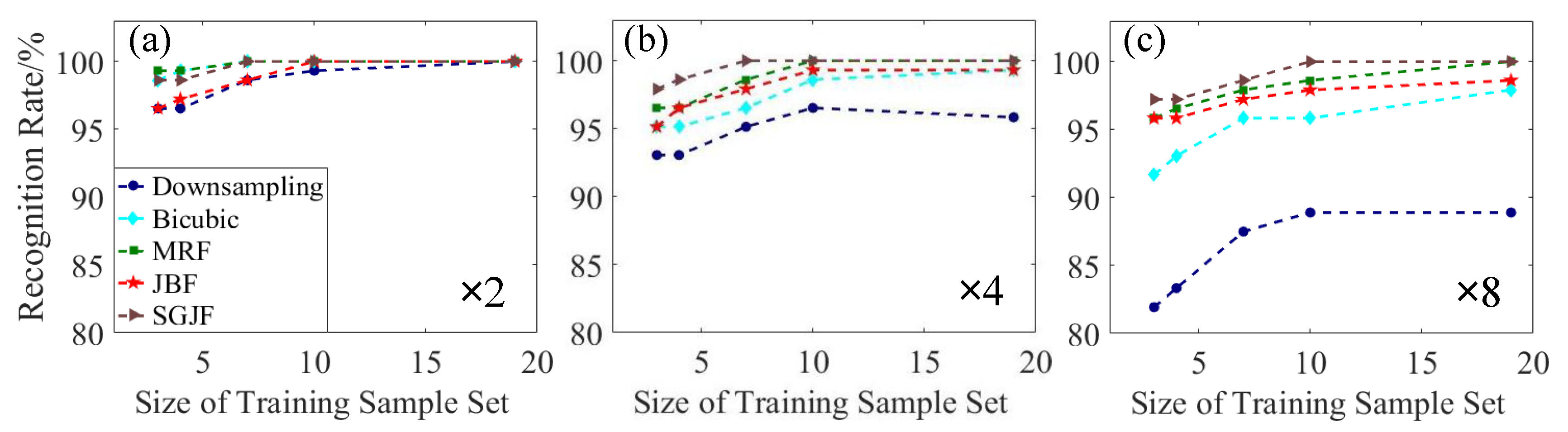

Figure 6 shows the recognition results for the 15 test sample sets obtained by the five BPNNs trained on the five training sample sets.

Figure 6 shows that the recognition rate of every SR test sample set was higher than that of the corresponding down-sampling test sample set, especially when the factor was large. This indicates that the SR algorithms helped improve the recognition rate of the acquired low-res scenes and that a higher factor yielded a larger improvement. However, for each SR algorithm, the recognition rate of the large-factor sample set was lower than that of the small-factor sample set. Specifically, the SGJF test sample set generally maintained a high recognition rate. The JBF test sample set had a lower recognition rate than the others when the factor was small; however, its recognition rate decreased less as the factor increased. The MRF test sample set had the highest recognition rate when the factor was small and was second only to the SGJF test sample set when the factor was large. The recognition rate of the Bicubic test sample set decreased the most as the factor increased.

3.3.2. Experiment with Noise

In practical applications, ladar range images often contain various types of noise, mainly Gaussian noise. To test the influence of noise on the SR algorithms in the system, this section used the same experimental procedure as Section 3.3.1, except that Gaussian noise with a signal-to-noise ratio (SNR) of 35 dB or 25 dB was added to the down-sampled images before the SR algorithms were run.
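Adding noise at a prescribed SNR amounts to scaling the noise variance to the signal power. The sketch below assumes the common definition SNR = 10·log10(mean(I²)/σ²), with I the range image and σ² the noise variance. The paper does not state which power definition was used, and an alternative based on the signal variance would give a different σ. The synthetic range image and the function names are hypothetical.

```python
import numpy as np


def add_gaussian_noise(img, snr_db, rng=None):
    """Return img plus zero-mean Gaussian noise at the requested SNR in dB.

    Assumed definition: SNR = 10*log10(mean(img**2) / noise_variance).
    """
    rng = np.random.default_rng() if rng is None else rng
    img = np.asarray(img, dtype=float)
    signal_power = np.mean(img ** 2)
    noise_var = signal_power / 10.0 ** (snr_db / 10.0)
    return img + rng.normal(0.0, np.sqrt(noise_var), img.shape)


def measured_snr_db(clean, noisy):
    """Empirical SNR (dB) of a noisy image against its clean version."""
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(noisy, dtype=float) - clean
    return 10.0 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2))


# Hypothetical 80 x 80 range image (a sloped surface), noised at the two tested levels.
rng = np.random.default_rng(0)
clean = 1500.0 + 10.0 * np.add.outer(np.arange(80), np.arange(80))
noisy_35 = add_gaussian_noise(clean, 35, rng)
noisy_25 = add_gaussian_noise(clean, 25, rng)
```

A 10 dB drop from 35 dB to 25 dB multiplies the noise standard deviation by about √10 ≈ 3.16.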

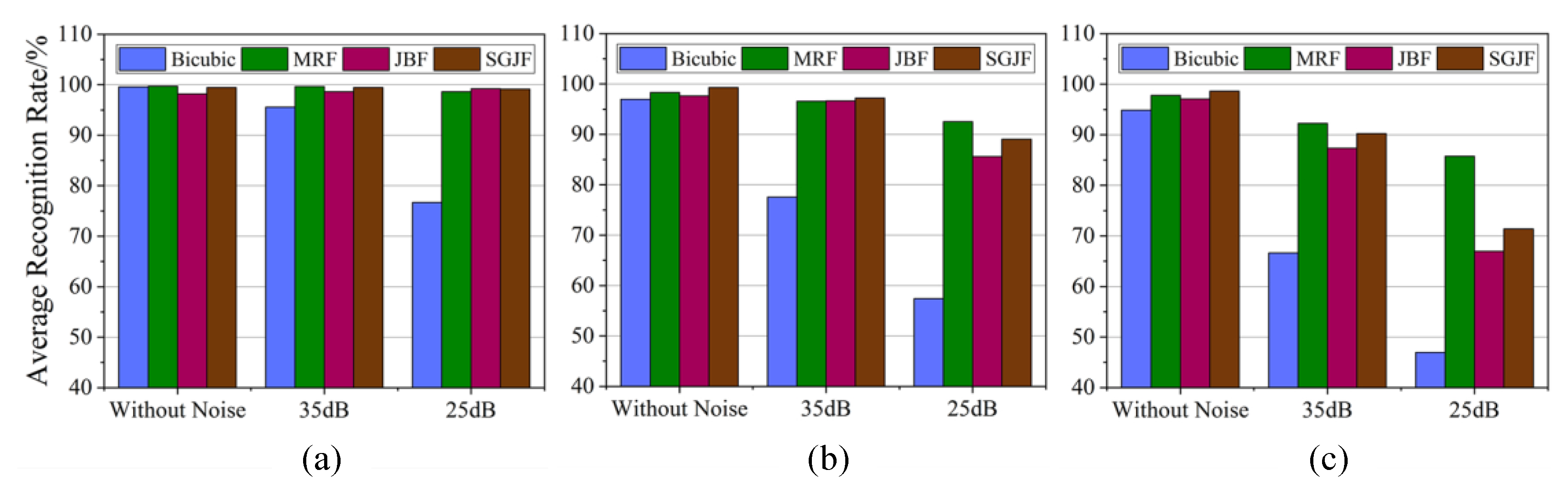

Figure 7 shows the super-resolved images and their binary images when the factor was 4. The Bicubic algorithm could not suppress noise, and the noise seriously affected the extraction of the binary images. The other three algorithms clearly suppressed the noise: the MRF super-resolved range image was the clearest, and its binary image retained smooth edges even at high noise intensity. Both the JBF and SGJF super-resolved range images were somewhat affected at high noise intensity. In addition, the JBF super-resolved range image was also affected by the intensity image texture. These observations were all reflected in the binary images.

The five BPNNs trained on the training sample sets in Section 3.3.1 were again used to test the recognition rate, and the test sample sets were built following the steps in Section 3.3.1, except that noise with different SNRs was added. According to the results of Section 3.3.1, the recognition rate increased with the training sample set size and the recognition rate curves of the different SR algorithms essentially did not cross. Therefore, the average recognition rate (i.e., the mean of the recognition rates of the five BPNNs) was used to describe the recognition results of the system under noisy conditions, as shown in Figure 8.

Overall, the average recognition rate decreased faster as the factor increased, and faster at 25 dB than at 35 dB. Specifically, the Bicubic algorithm was extremely sensitive to noise and was unsuitable when both the noise intensity and the factor were large. The MRF algorithm performed best under noise, and the recognition rate of its test sample sets decreased only slowly as the noise intensity and factor increased. However, it required more parameters to be tuned and was computationally more complex. The JBF and SGJF algorithms were also robust to noise. When the factor or the noise intensity was low, they were only slightly affected. However, when the factor and the noise intensity increased simultaneously, their average recognition rates dropped significantly and the gap between JBF and SGJF widened. Taken together, the average recognition rate of the SGJF algorithm was significantly lower than that of the MRF algorithm only when both the noise intensity and the factor were at their maximum, whereas it was superior or similar to the other algorithms in all other cases. Therefore, the SGJF algorithm had very good overall recognition performance.

3.4. SR Recognition of Scene-Model with Multiple Resolutions

This experiment tested, without noise, the recognition of multi-resolution (multi-res) super-resolved scene libraries by multi-res model libraries, where the resolution of a super-resolved scene library could be lower than, equal to or higher than that of the model library. First, OHRRIs and OHRIIs of each target object were acquired (Figure 9(a1,b1)). OHRRIs were then down-sampled by factors of 2, 4 and 8 (Figure 9(a2–a4)), and the image down-sampled by a factor of 8 was called the original low-res range image (OLRRI). Meanwhile, the OHRIIs down-sampled by factors of 2 and 4 (Figure 9(b2,b3)), together with the OHRIIs themselves, were used as guide images. Finally, the SGJF algorithm, owing to its very good overall recognition performance, was applied to the OLRRIs with SR factors of 2, 4 and 8 (Figure 9(c1–c3)). The smaller the down-sampling factor and the larger the SR factor, the clearer the image and the smoother the edges of its binary image.

To test the recognition rate, training and test sample sets were established with the same attitude angles as in Section 3.3.1. The training sample sets covered four resolutions: the OHRRIs and their images down-sampled by factors of 2, 4 and 8 (the last being the OLRRIs). For each resolution, five training sample sets of different sizes were established, giving 20 training sample sets in total. The four test sample sets also had different resolutions: they consisted of the OLRRIs and their super-resolved images obtained by the SGJF algorithm with SR factors of 2, 4 and 8.
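The resolution bookkeeping of this experiment is summarized below. It assumes that each factor divides the side length exactly (320 → 160 → 80 → 40). The guide-image mapping is inferred from the text rather than stated there: the SR output of a 40 × 40 OLRRI at factor s has side 40·s, so the matching guide is the OHRII down-sampled by 8/s. Names are illustrative.

```python
HIGH_RES = 320
OLRRI_FACTOR = 8                 # OLRRI = OHRRI down-sampled by 8
SIZES_PER_RESOLUTION = 5

# Model libraries: OHRRI and its down-sampled versions.
train_res = [HIGH_RES // d for d in (1, 2, 4, 8)]
# Scene libraries: OLRRI itself (factor 1) and its SGJF outputs.
olrri_res = HIGH_RES // OLRRI_FACTOR
test_res = [olrri_res * s for s in (1, 2, 4, 8)]


def guide_downsampling(sr_factor):
    """Down-sampling applied to the OHRII so the guide matches the SR output size."""
    return OLRRI_FACTOR // sr_factor


n_training_sets = len(train_res) * SIZES_PER_RESOLUTION

for s in (2, 4, 8):
    print(f"SR x{s}: output {olrri_res * s} px, guide = OHRII / {guide_downsampling(s)}")
```

Because the training and test resolutions form the same set {40, 80, 160, 320}, every scene library has exactly one model library at its own resolution. This is what allows the matched and mismatched cases to be compared in Figure 10.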

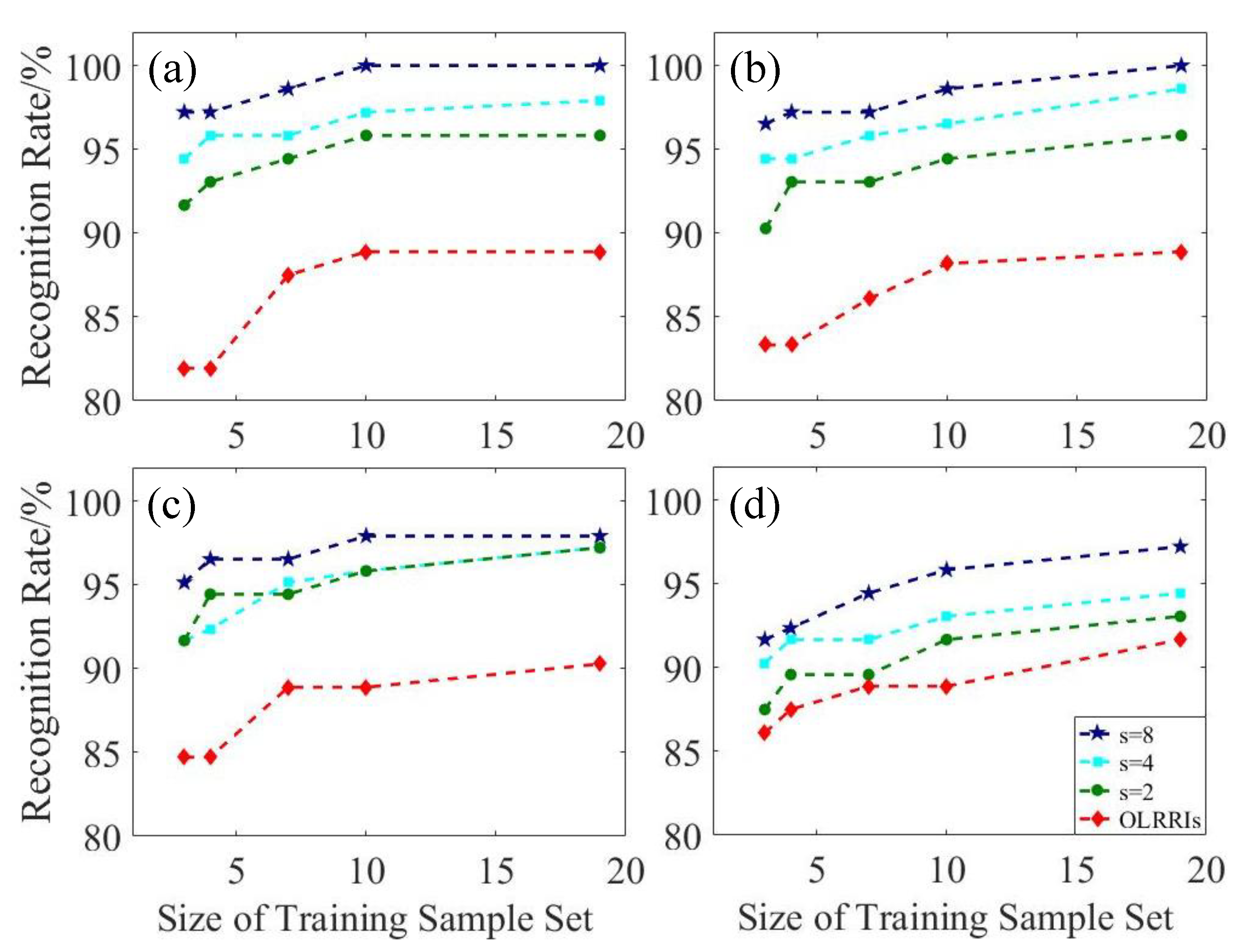

Figure 10 shows the recognition results for the four test sample sets obtained by the 20 BPNNs trained on the training sample sets.

Regardless of the resolution of the training sample set used to train the BPNN, the higher the resolution of the test sample set (i.e., the greater the SR factor), the higher the recognition rate. This can be explained by the fact that the differences between test samples increase with resolution, so higher-resolution test samples are easier to distinguish and recognize. Furthermore, when the results for each test sample set were compared across training sample sets of different resolutions, the recognition rate was higher when the test and training sample sets had the same resolution than when their resolutions differed.

Based on the experimental results, the following guidelines for using SR algorithms in actual scene recognition are obtained. (1) When a low-res scene is acquired and can be super-resolved, it should be super-resolved. (2) If multiple SR factors are available, the largest one should be selected, even if the resolution of the super-resolved scene then exceeds that of the models in the model library. (3) If only one SR factor is available but multiple model libraries with different resolutions exist, the model library with the same resolution as the super-resolved scene should be selected.
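The three guidelines can be condensed into a small decision helper. This is a hypothetical sketch: resolutions are side lengths in pixels, a scene that cannot be super-resolved is treated as SR factor 1, and when several libraries exist but none matches the super-resolved scene the guidelines give no rule, so the helper returns `None` for the library in that case.

```python
def choose_sr_setup(scene_res, sr_factors, model_resolutions):
    """Return (SR factor, super-resolved side length, model library resolution or None)."""
    # Guidelines (1) and (2): super-resolve whenever possible, with the largest factor.
    factor = max(sr_factors, default=1)
    target = scene_res * factor
    if target in model_resolutions:
        model = target                    # guideline (3): matching resolution
    elif len(model_resolutions) == 1:
        model = model_resolutions[0]      # single library: use it even if it differs
    else:
        model = None                      # case not covered by the guidelines
    return factor, target, model


print(choose_sr_setup(40, [2, 4, 8], [40, 80, 160, 320]))
```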

4. Conclusions

This work proposed an SR algorithm for range images based on SGJF, which reduces the effect of intensity image texture on super-resolved images by adding the range information of the range image as a coefficient of the filter. We also constructed an SR recognition system for range images: range and intensity images of eight military target objects were acquired with the image acquisition platform to establish training and test sample sets simulating the model and scene libraries, and the Bicubic, JBF, MRF and SGJF algorithms were used to test the effect of SR on the recognition of ladar range images. First, the recognition of scenes with arbitrary attitude angles by model libraries of different sizes was tested. The results demonstrated that the recognition rate was positively correlated with the model library size, although beyond a certain size it increased only slowly or plateaued. Next, the recognition performance of the system under different noise conditions was tested with the super-resolved scenes and the models at the same resolution. The results demonstrated that all the tested SR algorithms help improve the recognition rate of low-res scenes and that a larger factor yields a larger improvement. The proposed SGJF algorithm largely avoids the problem of ‘texture transfer’. It maintained a high recognition rate without noise and showed robustness after noise was added. Therefore, the SGJF algorithm has very good overall recognition performance. Finally, the recognition of multi-res super-resolved scene libraries by multi-res model libraries was tested. The results demonstrated that, regardless of the resolution of the model library, increasing the resolution of the super-resolved scene also increased the recognition rate. In addition, using the model library with the same resolution as the super-resolved scene gave the highest recognition rate.
Based on the experimental results, suggestions for using SR algorithms in actual scene recognition were proposed. Our future work includes studying the application of SR algorithms to the recognition of radar range images based on local features.