NAS-HRIS: Automatic Design and Architecture Search of Neural Network for Semantic Segmentation in Remote Sensing Images

Abstract

1. Introduction

- Neural architecture search (NAS) for high-resolution (HR) remote sensing image segmentation is explored for the first time;

- Our work embeds a directed acyclic graph (DAG) into the search space and designs a differentiable search process, which allows an end-to-end search rule to be learned by gradient descent optimisation [38]. We use the Gumbel-Max trick to draw samples efficiently from a discrete probability distribution, which improves search efficiency and reduces memory consumption;

- We provide a new HR remote sensing image segmentation dataset, the Beijing building dataset (BBD), which can be useful for image segmentation applications such as building segmentation for urban planning (Figure 1);

- We ran the search on a variety of remote sensing images and trained on aerial images, satellite images and Google Earth images. On the WHU dataset, NAS-HRIS achieved 98.52% pixel accuracy (PA) and 90.44% Mean Intersection over Union (MIoU).
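The Gumbel-Max trick mentioned in the contributions can be illustrated with a minimal sketch. It is not the NAS-HRIS implementation: the three-way distribution `example_probs` and all function names are hypothetical, chosen only to show that adding Gumbel(0, 1) noise to log-probabilities and taking the argmax yields samples from the underlying categorical distribution.

```python
import math
import random


def sample_gumbel(rng):
    """Return one Gumbel(0, 1) sample, computed as -log(-log(U)), U ~ Uniform(0, 1)."""
    u = rng.random()
    while u == 0.0:  # guard against log(0)
        u = rng.random()
    return -math.log(-math.log(u))


def gumbel_max_sample(logits, rng):
    """Draw one index from softmax(logits) via the Gumbel-Max trick."""
    perturbed = [logit + sample_gumbel(rng) for logit in logits]
    return max(range(len(logits)), key=perturbed.__getitem__)


def empirical_frequencies(logits, num_samples, seed=0):
    """Estimate the sampling distribution by repeated Gumbel-Max draws."""
    rng = random.Random(seed)
    counts = [0] * len(logits)
    for _ in range(num_samples):
        counts[gumbel_max_sample(logits, rng)] += 1
    return [count / num_samples for count in counts]


# Hypothetical probabilities over three candidate operations (illustration only).
example_probs = [0.6, 0.3, 0.1]
example_logits = [math.log(p) for p in example_probs]
example_freqs = empirical_frequencies(example_logits, num_samples=20000)
```

With enough draws, `example_freqs` should approach `example_probs`. Only one candidate is selected per draw, which is a plausible reading of why this kind of sampling keeps memory use low during search.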

2. Methodology

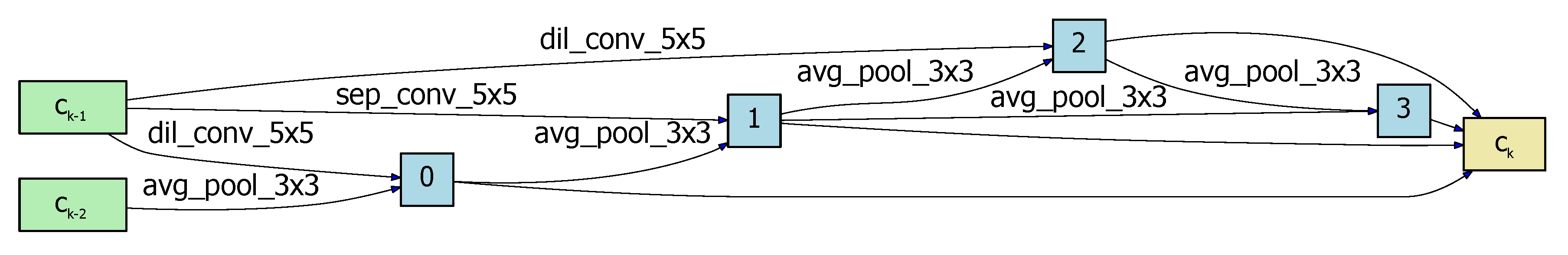

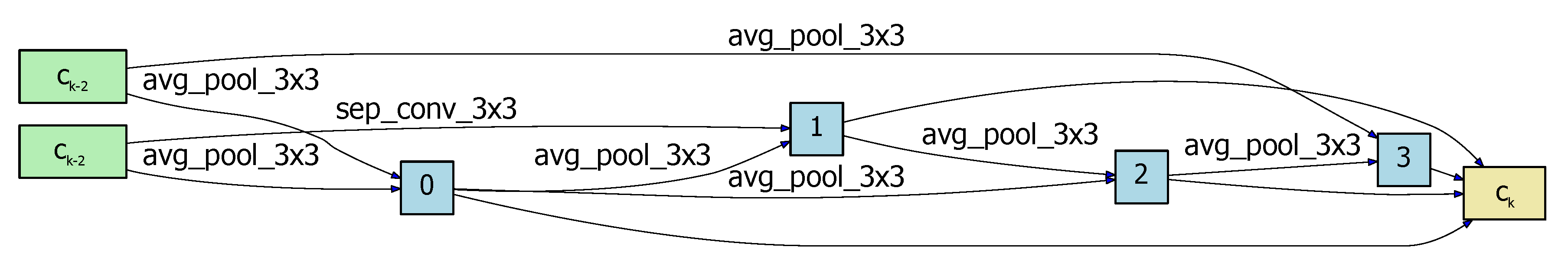

2.1. Architecture Search Space

2.1.1. Cell Level

2.1.2. Network Level

2.2. Continuous Relaxation and Search Strategy

| Algorithm 1 NAS-HRIS Search Encoder for High-Resolution Remote Sensing Image Segmentation |
|---|
| Require: the training set; the validation set; n: batch size; initialized operation set P; |
| Ensure: |
| 1: Initialize the architecture variable and the weights randomly; set the learning rate and the number of search epochs |
| 2: repeat |
| 3: Sample a batch of data from the training set; |
| 4: Compute the loss on the training batch; |
| 5: Update the weights by gradient descent; |
| 6: Sample a batch of data from the validation set; |
| 7: Compute the loss on the validation batch; |
| 8: Update the architecture variable by gradient descent; |
| 9: until convergence |
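Algorithm 1 alternates two sample, compute, update phases. The toy sketch below shows that control flow under stated assumptions. Following the usual convention of differentiable architecture search, it assumes the weights are updated on training batches and the architecture variable on validation batches. The two-operation model, the synthetic data (`y = 3x`), the fixed step budget in place of a convergence test, and every name in the code are hypothetical and are not taken from NAS-HRIS.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, alpha):
    # Continuous relaxation of a choice between two toy operations:
    # a learnable scaling (w * x) and the identity (x).
    s = sigmoid(alpha)
    return (s * w + (1.0 - s)) * x


def loss_and_grads(batch, w, alpha):
    """Mean squared error and its gradients with respect to w and alpha."""
    s = sigmoid(alpha)
    loss = grad_w = grad_alpha = 0.0
    for x, y in batch:
        err = predict(x, w, alpha) - y
        loss += err * err
        grad_w += 2.0 * err * s * x
        grad_alpha += 2.0 * err * (w - 1.0) * x * s * (1.0 - s)
    n = len(batch)
    return loss / n, grad_w / n, grad_alpha / n


def make_dataset(size, rng):
    """Synthetic regression data: y = 3x (assumption for illustration)."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(size)]
    return [(x, 3.0 * x) for x in xs]


def search(train_set, val_set, batch_size=8, lr=0.05, steps=500, seed=0):
    rng = random.Random(seed)
    # Step 1: random initialisation of the weight and the architecture variable.
    w, alpha = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    initial_val_loss = loss_and_grads(val_set, w, alpha)[0]
    for _ in range(steps):  # steps 2 and 9, with a fixed budget
        train_batch = rng.sample(train_set, batch_size)          # step 3
        _, grad_w, _ = loss_and_grads(train_batch, w, alpha)     # step 4
        w -= lr * grad_w                                         # step 5
        val_batch = rng.sample(val_set, batch_size)              # step 6
        _, _, grad_alpha = loss_and_grads(val_batch, w, alpha)   # step 7
        alpha -= lr * grad_alpha                                 # step 8
    final_val_loss = loss_and_grads(val_set, w, alpha)[0]
    return w, alpha, initial_val_loss, final_val_loss


data_rng = random.Random(1)
train_set = make_dataset(64, data_rng)
val_set = make_dataset(32, data_rng)
w, alpha, initial_loss, final_loss = search(train_set, val_set)
```

Keeping the two updates on separate data splits means the architecture variable is tuned against data the weights have not been fitted to, which is the usual motivation for this alternating scheme.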

2.3. Evaluation criteria

2.3.1. Pixel Accuracy (PA)

2.3.2. Score

2.3.3. Mean Intersection over Union (MIoU)
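As a minimal sketch of how PA and MIoU are commonly computed, the code below derives both from a confusion matrix using their standard definitions: PA is the fraction of correctly labelled pixels, and MIoU is the per-class TP / (TP + FP + FN) averaged over classes. The function names and the eight-pixel example are hypothetical, and the exact formulation used for NAS-HRIS may differ in detail.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the true class, columns index the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def pixel_accuracy(cm):
    """Fraction of pixels whose predicted class equals the true class."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def mean_iou(cm):
    """Average of per-class intersection over union, skipping absent classes."""
    num_classes = len(cm)
    ious = []
    for c in range(num_classes):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(num_classes)) - tp
        fn = sum(cm[c]) - tp
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious)


# Hypothetical flattened label maps: 0 = background, 1 = building.
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 1, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=2)
pa = pixel_accuracy(cm)   # 6 of 8 pixels correct
miou = mean_iou(cm)       # each class has IoU 3 / 5
```

In this example two pixels are misclassified, one in each direction, so PA is 0.75 while MIoU is 0.6, which shows that MIoU penalises errors more heavily than PA.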

3. Experiments and Results

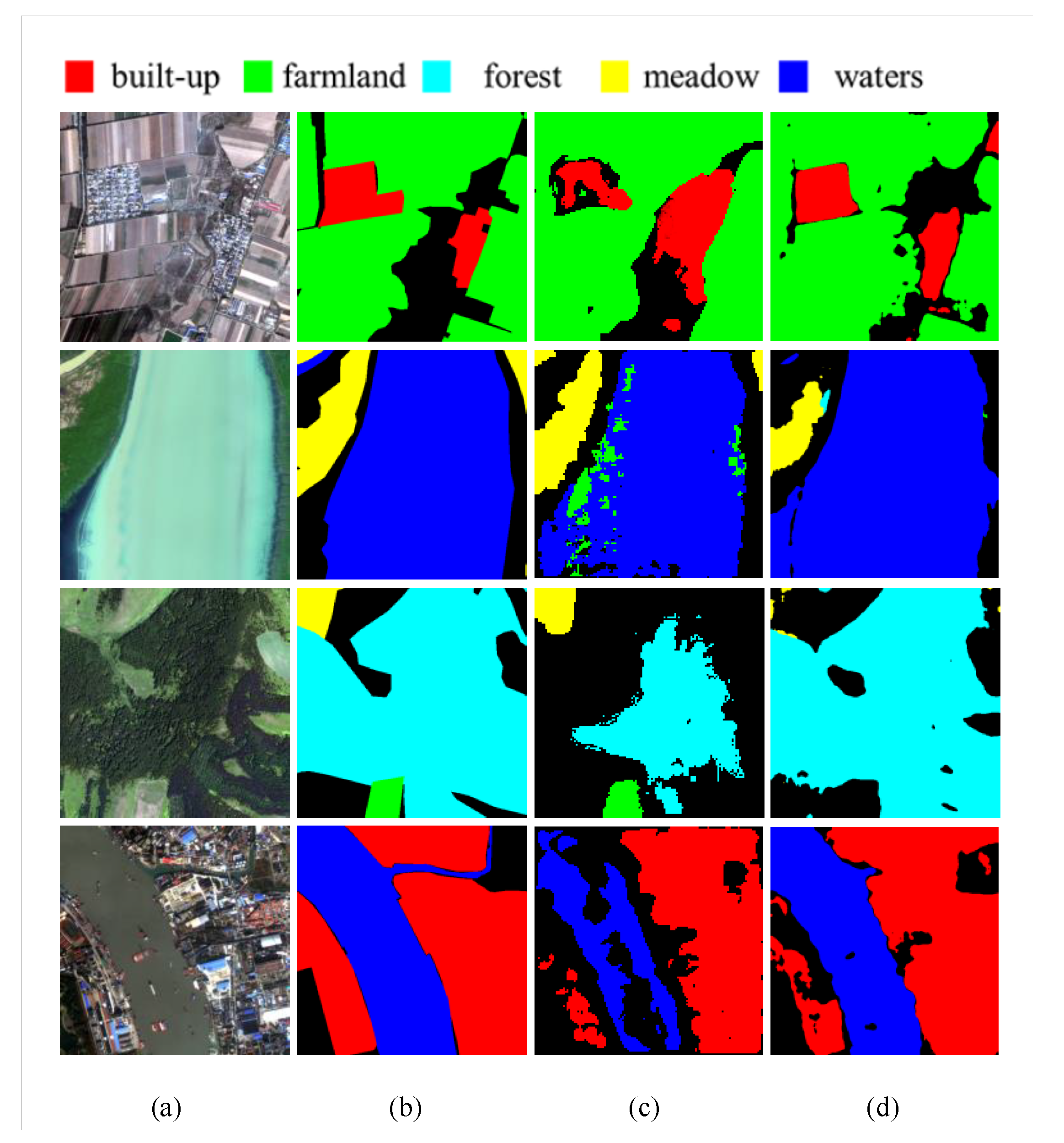

3.1. Experiments on Aerial Dataset

3.2. Experiments on Satellite Dataset

3.3. Experiments on Non-single Source Dataset

4. Discussions and Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Wei, W.; Polap, D.; Li, X.; Woźniak, M.; Liu, J. Study on Remote Sensing Image Vegetation Classification Method Based on Decision Tree Classifier. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018; pp. 2292–2297.

- Capizzi, G.; Sciuto, G.; Woźniak, M.; Damaševičius, R. A clustering based system for automated oil spill detection by satellite remote sensing. Lect. Notes Comput. Sci. 2016, 9693, 613–623.

- Sun, Z.; Lin, D.; Wei, W.; Wozniak, M.; Damasevicius, R. Road Detection Based on Shearlet for GF-3 Synthetic Aperture Radar Images. IEEE Access 2020, 8, 28133–28141.

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.

- Chen, Y.; Ming, D.; Zhao, L.; Lv, B.; Zhou, K.; Qing, Y. Review on high spatial resolution remote sensing image segmentation evaluation. Photogramm Eng. Remote Sens. 2018, 84, 629–646.

- Badmos, O.S.; Rienow, A.; Callo-Concha, D.; Greve, K.; Jürgens, C. Urban development in West Africa-monitoring and intensity analysis of slum growth in Lagos: Linking pattern and process. Remote Sens. 2018, 10, 1044.

- Zhou, D.; Xiao, J.; Bonafoni, S.; Berger, C.; Deilami, K.; Zhou, Y.; Frolking, S.; Yao, R.; Qiao, Z.; Sobrino, J.A. Satellite remote sensing of surface urban heat islands: Progress, challenges, and perspectives. Remote Sens. 2019, 11, 48.

- Li, W.; Liu, H.; Wang, Y.; Li, Z.; Jia, Y.; Gui, G. Deep Learning-Based Classification Methods for Remote Sensing Images in Urban Built-Up Areas. IEEE Access 2019, 7, 36274–36284.

- Yi, Y.; Zhang, Z.; Zhang, W.; Zhang, C.; Li, W.; Zhao, T. Semantic segmentation of urban buildings from VHR remote sensing imagery using a deep convolutional neural network. Remote Sens. 2019, 11, 1774.

- Dey, V.; Zhang, Y.; Zhong, M. A Review on Image Segmentation Techniques with Remote Sensing Perspective; ISPRS: Vienna, Austria, 2010; Volume 38, pp. 31–42.

- Kettig, R.L.; Landgrebe, D. Classification of multispectral image data by extraction and classification of homogeneous objects. IEEE Trans. Geosci. Remote Sens. 1976, 14, 19–26.

- Carleer, A.; Debeir, O.; Wolff, E. Assessment of Very High Spatial Resolution Satellite Image Segmentations. Photogramm Eng. Remote Sens. 2010, 71, 1285–1294.

- Guo, S.; Jin, Q.; Wang, H.; Wang, X.; Wang, Y.; Xiang, S. Learnable gated convolutional neural network for semantic segmentation in remote-sensing images. Remote Sens. 2019, 11, 1922.

- Chen, G.; Li, C.; Wei, W.; Jing, W.; Woźniak, M.; Blažauskas, T.; Damaševičius, R. Fully convolutional neural network with augmented atrous spatial pyramid pool and fully connected fusion path for high resolution remote sensing image segmentation. Appl. Sci. 2019, 9, 1816.

- Dong, L.; Xing, L.; Liu, T.; Du, H.; Mao, F.; Han, N.; Li, X.; Zhou, G.; Zhu, D.; Zheng, J.; et al. Very High Resolution Remote Sensing Imagery Classification Using a Fusion of Random Forest and Deep Learning Technique-Subtropical Area for Example. IEEE J. Sel. Top Appl. Earth Obs. Remote Sens. 2020, 13, 113–128.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Fu, G.; Liu, C.; Zhou, R.; Sun, T.; Zhang, Q. Classification for high resolution remote sensing imagery using a fully convolutional network. Remote Sens. 2017, 9, 498.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical image computing and computer-assisted intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual u-net. IEEE Geosci. Remote. Sens. Lett. 2018, 15, 749–753.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 40, 834–848.

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European conference on computer vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Li, J.; Liang, B.; Wang, Y. A hybrid neural network for hyperspectral image classification. Remote Sens. Lett. 2020, 11, 96–105.

- Liu, Q.; Basu, S.; Ganguly, S.; Mukhopadhyay, S.; DiBiano, R.; Karki, M.; Nemani, R. DeepSat V2: Feature augmented convolutional neural nets for satellite image classification. Remote Sens. Lett. 2020, 11, 156–165.

- Pashaei, M.; Kamangir, H.; Starek, M.J.; Tissot, P. Review and evaluation of deep learning architectures for efficient land cover mapping with UAS hyper-spatial imagery: A case study over a wetland. Remote Sens. 2020, 12, 959.

- Xiong, W.; Xiong, Z.; Cui, Y.; Lv, Y. Deep multi-feature fusion network for remote sensing images. Remote Sens. Lett. 2020, 11, 563–571.

- Gabryel, M.; Damaševičius, R. The image classification with different types of image features. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2017; Volume 10245, pp. 497–506.

- Zhou, B.; Duan, X.; Ye, D.; Wei, W.; Woźniak, M.; Połap, D.; Damaševičius, R. Multi-level features extraction for discontinuous target tracking in remote sensing image monitoring. Sensors 2019, 19, 4855.

- Baker, B.; Gupta, O.; Naik, N.; Raskar, R. Designing neural network architectures using reinforcement learning. arXiv 2016, arXiv:1611.02167.

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. arXiv 2016, arXiv:1611.01578.

- Liu, H.; Simonyan, K.; Yang, Y. Darts: Differentiable architecture search. arXiv 2018, arXiv:1806.09055.

- Huang, S.Y.; Chu, W.T. PONAS: Progressive One-shot Neural Architecture Search for Very Efficient Deployment. arXiv 2020, arXiv:2003.05112.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.

- Liu, C.; Zoph, B.; Neumann, M.; Shlens, J.; Hua, W.; Li, L.J.; Fei-Fei, L.; Yuille, A.; Huang, J.; Murphy, K. Progressive neural architecture search. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 19–34.

- Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Regularized evolution for image classifier architecture search. In Proceedings of the AAAI conference on artificial intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 4780–4789.

- Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-scale evolution of image classifiers. In Proceedings of the 34th International Conference on Machine Learning—JMLR.org, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 2902–2911.

- Wei, W.; Zhou, B.; Maskeliūnas, R.; Damaševičius, R.; Połap, D.; Woźniak, M. Iterative Design and Implementation of Rapid Gradient Descent Method. Lect. Notes Comput. Sci. 2019, 11508, 530–539.

- Jang, E.; Gu, S.; Poole, B. Categorical reparameterization with gumbel-softmax. arXiv 2016, arXiv:1611.01144.

- Maddison, C.J.; Mnih, A.; Teh, Y.W. The concrete distribution: A continuous relaxation of discrete random variables. arXiv 2016, arXiv:1611.00712.

- Dong, X.; Yang, Y. Searching for a robust neural architecture in four gpu hours. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1761–1770.

- Ji, S.; Wei, S.; Lu, M. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

- Tong, X.Y.; Xia, G.S.; Lu, Q.; Shen, H.; Li, S.; You, S.; Zhang, L. Learning transferable deep models for land-use classification with high-resolution remote sensing images. arXiv 2018, arXiv:1807.05713.

| Architectures | Parameters (M) | PA (%) | Score (%) | MIoU (%) | Search Time (h) | Train Time (h) |
|---|---|---|---|---|---|---|
| SegNet | 29.4441 | 97.77 | 88.96 | 84.51 | - | 7.4 |
| U-Net | 23.3565 | 98.30 | 93.56 | 88.41 | - | 6.2 |
| Deeplab v3+ | 13.3953 | 98.09 | 94.47 | 90.20 | - | 4.0 |
| NAS-HRIS | 0.1868 | 98.52 | 93.77 | 90.44 | 12.1 | 16.4 |

| Architectures | Parameters (M) | PA (%) | Score (%) | MIoU (%) | Search Time (h) | Train Time (h) |
|---|---|---|---|---|---|---|
| SegNet | 29.4441 | 79.96 | 71.50 | 63.19 | - | 18.3 |
| U-Net | 23.3565 | 80.37 | 73.71 | 64.66 | - | 13.2 |
| Deeplab v3+ | 13.3953 | 82.42 | 71.83 | 63.82 | - | 14.9 |
| NAS-HRIS | 0.1232 | 88.48 | 78.35 | 67.03 | 10.6 | 19.5 |

| Architectures | Parameters (M) | PA (%) | Score (%) | MIoU (%) | Search Time (h) | Train Time (h) |
|---|---|---|---|---|---|---|
| SegNet | 29.4441 | 95.48 | 82.11 | 74.12 | - | 5.4 |
| U-Net | 23.3565 | 95.21 | 83.56 | 74.66 | - | 2.8 |
| Deeplab v3+ | 13.3953 | 94.42 | 84.43 | 75.19 | - | 3.3 |
| NAS-HRIS | 0.2048 | 96.28 | 85.31 | 75.21 | 12.1 | 5.8 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, M.; Jing, W.; Lin, J.; Fang, N.; Wei, W.; Woźniak, M.; Damaševičius, R. NAS-HRIS: Automatic Design and Architecture Search of Neural Network for Semantic Segmentation in Remote Sensing Images. Sensors 2020, 20, 5292. https://doi.org/10.3390/s20185292
