Development of a Robust Multi-Scale Featured Local Binary Pattern for Improved Facial Expression Recognition

Abstract

:1. Introduction

2. Contribution

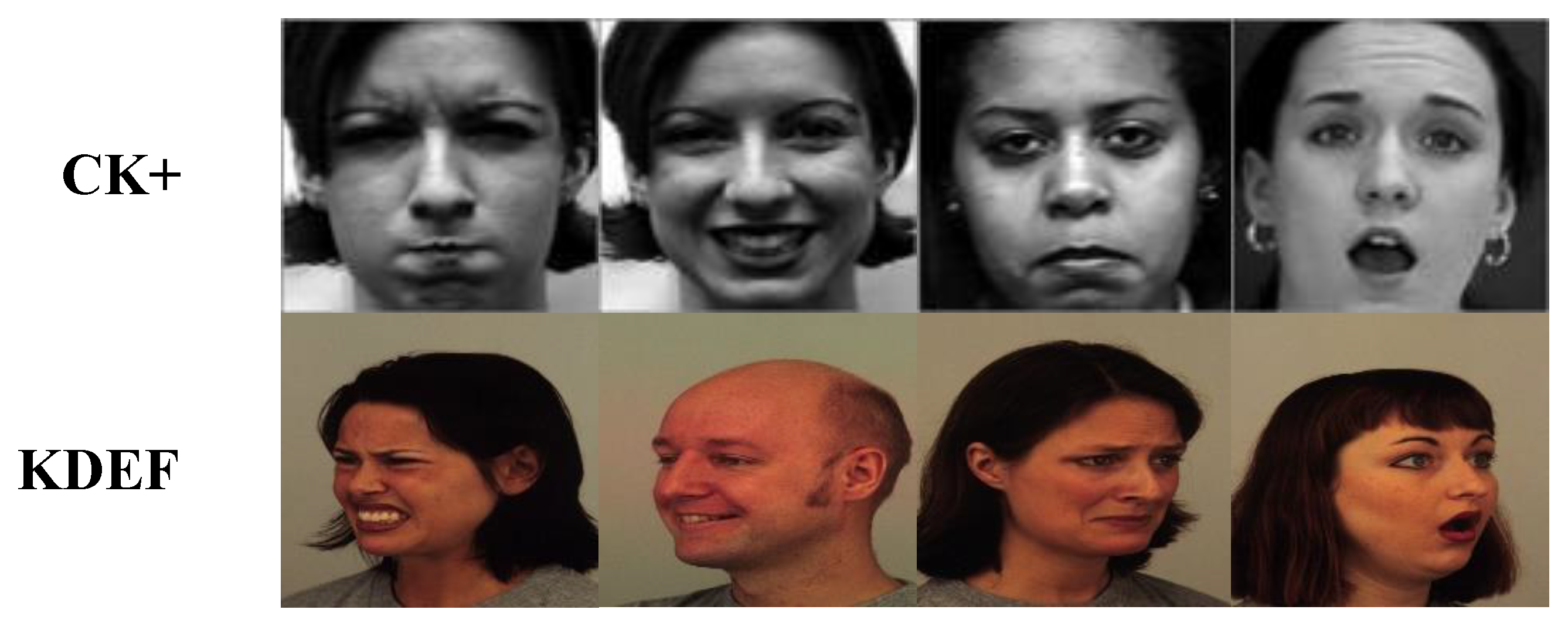

3. Proposed Method

3.1. Pre-Processing

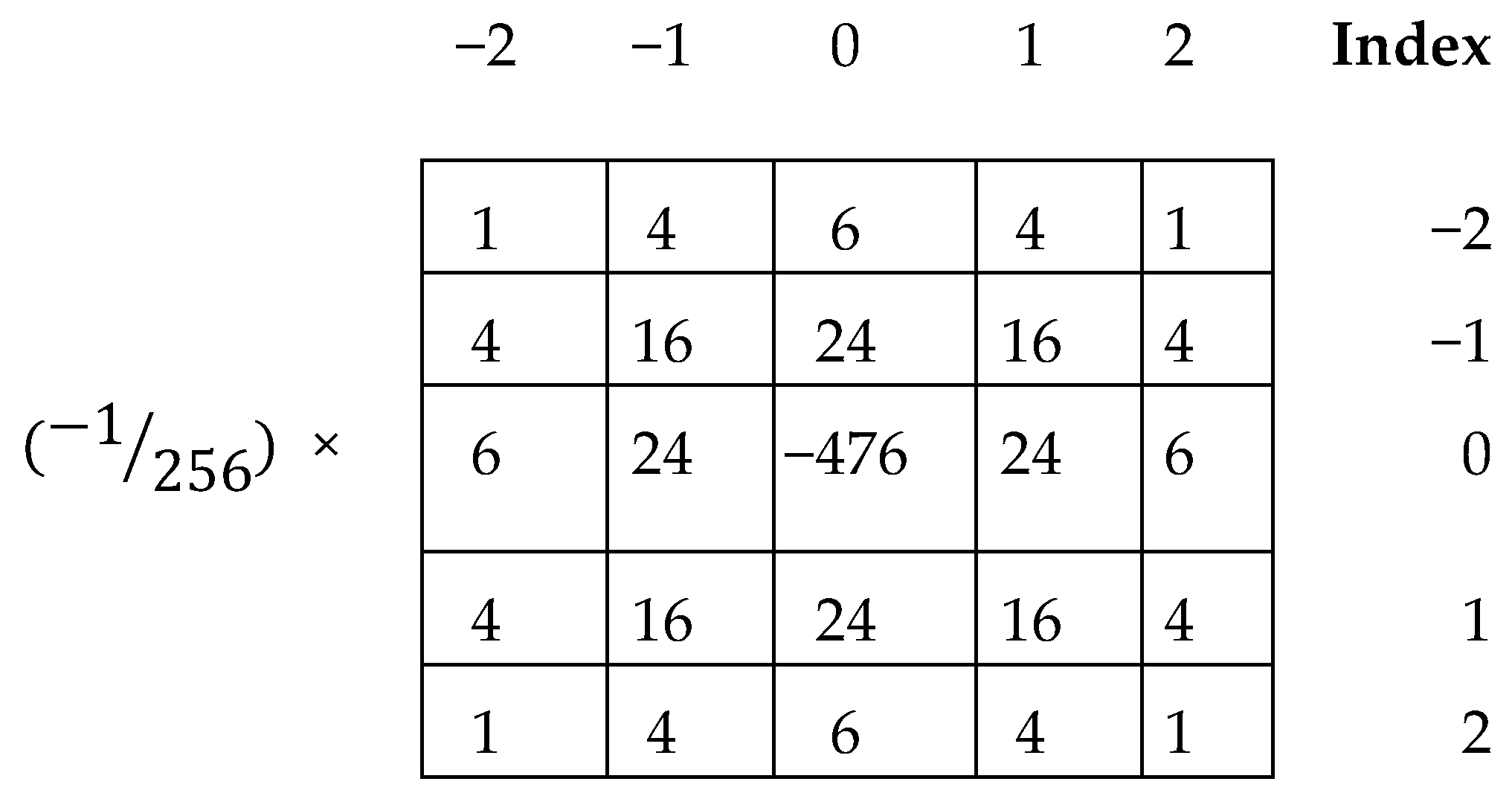

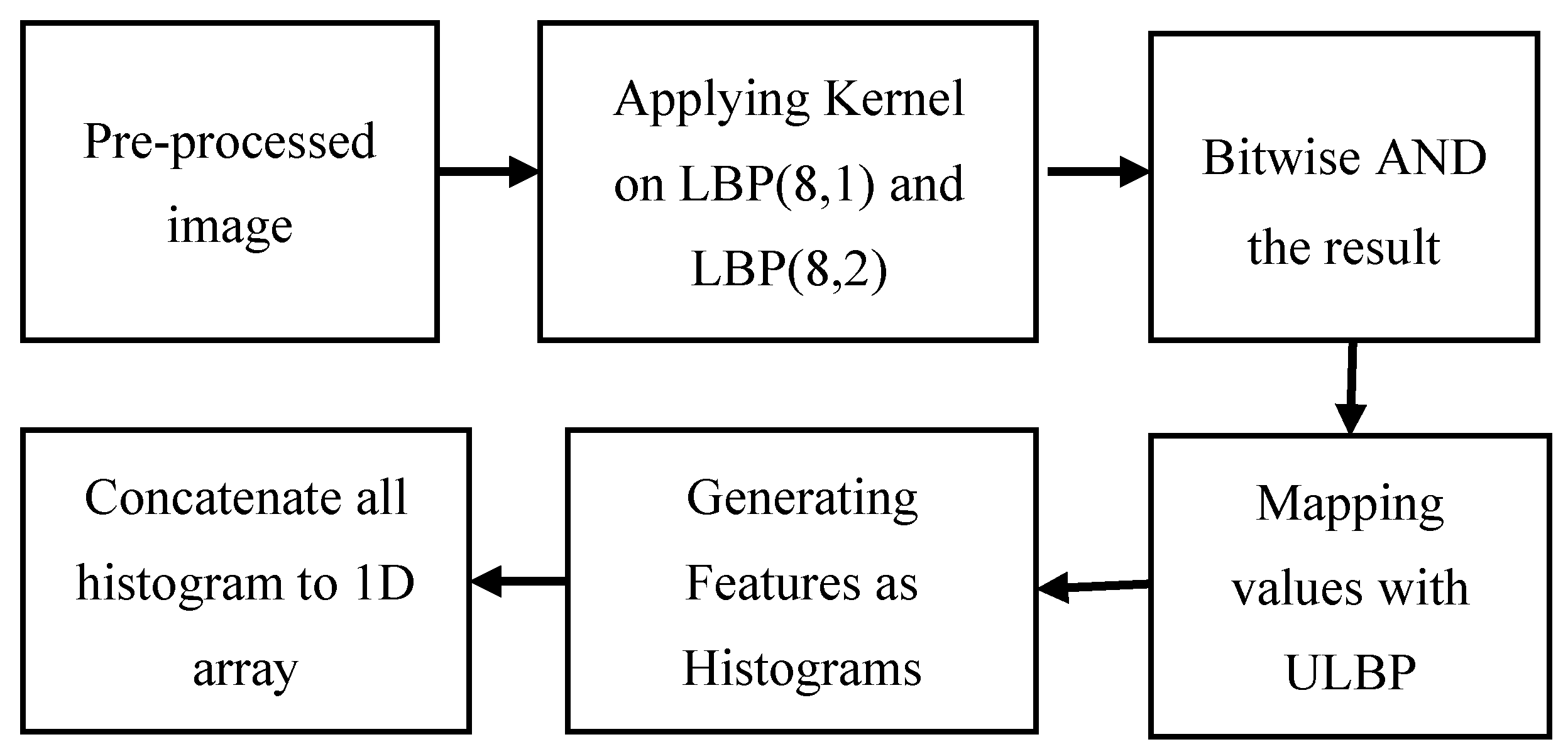

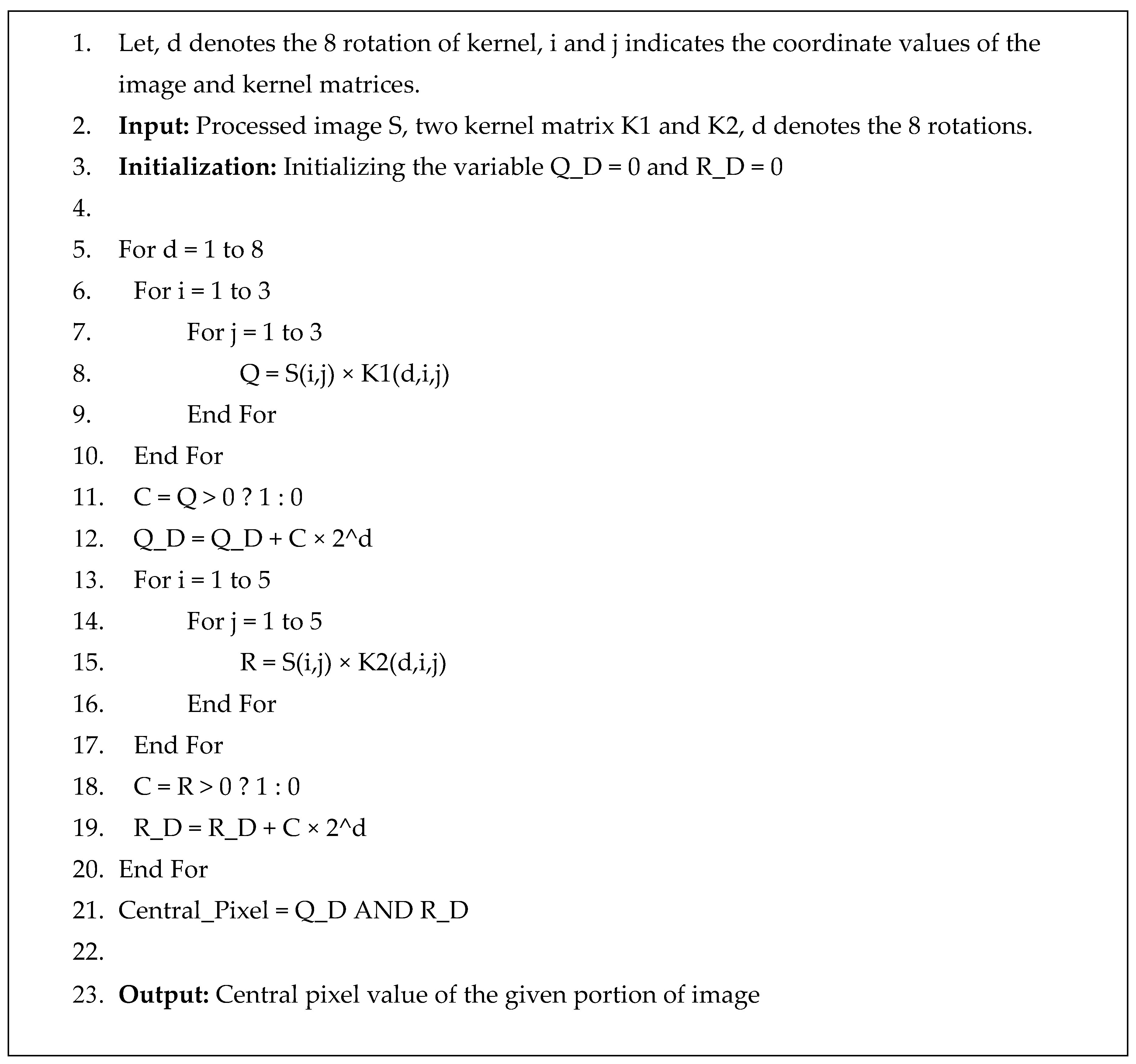

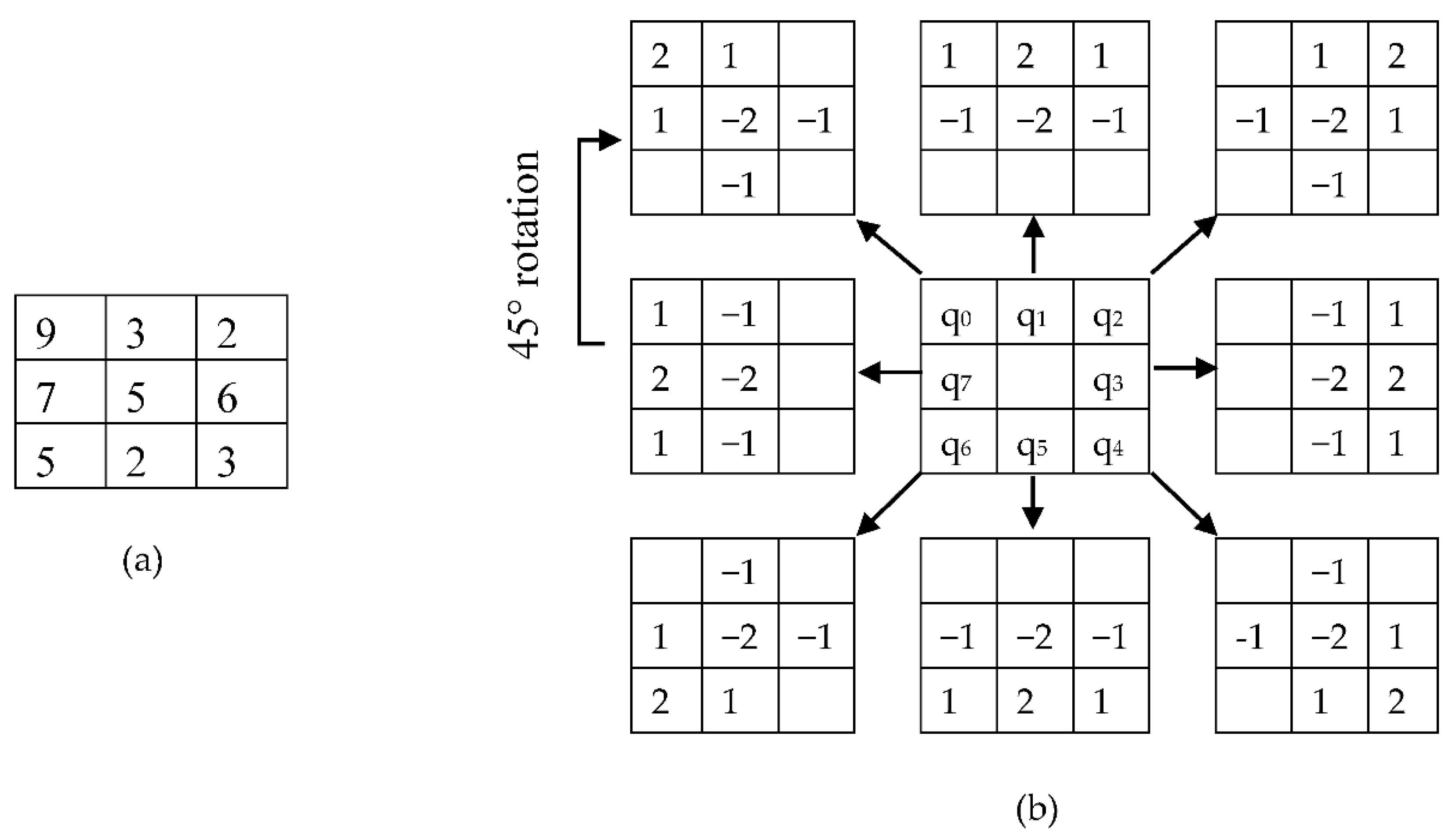

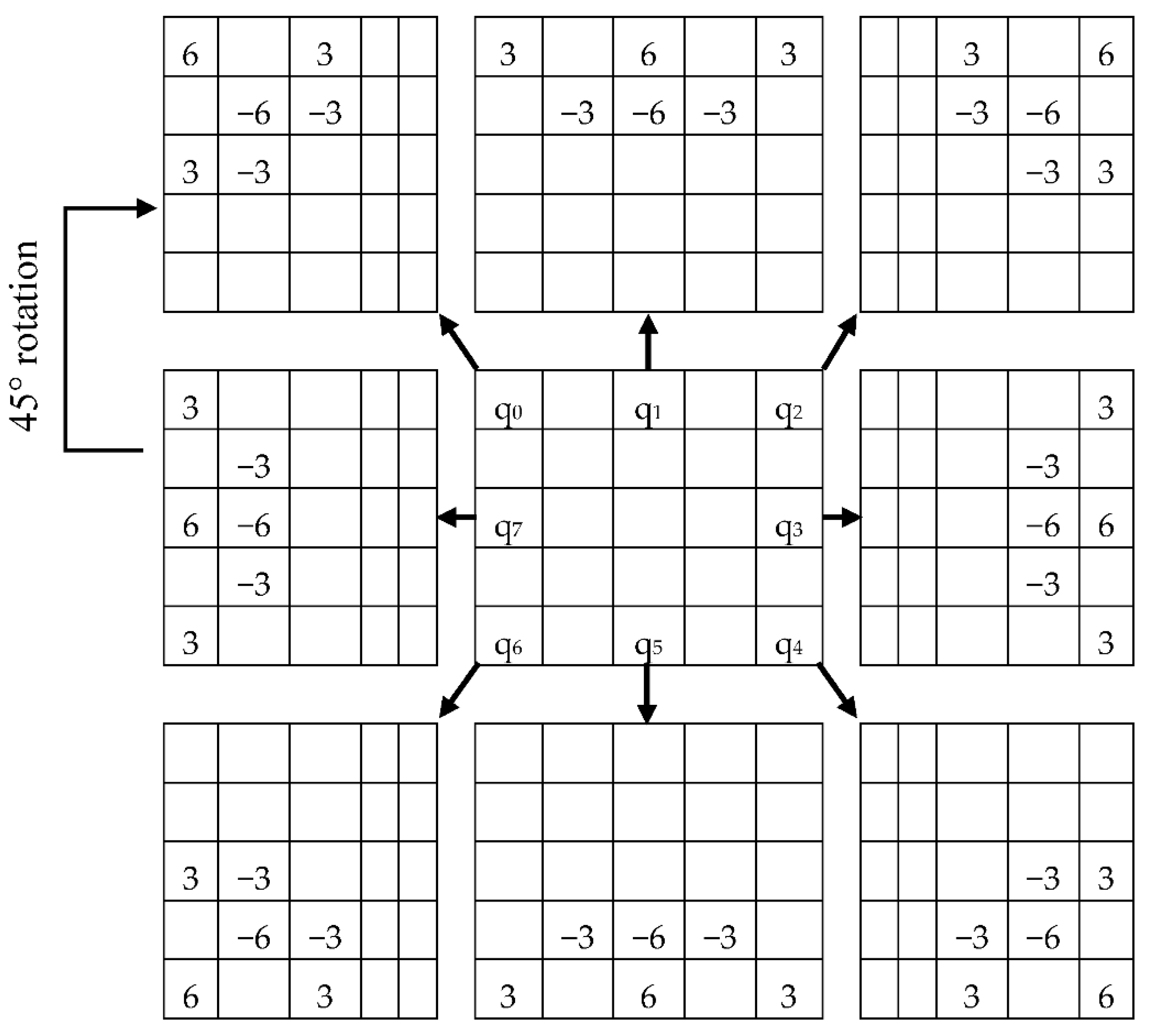

3.2. Feature Extraction

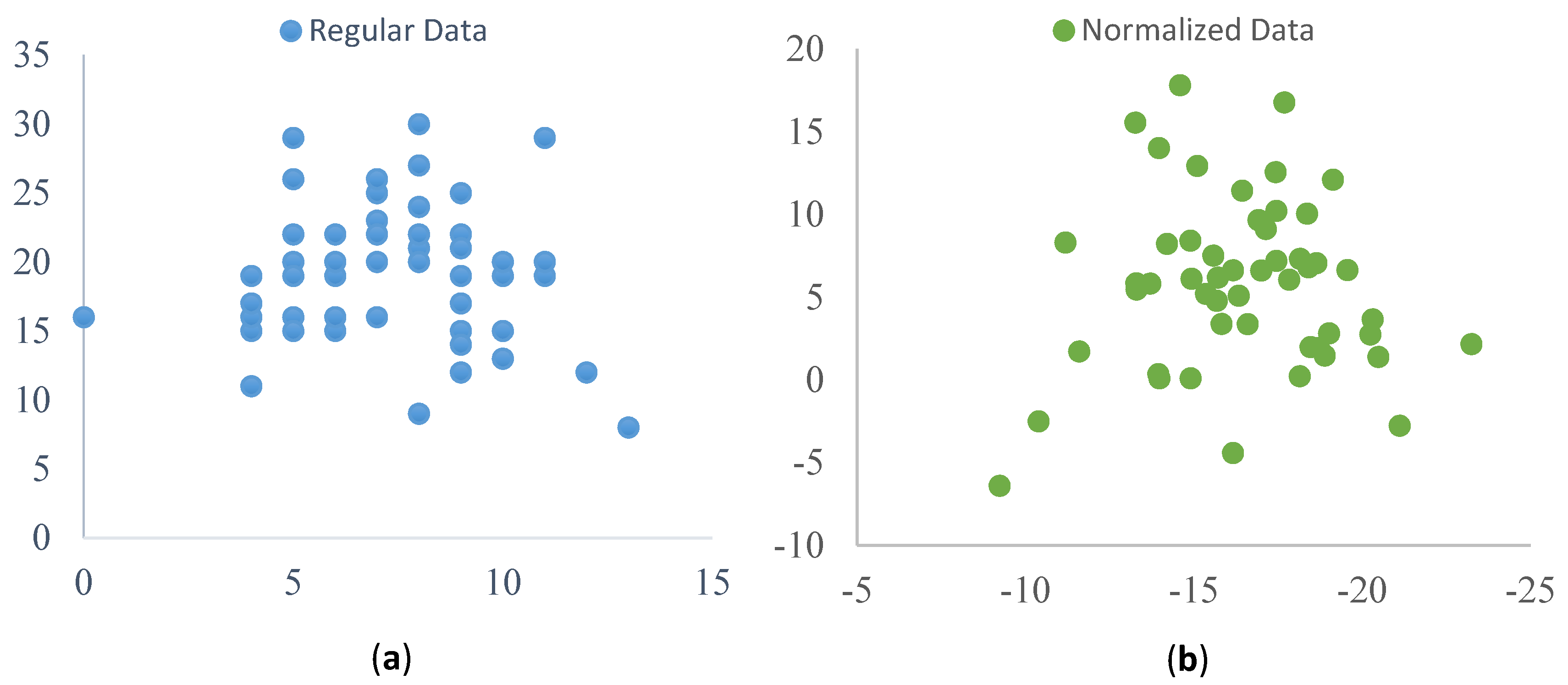

3.3. Normalization

4. Results and Discussion

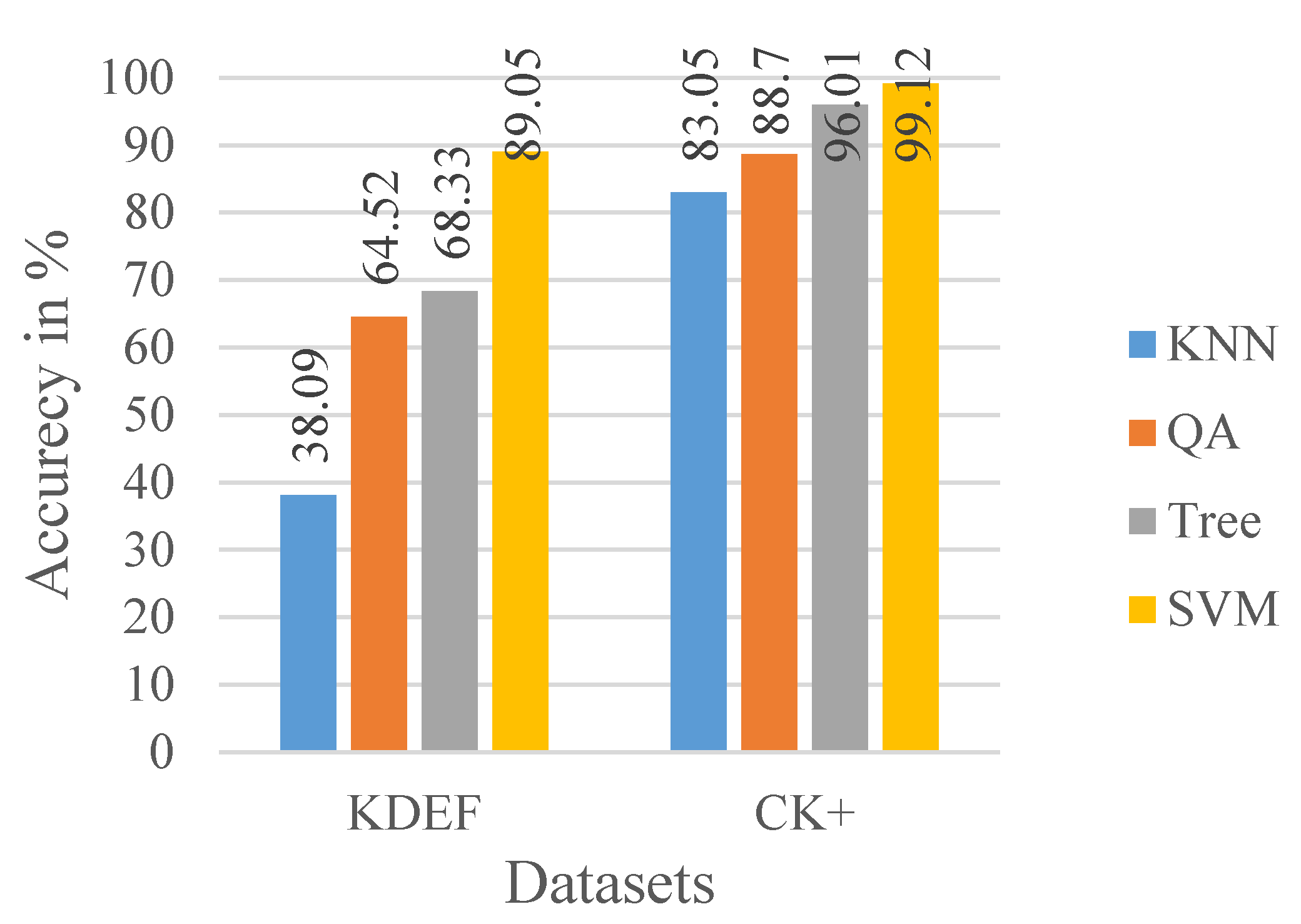

4.1. Performance Analysis of the Proposed Method

4.2. Analyses and Discussion of Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Yu, Z.; Zhang, C. Image based static facial expression recognition with multiple deep network learning. In Proceedings of the ICMI 2015-Proceedings of the 2015 ACM International Conference on Multimodal Interaction, Washington, DC, USA, 9–13 November 2015. [Google Scholar]

- Kahou, S.E.; Michalski, V.; Konda, K.; Memisevic, R.; Pal, C. Recurrent neural networks for emotion recognition in video. In Proceedings of the ICMI 2015-Proceedings of the 2015 ACM International Conference on Multimodal Interaction, Washington, DC, USA, 9–13 November 2015. [Google Scholar]

- Liu, M.; Li, S.; Shan, S.; Wang, R.; Chen, X. Deeply learning deformable facial action parts model for dynamic expression analysis. In Asian Conference on Computer Vision, Proceedings of the Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015. [Google Scholar]

- Shan, C.; Braspenning, R. Recognizing Facial Expressions Automatically from Video. In Handbook of Ambient Intelligence and Smart Environments; Nakashima, H., Aghajan, H., Augusto, J.C., Eds.; Springer International Publishing: Cham, Switzerland, 2010. [Google Scholar]

- Yang, B.; Cao, J.; Ni, R.; Zhang, Y. Facial Expression Recognition Using Weighted Mixture Deep Neural Network Based on Double-Channel Facial Images. IEEE Access 2017. [Google Scholar] [CrossRef]

- Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion Aware Facial Expression Recognition Using CNN With Attention Mechanism. IEEE Trans. Image Process. 2018. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.; Li, Y.; Ma, X.; Song, R. Facial Expression Recognition with Fusion Features Extracted from Salient Facial Areas. Sensors 2017, 17, 712. [Google Scholar] [CrossRef]

- Zhao, Y.; Xu, J. An Improved Micro-Expression Recognition Method Based on Necessary Morphological Patches. Symmetry 2019, 11, 497. [Google Scholar] [CrossRef] [Green Version]

- Yang, J.; Wang, X.; Han, S.; Wang, J.; Park, D.S.; Wang, Y. Improved Real-Time Facial Expression Recognition Based on a Novel Balanced and Symmetric Local Gradient Coding. Sensors 2019, 19, 1899. [Google Scholar] [CrossRef] [Green Version]

- Zhang, W.; Shan, S.; Zhang, H.; Gao, W.; Chen, X. Multi-resolution Histograms of Local Variation Patterns (MHLVP) for robust face recognition. In International Conference on Audio-and Video-Based Biometric Person Authentication, Proceedings of the Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2005. [Google Scholar]

- Huang, D.; Shan, C.; Ardabilian, M.; Wang, Y.; Chen, L. Local binary patterns and its application to facial image analysis: A survey. IEEE Trans. Syst. Man Cybern. Part C 2011, 41, 765–781. [Google Scholar] [CrossRef] [Green Version]

- Kumari, J.; Rajesh, R.; Pooja, K.M. Facial Expression Recognition: A Survey. Procedia Comput. Sci. 2015, 58, 486–491. [Google Scholar] [CrossRef] [Green Version]

- Ahonen, T.; Hadid, A.; Pietikäinen, M. Face description with local binary patterns: Application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006. [Google Scholar] [CrossRef]

- Canedo, D.; Neves, A.J.R. Facial Expression Recognition Using Computer Vision: A Systematic Review. Appl. Sci. 2019, 9, 4678. [Google Scholar] [CrossRef] [Green Version]

- Huang, D.; Wang, Y.; Wang, Y. A robust method for near-infrared face recognition is based on extended local binary patterns. In Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany; pp. 437–446.

- Guo, Z.; Zhang, L.; Zhang, D. A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 2010. [Google Scholar] [CrossRef] [Green Version]

- Zhao, G.; Pietikäinen, M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Sajjad, M.; Shah, A.; Jan, Z.; Shah, S.I.; Baik, S.W.; Mehmood, I. Facial appearance and texture feature-based robust facial expression recognition framework for sentiment knowledge discovery. Cluster Comput. 2017. [Google Scholar] [CrossRef]

- Zhang, B.; Gao, Y.; Zhao, S.; Liu, J. Local derivative pattern versus local binary pattern: Face recognition with high-order local pattern descriptor. IEEE Trans. Image Process. 2010. [Google Scholar] [CrossRef] [Green Version]

- Zangeneh, E.; Moradi, A. Facial expression recognition by using differential geometric features. Imaging Sci. J. 2018. [Google Scholar] [CrossRef]

- Chen, J.; Takiguchi, T.; Ariki, Y. Rotation-reversal invariant HOG cascade for facial expression recognition. Signal Image Video Process. 2017. [Google Scholar] [CrossRef]

- Tsai, H.H.; Chang, Y.C. Facial expression recognition using a combination of multiple facial features and a support vector machine. Soft Comput. 2018. [Google Scholar] [CrossRef]

- Alphonse, A.S.; Dharma, D. Novel directional patterns and a Generalized Supervised Dimension Reduction System (GSDRS) for facial emotion recognition. Multimed. Tools Appl. 2018. [Google Scholar] [CrossRef]

- Yu, Z.; Liu, G.; Liu, Q.; Deng, J. Spatio-temporal convolutional features with nested LSTM for facial expression recognition. Neurocomputing 2018. [Google Scholar] [CrossRef]

- Zhang, L.; Gao, Q.; Zhang, D. Directional independent component analysis with tensor representation. In Proceedings of the 26th IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Anchorage, AK, USA, 23–28 June 2008. [Google Scholar]

- Samara, A.; Galway, L.; Bond, R.; Wang, H. Affective state detection via facial expression analysis within a human-computer interaction context. J. Ambient Intell. Humaniz. Comput. 2019. [Google Scholar] [CrossRef] [Green Version]

- Turabzadeh, S.; Meng, H.; Swash, R.; Pleva, M.; Juhar, J. Facial Expression Emotion Detection for Real-Time Embedded Systems. Technologies 2018, 6, 17. [Google Scholar] [CrossRef] [Green Version]

- Martínez, A.; Pujol, F.A.; Mora, H. Application of Texture Descriptors to Facial Emotion Recognition in Infants. Appl. Sci. 2020, 10, 1115. [Google Scholar] [CrossRef] [Green Version]

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended Cohn-Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, CVPRW, San Francisco, CA, USA, 13–18 June 2010. [Google Scholar]

- Kanade, T.; Cohn, J.F.; Tian, Y. Comprehensive database for facial expression analysis. In Proceedings of the Proceedings-4th IEEE International Conference on Automatic Face and Gesture Recognition, FG, Grenoble, France, 28–30 March 2000. [Google Scholar]

- Kernel (Image Processing), n.d., para.2, Wikipedia. Available online: https://en.wikipedia.org/w/index.php?title=Kernel_(image_processing) (accessed on 30 August 2020).

- Xiong, H.; Zhang, D.; Martyniuk, C.J.; Trudeau, V.L.; Xia, X. Using Generalized Procrustes Analysis (GPA) for normalization of cDNA microarray data. BMC Bioinform. 2008. [Google Scholar] [CrossRef] [Green Version]

- Sert, M.; Aksoy, N. Recognizing facial expressions of emotion using action unit-specific decision thresholds. In Proceedings of the 2nd Workshop on Advancements in Social Signal Processing for Multimodal Interaction-ASSP4MI ’16, Tokyo, Japan, 16 November 2016. [Google Scholar] [CrossRef]

- Liliana, D.Y.; Basaruddin, C.; Widyanto, M.R. Mix Emotion Recognition from Facial Expression using SVM-CRF Sequence Classifier. In Proceedings of the International Conference on Algorithms, Computing and Systems-ICACS ’17, Jeju Island, Korea, 10–13 August 2017; pp. 27–31. [Google Scholar] [CrossRef]

- Ruiz-Garcia, A.; Elshaw, M.; Altahhan, A.; Palade, V. A hybrid deep learning neural approach for emotion recognition from facial expressions for socially assistive robots. Neural Comput. Appl. 2018, 29, 359–373. [Google Scholar] [CrossRef]

- Yaddaden, Y.; Bouzouane, A.; Adda, M.; Bouchard, B. A New Approach of Facial Expression Recognition for Ambient Assisted Living. In Proceedings of the 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments-PETRA ’16, Corfu, Greece, 29 June–1 July 2016. [Google Scholar] [CrossRef]

- FaceReader 8, Technical Specifications. Noldus Information Technology. Available online: https://www.mindmetriks.com/uploads/4/4/6/0/44607631/technical_specs__facereader_8.0.pdf (accessed on 9 September 2020).

- Face-An AI Service that Analyzes Faces in Images. Microsoft Azure. Available online: https://azure.microsoft.com/en-us/services/cognitive-services/face/ (accessed on 9 September 2020).

| Year | Classifier | Features | Databases |

|---|---|---|---|

| 2015 [1] | SVM | CNN | FER/SFEW |

| 2017 [5] | WMDNN | LBP | CK+/JAFFE/CASIA |

| 2017 [7] | PCA | LBP/HOG | CK+/JAFFE |

| 2019 [8] | SVM | LBP-TOP | CASME II/SMIC |

| 2019 [9] | ELM | CS-LGC | CK+/JAFFE |

| 2005 [10] | KNN | MHLVP | FERET |

| 2007 [17] | SVM | VLBP/LBP-TOP | DynTex/MIT/CK+ |

| 2017 [18] | HOG | Ri-HOG | CK+/MMI/AFEW |

| 2018 [20] | SVM | Differential Geometric Features | CK+ |

| 2017 [21] | HOG | Ri-HOG | CK+/MMI/AFEW |

| 2017 [22] | SVM | FERS | CKFI/FG-NET/JAFFE |

| 2019 [28] | SVM | LBP/LTP/RBC | Infant COPE |

| Dataset | No of Expressions Used | Image Size | No of Subject | Total Image |

|---|---|---|---|---|

| CK+ | 7 | 640 490 | 123 | 593 video sequence |

| KDEF | 7 | 562 762 | 70 | 4900 Images |

| Happy | Surprise | Sadness | Anger | Disgust | Fear | Neutral | |

|---|---|---|---|---|---|---|---|

| Happy | 100 | 0 | 0 | 0 | 0 | 0 | 0 |

| Surprise | 0 | 99.67 | 0 | 0 | 0 | 0.33 | 0 |

| Sadness | 2.3256 | 0 | 97.67 | 0 | 0 | 0 | 0 |

| Anger | 0 | 0 | 0 | 100 | 0 | 0 | 0 |

| Disgust | 0 | 0 | 0 | 1.78 | 98.22 | 0 | 0 |

| Fear | 0 | 0 | 0 | 0 | 0 | 100 | 0 |

| Neutral | 1.04 | 0 | 0.68 | 0 | 0 | 0 | 98.28 |

| Happy | Surprise | Sadness | Anger | Disgust | Fear | Neutral | |

|---|---|---|---|---|---|---|---|

| Happy | 90.28 | 0 | 0 | 0 | 9.72 | 0 | 0 |

| Surprise | 0 | 98.28 | 0 | 0 | 1.04 | 0 | 0.64 |

| Sadness | 0 | 0 | 76.72 | 8.56 | 0 | 9.44 | 5.28 |

| Anger | 0 | 0 | 4.17 | 88.89 | 0 | 4.17 | 2.78 |

| Disgust | 2.78 | 0 | 0 | 0 | 97.22 | 0 | 0 |

| Fear | 0 | 0 | 9.72 | 6.94 | 0 | 83.33 | 0 |

| Neutral | 0 | 0 | 6.94 | 1.39 | 0 | 2.78 | 88.89 |

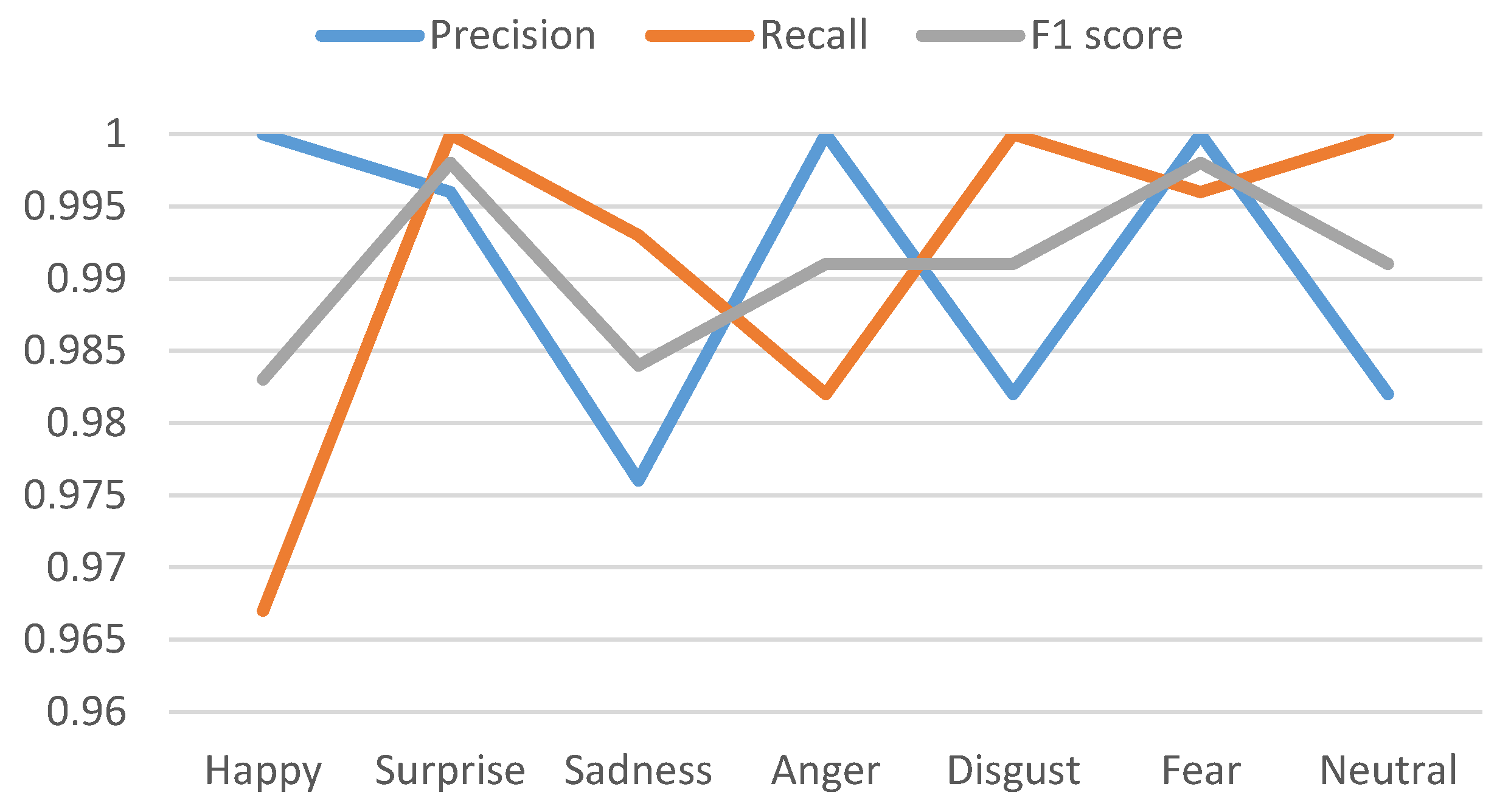

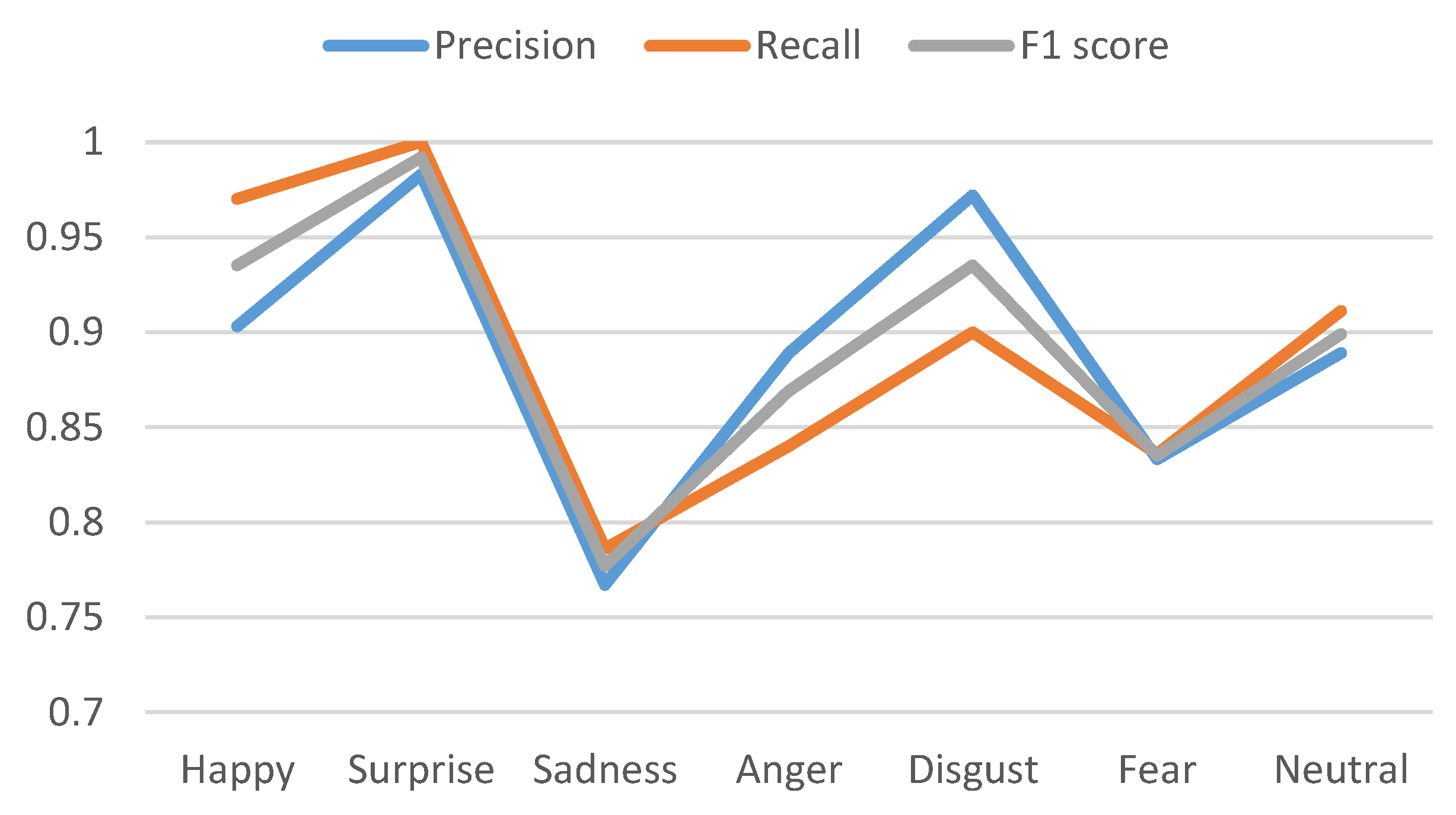

| Classes | CK+ | KDEF | ||||

|---|---|---|---|---|---|---|

| Pre | Rec | F1 | Pre | Rec | F1 | |

| Happy | 1 | 0.967 | 0.983 | 0.903 | 0.970 | 0.935 |

| Surprise | 0.996 | 1 | 0.998 | 0.983 | 1 | 0.992 |

| Sadness | 0.976 | 0.993 | 0.984 | 0.767 | 0.786 | 0.777 |

| Anger | 1 | 0.982 | 0.991 | 0.889 | 0.840 | 0.869 |

| Disgust | 0.982 | 1 | 0.991 | 0.972 | 0.900 | 0.935 |

| Fear | 1 | 0.996 | 0.998 | 0.833 | 0.836 | 0.835 |

| Neutral | 0.982 | 1 | 0.991 | 0.889 | 0.911 | 0.899 |

| Year | Classifier | Features | Databases | Accuracy (%) |

|---|---|---|---|---|

| 2017 [5] | WMDNN | LBP | CK+/JAFFE/CASIA | 97.02 |

| 2019 [8] | SVM | LBP-TOP | CASME II/SMIC | 73.51/70.02 |

| 2019 [9] | ELM | CS-LGC | CK+/JAFFE | 98.33/95.24 |

| 2017 [18] | HOG | Ri-HOG | CK+/MMI/AFEW | 93.8/72.4/56.8 |

| 2019 [28] | SVM | LBP/LTP/RBC | Infant COPE | 89.43/95.12 |

| 2016 [33] | SVM | AAM/AUs | CK+ | 54.47 |

| 2016 [36] | KNN | Landmarks | KDEF/JAFFE | 92.29 |

| 2017 [34] | SVM/CRF | AAM/Gabor | CK+ | 93.93 |

| 2020 | SVM | The proposed method (MSFLBP) | CK+ | 99.12 |

| KDEF | 89.08 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yasmin, S.; Pathan, R.K.; Biswas, M.; Khandaker, M.U.; Faruque, M.R.I. Development of a Robust Multi-Scale Featured Local Binary Pattern for Improved Facial Expression Recognition. Sensors 2020, 20, 5391. https://doi.org/10.3390/s20185391

Yasmin S, Pathan RK, Biswas M, Khandaker MU, Faruque MRI. Development of a Robust Multi-Scale Featured Local Binary Pattern for Improved Facial Expression Recognition. Sensors. 2020; 20(18):5391. https://doi.org/10.3390/s20185391

Chicago/Turabian StyleYasmin, Suraiya, Refat Khan Pathan, Munmun Biswas, Mayeen Uddin Khandaker, and Mohammad Rashed Iqbal Faruque. 2020. "Development of a Robust Multi-Scale Featured Local Binary Pattern for Improved Facial Expression Recognition" Sensors 20, no. 18: 5391. https://doi.org/10.3390/s20185391

APA StyleYasmin, S., Pathan, R. K., Biswas, M., Khandaker, M. U., & Faruque, M. R. I. (2020). Development of a Robust Multi-Scale Featured Local Binary Pattern for Improved Facial Expression Recognition. Sensors, 20(18), 5391. https://doi.org/10.3390/s20185391