Real-Time Fruit Recognition and Grasping Estimation for Robotic Apple Harvesting

Abstract

1. Introduction

- Proposing a computationally efficient, lightweight one-stage instance segmentation network, Mobile-DasNet, to perform fruit detection and instance segmentation on sensor data.

- Proposing a modified PointNet-based network to perform fruit modelling and grasping estimation using point clouds from an RGB-D camera.

- Combining the two networks above in the design and build of an accurate robotic system for autonomous fruit harvesting.
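
The first two contributions meet where an instance mask from the segmentation network selects the depth pixels that become the point cloud for the PointNet-based network. A minimal sketch of that hand-off follows, assuming a standard pinhole camera model. The function name `mask_to_point_cloud` and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical and not taken from the paper, which may build its point clouds differently.

```python
import numpy as np


def mask_to_point_cloud(depth_m, mask, fx, fy, cx, cy):
    """Back-project masked depth pixels into camera-frame 3D points.

    depth_m: (H, W) depth image in metres (0 marks a missing reading).
    mask:    (H, W) instance mask for one fruit (truthy = fruit pixel).
    fx, fy, cx, cy: pinhole intrinsics (assumed, hypothetical values).
    Returns an (N, 3) array of [x, y, z] points.
    """
    depth_m = np.asarray(depth_m, dtype=float)
    valid = np.asarray(mask, dtype=bool) & (depth_m > 0)
    v, u = np.nonzero(valid)          # pixel rows (v) and columns (u)
    z = depth_m[v, u]
    x = (u - cx) * z / fx             # pinhole model: u = fx * x / z + cx
    y = (v - cy) * z / fy             # pinhole model: v = fy * y / z + cy
    return np.stack([x, y, z], axis=1)


# Toy example: a 4x4 depth image at 0.5 m with a 2x2 fruit mask.
depth = np.full((4, 4), 0.5)
fruit_mask = np.zeros((4, 4), dtype=bool)
fruit_mask[1:3, 1:3] = True
points = mask_to_point_cloud(depth, fruit_mask, fx=2.0, fy=2.0, cx=1.5, cy=1.5)
print(points.shape)
```

Pixels with zero depth are dropped before back-projection, so missing depth readings inside the mask do not collapse to the camera origin.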

2. Literature Review

2.1. Fruit Recognition

2.2. Grasping Estimation

3. Methods and Materials

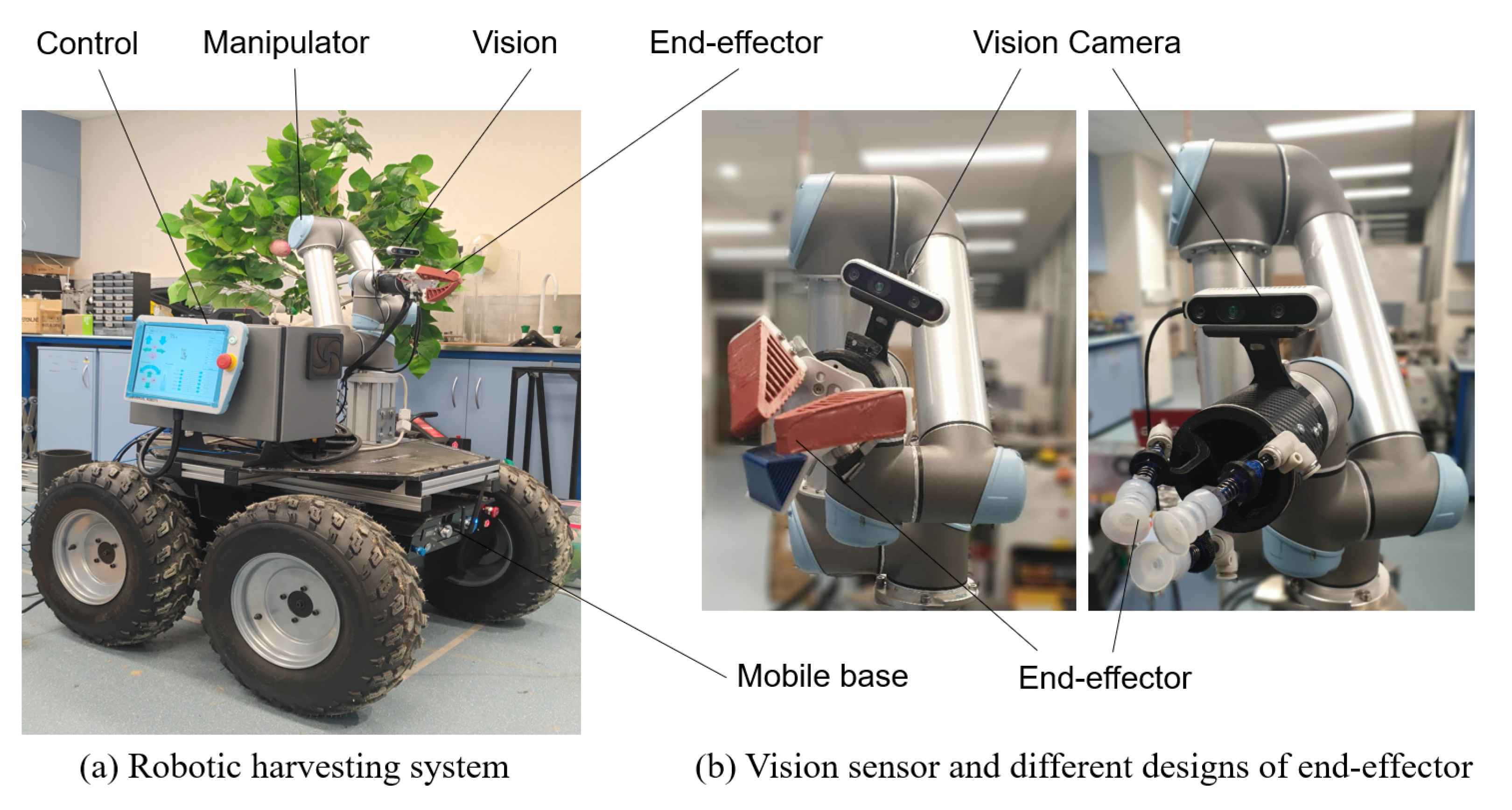

3.1. System Configuration

Software Design

3.2. Fruit Recognition

3.2.1. Network Architecture

3.2.2. Network Training

3.3. Grasping Estimation

3.3.1. Pose Representation

3.3.2. Pose Annotation

3.3.3. PointNet Architecture

3.3.4. Network Training

4. Experiment and Discussion

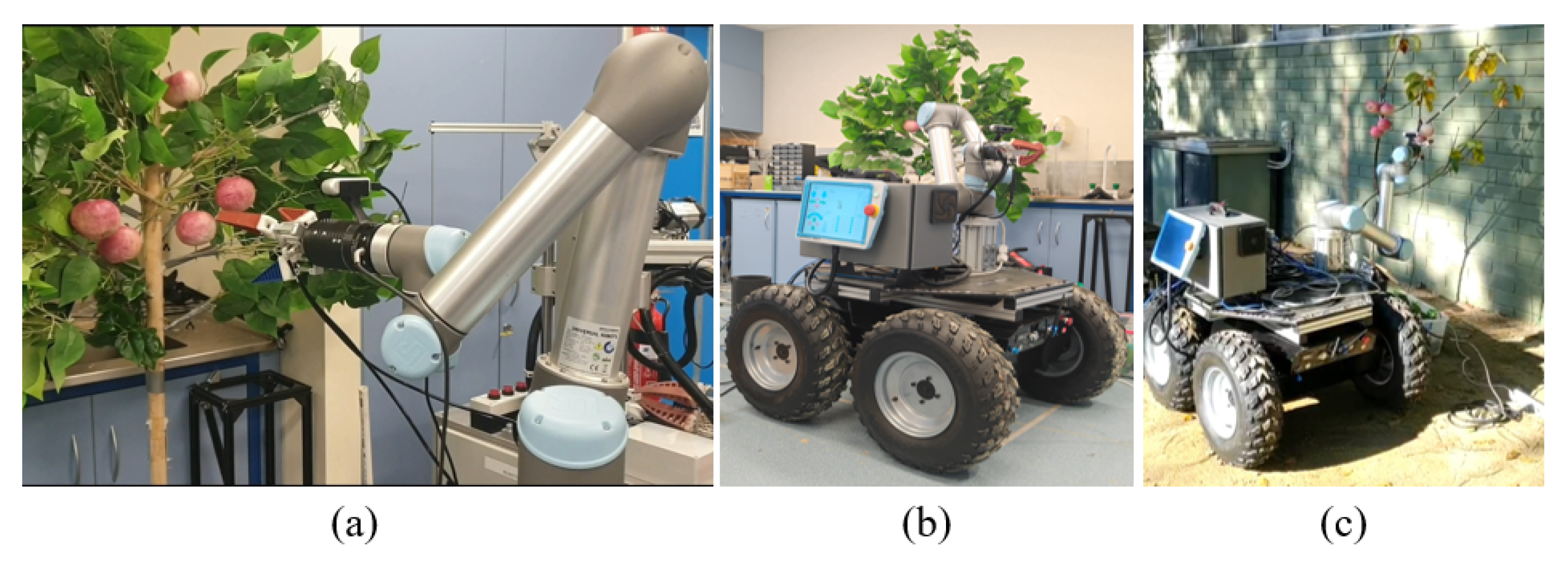

4.1. Experiment Setup

4.2. Image Data Experiments

4.2.1. Experiments in Laboratory Environment

4.2.2. Experiments in Orchards Environment

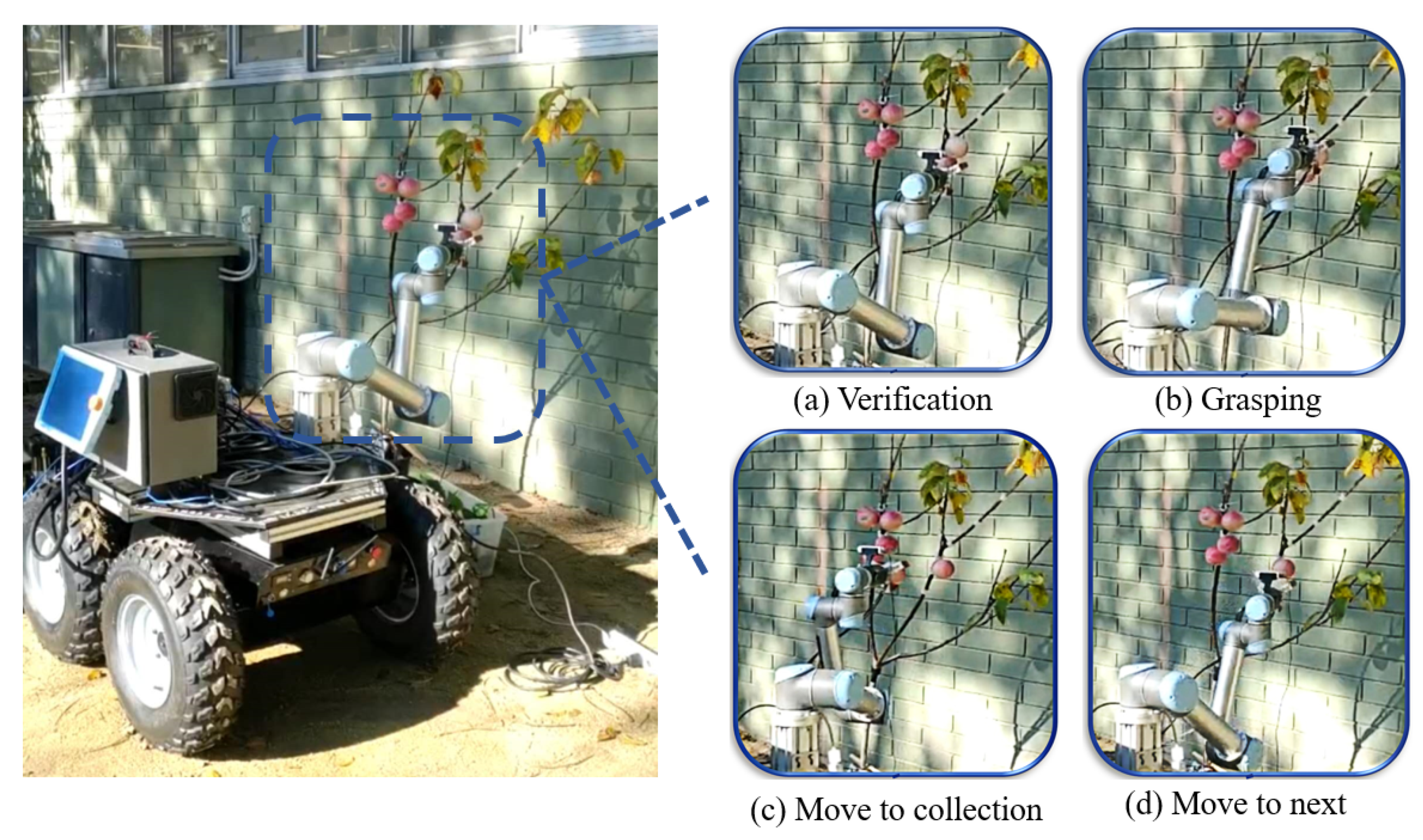

4.3. Experiments of Robotic Harvesting

4.4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | Normal | Noise | Outlier | Dense Clutter | Noise+Outlier+Dense Clutter |
|---|---|---|---|---|---|
| PointNet | 0.94 | 0.92 | 0.93 | 0.91 | 0.89 |
| RANSAC | 0.82 | 0.71 | 0.81 | 0.74 | 0.61 |
| HT | 0.81 | 0.67 | 0.79 | 0.73 | 0.63 |

| | Normal | Noise | Outlier | Dense Clutter | Noise+Outlier+Dense Clutter |
|---|---|---|---|---|---|
| PointNet | 4.2 | 5.4 | 4.6 | 6.8 | 7.5 |

| | F Score | mAP | Recall | Accuracy | IoU | Running Speed |
|---|---|---|---|---|---|---|
| DasNet | 0.884 | 0.905 | 0.88 | 0.91 | 0.873 | 25 FPS |
| Mobile-DasNet | 0.851 | 0.863 | 0.826 | 0.9 | 0.82 | 63 FPS |
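
The IoU and F score columns above can be read against their common textbook definitions. The sketch below computes both. It assumes that "F Score" means the F1 score (harmonic mean of precision and recall) and that IoU is measured between binary masks; the table does not state the authors' exact definitions, and the helper names `mask_iou` and `f_score` are hypothetical.

```python
import numpy as np


def mask_iou(pred, truth):
    """Intersection over union of two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as a perfect match (assumption)
    return float(np.logical_and(pred, truth).sum() / union)


def f_score(tp, fp, fn):
    """F1 score from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy example: two 2x2 masks that share one of three covered pixels.
iou = mask_iou([[1, 1], [0, 0]], [[1, 0], [1, 0]])
f1 = f_score(tp=8, fp=2, fn=2)
print(iou, f1)
```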

| | PointNet | RANSAC | HT |
|---|---|---|---|
| Accuracy | 0.88 | 0.76 | 0.78 |
| Grasp Orientation | 6.6 | - | - |

| Environment | Harvesting Method | Pose Prediction Success Rate | Harvesting Success Rate | Re-Attempt Times |
|---|---|---|---|---|
| Indoor | Naive | - | 0.73 | 1.5 |
| Indoor | Pose prediction enabled | 0.88 | 0.85 | 1.2 |
| Outdoor | Naive | - | 0.72 | 1.6 |
| Outdoor | Pose prediction enabled | 0.83 | 0.8 | 1.3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, H.; Zhou, H.; Wang, X.; Chen, C. Real-Time Fruit Recognition and Grasping Estimation for Robotic Apple Harvesting. Sensors 2020, 20, 5670. https://doi.org/10.3390/s20195670
