Motion Capture Technology in Industrial Applications: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Search Strategy

2.2. Assessment of Risk of Bias

2.3. Data Extraction

3. Results

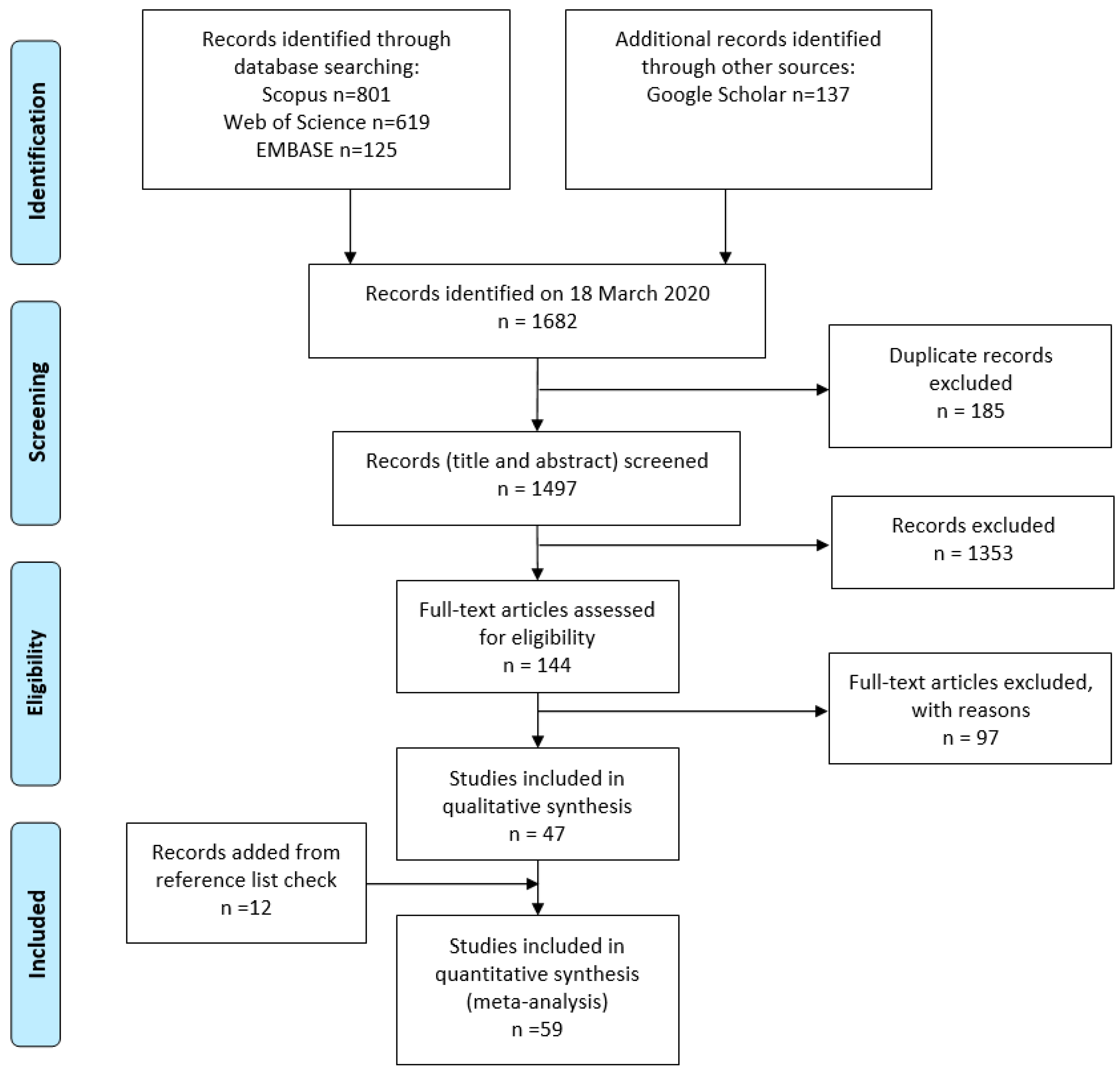

3.1. Search Results

3.2. Risk Assessment

3.3. MoCap Technologies in Industry

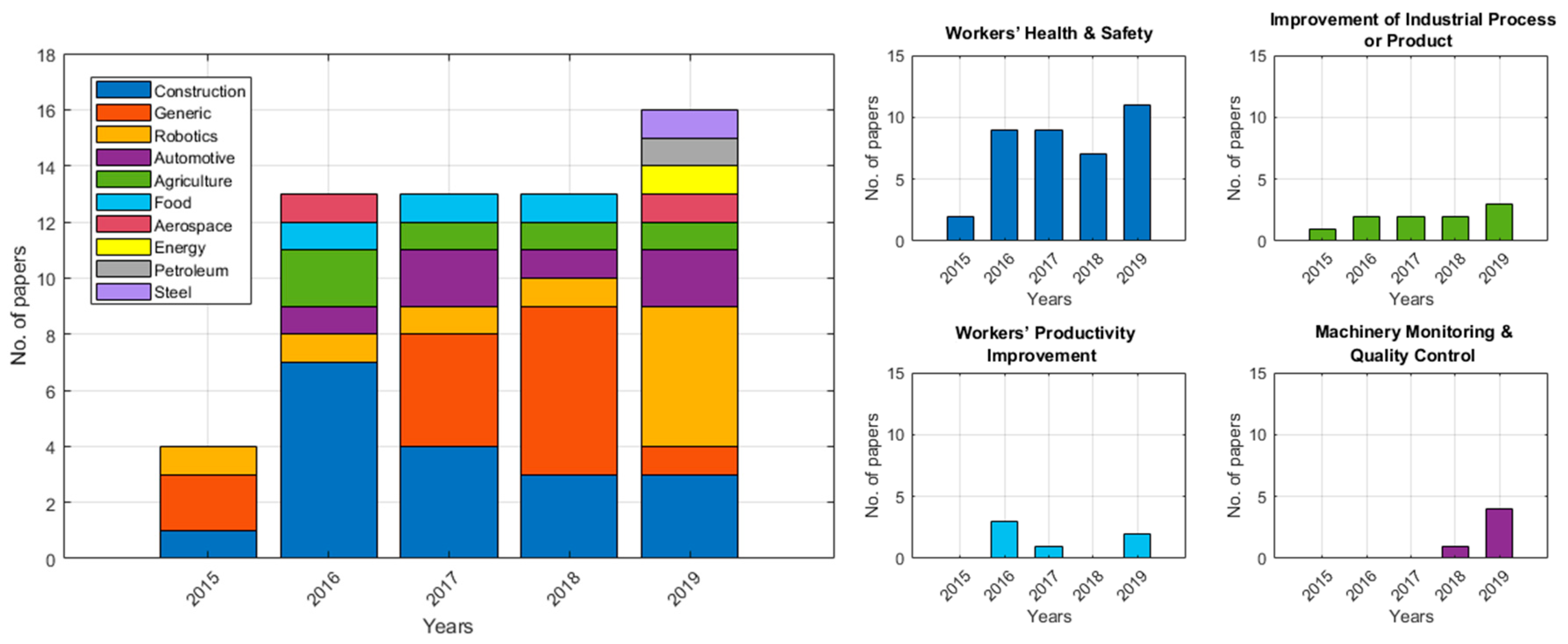

3.4. Types of Industry Sectors

3.5. MoCap Industrial Applications

3.6. MoCap Data Processing

3.7. Study Designs and Accuracy Assessments

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Database | Search String |
|---|---|
| Embase | (‘motion analys*’:ab,kw,ti OR ‘movement analys*’:ab,kw,ti OR ‘movement monitor*’:ab,kw,ti OR ‘biomech*’:ab,kw,ti OR ‘kinematic*’:ab,kw,ti OR ‘position*’:ab,kw,ti OR ‘location*’:ab,kw,ti) AND (‘motion capture’:ab,kw,ti OR ‘mocap’:ab,kw,ti OR ‘acceleromet*’:ab,kw,ti OR ‘motion tracking’:ab,kw,ti OR ‘wearable sensor*’:ab,kw,ti OR ‘inertial sensor*’:ab,kw,ti OR ‘inertial measur*’:ab,kw,ti OR ‘imu’:ab,kw,ti OR ‘magnetomet*’:ab,kw,ti OR ‘gyroscop*’:ab,kw,ti OR ‘mems’:ab,kw,ti) AND (‘industr*’:ab,kw,ti OR ‘manufactur*’:ab,kw,ti OR ‘occupation*’:ab,kw,ti OR ‘factory’:ab,kw,ti OR ‘assembly’:ab,kw,ti OR ‘safety’:ab,kw,ti) NOT (‘animal’:ti,kw OR ‘surg*’:ti,kw OR ‘rehabilitation’:ti,kw OR ‘disease*’:ti,kw OR ‘sport*’:kw,ti OR ‘therap*’:kw,ti OR ‘treatment’:kw,ti OR ‘patient’:kw,ti) AND ([article]/lim OR [article in press]/lim OR [conference paper]/lim OR [conference review]/lim OR [data papers]/lim OR [review]/lim) AND [english]/lim AND [abstracts]/lim AND ([embase]/lim OR [medline]/lim OR [pubmed-not-medline]/lim) AND [2015–2020]/py AND [medline]/lim |
| Scopus | TITLE-ABS-KEY (“motion analys*” OR “movement analys*” OR “movement monitor*” OR biomech* OR kinematic* OR position* OR location*) AND TITLE-ABS-KEY(“motion capture” OR mocap OR acceleromet* OR “motion tracking” OR wearable sensor* OR “inertial sensor*” OR “inertial measur*” OR imu OR magnetomet* OR gyroscop* OR mems) AND TITLE-ABS-KEY(industr* OR manufactur* OR occupation* OR factory OR assembly OR safety) AND PUBYEAR > 2014 AND (LIMIT-TO (PUBSTAGE, “final”)) AND (LIMIT-TO (DOCTYPE, “cp”) OR LIMIT-TO (DOCTYPE, “ar”) OR LIMIT-TO (DOCTYPE, “cr”) OR LIMIT-TO (DOCTYPE, “re”)) |
| Web of Science | TS = (imu OR “wearable sensor*” OR wearable*) AND TS = (“motion analys*” OR “motion track*” OR “movement analys*” OR “motion analys*” OR biomech* OR kinematic*) AND TS = (industr* OR manufactur* OR occupation* OR factory OR assembly OR safety) NOT TS = (animal* OR patient*) NOT TS = (surg* OR rehabilitation OR disease* OR sport* OR therap* OR treatment* OR rehabilitation OR “energy harvest*”) |
| Google Scholar | (“motion|movement analysis|monitoring” OR biomechanics OR kinematic OR position OR Location) (“motion capture|tracking” OR mocap OR accelerometer OR “wearable|inertial sensor|measuring” OR mems) (industry OR manufacturing OR occupation OR factory OR safety) |
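The Scopus string above follows a common structure: synonyms within one concept are joined with OR, the concept groups are joined with AND, and a publication-year filter is appended. As an illustration only (the helper names and the shortened term lists are hypothetical, not the authors' tooling), the sketch below rebuilds a reduced version of that string in Python 3.9+ from term lists, quoting any multi-word term as a phrase.

```python
# Hypothetical sketch: terms inside a concept group are OR-ed, groups are
# AND-ed, and a year filter is appended, mirroring the Scopus row above.
# The term lists are shortened for illustration.


def quote_term(term: str) -> str:
    """Wrap multi-word terms in double quotes; leave single words bare."""
    return f'"{term}"' if " " in term else term


def build_scopus_query(groups: list[list[str]], min_year: int) -> str:
    """OR the terms inside each group, AND the groups, then add PUBYEAR."""
    clauses = [
        "TITLE-ABS-KEY(" + " OR ".join(quote_term(t) for t in group) + ")"
        for group in groups
    ]
    return " AND ".join(clauses) + f" AND PUBYEAR > {min_year}"


motion = ["motion analys*", "movement analys*", "biomech*", "kinematic*"]
sensing = ["motion capture", "mocap", "acceleromet*", "imu"]
setting = ["industr*", "manufactur*", "factory", "assembly"]

query = build_scopus_query([motion, sensing, setting], min_year=2014)
print(query)
```

Keeping the concept groups as data makes it easier to keep the Embase, Scopus and Web of Science variants aligned, since only the field syntax differs between databases.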

References

1. Zhang, Z. Microsoft Kinect Sensor and Its Effect. IEEE MultiMedia 2012, 19, 4–10.

2. Roetenberg, D.; Luinge, H.; Slycke, P. Xsens MVN: Full 6DOF human motion tracking using miniature inertial sensors. Xsens Motion Technologies BV. Tech. Rep. 2009, 1.

3. Bohannon, R.W.; Harrison, S.; Kinsella-Shaw, J. Reliability and validity of pendulum test measures of spasticity obtained with the Polhemus tracking system from patients with chronic stroke. J. Neuroeng. Rehabil. 2009, 6, 30.

4. Park, Y.; Lee, J.; Bae, J. Development of a wearable sensing glove for measuring the motion of fingers using linear potentiometers and flexible wires. IEEE Trans. Ind. Inform. 2014, 11, 198–206.

5. Bentley, M. Wireless and Visual Hybrid Motion Capture System. U.S. Patent 9,320,957, 26 April 2016.

6. Komaris, D.-S.; Perez-Valero, E.; Jordan, L.; Barton, J.; Hennessy, L.; O’Flynn, B.; Tedesco, S. Predicting three-dimensional ground reaction forces in running by using artificial neural networks and lower body kinematics. IEEE Access 2019, 7, 156779–156786.

7. Jin, M.; Zhao, J.; Jin, J.; Yu, G.; Li, W. The adaptive Kalman filter based on fuzzy logic for inertial motion capture system. Measurement 2014, 49, 196–204.

8. Komaris, D.-S.; Govind, C.; Clarke, J.; Ewen, A.; Jeldi, A.; Murphy, A.; Riches, P.L. Identifying car ingress movement strategies before and after total knee replacement. Int. Biomech. 2020, 7, 9–18.

9. Aminian, K.; Najafi, B. Capturing human motion using body-fixed sensors: Outdoor measurement and clinical applications. Comput. Animat. Virtual Worlds 2004, 15, 79–94.

10. Tamir, M.; Oz, G. Real-Time Objects Tracking and Motion Capture in Sports Events. U.S. Patent Application No. 11/909,080, 14 August 2008.

11. Bregler, C. Motion capture technology for entertainment [in the spotlight]. IEEE Signal. Process. Mag. 2007, 24.

12. Geng, W.; Yu, G. Reuse of motion capture data in animation: A Review. In Proceedings of the Lecture Notes in Computer Science; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2003; pp. 620–629.

13. Field, M.; Stirling, D.; Naghdy, F.; Pan, Z. Motion capture in robotics review. In Proceedings of the 2009 IEEE International Conference on Control and Automation; Institute of Electrical and Electronics Engineers (IEEE), Christchurch, New Zealand, 9–11 December 2009; pp. 1697–1702.

14. Plantard, P.; Shum, H.P.H.; Le Pierres, A.-S.; Multon, F. Validation of an ergonomic assessment method using Kinect data in real workplace conditions. Appl. Ergon. 2017, 65, 562–569.

15. Valero, E.; Sivanathan, A.; Bosché, F.; Abdel-Wahab, M. Analysis of construction trade worker body motions using a wearable and wireless motion sensor network. Autom. Constr. 2017, 83, 48–55.

16. Brigante, C.M.N.; Abbate, N.; Basile, A.; Faulisi, A.C.; Sessa, S. Towards miniaturization of a MEMS-based wearable motion capture system. IEEE Trans. Ind. Electron. 2011, 58, 3234–3241.

17. Dong, M.; Li, J.; Chou, W. A new positioning method for remotely operated vehicle of the nuclear power plant. Ind. Robot. Int. J. 2019, 47, 177–186.

18. Hondori, H.M.; Khademi, M. A review on technical and clinical impact of microsoft kinect on physical therapy and rehabilitation. J. Med. Eng. 2014, 2014, 1–16.

19. Barris, S.; Button, C. A review of vision-based motion analysis in sport. Sports Med. 2008, 38, 1025–1043.

20. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

21. Loconsole, C.; Leonardis, D.; Barsotti, M.; Solazzi, M.; Frisoli, A.; Bergamasco, M.; Troncossi, M.; Foumashi, M.M.; Mazzotti, C.; Castelli, V.P. An emg-based robotic hand exoskeleton for bilateral training of grasp. In Proceedings of the 2013 World Haptics Conference (WHC); Institute of Electrical and Electronics Engineers (IEEE), Daejeon, Korea, 14–17 April 2013; pp. 537–542.

22. Downes, M.J.; Brennan, M.L.; Williams, H.C.; Dean, R.S. Development of a critical appraisal tool to assess the quality of cross-sectional studies (AXIS). BMJ Open 2016, 6, e011458.

23. Bortolini, M.; Faccio, M.; Gamberi, M.; Pilati, F. Motion Analysis System (MAS) for production and ergonomics assessment in the manufacturing processes. Comput. Ind. Eng. 2020, 139, 105485.

24. Akhavian, R.; Behzadan, A.H. Productivity analysis of construction worker activities using smartphone sensors. In Proceedings of the 16th International Conference on Computing in Civil and Building Engineering (ICCCBE2016), Osaka, Japan, 6–8 July 2016.

25. Krüger, J.; Nguyen, T.D. Automated vision-based live ergonomics analysis in assembly operations. CIRP Ann. 2015, 64, 9–12.

26. Austad, H.; Wiggen, Ø.; Færevik, H.; Seeberg, T.M. Towards a wearable sensor system for continuous occupational cold stress assessment. Ind. Health 2018, 56, 228–240.

27. Brents, C.; Hischke, M.; Reiser, R.; Rosecrance, J.C. Low Back Biomechanics of Keg Handling Using Inertial Measurement Units. In Software Engineering in Intelligent Systems; Springer Science and Business Media: Berlin/Heidelberg, Germany, 2018; Volume 825, pp. 71–81.

28. Caputo, F.; Greco, A.; D’Amato, E.; Notaro, I.; Sardo, M.L.; Spada, S.; Ghibaudo, L. A human postures inertial tracking system for ergonomic assessments. In Proceedings of the 20th Congress of the International Ergonomics Association (IEA 2018), Florence, Italy, 26–30 August 2018; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2018; Volume 825, pp. 173–184.

29. Greco, A.; Muoio, M.; Lamberti, M.; Gerbino, S.; Caputo, F.; Miraglia, N. Integrated wearable devices for evaluating the biomechanical overload in manufacturing. In Proceedings of the 2019 II Workshop on Metrology for Industry 4.0 and IoT (MetroInd4.0&IoT), Naples, Italy, 4–6 June 2019; pp. 93–97.

30. Lin, H.J.; Chang, H.S. In-process monitoring of micro series spot welding using dual accelerometer system. Weld. World 2019, 63, 1641–1654.

31. Malaisé, A.; Maurice, P.; Colas, F.; Charpillet, F.; Ivaldi, S. Activity Recognition with Multiple Wearable Sensors for Industrial Applications. In Proceedings of the ACHI 2018-Eleventh International Conference on Advances in Computer-Human Interactions, Rome, Italy, 25 March 2018.

32. Zhang, Y.; Zhang, C.; Nestler, R.; Notni, G. Efficient 3D object tracking approach based on convolutional neural network and Monte Carlo algorithms used for a pick and place robot. Photonics Educ. Meas. Sci. 2019, 11144, 1114414.

33. Tuli, T.B.; Manns, M. Real-time motion tracking for humans and robots in a collaborative assembly task. Proceedings 2020, 42, 48.

34. Agethen, P.; Otto, M.; Mengel, S.; Rukzio, E. Using marker-less motion capture systems for walk path analysis in paced assembly flow lines. Procedia CIRP 2016, 54, 152–157.

35. Fletcher, S.; Johnson, T.; Thrower, J. A study to trial the use of inertial non-optical motion capture for ergonomic analysis of manufacturing work. Proc. Inst. Mech. Eng. Part. B J. Eng. Manuf. 2016, 232, 90–98.

36. Kim, K.; Chen, J.; Cho, Y.K. Evaluation of machine learning algorithms for worker’s motion recognition using motion sensors. Comput. Civ. Eng. 2019, 51–58.

37. McGregor, A.; Dobie, G.; Pearson, N.; MacLeod, C.; Gachagan, A. Mobile robot positioning using accelerometers for pipe inspection. In Proceedings of the 14th International Conference on Concentrator Photovoltaic Systems, Puertollano, Spain, 16–18 April 2018; AIP Publishing: Melville, NY, USA, 2019; Volume 2102, p. 060004.

38. Müller, B.C.; Nguyen, T.D.; Dang, Q.-V.; Duc, B.M.; Seliger, G.; Krüger, J.; Kohl, H. Motion tracking applied in assembly for worker training in different locations. Procedia CIRP 2016, 48, 460–465.

39. Nath, N.D.; Akhavian, R.; Behzadan, A.H. Ergonomic analysis of construction worker’s body postures using wearable mobile sensors. Appl. Ergon. 2017, 62, 107–117.

40. Papaioannou, S.; Markham, A.; Trigoni, N. Tracking people in highly dynamic industrial environments. IEEE Trans. Mob. Comput. 2016, 16, 2351–2365.

41. Ragaglia, M.; Zanchettin, A.M.; Rocco, P. Trajectory generation algorithm for safe human-robot collaboration based on multiple depth sensor measurements. Mechatronics 2018, 55, 267–281.

42. Scimmi, L.S.; Melchiorre, M.; Mauro, S.; Pastorelli, S.P. Implementing a Vision-Based Collision Avoidance Algorithm on a UR3 Robot. In Proceedings of the 2019 23rd International Conference on Mechatronics Technology (ICMT), Salerno, Italy, 23–26 October 2019; Institute of Electrical and Electronics Engineers (IEEE): New York City, NY, USA, 2019; pp. 1–6.

43. Sestito, A.G.; Frasca, T.M.; O’Rourke, A.; Ma, L.; Dow, D.E. Control for camera of a telerobotic human computer interface. Educ. Glob. 2015, 5.

44. Yang, K.; Ahn, C.; Vuran, M.C.; Kim, H. Sensing Workers gait abnormality for safety hazard identification. In Proceedings of the 33rd International Symposium on Automation and Robotics in Construction (ISARC), Auburn, AL, USA, 18–21 July 2016; pp. 957–965.

45. Tarabini, M.; Marinoni, M.; Mascetti, M.; Marzaroli, P.; Corti, F.; Giberti, H.; Villa, A.; Mascagni, P. Monitoring the human posture in industrial environment: A feasibility study. In Proceedings of the 2018 IEEE Sensors Applications Symposium (SAS), Seoul, Korea, 12–14 March 2018; pp. 1–6.

46. Jha, A.; Chiddarwar, S.S.; Bhute, R.Y.; Alakshendra, V.; Nikhade, G.; Khandekar, P.M. Imitation learning in industrial robots. In Proceedings of the Advances in Robotics on-AIR ’17, New Delhi, India, 28 June–2 July 2017; pp. 1–6.

47. Lim, T.-K.; Park, S.-M.; Lee, H.-C.; Lee, D.-E. Artificial neural network-based slip-trip classifier using smart sensor for construction workplace. J. Constr. Eng. Manag. 2016, 142, 04015065.

48. Maman, Z.S.; Yazdi, M.A.A.; Cavuoto, L.A.; Megahed, F.M. A data-driven approach to modeling physical fatigue in the workplace using wearable sensors. Appl. Ergon. 2017, 65, 515–529.

49. Merino, G.S.A.D.; Da Silva, L.; Mattos, D.; Guimarães, B.; Merino, E.A.D. Ergonomic evaluation of the musculoskeletal risks in a banana harvesting activity through qualitative and quantitative measures, with emphasis on motion capture (Xsens) and EMG. Int. J. Ind. Ergon. 2019, 69, 80–89.

50. Monaco, M.G.L.; Fiori, L.; Marchesi, A.; Greco, A.; Ghibaudo, L.; Spada, S.; Caputo, F.; Miraglia, N.; Silvetti, A.; Draicchio, F. Biomechanical overload evaluation in manufacturing: A novel approach with sEMG and inertial motion capture integration. In Software Engineering in Intelligent Systems; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2018; Volume 818, pp. 719–726.

51. Monaco, M.G.L.; Marchesi, A.; Greco, A.; Fiori, L.; Silvetti, A.; Caputo, F.; Miraglia, N.; Draicchio, F. Biomechanical load evaluation by means of wearable devices in industrial environments: An inertial motion capture system and sEMG based protocol. In Software Engineering in Intelligent Systems; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2018; Volume 795, pp. 233–242.

52. Mueller, F.; Deuerlein, C.; Koch, M. Intuitive welding robot programming via motion capture and augmented reality. IFAC-PapersOnLine 2019, 52, 294–299.

53. Nahavandi, D.; Hossny, M. Skeleton-free RULA ergonomic assessment using Kinect sensors. Intell. Decis. Technol. 2017, 11, 275–284.

54. Peppoloni, L.; Filippeschi, A.; Ruffaldi, E.; Avizzano, C.A. A novel wearable system for the online assessment of risk for biomechanical load in repetitive efforts. Int. J. Ind. Ergon. 2016, 52, 1–11.

55. Seo, J.; Alwasel, A.A.; Lee, S.; Abdel-Rahman, E.M.; Haas, C. A comparative study of in-field motion capture approaches for body kinematics measurement in construction. Robotica 2017, 37, 928–946.

56. Tao, Q.; Kang, J.; Sun, W.; Li, Z.; Huo, X. Digital evaluation of sitting posture comfort in human-vehicle system under industry 4.0 framework. Chin. J. Mech. Eng. 2016, 29, 1096–1103.

57. Wang, W.; Li, R.; Diekel, Z.M.; Chen, Y.; Zhang, Z.; Jia, Y. Controlling object hand-over in human-robot collaboration via natural wearable sensing. IEEE Trans. Hum. Mach. Syst. 2019, 49, 59–71.

58. Yang, K.; Jebelli, H.; Ahn, C.R.; Vuran, M.C. Threshold-Based Approach to Detect Near-Miss Falls of Iron Workers Using Inertial Measurement Units. Comput. Civ. Eng. 2015, 148–155.

59. Yang, K.; Ahn, C.; Kim, H. Validating ambulatory gait assessment technique for hazard sensing in construction environments. Autom. Constr. 2019, 98, 302–309.

60. Yang, K.; Ahn, C.; Vuran, M.C.; Kim, H. Collective sensing of workers’ gait patterns to identify fall hazards in construction. Autom. Constr. 2017, 82, 166–178.

61. Albert, D.L.; Beeman, S.M.; Kemper, A.R. Occupant kinematics of the Hybrid III, THOR-M, and postmortem human surrogates under various restraint conditions in full-scale frontal sled tests. Traffic Inj. Prev. 2018, 19, S50–S58.

62. Cardoso, M.; McKinnon, C.; Viggiani, D.; Johnson, M.J.; Callaghan, J.P.; Albert, W.J. Biomechanical investigation of prolonged driving in an ergonomically designed truck seat prototype. Ergonomics 2017, 61, 367–380.

63. Ham, Y.; Yoon, H. Motion and visual data-driven distant object localization for field reporting. J. Comput. Civ. Eng. 2018, 32, 04018020.

64. Herwan, J.; Kano, S.; Ryabov, O.; Sawada, H.; Kasashima, N.; Misaka, T. Retrofitting old CNC turning with an accelerometer at a remote location towards Industry 4.0. Manuf. Lett. 2019, 21, 56–59.

65. Jebelli, H.; Ahn, C.R.; Stentz, T.L. Comprehensive fall-risk assessment of construction workers using inertial measurement units: Validation of the gait-stability metric to assess the fall risk of iron workers. J. Comput. Civ. Eng. 2016, 30, 04015034.

66. Kim, H.; Ahn, C.; Yang, K. Identifying safety hazards using collective bodily responses of workers. J. Constr. Eng. Manag. 2017, 143, 04016090.

67. Oyekan, J.; Prabhu, V.; Tiwari, A.; Baskaran, V.; Burgess, M.; McNally, R. Remote real-time collaboration through synchronous exchange of digitised human–workpiece interactions. Futur. Gener. Comput. Syst. 2017, 67, 83–93.

68. Prabhu, V.A.; Song, B.; Thrower, J.; Tiwari, A.; Webb, P. Digitisation of a moving assembly operation using multiple depth imaging sensors. Int. J. Adv. Manuf. Technol. 2015, 85, 163–184.

69. Yang, K.; Ahn, C.; Vuran, M.C.; Aria, S.S. Semi-supervised near-miss fall detection for ironworkers with a wearable inertial measurement unit. Autom. Constr. 2016, 68, 194–202.

70. Zhong, H.; Kanhere, S.S.; Chou, C.T. WashInDepth: Lightweight hand wash monitor using depth sensor. In Proceedings of the 13th Annual International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Hiroshima, Japan, 28 November–1 December 2016; pp. 28–37.

71. Baghdadi, A.; Megahed, F.M.; Esfahani, E.T.; Cavuoto, L.A. A machine learning approach to detect changes in gait parameters following a fatiguing occupational task. Ergonomics 2018, 61, 1116–1129.

72. Balaguier, R.; Madeleine, P.; Rose-Dulcina, K.; Vuillerme, N. Trunk kinematics and low back pain during pruning among vineyard workers-A field study at the Chateau Larose-Trintaudon. PLoS ONE 2017, 12, e0175126.

73. Faber, G.S.; Koopman, A.S.; Kingma, I.; Chang, C.; Dennerlein, J.T.; Van Dieën, J.H. Continuous ambulatory hand force monitoring during manual materials handling using instrumented force shoes and an inertial motion capture suit. J. Biomech. 2018, 70, 235–241.

74. Hallman, D.M.; Jørgensen, M.B.; Holtermann, A. Objectively measured physical activity and 12-month trajectories of neck–shoulder pain in workers: A prospective study in DPHACTO. Scand. J. Public Health 2017, 45, 288–298.

75. Jebelli, H.; Ahn, C.; Stentz, T.L. Fall risk analysis of construction workers using inertial measurement units: Validating the usefulness of the postural stability metrics in construction. Saf. Sci. 2016, 84, 161–170.

76. Kim, H.; Ahn, C.; Stentz, T.L.; Jebelli, H. Assessing the effects of slippery steel beam coatings to ironworkers’ gait stability. Appl. Ergon. 2018, 68, 72–79.

77. Mehrizi, R.; Peng, X.; Xu, X.; Zhang, S.; Metaxas, D.; Li, K. A computer vision based method for 3D posture estimation of symmetrical lifting. J. Biomech. 2018, 69, 40–46.

78. Chen, H.; Luo, X.; Zheng, Z.; Ke, J. A proactive workers’ safety risk evaluation framework based on position and posture data fusion. Autom. Constr. 2019, 98, 275–288.

79. Dutta, T. Evaluation of the Kinect™ sensor for 3-D kinematic measurement in the workplace. Appl. Ergon. 2012, 43, 645–649.

80. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

81. Ferrari, E.; Gamberi, M.; Pilati, F.; Regattieri, A. Motion Analysis System for the digitalization and assessment of manual manufacturing and assembly processes. IFAC-PapersOnLine 2018, 51, 411–416.

82. Van Der Kruk, E.; Reijne, M.M. Accuracy of human motion capture systems for sport applications; state-of-the-art review. Eur. J. Sport Sci. 2018, 18, 806–819.

83. Kim, G.; Menon, R. Computational imaging enables a “see-through” lens-less camera. Opt. Express 2018, 26, 22826–22836.

84. Abraham, L.; Urru, A.; Wilk, M.P.; Tedesco, S.; O’Flynn, B. 3D ranging and tracking using lensless smart sensors. In Proceedings of the 11th Smart Systems Integration, SSI 2017: International Conference and Exhibition on Integration Issues of Miniaturized Systems, Cork, Ireland, 8–9 March 2017; pp. 1–8.

85. Normani, N.; Urru, A.; Abraham, A.; Walsh, M.; Tedesco, S.; Cenedese, A.; Susto, G.A.; O’Flynn, B. A machine learning approach for gesture recognition with a lensless smart sensor system. In Proceedings of the 2018 IEEE 15th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Las Vegas, NV, USA, 4–7 March 2018; pp. 136–139.

| Question Number | AXIS Question Code | Question |
|---|---|---|
| INTRODUCTION | | |
| Q1 | 1 | Were the aims/objectives of the study clear? |
| METHODS | | |
| Q2 | 2 | Was the study design appropriate for the stated aim(s)? |
| Q3 | 3, 4 and 5 | Was the sample size justified, clearly defined, and taken from an appropriate population? |
| Q4 | 8 | Were the outcome variables measured appropriate to the aims of the study? |
| Q5 | 9 | Were the outcome variables measured correctly using instruments/measurements that had been trialled, piloted or published previously? |
| Q6 | 10 | Is it clear what was used to determine statistical significance and/or precision estimates (e.g., p-values, confidence intervals)? |
| Q7 | 11 | Were the methods sufficiently described to enable them to be repeated? |
| RESULTS | | |
| Q8 | 12 | Were the basic data adequately described? |
| Q9 | 16 | Were the results presented for all the analyses described in the methods? |
| DISCUSSION | | |
| Q10 | 17 | Were the authors’ discussions and conclusions justified by the results? |
| Q11 | 18 | Were the limitations of the study discussed? |
| OTHER | | |
| Q12 | 19 | Were there any funding sources or conflicts of interest that may affect the authors’ interpretation of the results? |

| Risk of Bias | Score | Study | Number of Studies |
|---|---|---|---|
| High | 0–6 | - | 0 |
| Medium | 7 | [24,25] | 2 |
| Medium | 8 | [23,26,27,28,29,30,31,32,33] | 8 |
| Medium | 9 | [34,35,36,37,38,39,40,41,42,43,44,45] | 12 |
| Low | 10 | [14,15,36,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60] | 19 |
| Low | 11 | [17,61,62,63,64,65,66,67,68,69,70] | 11 |
| Low | 12 | [71,72,73,74,75,76,77] | 7 |
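The score bands in the table (0–6 high, 7–9 medium, 10–12 low risk) can be read as a simple lookup on the number of the 12 AXIS-derived questions that a study satisfies. The sketch below assumes that each of Q1–Q12 contributes one point when answered favourably (for Q12, that means no concerning funding source or conflict of interest); the function names and the example answers are hypothetical.

```python
# Hypothetical sketch: one point per favourably answered question (Q1-Q12),
# then the score is mapped onto the risk-of-bias bands used in the table.
from collections import Counter

N_QUESTIONS = 12


def axis_score(answers: dict[str, bool]) -> int:
    """Count favourable answers; expects keys 'Q1'..'Q12'."""
    expected = {f"Q{i}" for i in range(1, N_QUESTIONS + 1)}
    missing = expected - set(answers)
    if missing:
        raise ValueError(f"missing answers: {sorted(missing)}")
    return sum(answers[q] for q in expected)


def classify_risk(score: int) -> str:
    """Map a 0-12 score onto the bands 0-6 (High), 7-9 (Medium), 10-12 (Low)."""
    if not 0 <= score <= N_QUESTIONS:
        raise ValueError("score must be between 0 and 12")
    if score <= 6:
        return "High"
    if score <= 9:
        return "Medium"
    return "Low"


# Hypothetical study: favourable on every question except Q3 and Q6.
example = {f"Q{i}": i not in (3, 6) for i in range(1, N_QUESTIONS + 1)}
score = axis_score(example)
print(score, classify_risk(score))  # 10 Low

# Tally the bands for a list of illustrative scores.
print(Counter(classify_risk(s) for s in [7, 8, 9, 10, 11, 12]))
```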

| Sensors | Study | Number of Studies | Percentage of Studies |
|---|---|---|---|
| IMUs | [24,26,27,28,29,30,35,36,37,39,44,47,48,49,50,51,58,59,60,64,65,66,69,71,72,73,74,75,76] | 29 | 49.2% |
| Camera-based Sensors * | [14,23,25,32,33,34,38,41,42,43,46,52,53,55,67,68,70,77] | 18 | 30.5% |
| IMUs + Camera-based Sensors | [15,45,56,61,62,63] | 6 | 10.2% |
| IMUs + Other Technologies | [17,31,54,57,78] | 5 | 8.5% |
| IMUs + Camera-based + Other Technologies | [40] | 1 | 1.7% |
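Each percentage in the sensor table is the category count divided by the 59 included studies. The sketch below recomputes the table from its study lists, assuming every study belongs to exactly one sensor category (the helper raises an error otherwise); the function name `summarise` is a hypothetical illustration, and the same function could be pointed at the industry or application lists.

```python
# Hypothetical sketch: recompute counts and percentages from the study lists
# in the sensor table, assuming each study appears in exactly one category.

sensor_groups = {
    "IMUs": [24, 26, 27, 28, 29, 30, 35, 36, 37, 39, 44, 47, 48, 49, 50,
             51, 58, 59, 60, 64, 65, 66, 69, 71, 72, 73, 74, 75, 76],
    "Camera-based Sensors": [14, 23, 25, 32, 33, 34, 38, 41, 42, 43, 46,
                             52, 53, 55, 67, 68, 70, 77],
    "IMUs + Camera-based Sensors": [15, 45, 56, 61, 62, 63],
    "IMUs + Other Technologies": [17, 31, 54, 57, 78],
    "IMUs + Camera-based + Other Technologies": [40],
}


def summarise(groups: dict[str, list[int]]) -> dict[str, tuple[int, float]]:
    """Return (count, percentage rounded to one decimal) for each category."""
    all_ids = [sid for ids in groups.values() for sid in ids]
    if len(all_ids) != len(set(all_ids)):
        raise ValueError("a study appears in more than one category")
    total = len(all_ids)
    return {name: (len(ids), round(100 * len(ids) / total, 1))
            for name, ids in groups.items()}


for name, (count, pct) in summarise(sensor_groups).items():
    print(f"{name}: {count} ({pct}%)")
```

Rounding each share independently means the column may not add up to exactly 100.0%; that is expected and not a counting error.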

| Sensors | IMUs | Camera-Based (Marker-Based) | Camera-Based (Marker-Less) |
|---|---|---|---|
| Accuracy | High (0.75° to 1.5°) ³ | Very high (0.1 mm and 0.5°) ¹; subject to number/location of cameras | Low (static, 0.0348 m [79]); subject to distance from camera |
| Setup | Straightforward; subject to number of IMUs | Requires time-consuming and frequent calibrations | Usually requires checkerboard calibrations |
| Capture volumes | Only subject to distance from station (if required) | Varies; up to 15 × 15 × 6 m ¹ | Field of view: 70.6° × 60°; 8 m depth range ⁵ |
| Cost of installation | From USD 50 per unit to over USD 12,000 for a full-body suit ⁴ | Varies; from USD 5000 ² to USD 150,000 ¹ | USD 200 ⁵ per unit |
| Ease of use and data processing | Usually outputs raw sensor data as ASCII files | Usually highly automated; outputs full 3D kinematics | Requires custom-made processing algorithms |
| Invasiveness (individual) | Minimal | High (marker attachment) | Minimal |
| Invasiveness (workplace) | Minimal | High (typically 6- to 12-camera systems) | Medium (typically 1- to 4-camera systems) |
| Line-of-sight necessity | No | Yes | Yes |
| Portability | Yes | Limited | Yes |
| Range | Usually up to 20 m from station ³ (if wireless) | Up to 30 m camera-to-marker ¹ | Low: skeleton tracking range of 0.5 m to 4.5 m ⁵ |
| Sampling rate | Usually from 60 to 120 Hz ³ (if wireless) | Usually up to 250 Hz ¹ (subject to resolution) | Varies; 15–30 Hz ⁵ or higher for high-speed cameras |
| Software | Usually requires bespoke or off-the-shelf software | Requires off-the-shelf software | Requires bespoke software; off-the-shelf solutions not available |
| Noise sources and environmental interference | Ferromagnetic disturbances, temperature changes | Bright light and vibrations | IR interference from overlapping coverage, angle of observed surface |
| Other limitations | Drift, battery life, no direct position tracking | Camera obstructions | Camera obstructions, difficulty tracking bright or dark objects |
| Favoured applications | Activity recognition [31], identification of hazardous events/poses [44,58,60,65,66,69,75] | Human–robot collaboration [42], robot trajectory planning [52] | Activity tracking [34], gesture or pose classification [25,45,53] |
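The drift listed among IMU limitations follows from how position would have to be derived from an accelerometer: by integrating acceleration twice. If a residual bias b remains after gravity compensation, the velocity error grows as b·t and the position error as ½·b·t², so a bias of only 0.01 m/s² already amounts to 18 m after one minute. The sketch below, with hypothetical bias and sampling values, compares a discrete double integration with that closed-form result; it ignores noise, orientation error and any drift-correction scheme a real system would apply.

```python
# Hypothetical sketch: a stationary IMU whose accelerometer reports a
# constant bias (m/s^2) after gravity removal. Integrating twice turns the
# bias into a position error that grows with t^2.


def drift_from_bias(bias: float, rate_hz: float, duration_s: float) -> float:
    """Integrate a constant acceleration bias twice with simple Euler steps."""
    dt = 1.0 / rate_hz
    velocity = 0.0
    position = 0.0
    for _ in range(int(round(duration_s * rate_hz))):
        velocity += bias * dt      # acceleration -> velocity
        position += velocity * dt  # velocity -> position
    return position


def drift_closed_form(bias: float, duration_s: float) -> float:
    """Continuous-time result: p(t) = 0.5 * b * t^2."""
    return 0.5 * bias * duration_s ** 2


bias = 0.01  # m/s^2, hypothetical residual bias
for t in (1, 10, 60):
    print(f"{t:>3} s: numeric {drift_from_bias(bias, 100, t):.3f} m, "
          f"closed form {drift_closed_form(bias, t):.3f} m")
```

The small gap between the two columns comes from the Euler step; the quadratic growth is the point, and it is why IMU-only systems report orientation well but offer no direct position tracking.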

| Industry | Study | Number of Studies | Percentage of Studies |
|---|---|---|---|
| Construction Industry | [15,24,36,39,40,44,47,55,58,59,60,63,65,66,69,75,76,78] | 18 | 30.5% |
| Generic | [14,23,25,26,31,45,48,53,54,71,73,74,77] | 13 | 22.0% |
| Industrial Robot Manufacturing | [30,32,33,41,42,43,46,52,57] | 9 | 15.3% |
| Automotive and Cycling Industry | [28,29,34,38,61,62] | 6 | 10.2% |
| Agriculture and Timber | [50,51,56,67,68] | 5 | 8.5% |
| Food Industry | [27,70,72] | 3 | 5.1% |
| Aerospace Manufacturing | [35,49] | 2 | 3.4% |
| Energy Industry | [17] | 1 | 1.7% |
| Petroleum Industry | [37] | 1 | 1.7% |
| Steel Industry | [64] | 1 | 1.7% |

| Applications | Study | Number of Studies | Percentage of Studies |
|---|---|---|---|
| Workers’ Health and Safety | [14,15,25,26,27,28,29,33,35,36,39,41,42,44,45,47,48,49,50,51,53,54,55,58,59,60,65,66,69,70,71,72,73,74,75,76,77,78] | 38 | 64.4% |
| Improvement of Industrial Process or Product | [32,43,46,52,56,57,61,62,67,68] | 10 | 16.9% |
| Workers’ Productivity Improvement | [23,24,31,34,38,40] | 6 | 10.2% |
| Machinery Monitoring and Quality Control | [17,30,37,63,64] | 5 | 8.5% |

| Study | Machine Learning Model | Input Data | Training Dataset | Classification Output | Accuracy |
|---|---|---|---|---|---|
| [24] | ANN, k-NN | Magnitude of linear and angular acceleration | Manually labelled video sequences | Activity recognition | 94.11% |
| [71] | SVM | Eight different motion components (2D position trajectories, profile magnitudes of velocity, acceleration and jerk, angle and velocity) | 2000 manually labelled sample data points | 2 fatigue states (yes or no) | 90% |
| [63] | Bayes classifier | Acceleration | Labelled sensor data features | Landmark type (lift, staircase, etc.) | 96.8% * |
| [64] | ANN | Cutting speed, feed rate, depth of cut, and the three peak spectrum amplitudes from vibration signals in three directions | Labelled cutting and vibration data from 9 experiments | Worn tool condition (yes or no) | 94.9% |
| [36] | k-NN, MLP, RF, SVM | Quaternions, three-dimensional acceleration, linear velocity, and angular velocity | Manually labelled video sequences | 14 activities (e.g., bending-up and bending-down) | 79.83% (best model: RF) |
| [25] | RF | Joint angles evaluated from an artificial model built on a segmentation of depth images | Manually labelled video sequences | 5 different postures | 87.1% |
| [47] | ANN | Three-dimensional acceleration | Labelled dataset | Walk/slip/trip | 94% |
| [53] | RF | Depth comparison features (DCF) from depth images | Labelled dataset of 5000 images | 7 RULA score postures | 93% |
| [57] | DAG-SVM | Rotation angle and EMG signals | Dataset acquired with known object weights | Light, large, and heavy objects | 96.67% |
| [69] | One-class SVM | Acceleration | Dataset of normal walking samples | 2 states (walk, near-miss fall) | 86.4% |
| [32] | CNN | Distance of nearest point, curvature and HSV colour | Pre-existing dataset of objects from YOLO [80] | Object type | 97.3% * |
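Most of the classifiers above share one pipeline: segment the sensor stream into windows, compute features per window, train on labelled windows, and report accuracy on held-out data. The sketch below (Python 3.9+) illustrates that pipeline under stated assumptions: tri-axial accelerometer windows, two hypothetical features (mean and standard deviation of the acceleration magnitude), a plain k-nearest-neighbour vote with k = 3, and made-up data. It is not the feature set or implementation of any study in the table.

```python
# Hypothetical sketch of a window -> features -> k-NN -> accuracy pipeline.
# Features, data and labels are illustrative, not taken from any study.
import math
from collections import Counter
from statistics import mean, pstdev

Sample = tuple[float, float, float]  # (ax, ay, az) in m/s^2


def magnitude_features(window: list[Sample]) -> tuple[float, float]:
    """Mean and standard deviation of |a| = sqrt(ax^2 + ay^2 + az^2)."""
    mags = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    return mean(mags), pstdev(mags)


def knn_predict(train, query, k=3):
    """Majority vote among the k training points closest to query."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(predicted, actual):
    """Fraction of predictions that match the reference labels."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Made-up training windows: near-constant 1 g while idle, larger swings
# while working. Each list repeats the same window three times.
idle = [[(0.0, 0.0, 9.81), (0.0, 0.1, 9.79), (0.1, 0.0, 9.82)]] * 3
work = [[(2.0, 1.0, 9.0), (-3.0, 0.5, 12.0), (1.5, -2.0, 8.0)]] * 3
train = [(magnitude_features(w), "idle") for w in idle] + \
        [(magnitude_features(w), "work") for w in work]

# Held-out windows with their reference labels.
held_out = [([(0.0, 0.05, 9.80), (0.05, 0.0, 9.81)], "idle"),
            ([(2.5, 1.0, 10.5), (-2.0, 0.0, 7.5)], "work")]
preds = [knn_predict(train, magnitude_features(w)) for w, _ in held_out]
print(preds, accuracy(preds, [label for _, label in held_out]))
```

Because both features are in m/s², no scaling is applied here; with mixed units (for example, acceleration and angular velocity as in [24]) the features would normally be standardised before computing distances.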

| Study | Sample Size | Sensor Placement | Number of IMUs | MoCap Systems and Complementary Sensors | Study Findings |

|---|---|---|---|---|---|

| [34] | 1 | - | 6 × Kinect, 16 × ARTtrack2 IRCs | Evaluation of workers’ walk paths in assembly lines showed an average difference of 0.86 m in the distance between planned and recorded walk paths. | |

| [24] | 1 | Upper Body | 1 | - | Activity recognition of over 90% between different construction activities; very good accuracy in activity duration measurements. |

| [61] | 8 | - | 3 | 16 × VICON IRCs (50 × passive markers), 1 × pressure sensor, 2 × belt load cells | Both tested car crash test surrogates had comparable overall ISO scores; THOR-M scored better in acceleration and angular velocity data; Hybrid III had higher average ISO/TS 18571 scores in excursion data. |

| [26] | 11 | Upper Body | 2 | Heart rate, skin temperature, air humidity, temperature and VO2 | IMUs can discriminate rest from work but they are less accurate differentiating moderate from hard work. Activity is a reliable predictor of cold stress for workers in cold weather environments. |

| [71] | 20 | Lower Body | 1 | - | Fatigue detection of workers with an accuracy of 80%. |

| [72] | 15 | Upper body | 1 | 1 × electronic algometer | Vineyard workers spent more than 50% of their time with the trunk flexed over 30°. No relationship was found between the duration of forward bending or trunk rotation and pain intensity. |

| [23] | 1 | - | - | 4 × Kinect | Proof of concept of a MoCap system for the evaluation of the human labour in assembly workstations. |

| [27] | 5 | Full body | 17 | - | Workers lifting kegs from different heights showed different torso flexion/extension angles. |

| [28] | 2 | Full body | 7 | - | Proof of concept of an IMU system for workers’ postural analyses, with an exemplary application in the automotive industry. |

| [62] | 20 | Upper body | 3 | 4 × Optotrak IRCs (active markers), 2 × Xsensor pressure pads | No significant differences in body posture between the tested truck seats; peak and average seat pressures were higher with industry-standard seats; average trunk flexion was higher with industry-standard seats by 16% of the total RoM. |

| [78] | 3 | Upper body | 17 | 1 × UWB, 1 × Perception Neuron, 1 × phone camera | In construction tasks, the accuracy of the automatic safety risk evaluation was 83%, as compared to the results of the video evaluation by a safety expert. |

| [17] | - | Machinery | 1 | 1 × mechanical scanning sonar | The position tracking accuracy of remotely operated vehicles in a nuclear power plant was at the centimetre level when compared to a visual positioning method. |

| [73] | 16 | Full body | 17 | 1 × Certus Optotrak, 6 × Kistler FPs, 2 × Xsens instrumented shoes | The root-mean-square differences between the measured hand forces and those estimated from IMUs and instrumented force shoes during manual materials handling tasks ranged between 17 and 21 N. |

| [81] | 1 | Full body | - | 4 × depth cameras | Proof of concept of a motion analysis system for the evaluation of the human labour in assembly workstations. |

| [35] | 10 | Full body | 17 | - | Demonstrated an IMU MoCap system for the evaluation of workers’ posture in the aerospace industry. |

| [29] | 1 | Upper body | 4 | 1 × wearable camera, 3 × video cameras, 6 × BTS EMG sensors | Proof of concept of an EMG and IMU system for assessing the risk of workers’ biomechanical overload in assembly lines. |

| [74] | 625 | Full body | 4 | - | More time spent in leisure-time physical activities was associated with lower pain levels over a 12-month period. Depending on sex and working domain, high physical activity had a negative effect on the course of pain over 12 months. |

| [63] | - | Machinery | 1 | 1 × mobile phone camera | Three-dimensional localization of distant target objects in industry with an average position error of 3%. |

| [64] | - | Machinery | 1 | - | Tool wear detection in CNC machines using an accelerometer and an artificial neural network with an accuracy of 88.1%. |

| [65] | 8 | Lower Body | 1 | 1 × video camera | Distinguished low-fall-risk tasks (comfortable walking) from high-risk tasks (carrying a side load or high-speed walking) in construction workers walking on I-beams. |

| [75] | 10 | Upper body | 1 | 1 × force plate | Wearing a harness loaded with common ironworkers’ tools could be considered a moderate fall-risk task, while holding a toolbox or squatting could be considered high-risk tasks. |

| [46] | 1 | Upper body | - | 1 × Kinect | Teleoperation of a robot’s end effector through imitation of the operator’s arm motion with a similarity of 96% between demonstrated and imitated trajectories. |

| [66] | 10 | Upper body | 1 | - | The Shapiro–Wilk statistic of the acceleration metric used can distinguish workers’ movements in hazardous (slippery floor) from non-hazardous areas. |

| [76] | 16 | Lower Body | 1 | - | The gait stability while walking on coated steel beam surfaces is greatly affected by the slipperiness of the surfaces (p = 0.024). |

| [36] | 1 | Full body | 17 | 1 × video camera | Two IMU sensors on hip and either neck or head showed similar motion recognition accuracy (higher than 0.75) to a full body model of 17 IMUs (0.8) for motion classification. |

| [25] | 8 | Full body | - | 1 × Kinect | Posture classification in assembly operations from a stream of depth images with an accuracy of 87%; similar but systematically overestimated EAWS scores. |

| [47] | 3 | Lower Body | 1 | - | Identification of slip and trip events in workers’ walking using an ANN and phone accelerometer with detection accuracy of 88% for slipping, and 94% for tripping. |

| [30] | - | Machinery | 2 | - | High frequency vibrations can be detected by common accelerometers and can be used for micro series spot welder monitoring. |

| [31] | 1 | Full Body | 17 | E-glove from Emphasis Telematics | Measurements from the IMU and force sensors were used for an operator activity recognition model for pick-and-place tasks (precision 96.12%). |

| [48] | 8 | Full Body | 4 | 1 × ECG | IMUs were a better predictor of fatigue than ECG. Hip movements can predict the level of physical fatigue. |

| [37] | - | Machinery | 1 | - | The orientation of a robot (clock face and orientation angles) for pipe inspection can be estimated via an inverse model using an on-board IMU. |

| [77] | 12 | Full Body | - | Motion analysis system (45 × passive markers), 2 × camcorders | 3D pose reconstruction can be achieved by integrating morphological constraints and discriminative computer vision. Performance was activity-dependent and was affected by self-occlusion and object occlusion. |

| [49] | 3 | Full Body | 17 | 1 × manual grip dynamometer, 1 × EMG | Workers in banana harvesting adapt to the position of the bunches and to the tools used, leading to musculoskeletal risk and fatigue. |

| [50] | 2 | Upper Body | 8 | 6 × EMG | A case study on the usefulness of the integration of kinematic and EMG technologies for assessing the biomechanical overload in production lines. |

| [51] | 2 | Upper Body | 8 | 6 × EMG | Demonstration of an integrated EMG-IMU protocol for posture evaluation during work activities, tested in an automotive environment. |

| [52] | - | Machinery | - | 4 × IRCs (5 × passive markers) | Welding robot path planning with an error in the trajectory of the end-effector of less than 3 mm. |

| [38] | 42 | - | - | 1 × Kinect | The transfer of assembly knowledge between workers was faster with printed instructions than with the developed smart assembly workplace system (p = 7 × 10⁻⁹), as tested in the assembly of a bicycle e-hub. |

| [53] | - | - | - | 1 × Kinect | Real-time RULA ergonomic analysis of assembly operations in industrial environments with an accuracy of 93%. |

| [39] | - | - | 3 | 1 × Kinect, 1 × Oculus Rift | Smartphone sensors used to monitor workers’ bodily postures, with errors in the measurement of trunk and shoulder flexion of up to 17°. |

| [67] | - | - | - | 1 × Kinect | Proof of concept of a real-time MoCap platform enabling workers to work remotely on a common engineering problem during a collaboration session, aiding collaborative design, inspection and verification tasks. |

| [40] | - | - | 1 | 1 × CCTV, radio transmitter | A positioning system for tracking people in construction sites with an accuracy of approximately 0.8 m in the trajectory of the target. |

| [54] | 10 | Upper Body | 3 | EMG | A wireless wearable system for the assessment of work-related musculoskeletal disorder risks, with calculation accuracies of 95% and 45% for the RULA and SI metrics, respectively. |

| [14] | 12 | - | - | 1 × Kinect, 15 × Vicon IRC (47 × passive markers) | RULA ergonomic assessment in real work conditions using Kinect, with computed scores similar to expert observations (p = 0.74). |

| [68] | - | - | - | 2 × Kinect, 1 × laser motion tracker | Digitisation of the wheel-loading process in the automotive industry, tracking the moving wheel hub with an error smaller than the required assembly tolerance of 4 mm. |

| [41] | - | - | - | 1 × Kinect, 1 × Xtion | A demonstration of a real time trajectory generation algorithm for human–robot collaboration that predicts the space that the human worker can occupy within the robot’s stopping time and modifies the trajectory to ensure the worker’s safety. |

| [42] | - | Upper Body | - | 1 × OptiTrack V120: Trio system | A demonstration of a collision avoidance algorithm for robotics aiming to avoid collisions with obstacles without losing the planned tasks. |

| [55] | - | Lower Body | - | 1 × Kinect, 1 × Bumblebee XB3 camera, 1 × 3D camcorder, 2 × Optotrak IRCs, 1 × goniometer | Vision-based and angular-measurement-sensor-based approaches for measuring workers’ motions. The vision-based approach (Kinect) had about 5–10° of error in body angles, while the angular-measurement-sensor-based approach measured body angles with about 3° of error during diverse tasks. |

| [43] | - | - | - | 1 × Oculus Rift, 2 × PlayStation Eye cameras | Using the Oculus Rift as a human–computer interface to control remote robots. The method outperformed the mouse in rise time, percent overshoot and settling time. |

| [56] | 29 | Machinery and Upper Body | 6 | 11 × IRC Eagle Digital | Proof of concept method for the evaluation of sitting posture comfort in a vehicle. |

| [45] | - | Upper Body | 7 | 1 × Kinect | A demonstration of a human posture monitoring system aiming to estimate the range of motion of body angles in industrial environments. |

| [33] | - | Upper Body | - | 1 × HTC Vive system | Proof of concept of a real-time motion tracking system for assembly processes, aiming to identify whether the worker’s body parts enter the restricted working space of the robot. |

| [15] | 6 | Full Body | 8 | 1 × video camera | A demonstration of a system for the identification of detrimental postures in construction jobsites. |

| [57] | 10 | Upper Body | 1 | 8 × EMG | A wearable system for human–robot collaborative assembly tasks using hand-over intentions and gestures. Gestures and intentions by different individuals were recognised with a success rate of 73.33% to 90%. |

| [58] | 2 | Upper Body | 1 | - | Detection of near-miss falls of construction workers with 74.9% precision and 89.6% recall. |

| [69] | 5 | Upper Body | 1 | - | Automatic detection and documentation of near-miss falls from kinematic data with 75.8% recall and 86.4% detection accuracy. |

| [44] | 4 | Lower Body | 2 | video cameras | A demonstration of a method for detecting jobsite safety hazards of ironworkers by analysing gait anomalies. |

| [60] | 9 | Lower Body | 1 | video cameras | Identification of physical fall hazards in construction; results showed a strong correlation (0.83) between the location of hazards and the workers’ responses. |

| [59] | 4 | Lower Body | 2 | Osprey IRC system | Distinguished hazardous from normal conditions on construction jobsites, with mean absolute percentage errors of 1.2–6.5% in non-hazardous and 5.4–12.7% in hazardous environments. |

| [32] | - | - | - | 1 × video camera | Presentation of a robot vision system based on CNN and a Monte Carlo algorithm with a success rate of 97.3% for the pick-and-place task. |

| [70] | 15 | - | - | 1 × depth camera | A system that warns a person during handwashing if improper application of soap is detected from hand gestures, with 94% gesture detection accuracy. |
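Several findings above report postural exposure. One example is the share of working time with the trunk flexed beyond 30° reported in [72]. The minimal Python sketch below shows one common way to approximate such a figure from a single trunk-worn accelerometer. It is not the processing pipeline of [72] or any other cited study.

The sketch rests on three assumptions:

* postures are quasi-static, so the accelerometer mainly measures gravity;
* samples are equally spaced, so the fraction of samples equals the fraction of time;
* the sensor is mounted, hypothetically, so that its z-axis reads +1 g when the wearer stands upright.

The function names are illustrative. The computed angle is total inclination from vertical, which mixes sagittal flexion with lateral bending. Separating the two would need an explicit axis convention or fusion with gyroscope data.

```python
import math
from typing import Sequence, Tuple

Vector3 = Tuple[float, float, float]


def trunk_inclination_deg(accel: Vector3) -> float:
    """Angle (degrees) between the sensor z-axis and the measured gravity vector.

    Assumes a quasi-static trunk and a hypothetical mounting in which the
    sensor z-axis reads +1 g when the wearer stands upright.
    """
    ax, ay, az = accel
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("zero acceleration vector")
    # Clamp to guard against rounding pushing the cosine just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, az / norm))
    return math.degrees(math.acos(cos_theta))


def fraction_above(angles_deg: Sequence[float], threshold_deg: float = 30.0) -> float:
    """Share of samples whose inclination exceeds the threshold.

    With equally spaced samples this equals the share of time.
    """
    if not angles_deg:
        raise ValueError("no samples")
    return sum(a > threshold_deg for a in angles_deg) / len(angles_deg)


# Hypothetical quasi-static samples in g (not study data): about 0°, 15°, 45° and 60°.
samples = [
    (0.0, 0.0, 1.0),
    (0.259, 0.0, 0.966),
    (0.707, 0.0, 0.707),
    (0.866, 0.0, 0.5),
]
angles = [trunk_inclination_deg(s) for s in samples]
print(f"{fraction_above(angles):.2f}")  # 0.50
```

During dynamic tasks, movement accelerations contaminate the gravity estimate. This is one reason IMU-based MoCap systems usually fuse accelerometer and gyroscope data rather than relying on the accelerometer alone.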

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Menolotto, M.; Komaris, D.-S.; Tedesco, S.; O’Flynn, B.; Walsh, M. Motion Capture Technology in Industrial Applications: A Systematic Review. Sensors 2020, 20, 5687. https://doi.org/10.3390/s20195687
