A High-Robust Automatic Reading Algorithm of Pointer Meters Based on Text Detection

Abstract

1. Introduction

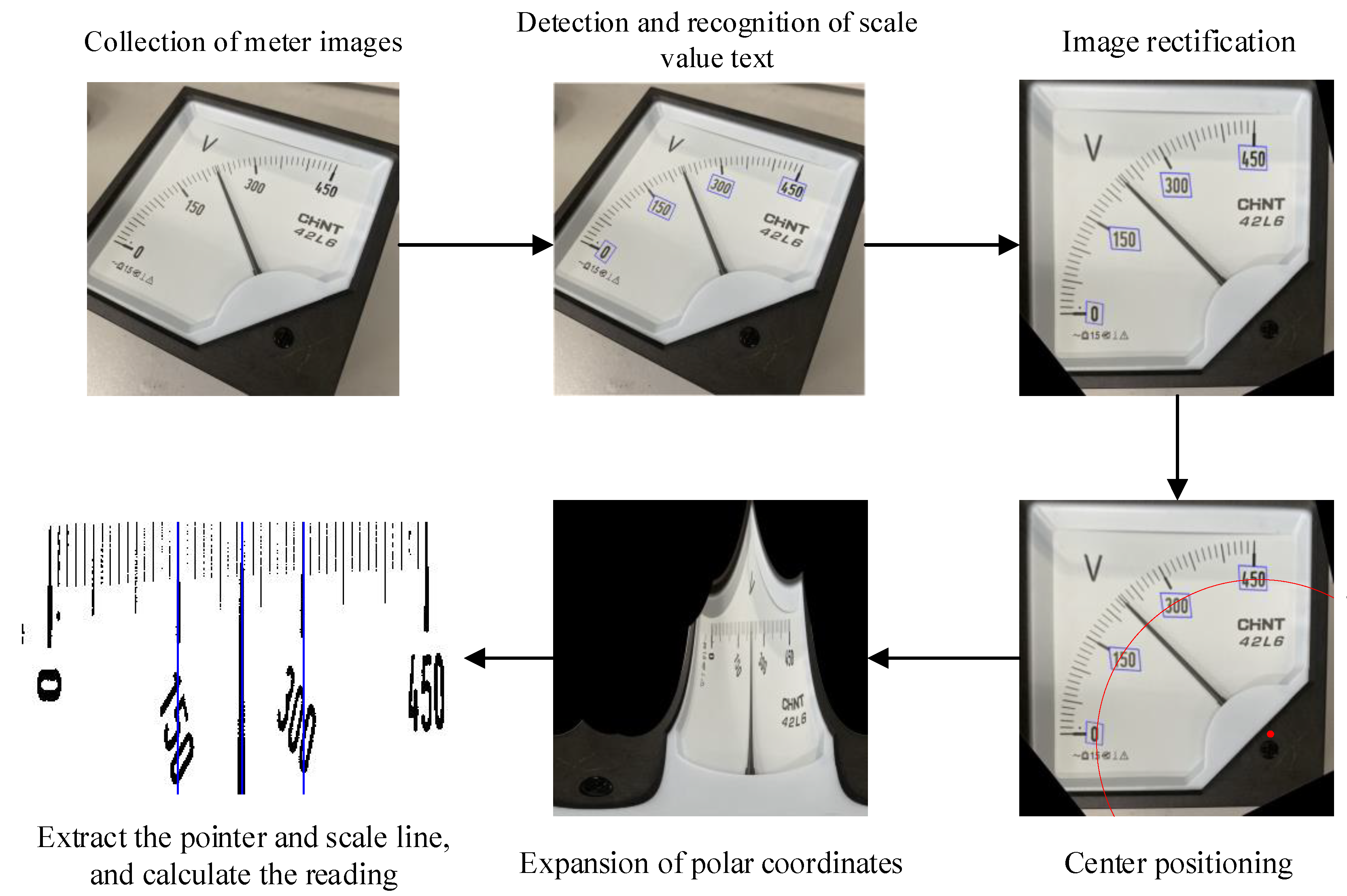

- Deep learning was applied to detect the scale value text on the meter, achieving precise and robust positioning of the text coordinates and accurate recognition of the text. In addition, using the recognized scale values in the distance method of reading gives a smaller error than applying the distance method from zero scale to full scale.

- A novel meter center positioning method was proposed, which locates the meter center from the positions of the scale value text. Because the image of the scale value text provides more features than that of the scale lines, fitting the meter center to the text positions adapts to more complex environments.

- The detection of scale value text was applied to meter rectification. Since scale value text is a feature common to almost all meters, this design greatly improves the adaptability of the algorithm.

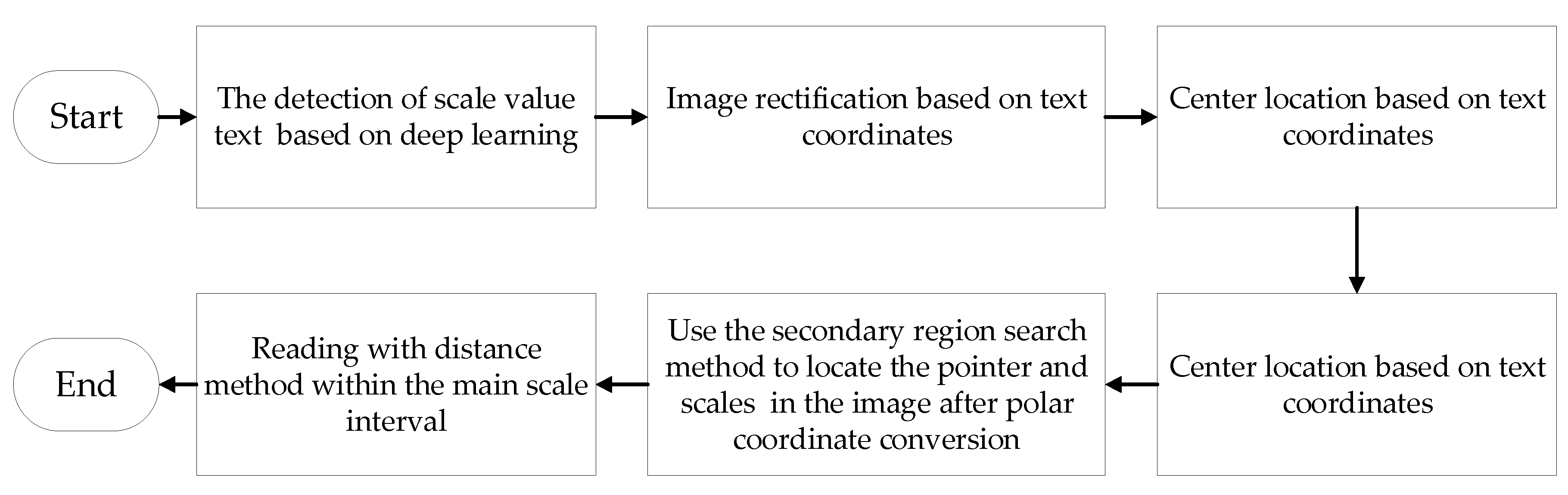

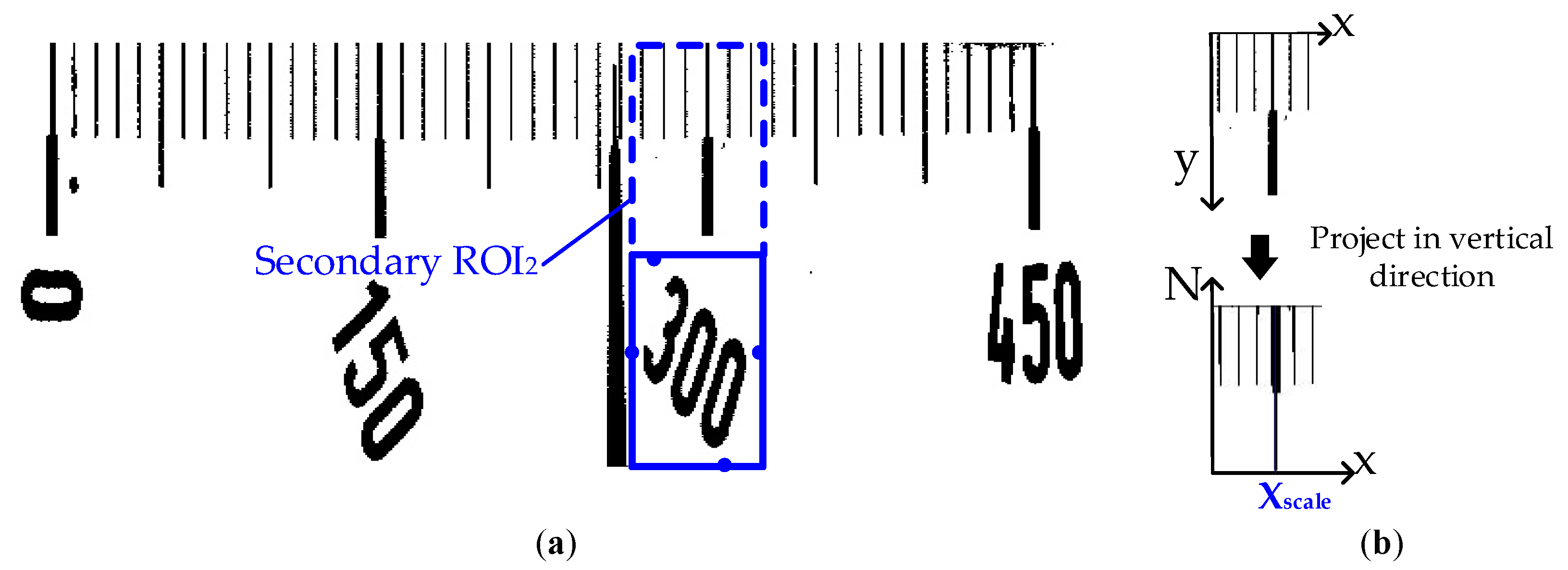

- Based on the position of the scale value text, a secondary region search method was proposed to extract the pointer and scale lines. This method effectively solves the pointer shadow problem and eliminates the influence of other objects on the dial on pointer and scale line extraction. The detailed algorithm flowchart is shown in Figure 2.
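
The center-positioning idea in the list above can be illustrated with a least-squares circle fit through the centers of the detected scale value text boxes. This is only a minimal sketch: the fitting procedure the authors actually use is not given in this section, the algebraic (Kåsa-style) fit is an assumed stand-in, and `solve3`, `fit_circle`, and the sample coordinates are hypothetical.

```python
import math


def solve3(a, b):
    """Solve the 3x3 system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    scale = max(abs(v) for row in a for v in row) or 1.0
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) <= 1e-12 * scale:
            raise ValueError("points do not define a unique circle")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = (m[i][3] - tail) / m[i][i]
    return x


def fit_circle(points):
    """Algebraic least-squares fit of x^2 + y^2 + D*x + E*y + F = 0.

    Returns (center_x, center_y, radius). Points are shifted to their mean
    first, which keeps the normal equations well conditioned for pixel data.
    """
    n = len(points)
    if n < 3:
        raise ValueError("at least three points are needed")
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sxy = syy = sx = sy = sxz = syz = sz = 0.0
    for px, py in points:
        x, y = px - mx, py - my
        z = x * x + y * y
        sxx += x * x
        sxy += x * y
        syy += y * y
        sx += x
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
    a = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(n)]]
    b = [-sxz, -syz, -sz]
    d, e, f = solve3(a, b)
    cx, cy = -d / 2.0, -e / 2.0
    radius = math.sqrt(cx * cx + cy * cy - f)
    return cx + mx, cy + my, radius


# Hypothetical text-box centers lying on an arc around (1470, 1465), radius 600
angles_deg = [135, 180, 225, 270, 315]
text_centers = [
    (1470 + 600 * math.cos(math.radians(a)), 1465 + 600 * math.sin(math.radians(a)))
    for a in angles_deg
]
center_x, center_y, radius = fit_circle(text_centers)
print(round(center_x, 3), round(center_y, 3), round(radius, 3))
```

With noisy detections the fit no longer passes exactly through every point, so more scale value texts generally give a more stable center estimate.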

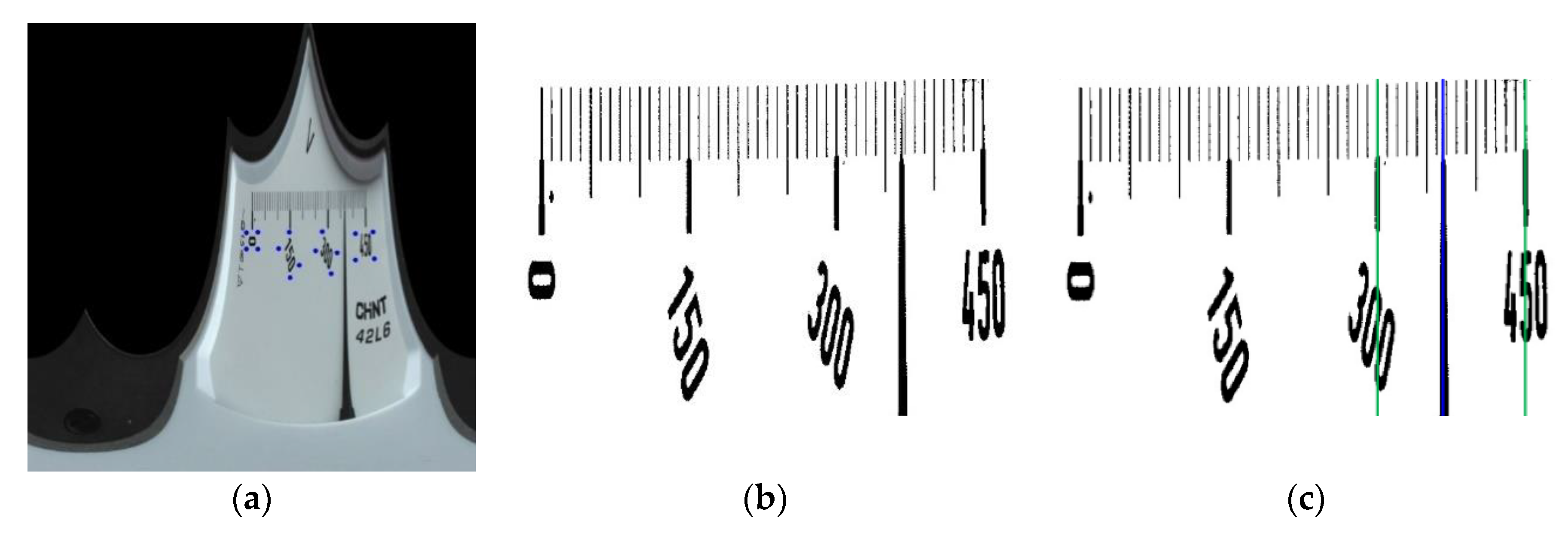
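
The first contribution listed above, reading against recognized scale values rather than only against the zero and full-scale marks, can be sketched as linear interpolation between the two recognized values that bracket the pointer. The sketch assumes a linear scale and that positions along the scale (for example, angles about the meter center or column indices in a polar-transformed image) are already available. `read_meter` and the sample marks are hypothetical and are not taken from the paper.

```python
def read_meter(pointer_pos, marks):
    """Interpolate the reading from recognized scale marks.

    pointer_pos: position of the pointer along the scale.
    marks: iterable of (position, value) pairs for recognized scale values,
           with distinct positions.
    """
    ordered = sorted(marks)
    for (p0, v0), (p1, v1) in zip(ordered, ordered[1:]):
        if p0 <= pointer_pos <= p1:
            # Linear interpolation inside the bracketing interval only,
            # so errors elsewhere on the scale do not affect this reading.
            return v0 + (v1 - v0) * (pointer_pos - p0) / (p1 - p0)
    raise ValueError("pointer lies outside the recognized scale range")


# Hypothetical marks: positions in degrees, values in volts
marks = [(0.0, 0.0), (30.0, 150.0), (60.0, 300.0), (90.0, 450.0)]
print(read_meter(45.0, marks))
```

Because only the nearest two marks enter the calculation, an error in locating the zero or full-scale mark does not propagate to readings in other intervals, which is one plausible reason for the smaller error claimed above.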

2. Image Rectification Based on Text Position

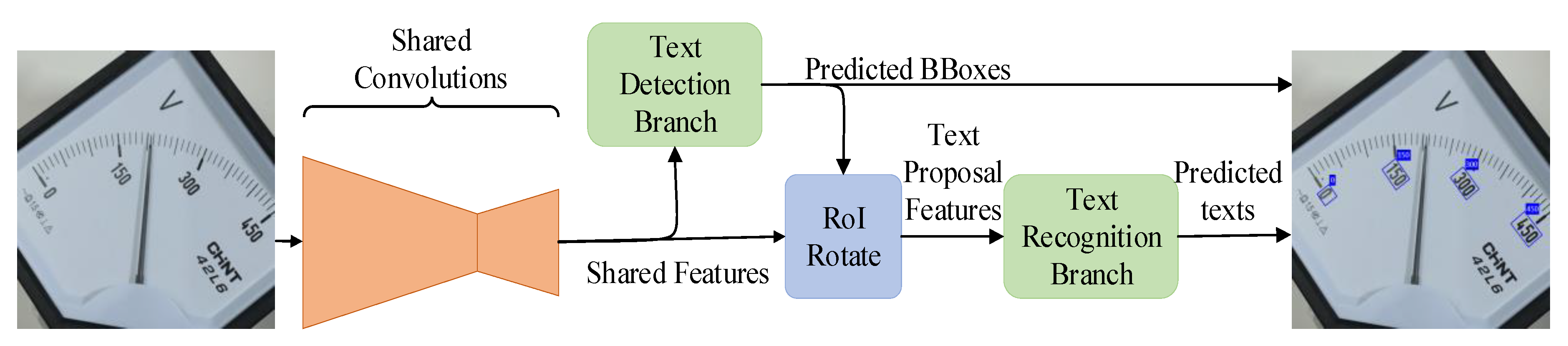

2.1. Digital Detection and Recognition of Scale Value

2.2. Image Rectification

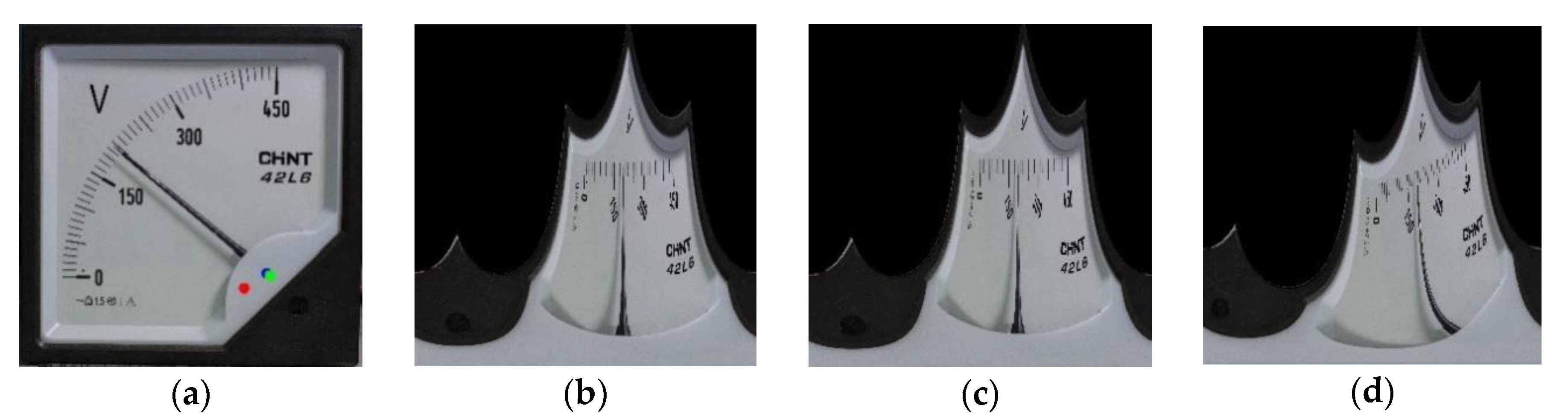

3. Pointer and Scale Extraction

3.1. Polar Transform

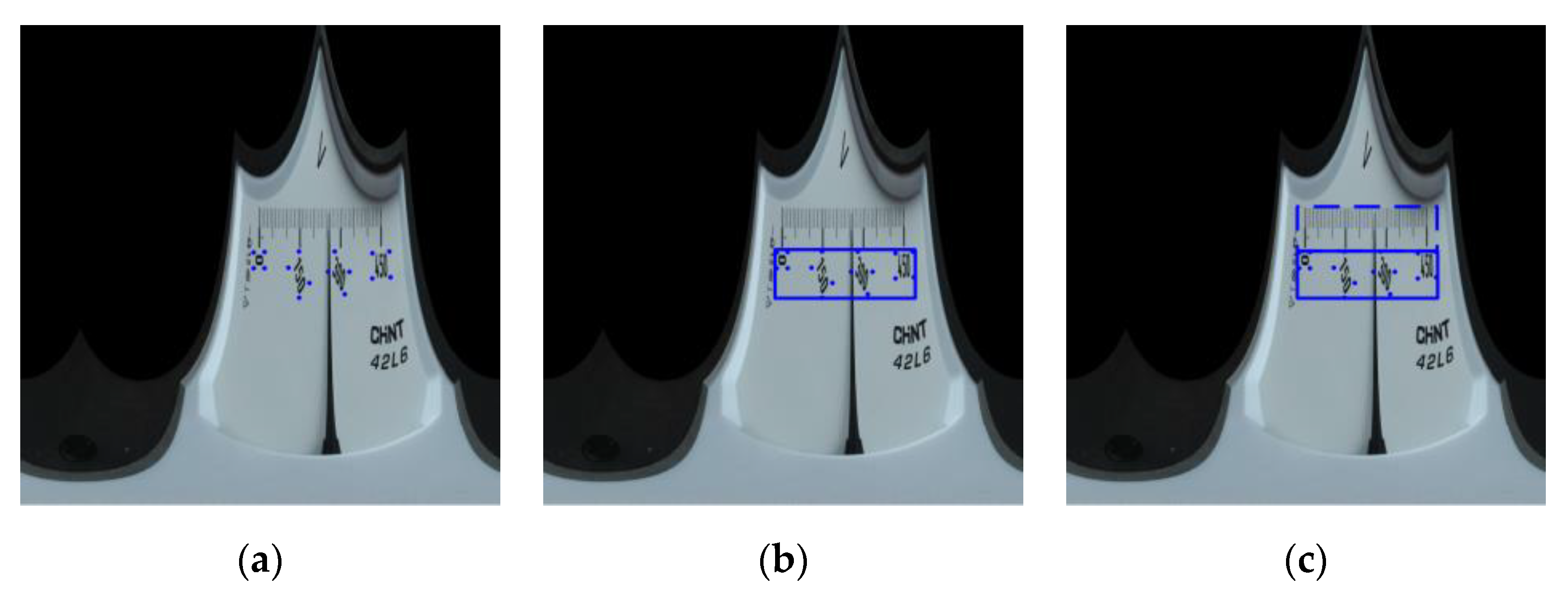
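
As a rough illustration of what a polar transform does to a dial image, the sketch below resamples a grayscale image around a center so that each output row corresponds to one radius and each output column to one angle. It is a generic nearest-neighbour version with hypothetical names (`to_polar`, `unwrap`); the parameters and interpolation used in the paper are not stated in this section.

```python
import math


def to_polar(x, y, cx, cy):
    """Convert pixel (x, y) to (radius, angle in degrees) about (cx, cy).

    In image coordinates y grows downward, so the angle increases clockwise
    as seen on screen.
    """
    dx, dy = x - cx, y - cy
    radius = math.hypot(dx, dy)
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    return radius, angle


def unwrap(image, cx, cy, n_radii, n_angles):
    """Nearest-neighbour polar unwrapping of a 2D list of pixel values.

    out[i][j] samples the source at radius i and angle j * 360 / n_angles.
    Samples that fall outside the source image are left at 0.
    """
    height, width = len(image), len(image[0])
    out = [[0] * n_angles for _ in range(n_radii)]
    for i in range(n_radii):
        for j in range(n_angles):
            theta = 2.0 * math.pi * j / n_angles
            x = int(round(cx + i * math.cos(theta)))
            y = int(round(cy + i * math.sin(theta)))
            if 0 <= x < width and 0 <= y < height:
                out[i][j] = image[y][x]
    return out


# Tiny 5x5 example: one bright pixel two columns to the right of the center
image = [[0] * 5 for _ in range(5)]
image[2][4] = 1
polar = unwrap(image, cx=2, cy=2, n_radii=3, n_angles=4)
print(polar[2])
```

After such a transform, circular scale arcs become roughly horizontal bands and a radial pointer becomes a roughly vertical stripe, which is why the transform is a common preprocessing step for dial images.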

3.2. Pointer and Scale Extraction

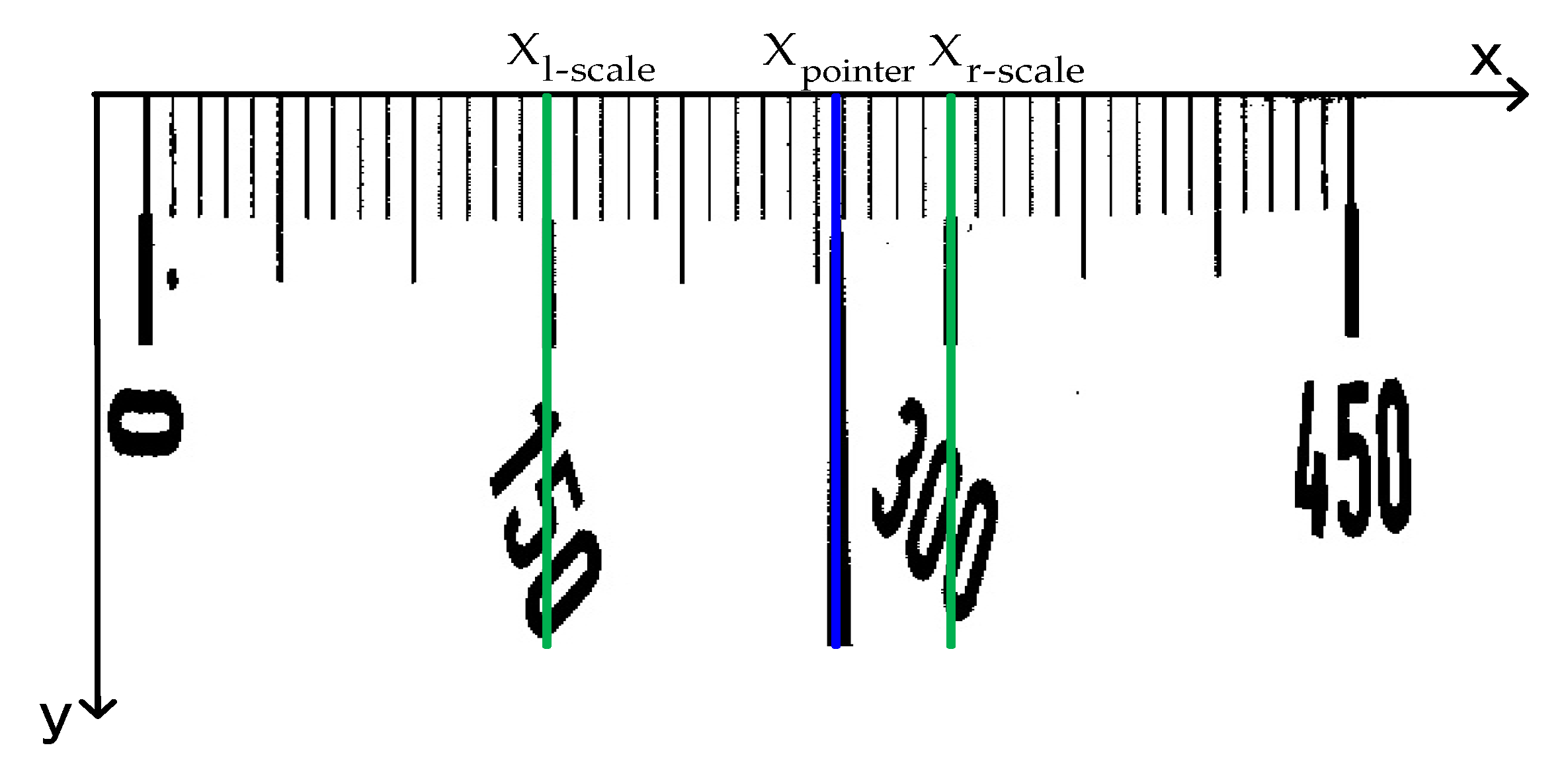

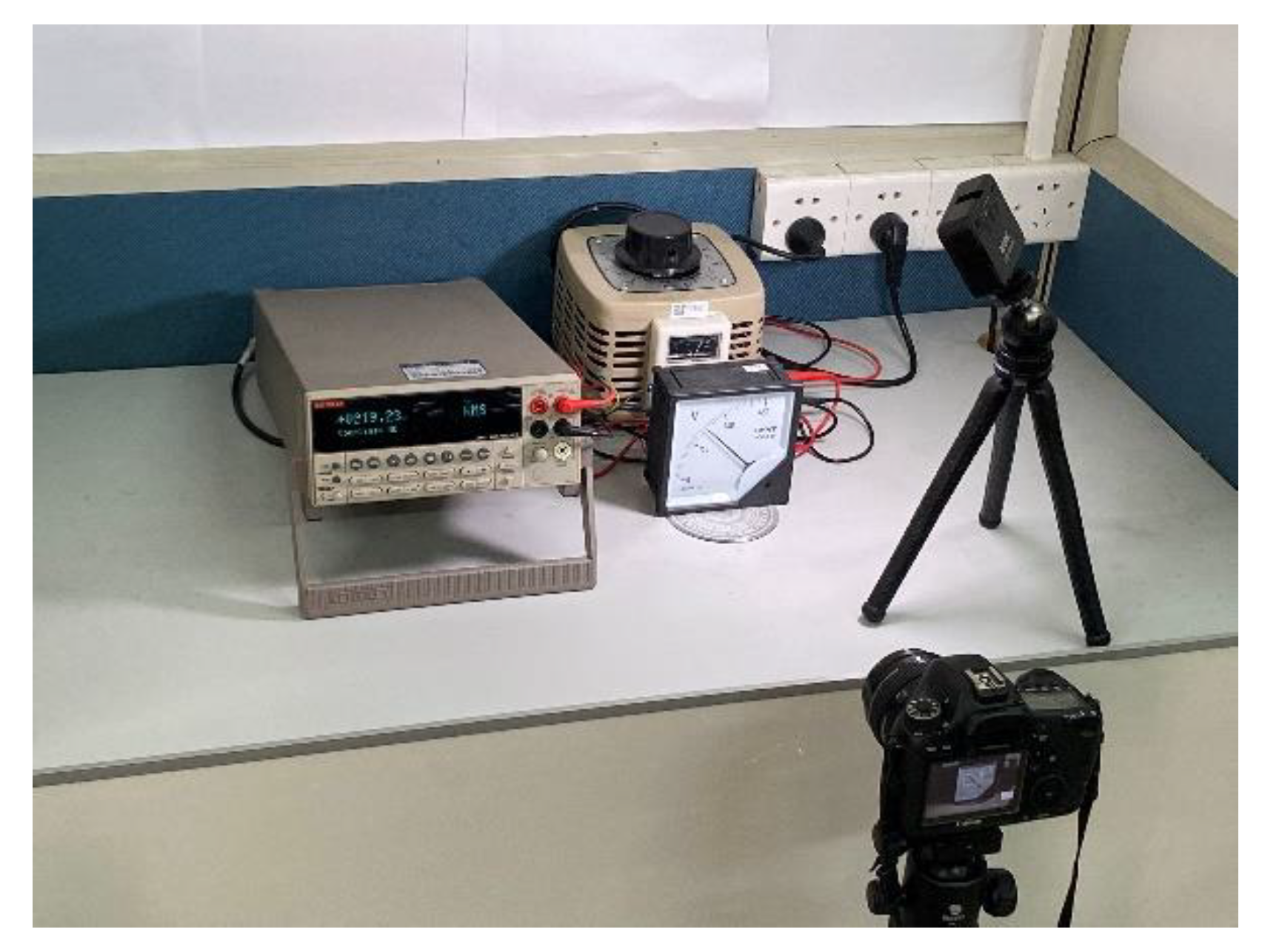

4. Experiments

4.1. Scale Value Text Detection and Image Rectification

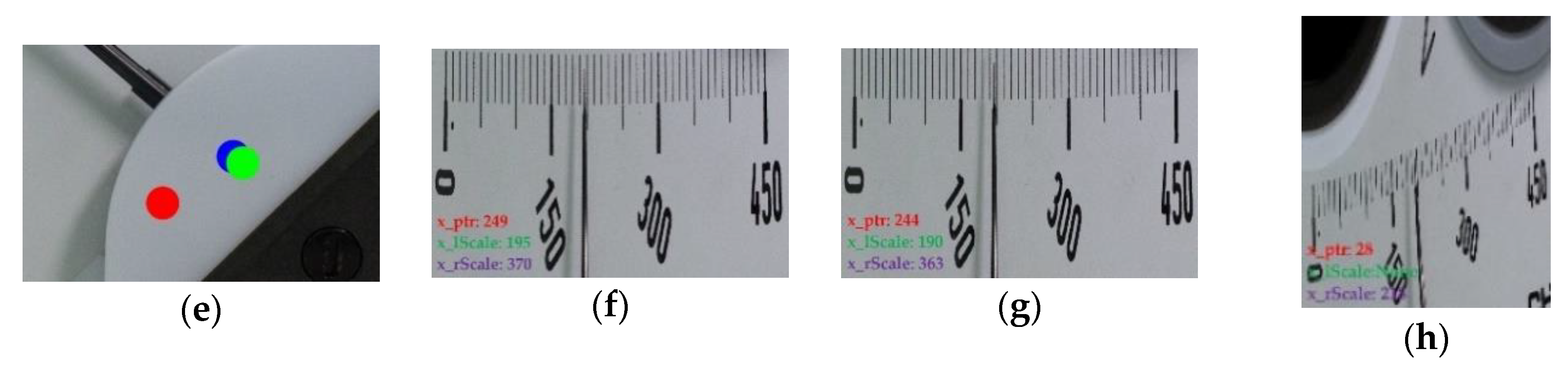

4.2. Extraction of Pointer and Scale Line

4.3. Analysis of the Error

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Acronyms

| Acronym | Definition |
|---|---|
| MSRCR | Multi-Scale Retinex with Color Restoration |
| HOG | Histogram of Oriented Gradients |
| SVM | Support Vector Machine |
| MSVM | Multiple Support Vector Machine |
| RANSAC | Random Sample Consensus |
| LSTM | Long Short-Term Memory |
| AC | Alternating Current |
| DC | Direct Current |
| ROI | Region of Interest |
| GAN | Generative Adversarial Network |

References

1. Lai, H.; Kang, Q.; Pan, L.; Cui, C. A Novel Scale Recognition Method for Pointer Meters Adapted to Different Types and Shapes. In Proceedings of the 2019 IEEE 15th International Conference on Automation Science and Engineering, Vancouver, BC, Canada, 25–28 August 2019; pp. 374–379.
2. Bao, H.; Tan, Q.; Liu, S.; Miao, J. Computer Vision Measurement of Pointer Meter Readings Based on Inverse Perspective Mapping. Appl. Sci. 2019, 9, 3729.
3. Ye, X.; Xie, D.; Tao, S. Automatic Value Identification of Pointer-Type Pressure Gauge Based on Machine Vision. J. Comput. 2013, 8, 1309–1314.
4. Yang, B.; Lin, G.; Zhang, W. Auto-recognition Method for Pointer-type Meter Based on Binocular Vision. J. Comput. 2014, 9, 787–793.
5. Zhang, L.; Zhang, J.L.; Rui, T.; Wang, Y.; Wang, Y.N. Research on the Image Enhancement Algorithm of Pointer Instrument under Inadequate Light. Appl. Mech. Mater. 2014, 615, 248–254.
6. Khan, W.; Ansell, D.; Kuru, K.; Bilal, M. Flight Guardian: Autonomous Flight Safety Improvement by Monitoring Aircraft Cockpit Instruments. J. Aerosp. Inf. Syst. 2018, 15, 203–214.
7. Tian, E.; Zhang, H.; Hanafiah, M.M. A pointer location algorithm for computer vision based automatic reading recognition of pointer gauges. Open Phys. 2019, 17, 86–92.
8. Chen, Y.; Wang, J. Computer Vision-Based Approach for Reading Analog Multimeter. Appl. Sci. 2018, 8, 1268.
9. Wen, K.; Li, D.; Zhao, X.; Fan, A.; Mao, Y.; Zheng, S. Lightning Arrester Monitor Pointer Meter and Digits Reading Recognition Based on Image Processing. In Proceedings of the 2018 IEEE 3rd Advanced Information Technology, Electronic and Automation Control Conference, Chongqing, China, 12–14 October 2018; pp. 759–764.
10. Sowah, R.A.; Ofoli, A.R.; Mensah-Ananoo, E.; Mills, G.A.; Koumadi, K.M. Intelligent Instrument Reader Using Computer Vision and Machine Learning. In Proceedings of the 2018 IEEE Industry Applications Society Annual Meeting, Portland, OR, USA, 23–27 September 2018.
11. Alegria, E.C.; Serra, A.C. Automatic calibration of analog and digital measuring instruments using computer vision. IEEE Trans. Instrum. Meas. 2000, 49, 94–99.
12. Belan, P.A.; Araujo, S.A.; Librantz, A.F.H. Segmentation-free approaches of computer vision for automatic calibration of digital and analog instruments. Measurement 2013, 46, 177–184.
13. Zheng, C.; Wang, S.; Zhang, Y.; Zhang, P.; Zhao, Y. A robust and automatic recognition system of analog instruments in power system by using computer vision. Measurement 2016, 92, 413–420.
14. Gao, H.; Yi, M.; Yu, J.; Li, J.; Yu, X. Character Segmentation-Based Coarse-Fine Approach for Automobile Dashboard Detection. IEEE Trans. Ind. Inf. 2019, 15, 5413–5424.
15. Ma, Y.; Jiang, Q. A robust and high-precision automatic reading algorithm of pointer meters based on machine vision. Meas. Sci. Technol. 2019, 30, 015401.
16. Chi, J.; Liu, L.; Liu, J.; Jiang, Z.; Zhang, G. Machine Vision Based Automatic Detection Method of Indicating Values of a Pointer Gauge. Math. Probl. Eng. 2015, 283629.
17. Sheng, Q.; Zhu, L.; Shao, Z.; Jiang, J. Automatic reading method of pointer meter based on double Hough space voting. Chin. J. Sci. Instrum. 2019, 40, 230–239.
18. Liu, Y.; Liu, J.; Ke, Y. A detection and recognition system of pointer meters in substations based on computer vision. Measurement 2020, 152, 107333.
19. Zhang, X.; Dang, X.; Lv, Q.; Liu, S. A Pointer Meter Recognition Algorithm Based on Deep Learning. In Proceedings of the 2020 3rd International Conference on Advanced Electronic Materials, Computers and Software Engineering (AEMCSE), Shenzhen, China, 6–8 March 2020.
20. Cai, W.; Ma, B.; Zhang, L.; Han, Y. A pointer meter recognition method based on virtual sample generation technology. Measurement 2020, 163, 107962.
21. He, P.; Zuo, L.; Zhang, C.; Zhang, Z. A Value Recognition Algorithm for Pointer Meter Based on Improved Mask-RCNN. In Proceedings of the 9th International Conference on Information Science and Technology (ICIST), Hulunbuir, China, 2–5 August 2019.
22. Suykens, J.A.K. Support Vector Machines: A Nonlinear Modelling and Control Perspective. Eur. J. Control 2001, 7, 311–327.
23. Liu, X.; Liang, D.; Yan, S.; Chen, D.; Qiao, Y.; Yan, J. FOTS: Fast Oriented Text Spotting with a Unified Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 5676–5685.
24. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.
25. Chernov, N.; Lesort, C. Least Squares Fitting of Circles. J. Math. Imaging Vis. 2005, 23, 239–252.
26. Gupta, A.; Vedaldi, A.; Zisserman, A. Synthetic Data for Text Localisation in Natural Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

| Type | Kernel [Size, Stride] | Out Channels |
|---|---|---|
| conv_bn_relu | [3, 1] | 64 |
| conv_bn_relu | [3, 1] | 64 |
| height-max-pool | [(2, 1), (2, 1)] | 64 |
| conv_bn_relu | [3, 1] | 128 |
| conv_bn_relu | [3, 1] | 128 |
| height-max-pool | [(2, 1), (2, 1)] | 128 |
| conv_bn_relu | [3, 1] | 256 |
| conv_bn_relu | [3, 1] | 256 |
| height-max-pool | [(2, 1), (2, 1)] | 256 |
| bi-directional_lstm | | 256 |
| fully-connected | | \|S\| |
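
To see how the layers in the table above reshape a feature map, the following sketch tracks channels, height, and width through the convolution and pooling rows. It assumes that the 3 × 3 convolutions are padded so that they preserve spatial size and that each height-max-pool halves the height using floor division; neither detail is stated in the table, and `trace_shape` is a hypothetical helper.

```python
# (layer type, output channels), copied from the convolution/pooling rows above
LAYERS = [
    ("conv_bn_relu", 64),
    ("conv_bn_relu", 64),
    ("height-max-pool", 64),
    ("conv_bn_relu", 128),
    ("conv_bn_relu", 128),
    ("height-max-pool", 128),
    ("conv_bn_relu", 256),
    ("conv_bn_relu", 256),
    ("height-max-pool", 256),
]


def trace_shape(height, width):
    """Return (layer, channels, height, width) after every layer."""
    shapes = []
    for kind, out_channels in LAYERS:
        if kind == "height-max-pool":
            # Kernel (2, 1) with stride (2, 1): height halves, width is unchanged
            height //= 2
        shapes.append((kind, out_channels, height, width))
    return shapes


for kind, channels, h, w in trace_shape(8, 64):
    print(f"{kind:16s} -> {channels} x {h} x {w}")
```

Under these assumptions an input of height 8 collapses to height 1 after the three pooling layers while the width is kept, so each remaining column can serve as one time step for the bi-directional LSTM.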

| Types of Pointer Meter | Range | Image Size | Number of Images |
|---|---|---|---|
| AC Voltmeter | 450 V | 2000 × 2000 | 200 |
| AC Ammeter | 100 A | 2000 × 2000 | 200 |
| DC Voltmeter | 100 V | 2000 × 2000 | 200 |

| Image Number | Manual Measured Values | Double Hough Space Voting: Recognition Values | Double Hough Space Voting: Error (Pixel) | Proposed Model: Recognition Values | Proposed Model: Error (Pixel) |
|---|---|---|---|---|---|
| 1 | [1465,1463] | [1480,1395] | 69.63 | [1473,1469] | 10.00 |
| 2 | [1470,1466] | [1485,1455] | 18.60 | [1480,1475] | 13.45 |
| 3 | [1476,1480] | [1454,1437] | 48.30 | [1486,1472] | 12.81 |
| 4 | [1469,1462] | [1416,1449] | 54.57 | [1475,1465] | 6.71 |
| 5 | [1469,1463] | [1470,1439] | 24.02 | [1460,1464] | 9.06 |
| 6 | [1473,1469] | [1271,1484] | 202.56 | [1462,1501] | 33.84 |
| 7 | [1478,1477] | [1526,1715] | 242.79 | [1493,1496] | 24.21 |
| 8 | [1478,1480] | [1493,1520] | 42.72 | [1502,1497] | 29.41 |
| 9 | [1474,1476] | [1523,1311] | 172.12 | [1476,1477] | 2.24 |
| 10 | [1473,1469] | [1422,1546] | 92.36 | [1464,1469] | 9.00 |
| Average | | | 96.767 | | 15.07 |
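
The pixel errors in the table above are consistent with the Euclidean distance between the manually measured center and the detected center. The short sketch below recomputes the entries for image 1 and the two averages from the listed values; `center_error` is a hypothetical helper name, and the error definition is inferred from the numbers rather than quoted from the paper.

```python
import math


def center_error(manual, detected):
    """Euclidean distance in pixels between two (x, y) center estimates."""
    return math.hypot(detected[0] - manual[0], detected[1] - manual[1])


# Image 1 from the table: manual center, double Hough result, proposed result
manual = (1465, 1463)
print(round(center_error(manual, (1480, 1395)), 2))  # 69.63
print(round(center_error(manual, (1473, 1469)), 2))  # 10.0

# Per-image errors as listed in the table, used to recompute the averages
hough_errors = [69.63, 18.60, 48.30, 54.57, 24.02, 202.56, 242.79, 42.72, 172.12, 92.36]
proposed_errors = [10.00, 13.45, 12.81, 6.71, 9.06, 33.84, 24.21, 29.41, 2.24, 9.00]
print(round(sum(hough_errors) / len(hough_errors), 3))        # 96.767
print(round(sum(proposed_errors) / len(proposed_errors), 3))  # 15.073
```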

| Shooting Environment | Number | Reading by Multimeter (V) | Ref. [14] (V) | Ref. [14] Reference Error (%) | Ref. [15] (V) | Ref. [15] Reference Error (%) | Proposed Algorithm (V) | Proposed Algorithm Reference Error (%) |
|---|---|---|---|---|---|---|---|---|
| uniform illumination | 1 | 23.27 | 25.39 | 0.47 | 25.70 | 0.54 | 24.31 | 0.23 |
| uniform illumination | 2 | 148.91 | 147.29 | 0.36 | 146.53 | 0.53 | 150.31 | 0.31 |
| uniform illumination | 3 | 204.60 | 202.71 | 0.42 | 201.81 | 0.62 | 203.07 | 0.34 |
| uniform illumination | 4 | 367.56 | 369.23 | 0.37 | 369.86 | 0.51 | 368.87 | 0.29 |
| strong light exposure | 5 | 58.10 | 61.61 | 0.78 | 62.11 | 0.89 | 56.21 | 0.42 |
| strong light exposure | 6 | 106.89 | 111.08 | 0.93 | 111.03 | 0.92 | 108.60 | 0.38 |
| strong light exposure | 7 | 219.23 | 215.36 | 0.86 | 215.50 | 0.83 | 217.25 | 0.44 |
| strong light exposure | 8 | 274.26 | 270.98 | 0.73 | 270.75 | 0.78 | 272.69 | 0.35 |
| shadowing | 9 | 124.53 | 127.91 | 0.75 | 128.67 | 0.92 | 125.84 | 0.29 |
| shadowing | 10 | 188.72 | 192.68 | 0.88 | 192.50 | 0.84 | 190.34 | 0.36 |
| shadowing | 11 | 248.23 | 251.29 | 0.68 | 251.65 | 0.76 | 249.67 | 0.32 |
| shadowing | 12 | 302.38 | 300.11 | 0.50 | 298.56 | 0.85 | 301.17 | 0.27 |
| different shooting angles | 13 | 100.03 | 92.56 | 1.66 | 90.87 | 2.04 | 95.13 | 1.09 |
| different shooting angles | 14 | 148.79 | 143.34 | 1.21 | 142.46 | 1.41 | 145.01 | 0.84 |
| different shooting angles | 15 | 247.05 | 251.96 | 1.09 | 253.42 | 1.42 | 249.89 | 0.63 |
| different shooting angles | 16 | 281.26 | 286.33 | 1.13 | 287.32 | 1.35 | 284.76 | 0.78 |
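
The reference error values in the table above are consistent with dividing the absolute reading error by the meter's full-scale range. The sketch below recomputes row 1 under that reading of the numbers, assuming the 450 V range listed for the AC voltmeter; the defining equation itself is not shown in this section, and `reference_error` is a hypothetical helper name.

```python
def reference_error(reading, true_value, full_scale):
    """Absolute error expressed as a percentage of the full-scale range."""
    return abs(reading - true_value) / full_scale * 100.0


FULL_SCALE_V = 450.0  # assumed: range of the AC voltmeter in the dataset table

# Row 1 (uniform illumination): the multimeter reads 23.27 V
print(round(reference_error(25.39, 23.27, FULL_SCALE_V), 2))  # Ref. [14]: 0.47
print(round(reference_error(25.70, 23.27, FULL_SCALE_V), 2))  # Ref. [15]: 0.54
print(round(reference_error(24.31, 23.27, FULL_SCALE_V), 2))  # Proposed:  0.23
```

Normalizing by the full-scale range rather than by the true value keeps the error comparable across the dial, since a fixed pointer offset then gives the same percentage at low and high readings.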

| Shooting Environment | Types of Pointer Meter | Average Relative Error (%): Proposed Algorithm | Average Relative Error (%): Ref. [14] | Average Relative Error (%): Ref. [15] |
|---|---|---|---|---|
| uniform illumination | AC Voltmeter | 0.295 | 0.402 | 0.522 |
| uniform illumination | AC Ammeter | 0.343 | 0.496 | 0.613 |
| uniform illumination | DC Voltmeter | 0.369 | 0.517 | 0.596 |
| strong light exposure | AC Voltmeter | 0.387 | 0.769 | 0.846 |
| strong light exposure | AC Ammeter | 0.419 | 0.845 | 0.825 |
| strong light exposure | DC Voltmeter | 0.401 | 0.863 | 0.872 |
| shadowing | AC Voltmeter | 0.346 | 0.755 | 0.845 |
| shadowing | AC Ammeter | 0.423 | 0.799 | 0.813 |
| shadowing | DC Voltmeter | 0.376 | 0.723 | 0.864 |
| different shooting angles | AC Voltmeter | 0.832 | 1.324 | 1.621 |
| different shooting angles | AC Ammeter | 0.953 | 1.467 | 1.694 |
| different shooting angles | DC Voltmeter | 0.866 | 1.332 | 1.637 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Zhou, Y.; Sheng, Q.; Chen, K.; Huang, J. A High-Robust Automatic Reading Algorithm of Pointer Meters Based on Text Detection. Sensors 2020, 20, 5946. https://doi.org/10.3390/s20205946
