A Two-Phase Cross-Modality Fusion Network for Robust 3D Object Detection

Abstract

1. Introduction

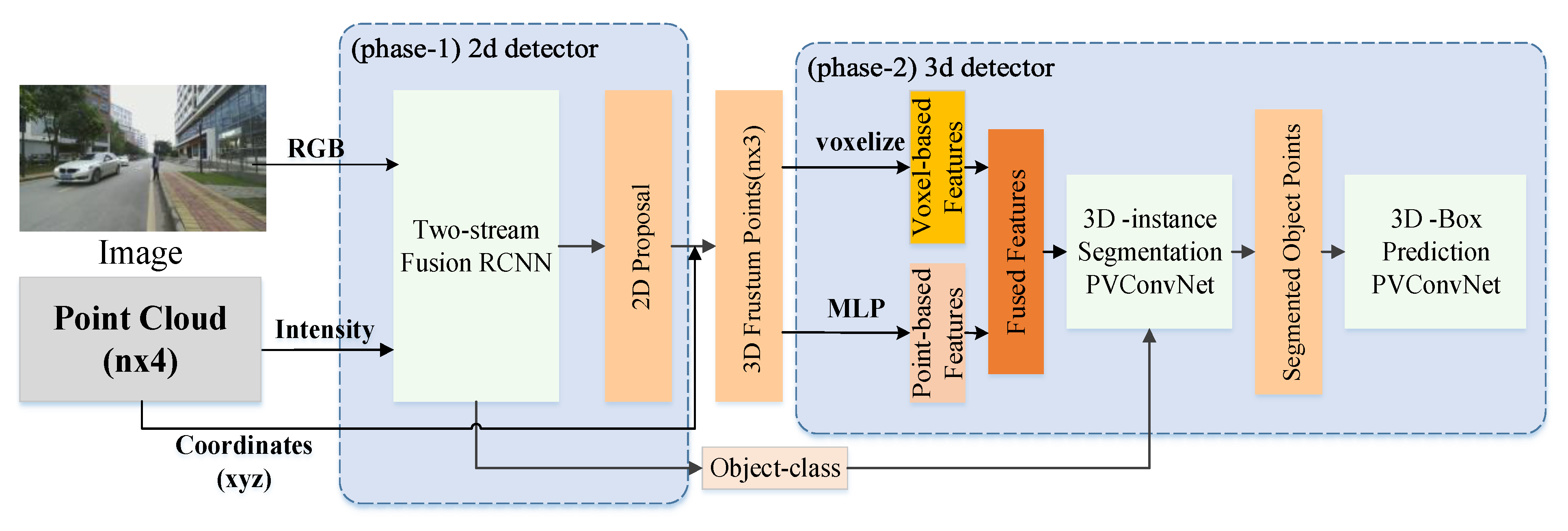

- We propose a cascading 3D detector that exploits multi-modal information at both the feature-fusion and decision-making levels.

- At the decision level, we design a two-phase detector in which first-phase 2D detection assists second-phase 3D detection: the 2D detection results are transformed into 3D frustums that filter the point cloud, improving both the accuracy and the real-time performance of 3D detection.

- At the feature level, we design a two-stream fusion network that merges cross-modality features extracted from RGB images and intensity maps, producing more expressive and robust features for high-precision 2D detection. The validity of the proposed feature fusion scheme is strongly supported by the experimental results and by feature visualizations at multiple network stages.
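The frustum filtering described in the second contribution can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the points are already expressed in the camera frame, that a 3x4 pinhole projection matrix is available, and that a 2D detection is an axis-aligned pixel box. The function name `filter_points_by_frustum`, the matrix values, and the box are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]         # (x, y, z) in the camera frame (assumed)
Box2D = Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max) in pixels


def filter_points_by_frustum(
    points: Sequence[Point],
    box: Box2D,
    proj: Sequence[Sequence[float]],
) -> List[Point]:
    """Keep the points whose image projection falls inside a 2D detection box.

    The kept points are exactly those lying in the 3D frustum that the
    box spans in front of the camera.
    """
    u_min, v_min, u_max, v_max = box
    kept: List[Point] = []
    for x, y, z in points:
        hom = (x, y, z, 1.0)  # homogeneous coordinates
        depth = sum(proj[2][i] * hom[i] for i in range(4))
        if depth <= 0.0:
            continue  # point is behind the camera, so it cannot be in the frustum
        u = sum(proj[0][i] * hom[i] for i in range(4)) / depth
        v = sum(proj[1][i] * hom[i] for i in range(4)) / depth
        if u_min <= u <= u_max and v_min <= v <= v_max:
            kept.append((x, y, z))
    return kept


# Hypothetical projection matrix (focal length 700 px, principal point (600, 180)).
P = [
    [700.0, 0.0, 600.0, 0.0],
    [0.0, 700.0, 180.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
detection_box = (500.0, 100.0, 700.0, 260.0)  # hypothetical 2D detection
cloud = [
    (0.0, 0.0, 10.0),   # projects to the box centre
    (5.0, 0.0, 10.0),   # projects to the right of the box
    (0.0, 0.0, -5.0),   # behind the camera
    (-1.0, 0.5, 20.0),  # projects inside the box
]
frustum_points = filter_points_by_frustum(cloud, detection_box, P)
```

Only the points inside the frustum are passed on to the 3D stage, which is how this kind of filtering reduces the amount of data the second phase has to process.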

2. Related Work

2.1. 2D Object Detection with Images

2.2. 3D Object Detection with Point Clouds

2.3. Object Detection Based on Multi-Modal Fusion

3. Methods

3.1. Overview

3.2. Two-Stream Fusion RCNN

3.3. PVConvNet-Based Object Detection

3.3.1. Point-Voxel Convolution

3.3.2. 3D Detection

4. Experiments

4.1. Experimental Setups

4.2. Implementation Details

4.3. Ablation Study

4.3.1. Cross-Modality Fusion

4.3.2. Cascade Detector Head and Attention-Based Weighted Fusion

4.4. Comparison with Other Methods

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans Pattern Anal Mach Intell 2017, 39, 1137–1149.

2. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

3. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

4. Yuille, A.L.; Liu, C. Deep Nets: What have they ever done for Vision? arXiv 2018, arXiv:1805.04025.

5. Yoo, J.H.; Kim, Y.; Kim, J.S.; Choi, J.W. 3D-CVF: Generating Joint Camera and LiDAR Features Using Cross-View Spatial Feature Fusion for 3D Object Detection. arXiv 2020, arXiv:2004.12636.

6. Huang, T.; Liu, Z.; Chen, X.; Bai, X. EPNet: Enhancing Point Features with Image Semantics for 3D Object Detection. arXiv 2020, arXiv:2007.08856.

7. Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-task multi-sensor fusion for 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 18–20 June 2019; pp. 7345–7353.

8. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 652–660.

9. Wang, J.; Zhu, M.; Sun, D.; Wang, B.; Gao, W.; Wei, H. MCF3D: Multi-Stage Complementary Fusion for Multi-Sensor 3D Object Detection. IEEE Access 2019, 7, 90801–90814.

10. Al-Osaimi, F.R.; Bennamoun, M.; Mian, A. Spatially optimized data-level fusion of texture and shape for face recognition. IEEE Trans Image Process 2011, 21, 859–872.

11. Gunatilaka, A.H.; Baertlein, B.A. Feature-level and decision-level fusion of noncoincidently sampled sensors for land mine detection. IEEE Trans Pattern Anal 2001, 23, 577–589.

12. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

13. Oh, S.; Kang, H. Object detection and classification by decision-level fusion for intelligent vehicle systems. Sensors 2017, 17, 207.

14. Xu, D.; Anguelov, D.; Jain, A. Pointfusion: Deep sensor fusion for 3d bounding box estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 244–253.

15. Liu, Z.; Tang, H.; Lin, Y.; Han, S. Point-Voxel CNN for efficient 3D deep learning. In Proceedings of the Advances in Neural Information Processing Systems 32 (NIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; pp. 965–975.

16. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3354–3361.

17. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

18. Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. In Proceedings of the Advances in Neural Information Processing Systems 29 (NIPS 2016), Barcelona, Spain, 5–10 December 2016; pp. 379–387.

19. Lin, T.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 2117–2125.

20. Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

21. Liang, M.; Yang, B.; Wang, S.; Urtasun, R. Deep continuous fusion for multi-sensor 3d object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 641–656.

22. Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3d proposal generation and object detection from view aggregation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8.

23. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 1907–1915.

24. Yang, B.; Luo, W.; Urtasun, R. Pixor: Real-time 3d object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7652–7660.

25. Li, B.; Zhang, T.; Xia, T. Vehicle detection from 3d lidar using fully convolutional network. arXiv 2016, arXiv:1608.07916.

26. Song, S.; Xiao, J. Deep Sliding Shapes for Amodal 3D Object Detection in RGB-D Images. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 808–816.

27. Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.

28. Xu, Y.; Ye, Z.; Yao, W.; Huang, R.; Tong, X.; Hoegner, L.; Stilla, U. Classification of LiDAR Point Clouds Using Supervoxel-Based Detrended Feature and Perception-Weighted Graphical Model. IEEE J. Stars 2019, 13, 72–88.

29. Zhao, H.; Xi, X.; Wang, C.; Pan, F. Ground Surface Recognition at Voxel Scale From Mobile Laser Scanning Data in Urban Environment. IEEE Geosci. Remote Sens. 2019, 17, 317–321.

30. Aijazi, A.K.; Checchin, P.; Trassoudaine, L. Segmentation based classification of 3D urban point clouds: A super-voxel based approach with evaluation. Remote Sens. Basel 2013, 5, 1624–1650.

31. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

32. Komarichev, A.; Zhong, Z.; Hua, J. A-CNN: Annularly convolutional neural networks on point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 18–20 June 2019; pp. 7421–7430.

33. Chen, C.; Fragonara, L.Z.; Tsourdos, A. GAPNet: Graph attention based point neural network for exploiting local feature of point cloud. arXiv 2019, arXiv:1905.08705.

34. Liu, Y.; Fan, B.; Xiang, S.; Pan, C. Relation-shape convolutional neural network for point cloud analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 18–20 June 2019; pp. 8895–8904.

35. Xing, X.; Mostafavi, M.; Chavoshi, S.H. A knowledge base for automatic feature recognition from point clouds in an urban scene. ISPRS Int. J. Geo. Inf. 2018, 7, 28.

36. Rao, Y.; Lu, J.; Zhou, J. Global-Local Bidirectional Reasoning for Unsupervised Representation Learning of 3D Point Clouds. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 5376–5385.

37. Lin, Q.; Zhang, Y.; Yang, S.; Ma, S.; Zhang, T.; Xiao, Q. A self-learning and self-optimizing framework for the fault diagnosis knowledge base in a workshop. Robot Cim. Int. Manuf. 2020, 65, 101975.

38. Poux, F.; Ponciano, J. Self-Learning Ontology For Instance Segmentation Of 3d Indoor Point Cloud. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 309–316.

39. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 10529–10538.

40. Konig, D.; Adam, M.; Jarvers, C.; Layher, G.; Neumann, H.; Teutsch, M. Fully convolutional region proposal networks for multispectral person detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 49–56.

41. Guan, D.; Cao, Y.; Yang, J.; Cao, Y.; Yang, M.Y. Fusion of multispectral data through illumination-aware deep neural networks for pedestrian detection. Inform Fusion 2019, 50, 148–157.

42. Li, C.; Song, D.; Tong, R.; Tang, M. Illumination-aware faster R-CNN for robust multispectral pedestrian detection. Pattern Recogn. 2019, 85, 161–171.

43. Zhang, Y.; Yin, Z.; Nie, L.; Huang, S. Attention Based Multi-Layer Fusion of Multispectral Images for Pedestrian Detection. IEEE Access 2020, 8, 165071–165084.

44. Li, P.; Chen, X.; Shen, S. Stereo r-cnn based 3d object detection for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 18–20 June 2019; pp. 7644–7652.

45. Rashed, H.; Ramzy, M.; Vaquero, V.; Sallab, A.E.; Sistu, G.; Yogamani, S. FuseMODNet: Real-Time Camera and LiDAR based Moving Object Detection for robust low-light Autonomous Driving. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 29 October–1 November 2019.

46. Wang, Z.; Zhan, W.; Tomizuka, M. Fusing bird’s eye view lidar point cloud and front view camera image for 3d object detection. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1–6.

47. Kim, J.; Koh, J.; Kim, Y.; Choi, J.; Hwang, Y.; Choi, J.W. Robust deep multi-modal learning based on gated information fusion network. In Proceedings of the Asian Conference on Computer Vision (ACCV), Perth, WA, Australia, 2–6 December 2018; pp. 90–106.

48. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

49. Woo, S.; Park, J.; Lee, J.; So Kweon, I. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

50. Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 248–255.

51. Bottou, L. Neural networks: Tricks of the trade; Springer: Heidelberg/Berlin, Germany, 2012; Chapter 18. Stochastic Gradient Descent Tricks; pp. 421–436.

52. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

53. Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 18–20 June 2019; pp. 770–779.

54. Shi, W.; Rajkumar, R. Point-gnn: Graph neural network for 3d object detection in a point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 1711–1719.

55. Wang, Z.; Jia, K. Frustum convnet: Sliding frustums to aggregate local point-wise features for amodal 3d object detection. arXiv 2019, arXiv:1903.01864.

56. Liang, Z.; Zhang, M.; Zhang, Z.; Zhao, X.; Pu, S. RangeRCNN: Towards Fast and Accurate 3D Object Detection with Range Image Representation. arXiv 2020, arXiv:2009.00206.

57. Bhattacharyya, P.; Czarnecki, K. Deformable PV-RCNN: Improving 3D Object Detection with Learned Deformations. arXiv 2020, arXiv:2008.08766.

58. Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. Std: Sparse-to-dense 3d object detector for point cloud. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 18–20 June 2019; pp. 1951–1960.

| Model | Car (Easy) | Car (Moderate) | Car (Hard) | Pedestrian (Easy) | Pedestrian (Moderate) | Pedestrian (Hard) | Cyclists (Easy) | Cyclists (Moderate) | Cyclists (Hard) |
|---|---|---|---|---|---|---|---|---|---|
| Baseline | 92.12 | 87.10 | 75.61 | 79.20 | 69.85 | 60.59 | 78.95 | 61.50 | 56.53 |
| VI-fusion | 97.99 | 90.70 | 82.43 | 83.26 | 76.42 | 65.84 | 82.07 | 70.50 | 61.53 |

| Model | Car (Easy) | Car (Moderate) | Car (Hard) | Pedestrian (Easy) | Pedestrian (Moderate) | Pedestrian (Hard) | Cyclists (Easy) | Cyclists (Moderate) | Cyclists (Hard) |
|---|---|---|---|---|---|---|---|---|---|
| Model-v1 | 97.99 | 90.70 | 82.43 | 83.26 | 76.42 | 65.84 | 82.07 | 70.50 | 61.53 |
| Model-v2 | 98.83 | 95.18 | 84.86 | 86.30 | 78.28 | 70.65 | 84.20 | 71.33 | 63.25 |
| Model-v3 | 97.61 | 92.42 | 81.36 | 84.12 | 76.47 | 67.51 | 83.33 | 69.38 | 60.41 |
| Model-v1-att | 94.79 | 88.36 | 80.62 | 82.86 | 73.98 | 62.86 | 78.71 | 58.30 | 56.83 |
| Model-v2-att | 95.11 | 90.12 | 82.05 | 85.40 | 74.45 | 64.65 | 81.60 | 59.83 | 57.05 |

| Method | Cars (Easy) | Cars (Moderate) | Cars (Hard) | Pedestrians (Easy) | Pedestrians (Moderate) | Pedestrians (Hard) | Cyclists (Easy) | Cyclists (Moderate) | Cyclists (Hard) |
|---|---|---|---|---|---|---|---|---|---|
| MMLab–PointRCNN [53] | 83.68 | 72.31 | 63.17 | 46.68 | 39.34 | 36.01 | 74.83 | 58.65 | 52.37 |
| MV3D [23] | 74.97 | 63.63 | 54.00 | - | - | - | - | - | - |
| AVOD [22] | 76.39 | 66.47 | 60.23 | 36.10 | 27.86 | 25.76 | 57.19 | 42.08 | 38.29 |
| PointGNN [54] | 88.33 | 79.47 | 72.29 | 51.92 | 43.77 | 40.14 | 78.60 | 63.48 | 57.08 |
| Frustum ConvNet [55] | 87.36 | 76.39 | 66.9 | 52.16 | 43.38 | 38.80 | 78.68 | 65.07 | 56.54 |
| F-Pointnet++ [12] | 82.15 | 68.47 | 62.07 | 64.02 | 53.84 | 49.17 | 72.27 | 56.12 | 50.06 |
| Range RCNN [56] | 88.47 | 81.33 | 77.09 | - | - | - | - | - | - |
| Deformable PV-RCNN [57] | 83.30 | 81.46 | 76.96 | 46.97 | 40.89 | 38.80 | 73.46 | 68.54 | 61.33 |
| STD [58] | 86.61 | 77.63 | 76.06 | 53.08 | 44.24 | 41.97 | 78.89 | 62.53 | 55.77 |
| Ours (v2) | 84.05 | 71.50 | 63.01 | 64.26 | 52.71 | 48.72 | 72.15 | 55.70 | 49.24 |

| Method | Input Data | Latency (ms/frame) |
|---|---|---|
| MMLab–PointRCNN [53] | Point | 112.4 |
| MV3D [23] | Point + RGB | 360 |
| AVOD [22] | Point + RGB | 80 |
| PointGNN [54] | Point | 80 |
| Frustum ConvNet [55] | Point + RGB | 470 |
| F-Pointnet++ [12] | Point + RGB | 97.3 |
| Range RCNN [56] | Range | 60 |
| PV-RCNN [57] | Point | 80 |
| STD [58] | Point | 80 |
| Ours (v2) | Point + RGB + Intensity | 59.6 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jiao, Y.; Yin, Z. A Two-Phase Cross-Modality Fusion Network for Robust 3D Object Detection. Sensors 2020, 20, 6043. https://doi.org/10.3390/s20216043