Empirical Mode Decomposition Based Multi-Modal Activity Recognition

Abstract

1. Introduction

2. Multi-Modal Activity Recognition

2.1. Feature Extraction

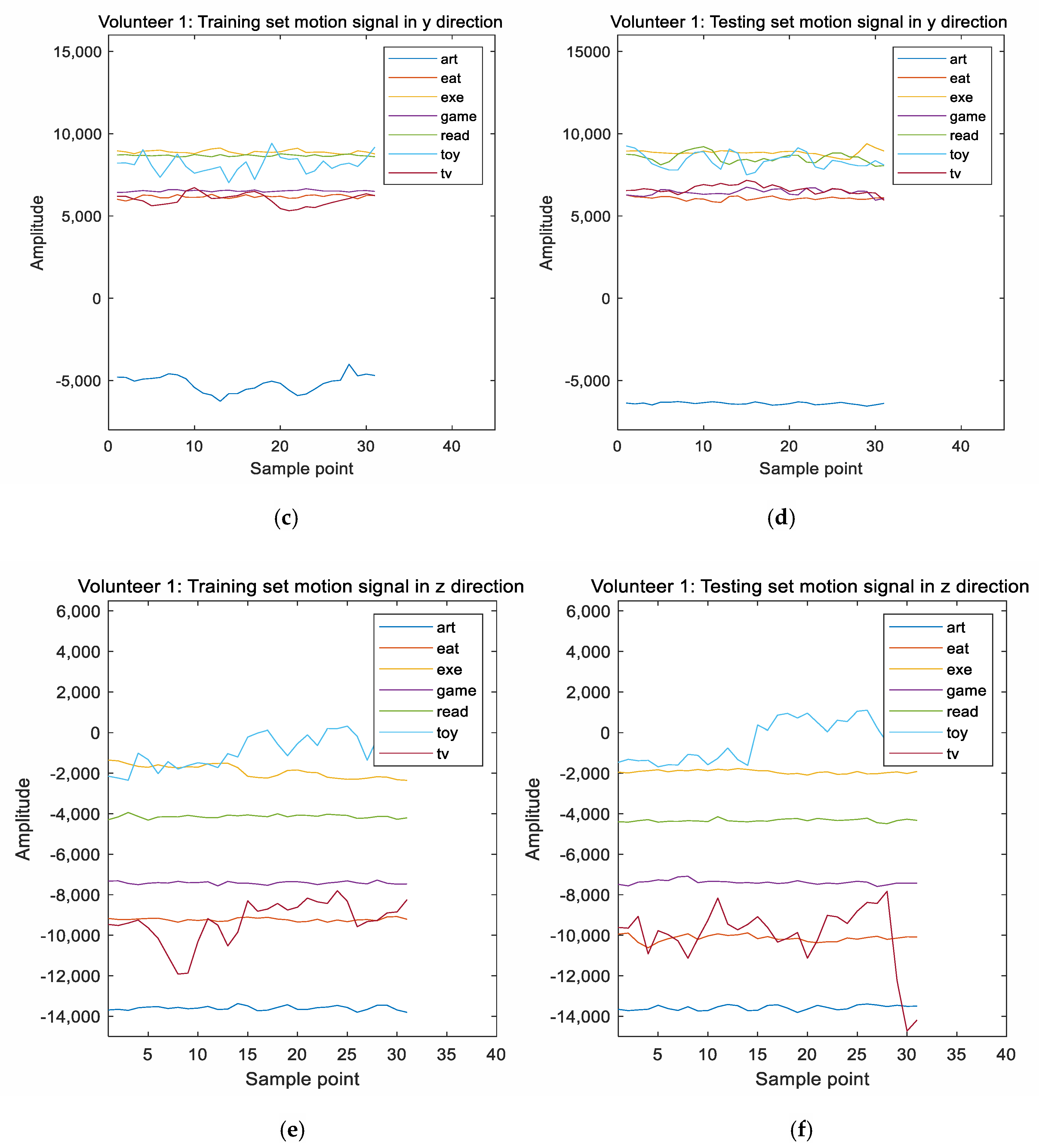

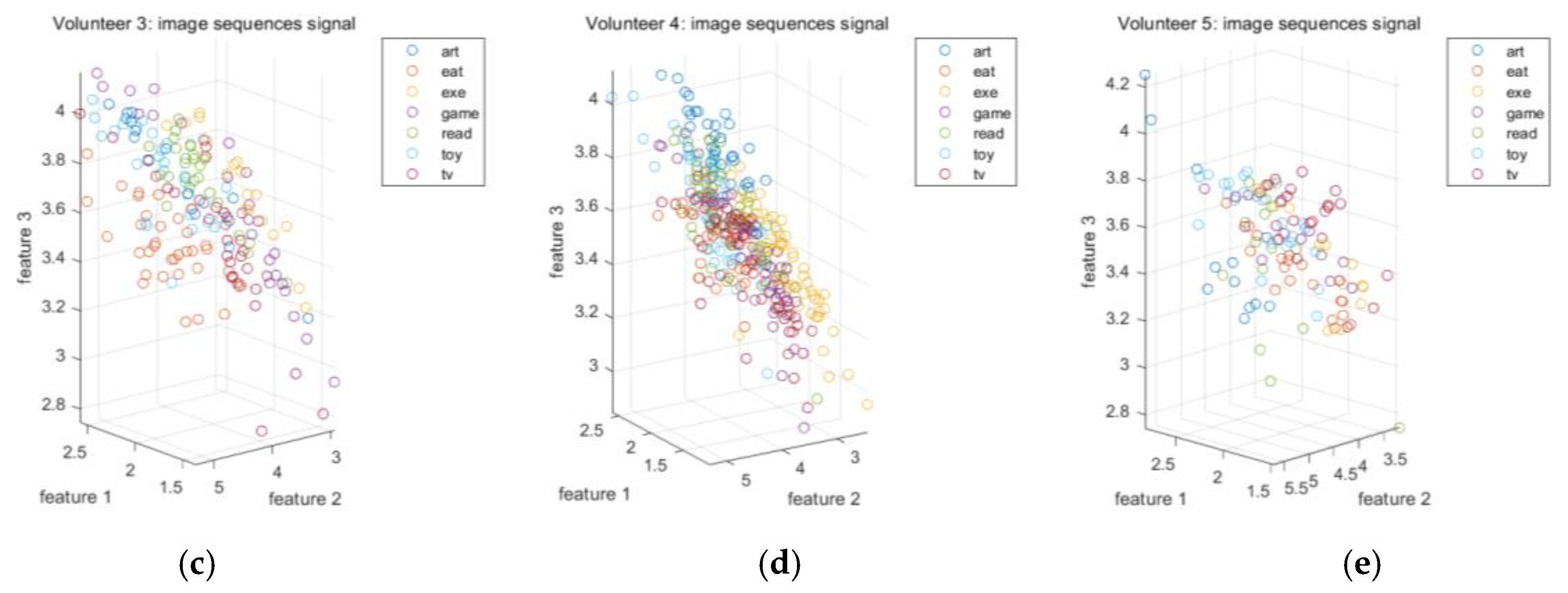

2.1.1. Features Extracted from the Electroencephalograms

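- Step 1: Initialization: let $r_0(t) = x(t)$, where $x(t)$ is the electroencephalogram to be decomposed, let $i = 1$, and set the threshold value to 0.3.

- Step 2: Let the $i$th intrinsic mode function be $c_i(t)$. It is obtained as follows:

  - (a) Initialization: let $h_{i,0}(t) = r_{i-1}(t)$ and $k = 1$.

  - (b) Find all the maxima and minima of $h_{i,k-1}(t)$.

  - (c) Denote the upper envelope and the lower envelope of $h_{i,k-1}(t)$ as $e_{\max,i,k-1}(t)$ and $e_{\min,i,k-1}(t)$, respectively. Obtain $e_{\max,i,k-1}(t)$ and $e_{\min,i,k-1}(t)$ by cubic spline interpolation through the maxima and the minima of $h_{i,k-1}(t)$, respectively.

  - (d) Let the mean of the upper envelope and the lower envelope of $h_{i,k-1}(t)$ be $m_{i,k-1}(t) = \frac{e_{\max,i,k-1}(t) + e_{\min,i,k-1}(t)}{2}$.

  - (e) Define $h_{i,k}(t) = h_{i,k-1}(t) - m_{i,k-1}(t)$.

  - (f) Compute $SD = \sum_{t} \frac{\left| h_{i,k-1}(t) - h_{i,k}(t) \right|^2}{h_{i,k-1}^2(t)}$. If SD is not greater than the given threshold, set $c_i(t) = h_{i,k}(t)$. Otherwise, increment $k$ and go back to Step (b).

- Step 3: Set $r_i(t) = r_{i-1}(t) - c_i(t)$. If $r_i(t)$ satisfies the properties of an intrinsic mode function or is a monotonic function, the decomposition is complete. Otherwise, increment $i$ and go back to Step 2.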
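The sifting procedure of Steps 1 to 3 can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the envelopes use a hand-written natural cubic spline (the text specifies cubic splines but not their boundary conditions), the first and last samples are added as spline knots to limit end effects, SD is computed with the conventional sum of squared relative differences between successive sifting iterates, a small `eps` guards that denominator against division by zero, `max_iter` and `max_imfs` cap the loops, and the Step 3 test is simplified to "fewer than two local extrema remain". All function names are hypothetical.

```python
import numpy as np


def natural_cubic_spline(xk, yk, x):
    """Evaluate the natural cubic spline through the knots (xk, yk) at the points x."""
    xk, yk, x = (np.asarray(v, dtype=float) for v in (xk, yk, x))
    n = len(xk)
    h = np.diff(xk)
    # Tridiagonal system for the second derivatives d2; natural ends: d2[0] = d2[-1] = 0.
    A = np.zeros((n, n))
    b = np.zeros(n)
    A[0, 0] = A[-1, -1] = 1.0
    for j in range(1, n - 1):
        A[j, j - 1], A[j, j], A[j, j + 1] = h[j - 1], 2.0 * (h[j - 1] + h[j]), h[j]
        b[j] = 6.0 * ((yk[j + 1] - yk[j]) / h[j] - (yk[j] - yk[j - 1]) / h[j - 1])
    d2 = np.linalg.solve(A, b)
    # Interval index of each evaluation point, then the standard piecewise-cubic formula.
    idx = np.clip(np.searchsorted(xk, x) - 1, 0, n - 2)
    w = xk[idx + 1] - xk[idx]
    a, c = xk[idx + 1] - x, x - xk[idx]
    return ((d2[idx] * a**3 + d2[idx + 1] * c**3) / (6.0 * w)
            + (yk[idx] / w - d2[idx] * w / 6.0) * a
            + (yk[idx + 1] / w - d2[idx + 1] * w / 6.0) * c)


def local_extrema(h):
    """Indices of the interior local maxima and minima of a 1-D array."""
    d = np.diff(h)
    maxima = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
    return maxima, minima


def sift_one_imf(r, t, threshold=0.3, max_iter=50, eps=1e-12):
    """Step 2: extract one intrinsic mode function from the residue r by sifting."""
    h_prev = r.copy()  # (a) start sifting from the current residue
    last = len(t) - 1
    for _ in range(max_iter):
        maxima, minima = local_extrema(h_prev)  # (b)
        if len(maxima) == 0 or len(minima) == 0:
            break
        # (c) Spline envelopes; using the end samples as extra knots is an assumption.
        imax = np.concatenate(([0], maxima, [last]))
        imin = np.concatenate(([0], minima, [last]))
        upper = natural_cubic_spline(t[imax], h_prev[imax], t)
        lower = natural_cubic_spline(t[imin], h_prev[imin], t)
        mean_env = (upper + lower) / 2.0  # (d)
        h = h_prev - mean_env  # (e)
        sd = np.sum((h_prev - h) ** 2 / (h_prev ** 2 + eps))  # (f); eps avoids division by zero
        if sd <= threshold:
            return h
        h_prev = h
    return h_prev


def emd(x, t, threshold=0.3, max_imfs=10):
    """Steps 1 to 3: decompose x into intrinsic mode functions plus a final residue."""
    r = np.asarray(x, dtype=float).copy()  # Step 1: the residue starts as the signal
    imfs = []
    for _ in range(max_imfs):
        maxima, minima = local_extrema(r)
        # Simplified Step 3 test: stop when fewer than two local extrema remain.
        if len(maxima) + len(minima) < 2:
            break
        c = sift_one_imf(r, t, threshold)
        imfs.append(c)
        r = r - c  # Step 3: remove the new intrinsic mode function from the residue
    return imfs, r
```

For a sampled signal `x` with time axis `t` (both 1-D NumPy arrays), `imfs, residue = emd(x, t)` returns the intrinsic mode functions in extraction order. Because each residue is formed by subtraction, `sum(imfs) + residue` reproduces `x` up to rounding, whatever stopping rule is used.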

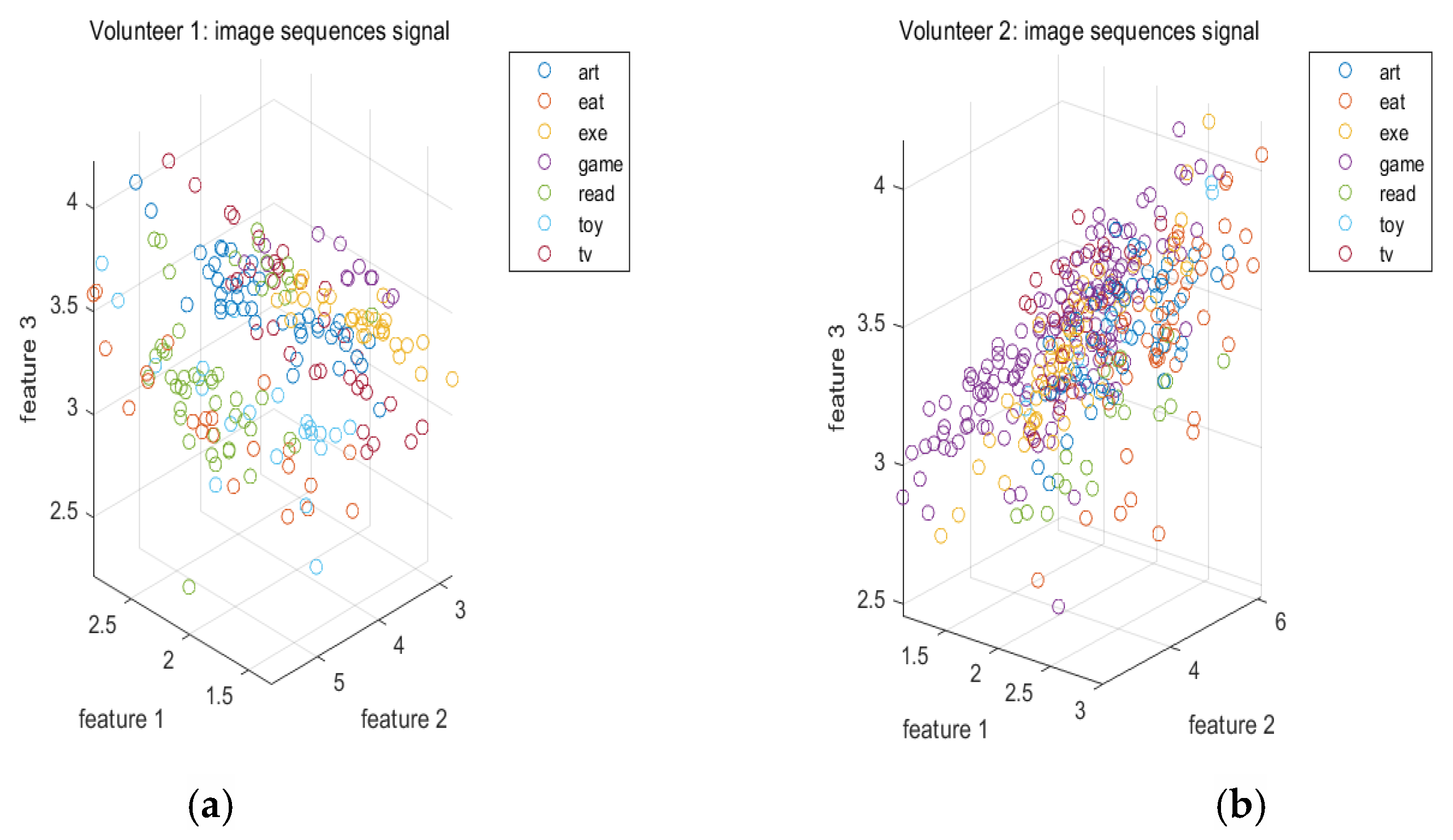

2.1.2. Features Extracted from the Image Sequences

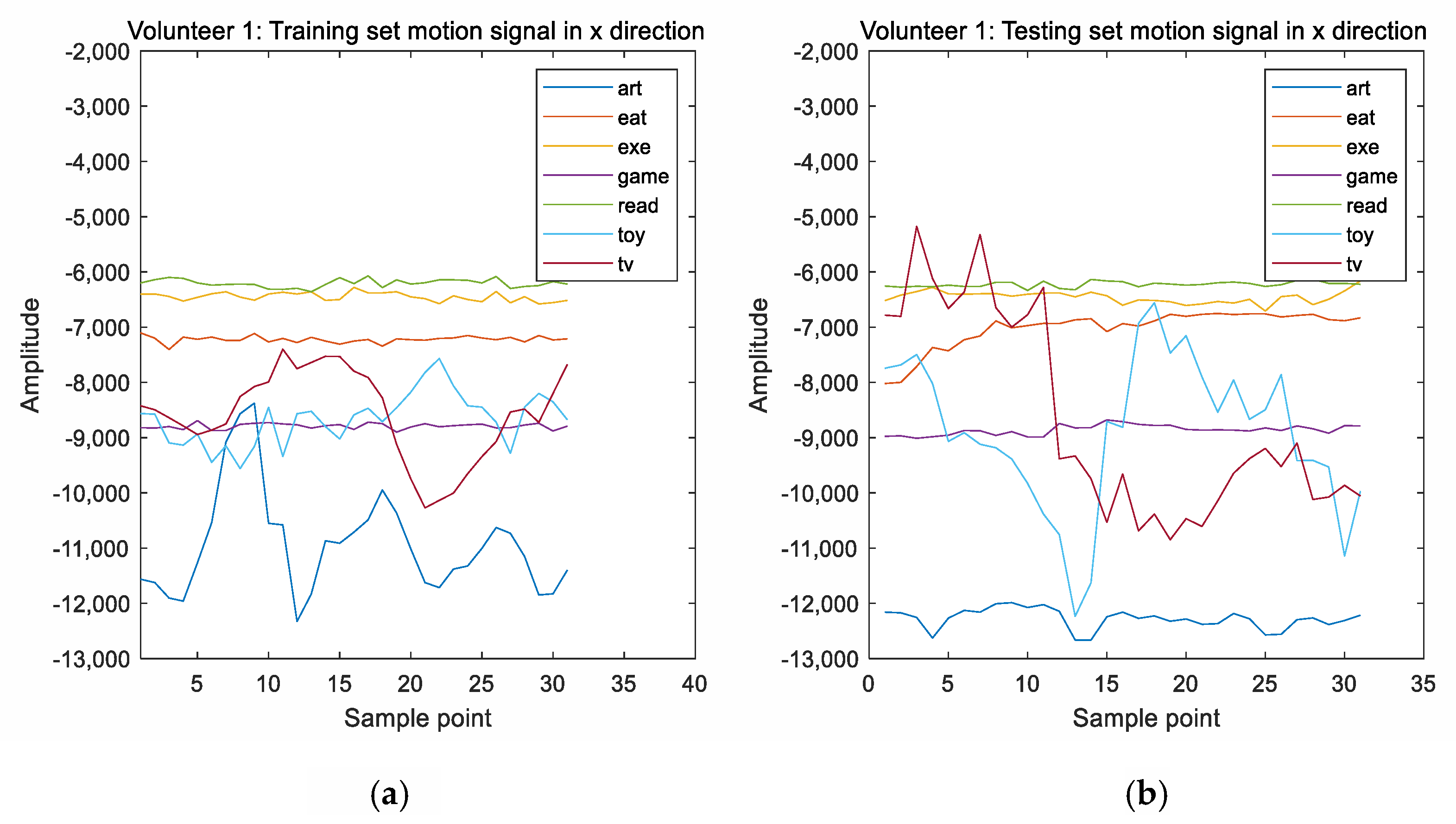

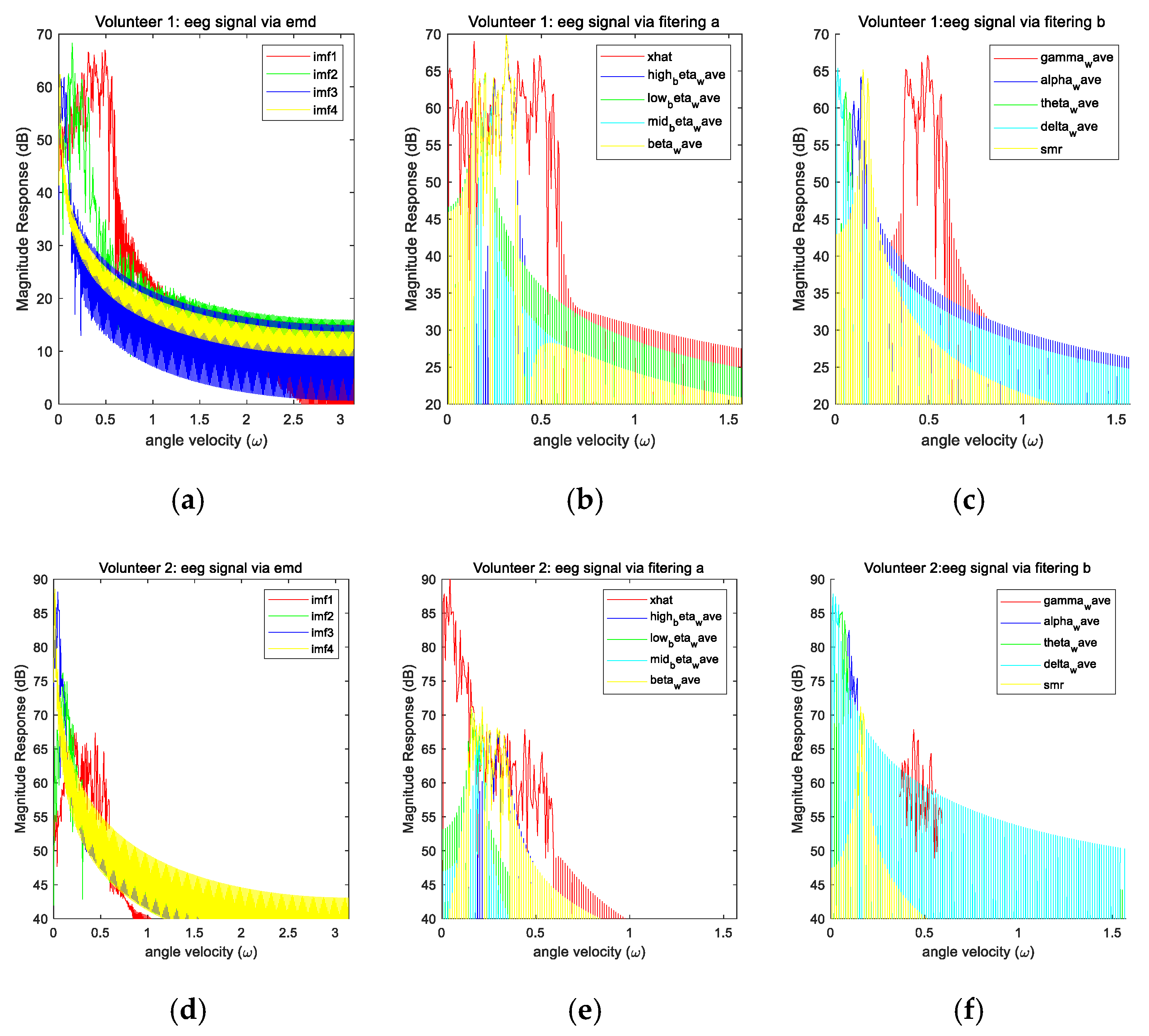

2.1.3. Features Extracted from the Motion Signals

2.1.4. Fusion of All the Features Together

2.2. Classification

- Step 1: Given $N$ training samples, $N$ samples are drawn at random, one at a time, with each draw made independently of the previous ones (sampling with replacement). Some samples may therefore be drawn more than once and others not at all. The $N$ drawn samples are used to train one decision tree.

- Step 2: Suppose that each sample has $M$ attributes. Whenever a node of the decision tree is to be split, $m$ attributes are selected at random, where $m \ll M$. A criterion such as the information gain is used to evaluate these $m$ attributes, and the best one is chosen as the split attribute of the node.

- Step 3: While the decision tree is being grown, every node is split according to Step 2 until it can no longer be split.

- Step 4: Steps 1 to 3 are repeated to build a large number of decision trees, which together form the random forest.
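Steps 1 to 4 can be sketched with NumPy as follows. This is an illustrative sketch, not the authors' code and not the R `randomForest` package: Step 1 is implemented as bootstrap sampling with replacement, Step 2 draws `m` candidate attributes at every node and scores threshold splits by information gain, Step 3 stops when a node is pure or no candidate split has positive gain, and the forest predicts by majority vote, which is the usual rule for classification but is not stated in the text. The default `m = floor(sqrt(M))` and all function names are assumptions.

```python
from collections import Counter

import numpy as np


def entropy(y):
    """Shannon entropy (in bits) of a label array."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return -np.sum(p * np.log2(p))


def best_split(X, y, features):
    """Step 2: among the candidate features, find the threshold split with the highest information gain."""
    base = entropy(y)
    best_f, best_thr, best_gain = None, None, 0.0
    for f in features:
        # Exclude the largest value so that both children are non-empty.
        for thr in np.unique(X[:, f])[:-1]:
            left = X[:, f] <= thr
            n_left = left.sum()
            child = (n_left * entropy(y[left]) + (len(y) - n_left) * entropy(y[~left])) / len(y)
            if base - child > best_gain:
                best_f, best_thr, best_gain = f, thr, base - child
    return best_f, best_thr


def majority(labels):
    """Most frequent label."""
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, m, rng):
    """Step 3: split recursively until a node can no longer be split."""
    if len(np.unique(y)) == 1:
        return majority(y)  # a pure node becomes a leaf
    features = rng.choice(X.shape[1], size=m, replace=False)  # m of the M attributes
    f, thr = best_split(X, y, features)
    if f is None:  # no candidate split has positive information gain
        return majority(y)
    left = X[:, f] <= thr
    return (f, thr, build_tree(X[left], y[left], m, rng), build_tree(X[~left], y[~left], m, rng))


def predict_tree(node, x):
    """Follow (feature, threshold, left, right) tuples down to a leaf label."""
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node


def random_forest(X, y, n_trees=50, m=None, seed=0):
    """Step 4: repeat Steps 1 to 3 to grow many trees."""
    rng = np.random.default_rng(seed)
    n, M = X.shape
    if m is None:
        m = max(1, int(np.sqrt(M)))  # common default; an assumption here
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, n, size=n)  # Step 1: N draws with replacement
        trees.append(build_tree(X[idx], y[idx], m, rng))
    return trees


def predict_forest(trees, x):
    """Majority vote over all trees (the usual rule; not stated in the text)."""
    return majority(predict_tree(t, x) for t in trees)
```

Drawing a fresh attribute subset at every node, rather than once per tree, is what decorrelates the trees; the bootstrap in Step 1 adds a second source of diversity.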

2.3. Computational Complexity Analysis

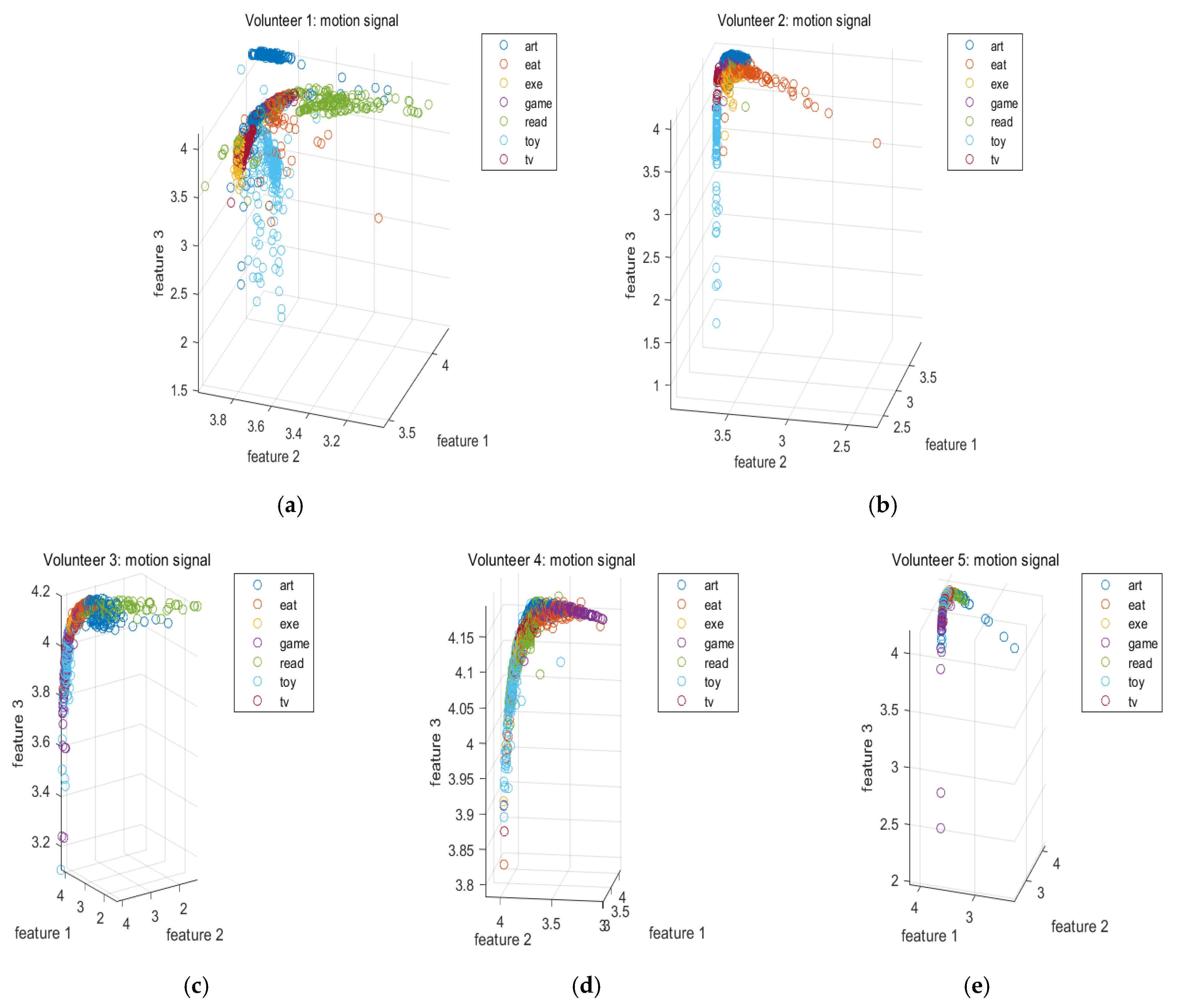

3. Computer Numerical Simulation Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Volunteer identity number | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Total number of data points (training set + test set) | 1336 | 1122 | 1044 | 1422 | 400 |
| Number of data points in the training set | 400 | 336 | 313 | 426 | 120 |
| Number of data points in the test set | 936 | 786 | 731 | 996 | 280 |
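A quick arithmetic check of the table above: for every volunteer the training and test counts add up to the total, and the training set holds roughly 30% of that volunteer's data points. The 30% figure is inferred from the counts and is not stated in the text; the function name is hypothetical.

```python
# Counts copied from the table above (volunteers 1 to 5).
TOTALS = [1336, 1122, 1044, 1422, 400]
TRAIN = [400, 336, 313, 426, 120]
TEST = [936, 786, 731, 996, 280]


def training_fractions(train, test):
    """Fraction of each volunteer's data points that fall in the training set."""
    return [a / (a + b) for a, b in zip(train, test)]


for volunteer, frac in enumerate(training_fractions(TRAIN, TEST), start=1):
    print(f"Volunteer {volunteer}: {frac:.3f}")  # 0.299, 0.299, 0.300, 0.300, 0.300
```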

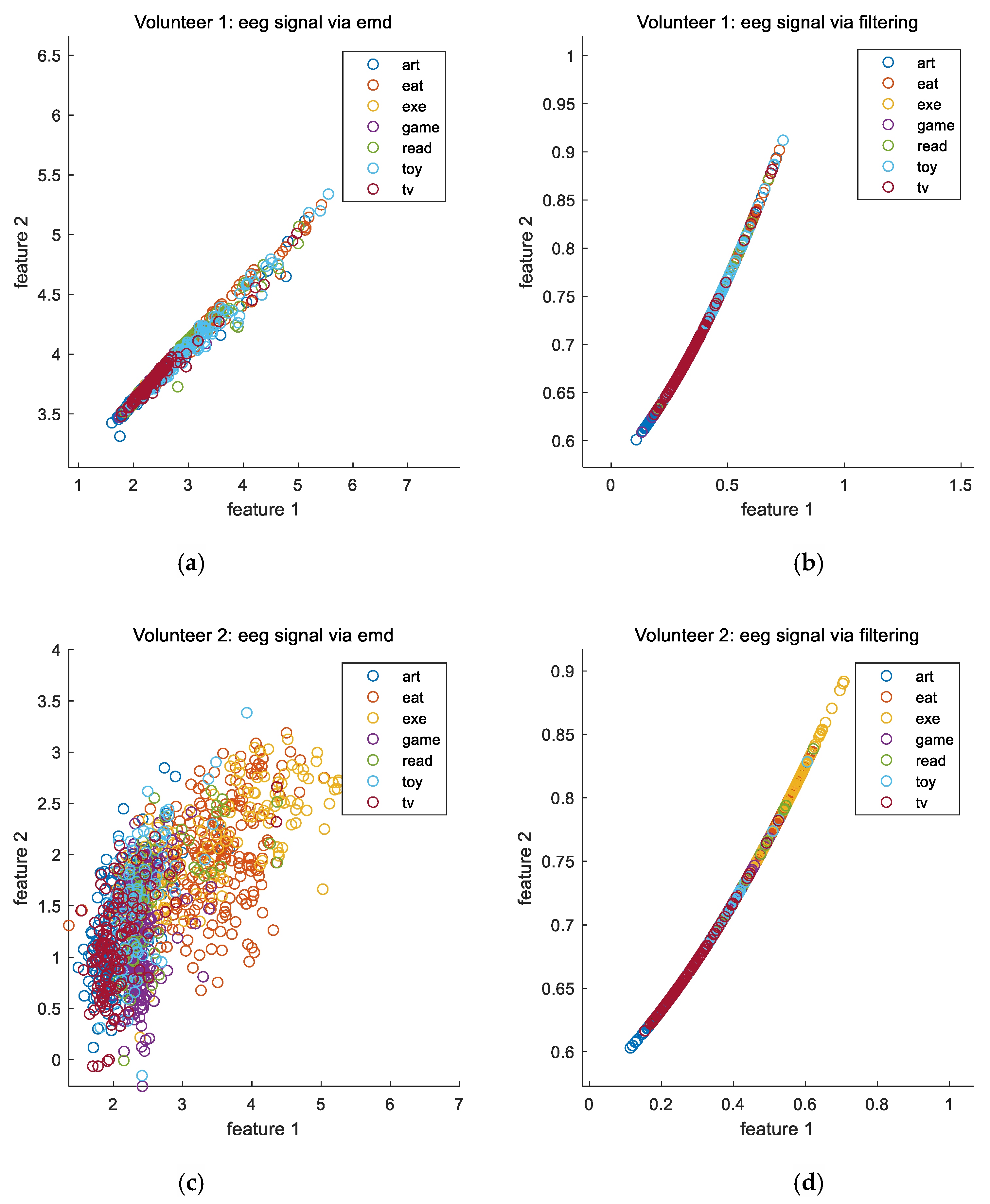

| Modalities used | Accuracy (proposed EMD-based method) | Macro F1 score (proposed EMD-based method) | Accuracy (conventional filtering-based method) | Macro F1 score (conventional filtering-based method) |
|---|---|---|---|---|
| Motion signals, electroencephalograms, and image sequences | 0.9690 | 0.8923 | 0.9733 | 0.8708 |
| Motion signals and image sequences | 0.9466 | 0.8916 | 0.9466 | 0.8916 |
| Electroencephalograms and image sequences | 0.9423 | 0.8172 | 0.9658 | 0.7858 |
| Motion signals and electroencephalograms | 0.8921 | 0.7820 | 0.8953 | 0.8289 |
| Image sequences only | 0.8590 | 0.8100 | 0.8590 | 0.8100 |
| Electroencephalograms only | 0.2874 | 0.4081 | 0.4605 | 0.4765 |
| Motion signals only | 0.8771 | 0.8171 | 0.8771 | 0.8171 |

| Modalities used | Accuracy (proposed EMD-based method) | Macro F1 score (proposed EMD-based method) | Accuracy (conventional filtering-based method) | Macro F1 score (conventional filtering-based method) |
|---|---|---|---|---|
| Motion signals, electroencephalograms, and image sequences | 0.9326 | 0.8343 | 0.9237 | 0.8337 |
| Motion signals and image sequences | 0.9186 | 0.8289 | 0.9186 | 0.8289 |
| Electroencephalograms and image sequences | 0.8779 | 0.7798 | 0.8677 | 0.7313 |
| Motion signals and electroencephalograms | 0.8384 | 0.7896 | 0.8397 | 0.7626 |
| Image sequences only | 0.8410 | 0.7813 | 0.8410 | 0.7613 |
| Electroencephalograms only | 0.4593 | 0.4177 | 0.5394 | 0.4859 |
| Motion signals only | 0.8079 | 0.7638 | 0.8079 | 0.7438 |

| Modalities used | Accuracy (proposed EMD-based method) | Macro F1 score (proposed EMD-based method) | Accuracy (conventional filtering-based method) | Macro F1 score (conventional filtering-based method) |
|---|---|---|---|---|
| Motion signals, electroencephalograms, and image sequences | 0.9384 | 0.8492 | 0.8960 | 0.8406 |
| Motion signals and image sequences | 0.8782 | 0.8340 | 0.8782 | 0.8340 |
| Electroencephalograms and image sequences | 0.8892 | 0.7349 | 0.8782 | 0.7586 |
| Motion signals and electroencephalograms | 0.7373 | 0.7214 | 0.7442 | 0.7309 |
| Image sequences only | 0.8536 | 0.7456 | 0.8536 | 0.7456 |
| Electroencephalograms only | 0.3912 | 0.3965 | 0.3666 | 0.4489 |
| Motion signals only | 0.6977 | 0.6709 | 0.6977 | 0.6709 |

| Modalities used | Accuracy (proposed EMD-based method) | Macro F1 score (proposed EMD-based method) | Accuracy (conventional filtering-based method) | Macro F1 score (conventional filtering-based method) |
|---|---|---|---|---|
| Motion signals, electroencephalograms, and image sequences | 0.8494 | 0.8532 | 0.8464 | 0.7864 |
| Motion signals and image sequences | 0.8394 | 0.8085 | 0.8394 | 0.8085 |
| Electroencephalograms and image sequences | 0.8092 | 0.6463 | 0.8283 | 0.6232 |
| Motion signals and electroencephalograms | 0.7028 | 0.6592 | 0.6687 | 0.6822 |
| Image sequences only | 0.7892 | 0.6213 | 0.7892 | 0.6213 |
| Electroencephalograms only | 0.3936 | 0.3833 | 0.3353 | 0.3660 |
| Motion signals only | 0.6295 | 0.5848 | 0.6295 | 0.5848 |

| Modalities used | Accuracy (proposed EMD-based method) | Macro F1 score (proposed EMD-based method) | Accuracy (conventional filtering-based method) | Macro F1 score (conventional filtering-based method) |
|---|---|---|---|---|
| Motion signals, electroencephalograms, and image sequences | 0.7821 | 0.6961 | 0.7750 | 0.6317 |
| Motion signals and image sequences | 0.7464 | 0.6904 | 0.7464 | 0.6904 |
| Electroencephalograms and image sequences | 0.7643 | 0.6321 | 0.6964 | 0.5865 |
| Motion signals and electroencephalograms | 0.5107 | 0.5066 | 0.4786 | 0.4839 |
| Image sequences only | 0.6786 | 0.6656 | 0.6786 | 0.6656 |
| Electroencephalograms only | 0.1857 | 0.2292 | 0.3107 | 0.2523 |
| Motion signals only | 0.4429 | 0.4693 | 0.4429 | 0.4693 |
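The tables report accuracy values between 0 and 1 together with macro F1 scores. The text does not spell out the metric definitions, so the sketch below assumes the standard ones: accuracy is the fraction of correctly classified test points, and macro F1 is the unweighted mean of the per-class F1 scores over every class that appears in either the true or the predicted labels. The function name is hypothetical.

```python
def accuracy_and_macro_f1(y_true, y_pred):
    """Return (accuracy, macro F1) for two equal-length label sequences."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    labels = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1_scores.append(f1)
    # Macro averaging: every class counts equally, regardless of how many samples it has.
    return accuracy, sum(f1_scores) / len(f1_scores)
```

For example, with true labels `[0, 0, 1, 1, 2, 2]` and predictions `[0, 1, 1, 1, 2, 0]`, the accuracy is 4/6 and the per-class F1 scores are 0.5, 0.8 and 2/3, giving a macro F1 of about 0.656. Because rare classes weigh as much as common ones, macro F1 can move in a different direction from accuracy.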

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hu, L.; Zhao, K.; Zhou, X.; Ling, B.W.-K.; Liao, G. Empirical Mode Decomposition Based Multi-Modal Activity Recognition. Sensors 2020, 20, 6055. https://doi.org/10.3390/s20216055
