Autonomous Deployment of Underwater Acoustic Monitoring Devices Using an Unmanned Aerial Vehicle: The Flying Hydrophone

Abstract

1. Introduction

2. Hardware Architecture

- A UAV capable of autonomous navigation and of repeated landing and take-off on water. The system enables the UAV to fly to predefined GPS waypoints that are set through a high-level interface on a mobile device. An autonomous UAV offers an agile, manoeuvrable means of rapidly deploying marine mammal monitoring equipment. UAVs could also reduce the cost of marine mammal surveys and speed up deployment and data acquisition in short-range operations, which would help to close the data gaps left between widely spaced surveys. Because autonomous systems take over repetitive tasks, they may also reduce human error and the risk to human life, especially far out at sea.

- A low-cost data acquisition system that records underwater acoustic signals between 90 and 150 kHz (3 dB bandwidth). It is an inexpensive, lightweight alternative to the options currently on the market. Built mostly from off-the-shelf parts and weighing less than 250 g without the hydrophone element, it is integrated with the UAV and records for user-defined periods when the UAV lands on water.
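The deployment cycle implied by the two components above (fly to a GPS waypoint, land on water, record for a user-defined period, take off again) can be sketched as a simple mission plan. This is an illustrative sketch only, not the authors' mission planner: the `Waypoint` class, the `plan_mission` function, the step names and the use of the haversine formula for leg lengths are all assumptions introduced here.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


@dataclass(frozen=True)
class Waypoint:
    """Hypothetical waypoint: a GPS fix plus a user-defined recording period."""
    lat_deg: float
    lon_deg: float
    record_s: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude fixes."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def plan_mission(home, waypoints):
    """Expand waypoints into an ordered list of (action, detail) steps.

    `home` is a (lat, lon) tuple. The step names are hypothetical labels,
    not commands of any real flight controller.
    """
    steps = []
    pos = home
    for wp in waypoints:
        leg_m = haversine_m(pos[0], pos[1], wp.lat_deg, wp.lon_deg)
        steps.append(("fly", round(leg_m, 1)))   # transit to the site
        steps.append(("land_on_water", None))    # touch down at the waypoint
        steps.append(("record", wp.record_s))    # acquire data while afloat
        steps.append(("take_off", None))         # leave the water
        pos = (wp.lat_deg, wp.lon_deg)
    # Final leg back to the launch point (assumed here, not stated in the text).
    steps.append(("fly", round(haversine_m(pos[0], pos[1], home[0], home[1]), 1)))
    steps.append(("land", None))
    return steps


if __name__ == "__main__":
    sites = [Waypoint(52.001, -1.000, 60.0), Waypoint(52.002, -1.001, 120.0)]
    for action, detail in plan_mission((52.000, -1.000), sites):
        print(action, detail)
```

Keeping the plan as plain data separates the high-level mission description from whatever flight controller executes it, which makes the sequence easy to inspect before a flight.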

2.1. UAV

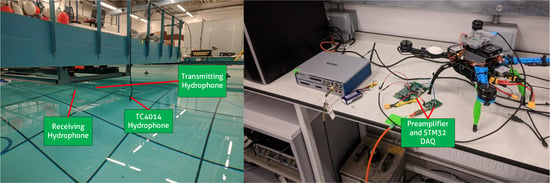

2.2. Underwater Acquisition Device

2.2.1. Hydrophone

2.2.2. Preamplifier

2.2.3. Signal Processing Board

3. System Software Architecture

3.1. Firmware of the Data Acquisition Device

3.2. UAV Software Architecture

3.2.1. Estimation

3.2.2. UAV Control

3.2.3. High Level Mission Planner

3.2.4. Flight Control

4. Results

4.1. Outdoor Experiments

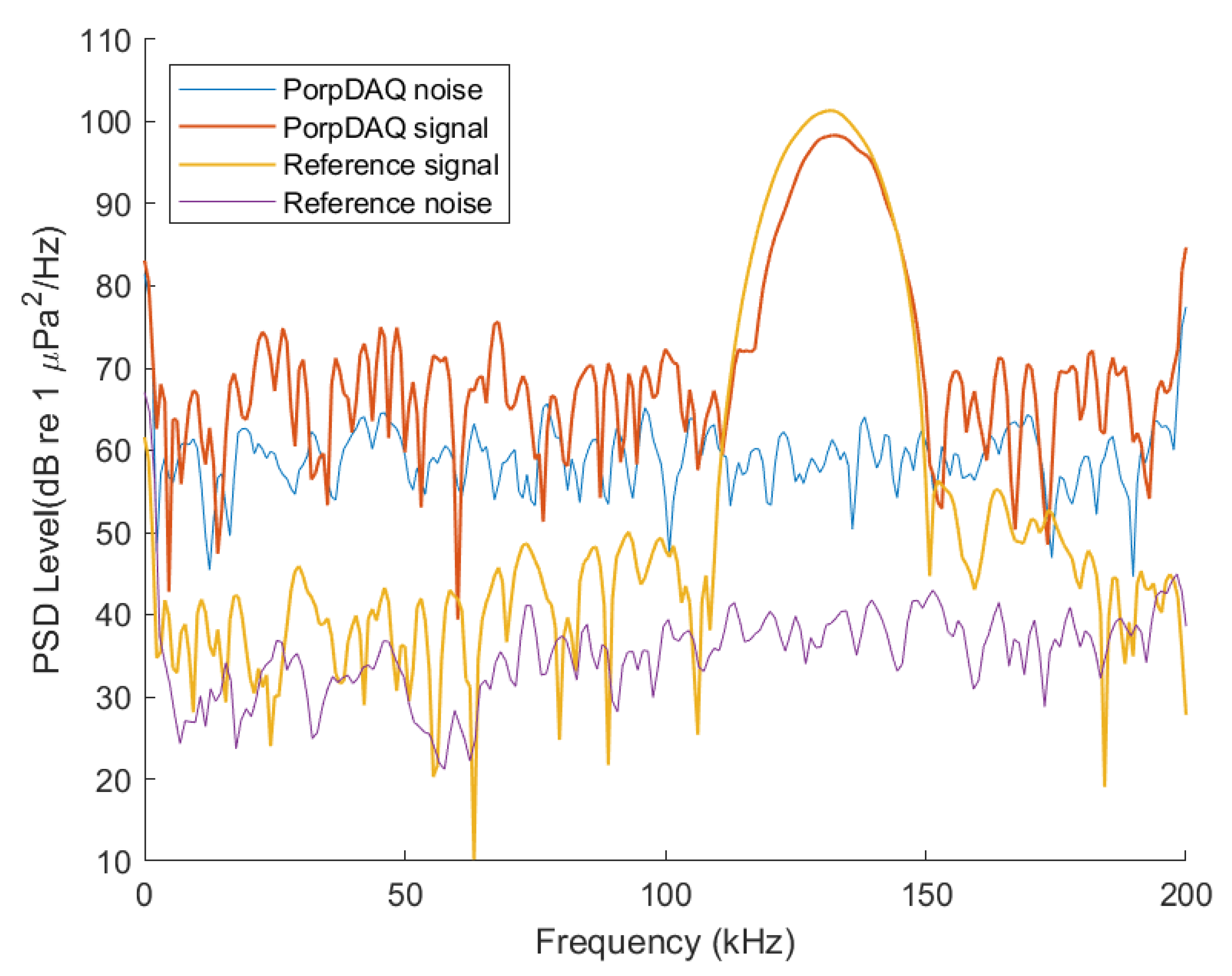

4.2. Underwater Acoustics Device

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Specification | PorpDAQ | Reference System |
|---|---|---|
| ADC Resolution | 12-bit | 16-bit |
| Sampling Rate | 400 kHz | 400 kHz |
| Output Range | 3.3 V | ±10 V |
| Hydrophone Sensitivity | −204 dB re 1 V/µPa | −180 dB re 1 V/µPa |
| Amplifier Gain | 36.5 dB | 20 dB |
| Combined Sensitivity | −167.5 dB re 1 V/µPa | −160 dB re 1 V/µPa |
| Dynamic Range | 72 dB | 96 dB |
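
The derived rows of the table above can be checked with a few lines of arithmetic. The sketch below assumes that the combined sensitivity is the hydrophone sensitivity plus the amplifier gain (decibel quantities add), and that the dynamic range is the ideal quantisation figure 20·log10(2^N) for an N-bit ADC; these are standard relations, but the function names are introduced here for illustration and are not taken from the paper.

```python
import math


def combined_sensitivity_db(hydrophone_db, gain_db):
    """Overall sensitivity in dB: hydrophone sensitivity plus amplifier gain."""
    return hydrophone_db + gain_db


def ideal_dynamic_range_db(adc_bits):
    """Ideal dynamic range of an N-bit converter, 20*log10(2**N), in dB."""
    return 20 * math.log10(2 ** adc_bits)


def nyquist_khz(sampling_rate_khz):
    """Highest frequency representable without aliasing, in kHz."""
    return sampling_rate_khz / 2


if __name__ == "__main__":
    print(combined_sensitivity_db(-204.0, 36.5))   # PorpDAQ
    print(combined_sensitivity_db(-180.0, 20.0))   # reference system
    print(round(ideal_dynamic_range_db(12)))       # 12-bit ADC
    print(round(ideal_dynamic_range_db(16)))       # 16-bit ADC
    print(nyquist_khz(400.0))                      # 400 kHz sampling
```

Under these assumptions the results agree with the tabulated values, and the 200 kHz Nyquist limit of a 400 kHz sampling rate sits above the 150 kHz upper edge of the recording band given in Section 2.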

| Device | Recorded | Expected | Recorded | Expected | Average Centre Frequency (kHz) |
|---|---|---|---|---|---|
| PorpDAQ | 147.6 | 150 | 159.1 | 160 | 131.6 |
| Reference System | 149.7 | 150 | 160.9 | 160 | 130.4 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Babatunde, D.; Pomeroy, S.; Lepper, P.; Clark, B.; Walker, R. Autonomous Deployment of Underwater Acoustic Monitoring Devices Using an Unmanned Aerial Vehicle: The Flying Hydrophone. Sensors 2020, 20, 6064. https://doi.org/10.3390/s20216064