Privacy-Preserving Distributed Analytics in Fog-Enabled IoT Systems

Abstract

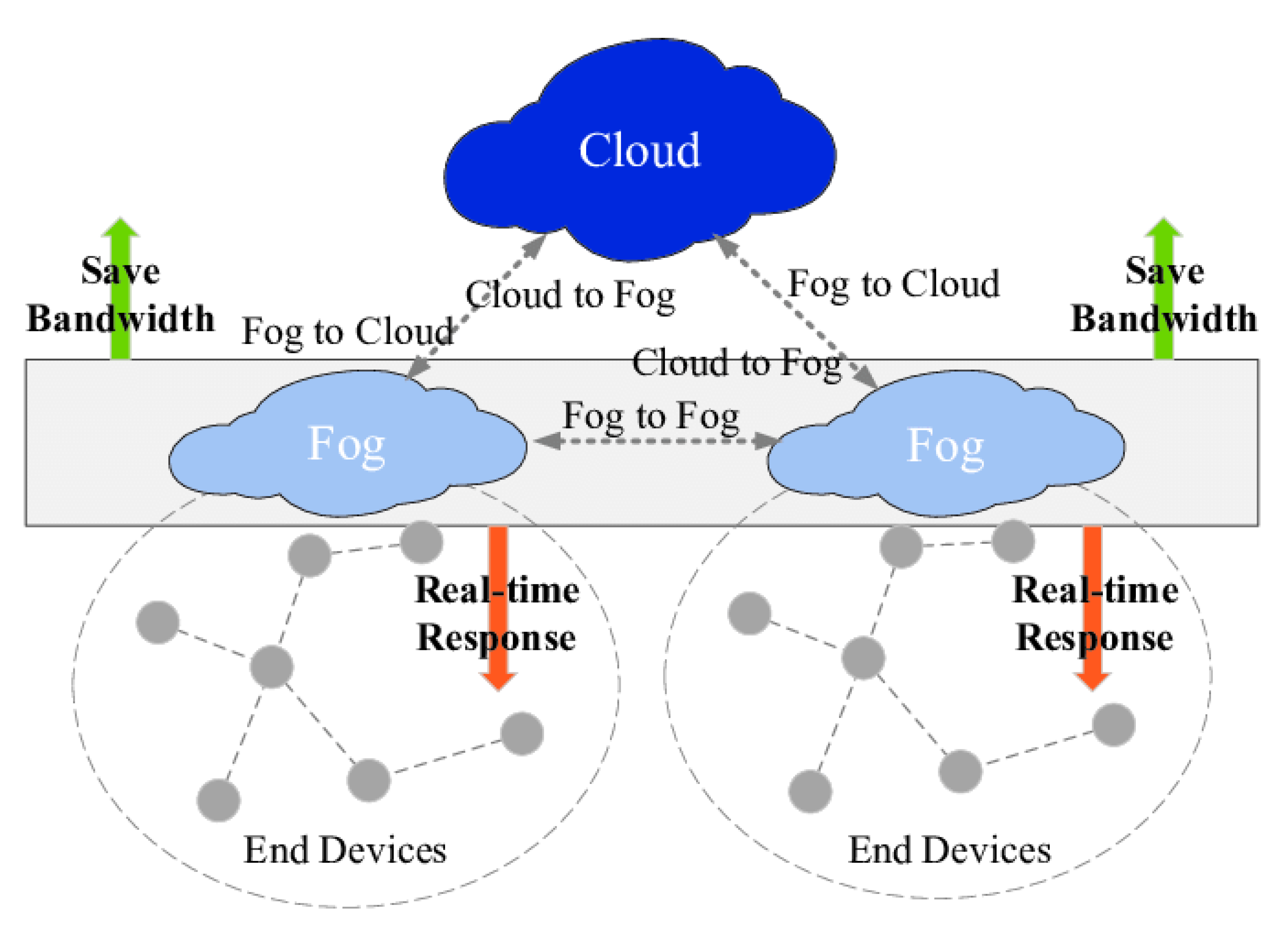

1. Introduction

2. Related Work

2.1. Distributed Analytics

2.2. Privacy-Preserving Schemes

3. Distributed Algorithm Design

3.1. Decomposed Problem Formulation

3.2. Distributed Algorithm

- Fog nodes:

- In each iteration, a pair of fog nodes is selected to exchange their estimates. The mixed estimates are sent to the corresponding edge devices, and the fog nodes wait for the gradients returned by the edge devices to update their estimates. All other fog nodes, which have not been selected in the current iteration, behave in a similar way but send their individual estimates to their edge devices instead.

- Edge devices:

- In each iteration, the edge devices use their local objective functions to compute the gradients with respect to the estimates received from the fog nodes, and return these gradients to the fog nodes, which use them to update their estimates.

| Algorithm 1: Fog node procedure |

| Input: Starting point . Initialize the iteration number k. and are momentum and step size parameters used by fog node i at iteration k. |

| 1: while the stopping criterion has not been reached, all the fog nodes do |

| 2: if fog node i’s clock ticks at iteration k and it selects a neighboring fog node j, then |

| 3: Nodes i and j exchange their current estimates and and update in parallel. |

| 4: Fog node i updates as follows. |

| 5: , |

| 6: Fog node i sends mixed estimate to its corresponding edge devices. |

| 7: Fog node i waits for the edge devices to return their gradients and aggregates them (by summation) as . |

| 8: Fog node i updates its estimate . |

| 9: Fog node j updates as follows. |

| 10: , |

| 11: Fog node j sends mixed estimate to its corresponding edge devices. |

| 12: Fog node j waits for the edge devices to return their gradients and aggregates them (by summation) as . |

| 13: Fog node j updates its estimate . |

| 14: Each other fog node q, which is neither i nor j, updates as follows. |

| 15: , |

| 16: Fog node q sends mixed estimate to its corresponding edge devices. |

| 17: Fog node q waits for the edge devices to return their gradients and aggregates them (by summation) as . |

| 18: Fog node q updates its estimate . |

| 19: end if |

| 20: Increment k. |

| 21: end while |

| 22: Send EXIT signal. |

| Algorithm 2: Edge device procedure |

| 1: while the EXIT signal has not been received, each edge device j in the set of edge devices associated with fog node i do |

| 2: Edge device j receives fog node i’s mixed estimate . |

| 3: Edge device j computes the gradient with respect to using its local objective function . |

| 4: Edge device j sends the computed gradient to its corresponding fog node i. |

| 5: end while |
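The control flow of Algorithms 1 and 2 can be sketched as a small simulation. This is a simplified illustration under stated assumptions, not the paper's exact method: the exact momentum updates (steps 5, 10 and 15 of Algorithm 1) are not reproduced here, so they are replaced by a plain gradient step; the mixing step is assumed to be a simple pairwise average; every fog node is treated as a neighbor of every other; and each edge device holds a hypothetical scalar objective 0.5 * (x - c)**2. The device grouping mirrors the example in Section 3.4 (Edge Devices 1–3, 4–6 and 7–10).

```python
import random


def simulate_gossip(device_targets, step_size=0.01, iterations=3000, seed=0):
    """Simplified simulation of Algorithms 1-2 on scalar quadratic objectives.

    device_targets[q] lists hypothetical targets c for the edge devices of
    fog node q; each device holds f(x) = 0.5 * (x - c)**2. The momentum
    updates of Algorithm 1 are replaced by a plain gradient step (assumption).
    """
    rng = random.Random(seed)
    p = len(device_targets)
    x = [0.0] * p  # one scalar estimate per fog node
    for _ in range(iterations):
        # The fog node whose clock ticks selects a neighboring fog node.
        i = rng.randrange(p)
        j = rng.choice([q for q in range(p) if q != i])
        mixed = list(x)
        mixed[i] = mixed[j] = 0.5 * (x[i] + x[j])  # assumed pairwise averaging
        for q in range(p):
            # Algorithm 2: each edge device returns its local gradient at the
            # (mixed or individual) estimate; the fog node sums the gradients.
            grad = sum(mixed[q] - c for c in device_targets[q])
            x[q] = mixed[q] - step_size * grad
    return x


targets = [[1, 2, 3], [4, 5, 6], [7, 8, 9, 10]]  # Edge Devices 1-3, 4-6, 7-10
estimates = simulate_gossip(targets)
print(estimates, sum(estimates) / len(estimates))  # average near the optimum 5.5
```

With a constant step size the fog nodes reach only approximate consensus around the global optimum (here the mean of all device targets, 5.5); diminishing step sizes or the accelerated updates of Algorithm 1 would be needed to tighten it.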

3.3. Algorithm Interpretation

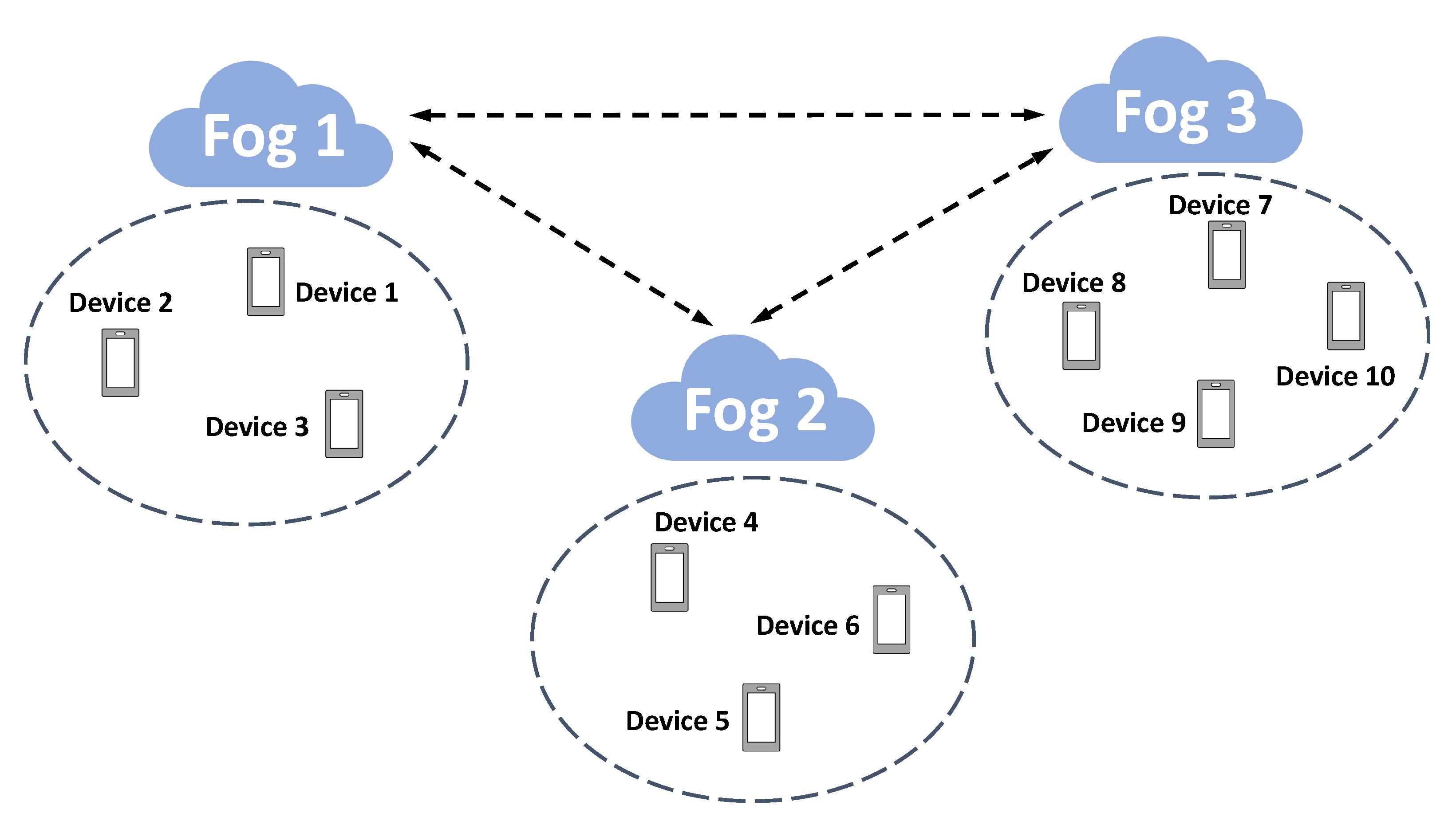

3.4. An Illustrative Example of Executing the Distributed Algorithm

- Iteration 1:

- Fog node 2’s clock ticks and it selects fog node 1 to exchange their estimates and . Fog node 2 computes and then sends the mixed estimate to its corresponding Edge Devices 4–6. Edge Devices 4–6 compute their gradients using their private functions with respect to . These gradients are returned to fog node 2 and aggregated as . Fog node 2 updates its estimate . Fog node 1 computes and then sends the mixed estimate to its corresponding Edge Devices 1–3. Edge Devices 1–3 compute their gradients using their private functions with respect to . These gradients are returned to fog node 1 and aggregated as . Fog node 1 updates its estimate . The remaining fog node 3 receives a signal that it will not exchange its estimate with others in this iteration and therefore updates as follows. It calculates and then sends the mixed estimate to its corresponding Edge Devices 7–10. Edge Devices 7–10 compute their gradients using their private functions with respect to . These gradients are returned to fog node 3 and aggregated as . Fog node 3 updates its estimate .

4. Secure Privacy-Preserving Protocol

- Example:

- In Algorithm 2, edge devices compute their gradients and return them to their corresponding fog nodes. Assume that edge device j computes the gradient with respect to and sends it to fog node i. In addition, assume that the model is least squares, so that the global objective is as follows:

$$\min_{x}\; \frac{1}{2}\|Ax - b\|_2^2.$$

Following the decomposed formulation in Section 3.1, the local objective function for edge device j can be expressed as follows:

$$f_j(x) = \frac{1}{2}\|A_j x - b_j\|_2^2,$$

where matrix $A_j$ and vector $b_j$ contain the raw data of device j. The gradient of $f_j$ is:

$$\nabla f_j(x) = A_j^{T}\,(A_j x - b_j),$$

where $A_j^{T}$ represents the transpose of matrix $A_j$. Assume that fog node i keeps the gradients received from edge device j at iterations k and k+1; each has the form $A_j^{T}(A_j z - b_j)$, where $z$ is the mixed estimate that fog node i sent to device j in that iteration.
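To make the leakage concrete, the sketch below (an illustration, not the paper's attack) uses the fact that the least-squares gradient $x \mapsto A_j^{T}(A_j x - b_j)$ is an affine map, so it is fully determined by gradients at dim + 1 points. A curious fog node that obtains gradients at the origin and at the unit vectors recovers $A_j^{T}A_j$ and $A_j^{T}b_j$ exactly. The probe points and the data values are hypothetical choices for clarity; in Algorithm 2 the points are the mixed estimates, and any dim + 1 affinely independent ones would suffice with a linear solve.

```python
def lsq_gradient(A, b, x):
    """Gradient A^T (A x - b) of the local objective 0.5 * ||A x - b||^2."""
    residual = [sum(a * xi for a, xi in zip(row, x)) - bi for row, bi in zip(A, b)]
    return [sum(A[r][c] * residual[r] for r in range(len(A))) for c in range(len(x))]


def reconstruct_normal_equations(gradient_oracle, dim):
    """Recover A^T A and A^T b from gradients at dim + 1 probe points."""
    g0 = gradient_oracle([0.0] * dim)  # gradient at the origin equals -A^T b
    atb = [-g for g in g0]
    columns = []
    for c in range(dim):
        e = [0.0] * dim
        e[c] = 1.0
        gc = gradient_oracle(e)  # equals A^T A e_c - A^T b
        columns.append([u - v for u, v in zip(gc, g0)])  # column c of A^T A
    gram = [[columns[c][r] for c in range(dim)] for r in range(dim)]
    return gram, atb


# Hypothetical private data held by edge device j.
A_j = [[1, 2], [3, 4], [5, 6]]
b_j = [1, 1, 1]
gram, atb = reconstruct_normal_equations(lambda x: lsq_gradient(A_j, b_j, x), 2)
print(gram, atb)
```

Because the device's local least-squares solution satisfies $A_j^{T}A_j x = A_j^{T}b_j$, recovering these two quantities already exposes the device's local model, which motivates encrypting gradients before they reach the fog node.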

4.1. Paillier Cryptosystem

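- Select two large prime numbers p and q of equal length.

- Calculate $n = pq$ and set $g = n + 1$.

- Set $\lambda = \varphi(n) = (p-1)(q-1)$, where $\varphi(\cdot)$ is Euler’s totient function.

- Find $\mu = \varphi(n)^{-1} \bmod n$, i.e., $\mu$ is the modular multiplicative inverse of $\varphi(n)$ modulo $n$.

- The public (encryption) key: $(n, g)$.

- The private (decryption) key: $(\lambda, \mu)$.

- Suppose m is the plaintext, where $0 \le m < n$. Select a random r where $0 < r < n$ and $\gcd(r, n) = 1$.

- Calculate the ciphertext as: $c = g^{m} \cdot r^{n} \bmod n^{2}$.

- Suppose c is the ciphertext, where $c \in \mathbb{Z}^{*}_{n^{2}}$. No randomness is needed for decryption.

- Calculate the plaintext as: $m = L\!\left(c^{\lambda} \bmod n^{2}\right) \cdot \mu \bmod n$, where $L(x) = \frac{x-1}{n}$.

- The ciphertext of the sum of two messages can be obtained as the product of their individual ciphertexts: $D\!\left(E(m_1)\cdot E(m_2) \bmod n^{2}\right) = (m_1 + m_2) \bmod n$, where $E(\cdot)$ and $D(\cdot)$ denote encryption and decryption.

- Decrypting a ciphertext raised to a constant k yields the product of the plaintext and the constant: $D\!\left(E(m)^{k} \bmod n^{2}\right) = k\,m \bmod n$.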
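The key generation, encryption, decryption and homomorphic steps listed above can be checked with a short from-scratch sketch of this simplified Paillier variant ($g = n + 1$). The primes 1009 and 1013 are toy values chosen only so the arithmetic is quick to follow; they provide no security, and real deployments (such as the Python Paillier library used in the experiments) rely on keys of 2048 bits or more.

```python
import math
import secrets


def keygen(p, q):
    """Simplified Paillier key generation for equal-length primes p and q."""
    n = p * q
    g = n + 1                  # simplified generator choice
    lam = (p - 1) * (q - 1)    # lambda = phi(n)
    mu = pow(lam, -1, n)       # mu = phi(n)^{-1} mod n (Python 3.8+)
    return (n, g), (lam, mu)


def encrypt(pub, m):
    """c = g^m * r^n mod n^2 for a plaintext 0 <= m < n."""
    n, g = pub
    n2 = n * n
    while True:                # draw r in Z_n^* (gcd(r, n) = 1)
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, c):
    """m = L(c^lambda mod n^2) * mu mod n, with L(x) = (x - 1) / n."""
    n, _ = pub
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n


pub, priv = keygen(1009, 1013)
n2 = pub[0] ** 2
c1, c2 = encrypt(pub, 42), encrypt(pub, 100)
print(decrypt(pub, priv, c1 * c2 % n2))     # homomorphic addition: 142
print(decrypt(pub, priv, pow(c1, 3, n2)))  # scalar multiplication: 126
```

Negative or real-valued quantities, such as model estimates and gradients, must first be encoded as integers modulo n (for example with a fixed-point scale), which libraries like python-paillier handle automatically.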

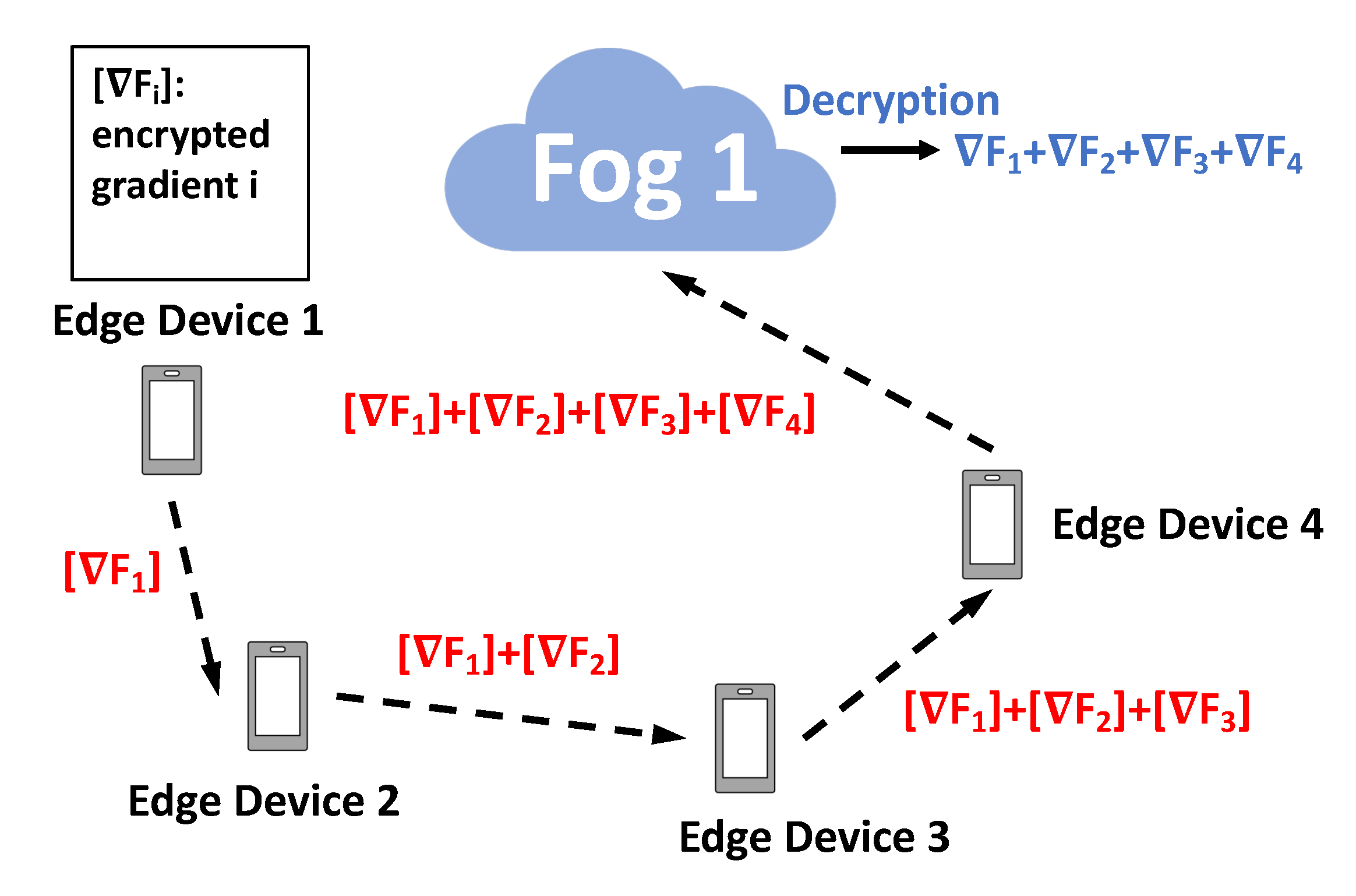

4.2. Secure Protocol Design

| Algorithm 3: Secure fog node procedure |

| Input: Starting point . Initialize the iteration number . All the fog nodes generate their public and private key pairs. and are momentum and step size parameters used by fog node i at iteration k. |

| 1: while the stopping criterion has not been reached, all the fog nodes do |

| 2: if fog node i’s clock ticks at iteration k and it selects a neighboring fog node j, then |

| 3: Fog node i updates as follows. |

| 4: Node i encrypts its estimate using its public key and sends the encrypted estimate to node j. |

| 5: Node j encrypts its own estimate using node i’s public key and obtains . It then performs the addition, multiplies the sum by a private random number uniformly sampled from , and finally sends the result back to node i. |

| 6: Node i receives the message, decrypts it, and then multiplies the result by a private random number uniformly sampled from . |

| 7: Node i obtains the mixed average as . |

| 8: , |

| 9: Fog node i sends mixed estimate to its corresponding edge devices. |

| 10: Fog node i waits for the summation of the encrypted gradients from the edge devices and then decrypts it as using its private key . |

| 11: Fog node i updates its estimate . |

| 12: Fog node j updates as follows. |

| 13: Node j encrypts its estimate using its public key and sends the encrypted estimate to node i. |

| 14: Node i encrypts its own estimate using node j’s public key and obtains . It then performs the addition, multiplies the sum by a private random number uniformly sampled from , and finally sends the result back to node j. |

| 15: Node j receives the message, decrypts it, and then multiplies the result by a private random number uniformly sampled from . |

| 16: Node j obtains the mixed average as . |

| 17: , |

| 18: Fog node j sends mixed estimate to its corresponding edge devices. |

| 19: Fog node j waits for the summation of the encrypted gradients from the edge devices and then decrypts it as using its private key . |

| 20: Fog node j updates its estimate . |

| 21: Each other fog node q, which is neither i nor j, updates as follows. |

| 22: , |

| 23: Fog node q sends mixed estimate to its corresponding edge devices. |

| 24: Fog node q waits for the summation of the encrypted gradients from the edge devices and then decrypts it as using its private key . |

| 25: Fog node q updates its estimate . |

| 26: end if |

| 27: Increment k. |

| 28: end while |

| 29: Send EXIT signal. |
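Steps 4–6 of Algorithm 3 can be sketched from node i’s point of view. This is a minimal illustration under several assumptions: a toy from-scratch Paillier variant ($g = n + 1$) with the well-known primes 1,000,000,007 and 1,000,000,009 (far too small for real security); a hypothetical fixed-point scale `SCALE` to encode real-valued estimates as integers modulo n; and fixed integers standing in for the private random numbers, whose sampling range is not reproduced here. The exact mixed-average formula of step 7 is also not reproduced; the sketch stops at the product of the two masks and the sum of the estimates.

```python
import math
import secrets

P, Q = 1_000_000_007, 1_000_000_009  # toy primes: insecure, illustration only
SCALE = 10**6                         # hypothetical fixed-point scale


def keygen(p=P, q=Q):
    n = p * q
    lam = (p - 1) * (q - 1)
    return n, (lam, pow(lam, -1, n))  # public n (g = n + 1), private (lambda, mu)


def encrypt(n, m):
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    lam, mu = priv
    return (pow(c, lam, n * n) - 1) // n * mu % n


def encode(x, n):
    return round(x * SCALE) % n  # negative values wrap around modulo n


def decode(m, n):
    return (m - n if m > n // 2 else m) / SCALE


def secure_mix(x_i, x_j, n_i, priv_i, r_i, r_j):
    """Returns r_i * r_j * (x_i + x_j); node i never sees x_j in the clear."""
    n2 = n_i * n_i
    # Step 4: node i encrypts x_i under its own public key and sends it to j.
    enc_i = [encrypt(n_i, encode(v, n_i)) for v in x_i]
    # Step 5: node j encrypts x_j under node i's key, adds homomorphically
    # (ciphertext product) and scales by its private integer r_j (exponent).
    masked = [pow(a * encrypt(n_i, encode(v, n_i)) % n2, r_j, n2)
              for a, v in zip(enc_i, x_j)]
    # Step 6: node i decrypts r_j * (x_i + x_j) and multiplies by r_i.
    return [r_i * decode(decrypt(n_i, priv_i, c), n_i) for c in masked]


n_i, priv_i = keygen()
print(secure_mix([1.5, -2.25], [0.5, 4.0], n_i, priv_i, r_i=3, r_j=7))
```

Because node j’s mask enters as an exponent on the ciphertext, it must be an integer in this sketch; node i, which multiplies in the clear, could use any real value.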

| Algorithm 4: Secure edge device procedure |

| 1: while the EXIT signal has not been received, each edge device j in the set of edge devices associated with fog node i do |

| 2: Edge device j receives fog node i’s mixed estimate . |

| 3: Edge device j computes the gradient with respect to using its local objective function . |

| 4: Edge device j encrypts its gradient using its corresponding fog node i’s public key . |

| 5: The edge devices in the area of fog node i pass their encrypted gradients along in sequence, each adding its own encrypted gradient to the running encrypted sum. |

| 6: The last edge device in the sequence, which holds the encrypted sum of all the gradients, sends this aggregated encrypted gradient to its corresponding fog node i. |

| 7: end while |
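Steps 4–6 of Algorithm 4 can be sketched in the same way. Under Paillier, adding encrypted gradients means multiplying ciphertexts modulo $n^2$, so each device in the chain folds its own encrypted gradient into the running product, and only fog node i, which holds the private key, can decrypt the final sum. As before, the toy primes, the fixed-point scale `SCALE` and the device visiting order are assumptions for illustration, and the gradient values are hypothetical.

```python
import math
import secrets

P, Q = 1_000_000_007, 1_000_000_009  # toy primes: insecure, illustration only
SCALE = 10**6                         # hypothetical fixed-point scale


def keygen(p=P, q=Q):
    n = p * q
    lam = (p - 1) * (q - 1)
    return n, (lam, pow(lam, -1, n))  # public n (g = n + 1), private (lambda, mu)


def encrypt(n, m):
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    lam, mu = priv
    return (pow(c, lam, n * n) - 1) // n * mu % n


def encode(x, n):
    return round(x * SCALE) % n


def decode(m, n):
    return (m - n if m > n // 2 else m) / SCALE


def chained_encrypted_sum(gradients, n):
    """Edge devices add their encrypted gradients in sequence (steps 4-6)."""
    n2 = n * n
    running = None
    for g in gradients:  # one entry per edge device, in visiting order
        enc = [encrypt(n, encode(v, n)) for v in g]  # step 4: encrypt with fog key
        # Step 5: homomorphic addition = element-wise ciphertext product.
        running = enc if running is None else [a * b % n2 for a, b in zip(running, enc)]
    return running  # step 6: the last device forwards this to fog node i


def fog_node_decrypt(n, priv, aggregated):
    return [decode(decrypt(n, priv, c), n) for c in aggregated]


n, priv = keygen()
agg = chained_encrypted_sum([[0.5, -1.0], [2.0, 0.25], [-1.5, 3.0]], n)
print(fog_node_decrypt(n, priv, agg))  # aggregated gradient, about [1.0, 2.25]
```

Intermediate devices only ever handle ciphertexts under fog node i’s public key, so they cannot read each other’s gradients, and the fog node learns only the sum.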

4.3. Security Analysis

5. Experimental Evaluation

5.1. Seismic Imaging

- Objective value: We took the average solution of all the p fog nodes and evaluated the objective value of the global function . This metric tracks how close the average model gets to the optimum over the iterations.

- Disagreement: We computed the difference between each fog node’s solution and the average solution. This quantity measures the disagreement among the fog nodes’ estimates and hence indicates how fast the fog nodes reach consensus.
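The two evaluation metrics can be sketched as follows. Two details are assumptions rather than the paper’s stated definitions: the global objective is taken to be the least-squares form $\frac{1}{2}\|Ax - b\|_2^2$ from the example in Section 4, and the disagreement is taken to be the sum over fog nodes of the Euclidean distance to the average solution.

```python
import math


def average_solution(estimates):
    """Coordinate-wise average of the p fog nodes' solutions."""
    p = len(estimates)
    return [sum(col) / p for col in zip(*estimates)]


def objective_value(estimates, A, b):
    """Assumed global objective 0.5 * ||A x_bar - b||^2 at the average solution."""
    x_bar = average_solution(estimates)
    residual = [sum(a * x for a, x in zip(row, x_bar)) - bi for row, bi in zip(A, b)]
    return 0.5 * sum(r * r for r in residual)


def disagreement(estimates):
    """Assumed form: sum of Euclidean distances to the average solution."""
    x_bar = average_solution(estimates)
    return sum(math.dist(x, x_bar) for x in estimates)


X = [[1.0, 2.0], [3.0, 4.0]]  # hypothetical estimates of two fog nodes
print(average_solution(X), disagreement(X))
```

The disagreement drops to zero exactly when all fog nodes hold the same estimate, which is why it serves as a consensus indicator.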

5.2. Diabetes Progression Prediction

5.3. Enron Spam Email Classification

6. Conclusions and Future Directions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| IoT | Internet of Things |

| , | Fog node i’s estimate and auxiliary variable at iteration k |

| , | Fog node i’s momentum parameter and step size parameter at iteration k |

| , | Fog node i’s public and private key |

| | Fog node i’s private random number prepared for node j |

| | Fog node j’s private random number prepared for node i |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, L. Privacy-Preserving Distributed Analytics in Fog-Enabled IoT Systems. Sensors 2020, 20, 6153. https://doi.org/10.3390/s20216153
