Anomaly Detection of Power Plant Equipment Using Long Short-Term Memory Based Autoencoder Neural Network

Abstract

1. Introduction

- The proposal of an anomaly detection framework for multivariable time-series based on an LSTM-AE neural network.

- The similarity analysis of the NBM output distribution and the corresponding measurement distribution.

2. The Proposed Anomaly Detection Framework

2.1. Framework Flow Chart

2.1.1. Offline Training NBM

- (1)

- Eliminate data at the time of equipment downtime.

- (2)

- Eliminate data at the time of equipment failure according to operation logs.

- (3)

- Eliminate abnormal data based on statistical characteristics. These abnormalities originate from sensors or stored procedures. Boxplots are used in this work. Then, the cleaned dataset is divided into a training dataset and a test dataset.

2.1.2. Online Anomaly Detection by NBM

2.2. The Normal Behavior Model

| Algorithm 1 Anomaly detection using the NBM |

| INPUT: normal dataset , the measured values at a certain moment , threshold |

| OUTPUT: reconstruction residual |

| represents the NBM trained by |

| if reconstruction residual > then |

| is an anomaly |

| Else |

| is not an anomaly |

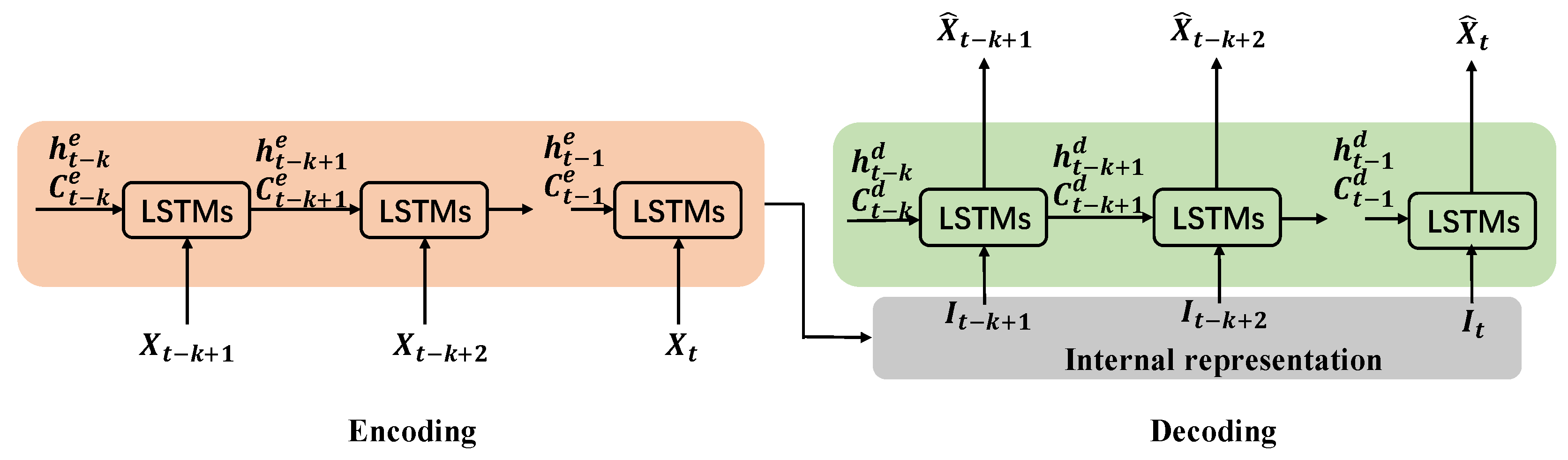

2.3. The LSTM-AE Neural Network

2.3.1. The AE Neural Network

2.3.2. The LSTM Unit

2.3.3. The LSTM-AE Neural Network

3. Case Study

3.1. Data Preparation

3.2. Data Cleaning

3.3. NBM Based on LSTM-AE

Comparative Analysis

3.4. Statistical Analysis on the Residuals

3.5. Abnormality Detection

3.5.1. Normal Operation Case

3.5.2. Abnormal Operation Case

4. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Kim, H.; Na, M.G.; Heo, G. Application of monitoring, diagnosis, and prognosis in thermal performance analysis for nuclear power plants. Nucl. Eng. Technol. 2014, 46, 737–752. [Google Scholar] [CrossRef]

- Fast, M. Artificial neural networks for gas turbine monitoring. In Sweden: Division of Thermal Power Engineering; Department of Energy Sciences, Faculty of Engineering, Lund University: Lund, Sweden, 2010; ISBN 978-91-7473-035-7. [Google Scholar]

- Wu, S.X.; Banzhaf, W. The use of computational intelligence in intrusion detection systems: A review. Appl. Soft Comput. 2010, 10, 1–35. [Google Scholar] [CrossRef]

- Gómez, C.Q.; Villegas, M.A.; García, F.P.; Pedregal, D.J. Big Data and Web Intelligence for Condition Monitoring: A Case Study on Wind Turbines//Big Data: Concepts, Methodologies, Tools, and Applications; IGI global: Pennsylvania, PA, USA, 2016; pp. 1295–1308. [Google Scholar] [CrossRef]

- Dao, P.B.; Staszewski, W.J.; Barszcz, T.; Uhl, T. Condition monitoring and fault detection in wind turbines based on cointegration analysis of SCADA data. Renew. Energy 2018, 116, 107–122. [Google Scholar] [CrossRef]

- Fast, M.; Palme, T. Application of artificial neural networks to the condition monitoring and diagnosis of a combined heat and power plant. Energy 2010, 35, 1114–1120. [Google Scholar] [CrossRef]

- Liu, J.Z.; Wang, Q.H.; Fang, F. Data-driven-based Application Architecture and Technologies of Smart Power Generation. Proc. CSEE 2019, 39, 3578–3587. [Google Scholar] [CrossRef]

- Moleda, M.; Mrozek, D. Big Data in Power Generation. In International Conference: Beyond Databases, Architectures and Structures; Springer: Cham, Switzerland, 2019; pp. 15–29. [Google Scholar] [CrossRef]

- Garcia, M.C.; Sanz-Bobi, M.A.; Del Pico, J. SIMAP: Intelligent System for Predictive Maintenance. Comput. Ind. 2006, 57, 552–568. [Google Scholar] [CrossRef]

- Muñoz, A.; Sanz-Bobi, M.A. An incipient fault detection system based on the probabilistic radial basis function network: Application to the diagnosis of the condenser of a coal power plant. Neurocomputing 1998, 23, 177–194. [Google Scholar] [CrossRef]

- Sanz-Bobi, M.A.; Toribio, M.A.D. Diagnosis of Electrical Motors Using Artificial Neural Networks; IEEE International SDEMPED: Gijón, Spain, 1999; pp. 369–374. [Google Scholar]

- Haykin, S.; Network, N. A comprehensive foundation. Neural Netw. 2004, 2, 41. [Google Scholar] [CrossRef]

- Lu, S.; Hogg, B.W. Dynamic nonlinear modelling of power plant by physical principles and neural networks. Int. J. Electr. Power Energy Syst. 2000, 22, 67–78. [Google Scholar] [CrossRef]

- Sun, Y.; Gao, J.; Zhang, H.; Peng, D. (2016, September). The application of BPNN based on improved PSO in main steam temperature control of supercritical unit. In Proceedings of the 2016 22nd International Conference on Automation and Computing (ICAC), Colchester, UK, 7–8 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 188–192. [Google Scholar] [CrossRef]

- Huang, C.; Li, J.; Yin, Y.; Zhang, J.; Hou, G. State monitoring of induced draft fan in thermal power plant by gravitational searching algorithm optimized BP neural network. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4616–4621. [Google Scholar] [CrossRef]

- Tan, P.; Zhang, C.; Xia, J.; Fang, Q.; Chen, G. NOx Emission Model for Coal-Fired Boilers Using Principle Component Analysis and Support Vector Regression. J. Chem. Eng. Jpn. 2016, 49, 211–216. [Google Scholar] [CrossRef]

- Zhang, L.; Zhou, L.; Zhang, Y.; Wang, K.; Zhang, Y.; Zhijun, E.; Gan, Z.; Wang, Z.; Qu, B.; Li, G. Coal consumption prediction based on least squares support vector machine. EEs 2019, 227, 032007. [Google Scholar] [CrossRef]

- Guanglong, W.; Meng, L.; Wenjie, Z. The LS-SVM modeling of power station boiler NOx emission based on genetic algorithm. Autom. Instrum. 2016, 2, 26. [Google Scholar] [CrossRef]

- Wang, C.; Liu, Y.; Zheng, S.; Jiang, A. Optimizing combustion of coal fired boilers for reducing NOx emission using Gaussian Process. Energy 2018, 153, 149–158. [Google Scholar] [CrossRef]

- Hu, D.; Chen, G.; Yang, T.; Zhang, C.; Wang, Z.; Chen, Q.; Li, B. An Artificial Neural Network Model for Monitoring Real-Time Variables and Detecting Early Warnings in Induced Draft Fan. In Proceedings of the ASME 2018 13th International Manufacturing Science and Engineering Conference. American Society of Mechanical Engineers Digital Collection, College Station, TX, USA, 18–22 June 2018. [Google Scholar] [CrossRef]

- Safdarnejad, S.M.; Tuttle, J.F.; Powell, K.M. Dynamic modeling and optimization of a coal-fired utility boiler to forecast and minimize NOx and CO emissions simultaneously. Comput. Chem. Eng. 2019, 124, 62–79. [Google Scholar] [CrossRef]

- Bangalore, P.; Tjernberg, L.B. An Artificial Neural Network Approach for Early Fault Detection of Gearbox Bearings. IEEE Trans. Smart Grid 2015, 6, 980–987. [Google Scholar] [CrossRef]

- Asgari, H.; Chen, X.; Morini, M.; Pinelli, M.; Sainudiin, R.; Spina, P.R.; Venturini, M. NARX models for simulation of the start-up operation of a single-shaft gas turbine. Appl. Eng. 2016, 93, 368–376. [Google Scholar] [CrossRef]

- Lee, W.J.; Na, J.; Kim, K.; Lee, C.J.; Lee, Y.; Lee, J.M. NARX modeling for real-time optimization of air and gas compression systems in chemical processes. Comput. Chem. Eng. 2018, 115, 262–274. [Google Scholar] [CrossRef]

- Hu, D.; Guo, S.; Chen, G.; Zhang, C.; Lv, D.; Li, B.; Chen, Q. Induced Draft Fan Early Anomaly Identification Based on SIS Data Using Normal Behavior Model in Thermal Power Plant. In Proceedings of the ASME Power Conference. American Society of Mechanical Engineers, Salt Lake City, UT, USA, 15–18 July 2019; Volume 59100, p. V001T08A002. [Google Scholar] [CrossRef]

- Tan, P.; He, B.; Zhang, C.; Rao, D.; Li, S.; Fang, Q.; Chen, G. Dynamic modeling of NOX emission in a 660MW coal-fired boiler with long short-term memory. Energy 2019, 176, 429–436. [Google Scholar] [CrossRef]

- Laubscher, R. Time-series forecasting of coal-fired power plant reheater metal temperatures using encoder-decoder recurrent neural networks. Energy 2019, 189, 116187. [Google Scholar] [CrossRef]

- Yang, G.; Wang, Y.; Li, X. Prediction of the NOx emissions from thermal power plant using long-short term memory neural network. Energy 2020, 192, 116597. [Google Scholar] [CrossRef]

- Pan, H.; Su, T.; Huang, X.; Wang, Z. LSTM-based soft sensor design for oxygen content of flue gas in coal-fired power plant. Trans. Inst. Meas. Control 2020. [Google Scholar] [CrossRef]

- Sakurada, M.; Yairi, T. Anomaly detection using autoencoders with nonlinear dimensionality reduction. In Proceedings of the MLSDA 2014 2nd Workshop on Machine Learning for Sensory Data Analysis, Gold Coast, QLD, Australia, 2 December 2014; pp. 4–11. [Google Scholar] [CrossRef]

- Lu, K.; Gao, S.; Sun, W.; Jiang, Z.; Meng, X.; Zhai, Y.; Han, Y.; Sun, M. Auto-encoder based fault early warning model for primary fan of power plant. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2019; Volume 358, p. 042060. [Google Scholar] [CrossRef]

- Roy, M.; Bose, S.K.; Kar, B. A stacked autoencoder neural network based automated feature extraction method for anomaly detection in on-line condition monitoring. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1501–1507. [Google Scholar] [CrossRef]

- Li, Y.; Hong, F.; Tian, L.; Liu, J.; Chen, J. Early Warning of Critical Blockage in Coal Mills based on Stacked Denoising Autoencoders. IEEE Access 2020. [Google Scholar] [CrossRef]

- An, J.; Cho, S. Variational autoencoder based anomaly detection using reconstruction probability. Spec. Lect. IE 2015, 2, 1–18. [Google Scholar]

- Zong, B.; Song, Q.; Min, M.R.; Cheng, W.; Lumezanu, C.; Cho, D.; Chen, H. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Ng, A. Sparse autoencoder. CS294A Lect. Notes 2011, 72, 1–19. [Google Scholar]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Williams, R.J.; Zipser, D. Gradient-based learning algorithms for recurrent. In Backpropagation: Theory, Architectures, and Applications; Psychology Press: Hove, UK, 1995; Volume 433. [Google Scholar]

- Werbos, P.J. Backpropagation through time: What it does and how to do it. Proc. IEEE 1990, 78, 1550–1560. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329. [Google Scholar]

- Benjamini, Y. Opening the box of a boxplot. Am. Stat. 1988, 42, 257–262. [Google Scholar] [CrossRef]

- Wang, Y.; Miao, Q.; Ma, E.W.; Tsui, K.L.; Pecht, M.G. Online anomaly detection for hard disk drives based on mahalanobis distance. IEEE Trans. Reliab. 2013, 62, 136–145. [Google Scholar] [CrossRef]

- Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Stat. 1951, 22, 79–86. [Google Scholar] [CrossRef]

- Shimazaki, H.; Shinomoto, S. Kernel bandwidth optimization in spike rate estimation. J. Comput. Neurosci. 2010, 29, 171–182. [Google Scholar] [CrossRef] [PubMed]

| RMSE on Training Dataset | RMSE on Test Dataset | MAPE on Training Dataset | MAPE on Test Dataset | |

|---|---|---|---|---|

| AE | 0.111 | 0.191 | 0.172 | 0.172 |

| LSTM-AE | 0.026 | 0.035 | 0.027 | 0.027 |

| RMSE on Training Dataset | RMSE on Test Dataset | MAPE on Training Dataset | MAPE on Test Dataset | |

|---|---|---|---|---|

| LSTM-AE | 0.026 | 0.035 | 0.027 | 0.027 |

| PCA-NARX | 0.044 | 0.044 | 0.032 | 0.032 |

| AE | 0.111 | 0.191 | 0.172 | 0.172 |

| OR | LOT | POT_A1 | POA_A2 | MNDT | MDT | MBT_1 | MBT_2 | MBT_3 | |

|---|---|---|---|---|---|---|---|---|---|

| R_lower | --- | −0.048 | −0.228 | −0.315 | −0.041 | −0.027 | −0.063 | −0.029 | −0.037 |

| R_upper | 0.325 | 0.084 | 0.341 | 0.374 | 0.037 | 0.066 | 0.042 | 0.095 | 0.064 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hu, D.; Zhang, C.; Yang, T.; Chen, G. Anomaly Detection of Power Plant Equipment Using Long Short-Term Memory Based Autoencoder Neural Network. Sensors 2020, 20, 6164. https://doi.org/10.3390/s20216164

Hu D, Zhang C, Yang T, Chen G. Anomaly Detection of Power Plant Equipment Using Long Short-Term Memory Based Autoencoder Neural Network. Sensors. 2020; 20(21):6164. https://doi.org/10.3390/s20216164

Chicago/Turabian StyleHu, Di, Chen Zhang, Tao Yang, and Gang Chen. 2020. "Anomaly Detection of Power Plant Equipment Using Long Short-Term Memory Based Autoencoder Neural Network" Sensors 20, no. 21: 6164. https://doi.org/10.3390/s20216164

APA StyleHu, D., Zhang, C., Yang, T., & Chen, G. (2020). Anomaly Detection of Power Plant Equipment Using Long Short-Term Memory Based Autoencoder Neural Network. Sensors, 20(21), 6164. https://doi.org/10.3390/s20216164