Deep Photometric Stereo Network with Multi-Scale Feature Aggregation

Abstract

1. Introduction

2. Related Work

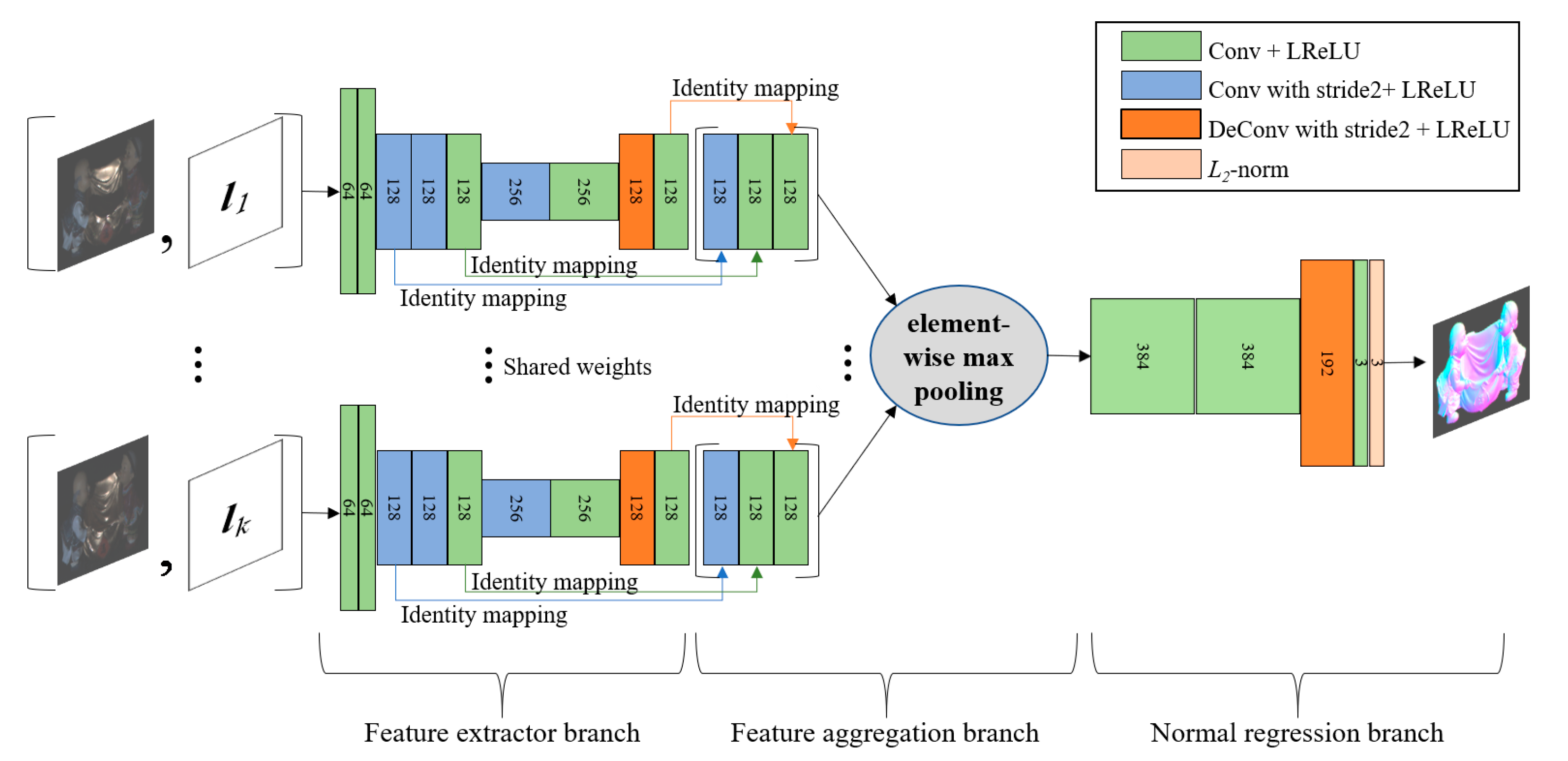

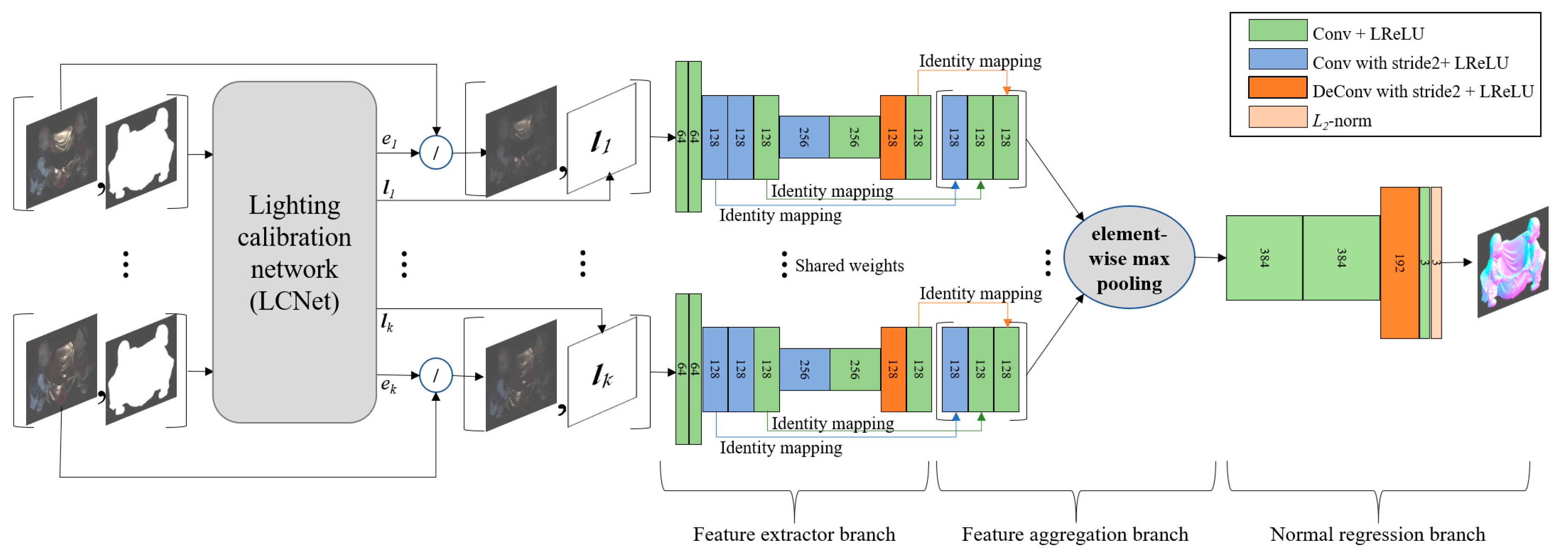

3. Fully Convolutional Neural Network with Multi-Scale Feature Aggregation

3.1. Image Formation Model

3.2. Calibrated Photometric Stereo Network

3.3. Uncalibrated Photometric Stereo Network

3.4. Loss Function and Training Data

4. Experimental Results

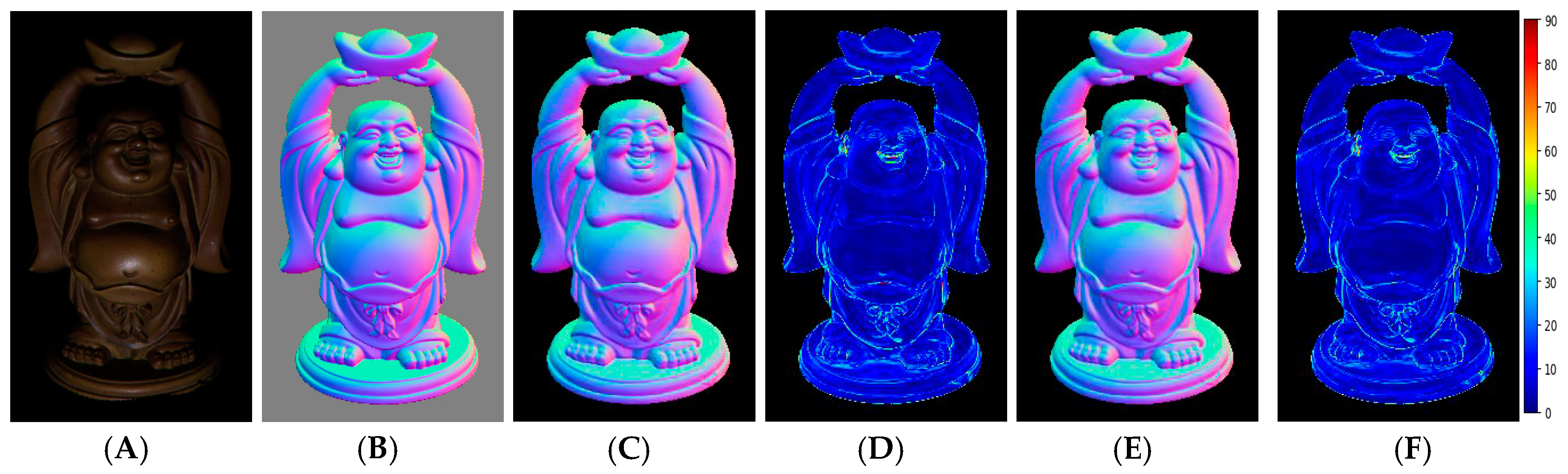

4.1. Comparison with the Baseline Model

4.2. Comparison with Other Models

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Woodham, R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 191139.

- Hao, Y.; Li, J.; Meng, F.; Zhang, P.; Ciuti, G.; Dario, P.; Huang, Q. Photometric stereo-based depth map reconstruction for monocular capsule endoscopy. Sensors 2020, 20, 5403.

- Huang, S.; Xu, K.; Li, M.; Wu, M. Improved visual inspection through 3D image reconstruction of defects based on the photometric stereo technique. Sensors 2019, 19, 4970.

- Yoda, T.; Nagahara, H.; Taniguchi, R.; Kagawa, K.; Yasutomi, K.; Kawahito, S. The dynamic photometric stereo method using a multi-tap CMOS image sensor. Sensors 2018, 18, 786.

- Lu, L.; Qi, L.; Luo, Y.; Jiao, H.; Dong, J. Three-dimensional reconstruction from single image base on combination of CNN and multi-spectral photometric stereo. Sensors 2018, 18, 764.

- Koppal, S.J. Lambertian reflectance. In Computer Vision: A Reference Guide; Ikeuchi, K., Ed.; Springer: Boston, MA, USA, 2014; pp. 441–443. ISBN 978-0-387-31439-6.

- Hayakawa, H. Photometric stereo under a light source with arbitrary motion. JOSA A 1994, 11, 3079–3089.

- Coleman, E.N.; Jain, R. Obtaining 3-dimensional shape of textured and specular surfaces using four-source photometry. Comput. Graph. Image Process. 1982, 18, 309–328.

- Barsky, S.; Petrou, M. The 4-source photometric stereo technique for three-dimensional surfaces in the presence of highlights and shadows. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1239–1252.

- Chandraker, M.; Agarwal, S.; Kriegman, D. ShadowCuts: Photometric stereo with shadows. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 18–23 June 2007; pp. 1–8.

- Wu, T.-P.; Tang, C.-K. Photometric stereo via expectation maximization. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 546–560.

- Verbiest, F.; Van Gool, L. Photometric stereo with coherent outlier handling and confidence estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 24–26 June 2008; pp. 1–8.

- Mukaigawa, Y.; Ishii, Y.; Shakunaga, T. Analysis of photometric factors based on photometric linearization. JOSA A 2007, 24, 3326–3334.

- Sunkavalli, K.; Zickler, T.; Pfister, H. Visibility subspaces: Uncalibrated photometric stereo with shadows. In Proceedings of the European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 251–264.

- Yu, C.; Seo, Y.; Lee, S.W. Photometric stereo from maximum feasible lambertian reflections. In Proceedings of the European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; pp. 115–126.

- Miyazaki, D.; Ikeuchi, K. Photometric stereo under unknown light sources using robust SVD with missing data. In Proceedings of the IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 4057–4060.

- Wu, L.; Ganesh, A.; Shi, B.; Matsushita, Y.; Wang, Y.; Ma, Y. Robust photometric stereo via low-rank matrix completion and recovery. In Proceedings of the Asian Conference on Computer Vision, Queenstown, New Zealand, 8–12 November 2010; pp. 703–717.

- Ikehata, S.; Wipf, D.; Matsushita, Y.; Aizawa, K. Robust photometric stereo using sparse regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 318–325.

- Oh, T.-H.; Tai, Y.-W.; Bazin, J.-C.; Kim, H.; Kweon, I.S. Partial sum minimization of singular values in robust PCA: Algorithm and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 744–758.

- Nicodemus, F.E. Directional reflectance and emissivity of an opaque surface. Appl. Opt. 1965, 4, 767–775.

- Mallick, S.P.; Zickler, T.E.; Kriegman, D.J.; Belhumeur, P.N. Beyond Lambert: Reconstructing specular surfaces using color. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 619–626.

- Sato, Y.; Ikeuchi, K. Temporal-color space analysis of reflection. JOSA A 1994, 11, 2990–3002.

- Chen, L.; Zheng, Y.; Shi, B.; Subpa-asa, A.; Sato, I. A Microfacet-based model for photometric stereo with general isotropic reflectance. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 1.

- Shi, B.; Tan, P.; Matsushita, Y.; Ikeuchi, K. Bi-polynomial modeling of low-frequency reflectances. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1078–1091.

- Georghiades, A.S. Incorporating the torrance and sparrow model of reflectance in uncalibrated photometric stereo. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 816–823.

- Tagare, H.D.; de Figueiredo, R.J.P. A theory of photometric stereo for a class of diffuse non-Lambertian surfaces. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 133–152.

- Chung, H.-S.; Jia, J. Efficient photometric stereo on glossy surfaces with wide specular lobes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Wu, Z.; Tan, P. Calibrating photometric stereo by holistic reflectance symmetry analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1498–1505.

- Ikehata, S.; Aizawa, K. Photometric stereo using constrained bivariate regression for general isotropic surfaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2187–2194.

- Alldrin, N.G.; Kriegman, D.J. Toward reconstructing surfaces with arbitrary isotropic reflectance: A stratified photometric stereo approach. In Proceedings of the IEEE International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–20 October 2007; pp. 1–8.

- Alldrin, N.; Zickler, T.; Kriegman, D. Photometric stereo with non-parametric and spatially-varying reflectance. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 24–26 June 2008; pp. 1–8.

- Shi, B.; Tan, P.; Matsushita, Y.; Ikeuchi, K. Elevation angle from reflectance monotonicity: Photometric stereo for general isotropic reflectances. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 455–468.

- Lu, F.; Chen, X.; Sato, I.; Sato, Y. SymPS: BRDF symmetry guided photometric stereo for shape and light source estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 221–234.

- Hertzmann, A.; Seitz, S.M. Shape and materials by example: A photometric stereo approach. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003.

- Hui, Z.; Sankaranarayanan, A.C. Shape and spatially-varying reflectance estimation from virtual exemplars. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2060–2073.

- Enomoto, K.; Waechter, M.; Kutulakos, K.N.; Matsushita, Y. Photometric stereo via discrete hypothesis-and-test search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 2308–2316.

- Herbort, S.; Wöhler, C. An introduction to image-based 3D surface reconstruction and a survey of photometric stereo methods. 3D Research 2011, 2, 4.

- Ackermann, J.; Goesele, M. A survey of photometric stereo techniques. Found. Trends® Comput. Graph. Vis. 2015, 9, 149–254.

- Shi, B.; Mo, Z.; Wu, Z.; Duan, D.; Yeung, S.-K.; Tan, P. A benchmark dataset and evaluation for non-lambertian and uncalibrated photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 271–284.

- Santo, H.; Samejima, M.; Sugano, Y.; Shi, B.; Matsushita, Y. Deep photometric stereo network. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 501–509.

- Chen, G.; Han, K.; Wong, K.-Y.K. PS-FCN: A flexible learning framework for photometric stereo. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Ikehata, S. CNN-PS: CNN-based photometric stereo for general non-convex surfaces. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Chen, G.; Han, K.; Shi, B.; Matsushita, Y.; Wong, K.-Y.K. Self-calibrating deep photometric stereo networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 8731–8739.

- Li, J.; Robles-Kelly, A.; You, S.; Matsushita, Y. Learning to minify photometric stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–21 June 2019; pp. 7560–7568.

- Taniai, T.; Maehara, T. Neural inverse rendering for general reflectance photometric stereo. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 4857–4866.

- Zheng, Q.; Jia, Y.; Shi, B.; Jiang, X.; Duan, L.; Kot, A. SPLINE-Net: Sparse photometric stereo through lighting interpolation and normal estimation networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 8548–8557.

- Wu, T.-P.; Tang, K.-L.; Tang, C.-K.; Wong, T.-T. Dense photometric stereo: A Markov random field approach. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1830–1846.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Shafer, S.A. Using color to separate reflection components. Color Res. Appl. 1985, 10, 210–218.

- Torrance, K.E.; Sparrow, E.M. Theory for off-specular reflection from roughened surfaces. JOSA 1967, 57, 1105–1114.

- Ward, G.J. Measuring and modeling anisotropic reflection. ACM SIGGRAPH Comput. Graph. 1992, 26, 265–272.

- Goldman, D.B.; Curless, B.; Hertzmann, A.; Seitz, S.M. Shape and spatially-varying BRDFs from photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1060–1071.

- Matusik, W.; Pfister, H.; Brand, M.; McMillan, L. A data-driven reflectance model. ACM Trans. Graph. 2003, 22, 759–769.

- Hartmann, W.; Galliani, S.; Havlena, M.; Van Gool, L.; Schindler, K. Learned Multi-patch Similarity. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1595–1603.

- Wiles, O.; Zisserman, A. SilNet: Single- and multi-view reconstruction by learning from silhouettes. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017.

- Aittala, M.; Durand, F. Burst image deblurring using permutation invariant convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 748–764.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Jakob, W. Mitsuba—Physically Based Renderer. Available online: https://www.mitsuba-renderer.org/download.html (accessed on 25 September 2020).

- Johnson, M.K.; Adelson, E.H. Shape estimation in natural illumination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2553–2560.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32; Curran Associates, Inc.: Vancouver, BC, Canada, 8–14 December 2019; pp. 8026–8037.

- Papadhimitri, T.; Favaro, P. A closed-form, consistent and robust solution to uncalibrated photometric stereo via local diffuse reflectance maxima. Int. J. Comput. Vis. 2014, 107, 139–154.

- Lu, F.; Matsushita, Y.; Sato, I.; Okabe, T.; Sato, Y. Uncalibrated photometric stereo for unknown isotropic reflectances. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1490–1497.

- Shi, B.; Matsushita, Y.; Wei, Y.; Xu, C.; Tan, P. Self-calibrating photometric stereo. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1118–1125.

- Alldrin, N.G.; Mallick, S.P.; Kriegman, D.J. Resolving the generalized bas-relief ambiguity by entropy minimization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 18–23 June 2007; pp. 1–7.

| Method | Ball | Cat | Pot1 | Bear | Pot2 | Buddha | Goblet | Reading | Cow | Harvest | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 2.84 | 5.71 | 6.65 | 6.87 | 7.03 | 7.88 | 8.67 | 12.85 | 6.39 | 15.62 | 8.05 |
| CNN-PS [42] | 2.20 | 4.60 | 5.40 | 4.10 | 6.00 | 7.90 | 7.30 | 12.60 | 8.00 | 14.00 | 7.20 |
| HS17 [35] | 1.33 | 4.88 | 5.16 | 5.58 | 6.41 | 8.48 | 7.57 | 12.08 | 8.23 | 15.81 | 7.55 |
| PS-FCN [41] | 2.82 | 6.16 | 7.13 | 7.55 | 7.25 | 7.91 | 8.60 | 13.33 | 7.33 | 15.85 | 8.39 |
| TM18 [45] | 1.47 | 5.44 | 6.09 | 5.79 | 7.76 | 10.36 | 11.47 | 11.03 | 6.32 | 22.59 | 8.83 |
| DPSN [40] | 2.02 | 6.54 | 7.05 | 6.31 | 7.86 | 12.68 | 11.28 | 15.51 | 8.01 | 16.86 | 9.41 |
| EW20 [36] | 1.58 | 6.30 | 6.67 | 6.38 | 7.26 | 13.69 | 11.42 | 15.49 | 7.80 | 18.74 | 9.53 |
| ST14 [24] | 1.74 | 6.12 | 6.51 | 6.12 | 8.78 | 10.60 | 10.09 | 13.63 | 13.93 | 25.44 | 10.30 |
| IA14 [29] | 3.34 | 6.74 | 6.64 | 7.11 | 8.77 | 10.47 | 9.71 | 14.19 | 13.05 | 25.95 | 10.60 |
| GC10 [52] | 3.21 | 8.22 | 8.53 | 6.62 | 7.90 | 14.85 | 14.22 | 19.07 | 9.55 | 27.84 | 12.00 |
| AZ08 [31] | 2.71 | 6.53 | 7.23 | 5.96 | 11.03 | 12.54 | 13.93 | 14.17 | 21.48 | 30.50 | 12.61 |
| WG10 [17] | 2.06 | 6.73 | 7.18 | 6.50 | 13.12 | 10.91 | 15.70 | 15.39 | 25.89 | 30.01 | 13.35 |
| Least Squares [1] | 4.10 | 8.41 | 8.89 | 8.39 | 14.65 | 14.92 | 18.50 | 19.80 | 25.60 | 30.62 | 15.39 |
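
The Average column appears to be the arithmetic mean of the ten per-object values in each row. A minimal sketch, assuming that reading, recomputes it for the "Ours" row of the table above; the helper name `row_average` and the dictionary layout are illustrative and not taken from the paper.

```python
# Per-object values copied from the "Ours" row of the table above.
ours = {
    "Ball": 2.84, "Cat": 5.71, "Pot1": 6.65, "Bear": 6.87, "Pot2": 7.03,
    "Buddha": 7.88, "Goblet": 8.67, "Reading": 12.85, "Cow": 6.39,
    "Harvest": 15.62,
}


def row_average(values):
    """Arithmetic mean of a row's per-object values, rounded to two decimals.

    Assumption: the Average column is an unweighted mean over the ten objects.
    """
    values = list(values)
    return round(sum(values) / len(values), 2)


average = row_average(ours.values())
print(f"Recomputed average for 'Ours': {average:.2f}")
```

The same helper can be applied to any other row to check its reported average, keeping in mind that rows quoted from other papers may have been rounded before averaging.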

| Method | Ball | Cat | Pot1 | Bear | Pot2 | Buddha | Goblet | Reading | Cow | Harvest | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 2.75 | 9.35 | 7.73 | 5.45 | 6.96 | 8.88 | 11.24 | 14.87 | 6.72 | 16.77 | 9.07 |
| SDPS-Net [43] | 2.77 | 8.06 | 8.14 | 6.89 | 7.50 | 8.97 | 11.91 | 14.90 | 8.48 | 17.43 | 9.51 |
| UPS-FCN [41] | 6.62 | 14.68 | 13.98 | 11.23 | 14.19 | 15.87 | 20.72 | 23.26 | 11.91 | 27.79 | 16.02 |
| LC18 [33] | 9.30 | 12.60 | 12.40 | 10.90 | 15.70 | 19.00 | 18.30 | 22.30 | 15.00 | 28.00 | 16.30 |
| PF14 [61] | 4.77 | 9.54 | 9.51 | 9.07 | 15.90 | 14.92 | 29.93 | 24.18 | 19.53 | 29.21 | 16.66 |
| WT13 [28] | 4.39 | 36.55 | 9.39 | 6.42 | 14.52 | 13.19 | 20.57 | 58.96 | 19.75 | 55.51 | 23.93 |
| LM13 [62] | 22.43 | 25.01 | 32.82 | 15.44 | 20.57 | 25.76 | 29.16 | 48.16 | 22.53 | 34.45 | 27.63 |
| SM10 [63] | 8.90 | 19.84 | 16.68 | 11.98 | 50.68 | 15.54 | 48.79 | 26.93 | 22.73 | 73.86 | 29.59 |
| AM07 [64] | 7.27 | 31.45 | 18.37 | 16.81 | 49.16 | 32.81 | 46.54 | 53.65 | 54.72 | 61.70 | 37.25 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, C.; Lee, S.W. Deep Photometric Stereo Network with Multi-Scale Feature Aggregation. Sensors 2020, 20, 6261. https://doi.org/10.3390/s20216261
