A Multimodal Real-Time Feedback Platform Based on Spoken Interactions for Remote Active Learning Support

Abstract

1. Introduction

2. Related Work

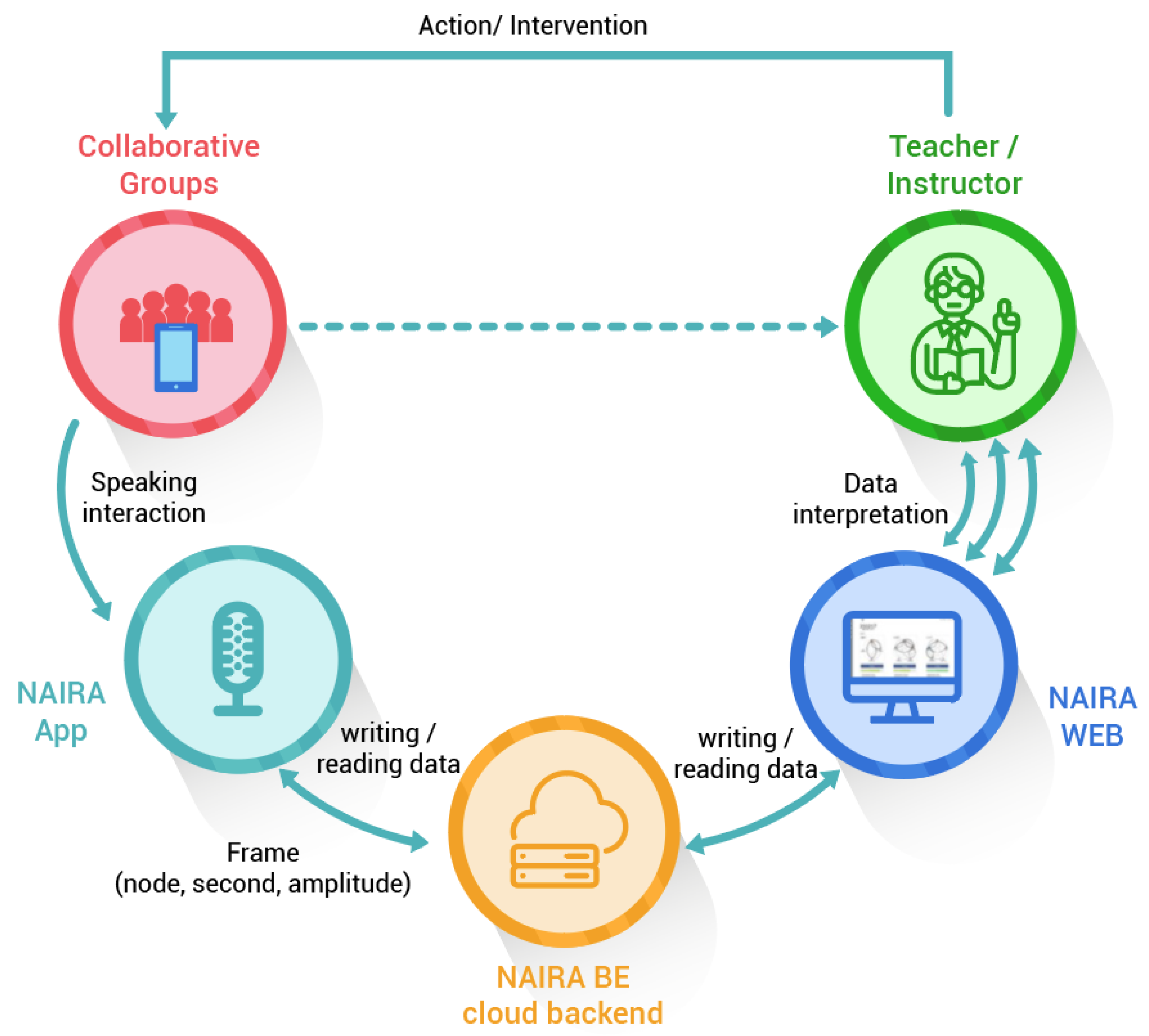

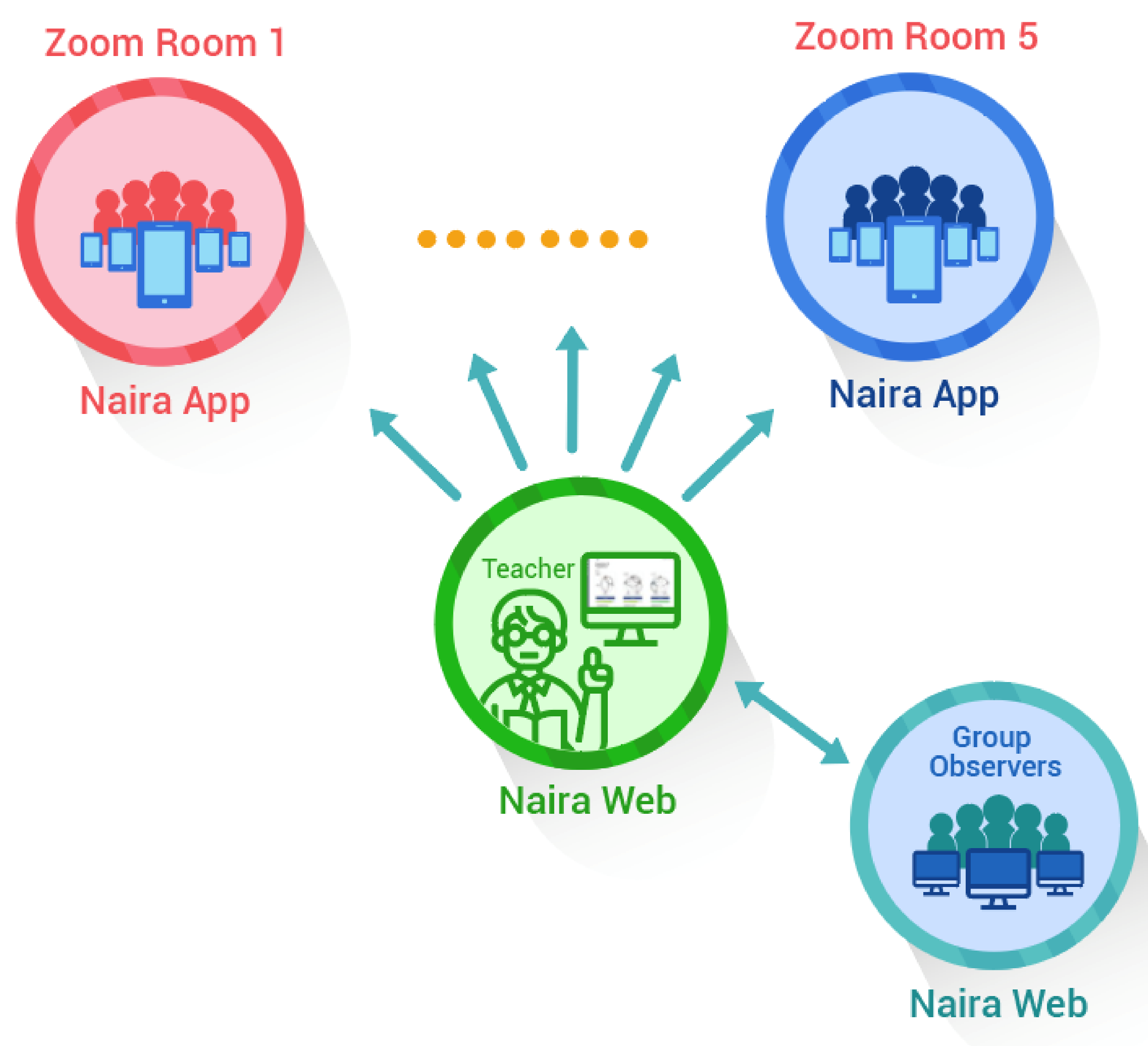

3. MMLA Real-Time Feedback Platform Overview

3.1. NAIRA App

- NAIRA App—Android version. It was developed using the Android Studio IDE and the Java programming language. Data collection from the device microphone relies on the fast Fourier transform (FFT), an efficient algorithm for computing the discrete Fourier transform, which converts signals between the time (or spatial) domain and the frequency domain. Because the input here is a periodic, finite, and discrete signal, the Fourier transform reduces to computing a discrete set of complex amplitudes. The microphone signal is read through Android's AudioRecord class, which manages the application's audio input resources and can analyze audio directly from the hardware in real time. When the activity starts, amplitude data are captured from the microphone every 200 milliseconds (five captures per second). Each capture analyzes the samples stored in the input buffer, which are read as 16-bit short values. These values are squared and reduced to a numerical amplitude in the range [0, 32767], whose upper bound is the maximum value of the 16-bit short type. If the resulting amplitude lies within the range of operability established for the user, the frame is sent to the cloud. A frame consists of the user's number, the second in which the capture was recorded, and the amplitude of that capture. Data collection continues until the activity is ended centrally, at which point the app calls AudioRecord's stop() method and displays the end of the activity.
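The following minimal Python sketch shows the per-capture logic described above. It is not the app's Java code. It assumes that each capture reduces its buffer of signed 16-bit samples to a root-mean-square (RMS) amplitude clamped to [0, 32767]. It also assumes that the user's range of operability is a hypothetical `(low, high)` pair obtained during calibration. By Parseval's theorem, the same mean-square value could be computed from the FFT coefficients, so the sketch uses the simpler time-domain form. The function names and frame field names are illustrative.

```python
import math
from typing import Optional, Sequence

MAX_SHORT = 32767  # largest positive value of a signed 16-bit sample


def buffer_amplitude(samples: Sequence[int]) -> int:
    """RMS amplitude of one buffer of signed 16-bit PCM samples, clamped to [0, 32767]."""
    if not samples:
        return 0
    mean_square = sum(s * s for s in samples) / len(samples)  # square each sample
    return min(MAX_SHORT, round(math.sqrt(mean_square)))


def make_frame(user: int, second: int, samples: Sequence[int],
               operability: tuple[int, int]) -> Optional[dict]:
    """Build the frame sent to the cloud, or return None if out of the user's range.

    `operability` is a hypothetical (low, high) calibration range.
    """
    amplitude = buffer_amplitude(samples)
    low, high = operability
    if not (low <= amplitude <= high):
        return None  # outside the calibrated range: nothing is uploaded
    return {"user": user, "second": second, "amplitude": amplitude}
```

When `make_frame` returns `None`, the capture is not uploaded, which mirrors the filtering step in the text. A peak absolute value would satisfy the same [0, 32767] bound. RMS is used here only because it is less sensitive to isolated spikes.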

- NAIRA App—iOS version. It was developed for iOS versions later than 9, using the Swift 5 programming language in Xcode 11. The only programming difference from the Android version is the real-time audio data collection process, because in this case the AudioKit library was used to obtain the signal amplitude. AudioKit provides the amplitude through an object of type AKFrequencyTracker configured with a null audio output, which tells the system that the data will be captured in real time and not stored on the device. When the activity starts, the property self.tracker.amplitude is read every 200 milliseconds; it returns an amplitude value in the range [0, 1]. This value is rescaled to the range [0, 32767] so that it is comparable to the value obtained with the FFT in the Android app. If the amplitude lies within the range of operability calibrated for the individual, it is sent to the Firebase database. The loop repeats until the activity is ended centrally, which is signaled by a change in a Boolean variable in the database.
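The iOS rescaling and the centrally stopped capture loop can be sketched in Python as follows. `read_amplitude`, `send`, and `activity_finished` are hypothetical callbacks. They stand in for reading `self.tracker.amplitude`, writing to Firebase, and checking the Boolean flag in the database. The clamp guards against readings slightly outside [0, 1]. This is an assumption, not documented AudioKit behavior.

```python
import time
from typing import Callable

MAX_SHORT = 32767  # same upper bound as the Android amplitudes


def scale_amplitude(normalized: float) -> int:
    """Map a normalized amplitude in [0, 1] onto the Android range [0, 32767]."""
    clamped = min(1.0, max(0.0, normalized))  # defensive clamp (assumption)
    return round(clamped * MAX_SHORT)


def capture_loop(read_amplitude: Callable[[], float],
                 send: Callable[[int], None],
                 activity_finished: Callable[[], bool],
                 operability: tuple[int, int],
                 period_s: float = 0.2) -> int:
    """Poll every period_s seconds until the central stop flag is set.

    Returns the number of amplitudes that were sent.
    """
    low, high = operability
    sent = 0
    while not activity_finished():
        amplitude = scale_amplitude(read_amplitude())
        if low <= amplitude <= high:  # only in-range captures are uploaded
            send(amplitude)
            sent += 1
        time.sleep(period_s)
    return sent
```

Passing the stop condition as a callback keeps the loop independent of any particular database client, so the same logic can be exercised offline with fake readings.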

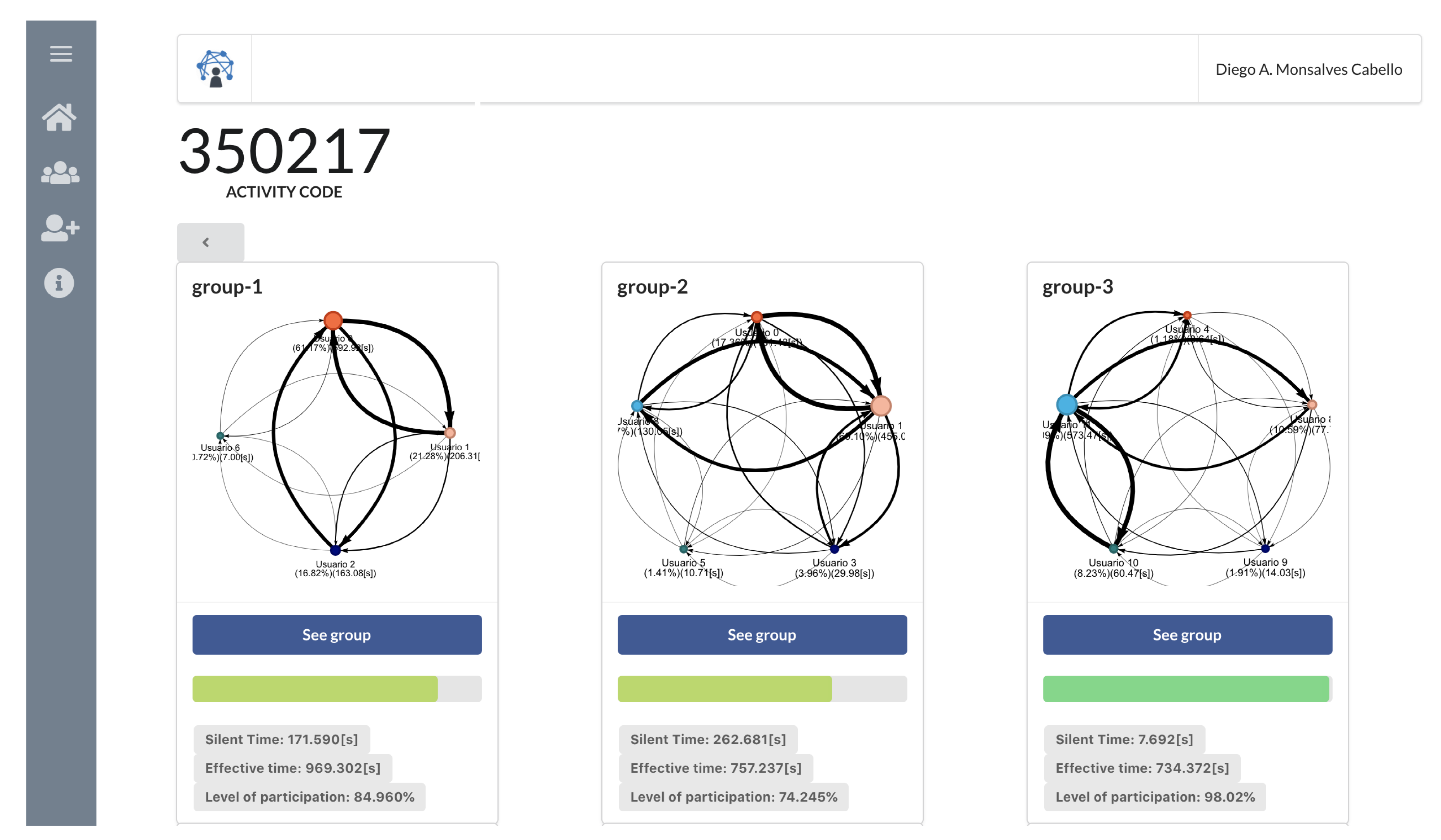

3.2. NAIRA Web

- 1.

- Influence graph, which depicts the number, duration, and sequence of spoken interactions within a group (Figure 2a);

- 2.

- Silent time bar, which goes from full to empty and from green to red as the time with no streams from any of the group members increases;

- 3.

- Speaking time distribution pie chart (Figure 2b);

- 4.

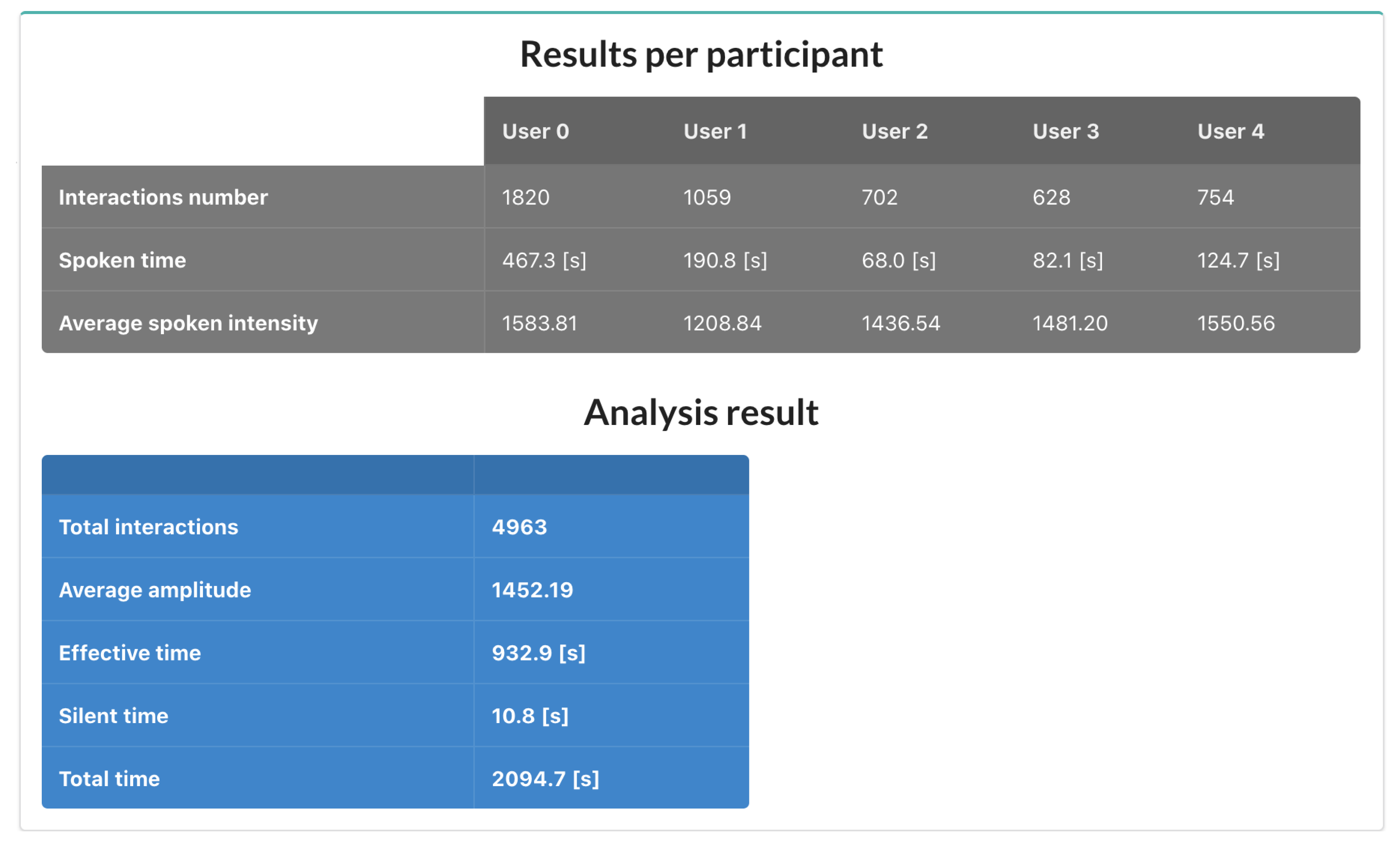

- Table with the total number and duration of the interactions of the group members as well as collaboration metrics (Figure 3).
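The silent time bar listed above can be modeled as a mapping from the seconds elapsed since the last frame of any group member to a fill level and a color. The 30 s saturation point and the linear green-to-red blend are assumptions for illustration. They are not values reported for NAIRA Web.

```python
def silent_bar(silence_s: float, saturation_s: float = 30.0):
    """Return (fill, (r, g, b)) for the silent time bar.

    fill goes from 1.0 (someone just spoke) to 0.0 (silence >= saturation_s).
    The color blends linearly from green (0, 255, 0) to red (255, 0, 0).
    saturation_s is a hypothetical parameter.
    """
    fraction = min(1.0, max(0.0, silence_s / saturation_s))  # 0 = active, 1 = silent
    fill = 1.0 - fraction
    red = round(255 * fraction)
    green = 255 - red  # red and green always sum to 255
    return fill, (red, green, 0)
```

A group that keeps streaming therefore shows a full green bar. A group that has gone quiet for longer than the saturation time shows an empty red bar, which matches the behavior described for the dashboard.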

3.3. Influence Graphs, Silent Time Bar Indicators, and Speaking Time Distribution

3.3.1. Influence Graph

- The vertex set $V$ is the set of participants. In this case, there is no restriction on the number of students/participants per group.

- The (directed) edge set $E \subseteq V \times V$ represents the communicational relationships between the participants. An edge $(a, b) \in E$ means that participant $b$ is replying to interventions from participant $a$, so that $a$ exerts a certain influence on $b$.

- $w: E \to \mathbb{N}$ is a weight function, such that $w(a, b)$ denotes the total number of interventions of $a$ replied to by $b$ during the whole activity. We denote by $w_t(a, b)$ the number of such interventions until time $t$.

- $f: V \to \mathbb{R}_{\geq 0}$ is a labeling function, such that $f(a)$ denotes the total speaking time of participant $a$ during the whole activity. We denote by $f_t(a)$ the speaking time of $a$ until time $t$.
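The influence graph can be built incrementally from the stream of frames. The sketch below is a hypothetical interpretation, not NAIRA's documented algorithm. It treats a run of consecutive frames from one participant as a single intervention. An intervention by b that immediately follows one by a counts as b replying to a, which increments the edge weight w(a, b). Each 200 ms capture is credited as 200 ms of speaking time f(a). Passing a time `t` restricts the computation to frames up to t, which gives the time-indexed values w_t and f_t.

```python
from collections import defaultdict
from typing import Iterable, Optional

FRAME_MS = 200  # assumed speech duration credited to one capture


def influence_graph(frames: Iterable[tuple[float, str]], t: Optional[float] = None):
    """Return (V, E, w, f) from time-ordered (timestamp_s, participant) frames.

    w maps an edge (a, b) to the number of times b replied to a.
    f maps a participant to speaking time in seconds.
    """
    w: dict[tuple[str, str], int] = defaultdict(int)
    captures: dict[str, int] = defaultdict(int)
    previous: Optional[str] = None
    for timestamp, speaker in frames:
        if t is not None and timestamp > t:
            break  # frames are time-ordered, so later ones are excluded from w_t, f_t
        captures[speaker] += 1
        if previous is not None and speaker != previous:
            w[(previous, speaker)] += 1  # edge (a, b): b replies to a
        previous = speaker
    f = {p: n * FRAME_MS / 1000 for p, n in captures.items()}  # seconds
    return set(f), set(w), dict(w), f
```

For example, the speaker sequence A, A, B, A, C, C produces the edges (A, B), (B, A), and (A, C), each with weight 1. A is credited with 0.6 s of speaking time.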

3.3.2. Silent Time Bar

- Silent time: A numerical value obtained when data processing detects that the time between two consecutive input frames exceeds five seconds. This time is not added to any node, because it is considered silence in the activity.

- Effective time: It represents the speaking time of the participants. It is obtained by adding up the processed times whenever more than two interventions of the same node are detected. Note that the sum of the effective times is not the total time of the activity, because silent time is not attributed to any participant.

- Level of participation: A metric that represents the participation of a group of participants. It is based on the theory proposed by Bruffee [33], which states that when people collaborate, they need to talk to share their ideas, and the more they talk, the more they sharpen their thinking skills. It follows from this theory that the group that learns best is one with a high level of synergy and constructive interactions among its participants. This metric is obtained by relating the effective time of the participants in a group to the sum of the silent time and the effective time.
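The sketch below shows one way to compute the three indicators. It reads "relating" as a ratio, so the level of participation is taken to be LP = ET / (ST + ET), where ET is the effective time and ST is the silent time. As a simplifying assumption, every gap of at most 5 s between consecutive frames of the group counts as effective time. The paper's rule based on repeated interventions of the same node is not reproduced here.

```python
from typing import Sequence

SILENCE_THRESHOLD_S = 5.0  # gaps longer than this count as silence


def participation_metrics(timestamps: Sequence[float]) -> dict[str, float]:
    """Silent time, effective time, and level of participation for one group.

    timestamps: time-ordered capture times (s) of frames from any group member.
    Simplification (assumption): every gap of at most 5 s counts as effective time.
    """
    silent = effective = 0.0
    for earlier, later in zip(timestamps, timestamps[1:]):
        gap = later - earlier
        if gap > SILENCE_THRESHOLD_S:
            silent += gap  # silence: not attributed to any node
        else:
            effective += gap
    total = silent + effective
    level = effective / total if total > 0 else 0.0  # LP = ET / (ST + ET)
    return {"silent": silent, "effective": effective, "level": level}
```

Frames at 0, 2, 4, 12, and 13 s give 8 s of silent time (the 4 to 12 s gap) and 5 s of effective time, so the level of participation is 5/13.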

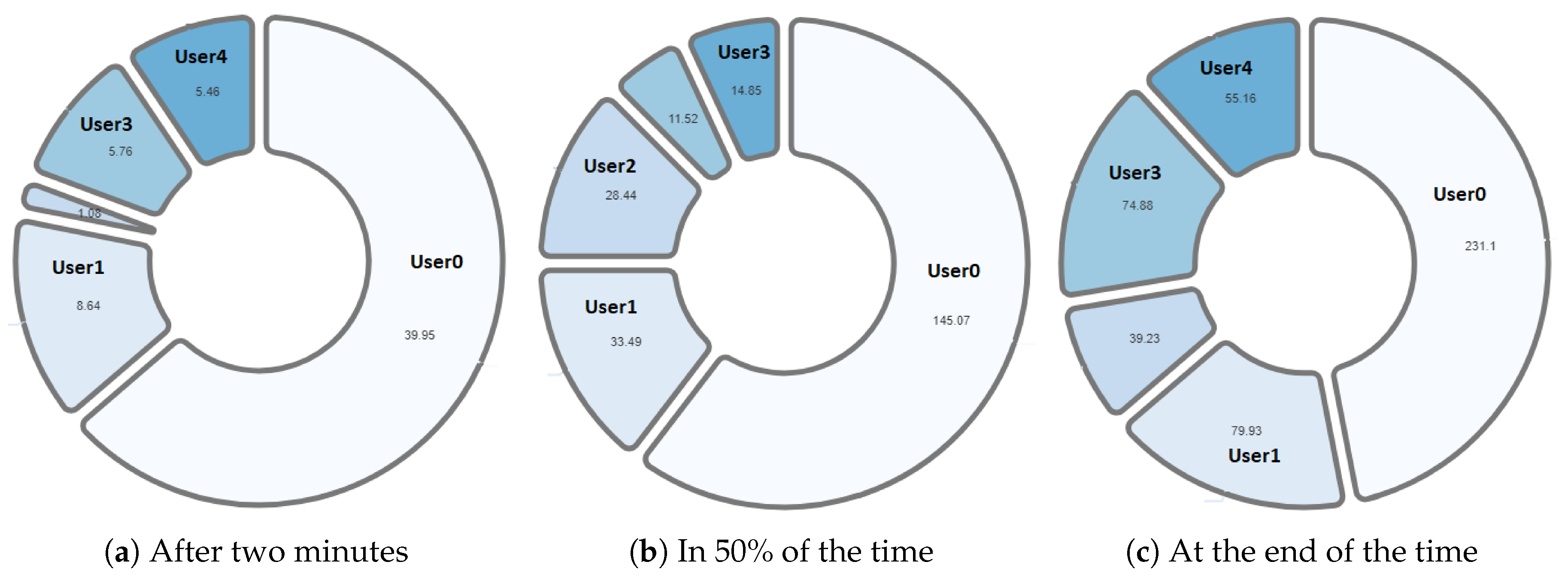

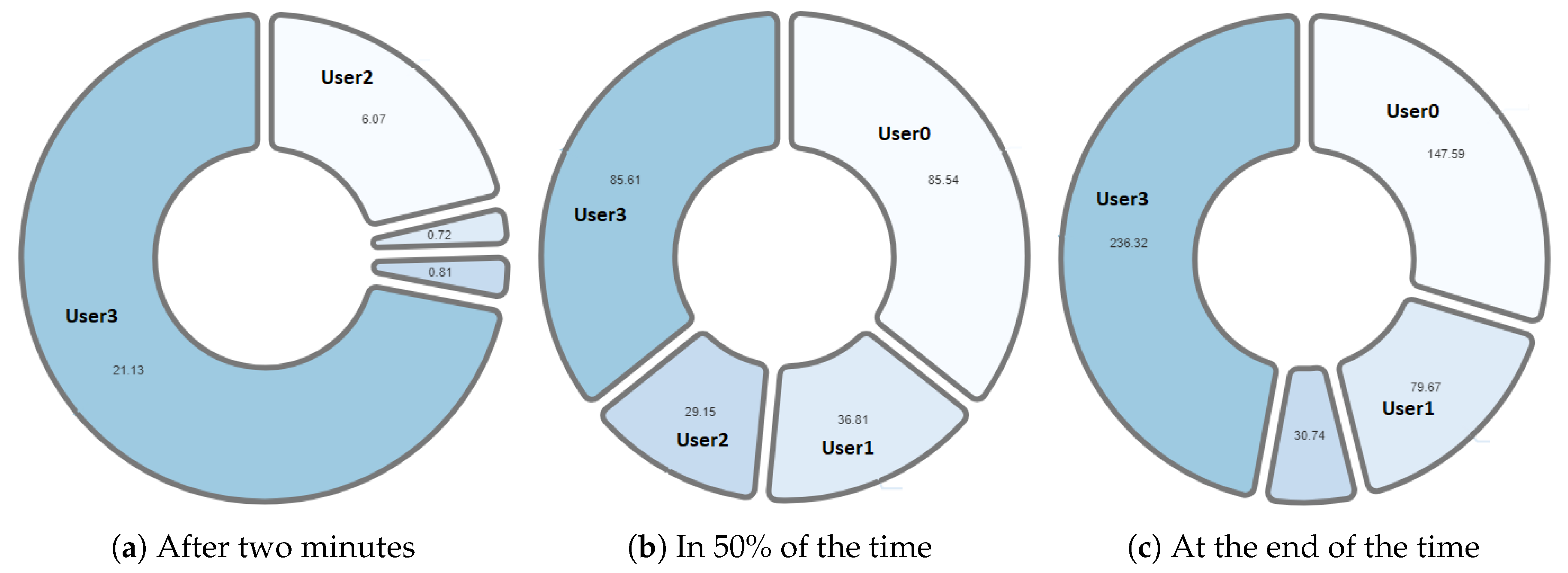

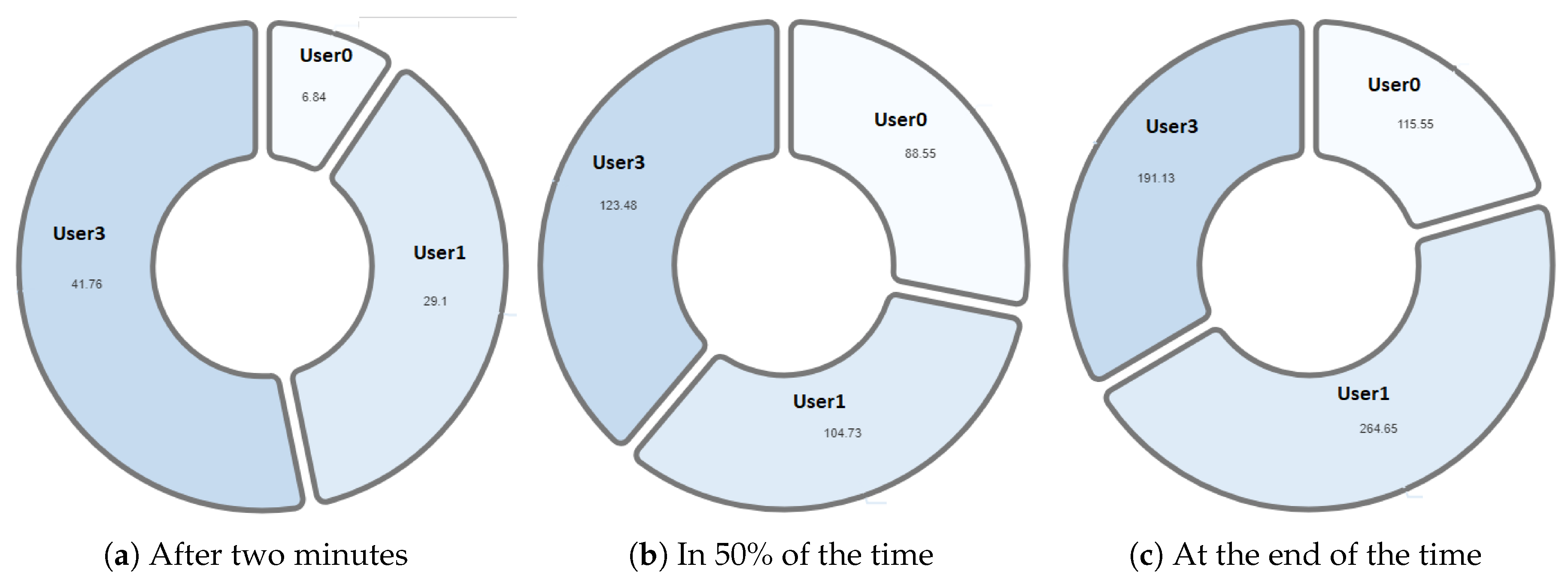

3.3.3. Speaking Time Distribution

- Dominant student: This behavior is identified when a student accounts for a high percentage of the group's speaking time. This can impair the performance of a collaborative activity. Faced with this situation, the teacher must intervene to encourage the participation of other students.

- Passive student: In contrast to the previous case, one or more students show values far below those of the rest of the group members. In this situation, it is also advisable for the teacher to intervene to generate more conversation among the students.

- Balanced group: This is the ideal behavior in collaborative activities; it arises when the chart shows a balanced distribution of the group's speaking time.
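The three patterns can be approximated by comparing each participant's share of the speaking time with an equal share. The factors below are hypothetical thresholds chosen for illustration: dominant above twice the equal share, passive below half of it. In the case study, this judgment was made by the teacher from the pie chart.

```python
from typing import NamedTuple


class Distribution(NamedTuple):
    dominant: list[str]
    passive: list[str]

    @property
    def balanced(self) -> bool:
        """A group is balanced when nobody is flagged as dominant or passive."""
        return not self.dominant and not self.passive


def classify_distribution(speaking_time: dict[str, float],
                          dominant_factor: float = 2.0,
                          passive_factor: float = 0.5) -> Distribution:
    """Flag participants relative to an equal share (hypothetical thresholds)."""
    total = sum(speaking_time.values())
    if total <= 0:
        return Distribution([], list(speaking_time))  # nobody has spoken yet
    equal_share = 1 / len(speaking_time)
    dominant, passive = [], []
    for person, seconds in speaking_time.items():
        share = seconds / total
        if share > dominant_factor * equal_share:
            dominant.append(person)
        elif share < passive_factor * equal_share:
            passive.append(person)
    return Distribution(dominant, passive)
```

In a five-member group, the equal share is 20%, so the dominance cut-off is 40%. A participant holding 93% of the speaking time, as G3-U0 did at one point in the case study, would be flagged as dominant.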

3.4. Cloud Back-End Services

- A Firebase Realtime Database service, for collecting the data streamed by the NAIRA App.

- A Cloud Firestore database, for storing the definitions of users, activities, and groups.

- A Node.js central processing component, for processing the input stream and generating the output values and metrics visualized in NAIRA Web.

4. Case Study

4.1. Case Study Definition

- RQ1: Are the provided indicators and visualizations useful for the teacher to monitor a remote learning activity in real time?

- RQ2: Do NAIRA’s visualizations and data provide insights about how the remote collaborative activity was performed?

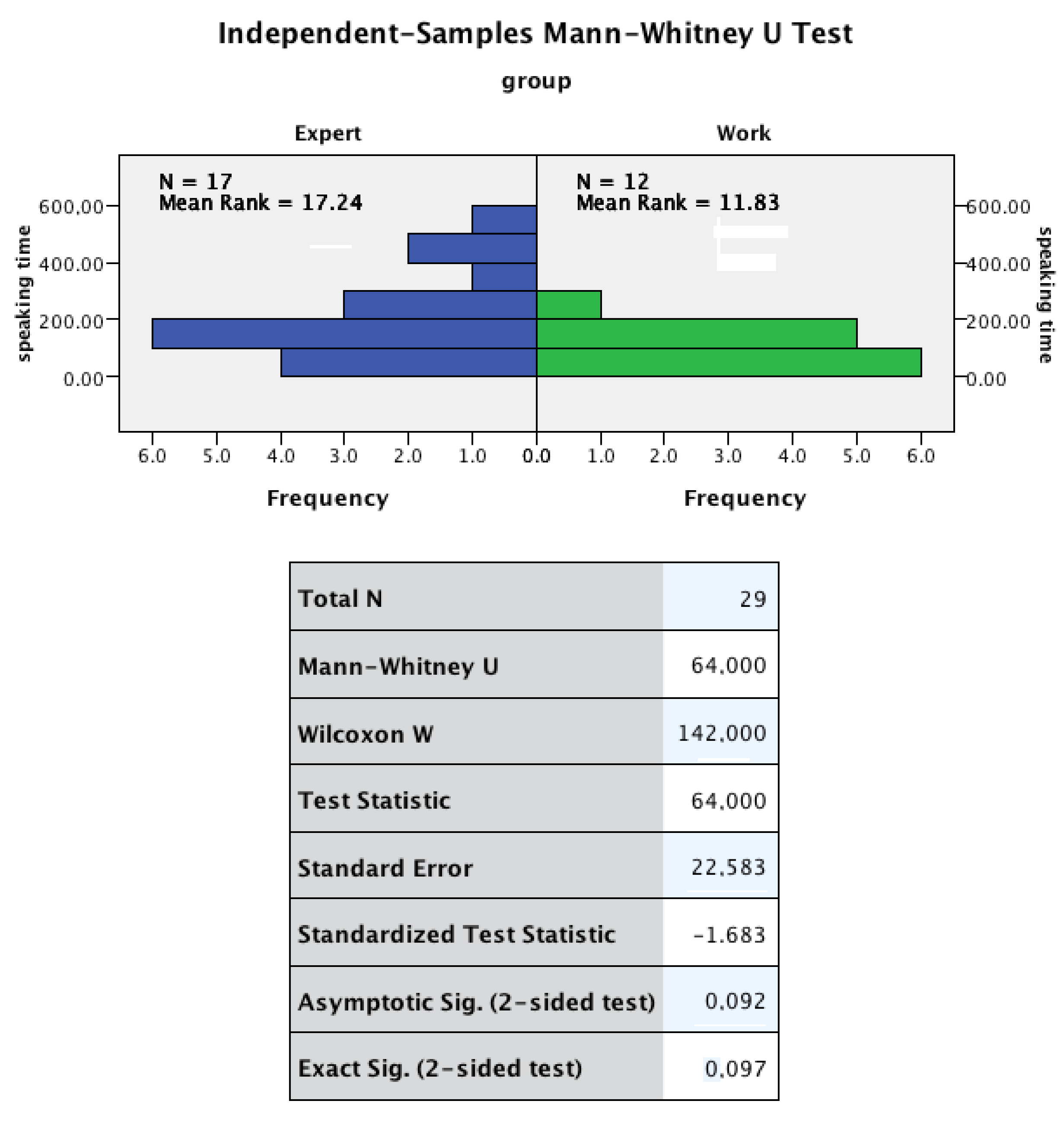

- RQ3: Are there differences in students’ participation between the expert group stage and the working group stage?

- Jigsaw provides a protocol that ensures that communication among the students will produce collaborative teamwork.

- Jigsaw defines when and how the teacher must take action to ensure the efficiency of the learning activity: the teacher must foster communication when students are not interacting and stop disruptive or dominant interactions from one or a set of participants.

- The real-time feedback provided by the platform allows two kinds of situations to be identified. On the one hand, the silent time bar allows the teacher to identify whether a group's interactions are diminishing. On the other hand, the dashboard visualizations for effective time and level of participation allow the identification of either dominant interaction (e.g., students who spoke for far longer or in more instances) or disruptive interaction (e.g., a subset of students who communicated only among themselves).

4.2. Learning Activity Design

- At the start of the session, the problem is presented to all the students, specifying that each role is responsible for acquiring specific knowledge that will contribute to solving the problem. In particular, the problem is to assess an object-oriented design from the perspective of the design principles Single Responsibility, Open-Closed, Liskov Substitution, Interface Segregation, and Dependency Inversion (S.O.L.I.D.) [35].

- As proposed in the Jigsaw methodology, students are randomly assigned to one of five expert groups (one for each S.O.L.I.D. principle). After that, they are again randomly gathered into Jigsaw groups of five students each, in which each member is the expert on one design principle.

- In the expert groups stage, the students must read a definition of the principle and then analyze a design (a class diagram) to determine whether that design follows the principle.

- In the Jigsaw groups stage, each expert must describe the principle and their assessment of the design to the other team members and answer their questions. A group leader is chosen to moderate the activity; the leader's main role is to ensure that all the students present their design principle and to prevent disruptive behavior (and, if it occurs, to alert the teacher).

- If the teacher is alerted by a group leader, or notices from the group's behavior in NAIRA Web, that the activity is not working, the teacher must take action to ensure that the students behave as planned.
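The two random assignments of the Jigsaw design can be sketched as follows. This is one simple scheme, not necessarily the procedure used in the case study. Students are shuffled and dealt round-robin into five expert groups. Jigsaw group k then takes the k-th member of every expert group, so each Jigsaw group has at most one expert per principle. The principle abbreviations and the function name are illustrative.

```python
import random
from typing import Optional, Sequence

PRINCIPLES = ["SRP", "OCP", "LSP", "ISP", "DIP"]  # one expert group per S.O.L.I.D. principle


def jigsaw_assignment(students: Sequence[str], seed: Optional[int] = None):
    """Return (expert_groups, jigsaw_groups) for a randomized Jigsaw activity."""
    rng = random.Random(seed)
    shuffled = list(students)
    rng.shuffle(shuffled)
    # Deal round-robin so expert group sizes differ by at most one.
    experts = {p: shuffled[i::len(PRINCIPLES)] for i, p in enumerate(PRINCIPLES)}
    n_groups = max(len(members) for members in experts.values())
    jigsaw: list[list[tuple[str, str]]] = [[] for _ in range(n_groups)]
    for principle, members in experts.items():
        for k, student in enumerate(members):
            jigsaw[k].append((student, principle))  # k-th expert joins Jigsaw group k
    return experts, jigsaw
```

With 25 students, this yields five Jigsaw groups of five. With fewer students, some Jigsaw groups lack an expert for one principle, which is one reason a teacher may need to adjust the grouping.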

4.3. Case Study Execution

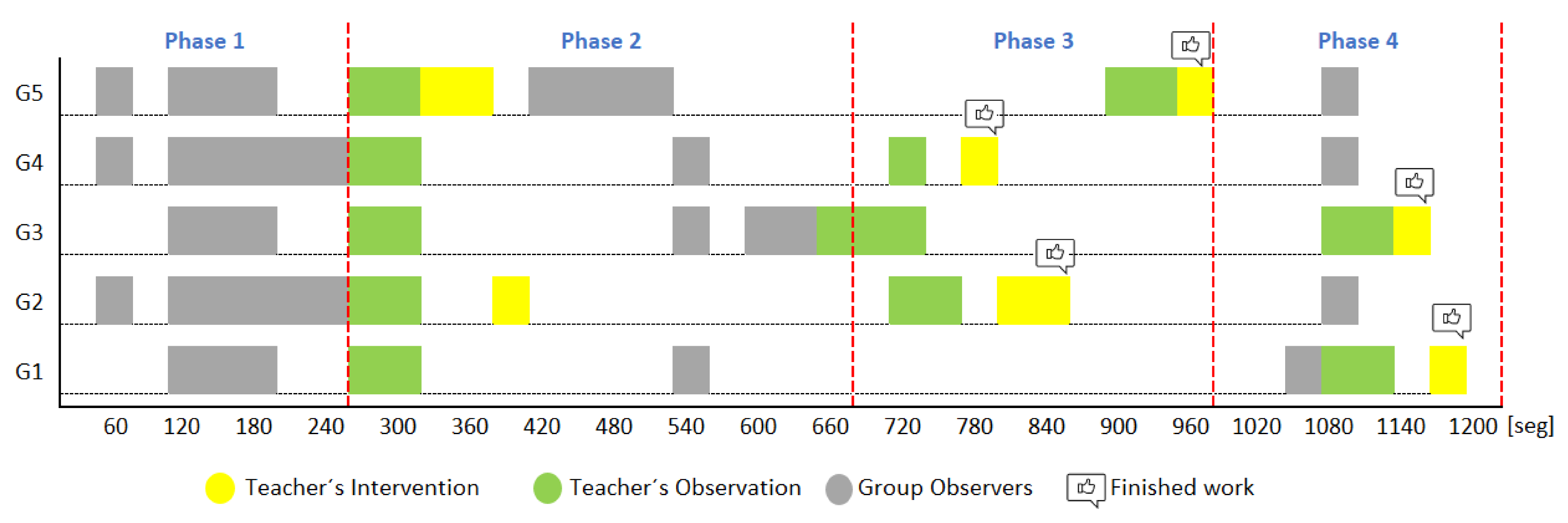

- Phase 1—Initial coordination: This phase lasted four minutes. During this phase, the teacher allowed the students to organize themselves according to the activity instructions and deliberately made no observations or interventions. In the third minute of the activity, both the researchers and the teacher noticed a risky behavior in G2, G4, and G5 (as later shown in Figure 8), when one of the nodes increased considerably in size. Because this could indicate either a student disruptively dominating the interactions or a very active group leader, the teacher and the observers agreed to monitor the potentially disruptive subjects. It is worth noting that User 10 from Group 5 (G5-U10) joined the activity late and had some trouble initializing the data streaming once the activity had already started.

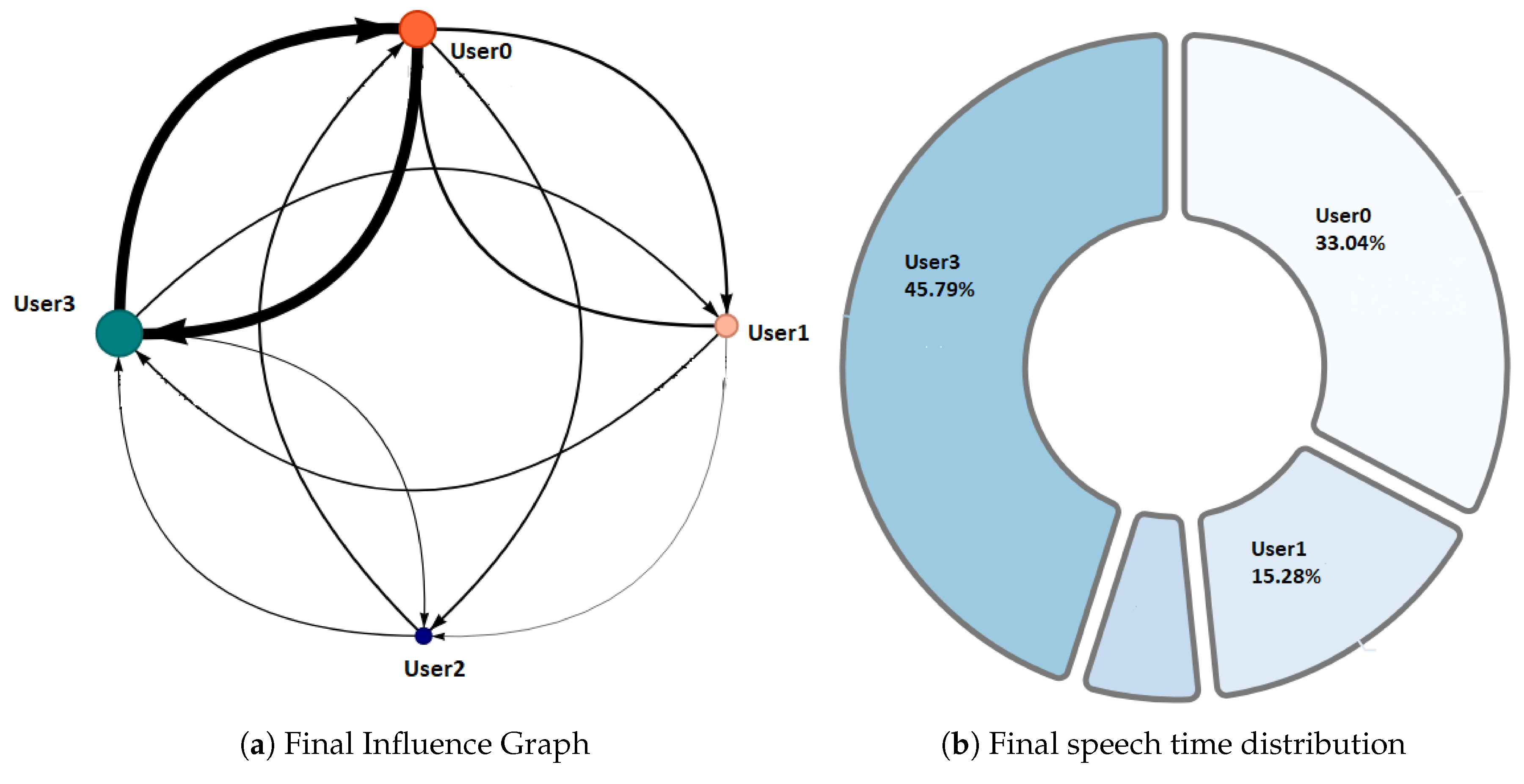

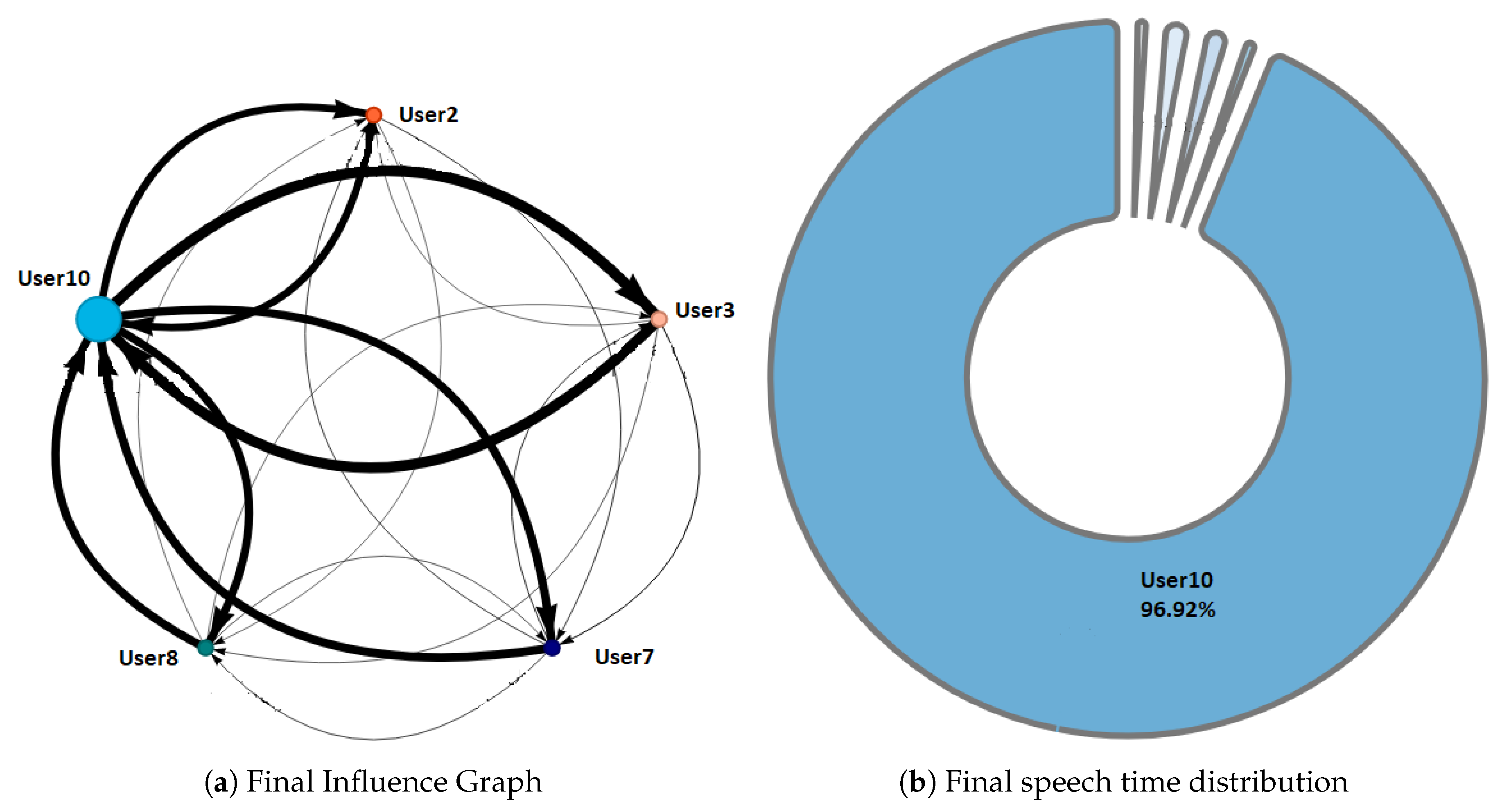

- Phase 2—Development and monitoring: This phase lasted from minute 4 to minute 11 of the activity. At the beginning of this phase, the teacher made his first observations of each group based on the influence graphs and silent time bars shown on the dashboard. As a result, the teacher decided to intervene in G2 and G5 because, compared to the other groups, they appeared very active according to the silent time bar. A very active group shows a green, completely filled bar. This may reflect genuinely intense spoken interaction in the group or technical problems such as microphone feedback, poor voice calibration, or problems in the data streaming. After visiting the individual rooms of G2 and G5, the teacher confirmed that the G2 subjects were highly active, whereas G5 did not seem to have an intense interaction. For some reason, one student (User 10) accessed the NAIRA App with a small time lag with respect to the rest of the G5 students. This caused the group's silent time bar to give the teacher misleading feedback about the initial behavior of G5. It is important to note that, as time passed, the data were adjusted and the information on the silent time bar soon normalized.

Almost at the end of this phase, the teacher noticed that for G3 (see Figure 9c), the node sizes in the influence graph were not balanced compared to the rest of the groups. He complemented this observation by looking at the distribution pie chart (Figure 9a). He observed the pie sections growing dynamically and new ones appearing, which was consistent with the Jigsaw design of students taking turns to present their results. Using both visualizations was very insightful for the teacher, as he could ensure that the students were performing according to the instructions, which kept him from intervening unnecessarily. One of the students (G3-U0) accounted for almost all of his group's speaking time (93%). However, while observing, the teacher saw the distribution in the graph change as another student (G3-U2) began to lead the conversation (see Figure 9b). This feedback helped the teacher confirm that the interactions were happening according to the activity design. It is very difficult (if not impossible) for a teacher to obtain this type of feedback without such applications, and this difficulty remains even when face-to-face rather than remote activities are considered.

At the end of this phase, the feedback given by the platform indicated that all groups had nearly balanced influence graphs. Therefore, if the students followed the work instructions, the groups should have been close to completing the activity.

- Phase 3—Premature closure: This phase took place during minutes 11–13 of the activity. The teacher noted that the silent time bars of G2 and G4 decreased, suggesting that their interactions had ended. He decided to take action: in each group's Zoom room, the members reported that they had finished the activity. The teacher verified which groups had finished and gave them final instructions for completing the exit quiz and survey. This completed the activity monitoring for G2 and G4. Near the end of this phase, the teacher noticed an increase in the silence times of G5. On attending to the group, he confirmed, as in the previous cases, that it had also completed its work. Figure 10 shows the results at the moment G2 finished its work, Figure 11 those of G4, and Figure 12 those of G5.

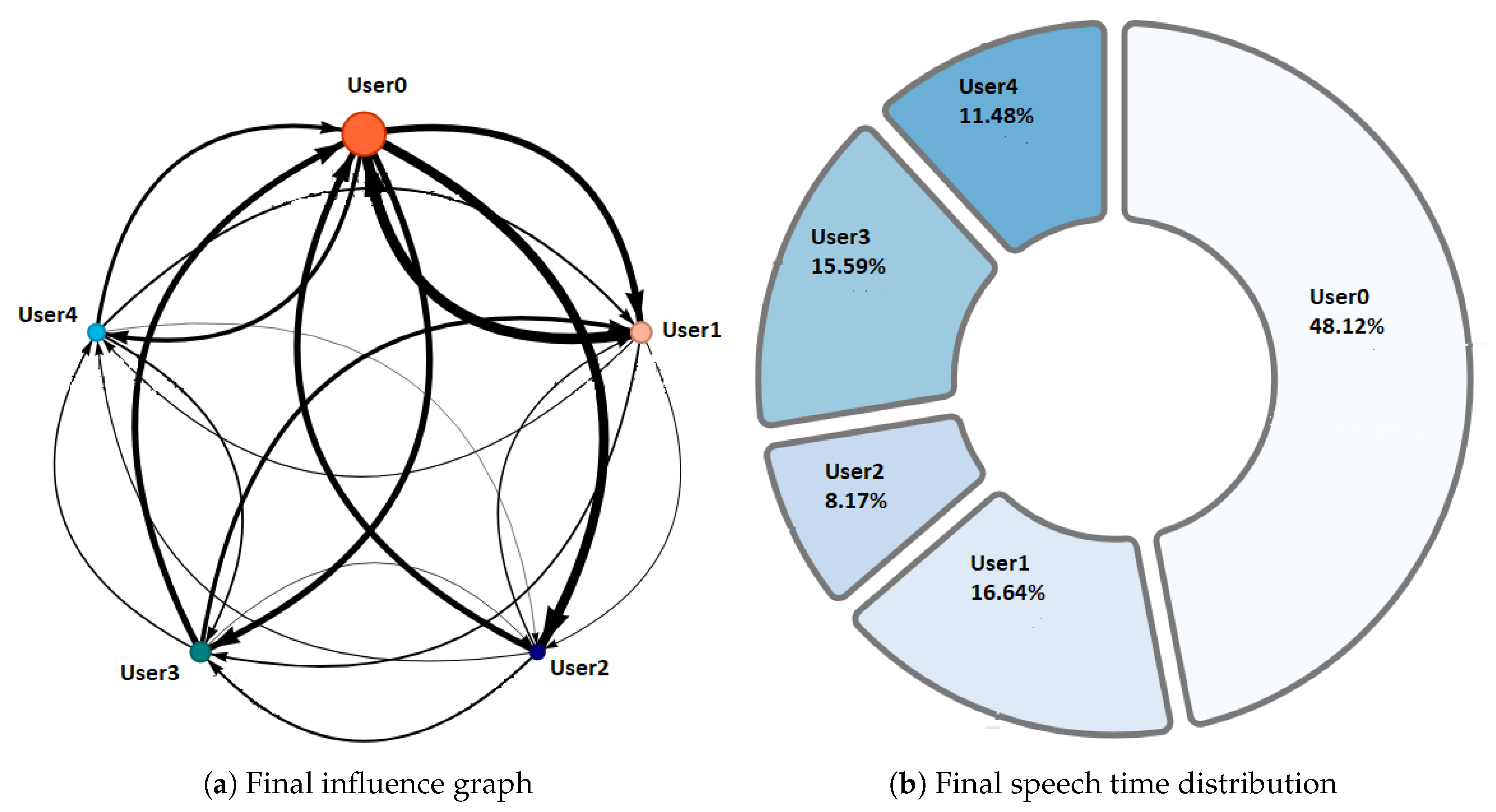

- Phase 4—Closure of the activity: This phase took place during minutes 13–20 (i.e., until the end) of the activity. As with the previous groups, the teacher observed the silent time bars of the remaining groups decreasing. He therefore intervened in G1 and G3 and confirmed that both groups had finished. The teacher repeated the closing instructions and ended the activity when 1200 s had elapsed. Figure 13 shows the results at the moment G1 finished its work, and Figure 14 corresponds to G3.

- Group communication
  - GC1. Group communication was fluid at all times.
  - GC2. A more active role of the leader was required to improve group collaboration.
  - GC3. Our group did not communicate well; it would have been good to receive help from the teacher to coordinate my group’s activity.

- Group members’ contribution
  - MC1. The contribution of the expert on the principle of Single Responsibility was satisfactory.
  - MC2. The expert’s contribution to the Open/Closed principle was satisfactory.
  - MC3. The expert’s contribution to the Liskov Substitution principle was satisfactory.
  - MC4. The expert’s contribution to the Segregation of Interfaces principle was satisfactory.
  - MC5. The expert’s contribution to the Dependency Inversion principle was satisfactory.
  - MC6. The knowledge achieved in the group of experts was satisfactory to me.
  - MC7. My presentation and resolution of doubts in the group was satisfactory to my group.

- Activity design
  - AD1. I consider that the time for the expert group phase was sufficient.
  - AD2. I think the time for the Jigsaw group stage was sufficient.
  - AD3. I think the time for the quiz was sufficient.

5. Results and Discussion

5.1. Quantitative Results and Analysis

5.2. Qualitative Analysis

- Of the seven interventions the teacher performed based on real-time feedback, only one was a false positive, caused by a technical issue in data streaming. In the other six cases, valuable pedagogical actions were taken, such as confirming that a highly interactive group was working on the activity and providing closing instructions to each group.

- The teacher successfully conducted the Jigsaw activity in a remote learning setting, through private virtual classrooms for each group, relying only on the platform's feedback and the information gathered during indicator-triggered interventions.

- The students evaluated the communication among group members and the activity design positively, and, as GC3 suggests, no additional interventions by the teacher were needed. This is important considering that the teacher was guided only by NAIRA Web. As noted, he performed precise interventions during the development and closing of the activity, and the visualizations kept him from performing unneeded (and potentially intrusive) interventions.

- The students perceived the contribution of the team members and the design of the activity positively. According to them, using the NAIRA App did not affect their overall perception of the activity.

- Considering the students' final grades compared with those of a traditional activity, as well as the teacher's experience, the results do not suggest an abnormal execution of the activity.

- In phase 2, supported by two complementary visualizations (the influence graph and the pie chart of the interaction distribution), the teacher realized the following: although the interactions were unbalanced in the influence graph at a given moment, the distribution of interactions in the pie chart was consistent with the behavior expected in the Jigsaw design. In fact, the pie sections grew as subjects successively stepped in to report their findings.

- As each group finished the activity at its own pace, the silent time bar successfully reflected the decline in spoken interactions. This allowed the teacher to assist each group in a timely manner (almost anticipating its needs) with the final actions to close the activity.

- In phase 1, when two groups seemed to be dominated by a single student, the teacher was prompted to track whether this behavior persisted. This is comparable to keeping track of a student speaking loudly in the classroom and checking whether they are effectively working as requested.

- Similarly, in phase 3, the teacher visited highly interactive groups, which turned out to be the balanced groups. This is similar to checking whether bustling groups are enthusiastic about the work or are not working as requested.

- Even the technical problems that were detected were comparable to a student joining a group late. They introduced variability in the expected behavior and thus forced the teacher to provide special instructions to carry out the activity.

6. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Hilbert, M.; Lopez, P. The World’s Technological Capacity to Store, Communicate, and Compute Information. Science 2011, 332, 60–65.

2. Kivunja, C. Innovative Pedagogies in Higher Education to Become Effective Teachers of 21st Century Skills: Unpacking the Learning and Innovations Skills Domain of the New Learning Paradigm. Int. J. High. Educ. 2014, 3, 37–48.

3. Lucas, B.; Claxton, G.; Hanson, J. Thinking Like an Engineer: Implications for the Education System; Technical Report; Royal Academy of Engineering: Winchester, UK, 2014.

4. Voogt, J.; Roblin, N.P. A comparative analysis of international frameworks for 21st century competences: Implications for national curriculum policies. J. Curric. Stud. 2012, 44, 299–321.

5. Riquelme, F.; Munoz, R.; Lean, R.M.; Villarroel, R.; Barcelos, T.S.; de Albuquerque, V.H.C. Using multimodal learning analytics to study collaboration on discussion groups. Univers. Access Inf. Soc. 2019, 18, 633–643.

6. Elrehail, H.; Emeagwali, O.L.; Alsaad, A.; Alzghoul, A. The impact of Transformational and Authentic leadership on innovation in higher education: The contingent role of knowledge sharing. Telemat. Inform. 2018, 35, 55–67.

7. Picatoste, J.; Pérez-Ortiz, L.; Ruesga-Benito, S.M. A new educational pattern in response to new technologies and sustainable development. Enlightening ICT skills for youth employability in the European Union. Telemat. Inform. 2018, 35, 1031–1038.

8. Prince, M. Does active learning work? A review of the research. J. Eng. Educ. 2004, 93, 223–231.

9. Freeman, S.; Eddy, S.L.; McDonough, M.; Smith, M.K.; Okoroafor, N.; Jordt, H.; Wenderoth, M.P. Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. USA 2014, 111, 8410–8415.

10. Nealy, C. Integrating soft skills through active learning in the management classroom. J. Coll. Teach. Learn. (TLC) 2005, 2.

11. Jain, A.; Dwivedi, P.K. The Evidence for the Effectiveness of Active Learning. Orient. J. Comput. Sci. Technol. 2014, 7, 401–407.

12. Rock, M.L.; Gregg, M.; Thead, B.K.; Acker, S.E.; Gable, R.A.; Zigmond, N.P. Can you hear me now? Evaluation of an online wireless technology to provide real-time feedback to special education teachers-in-training. Teach. Educ. Spec. Educ. 2009, 32, 64–82.

13. Imig, D.G.; Imig, S.R. The teacher effectiveness movement: How 80 years of essentialist control have shaped the teacher education profession. J. Teach. Educ. 2006, 57, 167–180.

14. Barmaki, R.; Hughes, C.E. Providing real-time feedback for student teachers in a virtual rehearsal environment. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; pp. 531–537.

15. Cornide-Reyes, H.; Noël, R.; Riquelme, F.; Gajardo, M.; Cechinel, C.; Lean, R.M.; Becerra, C.; Villarroel, R.; Munoz, R. Introducing Low-Cost Sensors into the Classroom Settings: Improving the Assessment in Agile Practices with Multimodal Learning Analytics. Sensors 2019, 19, 3291.

16. Noel, R.; Riquelme, F.; Lean, R.M.; Merino, E.; Cechinel, C.; Barcelos, T.S.; Villarroel, R.; Munoz, R. Exploring Collaborative Writing of User Stories With Multimodal Learning Analytics: A Case Study on a Software Engineering Course. IEEE Access 2018, 6, 67783–67798.

17. Munoz, R.; Villarroel, R.; Barcelos, T.S.; Souza, A.; Merino, E.; Guiñez, R.; Silva, L.A. Development of a Software that Supports Multimodal Learning Analytics: A Case Study on Oral Presentations. J. Univers. Comput. Sci. 2018, 24, 149–170.

18. Riquelme, F.; Noel, R.; Cornide-Reyes, H.; Geldes, G.; Cechinel, C.; Miranda, D.; Villarroel, R.; Munoz, R. Where are You? Exploring Micro-Location in Indoor Learning Environments. IEEE Access 2020, 8, 125776–125785.

19. Rapanta, C.; Botturi, L.; Goodyear, P.; Guàrdia, L.; Koole, M. Online university teaching during and after the Covid-19 crisis: Refocusing teacher presence and learning activity. Postdigital Sci. Educ. 2020, 2, 923–945.

20. Chen, T.; Peng, L.; Yin, X.; Rong, J.; Yang, J.; Cong, G. Analysis of User Satisfaction with Online Education Platforms in China during the COVID-19 Pandemic. Healthcare 2020, 8, 200.

21. Cao, C.; Li, J.; Zhu, Y.; Gong, Y.; Gao, M. Evaluation of Online Teaching Platforms Based on AHP in the Context of COVID-19. Open J. Soc. Sci. 2020, 8, 359–369.

22. Savery, J.R. Overview of problem-based learning: Definitions and distinctions. In Essential Readings in Problem-Based Learning: Exploring and Extending the Legacy of Howard S. Barrows; Purdue University Press: West Lafayette, IN, USA, 2015; Volume 9, pp. 5–15.

23. Häkkinen, P.; Järvelä, S.; Mäkitalo-Siegl, K.; Ahonen, A.; Näykki, P.; Valtonen, T. Preparing teacher-students for twenty-first-century learning practices (PREP 21): A framework for enhancing collaborative problem-solving and strategic learning skills. Teach. Teach. 2017, 23, 25–41.

24. Blikstein, P.; Worsley, M. Multimodal Learning Analytics and Education Data Mining: Using computational technologies to measure complex learning tasks. J. Learn. Anal. 2016, 3, 220–238.

25. Ochoa, X. Multimodal Learning Analytics. In The Handbook of Learning Analytics; Lang, C., Siemens, G., Wise, A., Gasević, D., Eds.; Society for Learning Analytics Research (SoLAR): Montevideo, Uruguay, 2017; Chapter 11; pp. 129–141.

26. Vrzakova, H.; Amon, M.J.; Stewart, A.; Duran, N.D.; D’Mello, S.K. Focused or stuck together: Multimodal patterns reveal triads’ performance in collaborative problem solving. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge, Frankfurt, Germany, 25–27 March 2020; pp. 295–304.

27. Sharma, K.; Leftheriotis, I.; Giannakos, M. Utilizing Interactive Surfaces to Enhance Learning, Collaboration and Engagement: Insights from Learners’ Gaze and Speech. Sensors 2020, 20, 1964.

28. Vujovic, M.; Tassani, S.; Hernández-Leo, D. Motion Capture as an Instrument in Multimodal Collaborative Learning Analytics. In Proceedings of the European Conference on Technology Enhanced Learning, Delft, The Netherlands, 16–19 September 2019; pp. 604–608.

29. Praharaj, S.; Scheffel, M.; Drachsler, H.; Specht, M. Group Coach for Co-located Collaboration. In Proceedings of the European Conference on Technology Enhanced Learning, Delft, The Netherlands, 16–19 September 2019; pp. 732–736.

30. MacNeil, S.; Kiefer, K.; Thompson, B.; Takle, D.; Latulipe, C. IneqDetect: A Visual Analytics System to Detect Conversational Inequality and Support Reflection during Active Learning. In Proceedings of the ACM Conference on Global Computing Education, Chengdu, China, 17–19 May 2019; pp. 85–91.

31. Chandrasegaran, S.; Bryan, C.; Shidara, H.; Chuang, T.Y.; Ma, K.L. TalkTraces: Real-time capture and visualization of verbal content in meetings. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019; pp. 1–14.

32. Molinero, X.; Riquelme, F.; Serna, M. Cooperation through social influence. Eur. J. Oper. Res. 2015, 242, 960–974.

33. Bruffee, K.A. Collaborative Learning and the “Conversation of Mankind”. Coll. Engl. 1984, 46, 635–652.

34. The Jigsaw Classroom. Available online: https://www.jigsaw.org/ (accessed on 17 June 2020).

35. Martin, R.C. Design principles and design patterns. Object Mentor 2000, 1, 1–34.

| Event | n | Duration (s) | Average (s) | SD (s) | Max (s) | Min (s) |
|---|---|---|---|---|---|---|
| Teacher’s observation | 5 | 277 | 55 | 20 | 87 | 35 |
| Teacher’s intervention | 7 | 307 | 44 | 25 | 96 | 20 |
| Group observers | 8 | 518 | 65 | 32 | 111 | 21 |

| Event | G1 n | G1 Time (s) | G2 n | G2 Time (s) | G3 n | G3 Time (s) | G4 n | G4 Time (s) | G5 n | G5 Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| Teacher’s observation | 2 | 112 | 2 | 98 | 3 | 199 | 2 | 98 | 2 | 90 |
| Teacher’s intervention | 1 | 20 | 2 | 86 | 1 | 25 | 1 | 40 | 2 | 136 |
| Group observers | 3 | 147 | 4 | 253 | 3 | 215 | 5 | 297 | 4 | 280 |
| Total | 6 | 279 | 8 | 437 | 7 | 439 | 8 | 435 | 8 | 506 |

| Survey Item | Median | Mode |
|---|---|---|
| Group Communication | | |
| GC1 | 5 | 5 |
| GC2 | 1 | 1 |
| GC3 | 1 | 1 |
| Group Members Contribution | | |
| MC1 | 5 | 5 |
| MC2 | 5 | 5 |
| MC3 | 5 | 5 |
| MC4 | 5 | 5 |
| MC5 | 5 | 5 |
| MC6 | 4 | 5 |
| MC7 | 5 | 5 |
| Activity Design | | |
| AD1 | 5 | 5 |
| AD2 | 5 | 5 |
| AD3 | 5 | 5 |

| id | exp grp | st(exp) | ni(exp) | wrk grp | st(wrk) | ni(wrk) |
|---|---|---|---|---|---|---|
| 1 | 1 | 401 | 592.90 | 1 | 835 | 179.32 |
| 2 | 2 | 136 | 30.00 | 1 | 145 | 15.20 |
| 3 | 3 | 425 | 28.80 | 1 | | |
| 4 | 4 | 298 | 135.00 | 1 | 322 | 18.70 |
| 5 | 5 | 968 | 373.40 | 1 | 420 | 38.90 |
| 6 | 1 | 24 | 7.00 | 2 | 79 | 29.40 |
| 7 | 2 | 185 | 130.00 | 2 | 425 | 115.80 |
| 8 | 3 | 143 | 79.90 | 2 | | |
| 9 | 4 | 183 | 155.70 | 2 | 354 | 189.50 |
| 10 | 1 | 277 | 206.30 | 3 | | |
| 11 | 2 | 224 | 131.40 | 3 | | |
| 12 | 3 | | | 3 | | |
| 13 | 4 | 379 | 223.60 | 3 | | |
| 14 | 5 | 683 | 217.40 | 3 | | |
| 15 | 1 | 230 | 163.10 | 4 | 165 | 156.60 |
| 16 | 2 | 365 | 455.10 | 4 | 193 | 270.30 |
| 17 | 3 | | | 4 | | |
| 18 | 4 | 344 | 419.70 | 4 | 72 | 105.50 |
| 19 | 5 | 353 | 90.20 | 4 | | |
| 20 | 1 | | | 5 | | |
| 21 | 2 | 16 | 10.70 | 5 | | |
| 22 | 3 | | | 5 | | |
| 23 | 4 | | | 5 | | |
| 24 | 5 | 510 | 134.70 | 5 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cornide-Reyes, H.; Riquelme, F.; Monsalves, D.; Noel, R.; Cechinel, C.; Villarroel, R.; Ponce, F.; Munoz, R. A Multimodal Real-Time Feedback Platform Based on Spoken Interactions for Remote Active Learning Support. Sensors 2020, 20, 6337. https://doi.org/10.3390/s20216337
