Industrial Robot Control by Means of Gestures and Voice Commands in Off-Line and On-Line Mode

Abstract

1. Introduction

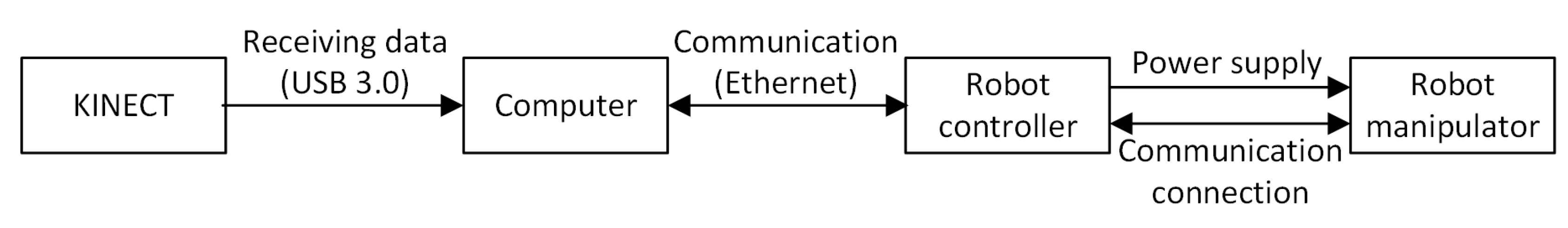

2. Station Design

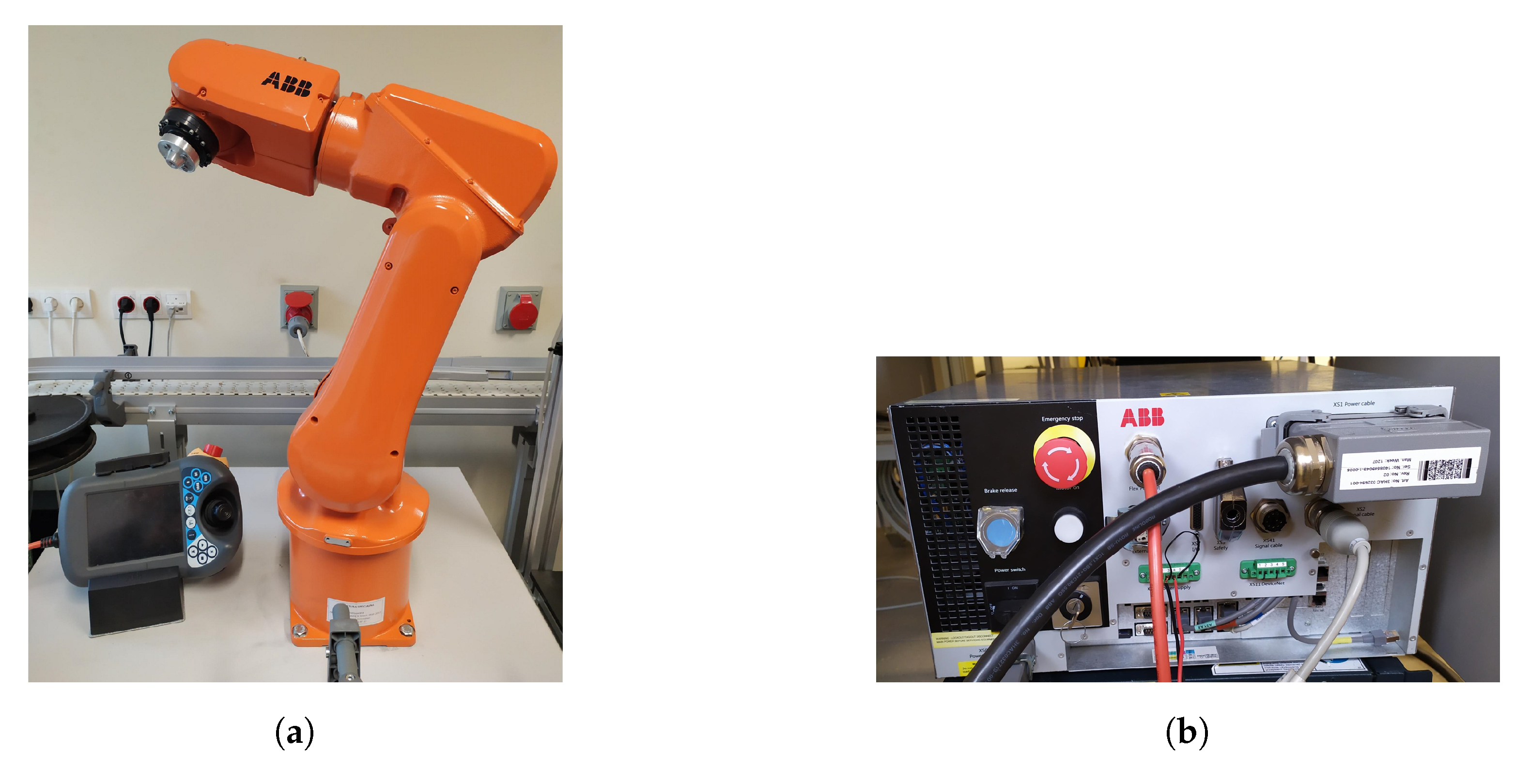

2.1. ABB IRB 120 Robot with the IRC5 Compact Controller

2.2. Kinect V2 Sensor

2.3. PC

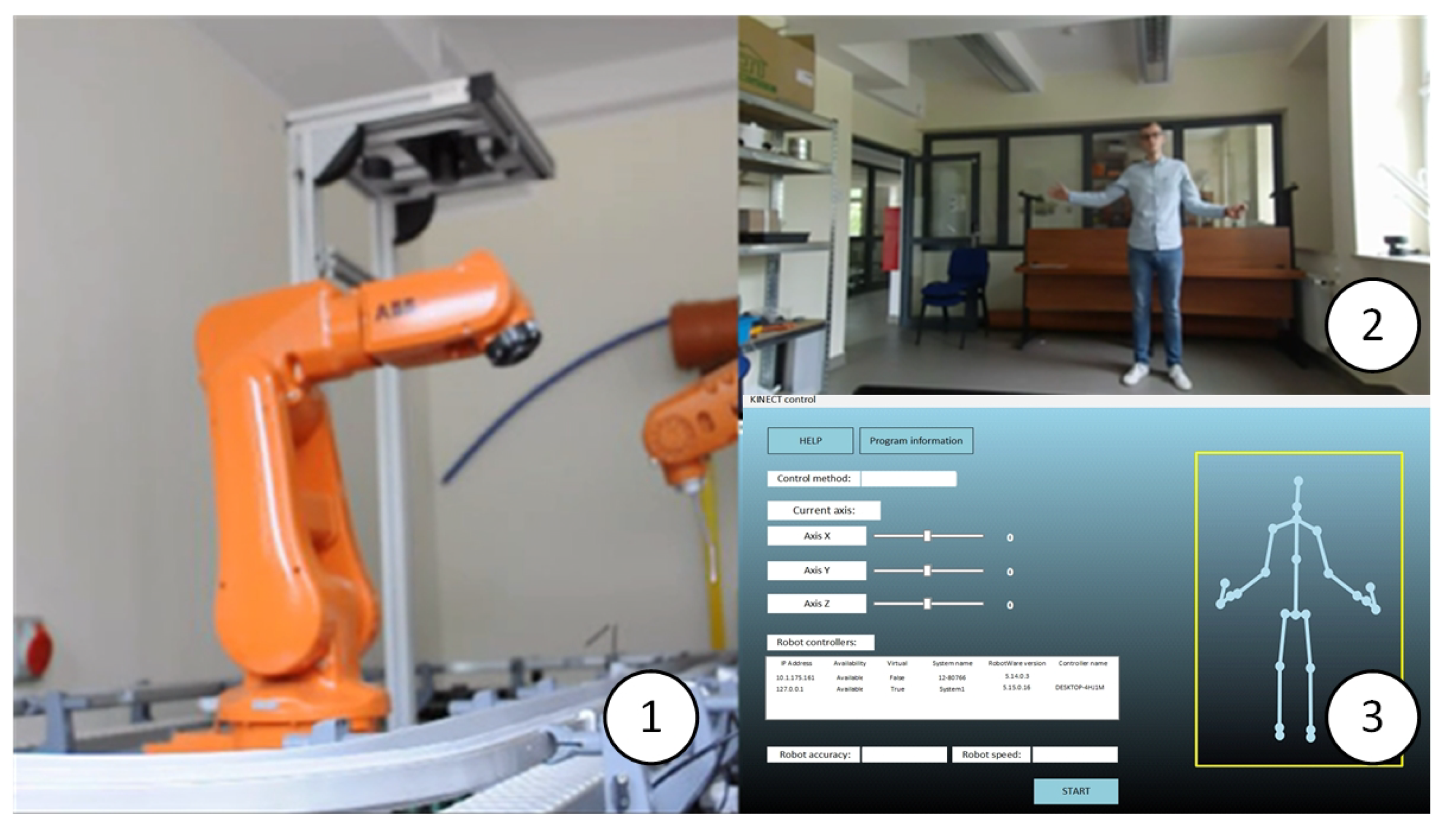

2.4. Test Station and Its Digital Twin

3. Application Design

- Thread 1 (Main) is responsible for setting the parameters, interpreting the robot’s direction of motion, and controlling the robot’s movements.

- Thread 2 is responsible for the TCP/IP communication between the robot and the computer and transferring the data received from the Kinect sensor to the main thread.
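The division of work between the two threads can be sketched as follows. This is a minimal illustration, not the authors' implementation: Python is used only for the example, the sensor data is replaced by a plain list of `(axis, sign)` tuples, and the names `communication_thread`, `interpret_direction`, and `run` are hypothetical. The command strings follow the "plus x" / "minus x" wording used in the results tables.

```python
import queue
import threading


def communication_thread(frames, out_queue):
    """Stand-in for Thread 2: hands incoming sensor data to the main thread."""
    for frame in frames:
        out_queue.put(frame)
    out_queue.put(None)  # sentinel: no more data will arrive


def interpret_direction(frame):
    """Main-thread step: turn one (axis, sign) frame into a motion command."""
    axis, sign = frame
    return f"{'plus' if sign > 0 else 'minus'} {axis}"


def run(frames):
    """Stand-in for Thread 1 (Main): consume frames and build motion commands."""
    data_queue = queue.Queue()  # thread-safe hand-off between the two threads
    worker = threading.Thread(target=communication_thread,
                              args=(frames, data_queue))
    worker.start()
    commands = []
    while True:
        frame = data_queue.get()  # blocks until the worker delivers data
        if frame is None:
            break
        commands.append(interpret_direction(frame))
    worker.join()
    return commands


if __name__ == "__main__":
    print(run([("x", 1), ("y", -1)]))
```

A queue is one common way to pass data between threads without explicit locking; the original application may use a different mechanism.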

3.1. User Interface

3.2. Application Tests

- launching all prepared applications on one computer. The virtual station model was launched in the RobotStudio environment, and communication with the application supporting the Kinect sensor and with the user interface was carried out over localhost;

- launching the prepared applications on two computers. The virtual station model was launched in the RobotStudio environment on one computer (simulating the operation of a real station). The application supporting the Kinect sensor and the user interface were launched on the second computer. The computers were connected over a local Ethernet network.
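From the application's point of view, the two test configurations above differ mainly in the address of the TCP/IP peer. The sketch below, written in Python purely for illustration, sends one text command to a receiver on `127.0.0.1`; for a two-computer setup only the `host` argument would change. The receiver here is a stand-in that replies with `ack`; it is not the robot controller or RobotStudio, and the message format, the reply, and the function names are assumptions.

```python
import socket
import threading


def serve_once(server_sock, received):
    """Accept one connection, record the command, and acknowledge it."""
    conn, _ = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        received.append(data.decode("utf-8"))
        conn.sendall(b"ack")


def send_command(host, port, command):
    """Send one text command and return the receiver's reply."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(command.encode("utf-8"))
        return sock.recv(1024).decode("utf-8")


def demo(host="127.0.0.1"):
    """Run receiver and sender on one machine (the single-computer case)."""
    received = []
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind((host, 0))  # port 0: let the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server, received))
        worker.start()
        reply = send_command(host, port, "plus x")
        worker.join()
    return received, reply


if __name__ == "__main__":
    print(demo())
```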

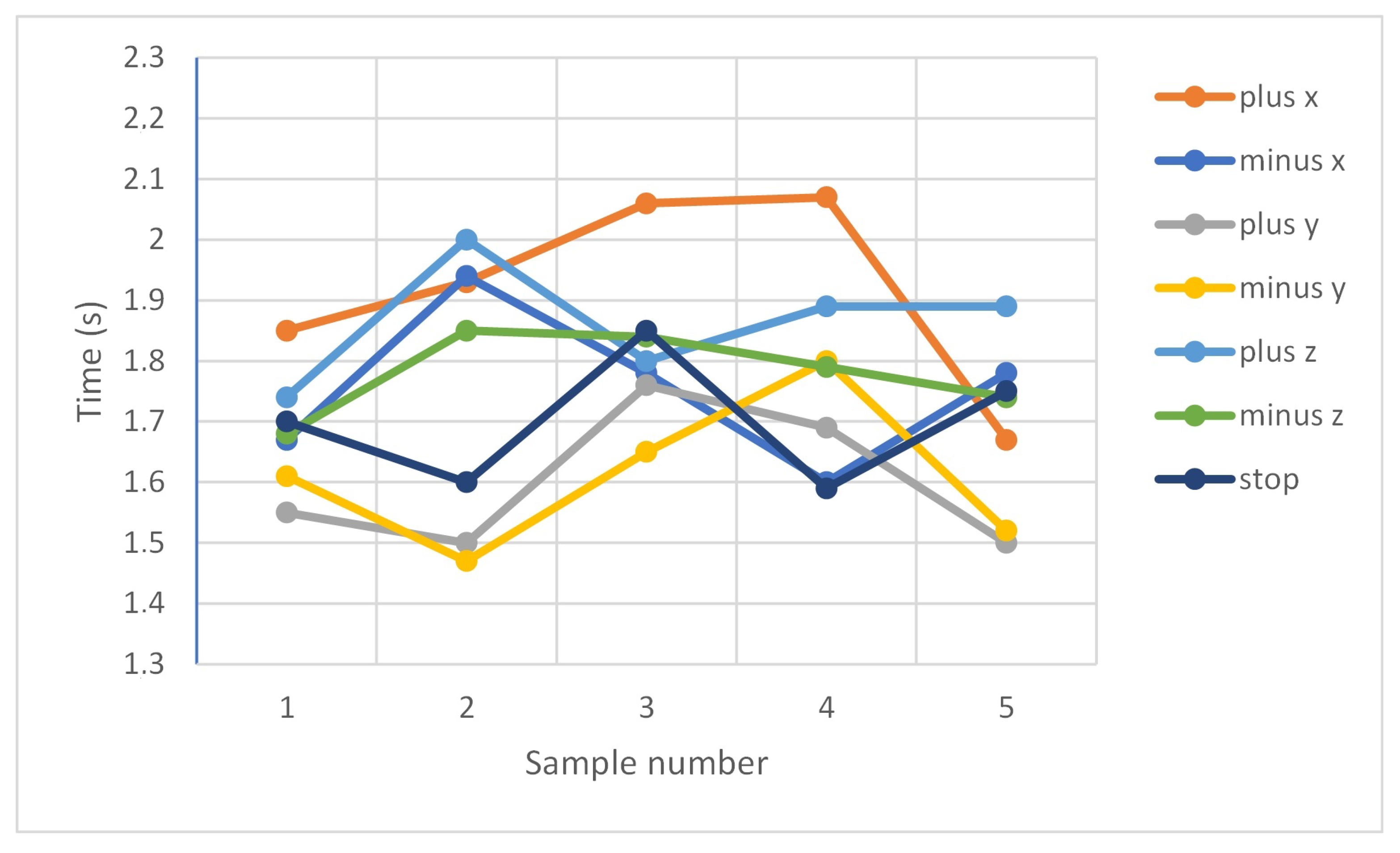

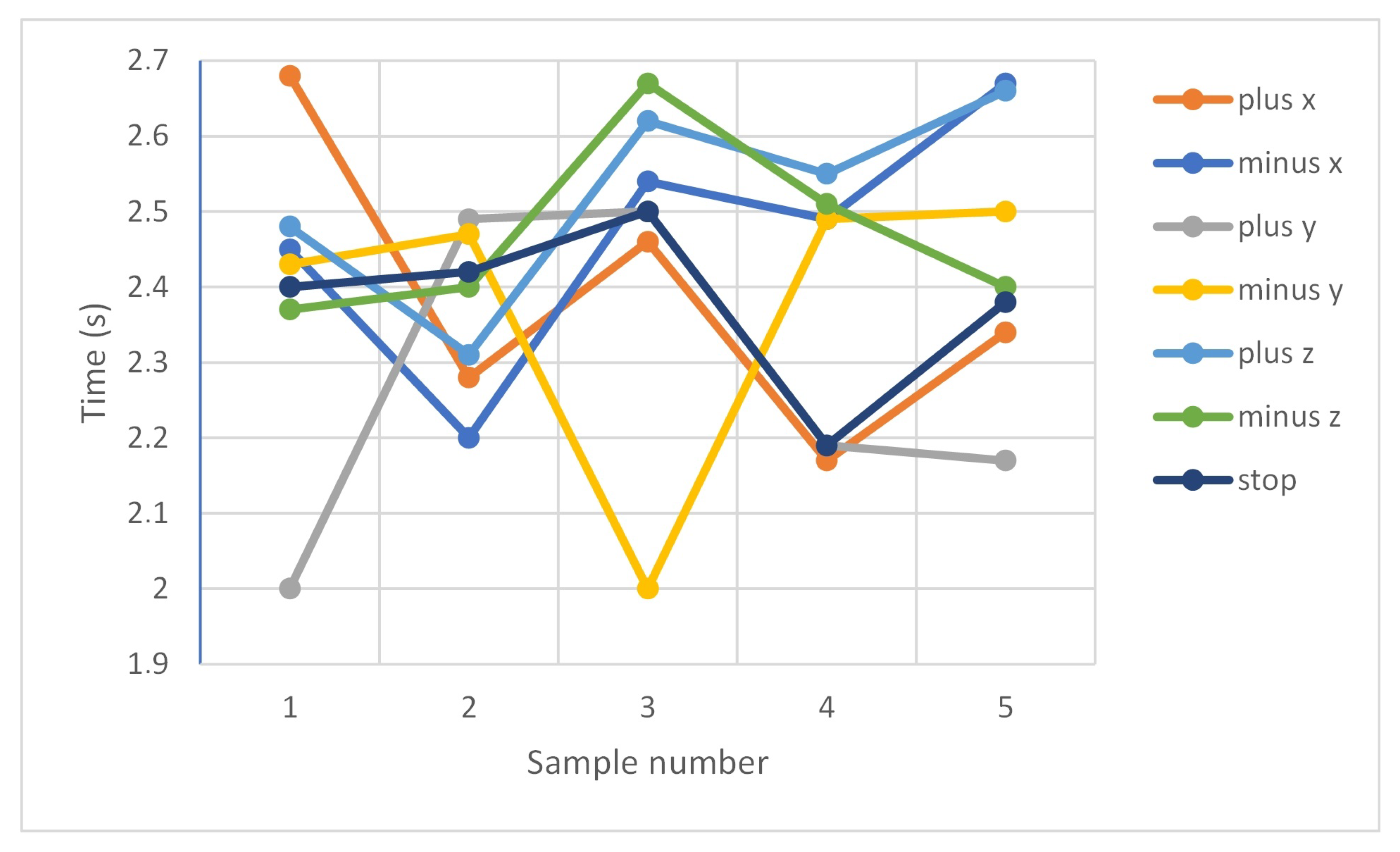

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Robot Action | Operator’s Body Position |
|---|---|
| Axis X move forward | Right hand on the left, left hand below shoulder |
| Axis X move backwards | Right hand on the right, left hand below shoulder |
| Axis Y move forward | Right hand on the left, left hand between shoulder and head |
| Axis Y move backwards | Right hand on the right, left hand between shoulder and head |
| Axis Z move forward | Right hand on the left, left hand above head |
| Axis Z move backwards | Right hand on the right, left hand above head |
| Confirm movement | Close left hand |
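The gesture table above can be read as two independent decisions: the height of the left hand selects the axis, and the side of the right hand selects the direction. The Python sketch below encodes that reading. Several details are assumptions, since the table does not state them: "left" and "right" are taken relative to a body-center coordinate `center_x`, smaller `x` is treated as "left", `y` increases upward, and the `Pose` fields and function names are hypothetical rather than taken from the Kinect SDK.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    right_hand_x: float   # horizontal position of the right hand
    left_hand_y: float    # height of the left hand
    shoulder_y: float     # height of the shoulder
    head_y: float         # height of the head
    center_x: float = 0.0  # assumed reference for "left" vs "right"


def select_axis(pose):
    """Left hand below shoulder -> X, between shoulder and head -> Y, above head -> Z."""
    if pose.left_hand_y < pose.shoulder_y:
        return "X"
    if pose.left_hand_y <= pose.head_y:
        return "Y"
    return "Z"


def select_direction(pose):
    """Right hand on the left -> forward, on the right -> backwards."""
    return "forward" if pose.right_hand_x < pose.center_x else "backwards"


def robot_action(pose):
    return f"Axis {select_axis(pose)} move {select_direction(pose)}"


def confirmed_action(pose, left_hand_closed):
    """Return the action only once the operator closes the left hand."""
    return robot_action(pose) if left_hand_closed else None


if __name__ == "__main__":
    pose = Pose(right_hand_x=-0.3, left_hand_y=0.2, shoulder_y=0.4, head_y=0.7)
    print(confirmed_action(pose, left_hand_closed=True))
```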

| Sample No. | “plus x” | “minus x” | “plus y” | “minus y” | “plus z” | “minus z” | “stop” |
|---|---|---|---|---|---|---|---|
| Sample 1 (s) | 1.85 | 1.67 | 1.55 | 1.61 | 1.74 | 1.68 | 1.70 |
| Sample 2 (s) | 1.93 | 1.94 | 1.50 | 1.47 | 2.00 | 1.85 | 1.60 |
| Sample 3 (s) | 2.06 | 1.78 | 1.76 | 1.65 | 1.80 | 1.84 | 1.85 |
| Sample 4 (s) | 2.07 | 1.60 | 1.69 | 1.80 | 1.89 | 1.79 | 1.59 |
| Sample 5 (s) | 1.67 | 1.78 | 1.50 | 1.52 | 1.89 | 1.74 | 1.75 |
| Average delay (s) | 1.92 | 1.75 | 1.60 | 1.61 | 1.86 | 1.78 | 1.70 |
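The "Average delay" row is the arithmetic mean of the five samples in each column, rounded to two decimals. As a quick check, the following Python snippet recomputes it for two columns of the table above; the helper name `average_delay` is illustrative.

```python
from statistics import mean

# Delay samples in seconds, copied from the table above.
delays = {
    "plus x": [1.85, 1.93, 2.06, 2.07, 1.67],
    "plus y": [1.55, 1.50, 1.76, 1.69, 1.50],
}


def average_delay(samples):
    """Arithmetic mean of the samples, rounded to two decimals."""
    return round(mean(samples), 2)


if __name__ == "__main__":
    for command, samples in delays.items():
        print(command, average_delay(samples))
```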

| Sample No. | “plus x” | “minus x” | “plus y” | “minus y” | “plus z” | “minus z” | “stop” |
|---|---|---|---|---|---|---|---|
| Sample 1 (s) | 2.68 | 2.45 | 2.00 | 2.43 | 2.48 | 2.37 | 2.40 |
| Sample 2 (s) | 2.28 | 2.20 | 2.49 | 2.47 | 2.31 | 2.40 | 2.42 |
| Sample 3 (s) | 2.46 | 2.54 | 2.50 | 2.00 | 2.62 | 2.67 | 2.50 |
| Sample 4 (s) | 2.17 | 2.49 | 2.19 | 2.49 | 2.55 | 2.51 | 2.19 |
| Sample 5 (s) | 2.34 | 2.67 | 2.17 | 2.50 | 2.66 | 2.40 | 2.38 |
| Average delay (s) | 2.39 | 2.47 | 2.27 | 2.38 | 2.52 | 2.47 | 2.39 |

| Sample No. | “plus x” | “minus x” | “plus y” | “minus y” | “plus z” | “minus z” | “stop” |
|---|---|---|---|---|---|---|---|
| Sample 1 | ok | ok | ok | ok | ok | ok | ok |
| Sample 2 | ok | ok | ok | ok | ok | ok | ok |
| Sample 3 | ok | ok | ok | ok | ok | ok | ok |
| Sample 4 | ok | ok | x | ok | ok | ok | ok |
| Sample 5 | ok | ok | x | ok | ok | ok | ok |
| Sample 6 | ok | ok | x | ok | ok | ok | ok |
| Sample 7 | ok | ok | x | ok | ok | ok | ok |
| Sample 8 | ok | ok | ok | ok | x | ok | ok |
| Sample 9 | ok | ok | ok | ok | ok | ok | ok |
| Sample 10 | ok | ok | x | ok | ok | ok | ok |
| Recognition rate (%) | 100 | 100 | 50 | 100 | 90 | 100 | 100 |
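The recognition rate in the last row is the share of samples marked "ok" in each column, expressed as a percentage. A short Python check for the two columns that contain failures, using the outcomes from the table above (the function name is illustrative):

```python
def recognition_rate(outcomes):
    """Percentage of attempts marked 'ok'."""
    return 100 * sum(o == "ok" for o in outcomes) / len(outcomes)


# Outcomes for samples 1-10, copied from the table above.
plus_y = ["ok", "ok", "ok", "x", "x", "x", "x", "ok", "ok", "x"]
plus_z = ["ok", "ok", "ok", "ok", "ok", "ok", "ok", "x", "ok", "ok"]

if __name__ == "__main__":
    print(recognition_rate(plus_y), recognition_rate(plus_z))
```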

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kaczmarek, W.; Panasiuk, J.; Borys, S.; Banach, P. Industrial Robot Control by Means of Gestures and Voice Commands in Off-Line and On-Line Mode. Sensors 2020, 20, 6358. https://doi.org/10.3390/s20216358
