Traffic Intersection Re-Identification Using Monocular Camera Sensors

Abstract

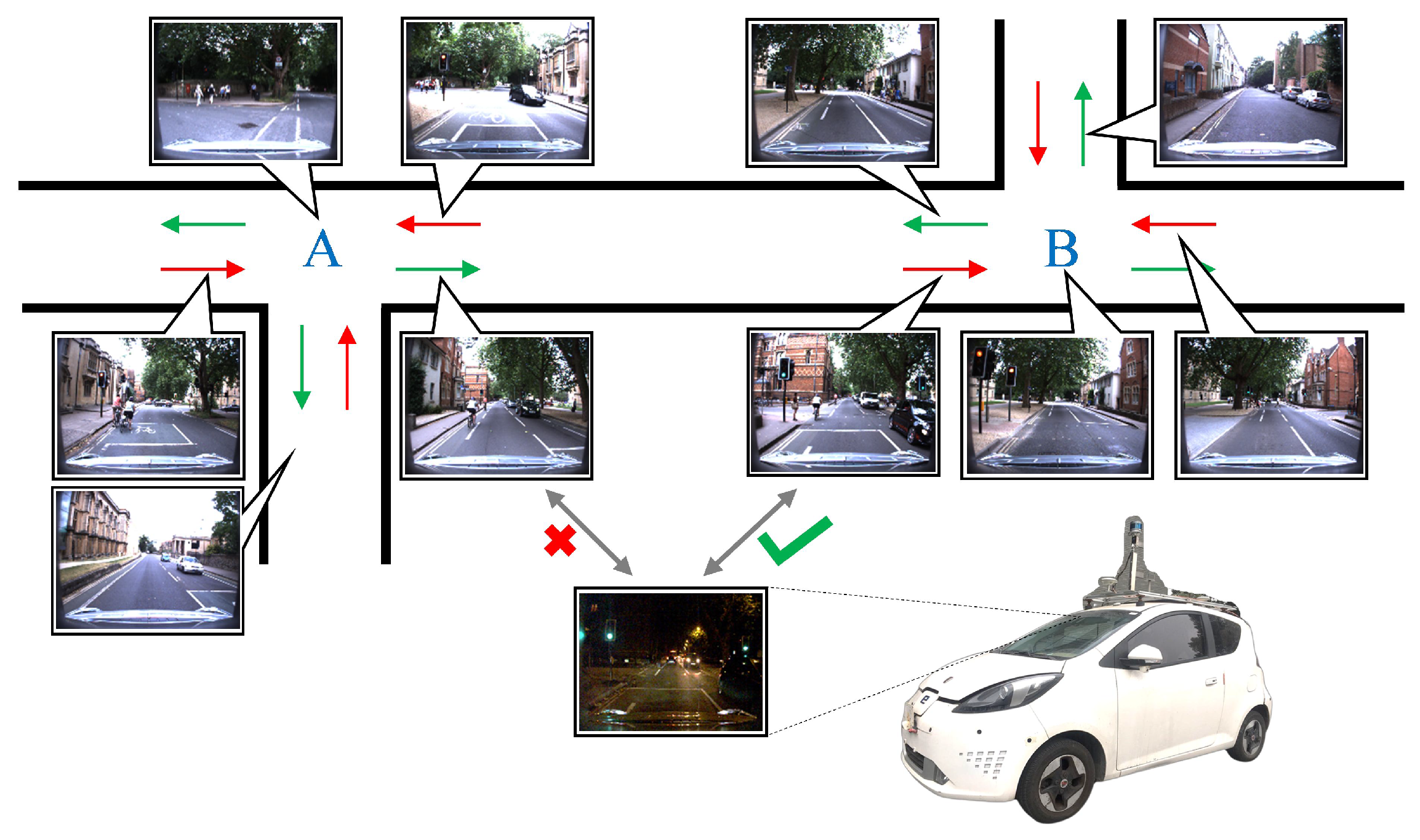

1. Introduction

2. Related Works

2.1. Intersection Detection

2.2. Visual Place Recognition

2.3. Visual Place Re-ID Datasets

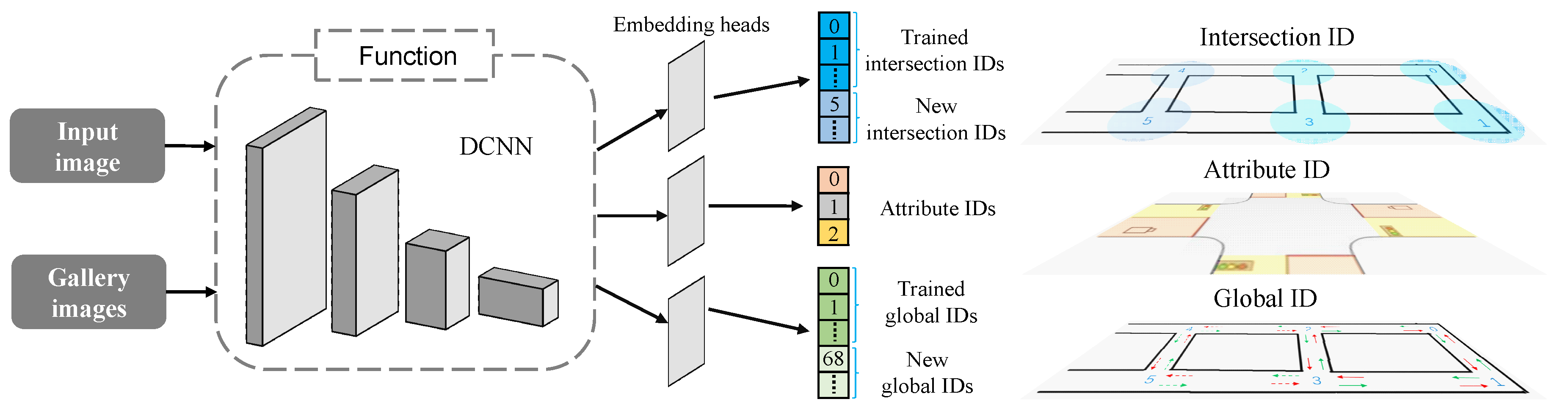

3. Traffic Intersection Re-Identification Approach

3.1. Task Formulation

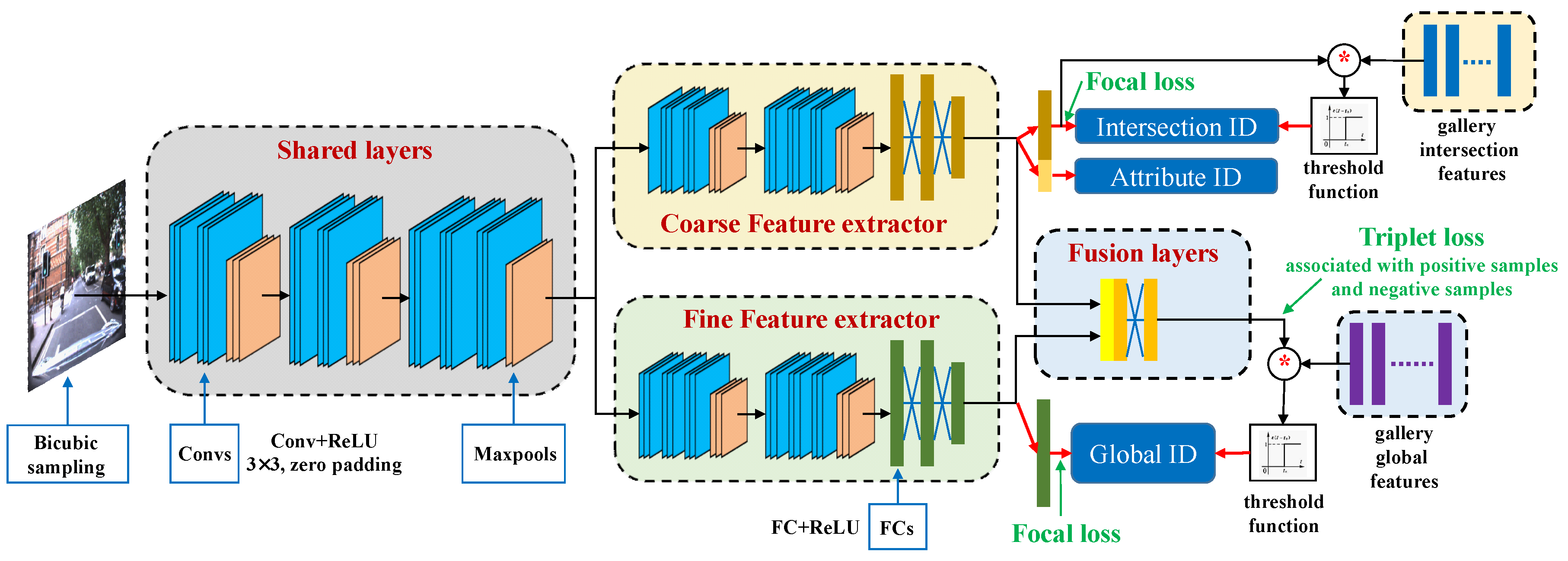

3.2. HDL Network Architecture

3.3. Mixed Loss Training

3.4. Updating Strategy of Topology Map

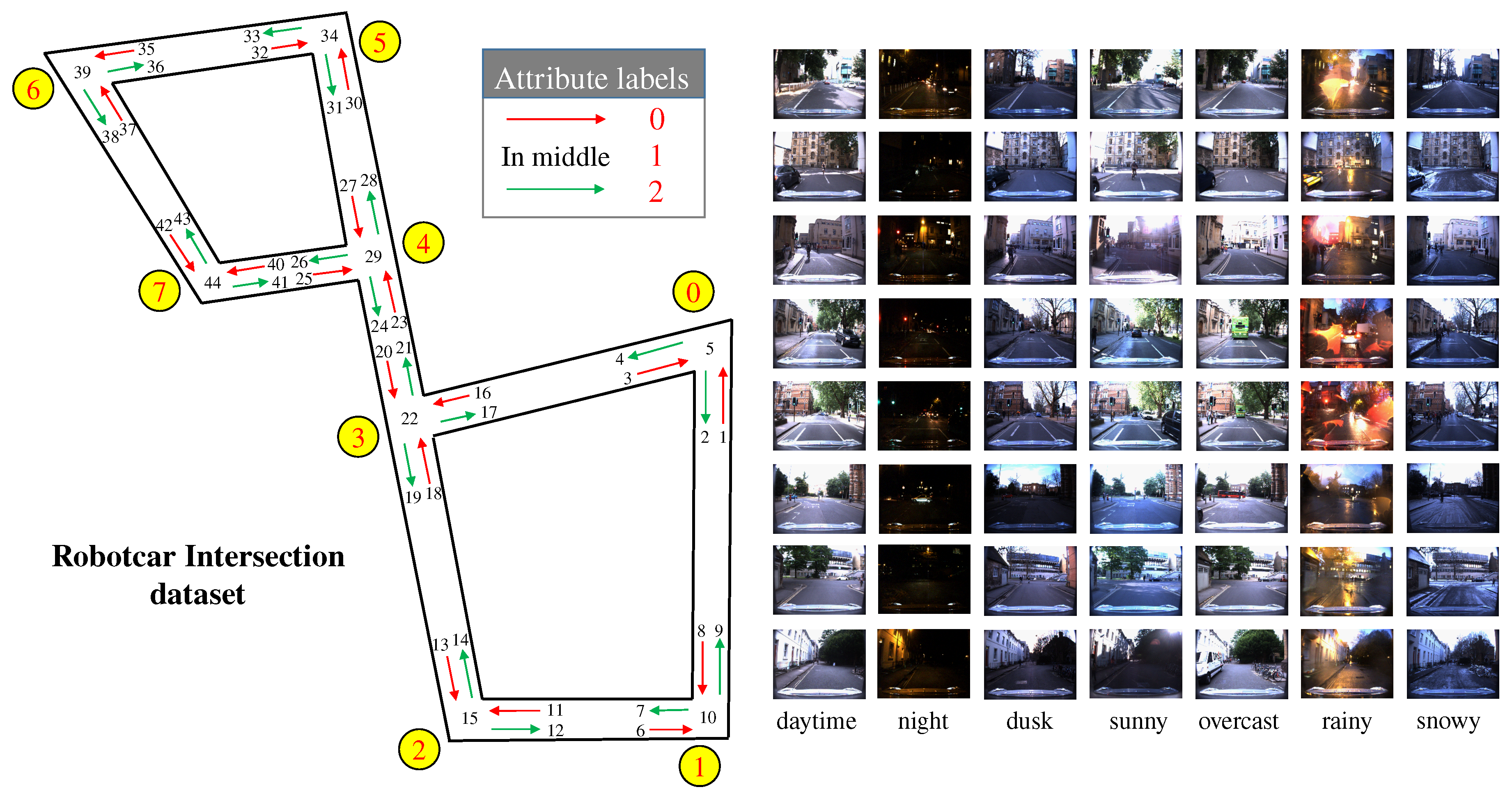

4. Proposed Intersection Datasets

4.1. RobotCar Intersection Dataset

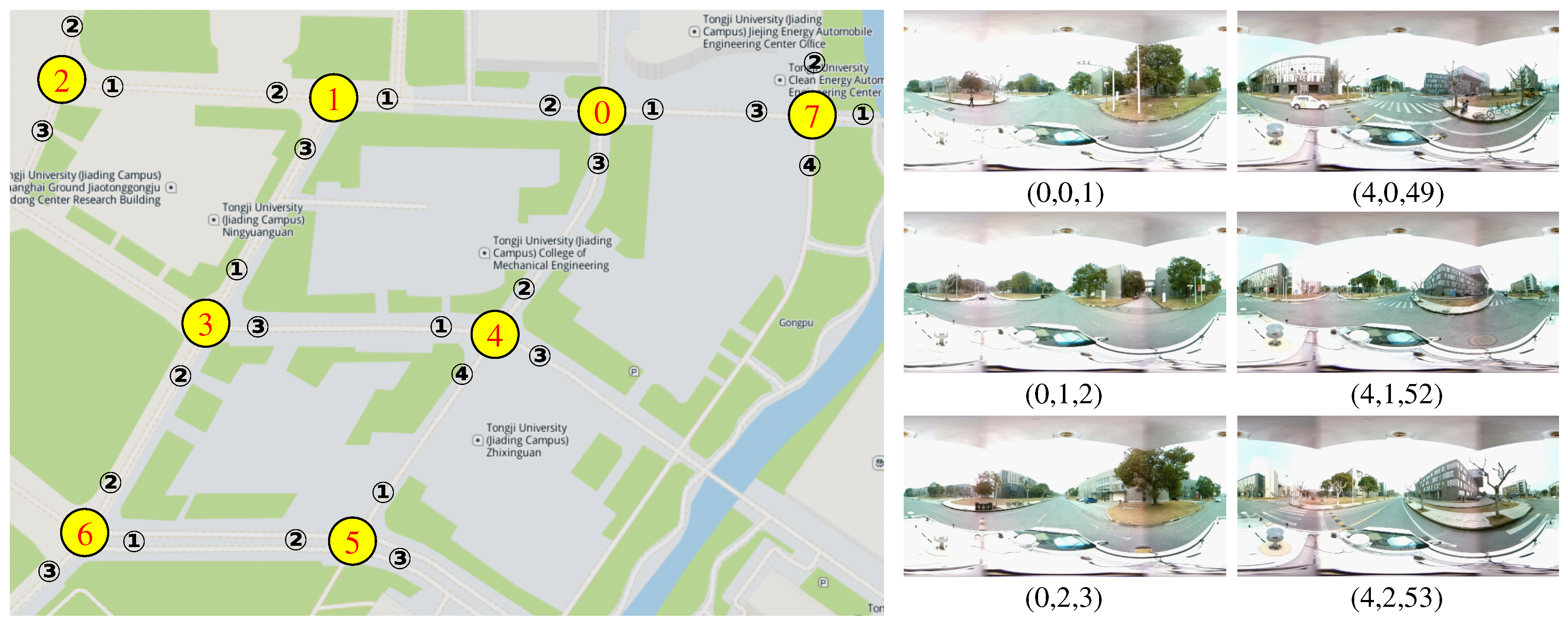

4.2. Campus Intersection Dataset

5. Experiments

5.1. Training Configuration

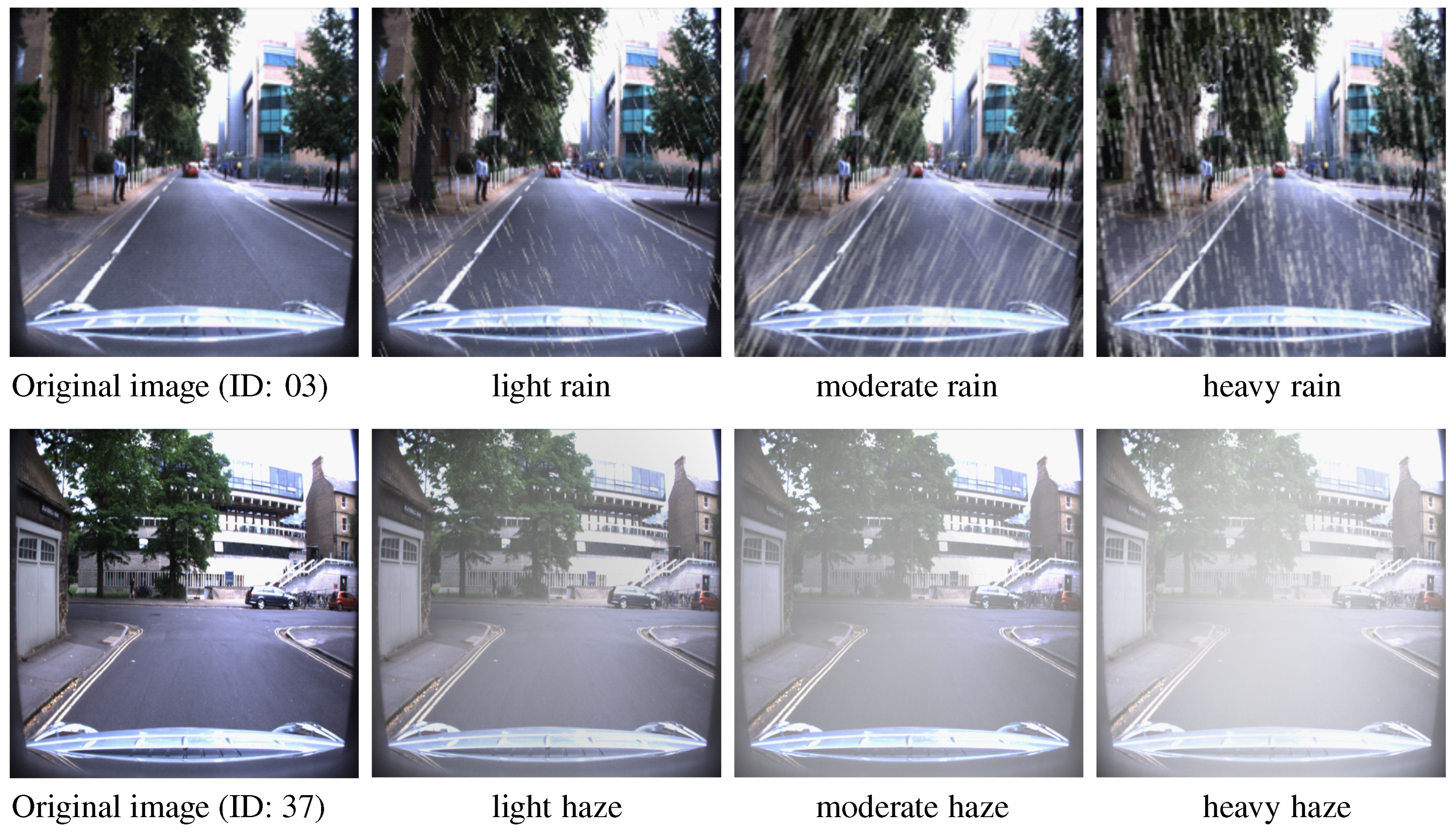

5.2. Data Augmentation

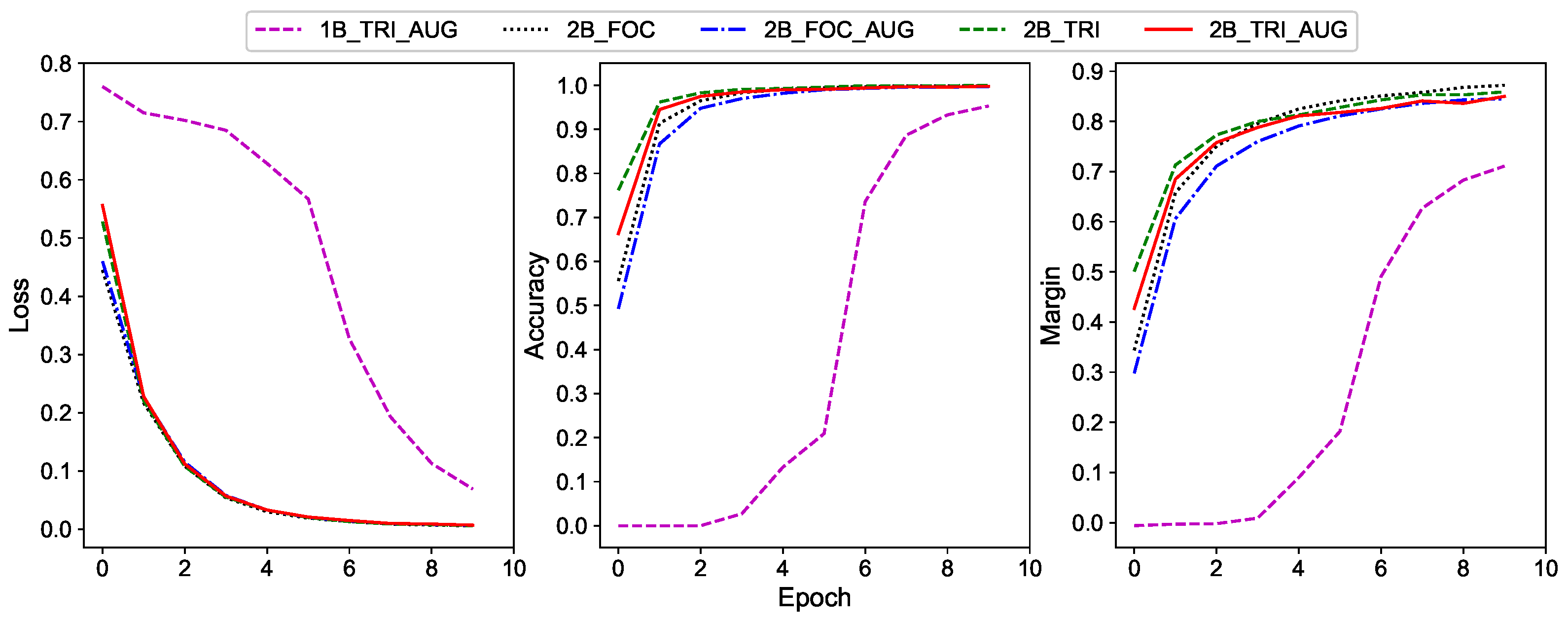

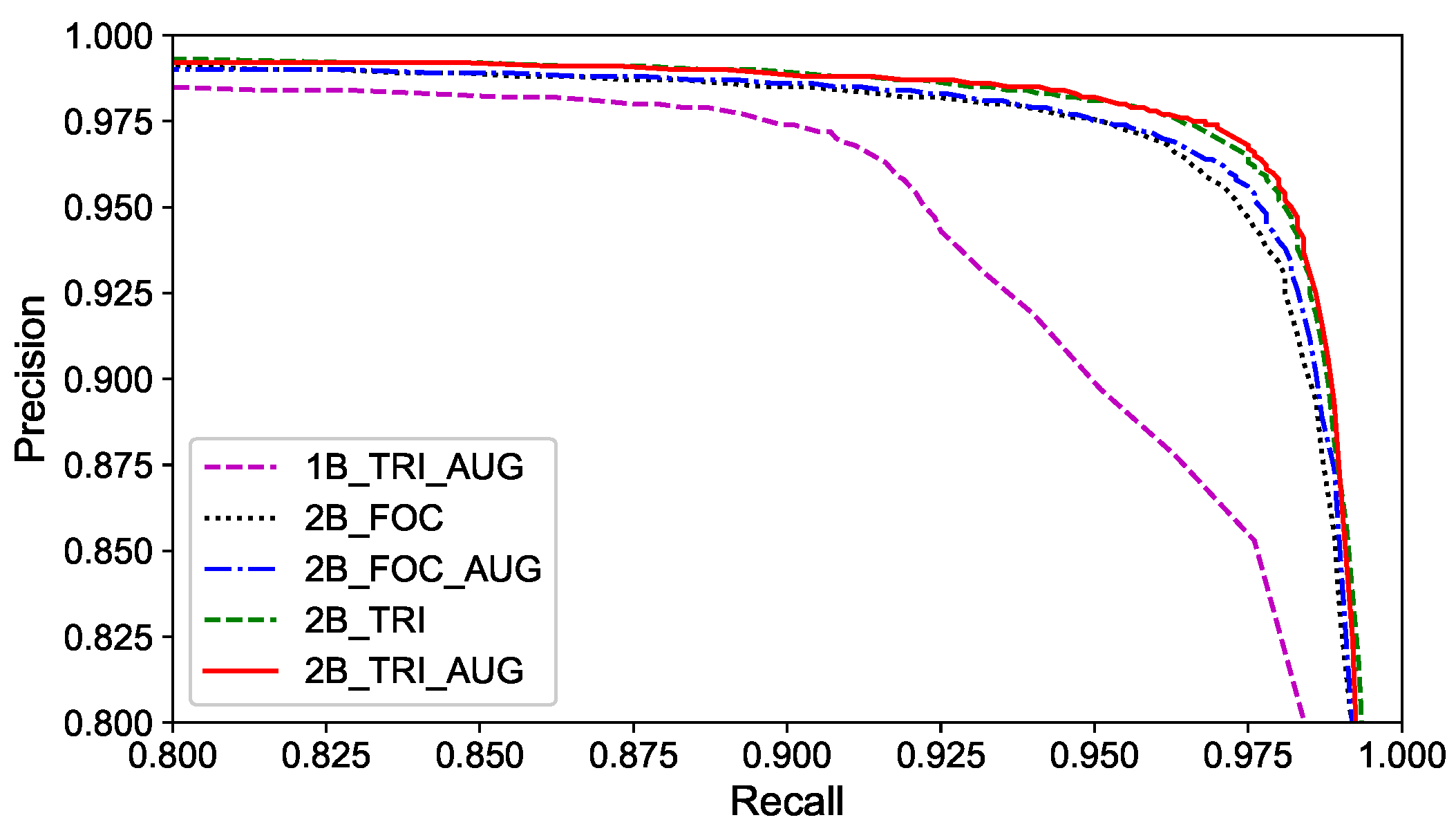

5.3. Evaluation on RobotCar Intersection

5.4. Results on Campus Intersection

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Lowry, S.; Sunderhauf, N.; Newman, P.; Leonard, J.; Cox, D.; Corke, P.; Milford, M. Visual place recognition: A survey. IEEE Trans. Robot. 2016, 32, 1–19.

- Bhatt, D.; Sodhi, D.; Pal, A.; Balasubramanian, V.; Krishna, M. Have I reached the intersection: A deep learning-based approach for intersection detection from monocular cameras. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017; pp. 4495–4500.

- Poggenhans, F.; Pauls, J.; Janosovits, J.; Orf, S.; Naumann, M.; Kuhnt, F.; Mayr, M. Lanelet2: A high-definition map framework for the future of automated driving. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Maui, HI, USA, 4–7 November 2018; pp. 4495–4500.

- Datondji, S.; Dupuis, Y.; Subirats, P.; Vasseur, P. A survey of vision-based traffic monitoring of road intersections. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2681–2698.

- Yu, C.; Feng, Y.; Liu, X.; Ma, W.; Yang, X. Integrated optimization of traffic signals and vehicle trajectories at isolated urban intersections. Transp. Res. Part B-Methodol. 2018, 112, 89–112.

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 Year, 1000 km: The Oxford RobotCar dataset. Int. J. Robot. Res. 2016, 36, 3–15.

- Zhao, J.; Huang, Y.; He, X.; Zhang, S.; Ye, C.; Feng, T.; Xiong, L. Visual semantic landmark-based robust mapping and localization for autonomous indoor parking. Sensors 2019, 19, 161.

- Kushner, T.; Puri, S. Progress in road intersection detection for autonomous vehicle navigation. Proc. SPIE 1987, 852, 19–25.

- Bhattacharyya, P.; Gu, Y.; Bao, J.; Liu, X.; Kamijo, S. 3D scene understanding at urban intersection using stereo vision and digital map. In Proceedings of the IEEE International Conference on Vehicular Technology, Sydney, Australia, 4–7 June 2017; pp. 1–5.

- Habermann, D.; Vido, C.; Osorio, F. Road junction detection from 3D point clouds. In Proceedings of the IEEE International Conference on Neural Networks, Vancouver, BC, Canada, 24–29 July 2016; pp. 4934–4940.

- Xie, X.; Philips, W. Road intersection detection through finding common sub-tracks between pairwise gnss traces. ISPRS Int. J. Geo-Inf. 2017, 6, 311.

- Cheng, J.; Gao, G.; Ku, X.; Sun, J. A novel method for detecting and identifying road junctions from high resolution SAR images. J. Radars 2012, 1, 100–109.

- Kuipers, B.; Byun, Y. A robot exploration and mapping strategy based on a semantic hierarchy of spatial representations. Robot. Auton. Syst. 1991, 8, 47–63.

- Lowe, D. Object recognition from local scale-invariant features. In Proceedings of the IEEE International Conference on Computer Vision, Liege, Belgium, 13–15 October 2009; pp. 1150–1157.

- Bay, H.; Tuytelaars, T.; Van, L. SURF: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 430–443.

- Sivic, Z. Video Google: A text retrieval approach to object matching in videos. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 14–17 October 2003; pp. 1470–1477.

- Ulrich, I.; Nourbakhsh, I. Appearance-based place recognition for topological localization. In Proceedings of the IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 24–28 April 2000; pp. 1023–1029.

- Krose, B.; Vlassis, N.; Bunschoten, R.; Motomura, Y. A probabilistic model for appearance-based robot localization. Image Vis. Comput. 2001, 19, 381–391.

- Oliva, A.; Torralba, A. Building the gist of a scene: The role of global image features in recognition. Prog. Brain Res. 2006, 115, 23–36.

- Liu, Y.; Zhang, H. Visual loop closure detection with a compact image descriptor. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 1051–1056.

- Chen, Z.; Jacobson, A.; Sunderhauf, N. Deep learning features at scale for visual place recognition. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 3223–3230.

- Bromley, J.; Guyon, I.; Lecun, Y.; Sackinger, E.; Shah, R. Signature Verification using a “Siamese” Time Delay Neural Network. In Proceedings of the NIPS Conference on Neural Information Processing Systems, Denver, CO, USA, 26–28 May 1993; pp. 737–744.

- Olid, D.; Facil, J.M.; Civera, J. Single-view place recognition under seasonal changes. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 1–6.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S. Deep learning for person re-identification: A survey and outlook. arXiv 2020, arXiv:2001.04193.

- Liu, H.; Tian, Y.; Wang, Y.; Pang, L.; Huang, T. Deep relative distance learning: Tell the difference between similar vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–29 June 2016; pp. 737–744.

- Milford, M.; Wyeth, G. SeqSLAM: Visual route-based navigation for sunny summer days and stormy winter nights. In Proceedings of the IEEE Conference on Robotics and Automation, Saint Paul, MN, USA, 15–18 May 2012; pp. 1643–1649.

- Pandey, G.; Mcbride, J.; Eustice, R. Ford Campus vision and lidar data set. Int. J. Robot. Res. 2011, 30, 1543–1552.

- Cummins, M.; Newman, P. FAB-MAP: Probabilistic localization and mapping in the space of appearance. Int. J. Robot. Res. 2008, 27, 647–665.

- Glover, A.; Maddern, W.; Milford, M.; Wyeth, G. FAB-MAP+RatSLAM: Appearance-based SLAM for multiple times of day. In Proceedings of the IEEE Conference on Robotics and Automation, Anchorage, Alaska, 3–8 May 2010; pp. 3507–3512.

- Badino, H.; Huber, D.; Kanade, T. Visual topometric localization. In Proceedings of the IEEE Conference on Intelligent Vehicles Symposium, Baden-Baden, Germany, 5–9 June 2011; pp. 794–799.

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Lin, T.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal loss for dense object detection. In Proceedings of the IEEE Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 318–327.

- Xiong, L.; Deng, Z.; Zhang, S.; Du, W.; Shan, F. Panoramic image mosaics assisted by lidar in vehicle system. In Proceedings of the IEEE Conference on Advanced Robotics and Mechatronics, Shenzhen, China, 18–21 December 2020; pp. 1–6.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

- Li, P.; Tian, J.; Tang, Y.; Wang, G.; Wu, C. Model-based deep network for single image deraining. IEEE Access 2020, 8, 14036–14047.

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2019, 28, 492–505.

| Methods | 1B | 2B | FOC | TRI | AUG |
|---|---|---|---|---|---|
| 1B + TRI + AUG | ✓ | | | ✓ | ✓ |
| 2B + FOC | | ✓ | ✓ | | |
| 2B + FOC + AUG | | ✓ | ✓ | | ✓ |
| 2B + TRI | | ✓ | | ✓ | |
| 2B + TRI + AUG | | ✓ | | ✓ | ✓ |
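The FOC and TRI columns are not expanded in this table. Assuming they refer to the focal loss (Lin et al.) and the triplet loss (Schroff et al.) from the reference list, the two terms can be sketched in their standard textbook forms. This is a minimal illustration only: the function names, the margin of 0.2, and the defaults for `gamma` and `alpha` are assumptions, and how the terms are weighted in the mixed loss is not specified here.

```python
import math


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge triplet loss on squared Euclidean distances.

    The margin value is an illustrative assumption, not taken from the paper.
    """
    d_pos = sum((a - p) ** 2 for a, p in zip(anchor, positive))
    d_neg = sum((a - n) ** 2 for a, n in zip(anchor, negative))
    # Zero once the negative is at least `margin` farther away than the positive.
    return max(d_pos - d_neg + margin, 0.0)


def focal_loss(probs, target, gamma=2.0, alpha=1.0):
    """Focal loss for one sample: -alpha * (1 - p_t)^gamma * log(p_t).

    `probs` is a list of class probabilities and `target` the true class index.
    With gamma = 0 and alpha = 1 this reduces to the usual cross-entropy.
    """
    p_t = probs[target]
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)
```

Under these assumptions, a triplet whose negative already lies far from the anchor contributes zero loss, and the focal term down-weights samples that the classifier already predicts with high confidence.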

| Methods | Total | Day | Night | Sunny | Overcast | Rainy |
|---|---|---|---|---|---|---|
| BOW [17] | 0.689 | 0.736 | 0.311 | 0.685 | 0.746 | 0.544 |
| ConvNet [22] | 0.797 | 0.798 | 0.788 | 0.787 | 0.808 | 0.769 |
| SiameseNet [24] | 0.844 | 0.849 | 0.798 | 0.834 | 0.867 | 0.775 |
| 1B + TRI + AUG | 0.964 | 0.963 | 0.972 | 0.956 | 0.968 | 0.893 |
| 2B + TRI | 0.971 | 0.970 | 0.989 | 0.970 | 0.973 | 0.948 |
| 2B + TRI + AUG | 0.971 | 0.971 | 0.989 | 0.972 | 0.972 | 0.956 |

| Methods | 1B + TRI + AUG | 2B + FOC | 2B + FOC + AUG | 2B + TRI | 2B + TRI + AUG |
|---|---|---|---|---|---|
| Precision | 0.933 | 0.906 | 0.958 | 0.901 | 0.963 |

| Methods | Total | Day | Night | Sunny | Overcast | Rainy |
|---|---|---|---|---|---|---|
| BOW [17] | 0.728 | 0.743 | 0.349 | 0.652 | 0.777 | 0.356 |
| ConvNet [22] | 0.853 | 0.853 | 0.796 | 0.878 | 0.846 | 0.812 |
| SiameseNet [24] | 0.887 | 0.887 | 0.854 | 0.881 | 0.896 | 0.803 |
| 1B + TRI + AUG | 0.975 | 0.975 | 0.968 | 0.983 | 0.976 | 0.931 |
| 2B + TRI | 0.982 | 0.982 | 0.987 | 0.982 | 0.984 | 0.940 |
| 2B + TRI + AUG | 0.982 | 0.982 | 0.989 | 0.986 | 0.983 | 0.941 |

| Methods | Total | Day | Night | Sunny | Overcast | Rainy |
|---|---|---|---|---|---|---|
| BOW [17] | 0.664 | 0.639 | 0.105 | 0.644 | 0.691 | 0.307 |
| ConvNet [22] | 0.738 | 0.738 | 0.745 | 0.707 | 0.763 | 0.713 |
| SiameseNet [24] | 0.861 | 0.861 | 0.910 | 0.887 | 0.887 | 0.690 |
| 1B + TRI + AUG | 0.955 | 0.955 | 0.980 | 0.958 | 0.959 | 0.927 |
| 2B + TRI | 0.958 | 0.957 | 0.980 | 0.970 | 0.967 | 0.911 |
| 2B + TRI + AUG | 0.958 | 0.958 | 0.980 | 0.961 | 0.961 | 0.928 |

| Task | Intersection ID | Attribute ID | Global ID |
|---|---|---|---|
| existing intersection re-ID (task 1) | 0.985 | 0.987 | 0.998 |
| new intersection detection (task 2) | - | 0.855 | 0.990 |
| new intersection re-ID (task 2) | - | 0.855 | 0.991 |
| all intersection re-ID (task 2) | - | 0.924 | 0.996 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xiong, L.; Deng, Z.; Huang, Y.; Du, W.; Zhao, X.; Lu, C.; Tian, W. Traffic Intersection Re-Identification Using Monocular Camera Sensors. Sensors 2020, 20, 6515. https://doi.org/10.3390/s20226515