5.2.2. Indoor Tests—Scenario 2

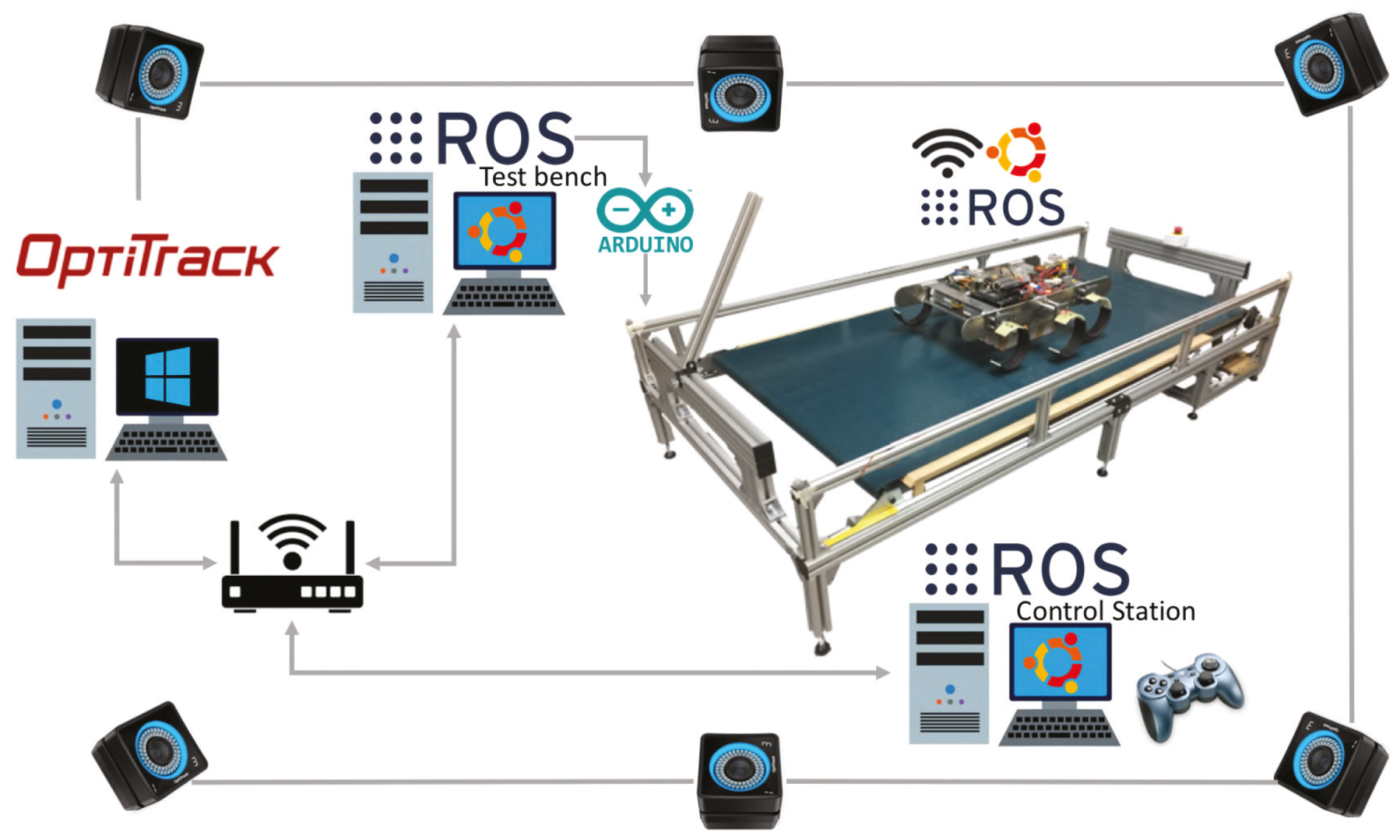

Test conditions: For indoor scenario 2, three different tests were performed: walking straight, turning in place and completing a circuit. The first one, walking straight, can be considered an extension of the test in indoor scenario 1. However, this time the analysis includes all the sensors installed on the robotic platform (IMU, RealSense D435 and T265). On one side, the IMU is used together with the odometry algorithm and the ROS package Robot pose EKF to estimate the pose of the robot. This package uses an extended Kalman filter with a 6D model (3D position and 3D orientation) to combine the input measurements. On the other side, the RealSense D435 is used with the ORB-SLAM2 algorithm [60], a SLAM solution that computes the camera trajectory and a sparse 3D reconstruction in real time. It is able to detect loops and relocalize the camera in real time. Finally, the RealSense T265 uses the Intel tracking software to calculate the position and orientation of the robot.
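To illustrate how a Kalman-type filter combines two measurements of the same quantity, the following minimal sketch applies a scalar, linear measurement update. It is not the 6D extended Kalman filter used by the ROS package; the function name `kalman_update` and all numeric values are assumptions chosen only for illustration.

```python
def kalman_update(x, p, z, r):
    """One scalar Kalman measurement update.

    x, p: prior estimate and its variance.
    z, r: new measurement and its variance.
    Returns the fused estimate and its (reduced) variance.
    """
    k = p / (p + r)            # Kalman gain: weight given to the measurement
    x_new = x + k * (z - x)    # correct the prior with the innovation
    p_new = (1.0 - k) * p      # fused variance is smaller than the prior one
    return x_new, p_new


# Hypothetical values: a position from leg odometry fused with a visual estimate.
x_odom, var_odom = 1.00, 0.04      # metres, metres^2 (assumed)
z_visual, var_visual = 1.10, 0.04  # metres, metres^2 (assumed)

x_fused, var_fused = kalman_update(x_odom, var_odom, z_visual, var_visual)
print(x_fused, var_fused)
```

With equal variances the fused value lies halfway between the two inputs; a noisier sensor would receive proportionally less weight.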

Figure 17 shows all the components for this test and the following ones.

Walking straight test: The walking straight test can be subdivided into two different tests. The first one consists of 5 trials to analyze the final localization of the robot and the measurement error of each sensor with respect to the ground truth system. These analyses include the XY trajectory of the robot and three individual studies of the displacement along the three axes with respect to time (X, Y, Z). The XY trajectory is used to recreate the path followed by the robot in the trial and to obtain the final error in the coordinates (X, Y). The individual studies of each variable are used to find periodic behaviors or disturbances in the sensor measurements. For these trials, the robot configuration is the same as in indoor scenario 1 (see Table 5).

Table 7 summarizes the final errors for each trial and sensor. The results tagged “Odometry” are the EKF values obtained with the odometry algorithm and the IMU sensor as inputs; the shorter name is used for readability.
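As an illustration of how a final error of this kind can be obtained, the sketch below compares the last estimated XY position with the last ground-truth position. The function name `final_error` and the sample coordinates are hypothetical and are not taken from the experiments.

```python
import math


def final_error(estimated_xy, ground_truth_xy):
    """Return the signed X error, signed Y error and Euclidean error (same units as the input)."""
    ex = estimated_xy[0] - ground_truth_xy[0]
    ey = estimated_xy[1] - ground_truth_xy[1]
    return ex, ey, math.hypot(ex, ey)


# Hypothetical trajectories as lists of (x, y) samples in metres.
ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
estimate = [(0.0, 0.0), (1.0, 0.05), (1.9, 0.1)]

# Only the last sample of each trajectory enters the final error.
ex, ey, dist = final_error(estimate[-1], ground_truth[-1])
print(f"X error: {ex:+.3f} m, Y error: {ey:+.3f} m, distance: {dist:.3f} m")
```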

Figure 18 and Figure 19 show the final X and Y errors. The T265 presents much worse errors than the ORB SLAM2 and EKF algorithms, even though Intel specifies that the T265 algorithm uses an EKF together with the onboard IMU sensor of the camera. The magnitude of the error for trials 1 and 2 is even bigger than the length of the robot’s body. Moreover, the XY graph of trial 1 (see Figure 20), for example, shows that the T265 path is more erratic and less smooth than the ORB SLAM2 path. Further tests indicated that the reason for this error is that Intel’s Visual SLAM requires more features in the scene than the ORB SLAM2 algorithm. When the number of features present in the scene increases, this error is reduced.

In summary, the error measured in the final X position is acceptable, especially for the EKF and ORB SLAM2 algorithms; however, none of the three methods presents a perfect estimation of the displacement along the Y axis. Even so, the measured errors are smaller than half the width of the robot’s chassis, which can be considered an acceptable result.

Another important point is that, for all the trials, the T265 presents a worse estimation of the robot position along the Z axis. While the measurement should stay between 0.0 m and −0.1 m, in some trials the T265 exceeds +0.2 m, a difference as large as climbing one step. Figure 21 summarizes the Z measurements from the first test; the black line corresponds to the T265 camera.

The second test consisted of 3 different trials, each repeated twice. In each of the three trials the ground sweep angle was modified (30°, 45° and 60°). The objective of this second test is to analyze whether different gait pattern configurations help to achieve a better pose estimation, and to look for a configuration that reduces the errors shown by the T265 camera. For the six runs, the CLHeRo was programmed to walk for sixteen strides rather than to cover a certain distance, because the distance travelled in each step is directly correlated with the ground sweep angle. The ground rotation speed parameter was kept constant at 1 rad/s. Moreover, for this test, more EKFs were configured. These new EKFs take pairs of sensors as input (Odometry + IMU, Odometry + ORB SLAM2, IMU + ORB SLAM2), so the study can also indicate whether a particular pair of sensors makes a significant difference in the pose estimation of the robot. In addition, the complete EKF for this test includes the measurements of three sensors (Odometry, IMU and ORB SLAM2). To verify the conclusion from the previous test, where the T265 was not very accurate, some objects were added to the scene so that the T265 could extract more features in each frame.
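The set of filter configurations described above (every pair of sensors plus the complete three-input filter) can be enumerated with a short sketch. The labels in `SENSORS` and the helper `ekf_input_sets` are hypothetical names used only for illustration; they are not identifiers from the robot's software.

```python
from itertools import combinations

# Hypothetical labels for the three inputs named in the text.
SENSORS = ["odometry", "imu", "orb_slam2"]


def ekf_input_sets(sensors):
    """Return every pair of sensors followed by the complete set."""
    pairs = list(combinations(sensors, 2))
    return pairs + [tuple(sensors)]


for inputs in ekf_input_sets(SENSORS):
    print(" + ".join(inputs))
```

Each tuple would correspond to one filter instance fed with exactly those inputs, so three sensors yield four configurations in total.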

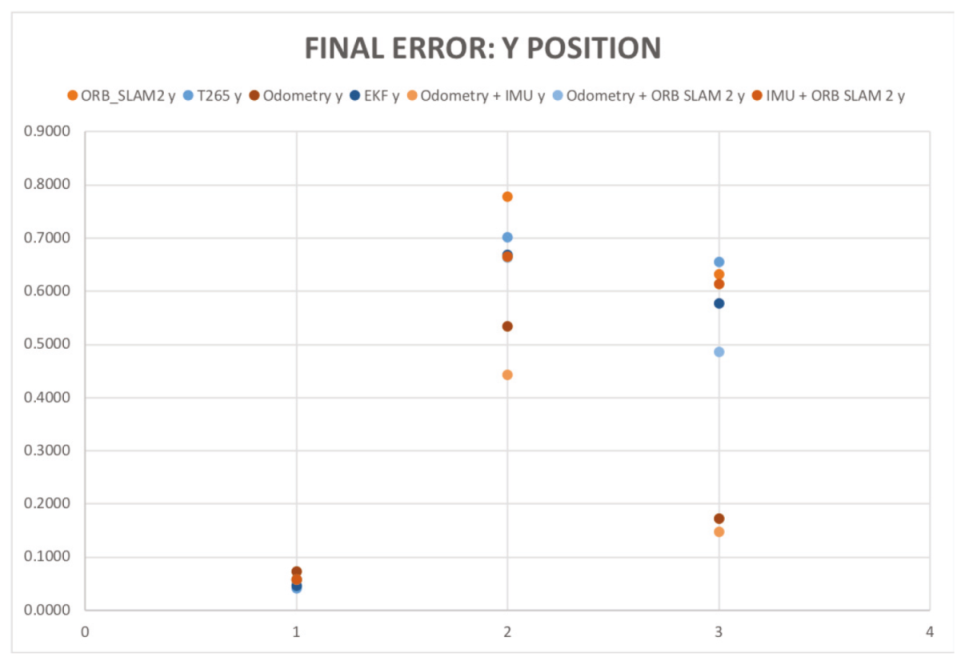

Table 8 summarizes the final mean errors for each trial, sensor and EKF combination. Figure 22 and Figure 23 show the final X and Y errors.

The first thing the reader can notice from the results is that the final error in the X position for the T265 has decreased considerably (in some cases by more than 0.6 m, which is an improvement of the ). In the 6 trials, the raw measurements of all the sensors have an error below 0.2 m, and in the majority of cases below 0.1 m, which can be considered a very precise result. The worst results come from the EKFs that combine the IMU with another sensor (odometry or camera); this could be caused by the oscillations and forces that the robot suffers at every step. Decreasing the rotation speed of the motors may help to reduce this negative effect.

The final Y position error has also improved significantly. The gait pattern with a ground sweep angle of 30 degrees has proved to be very precise for estimating the pose of the robot along both axes (X and Y). In the other two configurations (45 degrees and 60 degrees), a larger oscillation of the robot’s body makes it harder for the cameras to produce a good estimate, and therefore the EKF that includes the odometry and the IMU presents the best results.

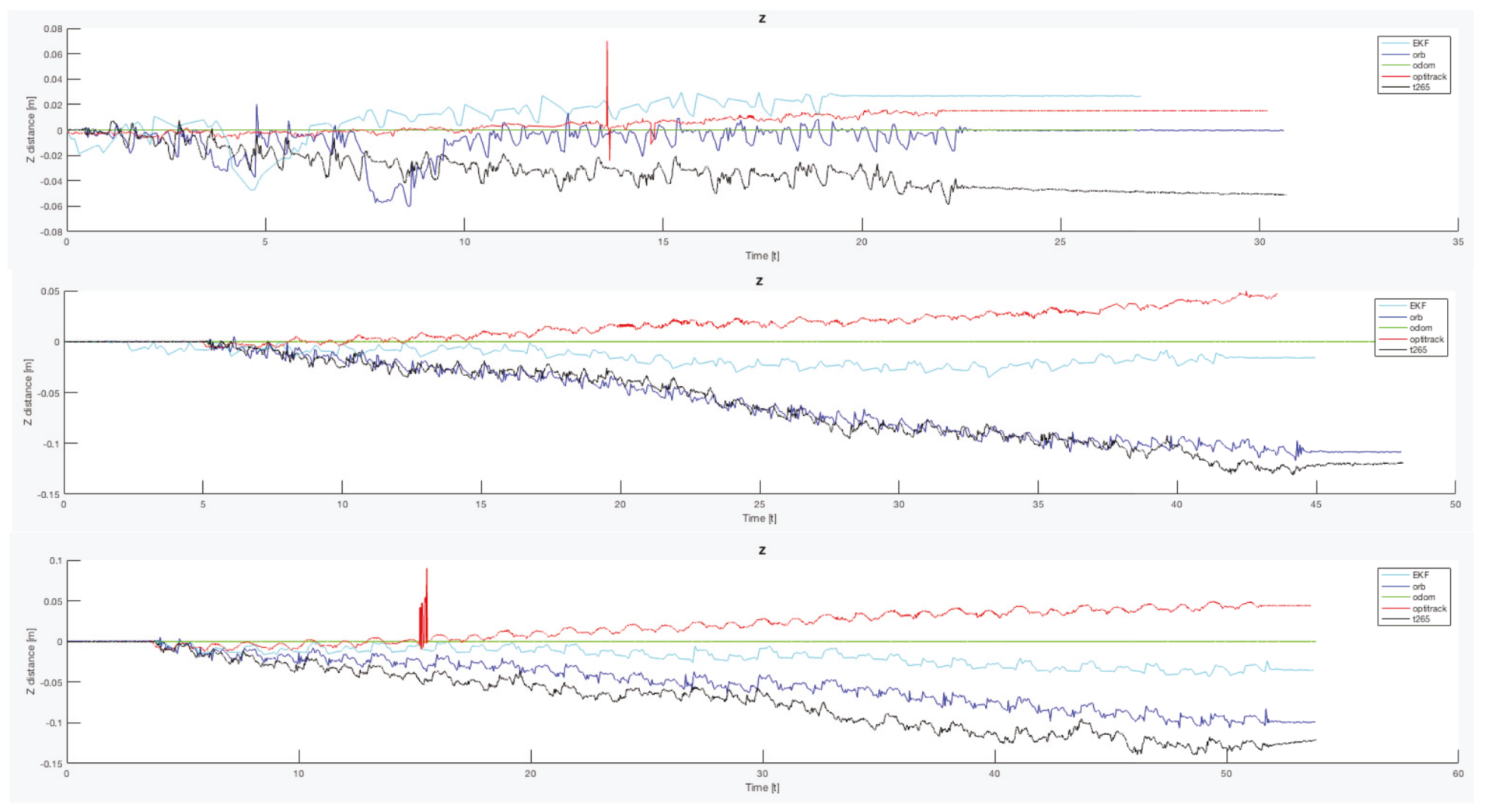

Finally, to complete this analysis, it is necessary to compare the oscillation along the Z axis (Figure 24). As with the final X and Y errors, the Z position error with the leg angle configured at 30 degrees presents the best estimation, but only for the ORB SLAM2 algorithm. For the other two configurations, both visual estimators present a negative derivative, but the EKF with the three inputs (odometry, IMU, ORB SLAM2) corrects this negative error and the Z position error is almost null.

Turn in place: This type of movement was the second one implemented in the CLHeRo. Having these two different actions (walking straight and turning in place) for moving the robot makes it possible to achieve more complex tasks in the future, e.g., path following or autonomous navigation.

In order to have a more detailed study, this test was divided into five trials, where only the ground sweep angle was modified. The first trial began with the configuration used for walking straight, 60 degrees ( rads). In the following trials the angle was reduced: degrees ( rads). In all the trials, however, the ground rotation velocity was kept constant: rads/s.
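Since the gait parameters are given both in degrees and in radians, the standard conversion is sketched below for the three sweep angles that are named explicitly in this section (60, 45 and 30 degrees). The helper `sweep_angle_rad` is a hypothetical name; the angles of the last two trials are not reproduced here and are therefore left out.

```python
import math

# Sweep angles stated explicitly in the text, in degrees.
SWEEP_ANGLES_DEG = [60, 45, 30]


def sweep_angle_rad(angle_deg):
    """Convert a ground sweep angle from degrees to radians."""
    return math.radians(angle_deg)


for deg in SWEEP_ANGLES_DEG:
    print(f"{deg} deg = {sweep_angle_rad(deg):.3f} rad")
# prints:
# 60 deg = 1.047 rad
# 45 deg = 0.785 rad
# 30 deg = 0.524 rad
```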

Moreover, in this test and in the following ones, the EKF combinations with pairs of sensors were configured, as in the previous test, to allow a more detailed analysis. Taking into consideration the lessons learned from the previous test, different objects were added to the scene in order to have more features and obtain better tracking.

The first trial, with the 60 degrees configuration, failed: the robot was only able to take a few steps, after which recovery maneuvers and a resynchronization of the legs were necessary to continue turning. After several attempts, this trial was considered null due to the lack of torque of the motors.

The second trial, with the 45 degrees ( rads) configuration, was also repeated several times, and each repetition consisted of two and a half turns. The extra turns were performed because both cameras lost tracking, so closing the loop was intended to relocalize the robot after each complete turn. However, this was only useful to correct the position at the end of the turn; during the rest of the turn there was a translational error that could not be reduced.

The configuration with 30 degrees ( rads) was the third trial. In this case, the same strategy as in the previous configuration was repeated: performing more than one turn in order to close the loop and relocalize the robot.

For the last two trials, with the and degrees ( and rads) configurations, only one turn was executed, because in these configurations the oscillations along the Y and Z axes are less aggressive than in the previous cases.

Figure 25 shows the tracked XY position for trials 2–5. It is important to notice that both image-based localization methods present the same constant error. It is striking that, for all the trials, the visual error has more or less the same magnitude and traces the shape of a circumference. If the robot needs to perform one complete turn, there should not be any problem because the final XY localization is correct (less than 10 cm of error, which is an acceptable value). Nevertheless, if the robot only completes a portion of a turn, a translational error appears in the robot localization; it can reach a maximum value of the length of the robot’s body for 180 degrees, or half of the body length for 90 degrees or 270 degrees. Table 9 and Table 10 show this error in detail.

For its part, the odometry algorithm, except for the last trial, presents a good estimation of the position of the robot, with only a few centimeters of error. This error arises because the algorithm treats the turn-in-place maneuver as an ideal turn with no friction or drift. This is not the case: because of the morphology of the robot and the way its legs rotate, some drift is necessary to be able to turn.

With the final results of the previous tests, the authors can confirm that the odometry algorithm estimates the pose of the robot with good accuracy. Reasons to accept the algorithm as valid are that the estimation error does not increase with the distance, and that the estimation is better than that of other sensors such as the T265, which is widely accepted for autonomous navigation with ground and aerial robots.

Regarding the EKF results, on the one hand, the IMU + VO combination does not improve the estimation, so this configuration can be rejected. On the other hand, the complete EKF and the odometry + VO combination reduce the effect of the translational error in a range about the (see Table 11). Finally, the odometry + IMU combination shows an excellent result, drawing a perfect turn-in-place movement.

One possible reason why the visual odometry sensors are losing the localization of the robot is the complex movement of the turn. In each step, the robot rotates, but some vertical displacement is also registered. This vertical displacement grows as the ground sweep angle increases; table shows the average vertical displacement at every step depending on the angle.

Circuit: This test combines the movements studied in the two previous tests. It can be used as a first approach to achieving autonomous navigation for robots with “C”-shaped legs.

The circuit consisted of traversing a rectangle with approximate dimensions of 3 × 2 m. A total of four trials were performed, all of them teleoperated, so the generated path differs slightly from one trial to another. The important point for the study is not to always follow the same path, but rather: first, that the path covered presents the minimum possible error between the Optitrack system and the information from the sensors; second, that the estimated final position is as close as possible to the physical final position.
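The two criteria above (error along the path and error at the final position) can be sketched as follows. This is only an illustrative approach, assuming both trajectories are available as timestamped `(t, x, y)` samples and that the sensor estimate is linearly interpolated at the ground-truth timestamps; the function names and sample values are hypothetical.

```python
import math
from bisect import bisect_left


def interpolate(times, values, t):
    """Linearly interpolate `values` at time `t`; `times` must be strictly increasing."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)  # first index with times[i] >= t
    t0, t1 = times[i - 1], times[i]
    w = (t - t0) / (t1 - t0)
    return values[i - 1] + w * (values[i] - values[i - 1])


def path_errors(ground_truth, estimate):
    """Return (mean path error, final position error) for lists of (t, x, y) samples."""
    est_t = [s[0] for s in estimate]
    est_x = [s[1] for s in estimate]
    est_y = [s[2] for s in estimate]
    distances = []
    for t, x, y in ground_truth:
        ex = interpolate(est_t, est_x, t) - x
        ey = interpolate(est_t, est_y, t) - y
        distances.append(math.hypot(ex, ey))
    return sum(distances) / len(distances), distances[-1]


# Hypothetical samples: (time in s, x in m, y in m).
ground_truth = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 2.0, 0.0)]
estimate = [(0.0, 0.0, 0.0), (2.0, 2.0, 0.2)]

mean_err, final_err = path_errors(ground_truth, estimate)
print(f"mean path error: {mean_err:.3f} m, final error: {final_err:.3f} m")
```

Because the paths were teleoperated and differ between trials, comparing each estimate against the ground truth of the same trial, sample by sample, keeps the metric independent of the exact route taken.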

Figure 26 shows the trajectory followed in each trial. The first three trials were performed with a turning configuration of a ground sweep angle of 0.39 rad and a ground rotation velocity of 0.5 rad/s. In the third trial, the ORB SLAM2 algorithm lost the robot localization. For that reason, a more conservative setting was selected for the fourth trial (ground sweep angle of 0.2 rad and ground rotation velocity of 0.1 rad/s). In both cases, however, the walking straight configuration was 60 degrees and 1.0 rad/s. Table 12 summarizes this information.

Analyzing the results, some conclusions can be drawn at first sight. First, the odometry algorithm failed in all the trials at every turn action, so it needs to be improved to achieve better accuracy when alternating between different types of movement. Second, the translational error described by the visual odometry sensors in the turn in place test is also present in the turns of this test. Even though the translational error exists, there is no problem with the orientation, because the walking straight path is parallel to the path described by the Optitrack system.

Regarding the final position error, there is no great difference between the sensors that would identify one as the best. The ORB SLAM2 algorithm and the T265 camera present very similar results. For the X position, both sensors give an error below 4 cm. Meanwhile, for the Y position, the T265 average error is m and for the ORB SLAM2 (excluding trial 3) the average error is m.

Turning now to the EKF combinations, only the odom+vo configuration helps to reduce the final error along both axes (X, Y), although for trials 2 and 4 there is no real benefit or improvement. In contrast, the imu+vo configuration gives a worse result in all the trials than the Visual SLAM methods by themselves. Finally, the complete EKF presents a performance similar to that of odom+vo, but in this case it is able to correct the predicted trajectory along the path, which is very similar to the one followed by the robot.

Table 13 summarizes all the final errors from this test.