Continual Learning Strategy in One-Stage Object Detection Framework Based on Experience Replay for Autonomous Driving Vehicle

Abstract

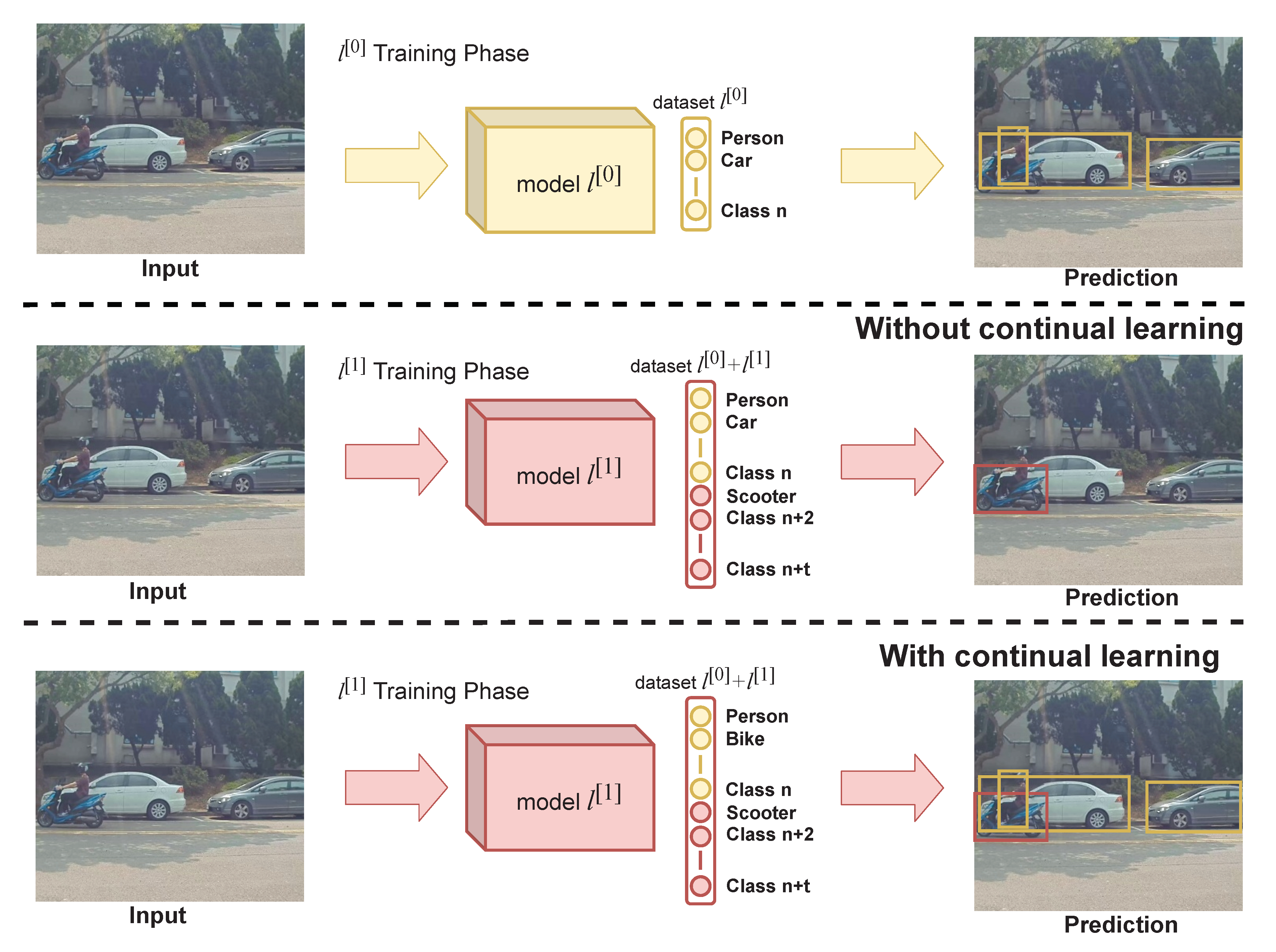

1. Introduction

2. Related Work

3. Proposed Methodology

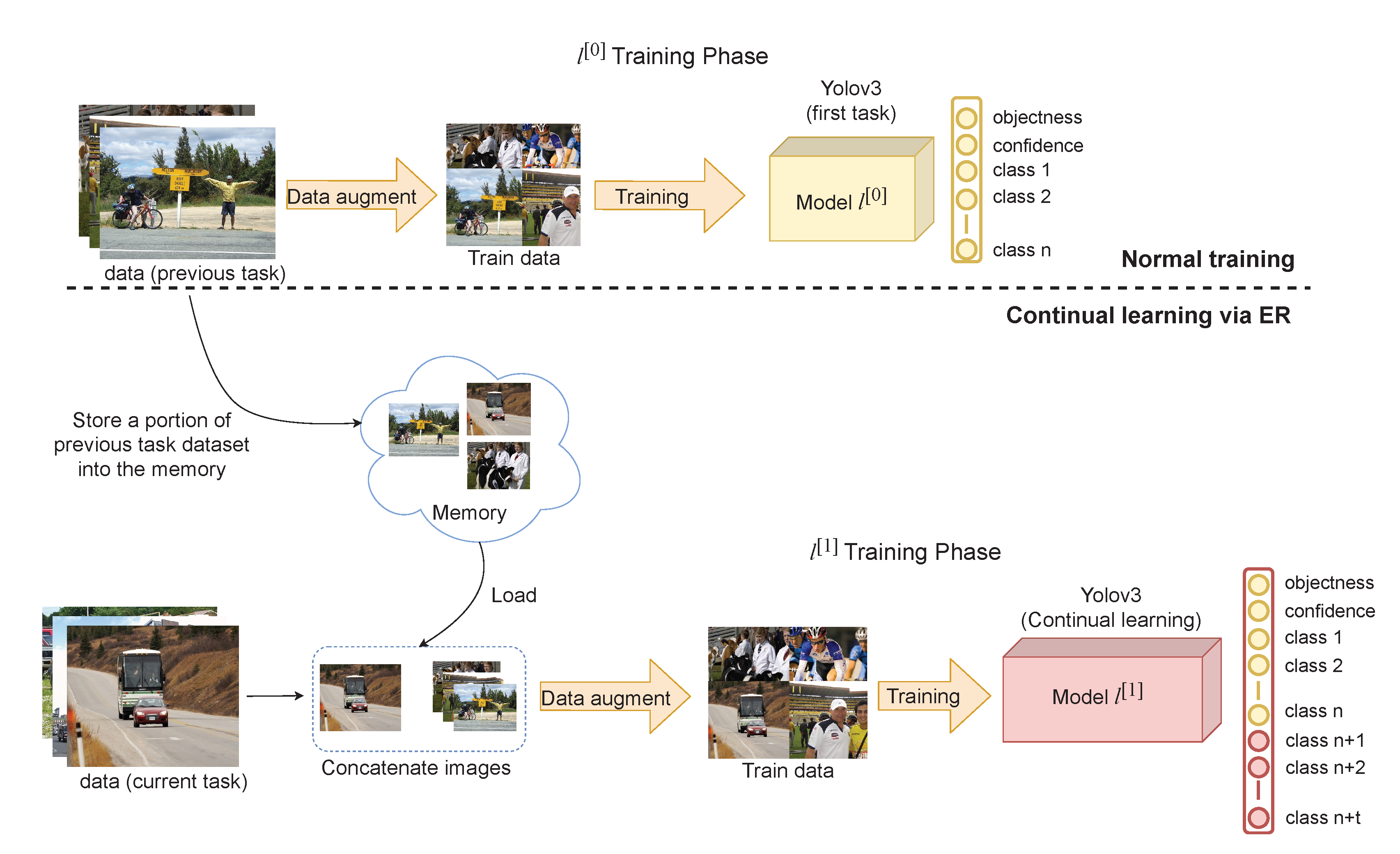

3.1. YOLO Architecture

3.2. Task Distribution and Data Augmentation

3.3. Memory Replay on Continual Learning

Algorithm 1. The proposed continual learning strategy
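
The body of Algorithm 1 is not reproduced here, so the following is only a minimal sketch of generic experience replay, not the authors' exact procedure. It assumes a fixed-capacity memory filled by uniform random selection and mixed into the new-task data before batching; `ReplayMemory`, `store_task`, `mixed_batches`, and the `(image path, annotations)` sample format are all hypothetical names chosen for illustration.

```python
import random
from typing import Iterator, List, Sequence, Tuple

# Hypothetical sample format: (image path, list of box annotations).
Sample = Tuple[str, list]


class ReplayMemory:
    """Fixed-capacity store of samples from previously learned tasks."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.samples: List[Sample] = []
        self._rng = random.Random(seed)

    def store_task(self, task_samples: Sequence[Sample]) -> None:
        """Merge a finished task into memory and keep a random subset.

        Uniform random selection is an assumption made for this sketch.
        """
        pool = self.samples + list(task_samples)
        self._rng.shuffle(pool)
        self.samples = pool[: self.capacity]

    def mixed_batches(
        self, new_samples: Sequence[Sample], batch_size: int
    ) -> Iterator[List[Sample]]:
        """Yield shuffled batches of new-task data mixed with replayed data."""
        pool = list(new_samples) + self.samples
        self._rng.shuffle(pool)
        for start in range(0, len(pool), batch_size):
            yield pool[start : start + batch_size]


# Toy usage: learn task 1, keep 4 samples, then train on task 2 with replay.
memory = ReplayMemory(capacity=4)
task1 = [(f"task1_{i}.jpg", []) for i in range(10)]
task2 = [(f"task2_{i}.jpg", []) for i in range(6)]
memory.store_task(task1)
batches = list(memory.mixed_batches(task2, batch_size=5))
```

Each batch would then be passed to the detector's usual training step, so the old-class samples contribute to the same loss as the new-class samples.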

4. Results

4.1. Performance Evaluation Parameters

4.2. Addition of Classes Incrementally

4.3. Visualization and Effect of Different Memory Size

4.4. Performance Evaluation on ITRI-DriveNet60 Dataset

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADV | Autonomous Driving Vehicles |
| AP | Average Precision |
| BCE | Binary Cross Entropy |
| DMC | Deep Model Consolidation |
| ER | Experience Replay |
| Faster R-CNN | Faster Region Convolution Neural Networks |
| GEM | Gradient Episodic Memory |
| GIOU | Generalized Intersection Over Union |
| ITRI | Industrial Technology Research Institute |
| KD | Knowledge Distillation |
| YOLO | You Only Look Once |

References

- Shmelkov, K.; Schmid, C.; Alahari, K. Incremental Learning of Object Detectors without Catastrophic Forgetting. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3420–3429.

- Ren, B.; Wang, H.; Li, J.; Gao, H. Life-long learning based on dynamic combination model. Appl. Soft Comput. 2017, 56, 398–404.

- Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual lifelong learning with neural networks: A review. Neural Netw. 2019, 113, 54–71.

- Lin, H.Y.; Dai, J.M.; Wu, L.T.; Chen, L.Q. A Vision-Based Driver Assistance System with Forward Collision and Overtaking Detection. Sensors 2020, 20, 5139.

- Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors 2020, 20, 4220.

- Dominguez-Sanchez, A.; Cazorla, M.; Orts-Escolano, S. A new dataset and performance evaluation of a region-based cnn for urban object detection. Electronics 2018, 7, 301.

- Feng, D.; Haase-Schütz, C.; Rosenbaum, L.; Hertlein, H.; Gläser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep Multi-Modal Object Detection and Semantic Segmentation for Autonomous Driving: Datasets, Methods, and Challenges. IEEE Trans. Intell. Transp. Syst. 2020, 1–20.

- Zhao, Z.; Zheng, P.; Xu, S.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Networks Learn. Syst. 2019, 30, 3212–3232.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Yoon, J.; Yang, E.; Lee, J.; Hwang, S.J. Lifelong learning with dynamically expandable networks. arXiv 2017, arXiv:1708.01547.

- Chen, C.P.; Liu, Z. Broad learning system: An effective and efficient incremental learning system without the need for deep architecture. IEEE Trans. Neural Networks Learn. Syst. 2017, 29, 10–24.

- Chen, C.P.; Liu, Z.; Feng, S. Universal approximation capability of broad learning system and its structural variations. IEEE Trans. Neural Networks Learn. Syst. 2018, 30, 1191–1204.

- Xing, Y.; Shen, F.; Zhao, J. Perception evolution network based on cognition deepening model—Adapting to the emergence of new sensory receptor. IEEE Trans. Neural Networks Learn. Syst. 2015, 27, 607–620.

- Lopez-Paz, D.; Ranzato, M.A. Gradient Episodic Memory for Continual Learning. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Long Beach, CA, USA, 2017; pp. 6467–6476.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Zhang, J.; Zhang, J.; Ghosh, S.; Li, D.; Tasci, S.; Heck, L.; Zhang, H.; Jay Kuo, C.C. Class-incremental Learning via Deep Model Consolidation. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 1120–1129.

- Rolnick, D.; Ahuja, A.; Schwarz, J.; Lillicrap, T.; Wayne, G. Experience Replay for Continual Learning. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., Alché-Buc, F.d., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Vancouver, BC, Canada, 2019; pp. 350–360.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015.

- Liu, L.; Kuang, Z.; Chen, Y.; Xue, J.H.; Yang, W.; Zhang, W. Incdet: In defense of elastic weight consolidation for incremental object detection. IEEE Trans. Neural Networks Learn. Syst. 2020.

- MacKay, D.J. A practical Bayesian framework for backpropagation networks. Neural Comput. 1992, 4, 448–472.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection over Union. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16 June–20 June 2019.

- Everingham, M.; Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vision 2010, 88, 303–338.

- Yun, S.; Han, D.; Chun, S.; Oh, S.J.; Yoo, Y.; Choe, J. CutMix: Regularization Strategy to Train Strong Classifiers With Localizable Features. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 6022–6031.

- Zinkevich, M.; Weimer, M.; Li, L.; Smola, A.J. Parallelized stochastic gradient descent. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Vancouver, BC, Canada, 2010; pp. 2595–2603.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., dAlché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Vancouver, BC, Canada, 2019; pp. 8024–8035.

| Task Distribution | Task 1 | Task 2 |
|---|---|---|
| 10 + 10 | 2868 images (4852 objects) | 3252 images (7756 objects) |
| 19 + TV | 4931 images (7918 objects) | 256 images (324 objects) |
| 19 + Person | 4112 images (3552 objects) | 2008 images (4690 objects) |

| Parameters | Value |
|---|---|
| Momentum | 0.9 |
| Batch size | 32 |
| Epoch | 100 |
| Weight decay | 0.0005 |
| Initial learning rate | 0.002 |
| Final learning rate | 0.0005 |
| Image size | 416 × 416 |
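
As a rough illustration of how these settings might be wired into a training script, the sketch below stores the table values in a dictionary and interpolates the learning rate from its initial to its final value over the 100 epochs. The table gives only the two endpoints, so the cosine shape of the decay is an assumption; `HPARAMS` and `learning_rate` are hypothetical names.

```python
import math

# Values copied from the hyperparameter table.
HPARAMS = {
    "momentum": 0.9,
    "batch_size": 32,
    "epochs": 100,
    "weight_decay": 0.0005,
    "lr_initial": 0.002,
    "lr_final": 0.0005,
    "image_size": (416, 416),
}


def learning_rate(epoch: int, hp: dict = HPARAMS) -> float:
    """Learning rate for a zero-based epoch index.

    ASSUMPTION: cosine decay between the two endpoints; the source only
    states the initial and final learning rates, not the schedule shape.
    """
    last_epoch = hp["epochs"] - 1
    progress = min(max(epoch, 0), last_epoch) / last_epoch
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return hp["lr_final"] + (hp["lr_initial"] - hp["lr_final"]) * cosine


# One learning rate per epoch, starting at lr_initial and ending at lr_final.
schedule = [learning_rate(e) for e in range(HPARAMS["epochs"])]
```

A linear or step schedule would satisfy the same endpoints; only the first and last values are grounded in the table.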

| Methods | Aero | Bike | Bird | Boat | Bottle | Bus | Car | Cat | Chair | Cow | Din. Table | Dog | Horse | M Bike | Person | Plant | Sheep | Sofa | Train | TV | mAP at 0.5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All data | 76.5 | 84.4 | 68.9 | 59.3 | 55.6 | 82.1 | 85.9 | 79.9 | 55.4 | 73.7 | 67.8 | 79.9 | 85.2 | 84.4 | 83.1 | 46.1 | 71 | 70.3 | 82.1 | 71.7 | 73.2 |
| KD [1] | 50.2 | 53.0 | 29.8 | 27.5 | 13.3 | 55.4 | 67.2 | 45.5 | 13.3 | 36.9 | 34.2 | 38.7 | 60.7 | 56.3 | 38.5 | 10.5 | 33.8 | 33.0 | 55.6 | 15.8 | 38.4 |
| DMC [17] | 77.2 | 67.8 | 63.5 | 46.5 | 50.2 | 70 | 79.3 | 80.3 | 43.5 | 65.5 | 0.2 | 71.1 | 74.6 | 71.5 | 78.2 | 39.6 | 66.6 | 34.8 | 79.2 | 42.4 | 60.1 |
| GEM [15] | 66.6 | 72.6 | 54.9 | 48.1 | 46.7 | 72.5 | 80.5 | 68.7 | 37.9 | 66.3 | 48.4 | 62.5 | 81 | 72.5 | 67.3 | 33.5 | 68 | 45.8 | 76.9 | 28.4 | 60 |
| ER* | 73.2 | 83 | 62.9 | 56.3 | 53.7 | 81.1 | 84.9 | 77.7 | 48.4 | 69.1 | 63.6 | 78.5 | 85.7 | 82.6 | 80.9 | 43.3 | 67.7 | 60.8 | 79.6 | 39.7 | 68.6 |
| ER** | 76.7 | 83.1 | 62.5 | 56.8 | 53.2 | 83.8 | 85 | 78.1 | 50.1 | 68.6 | 62.1 | 77.4 | 85.6 | 79.7 | 80.7 | 42.8 | 70.6 | 66.2 | 81.1 | 33.3 | 68.9 |
| ER*** | 74.3 | 79.5 | 59.5 | 55.3 | 49.5 | 78.9 | 83.5 | 77.1 | 45.2 | 66 | 57.6 | 73.9 | 85.5 | 80.3 | 78.3 | 40.1 | 68 | 58.1 | 78.7 | 44.7 | 66.7 |
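
The last column of this table appears to be the unweighted mean of the 20 per-class AP values in each row. The short check below recomputes it for two rows as an illustration; the numbers are copied from the table, while `mean_average_precision` is a hypothetical helper name.

```python
from statistics import mean

# Per-class AP (%) copied from the table, in column order (Aero ... TV).
ALL_DATA_AP = [76.5, 84.4, 68.9, 59.3, 55.6, 82.1, 85.9, 79.9, 55.4, 73.7,
               67.8, 79.9, 85.2, 84.4, 83.1, 46.1, 71, 70.3, 82.1, 71.7]
ER2_AP = [76.7, 83.1, 62.5, 56.8, 53.2, 83.8, 85, 78.1, 50.1, 68.6,
          62.1, 77.4, 85.6, 79.7, 80.7, 42.8, 70.6, 66.2, 81.1, 33.3]


def mean_average_precision(per_class_ap):
    """Unweighted mean of per-class AP values (mAP)."""
    return mean(per_class_ap)


map_all = mean_average_precision(ALL_DATA_AP)  # close to the reported 73.2
map_er2 = mean_average_precision(ER2_AP)       # close to the reported 68.9
gap = map_all - map_er2                        # cost of learning incrementally
```

Both recomputed means agree with the reported column to within rounding, which supports reading "mAP at 0.5" as a plain average over classes.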

| Methods | Aero | Bike | Bird | Boat | Bottle | Bus | Car | Cat | Chair | Cow | Din. Table | Dog | Horse | M Bike | Person | Plant | Sheep | Sofa | Train | TV | mAP at 0.5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All data | 76.5 | 84.4 | 68.9 | 59.3 | 55.6 | 82.1 | 85.9 | 79.9 | 55.4 | 73.7 | 67.8 | 79.9 | 85.2 | 84.4 | 83.1 | 46.1 | 71 | 70.3 | 82.1 | 71.7 | 73.2 |
| KD [1] | 10.8 | 10.2 | 36.1 | 9.6 | 6.3 | 5.4 | 14.3 | 41.7 | 3.8 | 19.4 | 42.5 | 53.5 | 76.5 | 66.8 | 57.9 | 27.8 | 60.4 | 50.4 | 72.2 | 46 | 35.5 |
| DMC [17] | 56.6 | 46.5 | 48.7 | 25.7 | 45.5 | 62.9 | 71.5 | 65.7 | 36.8 | 52.4 | 0.6 | 60.1 | 71.2 | 58.2 | 73 | 40.7 | 58.9 | 12.6 | 64 | 66.6 | 50.9 |
| GEM [15] | 3.1 | 3.4 | 3.6 | 6.1 | 19.7 | 6.1 | 58.6 | 9.5 | 55.7 | 14.2 | 0.7 | 7.6 | 4.2 | 2.9 | 23.5 | 0.2 | 1.2 | 2.6 | 3.4 | 0.2 | 11.2 |
| ER* | 67.2 | 74.1 | 47.3 | 44.4 | 44.7 | 71 | 80.1 | 64.5 | 36.9 | 57.1 | 42.2 | 54.9 | 78.5 | 66.7 | 63.5 | 26.3 | 51.4 | 45 | 68.9 | 52.1 | 56.9 |
| ER** | 75.5 | 82 | 58.9 | 51.5 | 49.5 | 78.7 | 84.8 | 74.1 | 47.2 | 65.1 | 61 | 65.4 | 78.7 | 76.6 | 74.6 | 34.6 | 60.6 | 58.4 | 77.8 | 61.9 | 65.5 |
| ER*** | 66.7 | 68.5 | 38 | 45.7 | 40.7 | 69.8 | 81.4 | 60.3 | 30.9 | 52.3 | 67.4 | 60.4 | 81.1 | 76.6 | 79.6 | 43.9 | 61.1 | 61.3 | 77.4 | 64.7 | 61.4 |

| Methods | Aero | Bike | Bird | Boat | Bottle | Bus | Car | Cat | Chair | Cow | Din. table | Dog | Horse | M bike | TV | Plant | Sheep | Sofa | Train | Person | mAP at 0.5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Classes (1–19) | 75.8 | 83.9 | 66.7 | 58.0 | 53.1 | 81.8 | 85.4 | 78.3 | 53.7 | 75 | 66.5 | 78.5 | 84.6 | 83 | 82.7 | 43.5 | 71.4 | 69.4 | 81.2 | - | 68.8 |
| Class (20) | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 64.1 | 64.1 |
| GEM [15] | 61.7 | 65.9 | 42.6 | 37.2 | 34.7 | 65.2 | 68.4 | 59.6 | 30.9 | 50.3 | 45.1 | 54.9 | 65.6 | 65.2 | 52.7 | 23.8 | 52.5 | 50.1 | 62.1 | 50.3 | 51.9 |
| ER | 76.7 | 81 | 58 | 53.2 | 50.7 | 81 | 84.4 | 75 | 47 | 67.6 | 62.4 | 71.8 | 82.3 | 79.7 | 67.7 | 38.8 | 67.4 | 64.9 | 80.8 | 51.2 | 67.1 |

| Methods | mAP(1–10) | mAP(10–20) | mAP |
|---|---|---|---|
| ER** (500) | 42.5 | 64.4 | 53.5 |
| ER** (1000) | 55.4 | 67.4 | 61.4 |
| ER** (2500) | 66.3 | 65.2 | 65.7 |
| ER** (5000) | 66.7 | 65.0 | 65.8 |

| Dataset | Four-Wheel Vehicle | Rider | Two-Wheel Vehicle | Person |
|---|---|---|---|---|
| Train | 3099 images (17,879 objects) | 652 images (1911 objects) | 1146 images (5697 objects) | 726 images (1053 objects) |
| Test | 644 images (3116 objects) | 117 images (311 objects) | 195 images (881 objects) | 128 images (202 objects) |
| Total | 3643 images (20,995 objects) | 769 images (2222 objects) | 1341 images (6578 objects) | 854 images (1255 objects) |

| Methods | Four-wheel vehicle | Rider | Two-wheel vehicle | Person | mAP |
|---|---|---|---|---|---|
| Normal Training | 89.7 | 72 | 80.2 | 66.9 | 77.2 |
| Training | 91.3 | - | - | - | 91.3 |
| Training | 85.2 | 77.3 | 80.2 | 65.6 | 77.1 |

| Methods | Process | Time (ms) |
|---|---|---|
| Normal Training | inference + backpropagation | 2380 |
| KD [1] | inference + backpropagation | 2660 |
| DMC [17] | inference + backpropagation | 2940 |
| GEM [15] | (inference + backpropagation) | 4760 |
| ER | inference + backpropagation + augmentation | 2580 |
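
To make the timing comparison easier to read, the sketch below expresses each method's time as a relative overhead over normal training. The times are copied from the table; the helper `relative_overhead` is a hypothetical name, and the calculation assumes all rows were measured under the same conditions.

```python
# Times in milliseconds, copied from the timing table.
STEP_TIME_MS = {
    "Normal Training": 2380,
    "KD": 2660,
    "DMC": 2940,
    "GEM": 4760,
    "ER": 2580,
}


def relative_overhead(method: str, baseline: str = "Normal Training") -> float:
    """Extra time of `method` as a fraction of the baseline time."""
    return STEP_TIME_MS[method] / STEP_TIME_MS[baseline] - 1.0


# Print the overhead of every continual learning method as a percentage.
for name in ("KD", "DMC", "GEM", "ER"):
    print(f"{name}: {relative_overhead(name):+.1%}")
```

On these figures, ER adds under a tenth to the baseline time, whereas GEM takes exactly twice as long as normal training.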

| Methods | Advantages | Disadvantages |
|---|---|---|
| KD [1] | No memory required and faster training time | Auxiliary data required |
| DMC [17] | No memory required | Three models required to train and auxiliary data required |
| GEM [15] | No memory and no auxiliary data required | Higher training time and lower performance |
| ER | No auxiliary data and faster training time | Memory required |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shieh, J.-L.; Haq, Q.M.u.; Haq, M.A.; Karam, S.; Chondro, P.; Gao, D.-Q.; Ruan, S.-J. Continual Learning Strategy in One-Stage Object Detection Framework Based on Experience Replay for Autonomous Driving Vehicle. Sensors 2020, 20, 6777. https://doi.org/10.3390/s20236777
