Motion-Aware Interplay between WiGig and WiFi for Wireless Virtual Reality

Abstract

1. Introduction

- High image quality: The quality of the VR images shown to a user is the first design factor to consider in the cooperative use of both modules (WiGig and WiFi), so that the user stays immersed in the VR service. Increasing the quality of the encoded images (frames) increases their data size. Therefore, the VR system must be designed to transfer large frames as frequently as possible.

- Regulated latency: When the total latency becomes excessive, Timewarp produces noticeable black borders, which disturb the immersion. Overfilling [11] addresses this problem by rendering an expanded area at the expense of an increased computational load. However, fluctuating latency makes overfilling nonoptimal, since no single overfilling factor can simultaneously minimize both black borders and computational load when the latency varies. Therefore, the cooperative use of both modules should be designed to achieve a given target latency.

- Design of a scheme that predicts the WiGig throughput and the size of the VR frame to be generated, based on awareness of the VR user's motion;

- Design of a joint control mechanism of interface switching and encoding rate adjustment for enhanced VR frame quality and latency regulation;

- Experimental evaluation of the integrated wireless VR system to show the performance gain of the proposed design over various conventional approaches.
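To make the idea of joint control more concrete, the following minimal Python sketch picks the interface with the higher predicted throughput and then caps the frame size so that its transmission time stays within a target latency. It is an illustration under stated assumptions, not the MWIVR algorithm itself: the function name, the `Decision` type, the dictionary of predicted throughputs, and the simple rule "frame size = throughput × target latency" are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    interface: str          # hypothetical label, e.g. "wigig" or "wifi"
    frame_size_bits: float  # frame size the encoder should aim for


def choose_link_and_frame_size(predicted_throughput_bps, nominal_frame_size_bits,
                               target_latency_s):
    """Pick the faster predicted link, then cap the frame size to meet the target latency.

    All inputs are assumed to come from some external predictor; this sketch
    does not model how the predictions are obtained.
    """
    # Interface switching: prefer the link with the higher predicted throughput.
    interface = max(predicted_throughput_bps, key=predicted_throughput_bps.get)
    throughput = predicted_throughput_bps[interface]

    # Encoding rate adjustment: largest frame that fits within the target latency.
    max_frame_size_bits = throughput * target_latency_s
    frame_size_bits = min(nominal_frame_size_bits, max_frame_size_bits)
    return Decision(interface, frame_size_bits)
```

For example, with predicted throughputs of 100 Mb/s on WiGig and 200 Mb/s on WiFi, a 4 Mb nominal frame, and a 10 ms target, the sketch selects WiFi and reduces the frame to about 2 Mb.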

2. Related Work

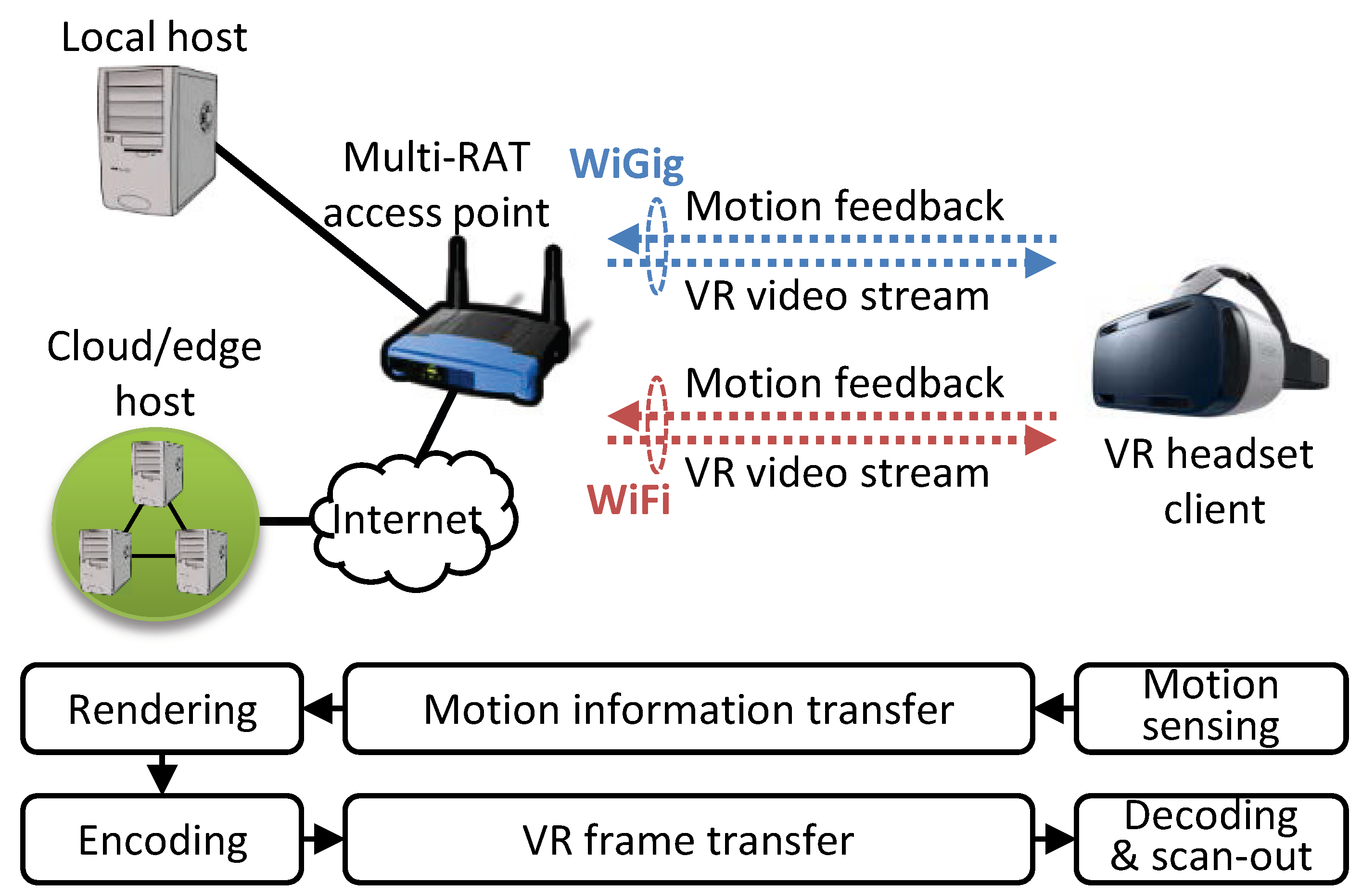

3. System Model

4. Experimental Observations on the Impact of User Motion

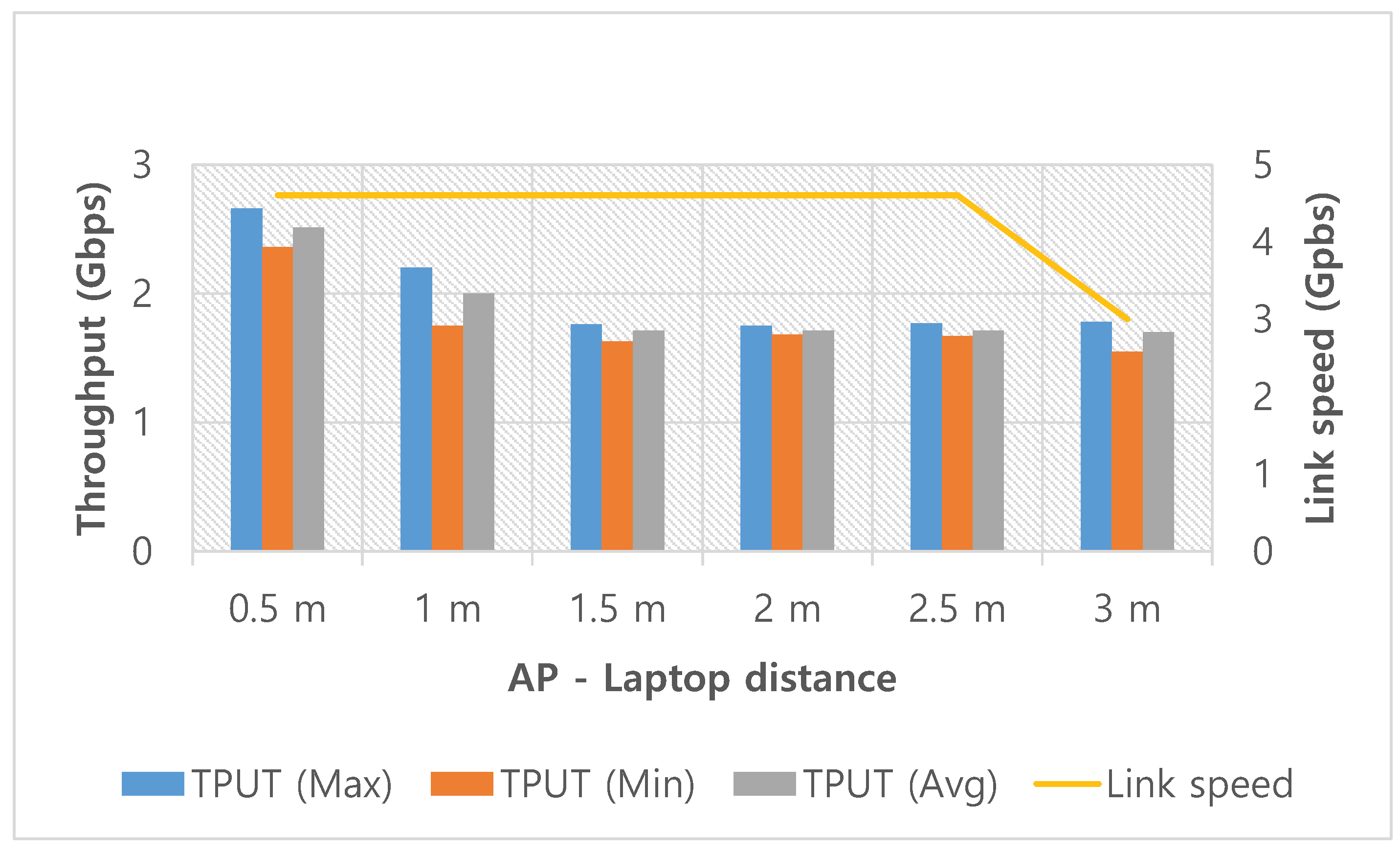

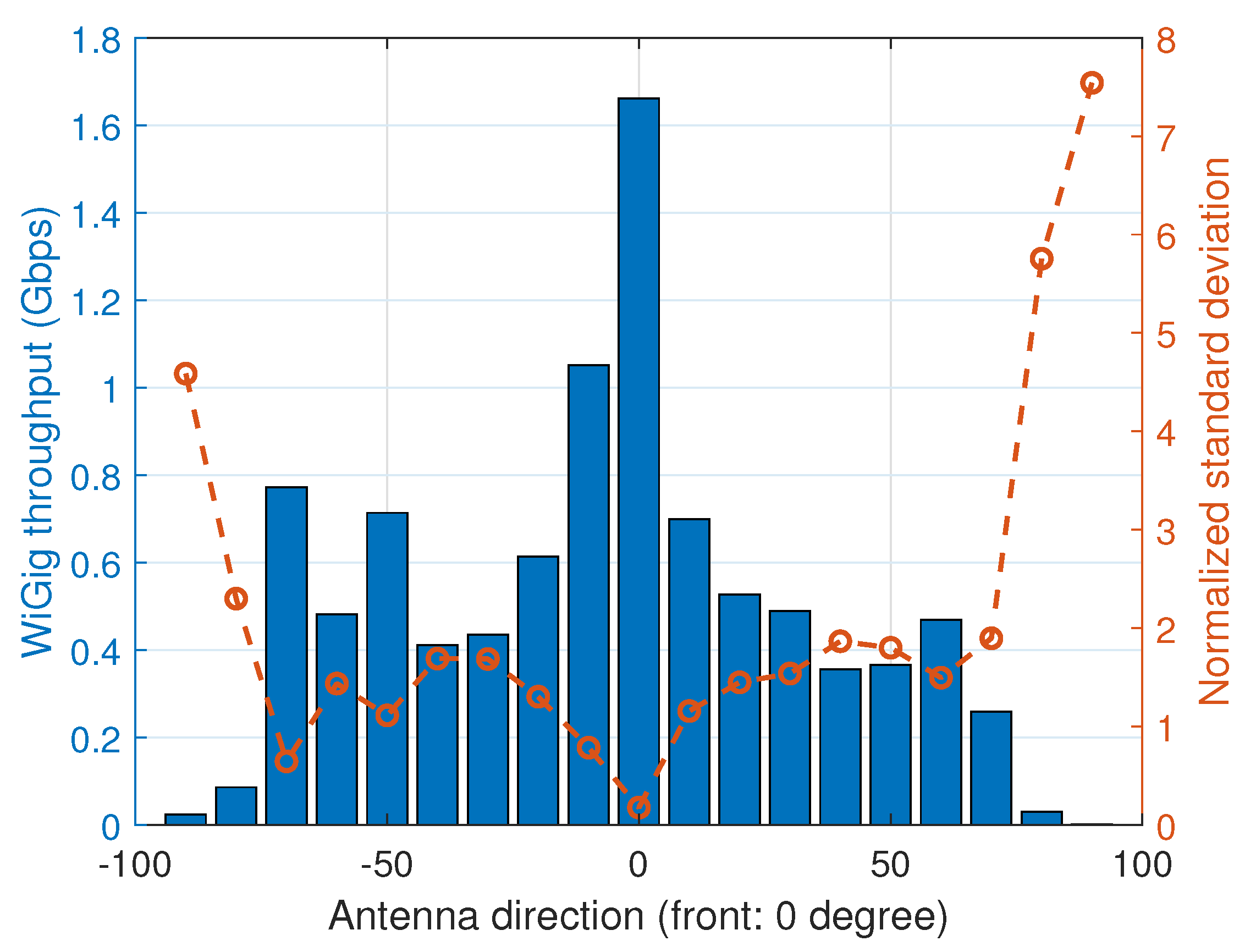

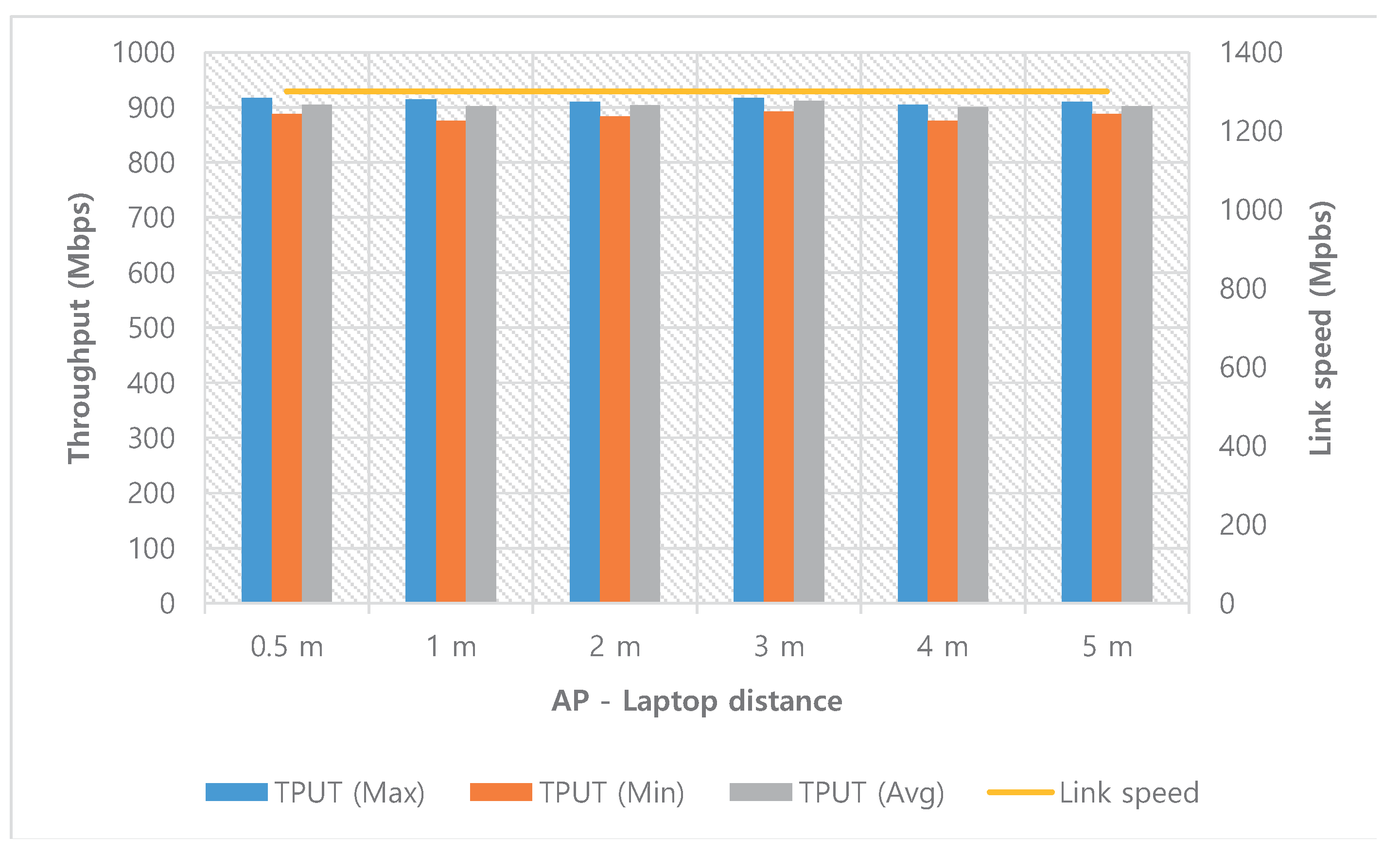

4.1. Impact of User Motion on the Wireless Performance

4.2. Impact of User Motion on VR Traffic

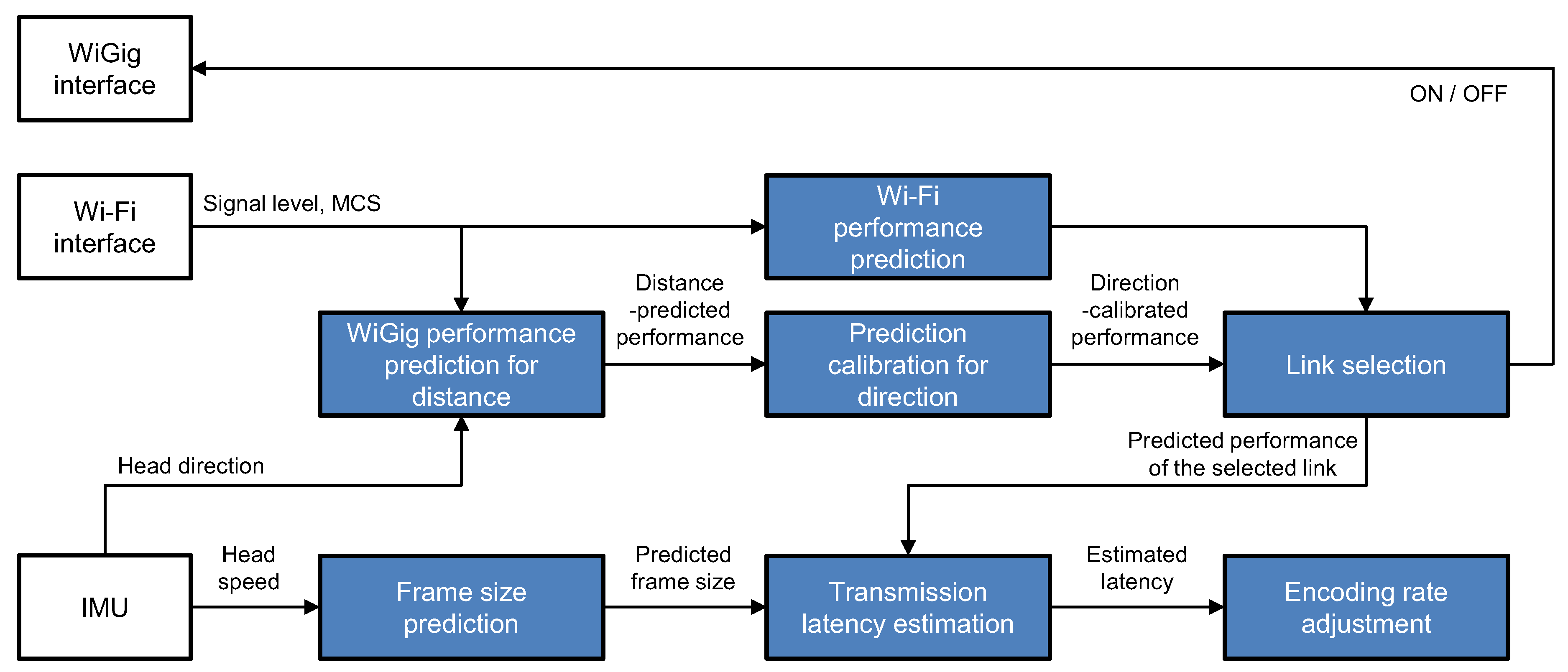

5. MWIVR

5.1. Link Selection

5.2. Encoding Rate Adjustment

6. Performance Evaluation

6.1. Evaluation Configuration

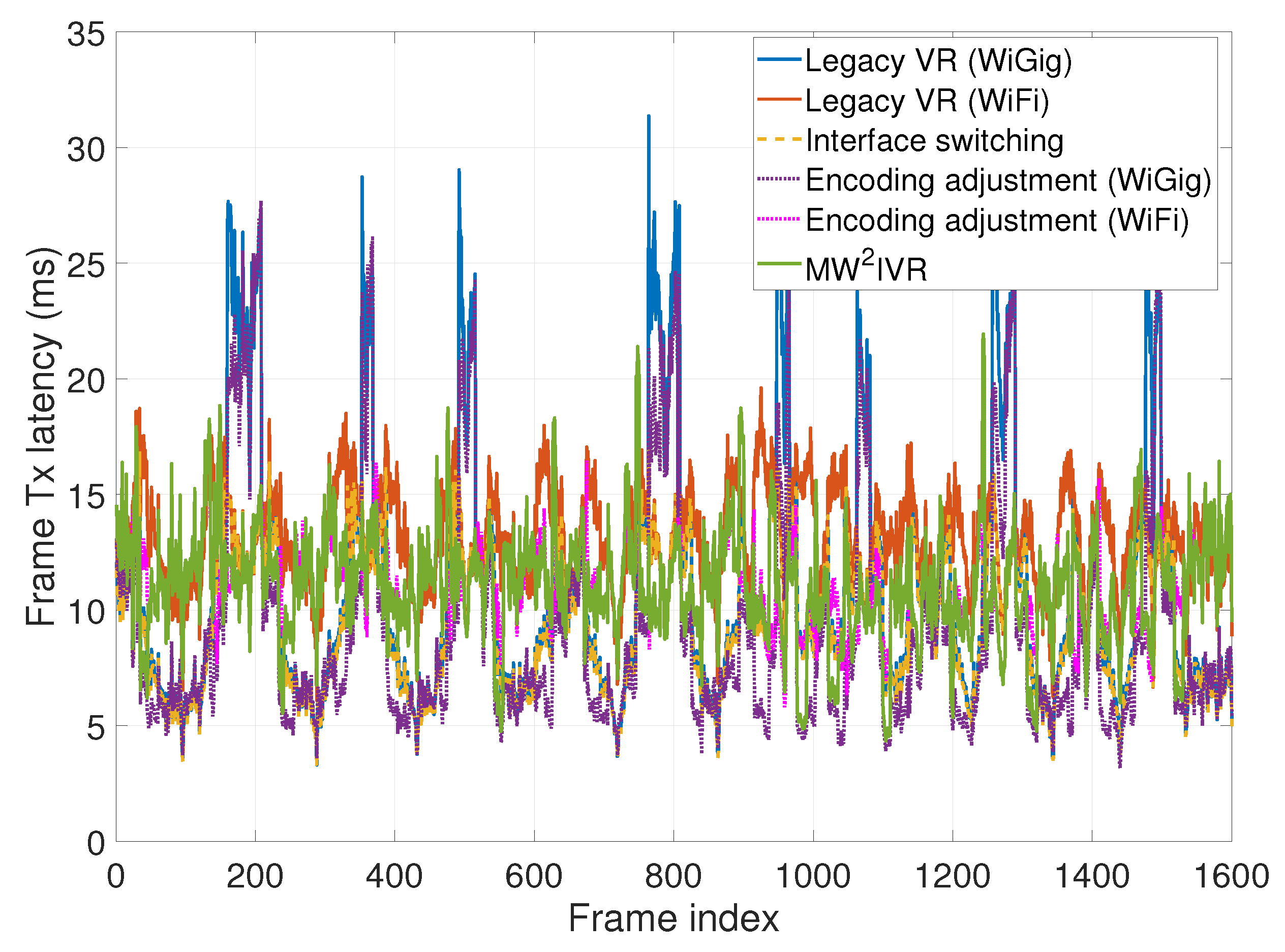

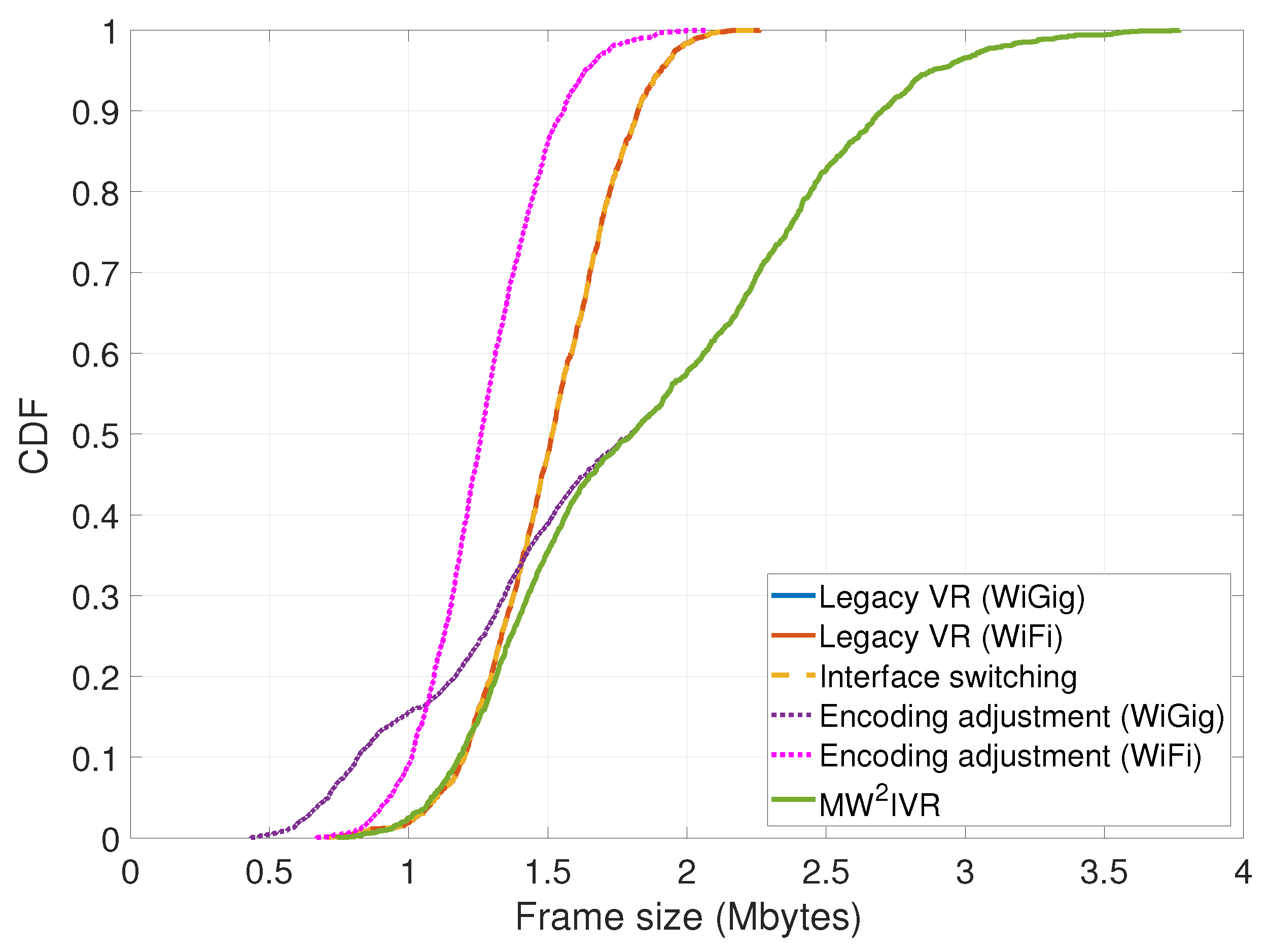

- Legacy VR with a fixed interface: The wireless interface in use does not change during the VR service, and the encoding rate is fixed as well. Both the WiGig-only and WiFi-only cases are considered.

- Interface switching: The system switches between the WiGig and WiFi interfaces during the VR service to obtain higher throughput. The switching algorithm of MWIVR is used without encoding rate adjustment.

- Encoding adjustment: The encoding rate of the VR service is adjusted for latency regulation, but without interface switching. The adjustment algorithm of MWIVR is used and is therefore accompanied by the proposed motion-aware VR traffic prediction scheme. Both the WiGig-only and WiFi-only cases are considered.
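The compared schemes can be summarized as a small configuration table. The Python sketch below encodes the cases listed above, plus MWIVR itself as the scheme that combines both mechanisms. The class, field names, and scheme labels are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scheme:
    name: str               # hypothetical label for the scheme
    interfaces: tuple       # interfaces the scheme may use
    switching: bool         # interface switching during the VR service
    rate_adjustment: bool   # encoding rate adjustment for latency regulation


COMPARED_SCHEMES = [
    Scheme("legacy-wigig", ("wigig",), False, False),
    Scheme("legacy-wifi", ("wifi",), False, False),
    Scheme("switching-only", ("wigig", "wifi"), True, False),
    Scheme("adjustment-wigig", ("wigig",), False, True),
    Scheme("adjustment-wifi", ("wifi",), False, True),
    # MWIVR combines interface switching with encoding rate adjustment.
    Scheme("mwivr", ("wigig", "wifi"), True, True),
]
```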

6.2. Frame Transmission Latency

6.3. Generated Frame Size

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AP | Access Point |
| CDF | Cumulative Distribution Function |
| FOV | Field Of View |
| GOP | Group Of Pictures |
| HMD | Head-Mounted Display |
| IMU | Inertial Measurement Unit |
| MAC | Medium Access Control |
| MCS | Modulation and Coding Scheme |
| PHY | Physical Layer |
| PSNR | Peak Signal-to-Noise Ratio |
| QoE | Quality of Experience |
| RAT | Radio Access Technology |
| RTP | Real-time Transport Protocol |
| VR | Virtual Reality |

References

- LaValle, S.M. Virtual Reality; Cambridge University Press: Cambridge, UK, 2017.

- Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) specifications Amendment 3: Enhancements for Very High Throughput in the 60 GHz Band. IEEE Std. 802.11ad 2012.

- Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) specifications. IEEE Std. 802.11 2016.

- HTC. VIVE Wireless Adapter. Available online: https://www.vive.com/us/accessory/wireless-adapter/ (accessed on 26 November 2020).

- Sur, S.; Venkateswaran, V.; Zhang, X.; Ramanathan, P. 60 GHz Indoor Networking through Flexible Beams: A Link-Level Profiling. In Proceedings of the 2015 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems, Portland, OR, USA, 16–18 June 2015; pp. 71–84.

- Available online: https://www.govinfo.gov/app/details/CFR-2010-title47-vol1/CFR-2010-title47-vol1-sec15-255 (accessed on 27 November 2020).

- Oculus. The Latent Power of Prediction. Available online: https://developer.oculus.com/blog/the-latent-power-of-prediction/ (accessed on 26 November 2020).

- Clicked. onAirVR. Available online: https://onairvr.io (accessed on 26 November 2020).

- Oculus. Asynchronous Timewarp Examined. Available online: https://developer.oculus.com/blog/asynchronous-timewarp-examined/ (accessed on 26 November 2020).

- Nguyen, T.C.; Kim, S.; Son, J.; Yun, J.H. Selective Timewarp Based on Embedded Motion Vectors for Interactive Cloud Virtual Reality. IEEE Access 2019, 7, 3031–3045.

- Taylor, R.M., II. VR Developer Gems: Chapter 32. Virtual Reality System Concepts Illustrated Using OSVR; CRC Press: Boca Raton, FL, USA, 2019.

- Abari, O.; Bharadia, D.; Duffield, A.; Katabi, D. Enabling High-quality Untethered Virtual Reality. In Proceedings of the 14th USENIX Symposium on Networked Systems Design and Implementation (NSDI 17), Boston, MA, USA, 27–29 March 2017; pp. 531–544.

- Kim, J.; Lee, J.J.; Lee, W. Strategic Control of 60 GHz Millimeter-Wave High-Speed Wireless Links for Distributed Virtual Reality Platforms. Mob. Inf. Syst. 2017, 2017, 10.

- Na, W.; Dao, N.; Kim, J.; Ryu, E.S.; Cho, S. Simulation and measurement: Feasibility study of Tactile Internet applications for mmWave virtual reality. ETRI J. 2020, 42, 163–174.

- Available online: https://slideplayer.com/slide/16811209/ (accessed on 27 November 2020).

- Ahn, J.; Kim, Y.Y.; Kim, R.Y. Delay oriented VR mode WLAN for efficient Wireless multi-user Virtual Reality device. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–11 January 2017; pp. 122–123.

- Tan, D.T.; Kim, S.; Yun, J.H. Enhancement of Motion Feedback Latency for Wireless Virtual Reality in IEEE 802.11 WLANs. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019.

- Elbamby, M.S.; Perfecto, C.; Bennis, M.; Doppler, K. Toward Low-Latency and Ultra-Reliable Virtual Reality. IEEE Netw. 2018, 32, 78–84.

- Chen, M.; Saad, W.; Yin, C.; Debbah, M. Data Correlation-Aware Resource Management in Wireless Virtual Reality (VR): An Echo State Transfer Learning Approach. IEEE Trans. Commun. 2019, 67, 4267–4280.

- Guo, F.; Yu, F.R.; Zhang, H.; Ji, H.; Leung, V.C.M.; Li, X. An Adaptive Wireless Virtual Reality Framework in Future Wireless Networks: A Distributed Learning Approach. IEEE Trans. Veh. Technol. 2020, 69, 8514–8528.

- Dang, T.; Peng, M. Joint Radio Communication, Caching, and Computing Design for Mobile Virtual Reality Delivery in Fog Radio Access Networks. IEEE J. Sel. Areas Commun. 2019, 37, 1594–1607.

- Huang, M.; Zhang, X. MAC Scheduling for Multiuser Wireless Virtual Reality in 5G MIMO-OFDM Systems. In Proceedings of the 2018 IEEE International Conference on Communications Workshops (ICC Workshops), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- Li, Y.; Gao, W. DeltaVR: Achieving High-Performance Mobile VR Dynamics through Pixel Reuse. In Proceedings of the 18th International Conference on Information Processing in Sensor Networks, Montreal, QC, Canada, 16–18 April 2019; pp. 13–24.

- El-Ganainy, T.; Hefeeda, M. Streaming Virtual Reality Content. arXiv 2016, arXiv:1612.08350. Available online: http://arxiv.org/abs/1612.08350v1 (accessed on 26 November 2020).

- Nguyen, T.C.; Yun, J.H. Predictive Tile Selection for 360-Degree VR Video Streaming in Bandwidth-Limited Networks. IEEE Commun. Lett. 2018, 22, 1858–1861.

- Zhang, Z.; Ma, Z.; Sun, Y.; Liu, D. Wireless Multicast of Virtual Reality Videos With MPEG-I Format. IEEE Access 2019, 7, 176693–176705.

- Kotaru, M.; Katti, S. Position Tracking for Virtual Reality Using Commodity WiFi. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2671–2681.

- Li, J.; Feng, R.; Liu, Z.; Sun, W.; Li, Q. Modeling QoE of Virtual Reality Video Transmission over Wireless Networks. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, UAE, 9–13 December 2018; pp. 1–7.

- Sur, S.; Pefkianakis, I.; Zhang, X.; Kim, K.H. WiFi-Assisted 60 GHz Wireless Networks. In Proceedings of the 23rd Annual International Conference on Mobile Computing and Networking, Snowbird, UT, USA, 16–20 October 2017; pp. 28–41.

- Saputra, Y.M.; Yun, J.H. E-MICE: Energy-Efficient Concurrent Exploitation of Multiple Wi-Fi Radios. IEEE Trans. Mobile Comput. 2017, 16, 4355–4370.

- VirtualHere. VirtualHere. Available online: https://www.virtualhere.com/ (accessed on 26 November 2020).

- MulticoreWare. x265 HEVC Encoder / H.265 Video Codec. Available online: http://x265.org/ (accessed on 26 November 2020).

- iPerf. Available online: https://iperf.fr/ (accessed on 26 November 2020).

- Linux Driver for the 802.11ad Wireless card by Qualcomm. 2020. Available online: https://wireless.wiki.kernel.org/en/users/drivers/wil6210 (accessed on 26 November 2020).

- ADpower. Wattman power consumption analyzer (HPM-100A). Available online: http://adpower21.com (accessed on 26 November 2020).

- Great Power Demo. Available online: https://forums.oculusvr.com/developer/discussion/3507/release-great-power/ (accessed on 26 November 2020).

| Target Bit Rate (%) | PSNR (dB) | Target Bit Rate (%) | PSNR (dB) |
|---|---|---|---|
| 100 | - | | |
| 83 | 46.06025 | 27 | 42.0448 |
| 67 | 45.36735 | 17 | 40.1181 |
| 50 | 44.38812 | 10 | 39.7944 |
| 33 | 42.9074 | 3 | 31.6792 |
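For readers unfamiliar with the metric, PSNR is conventionally computed as 10·log10(MAX² / MSE), where MSE is the mean squared error between a reference image and a distorted one. The short Python sketch below implements that standard definition for flat sequences of pixel values. The function name and the default peak value of 255 (8-bit samples) are assumptions, and the sketch says nothing about how the values in the table above were actually measured.

```python
import math


def psnr_db(reference, distorted, max_value=255):
    """PSNR in dB between two equally sized sequences of pixel values."""
    if len(reference) != len(distorted) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel positions.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10 * math.log10(max_value ** 2 / mse)
```

Note that identical inputs give an unbounded PSNR, so an image compared against itself has no finite value to report.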

| Quality | Frame Size (%) |
|---|---|
| 100 | 100 |
| 80 | 84.1 |
| 50 | 61.8 |
| 30 | 48.7 |
| 10 | 39.1 |
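As a rough illustration of how such a table could be used, the Python sketch below estimates the relative frame size for an intermediate quality setting by linear interpolation between the five tabulated points (quality 10, 30, 50, 80, 100 mapping to 39.1, 48.7, 61.8, 84.1, 100%). Linear interpolation and the function name are assumptions made for this example; the actual relation between the tabulated points is not given.

```python
# (quality, relative frame size in %) pairs copied from the table, ascending by quality.
QUALITY_TO_FRAME_SIZE = [(10, 39.1), (30, 48.7), (50, 61.8), (80, 84.1), (100, 100.0)]


def frame_size_percent(quality):
    """Linearly interpolate the relative frame size (%) for a quality in [10, 100]."""
    points = QUALITY_TO_FRAME_SIZE
    if not points[0][0] <= quality <= points[-1][0]:
        raise ValueError("quality outside the tabulated range")
    # Find the pair of neighbouring table rows that brackets the requested quality.
    for (q0, s0), (q1, s1) in zip(points, points[1:]):
        if q0 <= quality <= q1:
            return s0 + (s1 - s0) * (quality - q0) / (q1 - q0)
```

Under this assumption, a quality of 40 falls halfway between the rows for 30 and 50 and yields roughly 55% of the full frame size.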

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, S.; Yun, J.-H. Motion-Aware Interplay between WiGig and WiFi for Wireless Virtual Reality. Sensors 2020, 20, 6782. https://doi.org/10.3390/s20236782
