1. Introduction

Sensor detection is undergoing a transition from an independent style to a cooperative style, and sensor networks are finding an increasing number of applications in areas such as the Internet of Things [1], environmental monitoring [2], cooperative radar detection [3] and autonomous driving [4]. According to their communication topologies, sensor networks are classified into centralized networks and distributed networks [5]. A centralized network needs a stable central node with excellent communication performance, which is not yet realistic in large-scale networks. Therefore, many researchers are focusing on distributed sensor networks [6,7].

Multiple nodes in a distributed sensor network can simultaneously detect the target and obtain noisy information about its state. Each node communicates with its adjacent nodes. By using a distributed estimation algorithm, each node obtains a global estimation that is more accurate than that of a single sensor. A distributed sensor network does not need a high-performance central node and is much more easily extended to a large scale. In short, this kind of sensor network has high stability and robustness and a low sensor cost [8]. Therefore, it is worthwhile to study distributed sensor networks. There are two main classes of existing distributed Kalman filters, namely consensus-based algorithms and diffusion-based algorithms.

The idea of consensus-based algorithms is to obtain the global average measurement, or the global average information vector and information matrix, by running an average consensus algorithm [9,10]. Olfati-Saber first proposed a consensus-based distributed algorithm, called the Kalman consensus filter [11]. It obtains the mean value of the global information vectors and information matrices after infinitely many iterations, and thereby achieves the same globally optimal estimation as a centralized filter. In [12], the authors address consensus on the information vector and information matrix, which requires infinitely many iterations to achieve the optimal estimation. To handle the correlation of estimations, the authors of [13] propose the information consensus filter (ICF), which fuses the prior information with low weights together with the measurement information of adjacent nodes. To handle local unobservability, a finite-time consensus-based distributed estimator based on the max-consensus technique is proposed in [14]. In [15,16], the authors propose gossip-based algorithms that adopt a random communication strategy to achieve consensus. Compared with consensus-based algorithms, gossip-based algorithms can guarantee consensus with a lower bandwidth, at the cost of a slower convergence speed. Consensus-based algorithms have the same estimation accuracy as the centralized filter, but global consensus is only achieved asymptotically.

Diffusion-based algorithms exchange intermediate estimations within the neighborhood of each node and calculate the final estimation as a convex combination of the information from neighbors. In [17,18], the authors obtain the sum of the global measurement information in a finite number of communication cycles through the diffusion of measurement information, and they propose a finite-time distributed Kalman filter (FT-DKF). In [19], the authors extend the algorithm proposed in [17] to cyclic graphs. The work presented in [20] was the first to propose a distributed estimation fusion filter based on diffusion. The accuracy of diffusion-based algorithms depends on the selection of the convex combination coefficients. For this reason, the authors of [21] discuss the optimal selection of these coefficients and formulate it as a constrained optimization problem. In [22], the authors propose the cost-effective diffusion Kalman filter (CE-DKF), which diffuses state estimations and their estimation covariances and thereby improves performance. The work presented in [23] was the first to apply the covariance intersection (CI) method to the diffusion-based distributed Kalman filtering problem, addressing the correlation of system noise between sensors. In [24], the authors use local CI to improve local estimation performance. In [25], the authors propose a distributed Kalman filter that avoids diffusing raw data and maintains estimation accuracy by resorting to maximum a posteriori state estimation. In [26], the results of [25] are improved upon and the distributed hybrid information fusion (DHIF) algorithm is proposed. The partial diffusion Kalman filter (PDKF) is proposed in [27] for the state estimation of linear dynamic systems. By sending only part of the estimation vector at each iteration, PDKF reduces internode communication. In [28], the authors study the performance of PDKF for networks with noise. In summary, diffusion-based algorithms improve the convergence speed of consensus, but at the cost of reduced estimation accuracy.

From the above review, we can see that diffusion-based algorithms have both advantages and shortcomings. Therefore, in this paper, we aim to design a diffusion distributed Kalman filter for sensor networks. The sensor network considered is time-invariant and strongly connected. Each sensor node has its own information and the information from adjacent sensors. With the proposed distributed Kalman filter, the estimations of all nodes contain the global information and reflect the real state of the target after a finite number of communication iterations.

First, a non-repeated diffusion strategy is introduced. The usual diffusion strategy is bi-directional, which means that information can diffuse back to the node it came from. The non-repeated diffusion strategy removes the information received from a node before sending messages to that node, so that the information is never diffused back. This strategy makes information diffuse in only one direction, even in an undirected graph. Second, we apply the non-repeated diffusion strategy to the diffusion-based distributed Kalman filter and propose a new distributed Kalman filter in which the coefficients of the convex combinations are obtained by CI. The non-repeated diffusion strategy prevents sensors from repeatedly fusing the same estimation. Repeated fusion could otherwise cause some estimations to be over-weighted and bias the final estimation results toward them. Compared with existing distributed Kalman filters, the proposed algorithm achieves a lower estimation error. In addition, we show that the algorithm also performs well in other respects, including communication bandwidth requirements, communication frequency requirements, applicability to different topologies and robustness to local unobservability. A target-tracking simulation example is provided to verify the performance of the distributed Kalman filter based on the non-repeated diffusion strategy.

The rest of the paper is organized as follows. In Section 2, the system model and a diffusion distributed Kalman filter based on the non-repeated diffusion strategy are introduced. The results of the algorithm are shown in Section 3, which verifies the effectiveness of the algorithm. Section 4 discusses these results and describes the application scenarios of the algorithm. Section 5 concludes the paper.

2. Diffusion Distributed Kalman Filtering Algorithms

2.1. System Model

In this study, the sensor network is represented by a graph $G = (V, E)$. Here, $V = \{1, 2, \ldots, N\}$ is the set of sensor nodes, $N$ is the number of nodes in the sensor network and $E$ is the set of communication channels (edges) between sensors. A graph is called an undirected graph if it consists of undirected edges. If an undirected edge $(i, j) \in E$ exists, node $i$ and node $j$ are neighbors and can communicate with each other. We use $\mathcal{N}_i = \{ j \in V : (i, j) \in E \}$ to represent the set of adjacent nodes connected to node $i$. When there is a path between each pair of different nodes $i$ and $j$, the graph is called a connected graph. A path is called a cyclic path if it starts from node A, reaches node B and returns to node A through a different node C. If a graph is connected and has no cyclic path, it is called an acyclic graph (tree graph). We use $d(i, j)$ to denote the length of the shortest path between nodes $i$ and $j$. The diameter of the graph $G$ is $d = \max_{i, j \in V} d(i, j)$, the length of the longest of these shortest paths.
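The graph quantities defined above can all be computed with breadth-first search: the neighbor sets, acyclicity, the shortest-path lengths $d(i, j)$ and the diameter $d$. The following Python sketch is illustrative only. Nodes are numbered from 0 rather than 1, the helper names are hypothetical, and the 6-node tree is an arbitrary example rather than the network used in Section 3. `is_tree` relies on the standard fact that a connected graph with $N$ nodes is acyclic if and only if it has exactly $N - 1$ edges.

```python
from collections import deque


def neighbor_sets(n_nodes, edges):
    """Build the adjacency sets N_i of an undirected graph with nodes 0..n_nodes-1."""
    nbrs = {i: set() for i in range(n_nodes)}
    for i, j in edges:
        nbrs[i].add(j)
        nbrs[j].add(i)
    return nbrs


def bfs_distances(nbrs, source):
    """Shortest-path lengths d(source, j) to every reachable node j (breadth-first search)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in nbrs[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def is_tree(nbrs):
    """Connected and exactly N - 1 edges  <=>  connected and acyclic."""
    n_edges = sum(len(s) for s in nbrs.values()) // 2
    connected = len(bfs_distances(nbrs, next(iter(nbrs)))) == len(nbrs)
    return connected and n_edges == len(nbrs) - 1


def diameter(nbrs):
    """d = max over node pairs of d(i, j); assumes a connected graph."""
    return max(max(bfs_distances(nbrs, i).values()) for i in nbrs)


# Hypothetical 6-node tree used only for illustration.
nbrs = neighbor_sets(6, [(0, 1), (1, 2), (1, 3), (3, 4), (3, 5)])
print(is_tree(nbrs), diameter(nbrs))  # True 3
```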

For the distributed estimation problem under this sensor network, we consider the following target state model:

$$x_{t+1} = F x_t + \Gamma w_t, \qquad (1)$$

where $x_t$ is the state vector, $F$ is the state transition matrix and $\Gamma$ is a noise coefficient matrix. $w_t$ is a system noise vector, which is zero-mean Gaussian white noise with covariance matrix $Q$. Each node of the sensor network can observe the target, and the measurement equation of node $i$ is

$$z_t^i = H^i x_t + v_t^i, \qquad (2)$$

where $z_t^i$ is the measurement vector of sensor $i$ and $H^i$ is its observation matrix. $v_t^i$ is a measurement noise vector, which is zero-mean Gaussian white noise with covariance matrix $R^i$. The cross-covariance matrix of the system noise $w_t$ and the measurement noise $v_t^i$ is given by Equation (3). The cross-covariance matrix of the measurement noises $v_t^i$ and $v_t^j$ is given by Equation (4).

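To facilitate the expression, we introduce the following notation:

- $z_t^i$ is the measurement of the target by node $i$ at time $t$.
- $\hat{x}_{t|t-1}^i$ is the state prediction at time $t$ based on the measurements available to sensor $i$ up to time $t-1$.
- $P_{t|t-1}^i$ is the estimation error covariance matrix of $\hat{x}_{t|t-1}^i$.
- $\hat{x}_{t|t}^i$ and $P_{t|t}^i$ are defined in the same way, based on the measurements up to time $t$.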
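The sketch below makes Equations (1) and (2) concrete by drawing one trajectory of the target model together with the measurements of several nodes. It assumes a two-dimensional constant-velocity model with sampling period $T = 1$, state ordering $[p_x, v_x, p_y, v_y]$ and position-only observation matrices. These matrices are illustrative choices, not the values used in Section 3.

```python
import numpy as np

T = 1.0                                    # hypothetical sampling period
F1 = np.array([[1.0, T], [0.0, 1.0]])      # per-axis constant-velocity block
G1 = np.array([[T**2 / 2], [T]])           # per-axis acceleration input
F = np.kron(np.eye(2), F1)                 # state x = [px, vx, py, vy] (assumed ordering)
Gamma = np.kron(np.eye(2), G1)             # 4 x 2 noise coefficient matrix
H = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.0]])       # every node observes position (assumed)


def simulate(x0, Q, R_list, steps, rng):
    """Draw x_{t+1} = F x_t + Gamma w_t and z_t^i = H x_t + v_t^i for every node i."""
    xs, zs = [], []
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        xs.append(x)
        zs.append([H @ x + rng.multivariate_normal(np.zeros(2), R) for R in R_list])
        w = rng.multivariate_normal(np.zeros(2), Q)
        x = F @ x + Gamma @ w
    return np.array(xs), zs


rng = np.random.default_rng(0)
xs, zs = simulate([0.0, 1.0, 0.0, 1.0], 0.01 * np.eye(2), [np.eye(2)] * 3, 50, rng)
print(xs.shape, len(zs), len(zs[0]))  # (50, 4) 50 3
```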

2.2. A New Diffusion Distributed Kalman Filter

In this subsection, we propose a new diffusion distributed Kalman filter. First, we introduce the seminal diffusion distributed Kalman filter [20], which is summarized in Algorithm 1. In Algorithm 1, $\psi_t^i$ is the estimation of node $i$ based on its local information. $\mathcal{J}_i = \mathcal{N}_i \cup \{i\}$ is the set that contains node $i$ and all adjacent nodes of node $i$.

| Algorithm 1: The seminal diffusion distributed Kalman filter. |

For the node and node ,

Initialize with:

Local update:

,

,

,

;

Communication and fusion update:

Send to adjacent node i,

where

. |

The algorithm fuses the estimations of adjacent nodes. This increases information exchange and improves the estimation accuracy compared with a single-sensor Kalman filter. The shortcoming of this algorithm is that it does not process the estimation error covariance. Therefore, $P_{t|t}^i$ in Algorithm 1 is not the accurate estimation error covariance of the fused estimation $\hat{x}_{t|t}^i$, which reduces the estimation accuracy of Algorithm 1. In this work, we diffuse and fuse the estimation error covariance to improve Algorithm 1.
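The local update and convex-combination structure of Algorithm 1 can be illustrated as follows. This is a minimal sketch under two assumptions. First, each node performs a standard Kalman measurement update with its own measurement. Second, the combination weights are equal, $c_{ij} = 1/|\mathcal{J}_i|$, where $\mathcal{J}_i$ is the set containing node $i$ and its neighbors. The function names are hypothetical, and the sketch is not a transcription of Algorithm 1 in [20]. As discussed above, only the estimates are combined; the covariance returned by the local update is left unchanged.

```python
import numpy as np


def kf_predict(x, P, F, Gamma, Q):
    """Time update: x_{t+1|t} = F x_{t|t}, P_{t+1|t} = F P F^T + Gamma Q Gamma^T."""
    return F @ x, F @ P @ F.T + Gamma @ Q @ Gamma.T


def kf_local_update(x_pred, P_pred, z, H, R):
    """Measurement update of node i with its own measurement only."""
    S = H @ P_pred @ H.T + R
    K = P_pred @ H.T @ np.linalg.inv(S)
    psi = x_pred + K @ (z - H @ x_pred)
    P_psi = (np.eye(len(x_pred)) - K @ H) @ P_pred
    return psi, P_psi


def diffusion_combine(psi, nbrs, i):
    """Convex combination over J_i = N_i U {i}; equal weights are an assumption."""
    J_i = sorted(nbrs[i] | {i})
    return sum(psi[j] for j in J_i) / len(J_i)


# Scalar example: prior 0 with variance 1, measurement 2 with variance 1.
psi0, P0 = kf_local_update(np.array([0.0]), np.eye(1), np.array([2.0]), np.eye(1), np.eye(1))
print(psi0, P0)  # [1.] [[0.5]]
```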

In general, due to the process noise and complex sensor interactions, the cross-covariance between different estimations is unknown. In the case of unknown cross-covariance, we can use CI [25], the ellipsoidal intersection (EI) method [29] or the information sharing principle [30], among others. The information sharing principle requires the covariance of the global estimation to be distributed to each node in a certain proportion, which leads to a heavy communication burden. From Algorithm 1, we know that the diffusion-based distributed Kalman filtering algorithm computes a convex combination of the estimations of adjacent nodes. Similarly, the EI and CI methods obtain more accurate estimations by adjusting the convex combination coefficients of multiple estimates. From this perspective, we can apply the EI and CI methods to extend the diffusion-based distributed filtering algorithm. However, the EI method has some disadvantages compared with the CI method:

- Although the EI method can provide a more accurate estimation, its result is not guaranteed to be consistent.
- Whether to use the EI method is an engineering decision.
- The calculation process of the EI method is complex, which makes it inconvenient for describing the algorithm proposed in this paper.

Therefore, we use the CI method to fuse estimations, as described in Algorithm 2.

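In Algorithm 2, $P_{\psi,t}^i$ is the estimation error covariance of $\psi_t^i$. The CI weight coefficients $\omega^i$, $i \in V$, solve

$$\min_{\omega^1, \ldots, \omega^N} \operatorname{tr}\left[ \left( \sum_{i=1}^{N} \omega^i \left(P_{\psi,t}^i\right)^{-1} \right)^{-1} \right] \quad \text{s.t.} \quad \sum_{i=1}^{N} \omega^i = 1, \; \omega^i \ge 0, \qquad (5)$$

with $\operatorname{tr}(\cdot)$ being the trace function. Equation (5) is a nonlinear optimization problem whose computational cost is usually too high for sensors. To reduce the computational cost, the weights can be set to their mean value, $\omega^i = 1/N$.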
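The CI fusion used in Algorithm 2 and the cost in Equation (5) can be sketched as below. The sketch assumes the standard CI form $P^{-1} = \sum_i \omega^i (P^i)^{-1}$ and $\hat{x} = P \sum_i \omega^i (P^i)^{-1} \psi^i$, where $\psi^i$ and $P^i$ are the local estimates and their covariances. For two estimates, the weight is found by a simple grid search over $[0, 1]$; this is one possible (hypothetical) way to solve Equation (5), not necessarily the solver used in the paper. `mean_weights` corresponds to the low-cost equal-weight choice.

```python
import numpy as np


def ci_fuse(estimates, covs, weights):
    """CI fusion: P^{-1} = sum_i w_i P_i^{-1},  x = P sum_i w_i P_i^{-1} x_i."""
    infos = [w * np.linalg.inv(P) for w, P in zip(weights, covs)]
    P_ci = np.linalg.inv(sum(infos))
    x_ci = P_ci @ sum(Y @ x for Y, x in zip(infos, estimates))
    return x_ci, P_ci


def ci_weights_two(P_a, P_b, grid=1001):
    """Minimise tr(P_ci) over w in [0, 1] for two estimates by a simple grid search."""
    def trace_of(w):
        return np.trace(np.linalg.inv(w * np.linalg.inv(P_a) + (1 - w) * np.linalg.inv(P_b)))
    ws = np.linspace(0.0, 1.0, grid)
    w_best = ws[np.argmin([trace_of(w) for w in ws])]
    return np.array([w_best, 1.0 - w_best])


def mean_weights(n):
    """Low-cost choice: equal weights 1/n instead of solving the optimisation."""
    return np.full(n, 1.0 / n)


P_a, P_b = np.diag([1.0, 4.0]), np.diag([4.0, 1.0])
x_a, x_b = np.array([0.0, 0.0]), np.array([1.0, 1.0])
x_ci, P_ci = ci_fuse([x_a, x_b], [P_a, P_b], mean_weights(2))
print(x_ci, np.trace(P_ci))
```

For the two mirror-image covariances in this example, the optimal weight is 0.5, so the equal weights and the optimized weights coincide. The fused trace (3.2) is smaller than the trace of either input (5.0).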

| Algorithm 2: A centralized Kalman filter based on the covariance intersection (CI) method. |

For the node ,

Initialize with:

Local update:

,

,

,

Fusion update:

,

,

where is calculated by Equation (5). |

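The error covariance of the estimation calculated by CI is approximate, so the result of Algorithm 2 is also approximate. However, CI provides a conservative result, which guarantees that the accuracy in a specific direction is not lower than that of any node in this direction. The estimation of Algorithm 2 and the optimal estimation satisfy

$$P_{t|t} \succeq P_{t|t}^{\mathrm{opt}}, \qquad (6)$$

where:

- $P_{t|t}$ is the error covariance of the CI-fused estimation;
- $P_{t|t}^{\mathrm{opt}}$ is the error covariance of the optimal (centralized Kalman filter) estimation;
- $A \succeq B$ means that $A - B$ is positive semidefinite.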
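The conservativeness of CI can be checked numerically. The sketch below fuses two estimates whose errors are correlated through a hypothetical cross-covariance that the fusion rule does not know. It computes the true error covariance of the CI estimate for several weights and checks that $P_{\mathrm{CI}}$ minus that true covariance is positive semidefinite. The covariance values and the correlation factor 0.8 are arbitrary illustrative choices.

```python
import numpy as np


def ci_true_error_cov(P0, P1, C01, w):
    """CI estimate x = G0 x0 + G1 x1 with weight w; return (P_ci, true error covariance)
    when the errors of x0 and x1 have covariances P0, P1 and cross-covariance C01."""
    A0, A1 = w * np.linalg.inv(P0), (1 - w) * np.linalg.inv(P1)
    P_ci = np.linalg.inv(A0 + A1)
    G0, G1 = P_ci @ A0, P_ci @ A1          # G0 + G1 = I, so the fusion is unbiased
    true_cov = (G0 @ P0 @ G0.T + G1 @ P1 @ G1.T
                + G0 @ C01 @ G1.T + G1 @ C01.T @ G0.T)
    return P_ci, true_cov


P0 = np.array([[2.0, 0.3], [0.3, 1.0]])
P1 = np.array([[1.0, -0.2], [-0.2, 3.0]])
# Hypothetical cross-covariance, unknown to the fusion rule; the factor 0.8 keeps the
# joint covariance [[P0, C01], [C01^T, P1]] positive semidefinite.
C01 = 0.8 * np.linalg.cholesky(P0) @ np.linalg.cholesky(P1).T

for w in (0.2, 0.5, 0.8):
    P_ci, true_cov = ci_true_error_cov(P0, P1, C01, w)
    gap = np.linalg.eigvalsh(P_ci - true_cov).min()
    print(f"w={w}: min eigenvalue of P_ci - true_cov = {gap:.4f}")  # expected to be >= 0
```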

Applying Algorithm 2 to Algorithm 1, we obtain a diffusion distributed Kalman filter that fuses estimation and its error covariance concurrently, which is presented in Algorithm 3.

| Algorithm 3: A diffusion distributed Kalman filter with the CI method. |

For the node and node ,

Initialize with:

,

Local update:

,

,

,

;

Communication and fusion update:

Send and to adjacent node i,

, |

|

| (7) |

|

where is calculated by Equation (5). |

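In Algorithm 3, the local estimate $\psi_t^i$ is calculated by the local update from the previous fused estimate of node $i$. That fused estimate already combines the local estimates of the adjacent nodes $j \in \mathcal{N}_i$. Therefore, $\psi_t^i$ also contains information from the previous local estimates of its neighbors. The current local estimate $\psi_t^j$ of each neighbor is also correlated with that neighbor's previous local estimate, so $\psi_t^i$ is correlated with $\psi_t^j$. In Equation (7), Algorithm 3 fuses $\psi_t^i$ and $\psi_t^j$ but ignores their correlation. To improve the accuracy of Algorithm 3, we separate the diffusion update from the local update and propose a diffusion distributed Kalman filter based on CI in Algorithm 4.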

| Algorithm 4: A diffusion distributed Kalman filter separating diffusion update. |

For the node and node ,

Initialize with:

,

,

,

Local update:

,

,

,

;

Diffusion incremental Update:

,

,

,

;

Communication and fusion update:

Send and to adjacent node i,

,

where and is calculated by Equation (5). |

In Algorithm 4, each node performs both a local update and a diffusion incremental update. The results of the diffusion incremental update (an estimation and its error covariance) are diffused to adjacent nodes. The results of the local update are fused with the received information. Algorithm 3 fuses the received information with its own fusion result from the previous moment, whereas Algorithm 4 fuses it with the local information. In Algorithm 3, information received at the previous moment is therefore fused again with the information received at the current moment, at every moment. Algorithm 4 avoids the correlation that this repeated fusion causes.

2.3. Distributed Kalman Filter Based on the Non-Repeated Diffusion Strategy

In this subsection, we propose a distributed Kalman filter based on the non-repeated diffusion strategy and analyze its performance. First, we introduce the non-repeated diffusion strategy in Algorithm 5, which uses the following quantities:

- $I_t^i$ is the local information of node $i$ at time $t$.
- $X_t^i$ is all of the information held by node $i$ at time $t$ and is calculated by Equation (9).
- $S_t^{i\to j}$ is the information sent from node $i$ to node $j \in \mathcal{N}_i$ at time $t$ and is calculated by Equation (8).

In this strategy, separate diffusion information is calculated for each adjacent node. $S_t^{i\to j}$ is obtained by subtracting the information received from node $j$ at the last moment from all of the information at the last moment. The strategy thus prevents information sent by a node from being returned to that node.
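The strategy can be simulated by treating information as a multiset of tags $(l, s)$, each meaning "local information of node $l$ at time $s$". In the sketch, $X_t^i$ is all information of node $i$ at time $t$, and $S_t^{i\to j}$ is the information node $i$ sends to node $j$. It further assumes $I_t^i = \{(i, t)\}$, $S_0^{i\to j} = 0$ and $X_0^i = I_0^i$. The tag representation is an illustration, not part of the original method. `collections.Counter` subtraction discards non-positive counts. This is harmless here because $S_{t-1}^{j\to i}$ is always contained in $X_{t-1}^i$.

```python
from collections import Counter


def non_repeated_diffusion(nbrs, steps):
    """Run the strategy of Algorithm 5 with information modelled as multisets of
    (origin_node, time) tags: I_t^i = {(i, t)}.  Returns [X_0, X_1, ..., X_steps]."""
    # S_0^{i->j} = 0 for every ordered pair of neighbors
    S = {(i, j): Counter() for i in nbrs for j in nbrs[i]}
    # X_0^i = I_0^i
    X = {i: Counter({(i, 0): 1}) for i in nbrs}
    history = [X]
    for t in range(1, steps + 1):
        # Eq. (8): S_t^{i->j} = X_{t-1}^i - S_{t-1}^{j->i}
        S = {(i, j): X[i] - S[(j, i)] for (i, j) in S}
        # Eq. (9): X_t^i = I_t^i + sum_{j in N_i} S_t^{j->i}
        X = {i: Counter({(i, t): 1}) + sum((S[(j, i)] for j in nbrs[i]), Counter())
             for i in nbrs}
        history.append(X)
    return history


chain = {0: {1}, 1: {0, 2}, 2: {1}}          # path graph 0 - 1 - 2
history = non_repeated_diffusion(chain, 3)
print(sorted(history[2][0]))                 # [(0, 2), (1, 1), (2, 0)]
```

At time 2, node 0 holds its current information, the information of its neighbor from one step earlier and that of the node two hops away from two steps earlier. No older copy of node 0's own information is present.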

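| Algorithm 5: The non-repeated diffusion strategy. |

For node $i \in V$ and node $j \in \mathcal{N}_i$,

Initialize the information of node $i$ at time $t$ with $I_t^i$;

Initialize $S_0^{i\to j}$ with:

$S_0^{i\to j} = 0$;

Diffusion update:

$$S_t^{i\to j} = X_{t-1}^i - S_{t-1}^{j\to i}; \qquad (8)$$

Communication and fusion of information:

Send $S_t^{j\to i}$ to adjacent node $i$,

$$X_t^i = I_t^i + \sum_{j \in \mathcal{N}_i} S_t^{j\to i}. \qquad (9)$$ |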

Assumption 1. The sensor network is an acyclic graph.

Theorem 1. Let Assumption 1 hold. Running Algorithm 5, no node of the sensor network will ever receive its own information.

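Proof of Theorem 1. Consider any node $i$ in an acyclic graph. Substituting Equation (8) into Equation (9), we obtain

$$X_t^i = I_t^i + \sum_{j \in \mathcal{N}_i} \left( X_{t-1}^j - S_{t-1}^{i\to j} \right). \qquad (10)$$

By Equation (9), $X_{t-1}^j$ contains $S_{t-1}^{i\to j}$, so Equation (10) can be rewritten as

$$X_t^i = I_t^i + \sum_{j \in \mathcal{N}_i} \Big( I_{t-1}^j + \sum_{k \in \mathcal{N}_j \setminus \{i\}} S_{t-1}^{k\to j} \Big). \qquad (11)$$

Substituting Equation (8) into Equation (11) repeatedly, every term of $X_t^i$ corresponds to a path that starts at node $i$. This path never moves back to the node it has just left, because the index $k$ ranges over $\mathcal{N}_j \setminus \{i\}$. For any two adjacent nodes in an acyclic graph, their sets of adjacent nodes have no common element other than each other; otherwise, there would be a cyclic path through the common node. Hence, such a path can never revisit a node. The sets of nodes reached at successive substitution steps do not intersect, and the path moves one hop further from node $i$ at every step. Because the graph is finite, there is an $m$ after which no further nodes can be reached and the expansion terminates. At this moment, all information of node $i$ reduces to

$$X_t^i = \sum_{l \in V,\; d(i,l) \le t} I_{t-d(i,l)}^l, \qquad (12)$$

where $d(i,l)$ is the length of the shortest path between nodes $i$ and $l$. The only term originating from node $i$ is its current local information $I_t^i$ (the term with $l = i$), which node $i$ already has. Therefore, node $i$ never receives its own information from other nodes. □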
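Theorem 1 can be checked on a small tree by representing information as multisets of (origin node, time) tags. Here $X_t^i$ denotes all information of node $i$ at time $t$, and the diffusion follows Equations (8) and (9) with $I_t^i = \{(i, t)\}$. The sketch compares the simulated $X_t^i$ with the tag set $\{(l, t - d(i,l)) : d(i,l) \le t\}$, which follows from Equations (8) and (9) on a tree. It also contrasts the result with a naive strategy that forwards all information without the subtraction in Equation (8). The tree and the function names are hypothetical.

```python
from collections import Counter, deque


def diffuse(nbrs, steps, non_repeated=True):
    """Diffuse tagged information for `steps` communications and return X_steps.
    With non_repeated=False a node forwards everything it holds (no Eq. (8) subtraction)."""
    S = {(i, j): Counter() for i in nbrs for j in nbrs[i]}
    X = {i: Counter({(i, 0): 1}) for i in nbrs}
    for t in range(1, steps + 1):
        S = {(i, j): (X[i] - S[(j, i)]) if non_repeated else Counter(X[i]) for (i, j) in S}
        X = {i: Counter({(i, t): 1}) + sum((S[(j, i)] for j in nbrs[i]), Counter())
             for i in nbrs}
    return X


def hop_distances(nbrs, src):
    """Shortest-path lengths d(src, l) by breadth-first search."""
    dist, queue = {src: 0}, deque([src])
    while queue:
        u = queue.popleft()
        for v in nbrs[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def own_info_returned(X, t):
    """True if some node holds an older copy of its own local information."""
    return any(origin == i and time != t for i, info in X.items() for origin, time in info)


tree = {0: {1}, 1: {0, 2, 3}, 2: {1}, 3: {1, 4, 5}, 4: {3}, 5: {3}}   # hypothetical tree
t = 6
X = diffuse(tree, t)
closed_form = {i: Counter({(l, t - d): 1 for l, d in hop_distances(tree, i).items() if d <= t})
               for i in tree}
print(X == closed_form, own_info_returned(X, t), own_info_returned(diffuse(tree, t, False), t))
# True False True
```

With the subtraction, every node holds exactly one copy of each other node's information, delayed by the hop distance. Without it, old copies of a node's own information come back to it after two communications.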

We introduce the non-repeated diffusion strategy into Algorithm 4 and propose a new diffusion distributed Kalman filter, which is the core of this paper and is presented in Algorithm 6. In Algorithm 6, $\hat{x}_t^{i\to j}$ and $P_t^{i\to j}$ are the estimation and its error covariance diffused from node $i$ to node $j$.

| Algorithm 6: A distributed Kalman filter based on the non-repeated diffusion strategy. |

For the node and node .

Initialize with:

,

;

Local update:

,

,

,

;

Diffusion incremental update: |

| | (13) |

| | (14) |

,

,

,

;

Communication and fusion update:

Send and to adjacent node i,

where is calculated by Equation (19). |

Theorem 2. Let Assumption 1 hold. The estimations calculated by Algorithm 6 fuse the global information and reflect the real state of the target after a finite number of communications.

Proof of Theorem 2. The fusion update step, which Algorithm 6 inherits from Algorithm 4, linearly combines the information from the adjacent nodes of node $i$. In the diffusion update step, all information of node $i$ is diffused to all of its adjacent nodes. Therefore, the adjacent nodes of node $i$ can receive the information from the other adjacent nodes of node $i$. In every communication, the information of each node is diffused one hop further. Algorithm 6 adds two steps, Equations (13) and (14), which are derived from the non-repeated diffusion strategy of Algorithm 5. From Theorem 1, we know that Algorithm 6 satisfies the prerequisite that each node never receives its own information. The information advances one hop per communication and no node receives its own information, so the information is always diffused in one direction. Hence, each node can also receive the information of nodes other than its neighbors, and the amount of received information depends on the number of nodes within a certain distance. Because CI is used to fuse the information, a conservative estimation is obtained, in the same sense as Equation (6). The estimation of node $i$ at time $t$ therefore fuses, by CI, the measurements $\{ z_s^l : l \in \mathcal{M}_i,\ s \le \tau_l^i \}$. Here, $\mathcal{M}_i$ is the set of nodes whose measurements can be received by node $i$, and $\tau_l^i$ denotes the latest time up to which the measurement information of node $l$ has been received by node $i$.

Assume that the two nodes farthest apart in the sensor network are node $i$ and node $j$, so that $d(i, j) = d$. After $d$ communications following time $t$ (i.e., at time $t + d$), we obtain $\tau_j^i = t$. At this moment, node $i$ receives the measurement $z_t^j$. Even the two most distant nodes can exchange information in this way, so each node can receive the estimates and covariances of all nodes in the sensor network. By fusing the estimations and covariances of all nodes with CI, each node obtains an estimation that includes the global information and reflects the real state of the target after $d$ communications. □
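The finite-time argument above can be illustrated with a hypothetical tag-based simulation of the non-repeated diffusion strategy (Equations (8) and (9)). For every pair of nodes $(i, l)$, the sketch records how many communications after time $t_0$ the local information of node $l$ at $t_0$ first reaches node $i$. The largest delay equals the graph diameter $d$. This matches the statement that all nodes hold global information after $d$ communications. The example tree and the function names are arbitrary choices for illustration.

```python
from collections import Counter, deque


def arrival_delays(nbrs, t0, horizon):
    """Run the non-repeated diffusion of tagged information (Eqs. (8)-(9)) and record,
    for every pair (i, l), how many communications after t0 the tag (l, t0) reaches node i."""
    assert t0 >= 1, "choose t0 >= 1 so that the loop below observes time t0"
    S = {(i, j): Counter() for i in nbrs for j in nbrs[i]}
    X = {i: Counter({(i, 0): 1}) for i in nbrs}
    delay = {}
    for t in range(1, horizon + 1):
        S = {(i, j): X[i] - S[(j, i)] for (i, j) in S}
        X = {i: Counter({(i, t): 1}) + sum((S[(j, i)] for j in nbrs[i]), Counter())
             for i in nbrs}
        for i, info in X.items():
            for origin, time in info:
                if time == t0 and (i, origin) not in delay:
                    delay[(i, origin)] = t - t0
    return delay


def diameter(nbrs):
    """Largest shortest-path length, computed by BFS from every node."""
    def eccentricity(src):
        dist, queue = {src: 0}, deque([src])
        while queue:
            u = queue.popleft()
            for v in nbrs[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        return max(dist.values())
    return max(eccentricity(i) for i in nbrs)


tree = {0: {1}, 1: {0, 2, 3}, 2: {1}, 3: {1, 4, 5}, 4: {3}, 5: {3}}   # hypothetical tree
delays = arrival_delays(tree, t0=2, horizon=2 + len(tree))
print(len(delays) == len(tree) ** 2, max(delays.values()), diameter(tree))  # True 3 3
```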

The fusion weights in Algorithm 6 are CI weight coefficients. Analogously to Equation (5), they minimize the trace of the fused estimation error covariance; this optimization problem is Equation (19). Like Equation (5), Equation (19) is a nonlinear optimization problem whose computational cost is usually too high for sensors. To reduce the computational cost, the weights can be set to their mean value, i.e., equal weights.

3. Results

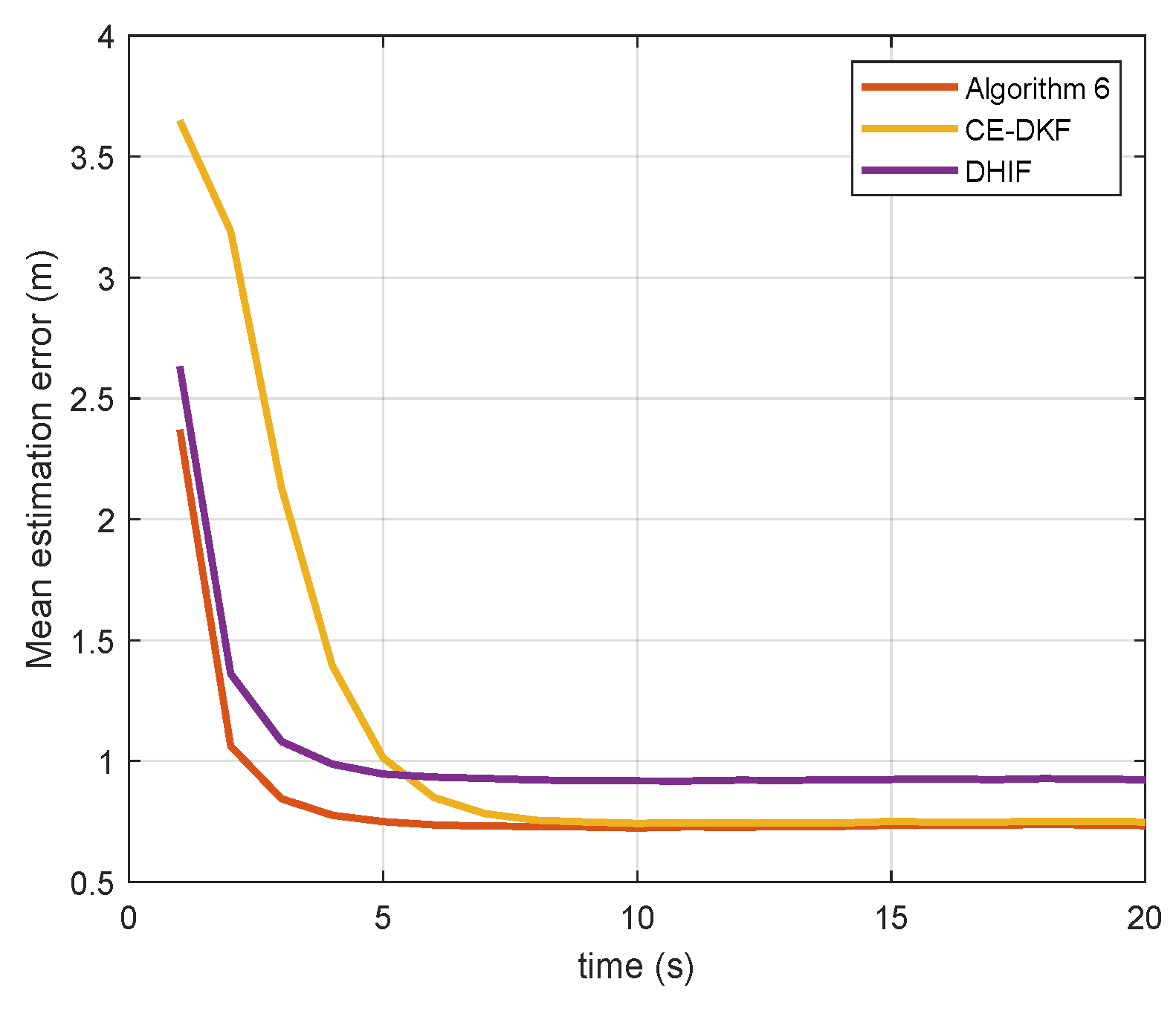

In this section, a simulation example shows the efficiency of Algorithm 6 and compares it with CE-DKF [22] and DHIF [26], two prominent existing diffusion-based distributed filtering algorithms.

Specifically, we consider a sensor network of 20 nodes whose topological structure is shown in Figure 1. Each node can detect the target and communicate bi-directionally with its adjacent nodes. The linear dynamical system of Equation (1) is considered, where the state vector $x_t$ consists of the positions and velocities in the horizontal and vertical directions. The unit is meters. The initial state is

. The acceleration of the target is modeled as the system noise $w_t$, whose mean is $0$ and whose variance is $Q$. Here, we consider the cases where

Q is equal to

or

The state transition matrix is

and the noise coefficient matrix is

The sensor measurement model is Equation (2), where the mean of $v_t^i$ is $0$ and its variance is $R^i$.

Next, we run 2000 Monte Carlo simulations to compare Algorithm 6 with other distributed filtering algorithms. The fusion weights of both Algorithm 6 and DHIF are set to their mean values.

Figure 2 and Figure 3 show the estimation errors of the algorithms for the target state. The error is calculated by

The error reduction of Algorithm 6 is the percentage by which Algorithm 6 reduces the error compared with another algorithm. The error convergence time is the earliest time at which the error reaches a steady state. From Table 1, we see that Algorithm 6 reduces the estimation error by up to 20.97% and reaches a steady state faster than CE-DKF and DHIF. When the noise covariance matrix Q is large, the advantage of Algorithm 6 is more prominent. In the case of a small Q, Algorithm 6 reduces the error by only 2.34% compared with CE-DKF. Because the target is less maneuverable in this case, the advantages of Algorithm 6 are not obvious.
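The following sketch shows how such error statistics might be computed. It assumes a common definition of the error: the root-mean-square state error, averaged over Monte Carlo runs and nodes, at each time step. The exact equation used for the figures may differ. The percentage reduction follows the definition in the text. `convergence_time` implements one hypothetical steady-state criterion: staying within 5% of the mean over the last 20 steps. All function names and tolerances are illustrative assumptions.

```python
import numpy as np


def error_curve(est, truth):
    """Assumed metric: RMSE over Monte Carlo runs and nodes at each time step.
    est has shape (runs, nodes, steps, dim); truth has shape (runs, steps, dim)."""
    sq = np.sum((est - truth[:, None, :, :]) ** 2, axis=-1)   # (runs, nodes, steps)
    return np.sqrt(sq.mean(axis=(0, 1)))                       # (steps,)


def reduction_percent(err_other, err_new):
    """Percentage by which the new algorithm reduces an error (or SD) value."""
    return 100.0 * (err_other - err_new) / err_other


def convergence_time(curve, rel_tol=0.05, tail=20):
    """Hypothetical criterion: first step after which the curve stays within
    rel_tol of its mean over the last `tail` steps."""
    steady = curve[-tail:].mean()
    inside = np.abs(curve - steady) <= rel_tol * abs(steady)
    for k in range(len(curve)):
        if inside[k:].all():
            return k
    return len(curve)
```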

Figure 4 and Figure 5 show the standard deviations (SDs) of the sensors' estimations of the target state. The SD represents the degree of consensus of the estimations from all nodes in the sensor network and is calculated by

The SD reduction of Algorithm 6 is the percentage by which Algorithm 6 reduces the SD compared with another algorithm. The SD convergence time is the earliest time at which the SD reaches a steady state. From Table 2, we see that Algorithm 6 reduces the SD of the estimations by up to 22.34% and reaches a steady state faster than CE-DKF and DHIF. This means that Algorithm 6 achieves a higher degree of consensus among all nodes in the sensor network. In the case of a small Q, Algorithm 6 reduces the SD by only 0.81% compared with CE-DKF. The reason is the same as above: the error advantage of Algorithm 6 is small when Q is small.
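Similarly, the consensus SD can be sketched under the assumption that it measures the spread of the node estimates around their network average, averaged over Monte Carlo runs. This is one plausible reading, not necessarily the exact formula used for the figures. The function name is hypothetical.

```python
import numpy as np


def consensus_sd(est):
    """Assumed metric: spread of the node estimates around their network average,
    sqrt(mean_i ||x_i - x_bar||^2), averaged over Monte Carlo runs.
    est has shape (runs, nodes, steps, dim); returns one value per time step."""
    dev = est - est.mean(axis=1, keepdims=True)               # deviation from the node average
    spread = np.sqrt(np.sum(dev ** 2, axis=-1).mean(axis=1))  # (runs, steps)
    return spread.mean(axis=0)
```

If all nodes agree exactly, the SD is zero. Two nodes whose scalar estimates are 0 and 2 give an SD of 1.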

In each communication, each sensor applying Algorithm 6 only needs to send its diffused estimation and the corresponding estimation error covariance. Therefore, the communication traffic is

in terms of the number of transmitted scalar values. Table 3 lists the communication bandwidth and frequency requirements of some popular algorithms. In this study, the communication frequency is the number of communications with an adjacent node that each node requires to complete one estimation. In addition to CE-DKF and DHIF, we compare the communication requirements of two other consensus-based algorithms. From Table 3, we can see that Algorithm 6 has the lowest (or one of the lowest) communication bandwidth and frequency requirements.
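As a rough illustration of the message size, the sketch counts the scalars in one message containing an $n$-dimensional estimate and its $n \times n$ error covariance. Whether only the upper triangle of the symmetric covariance is transmitted is an assumption, exposed as a parameter. Algorithm 6 computes a separate diffused estimate for each neighbor, so the per-step total is multiplied by the node degree. The function names are hypothetical.

```python
def scalars_per_message(n, symmetric=True):
    """Scalars in one message: an n-dimensional estimate plus its n x n error covariance.
    With symmetric=True only the upper triangle of the covariance is sent (an assumption)."""
    cov_entries = n * (n + 1) // 2 if symmetric else n * n
    return n + cov_entries


def scalars_per_step(n, degree, symmetric=True):
    """A node sends a separate diffused estimate to each of its `degree` neighbors."""
    return degree * scalars_per_message(n, symmetric)


# Four-dimensional state (position and velocity in two directions), node with 3 neighbors.
print(scalars_per_message(4), scalars_per_message(4, symmetric=False), scalars_per_step(4, 3))  # 14 20 42
```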

4. Discussion

In this section, we discuss the results of Algorithm 6, including its estimation accuracy, degree of consensus, communication requirements, applicability to different topologies and robustness to local unobservability.

4.1. Estimation Accuracy

Algorithm 6 has better estimation accuracy than other diffusion-based algorithms because it applies the non-repeated diffusion strategy, which eliminates repeated information and reduces redundancy. In addition, because the exchanged information is non-redundant, Algorithm 6 exchanges information more efficiently and can converge to a steady state faster than other diffusion-based algorithms. The accuracy advantage of Algorithm 6 is small when Q is small and larger when Q is large. This suggests that Algorithm 6 is well suited to estimating unknown models and maneuvering states. One disadvantage is that Algorithm 6 compresses the fusion weights during communication, which causes its fusion weights to deviate and reduces accuracy. This is a limitation of Algorithm 6, and future work will develop a better weighting strategy.

4.2. Degree of Consensus

In sensor network estimation, it is very important that the estimations of all nodes are consistent. According to Section 3, Algorithm 6 has a lower estimation variance and a higher degree of consensus. Table 1 and Table 2 show that the variance is correlated with the estimation error. Therefore, the reduction in error can be considered to narrow the dispersion of the estimations and thus reduce the variance of Algorithm 6. Similarly, because the error converges faster, consensus is also reached faster. The advantage in the degree of consensus is small when Q is small. This again suggests that Algorithm 6 is well suited to estimating unknown models and maneuvering states.

4.3. Communication Requirement

Many low-cost sensor networks have low communication bandwidths. For such networks, the communication bandwidth requirement of a distributed filtering algorithm must be considered. From Section 3, we know that the communication traffic of Algorithm 6 is competitive with that of other distributed algorithms.

Like the communication bandwidth, the communication frequency is limited in low-cost sensor networks. Some algorithms require a certain number of communications to receive global information and maintain a given accuracy. When the communication frequency is 1 Hz, Algorithm 6 can still guarantee that every node obtains global information.

When the communication frequency is lower than 1 Hz, Algorithm 6 can still guarantee that every node obtains global information by combining measurements. In short, Algorithm 6 works at any communication frequency greater than 0 Hz. At low frequencies, it can achieve better accuracy than some algorithms that require a certain minimum communication frequency. This low communication frequency requirement is an advantage of diffusion-based algorithms in general, which is why we adopt and optimize a diffusion-based algorithm.

4.4. Applicability to Different Topologies

Theorem 2 assumes that the topology of the sensor network is an acyclic graph (tree graph). In fact, Algorithm 6 can also be applied to cyclic graphs. For sensor networks with a cyclic path, we can apply a spanning tree algorithm to convert the cyclic graph into an acyclic graph before applying Algorithm 6. Undoubtedly, this increases the complexity of Algorithm 6, which is another limitation of the algorithm. Future work will study this aspect as well as time-varying sensor networks.
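A breadth-first spanning tree is one simple way to obtain an acyclic communication graph before running Algorithm 6. The sketch below is a generic BFS construction in which the function name and the choice of root are assumptions; other spanning-tree algorithms could equally be used. Removing edges can lengthen some shortest paths, so the diameter $d$ may increase, and with it the number of communications needed in Theorem 2. In the 4-node ring below, the diameter grows from 2 to 3.

```python
from collections import deque


def bfs_spanning_tree(nbrs, root=0):
    """Edges (u, v) with u < v of a breadth-first spanning tree of a connected graph."""
    parent = {root: None}
    queue = deque([root])
    tree_edges = set()
    while queue:
        u = queue.popleft()
        for v in sorted(nbrs[u]):          # sorted only to make the result deterministic
            if v not in parent:
                parent[v] = u
                tree_edges.add((min(u, v), max(u, v)))
                queue.append(v)
    return tree_edges


cycle = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {0, 2}}   # 4-node ring with one cyclic path
print(sorted(bfs_spanning_tree(cycle)))               # [(0, 1), (0, 3), (1, 2)]
```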

4.5. Robustness to Local Unobservability

In some cases, some nodes miss the target while other nodes detect it. The weights of the nodes that miss the target can be set to 0. In this case, Assumption 1 still holds. Since Theorem 2 is independent of the node weights, Theorem 2 still holds for Algorithm 6. Therefore, Algorithm 6 is robust to local unobservability and is suitable for a wider range of sensor networks.
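The zero-weight treatment of nodes that miss the target can be illustrated with mean CI weights restricted to the detecting nodes. In this hypothetical sketch, nodes with `detected=False` receive weight 0 and are skipped, so their covariances are never inverted. Renormalizing the remaining weights to $1/(\text{number of detecting nodes})$ is an assumption made here for illustration.

```python
import numpy as np


def ci_fuse_with_misses(estimates, covs, detected):
    """Mean CI weights over detecting nodes; nodes that miss the target get weight 0
    and are skipped, so their (possibly undefined) covariances are never inverted."""
    idx = [k for k, d in enumerate(detected) if d]
    if not idx:
        raise ValueError("no node detects the target")
    w = 1.0 / len(idx)
    info = sum(w * np.linalg.inv(covs[k]) for k in idx)
    P = np.linalg.inv(info)
    x = P @ sum(w * np.linalg.inv(covs[k]) @ estimates[k] for k in idx)
    return x, P


# Node 2 misses the target; its (arbitrary) estimate has no influence on the result.
x, P = ci_fuse_with_misses([np.zeros(2), np.full(2, 2.0), np.full(2, 100.0)],
                           [np.eye(2)] * 3, [True, True, False])
print(x, P)
```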