Dynamic Modeling of Weld Bead Geometry Features in Thick Plate GMAW Based on Machine Vision and Learning

Abstract

1. Introduction

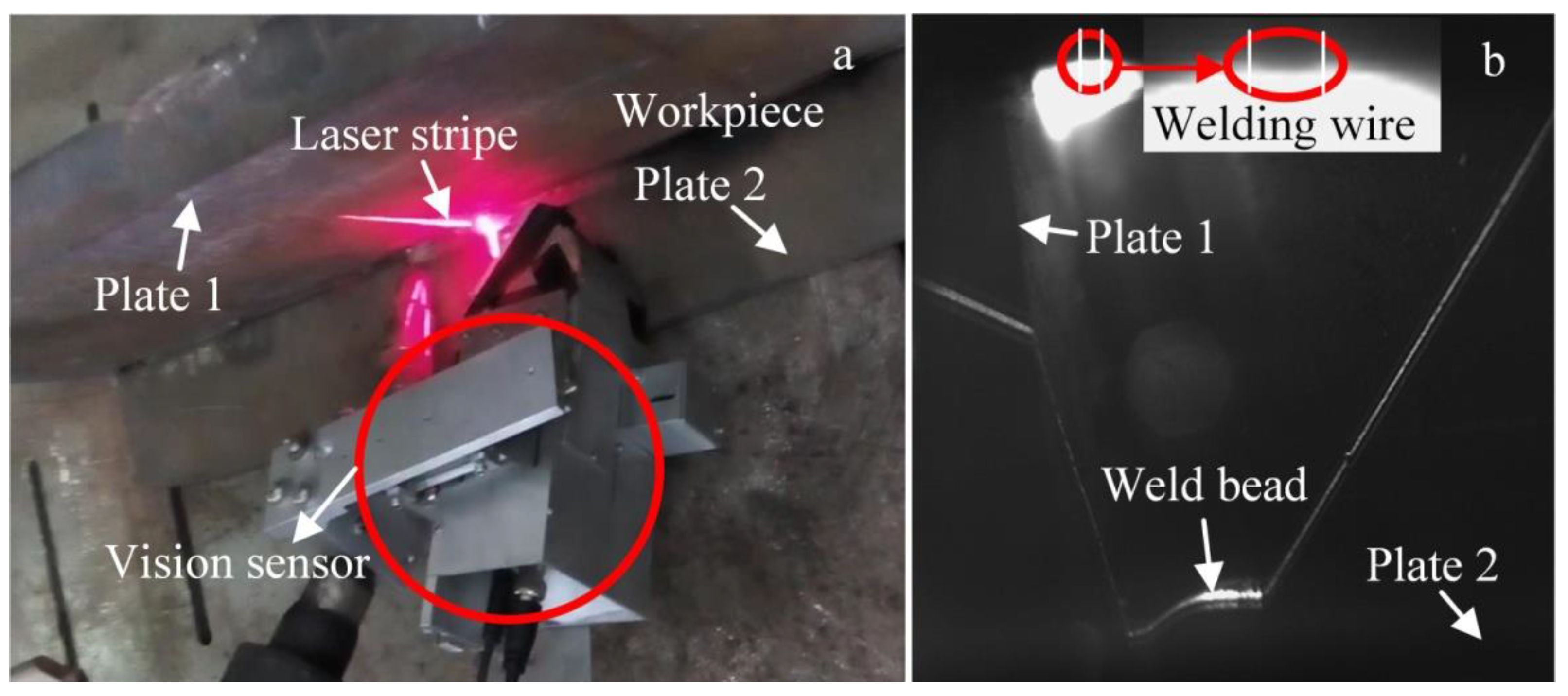

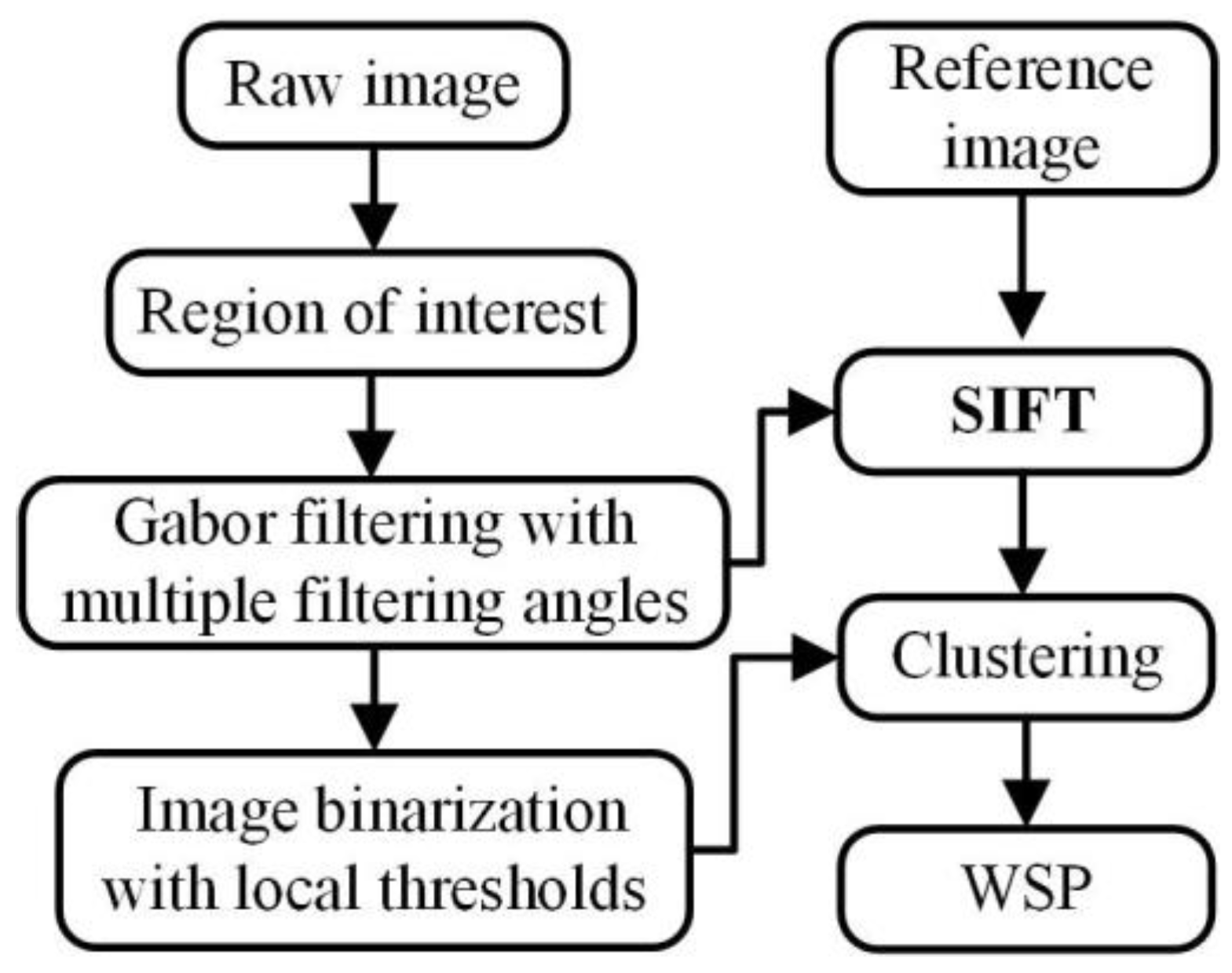

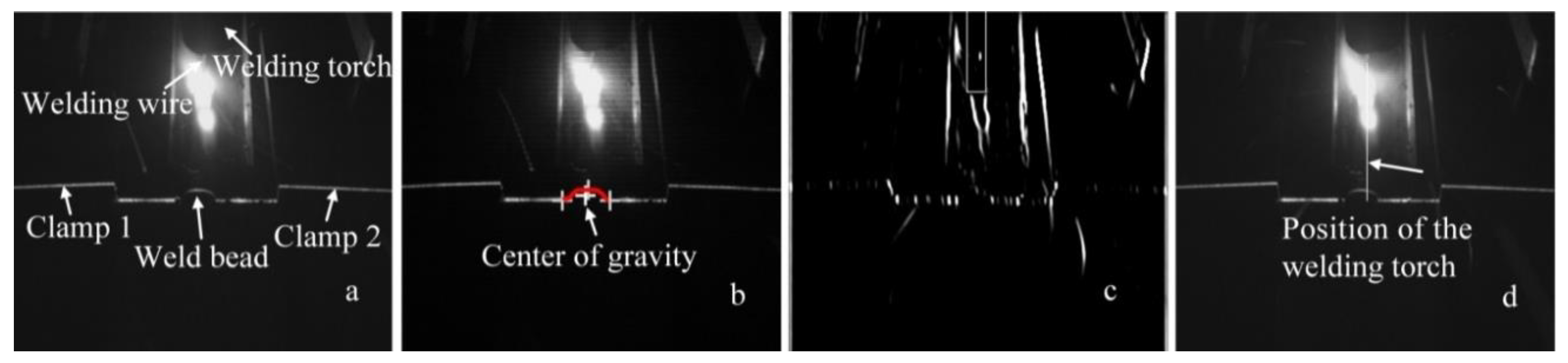

2. WSP Extraction with SIFT and Machine Learning

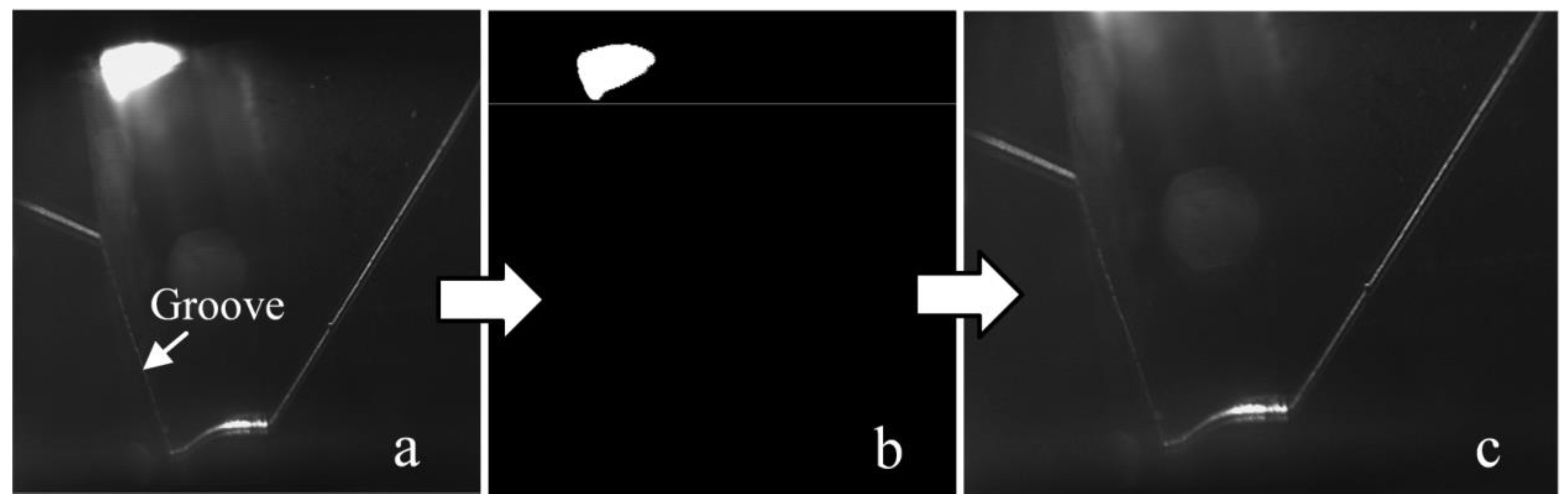

2.1. Gabor Filtering

2.2. Local Thresholding

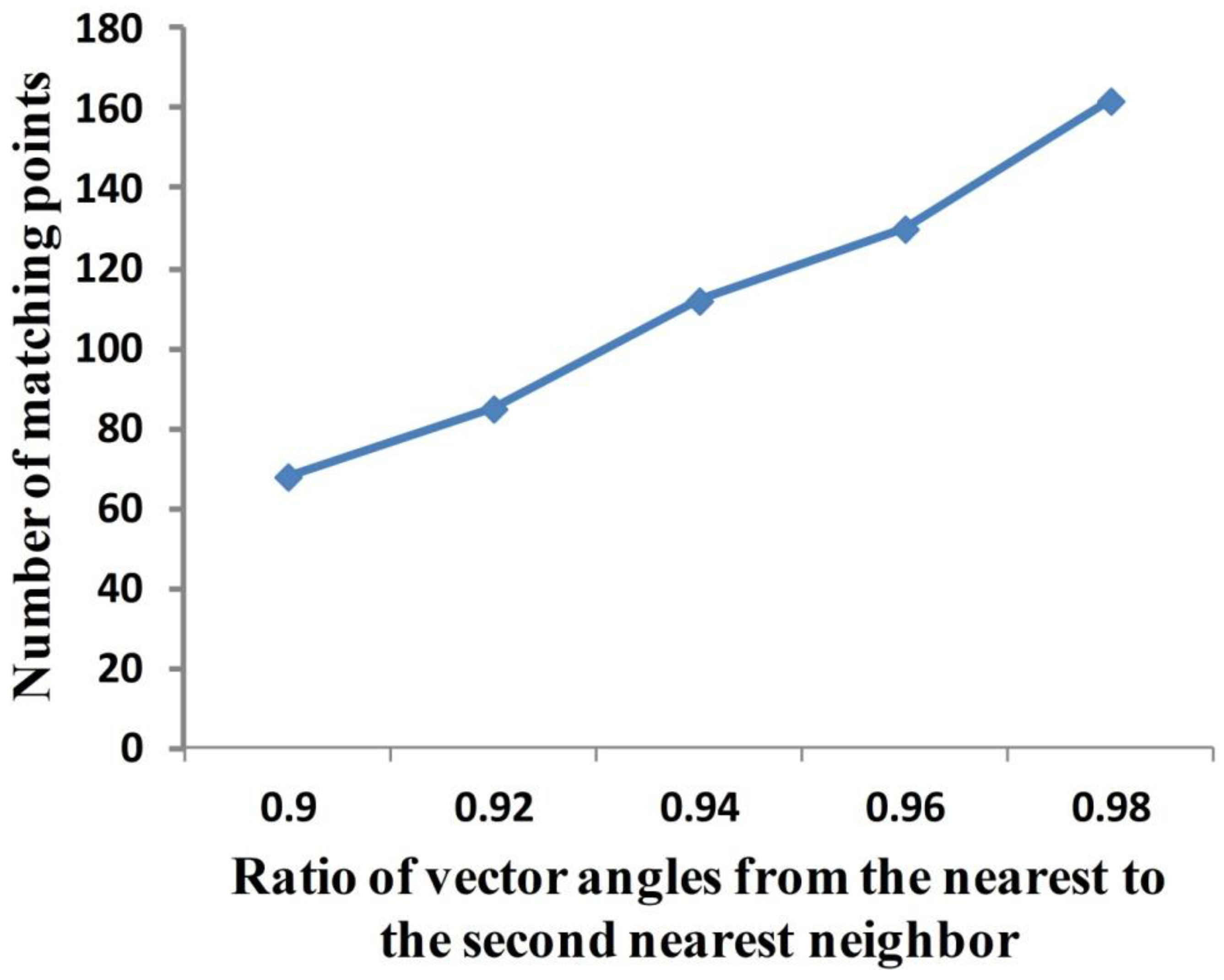

2.3. WSP Location Using SIFT

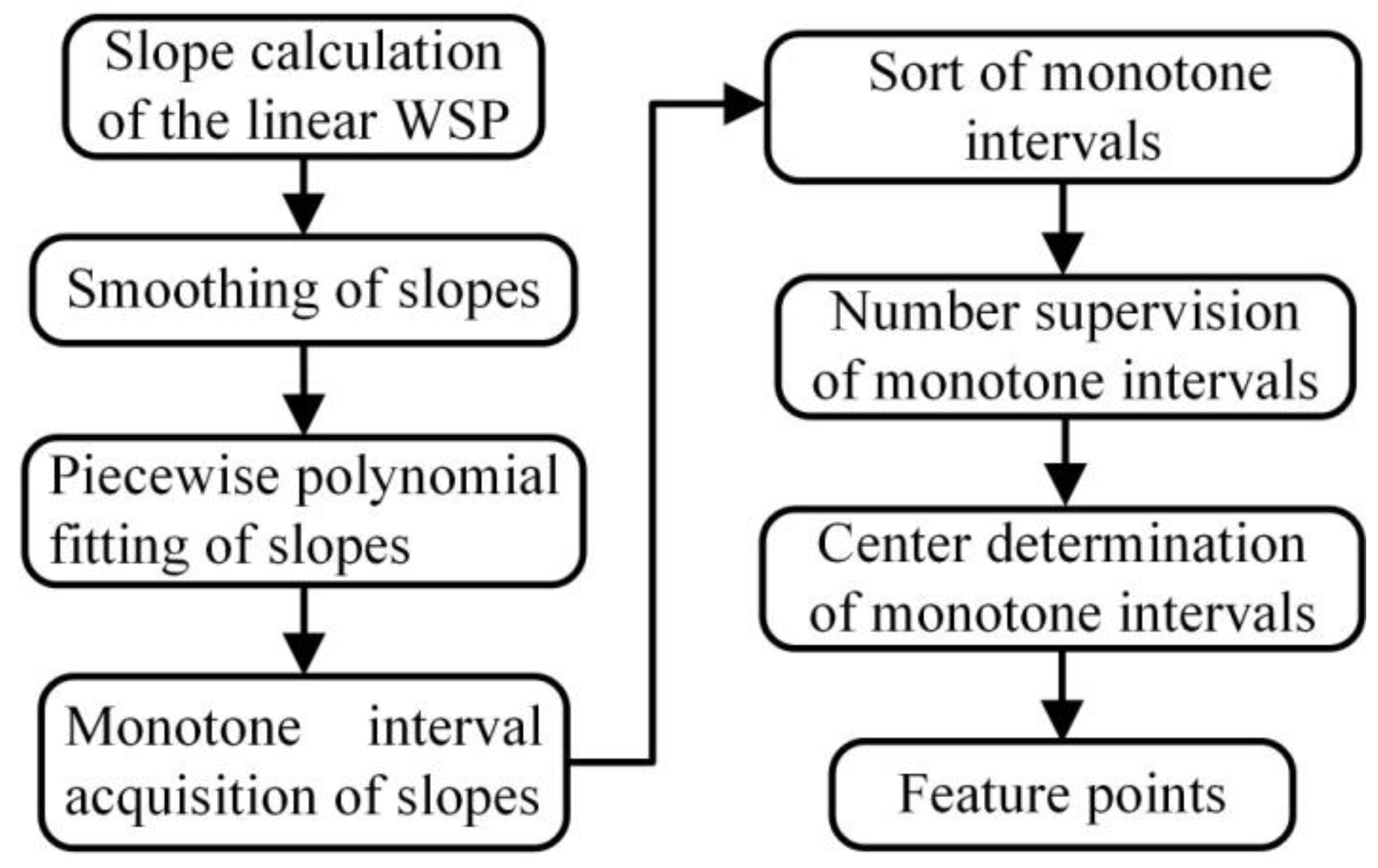

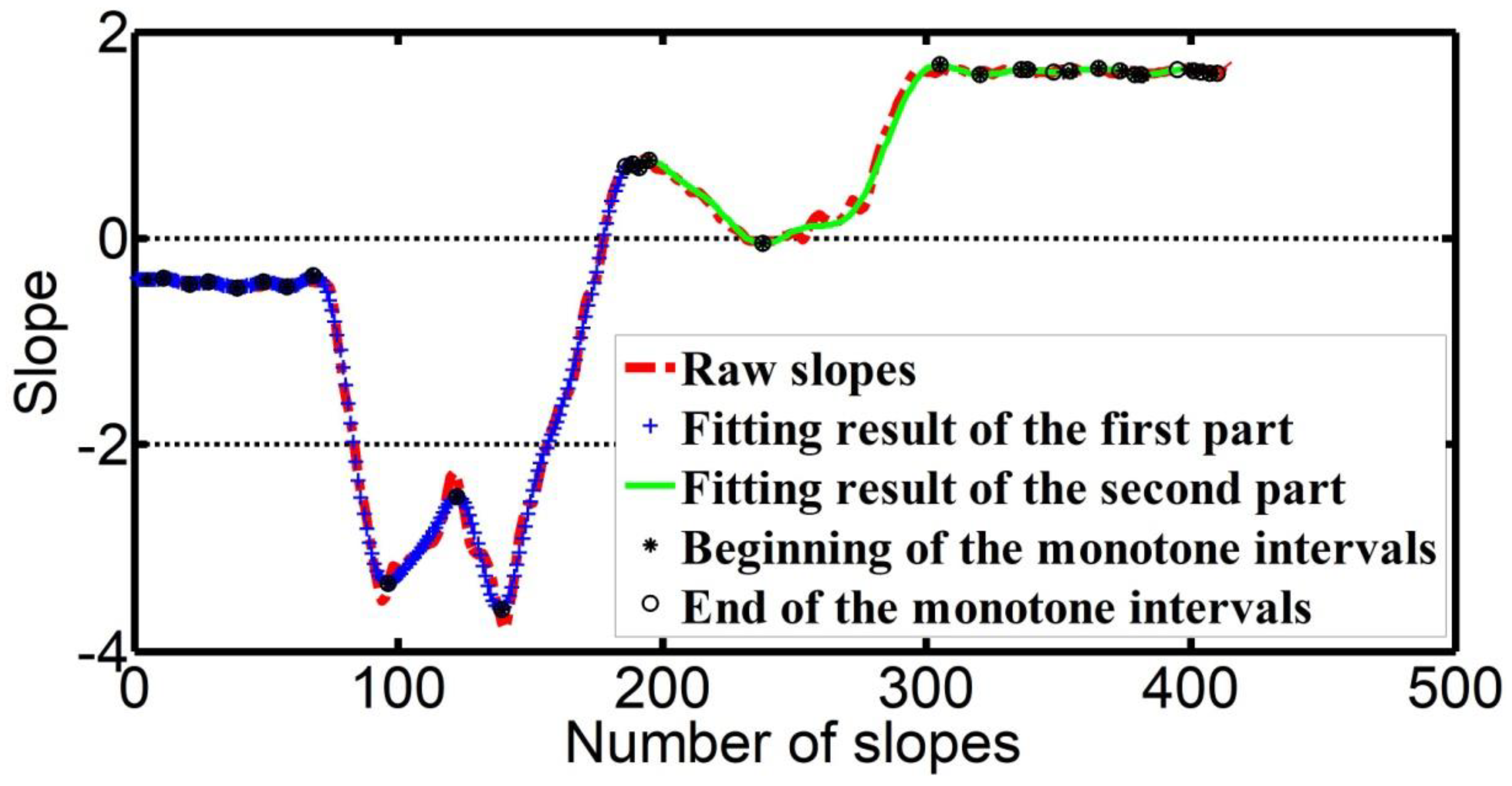

3. Feature Point Identification

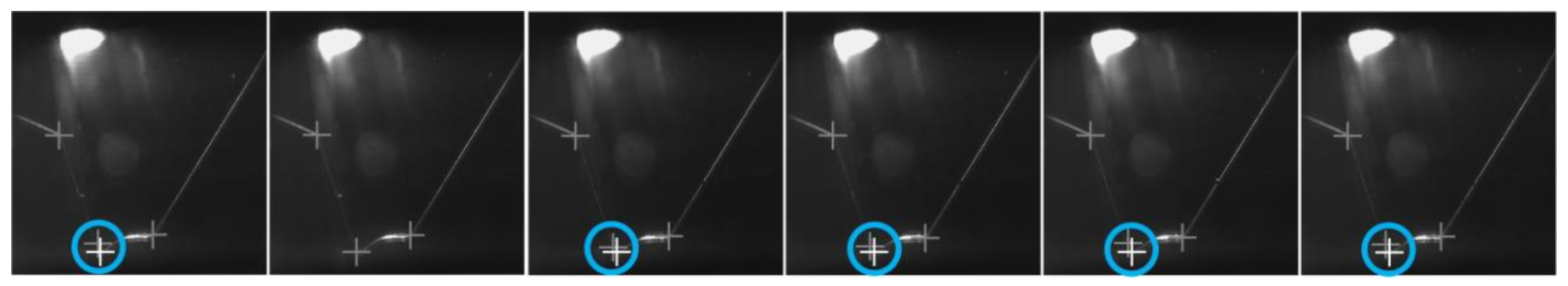

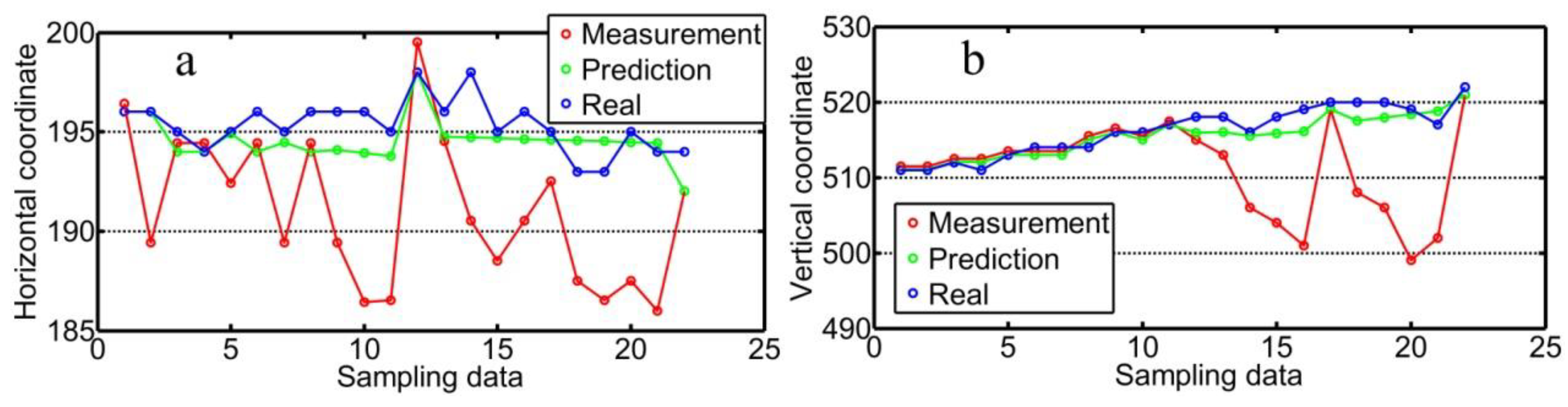

4. Cubic Exponential Smoothing for Stabilizing Feature Point Identification Process

| Algorithm 1 Feature point optimization process using cubic exponential smoothing. |
| --- |
| Input |
| Output |
| • Feature point ineffectiveness judgement: |
| * |
| * Calculate , and |
| * Calculate |
| • is replaced with |
| is the coordinate of the current identified feature point. |
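
The symbols of Algorithm 1 did not survive in this copy, so the following is only a minimal sketch of the general idea, not the authors' implementation. It assumes the standard Brown form of cubic (triple) exponential smoothing, applied to one coordinate of the feature point per frame. The names `CubicSmoother` and `stabilize`, the smoothing factor `alpha`, and the jump threshold `max_jump` are hypothetical; in particular, the ineffectiveness criterion used here (absolute deviation from the one-step forecast) is an assumption.

```python
from dataclasses import dataclass


@dataclass
class CubicSmoother:
    """Brown's cubic (triple) exponential smoothing for a scalar series."""
    alpha: float            # smoothing factor in (0, 1); the value is an assumption
    s1: float = 0.0         # first-order smoothed value
    s2: float = 0.0         # second-order smoothed value
    s3: float = 0.0         # third-order smoothed value
    ready: bool = False     # becomes True after the first observation

    def update(self, x: float) -> None:
        if not self.ready:
            # Initialise all three smoothed values with the first observation.
            self.s1 = self.s2 = self.s3 = x
            self.ready = True
            return
        a = self.alpha
        self.s1 = a * x + (1 - a) * self.s1
        self.s2 = a * self.s1 + (1 - a) * self.s2
        self.s3 = a * self.s2 + (1 - a) * self.s3

    def forecast(self, m: int = 1) -> float:
        """Forecast m steps ahead using the quadratic trend a + b*m + c*m^2."""
        a = self.alpha
        s1, s2, s3 = self.s1, self.s2, self.s3
        at = 3 * s1 - 3 * s2 + s3
        bt = a / (2 * (1 - a) ** 2) * (
            (6 - 5 * a) * s1 - 2 * (5 - 4 * a) * s2 + (4 - 3 * a) * s3
        )
        ct = a ** 2 / (2 * (1 - a) ** 2) * (s1 - 2 * s2 + s3)
        return at + bt * m + ct * m * m


def stabilize(points, alpha=0.5, max_jump=5.0):
    """Replace points that deviate too far from the forecast (hypothetical rule).

    points   : one coordinate of the identified feature point per frame
    max_jump : assumed threshold, in pixels, for judging a point ineffective
    """
    smoother = CubicSmoother(alpha)
    out = []
    for x in points:
        if smoother.ready:
            predicted = smoother.forecast(1)
            if abs(x - predicted) > max_jump:
                x = predicted   # ineffective point is replaced with the forecast
        smoother.update(x)
        out.append(x)
    return out
```

For a two-dimensional feature point, one such smoother could be run per axis. Replacing an outlier before calling `update` keeps a single bad frame from contaminating later forecasts, which is a design choice of this sketch rather than something stated in the source.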

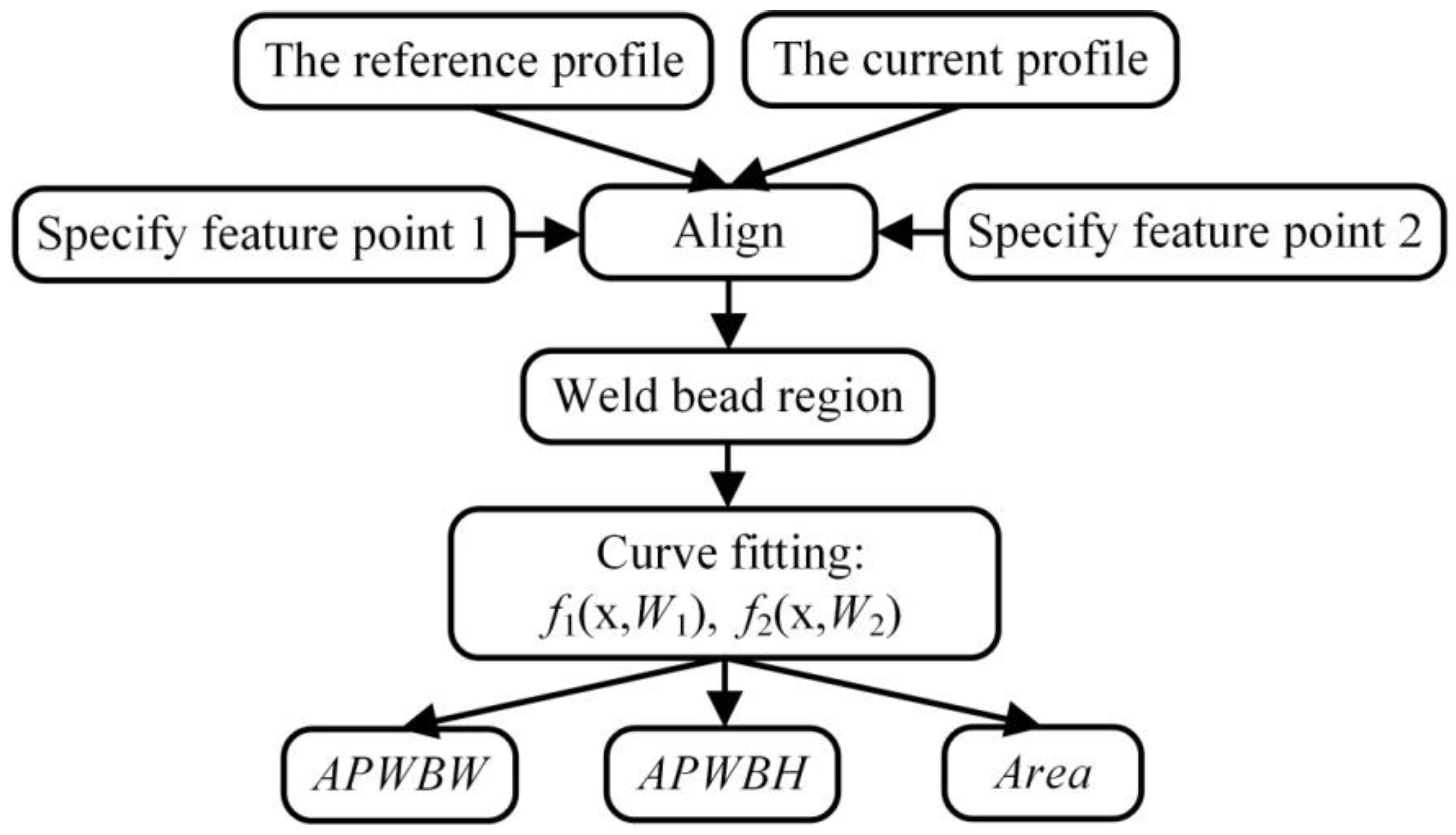

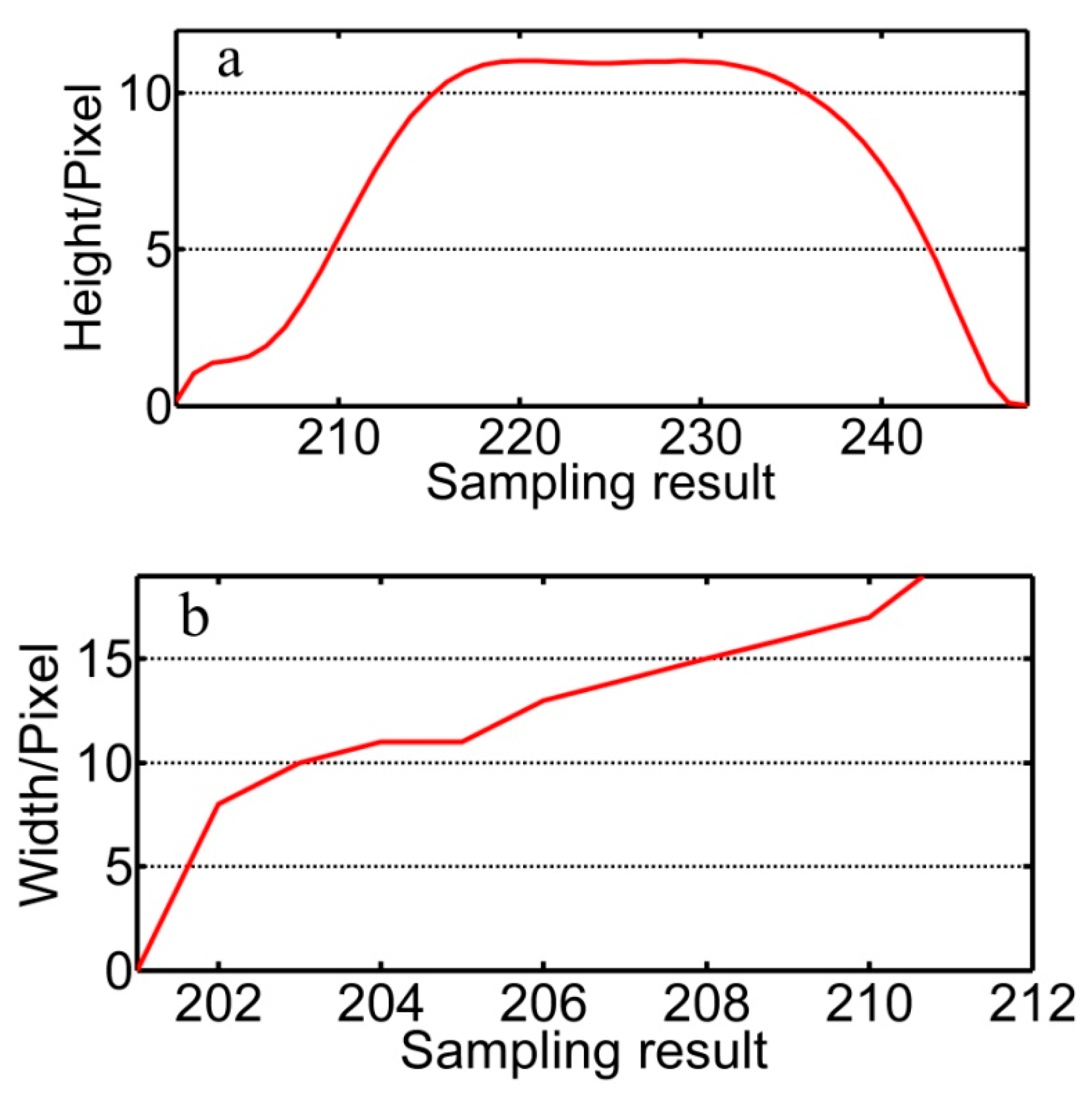

5. Modeling WBGFs

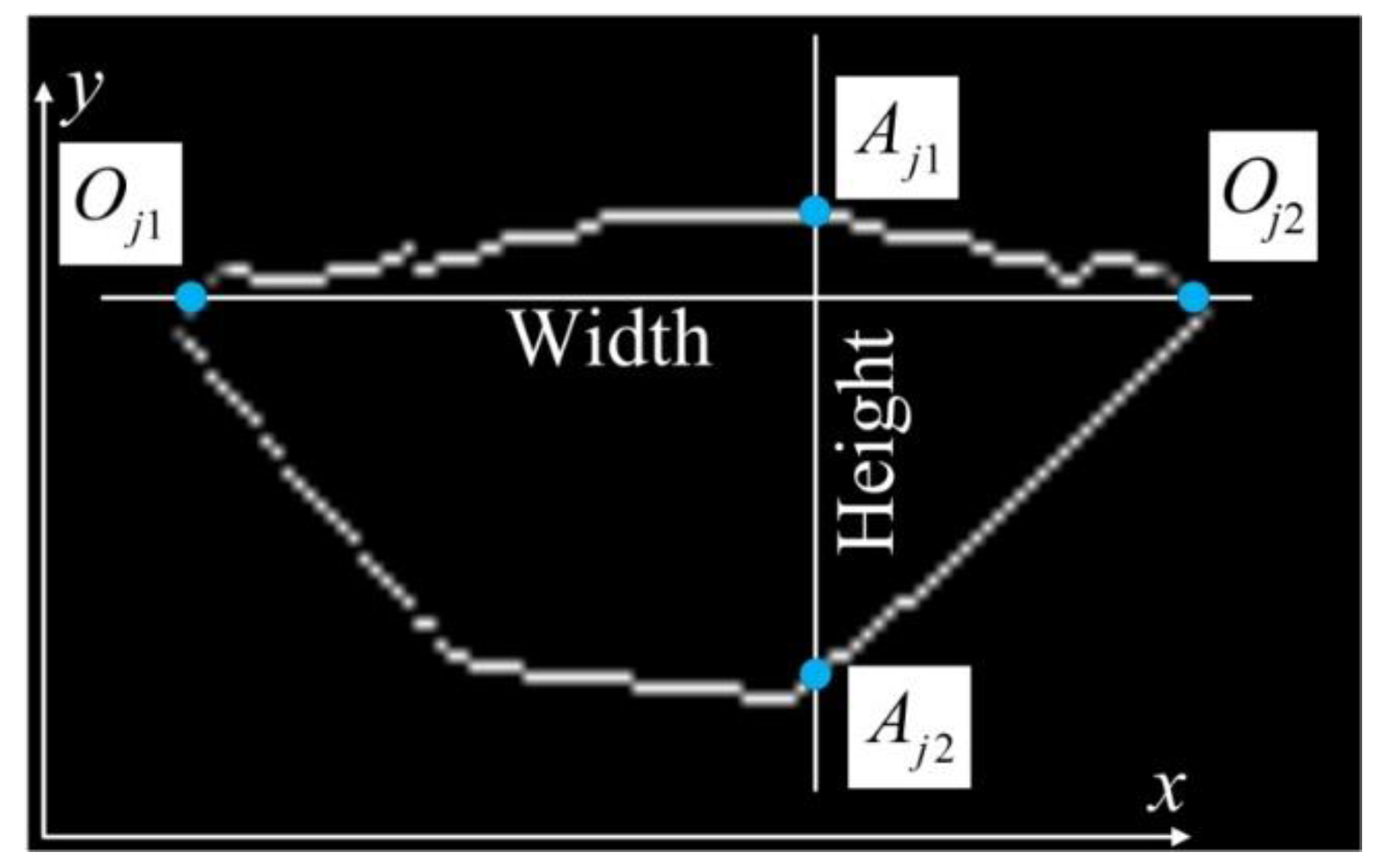

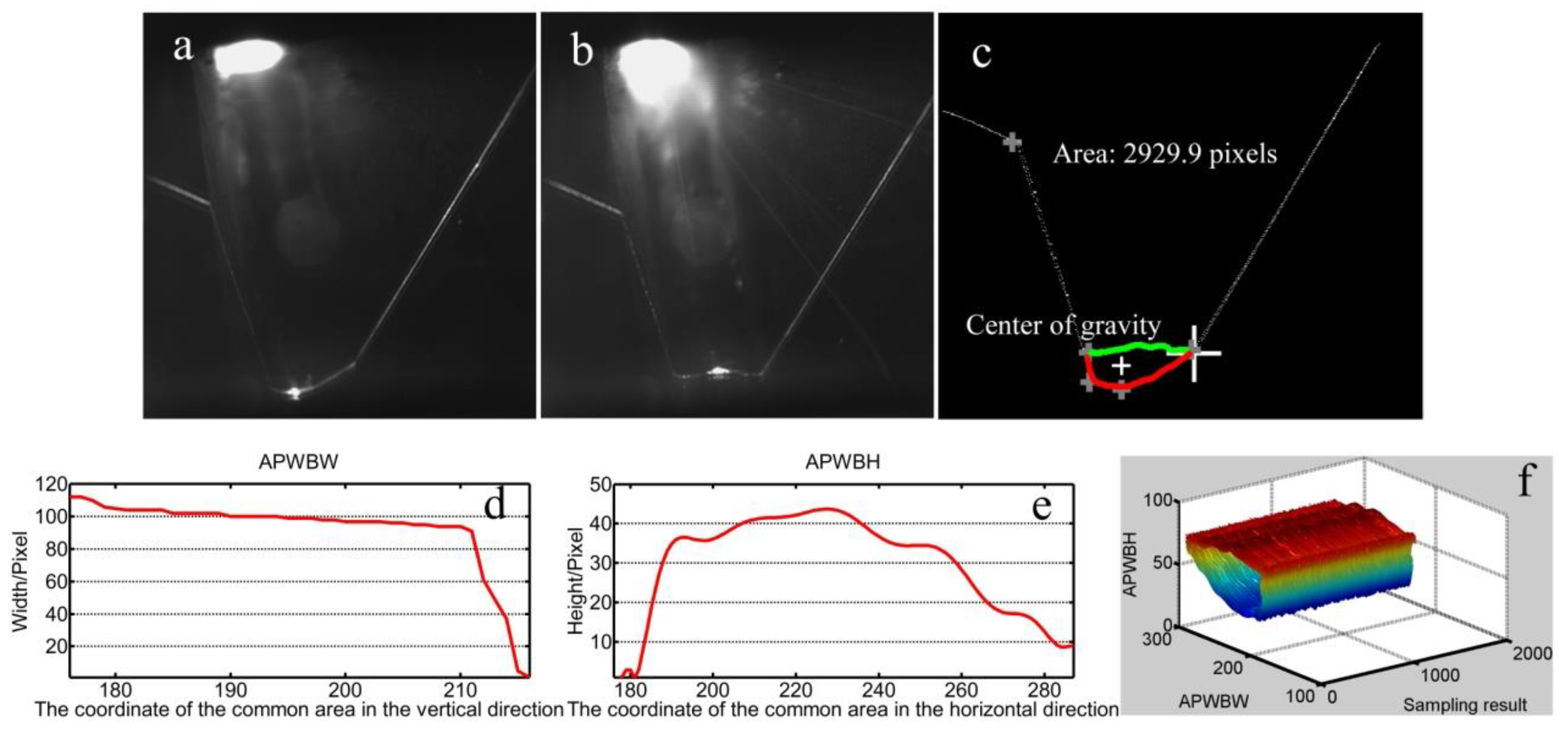

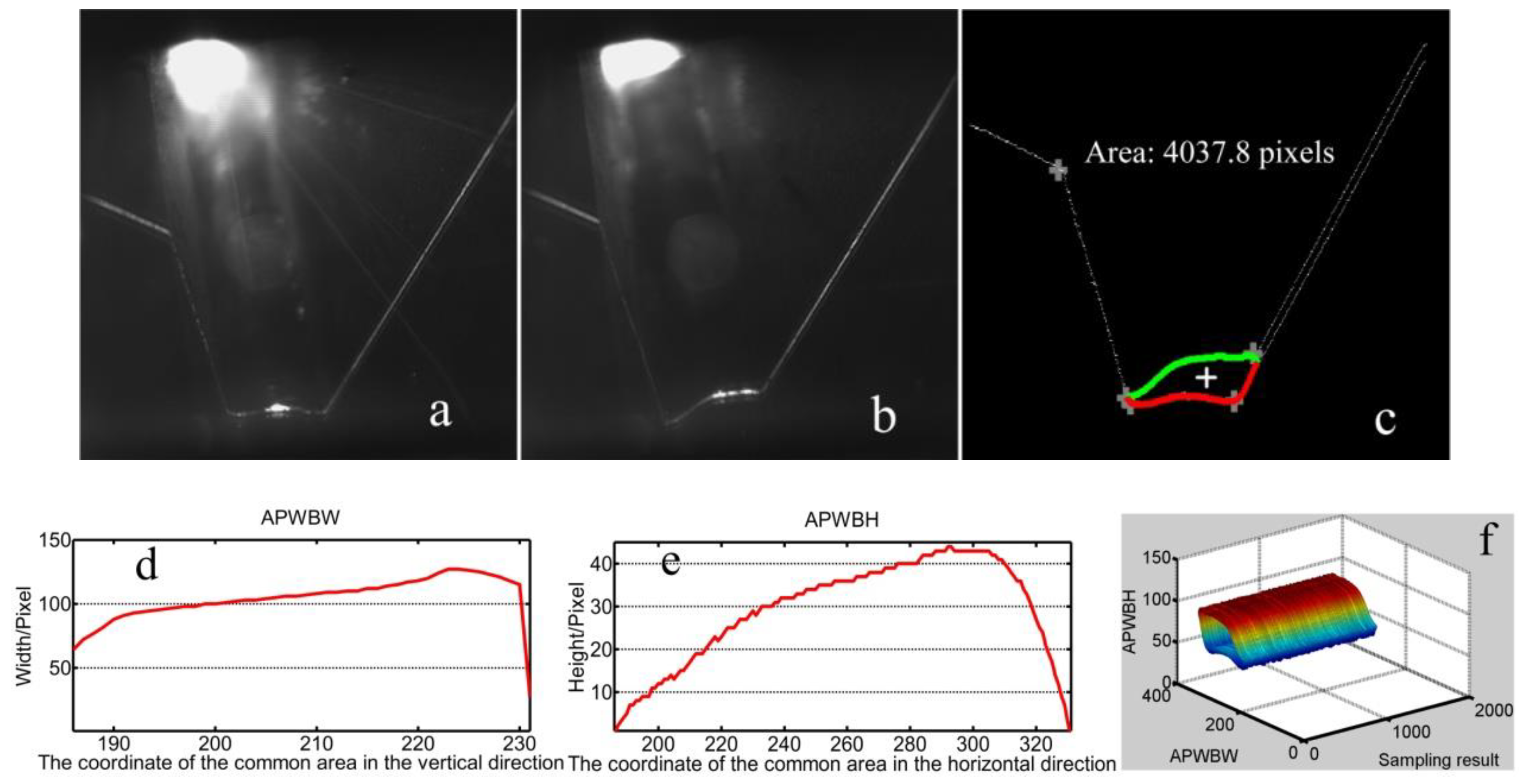

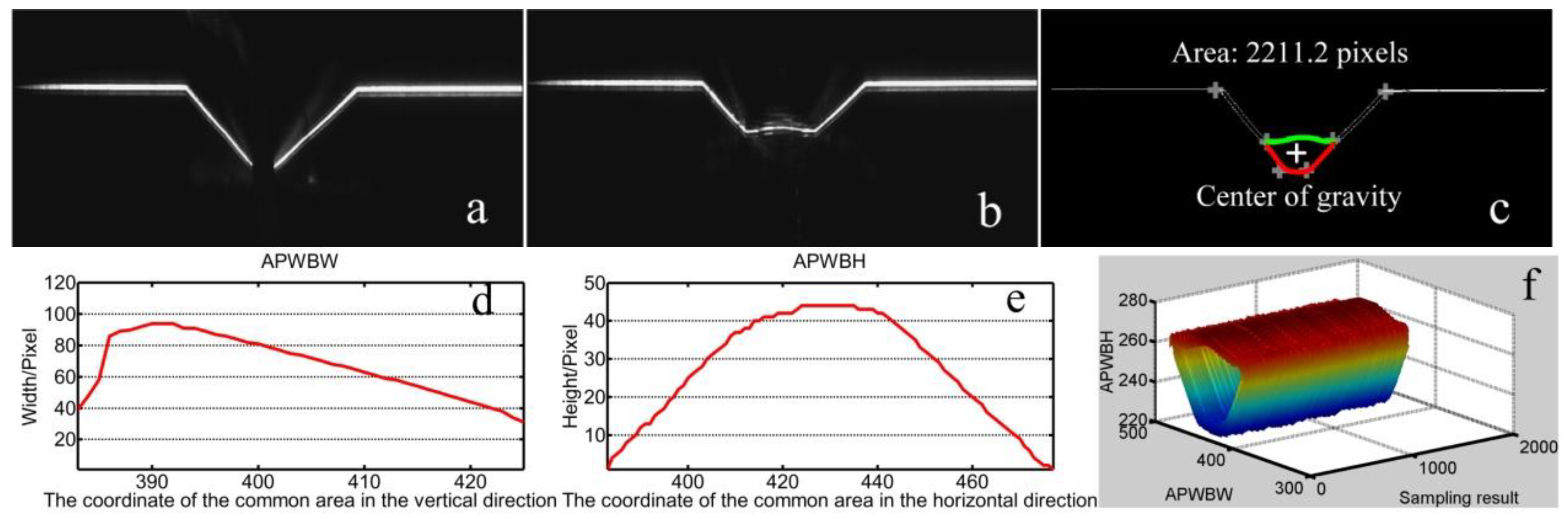

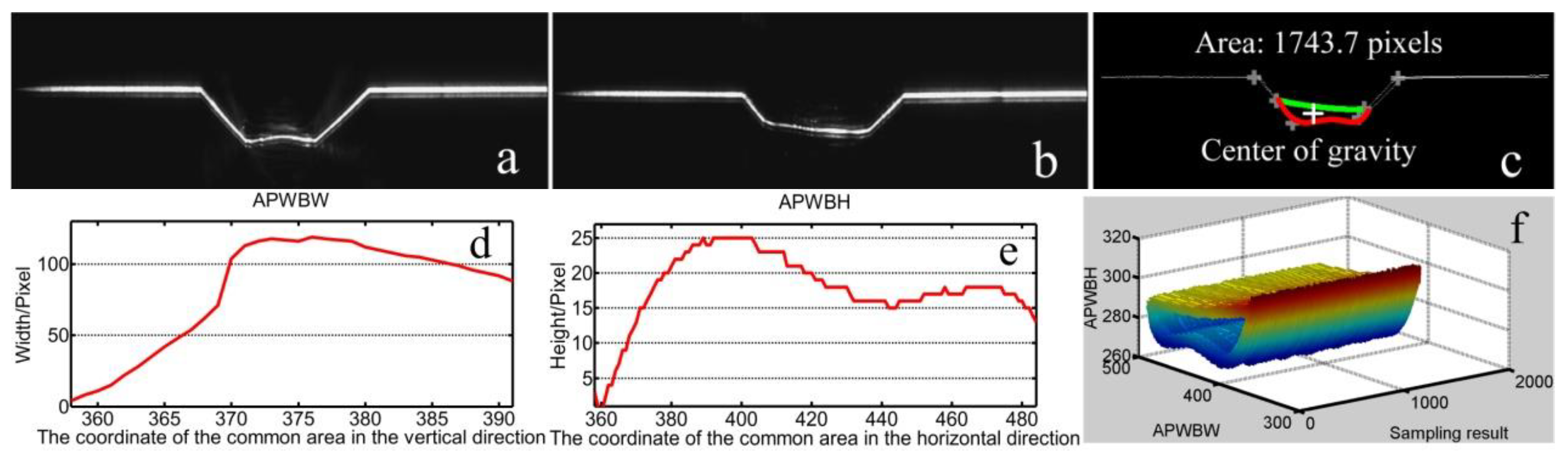

5.1. Sub Pixel Discrimination of WBGFs

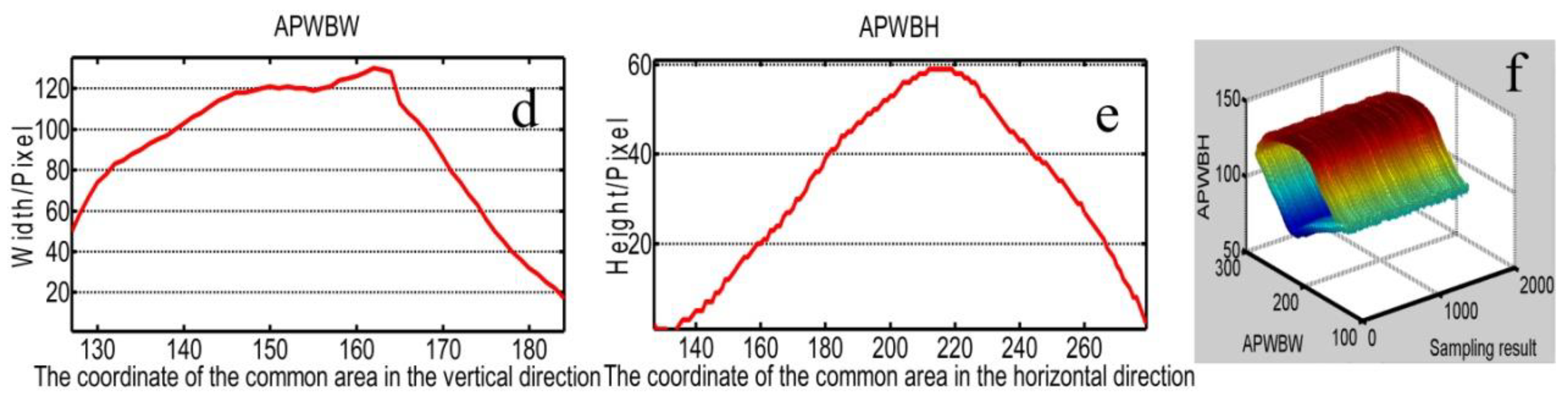

5.2. Modeling Process of WBGFs

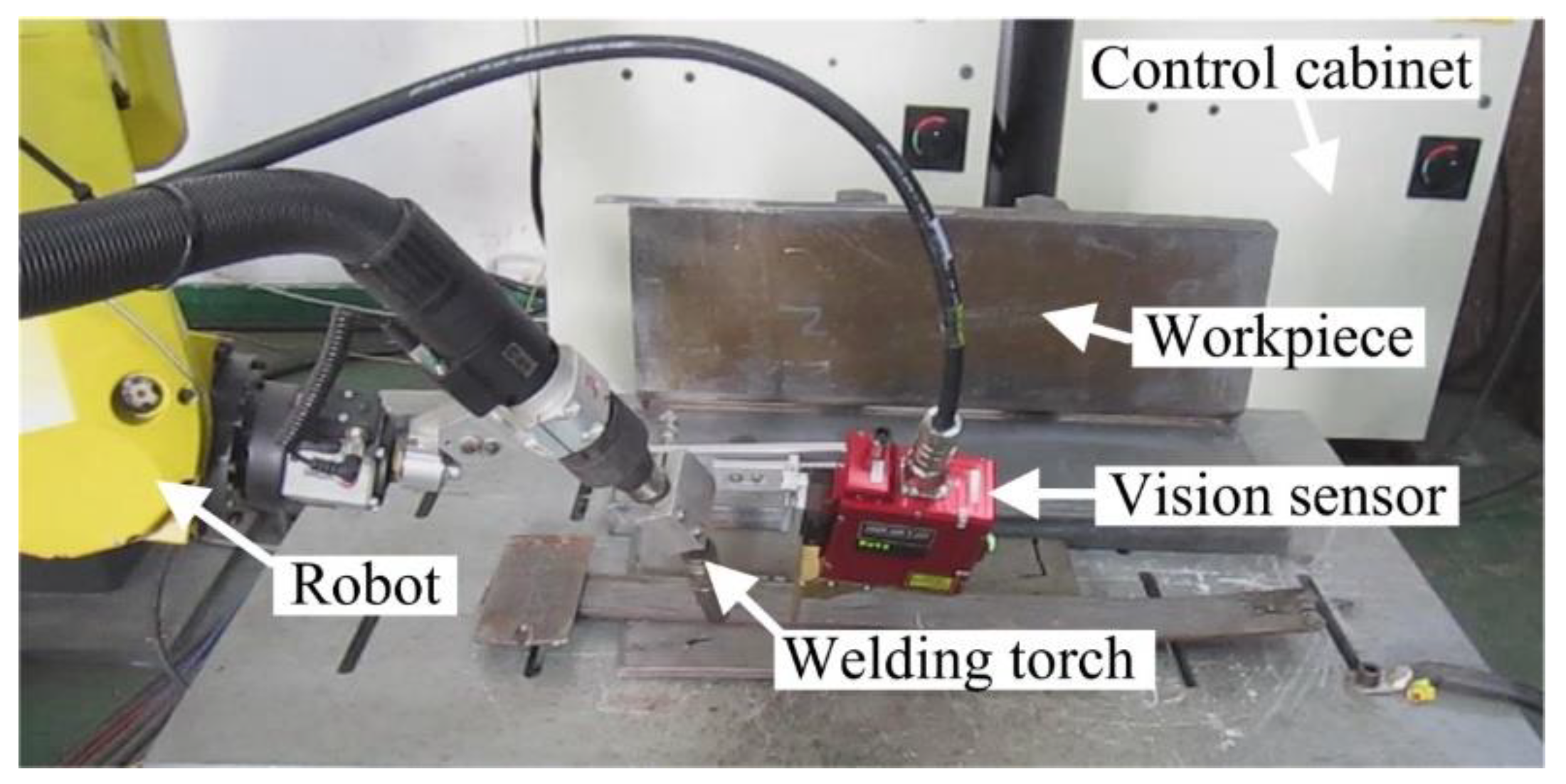

6. Experimental Results

7. Discussion

8. Conclusions

- (1) The proposed feature point identification method, combined with the weld seam profile extraction method, adapts to various weld seam profiles. It provides a valuable reference for visual information acquisition in visual-sensing-based automated welding.

- (2) Fault detection and diagnosis of feature point identification, based on the cubic exponential smoothing method, improves the identification accuracy to 1.50 pixels. This optimization process has potential value for improving tracking accuracy and welding quality.

- (3) The proposed modeling method can obtain the area, center of gravity, and all-position width and height of the weld bead in real time in gas metal arc welding with typical joints. It provides more effective evidence for controlling weld formation and planning, particularly during multipass arc welding.

Author Contributions

Funding

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, Y.; Li, D.; Pan, Z.; Ma, G.; Yu, L.; Yuan, H.; Le, J. Dynamic Modeling of Weld Bead Geometry Features in Thick Plate GMAW Based on Machine Vision and Learning. Sensors 2020, 20, 7104. https://doi.org/10.3390/s20247104
