Human Sentiment and Activity Recognition in Disaster Situations Using Social Media Images Based on Deep Learning

Abstract

1. Introduction

- We extend sentiment analysis from text to visual content and combine it with the associated human activity, applying both to the more difficult and critical problem of disaster analysis, which typically requires several artifacts and other relevant contextual details in the images.

- We propose a fusion architecture of deep learning models for the automated analysis of sentiment and associated human activity in realistic disaster-related social media images.

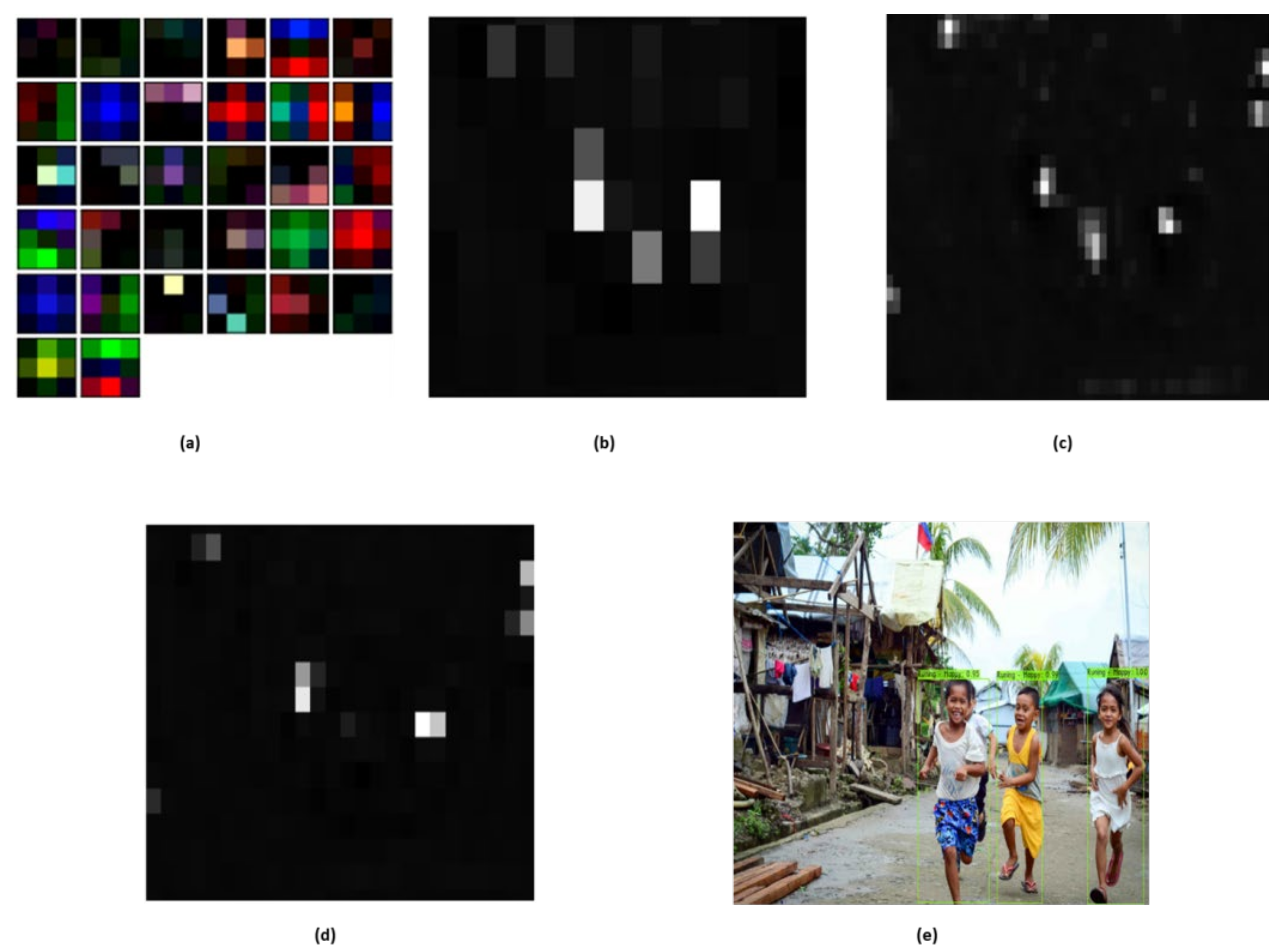

- We fuse a deep human count tracker with the YOLO model, which enables tracking of multiple persons in occluded environments with fewer identity switches and provides an exact count of the people at risk in the visual content of a disaster situation.

- Assuming that different deep models respond differently to the same image by extracting diverse but complementary image features, we evaluate various benchmark pre-trained deep models separately or in combination.

- We conducted a crowdsourcing survey to annotate a dataset of disaster-related images collected from social networks with human sentiments and the corresponding activity responses; 1210 participants annotated 3995 images.
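One common way to merge several crowd annotations of the same image into a single label is majority voting. The sketch below illustrates that idea under stated assumptions; it is not presented as the aggregation procedure used in the study. The helper name `aggregate_labels`, the `min_agreement` parameter, and the image IDs are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional


def aggregate_labels(
    votes: Dict[str, List[str]], min_agreement: float = 0.5
) -> Dict[str, Optional[str]]:
    """Merge crowd annotations per image by majority vote (hypothetical helper).

    An image keeps its most frequent label only if that label received more than
    `min_agreement` of the votes and is not tied; otherwise it maps to None so it
    can be re-annotated or discarded.
    """
    merged: Dict[str, Optional[str]] = {}
    for image_id, labels in votes.items():
        if not labels:
            merged[image_id] = None
            continue
        ranked = Counter(labels).most_common()
        top_label, top_count = ranked[0]
        tied = len(ranked) > 1 and ranked[1][1] == top_count
        share = top_count / len(labels)
        merged[image_id] = top_label if share > min_agreement and not tied else None
    return merged


votes = {
    "img_001": ["Sad", "Sad", "Feared"],  # 2 of 3 annotators agree
    "img_002": ["Happy", "Relief"],       # tie, no consensus
    "img_003": ["Sitting", "Sitting", "Standing", "Sitting"],
}
print(aggregate_labels(votes))
# {'img_001': 'Sad', 'img_002': None, 'img_003': 'Sitting'}
```

Requiring a strict majority rather than a plurality is a design choice: it trades dataset size for label reliability, which matters when annotators disagree about subtle emotions.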

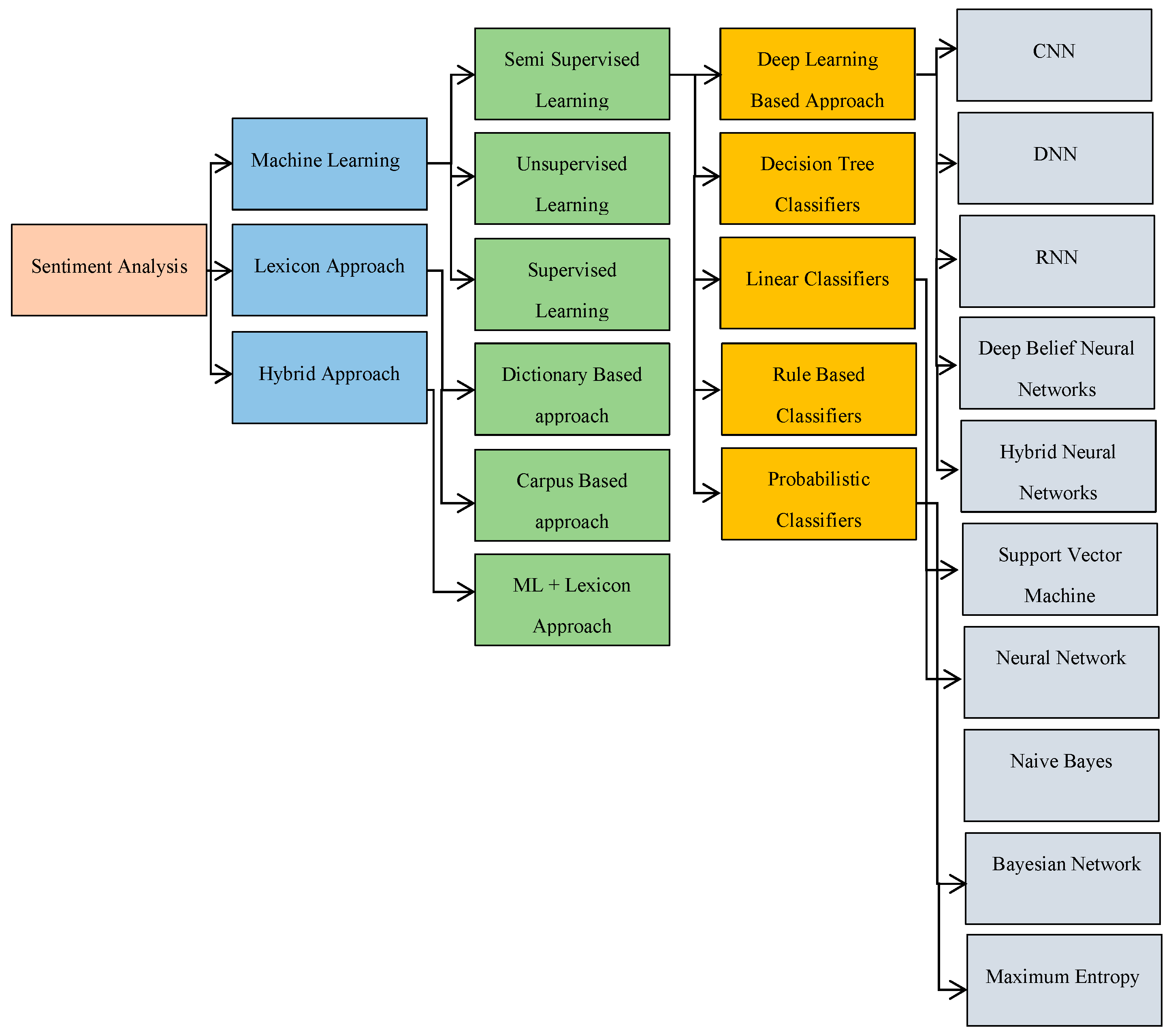
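The contributions above mention fusing a human-count tracker with YOLO so that each person is counted once even as they move between frames. The core idea of counting by identity can be sketched as follows, assuming per-frame person boxes are already available from a detector. This is a deliberately simplified, hypothetical stand-in rather than the paper's tracker: it associates detections with existing tracks greedily by intersection over union (IoU) instead of using the Hungarian method, and it has no motion prediction or appearance matching. The names `iou` and `track_and_count` and the `iou_threshold` value of 0.3 are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def track_and_count(
    frames: List[List[Box]], iou_threshold: float = 0.3
) -> Tuple[List[Dict[int, Box]], int]:
    """Give each person detection a persistent ID and count distinct people.

    Hypothetical simplification: detections are matched to the previous frame's
    tracks greedily by IoU (not the Hungarian method), with no motion model or
    appearance features, and a track that is unmatched in a frame is dropped.
    """
    tracks: Dict[int, Box] = {}  # track ID -> box in the previous frame
    history: List[Dict[int, Box]] = []
    next_id = 0
    for detections in frames:
        # Every (track, detection) pair, highest overlap first.
        pairs = sorted(
            ((iou(box, det), tid, d)
             for tid, box in tracks.items()
             for d, det in enumerate(detections)),
            reverse=True,
        )
        current: Dict[int, Box] = {}
        used = set()
        for score, tid, d in pairs:
            if score < iou_threshold:
                break  # remaining pairs overlap even less
            if tid in current or d in used:
                continue
            current[tid] = detections[d]
            used.add(d)
        # Unmatched detections start new identities.
        for d, det in enumerate(detections):
            if d not in used:
                current[next_id] = det
                next_id += 1
        tracks = current
        history.append(current)
    return history, next_id


frames = [
    [(0, 0, 10, 20), (50, 0, 60, 20)],
    [(2, 0, 12, 20), (52, 0, 62, 20)],
    [(4, 0, 14, 20), (54, 0, 64, 20), (100, 0, 110, 20)],  # a third person appears
]
history, total = track_and_count(frames)
print(total)  # 3
```

Because unmatched tracks are dropped immediately, a person hidden for a single frame returns with a new ID and is counted twice; handling such occlusions is exactly what a full tracker adds through motion and appearance cues.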

2. Related Work

3. Proposed Methodology

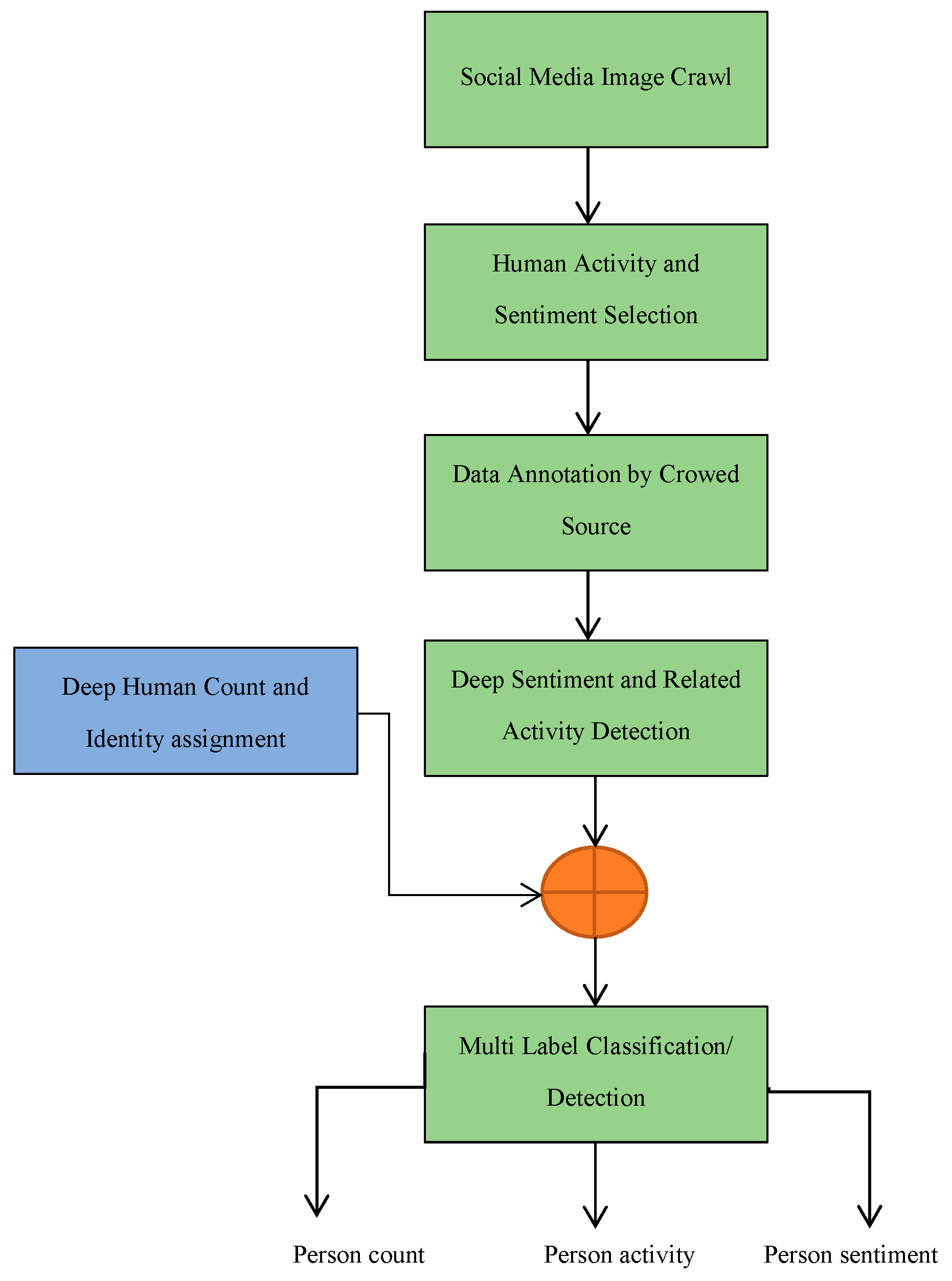

3.1. Data Crawling and Categorization of Human Sentiment with Associated Activity

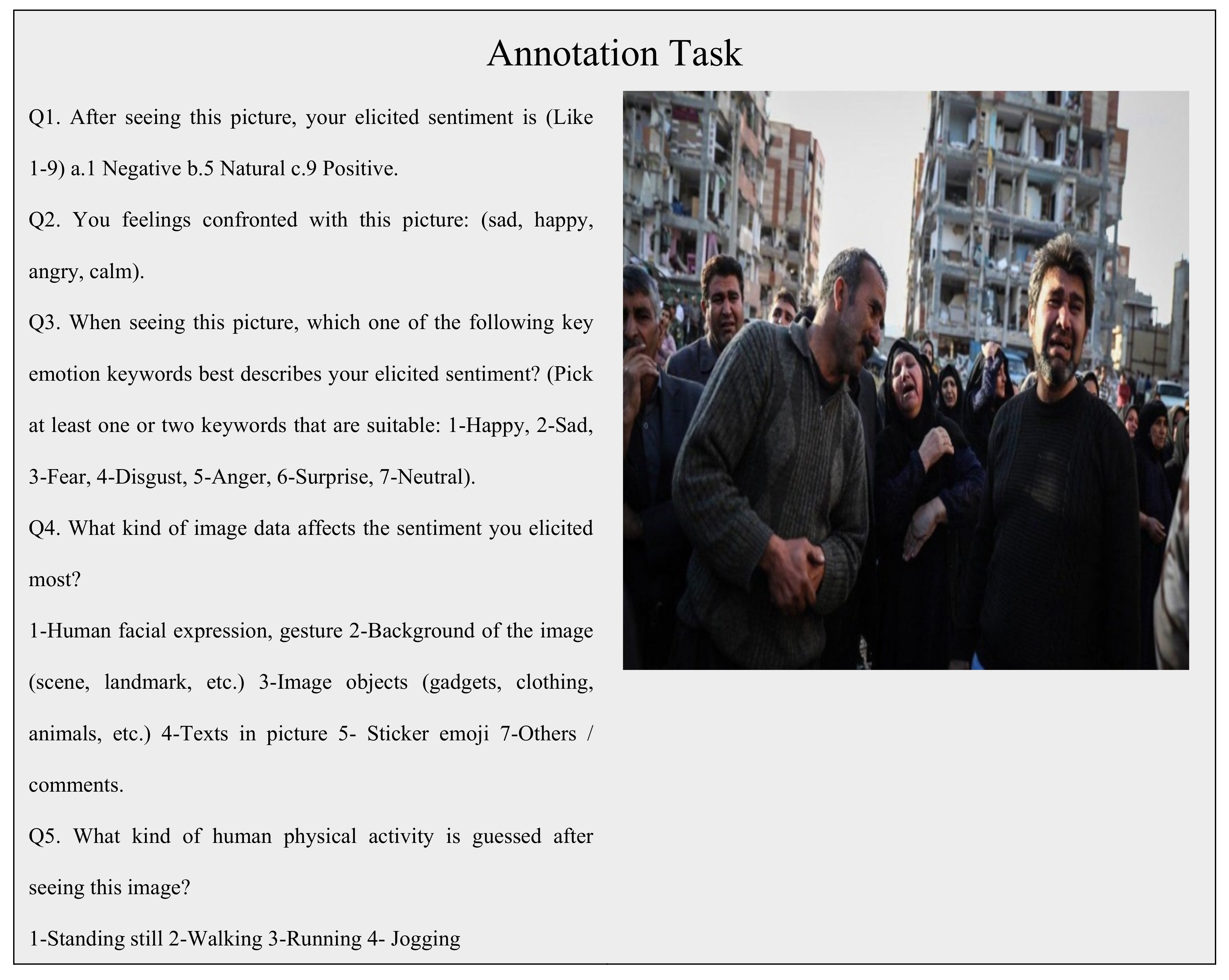

3.2. Crowdsourcing Study

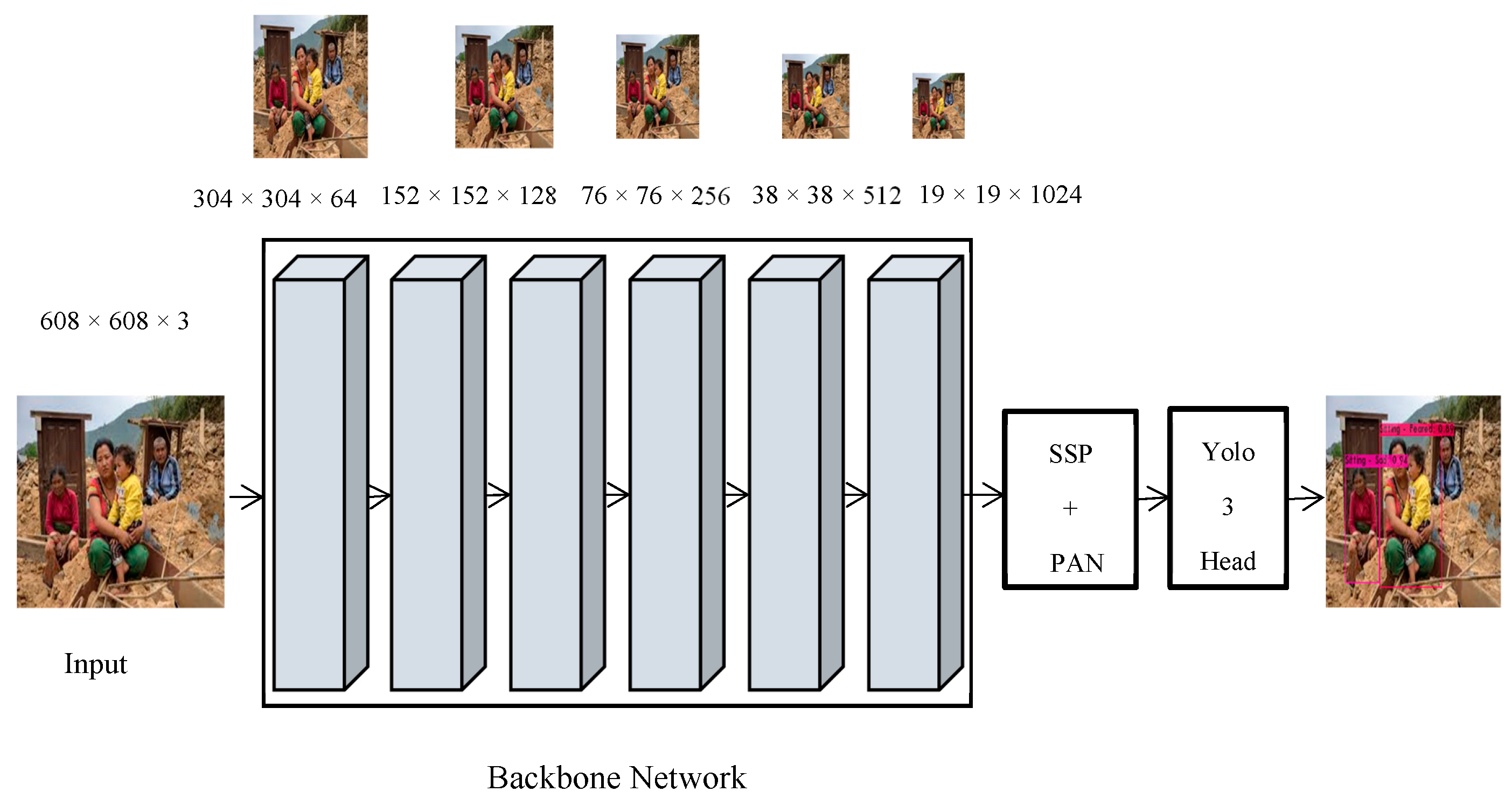

3.3. Deep Sentiment and Associated Activity Detector

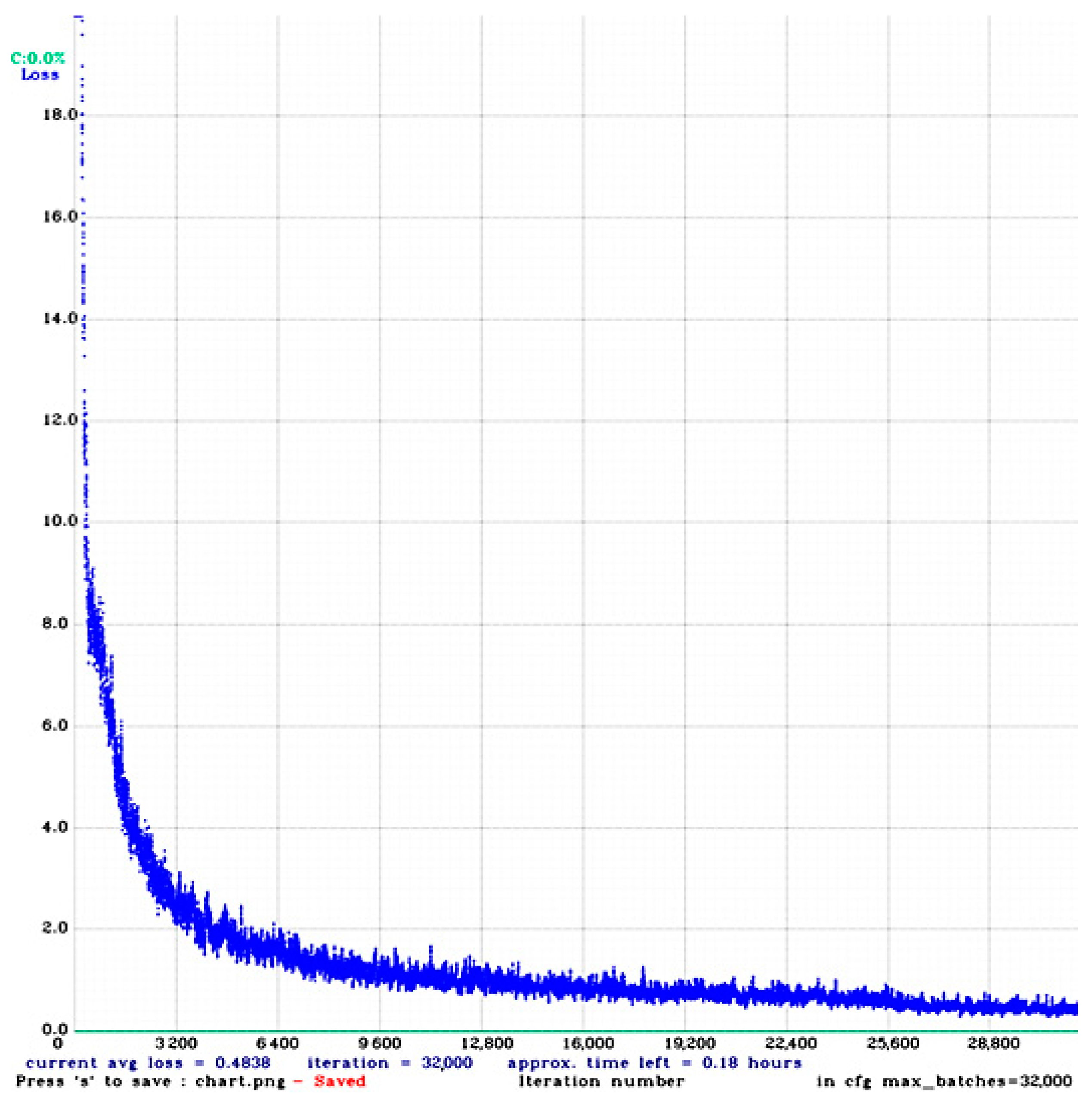

Loss Function

3.4. Deep Count and Identity Assignment

4. Experiments and Evaluations

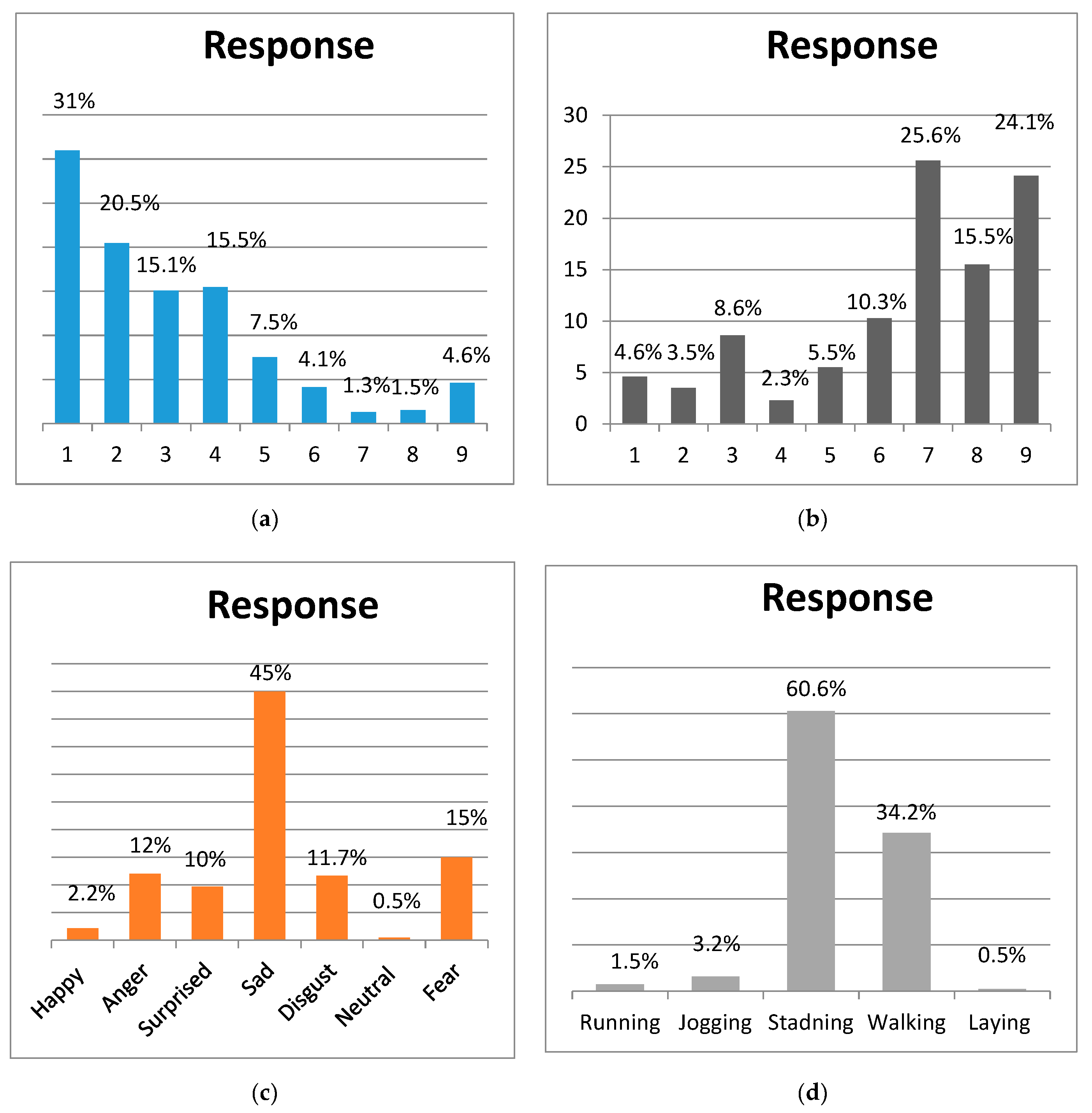

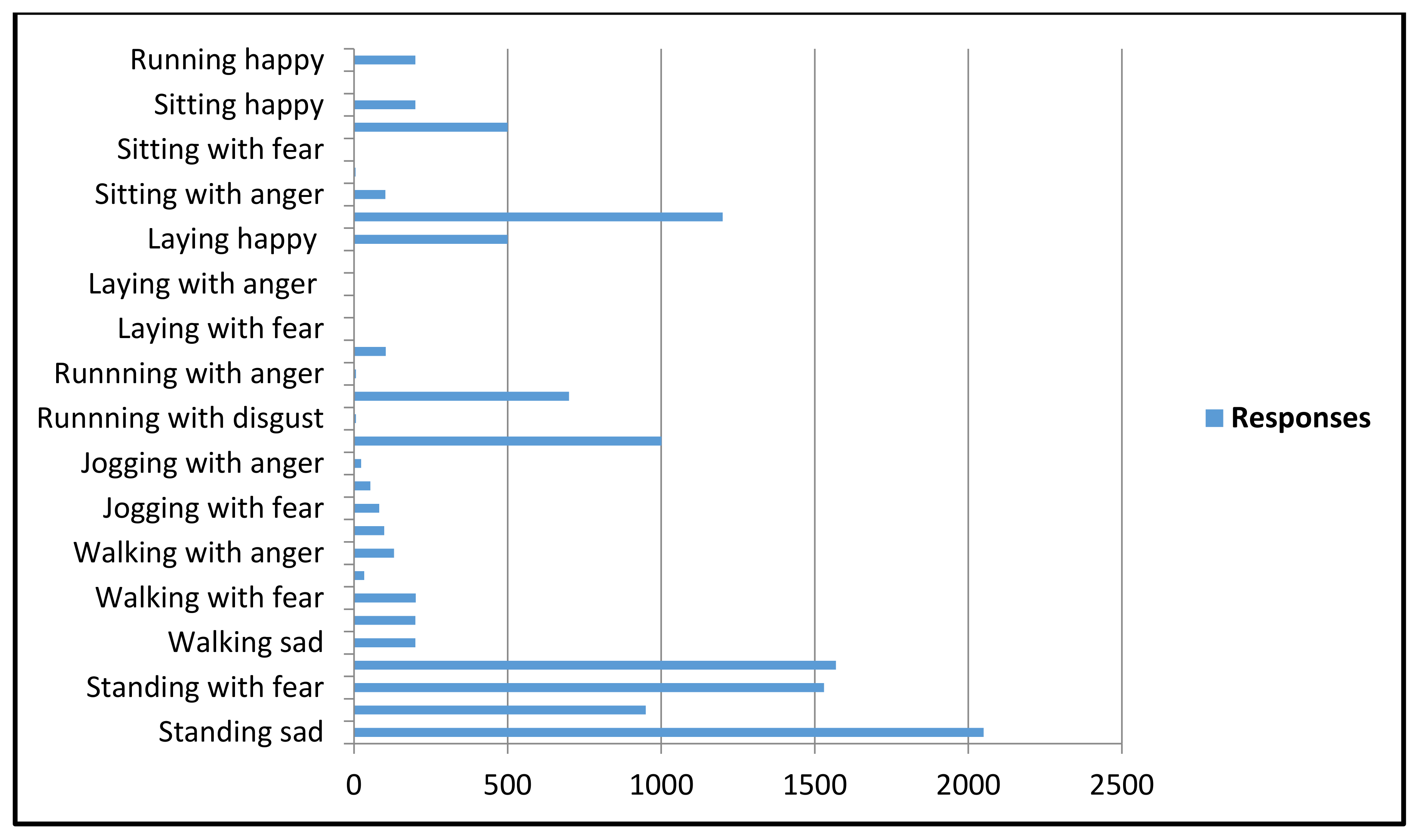

4.1. Crowdsourcing Analysis and Dataset

4.2. Experimental Results

- MOTA (multi-object tracking accuracy) is the primary metric; it combines three error sources, namely false positives, false negatives, and identity switches, into a single cumulative accuracy score. MOTA is defined by Equation (9):

  $$\mathrm{MOTA} = 1 - \frac{\sum_t \left(\mathrm{FN}_t + \mathrm{FP}_t + \mathrm{IDSW}_t\right)}{\sum_t \mathrm{GT}_t} \qquad (9)$$

  where $\mathrm{FN}_t$ is the number of missed targets, $\mathrm{FP}_t$ the number of false positives (ghost paths), $\mathrm{IDSW}_t$ the number of identity switches, and $\mathrm{GT}_t$ the number of ground-truth objects at time $t$. A target is considered missed when the intersection over union (IoU) of its prediction with the ground truth falls below a specified threshold. Note that MOTA can be negative, because the total number of errors can exceed the number of ground-truth objects.

- MOTP (multi-object tracking precision) measures how precisely the true positives are localized relative to their matched ground-truth targets, using bounding-box overlap:

  $$\mathrm{MOTP} = \frac{\sum_{t,i} d_{t,i}}{\sum_t c_t}$$

  where $c_t$ is the number of matches in frame $t$ and $d_{t,i}$ is the bounding-box overlap of target $i$ with its assigned ground-truth object. MOTP thus gives the average overlap between all matched predictions and their ground-truth targets; with the standard 50% overlap threshold for a match, it ranges from 50% to 100%.

- MT (mostly tracked) is the fraction of ground-truth trajectories that are covered by a track hypothesis for at least 80% of their life span.

- ML (mostly lost) is the fraction of ground-truth trajectories that are tracked for less than 20% of their life span.

- IDS (identity switches) is the number of times the identity assigned to a ground-truth trajectory changes.
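The tracking metrics above can be computed from per-frame error counts once predictions have been matched to ground truth. The sketch below assumes that matching has already been done (for example, with an IoU threshold of 0.5) and that the tracked fraction of each ground-truth trajectory's life span is known; the `FrameCounts` structure and the function names are hypothetical, not part of any benchmark toolkit.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class FrameCounts:
    """Matching results for one frame (hypothetical structure)."""
    fn: int    # missed targets (false negatives)
    fp: int    # false positives (ghost paths)
    idsw: int  # identity switches
    gt: int    # ground-truth objects in the frame
    overlaps: List[float] = field(default_factory=list)  # IoU of each matched pair


def mota(frames: List[FrameCounts]) -> float:
    """1 - sum(FN + FP + IDSW) / sum(GT); can be negative."""
    errors = sum(f.fn + f.fp + f.idsw for f in frames)
    return 1.0 - errors / sum(f.gt for f in frames)


def motp(frames: List[FrameCounts]) -> float:
    """Average overlap d_{t,i} over all matches (the sum of c_t)."""
    overlaps = [d for f in frames for d in f.overlaps]
    return sum(overlaps) / len(overlaps)


def mt_ml(coverage: Dict[str, float]) -> Tuple[float, float]:
    """Fractions of ground-truth trajectories that are mostly tracked
    (>= 80% of their life span) and mostly lost (< 20%)."""
    n = len(coverage)
    mt = sum(1 for c in coverage.values() if c >= 0.8) / n
    ml = sum(1 for c in coverage.values() if c < 0.2) / n
    return mt, ml


frames = [
    FrameCounts(fn=1, fp=0, idsw=0, gt=4, overlaps=[0.8, 0.7, 0.9]),
    FrameCounts(fn=0, fp=1, idsw=1, gt=4, overlaps=[0.6, 0.8, 0.7, 0.9]),
]
print(mota(frames))  # 0.625
print(mt_ml({"a": 0.95, "b": 0.5, "c": 0.1, "d": 0.8}))  # (0.5, 0.25)
```

A detector that produces many ghost boxes can push MOTA below zero: a single frame with 2 ground-truth objects, 2 misses, and 5 false positives gives 1 - 7/2 = -2.5.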

- Analyzing human sentiment and associated human activity in disaster situations aims to capture people's perceptions of images; crowdsourcing is therefore an effective way to obtain a dataset. Nevertheless, selecting the labels/tags for an effective crowdsourcing study is not straightforward.

- In applications such as disaster/catastrophe analysis, the three most widely used sentiment tags (positive, negative, and neutral), even when combined with associated human activities, are not sufficient to fully exploit visual sentiment and associated human activity analysis. The complexity increases as the range of sentiments/emotions and associated human activities is broadened.

- Most disaster-related social media images reflect negative feelings (e.g., sorrow, terror, discomfort, rage, and fear). Nevertheless, we found a variety of samples that can elicit positive feelings, such as excitement, joy, and relief.

- Disaster-related social media images contain ample features that elicit emotional responses. In the visual sentiment analysis of disaster-related images, objects (gadgets, clothing, broken buildings, and landmarks), color/contrast, human faces, movements, and poses provide vital cues, which can be key to representing people's sentiments and associated activities.

- As the crowdsourcing analysis also shows, human sentiments and associated activity tags are correlated, so a multi-label framework is likely to be the most promising direction for research.
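The observation that sentiment and activity tags are correlated suggests treating them as a single multi-label problem. A minimal sketch, assuming a model that outputs one logit per tag: each tag gets an independent sigmoid, every tag above a threshold is predicted, and training would minimize the mean binary cross-entropy over tags. The joint tag list, the 0.5 threshold, and the example logits are hypothetical, and this is not presented as the loss function used in the paper.

```python
import math
from typing import List

# Hypothetical joint label space: coarse sentiment tags followed by activity tags.
TAGS = ["Positive", "Neutral", "Negative", "Sitting", "Standing", "Walking", "Running", "Laying"]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_tags(logits: List[float], threshold: float = 0.5) -> List[str]:
    """Independent sigmoid per tag, so a sentiment and an activity can co-occur."""
    return [tag for tag, z in zip(TAGS, logits) if sigmoid(z) >= threshold]


def bce_loss(logits: List[float], targets: List[int], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy over all tags; targets are 0/1 per tag."""
    total = 0.0
    for z, y in zip(logits, targets):
        p = min(max(sigmoid(z), eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(targets)


logits = [-2.0, -1.5, 3.0, -2.5, 2.0, -1.0, -3.0, -4.0]
targets = [0, 0, 1, 0, 1, 0, 0, 0]
print(predict_tags(logits))  # ['Negative', 'Standing']
print(bce_loss(logits, targets))
```

Unlike a softmax over all tags, independent sigmoids do not force the tags to compete, which is what lets one image carry both a sentiment and an activity label.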

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Bevilacqua, A.; MacDonald, K.; Rangarej, A.; Widjaya, V.; Caulfield, B.; Kechadi, T. Human Activity Recognition with Convolutional Neural Networks. arXiv 2019, arXiv:1906.01935.

- Öztürk, N.; Ayvaz, S. Sentiment analysis on Twitter: A text mining approach to the Syrian refugee crisis. Telemat. Inform. 2018, 35, 136–147.

- Kušen, E.; Strembeck, M. An Analysis of the Twitter Discussion on the 2016 Austrian Presidential Elections. arXiv 2017, arXiv:1707.09939.

- Sadr, H.; Pedram, M.M.; Teshnehlab, M. A Robust Sentiment Analysis Method Based on Sequential Combination of Convolutional and Recursive Neural Networks. Neural Process. Lett. 2019, 50, 2745–2761.

- Barrett, L.F.; Adolphs, R.; Marsella, S.; Martinez, A.M.; Pollak, S.D. Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements. Psychol. Sci. Public Interest 2019.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. arXiv 2019, arXiv:1812.08008.

- Poria, S.; Majumder, N.; Hazarika, D.; Cambria, E.; Gelbukh, A.; Hussain, A. Multimodal Sentiment Analysis: Addressing Key Issues and Setting Up the Baselines. IEEE Intell. Syst. 2018, 33, 17–25.

- Imran, M.; Ofli, F.; Caragea, D.; Torralba, A. Using AI and Social Media Multimodal Content for Disaster Response and Management: Opportunities, Challenges, and Future Directions. Inf. Process. Manag. 2020, 57, 102261.

- Cognitive Robotics Lab, Tongmyong University. Available online: http://tubo.tu.ac.kr/ (accessed on 9 November 2020).

- Huq, M.R.; Ali, A.; Rahman, A. Sentiment analysis on Twitter data using KNN and SVM. IJACSA Int. J. Adv. Comput. Sci. Appl. 2017, 8, 19–25.

- Soni, S.; Sharaff, A. Sentiment analysis of customer reviews based on hidden markov model. In Proceedings of the 2015 International Conference on Advanced Research in Computer Science Engineering & Technology (ICARCSET 2015), Unnao, India, 6–7 March 2015; pp. 1–5.

- Pandey, A.C.; Rajpoot, D.S.; Saraswat, M. Twitter sentiment analysis using hybrid cuckoo search method. Inf. Process. Manag. 2017, 53, 764–779.

- Dang, N.C.; Moreno-García, M.N.; De la Prieta, F. Sentiment Analysis Based on Deep Learning: A Comparative Study. Electronics 2020, 9, 483.

- Zhang, X.; Zheng, X. Comparison of text sentiment analysis based on machine learning. In Proceedings of the 2016 15th International Symposium on Parallel and Distributed Computing (ISPDC), Fuzhou, China, 8–10 July 2016; pp. 230–233.

- Malik, V.; Kumar, A. Sentiment Analysis of Twitter Data Using Naive Bayes Algorithm. Int. J. Recent Innov. Trends Comput. Commun. 2018, 6, 120–125.

- Firmino Alves, A.L.; Baptista, C.D.S.; Firmino, A.A.; de Oliveira, M.G.; de Paiva, A.C. A Comparison of SVM versus naive-bayes techniques for sentiment analysis in tweets: A case study with the 2013 FIFA confederations cup. In Proceedings of the 20th Brazilian Symposium on Multimedia and the Web, João Pessoa, Brazil, 18–23 November 2014; pp. 123–130.

- Ortis, A.; Farinella, G.M.; Battiato, S. Survey on Visual Sentiment Analysis. arXiv 2020, arXiv:2004.11639.

- Priya, D.T.; Udayan, J.D. Affective emotion classification using feature vector of image based on visual concepts. Int. J. Electr. Eng. Educ. 2020.

- Machajdik, J.; Hanbury, A. Affective image classification using features inspired by psychology and art theory. In Proceedings of the 18th ACM international conference on Multimedia, Firenze, Italy, 25–29 October 2010; Association for Computing Machinery: New York, NY, USA; pp. 83–92.

- Yadav, A.; Vishwakarma, D.K. Sentiment analysis using deep learning architectures: A review. Artif. Intell. Rev. 2020, 53, 4335–4385.

- Seo, S.; Kim, C.; Kim, H.; Mo, K.; Kang, P. Comparative Study of Deep Learning-Based Sentiment Classification. IEEE Access 2020, 8, 6861–6875.

- Borth, D.; Ji, R.; Chen, T.; Breuel, T.; Chang, S.-F. Large-scale visual sentiment ontology and detectors using adjective noun pairs. In Proceedings of the 21st ACM international conference on Multimedia, Barcelona, Spain, 21–25 October 2013; Association for Computing Machinery: New York, NY, USA; pp. 223–232.

- Chen, T.; Borth, D.; Darrell, T.; Chang, S.-F. DeepSentiBank: Visual Sentiment Concept Classification with Deep Convolutional Neural Networks. arXiv 2014, arXiv:1410.8586.

- Al-Halah, Z.; Aitken, A.; Shi, W.; Caballero, J. Smile, Be Happy :) Emoji Embedding for Visual Sentiment Analysis. arXiv 2020, arXiv:1907.06160.

- Huang, F.; Wei, K.; Weng, J.; Li, Z. Attention-Based Modality-Gated Networks for Image-Text Sentiment Analysis. ACM Trans. Multimedia Comput. Commun. Appl. 2020, 16, 79.

- He, J.; Zhang, Q.; Wang, L.; Pei, L. Weakly Supervised Human Activity Recognition From Wearable Sensors by Recurrent Attention Learning. IEEE Sens. J. 2019.

- Memiş, G.; Sert, M. Detection of Basic Human Physical Activities With Indoor–Outdoor Information Using Sigma-Based Features and Deep Learning. IEEE Sens. J. 2019.

- Zhou, X.; Liang, W.; Wang, K.I.-K.; Wang, H.; Yang, L.T.; Jin, Q. Deep-Learning-Enhanced Human Activity Recognition for Internet of Healthcare Things. IEEE Internet Things J. 2020, 7, 6429–6438.

- Chen, W.-H.; Cho, P.-C.; Jiang, Y.-L. Activity recognition using transfer learning. Sens. Mater 2017, 29, 897–904.

- Hu, N.; Lou, Z.; Englebienne, G.; Kröse, B.J. Learning to Recognize Human Activities from Soft Labeled Data. In Proceedings of the Robotics: Science and Systems X, Berkeley, CA, USA, 12–16 July 2014.

- Amin, M.S.; Yasir, S.M.; Ahn, H. Recognition of Pashto Handwritten Characters Based on Deep Learning. Sensors 2020, 20, 5884.

- Alex, P.M.D.; Ravikumar, A.; Selvaraj, J.; Sahayadhas, A. Research on Human Activity Identification Based on Image Processing and Artificial Intelligence. Int. J. Eng. Technol. 2018, 7.

- Jaouedi, N.; Boujnah, N.; Bouhlel, M.S. A new hybrid deep learning model for human action recognition. J. King Saud Univ. Comput. Inf. Sci. 2020, 32, 447–453.

- Antón, M.Á.; Ordieres-Meré, J.; Saralegui, U.; Sun, S. Non-Invasive Ambient Intelligence in Real Life: Dealing with Noisy Patterns to Help Older People. Sensors 2019, 19, 3113.

- Shahmohammadi, F.; Hosseini, A.; King, C.E.; Sarrafzadeh, M. Smartwatch based activity recognition using active learning. In Proceedings of the 2017 IEEE/ACM International Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE), Philadelphia, PA, USA, 17–19 July 2017; pp. 321–329.

- Smartphone-Based Human Activity Recognition Using Bagging and Boosting. Proc. Comput. Sci. 2019, 163, 54–61.

- Štulienė, A.; Paulauskaite-Taraseviciene, A. Research on human activity recognition based on image classification methods. Comput. Sci. 2017.

- Alsheikh, M.A.; Selim, A.; Niyato, D.; Doyle, L.; Lin, S.; Tan, H.-P. Deep activity recognition models with triaxial accelerometers. arXiv 2015, arXiv:1511.04664.

- Ronao, C.A.; Cho, S.-B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

- Bhattacharya, S.; Lane, N.D. From smart to deep: Robust activity recognition on smartwatches using deep learning. In Proceedings of the 2016 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops), Sydney, NSW, Australia, 14–18 March 2016; pp. 1–6.

- Ospina-Bohórquez, A.; Gil-González, A.B.; Moreno-García, M.N.; de Luis-Reboredo, A. Context-Aware Music Recommender System Based on Automatic Detection of the User’s Physical Activity. In Proceedings of the Distributed Computing and Artificial Intelligence, 17th International Conference, L’Aquila, Italy, 13–19 June 2020; Dong, Y., Herrera-Viedma, E., Matsui, K., Omatsu, S., González Briones, A., Rodríguez González, S., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 142–151.

- Luo, J.; Joshi, D.; Yu, J.; Gallagher, A. Geotagging in multimedia and computer vision—A survey. Multimed. Tools Appl. 2011, 51, 187–211.

- de Albuquerque, J.P.; Herfort, B.; Brenning, A.; Zipf, A. A geographic approach for combining social media and authoritative data towards identifying useful information for disaster management. Int. J. Geogr. Inf. Sci. 2015, 29, 667–689.

- Kumar, A.; Singh, J.P.; Dwivedi, Y.K.; Rana, N.P. A deep multi-modal neural network for informative Twitter content classification during emergencies. Ann. Oper. Res. 2020.

- Sadiq Amin, M.; Ahn, H. Earthquake Disaster Avoidance Learning System Using Deep Learning. Cogn. Syst. Res. 2020.

- Soleymani, M.; Garcia, D.; Jou, B.; Schuller, B.; Chang, S.-F.; Pantic, M. A survey of multimodal sentiment analysis. Image Vis. Comput. 2017, 65, 3–14.

- Cowen, A.S.; Keltner, D. Self-report captures 27 distinct categories of emotion bridged by continuous gradients. Proc. Natl. Acad. Sci. USA 2017, 114, E7900–E7909.

- HireOwl: Connecting Businesses to University Students. Available online: https://www.hireowl.com/ (accessed on 7 November 2020).

- Wang, C.-Y.; Mark Liao, H.-Y.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–30 June 2016; pp. 770–778.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems, Transaction of ASME. J. Basic Eng. 1961, 83, 95–108.

- Chopra, S.; Notarstefano, G.; Rice, M.; Egerstedt, M. A Distributed Version of the Hungarian Method for Multirobot Assignment. IEEE Trans. Robot. 2017, 33, 932–947.

- Zheng, L.; Bie, Z.; Sun, Y.; Wang, J.; Su, C.; Wang, S.; Tian, Q. MARS: A video benchmark for large-scale person re-identification. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 6–16 October 2016; Springer: Cham, Switzerland; pp. 868–884.

- Iandola, F.; Moskewicz, M.; Karayev, S.; Girshick, R.; Darrell, T.; Keutzer, K. DenseNet: Implementing efficient convnet descriptor pyramids. arXiv 2014, arXiv:1404.1869.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1097–1105.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Amancio, D.R.; Comin, C.H.; Casanova, D.; Travieso, G.; Bruno, O.M.; Rodrigues, F.A.; da Fontoura Costa, L. A Systematic Comparison of Supervised Classifiers. PLoS ONE 2014, 9, e94137.

- Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A benchmark for multi-object tracking. arXiv 2016, arXiv:1603.00831.

- Yu, F.; Li, W.; Li, Q.; Liu, Y.; Shi, X.; Yan, J. POI: Multiple object tracking with high performance detection and appearance feature. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland; pp. 36–42.

- Keuper, M.; Tang, S.; Zhongjie, Y.; Andres, B.; Brox, T.; Schiele, B. A multi-cut formulation for joint segmentation and tracking of multiple objects. arXiv 2016, arXiv:1607.06317.

- Lee, B.; Erdenee, E.; Jin, S.; Nam, M.Y.; Jung, Y.G.; Rhee, P.K. Multi-class multi-object tracking using changing point detection. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 68–83.

- Sanchez-Matilla, R.; Poiesi, F.; Cavallaro, A. Online multi-target tracking with strong and weak detections. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 84–99.

| Reference | Techniques | Approach | Input Source | Activity | Performance |
|---|---|---|---|---|---|
| Alex et al. [32] | Naive Bayes, SVM, MLP, RF | Human activity classification | Images | Walking, sleeping, holding a phone | 86% |
| Jaouedi et al. [33] | Gated Recurrent Unit, KF, GMM | Deep learning for human activity recognition | Video frames | Boxing, walking, running, waving of hands | 96.3% |
| Antón et al. [34] | RF | High-level, non-invasive ambient intelligence that helps predict abnormal behaviors | Ambient sensors | Abnormal activities: militancy, yelling, vocal violence, physical abuse, hostility | 98.0% |
| Shahmohammadi et al. [35] | RF, Extra Trees, Naive Bayes, Logistic Regression, SV | Classification of human activities from smartwatches | Smartwatch sensors | Walk, run, sitting | 93% |
| Abdulhamit et al. [36] | ANN, k-NN, SVM, Quadratic | Classification of human activities using smartphones | Smartphone sensors | Walking | 84.3% |
| Štulienė et al. [37] | AlexNet, CaffeRef, k-NN, SVM, BoF | Activity classification using images | Images | Indoor activities: working on a computer, sleeping, walking | 90.75% |
| Alsheikh et al. [38] | RBM | Deep learning-based activity recognition using triaxial accelerometers | Body sensors | Walking, running, standing | 98% |
| Ronao et al. [39] | ConvNet, SVM | Classification of human activities using smartphones | Smartphone sensors | Walking upstairs, walking downstairs | 95.75% |
| Bhattacharya et al. [40] | RBM | Recognition of human activities using smartwatches based on deep learning | Smartwatch sensors (ambient sensors) | Gesture-based features, walking, running, standing | 72% |

| Sets | Sentiment Tags | Activity Tags |
|---|---|---|
| Set 1 | Negative, Positive, Neutral | Sitting, Standing, Running, Lying, Jogging |
| Set 2 | Happy, Sad, Neutral, Feared | |
| Set 3 | Anger, Disgust, Joy, Surprised, Excited, Sad, Pained, Crying, Feared, Anxious, Relieved | |

| Layer | Filters | Output |
|---|---|---|
| DarknetConv2D + BN + Mish | 32 | 608 × 608 |
| ResBlock | 64 | 304 × 304 |
| 2 × ResBlock | 128 | 152 × 152 |
| 8 × ResBlock | 256 | 76 × 76 |
| 8 × ResBlock | 512 | 38 × 38 |
| 4 × ResBlock | 1024 | 19 × 19 |
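The backbone table above follows a regular pattern: after the 32-filter stem, each stage halves the spatial resolution and doubles the number of filters, so a 608 × 608 input ends as a 19 × 19 feature map (a total stride of 32). The short sketch below reproduces the rows under that assumption; the function name `backbone_shapes` is illustrative, and it computes shapes only, not the network itself.

```python
from typing import List, Tuple


def backbone_shapes(
    input_size: int = 608, stem_filters: int = 32, num_stages: int = 5
) -> List[Tuple[int, int]]:
    """(filters, spatial size) after the stem and after each ResBlock stage.

    Assumes every stage halves the resolution and doubles the filters, which is
    the pattern in the table above; it computes shapes only, not the network.
    """
    total_stride = 2 ** num_stages
    if input_size % total_stride != 0:
        raise ValueError(f"input size must be a multiple of {total_stride}")
    shapes = [(stem_filters, input_size)]
    for _ in range(num_stages):
        filters, size = shapes[-1]
        shapes.append((filters * 2, size // 2))
    return shapes


print(backbone_shapes())
# [(32, 608), (64, 304), (128, 152), (256, 76), (512, 38), (1024, 19)]
```

The divisibility check reflects why input sizes for such backbones are chosen as multiples of 32: otherwise a stride-2 stage would have to round an odd resolution.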

| Layer | Patch Size | Stride | Output |
|---|---|---|---|
| Conv | 3 × 3 | 1 | 32 × 128 × 64 |
| Conv | 3 × 3 | 1 | 32 × 128 × 64 |
| Max Pool | 3 × 3 | 2 | 32 × 64 × 32 |
| Residual Block | 3 × 3 | 1 | 32 × 64 × 32 |
| Residual Block | 3 × 3 | 1 | 32 × 64 × 32 |
| Residual Block | 3 × 3 | 2 | 64 × 32 × 16 |
| Residual Block | 3 × 3 | 1 | 64 × 32 × 16 |
| Residual Block | 3 × 3 | 2 | 128 × 16 × 8 |
| Residual Block | 3 × 3 | 1 | 128 × 16 × 8 |
| Dense | - | - | 128 |
| Batch and L2 normalization | - | - | 128 |
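The descriptor network above ends with a 128-unit dense layer followed by batch and L2 normalization, so its embeddings lie on the unit sphere and appearance similarity between a new detection and an existing track can be measured with cosine distance. The sketch below shows one way such descriptors could support identity assignment: each track keeps a small gallery of past descriptors, and a detection is matched to the track with the smallest cosine distance if it falls under a gating threshold. The gallery scheme, the `max_distance` value of 0.2, and the function names are assumptions for illustration, and toy 3-dimensional vectors stand in for real 128-dimensional embeddings.

```python
import math
from typing import Dict, List, Optional

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def cosine_distance(a: Vector, b: Vector) -> float:
    """1 - cosine similarity; for unit-length vectors this is 1 - dot product."""
    return 1.0 - sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def best_track(
    embedding: Vector, galleries: Dict[int, List[Vector]], max_distance: float = 0.2
) -> Optional[int]:
    """Return the ID of the track whose closest stored descriptor is nearest to
    `embedding`, or None if no track is within `max_distance` (hypothetical gate)."""
    best_id: Optional[int] = None
    best_dist = max_distance
    for track_id, gallery in galleries.items():
        if not gallery:
            continue
        dist = min(cosine_distance(embedding, g) for g in gallery)
        if dist <= best_dist:
            best_id, best_dist = track_id, dist
    return best_id


# Toy 3-D descriptors standing in for real 128-D embeddings.
galleries = {1: [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]], 2: [[0.0, 1.0, 0.0]]}
print(best_track([0.95, 0.05, 0.0], galleries))  # 1
print(best_track([0.0, 0.0, 1.0], galleries))    # None
```

Taking the minimum over a gallery, rather than comparing only with the last descriptor, makes re-identification more tolerant of pose changes and partial occlusion.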

| Sentiment Tags | Number of Images |
|---|---|
| Positive | 518 |
| Neutral | 480 |
| Negative | 2002 |

| Physical Activity Tags | Number of Images |
|---|---|
| Sitting | 780 |
| Standing | 713 |
| Walking | 782 |
| Running | 708 |
| Laying | 17 |

| Sentiment Tags | Number of Images |
|---|---|
| Happy | 413 |
| Excited | 105 |
| Feared | 608 |
| Anger | 92 |
| Neutral | 480 |
| Sad | 1123 |
| Disgust | 203 |
| Surprised | 180 |
| Relief | 200 |

| Sentiment | Precision (%) | Recall (%) | F1 Score (%) | AP (%) |
|---|---|---|---|---|
| Negative | 96.75 | 95.19 | 95.81 | 97.75 |
| Neutral | 94.21 | 94.02 | 94.98 | 97.62 |
| Positive | 96.52 | 95.84 | 95.26 | 97.76 |

| Activity | Precision (%) | Recall (%) | F1 Score (%) | AP (%) |
|---|---|---|---|---|
| Sitting | 95.20 | 95.04 | 95.91 | 97.02 |
| Standing | 96.21 | 96.01 | 96.46 | 97.62 |
| Walking | 96.35 | 95.93 | 96.02 | 97.36 |
| Running | 93.02 | 92.09 | 93.10 | 97.21 |
| Laying | 96.35 | 96.21 | 96.45 | 97.16 |
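The per-class precision, recall, and F1 scores reported in these tables follow the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is the harmonic mean of precision and recall. A small sketch that computes them from lists of true and predicted labels; the helper name `per_class_metrics` and the example labels are made up for illustration.

```python
from typing import Dict, List, Tuple


def per_class_metrics(
    y_true: List[str], y_pred: List[str]
) -> Dict[str, Tuple[float, float, float]]:
    """Precision, recall, and F1 (harmonic mean of P and R) for each class."""
    classes = sorted(set(y_true) | set(y_pred))
    metrics: Dict[str, Tuple[float, float, float]] = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics[c] = (precision, recall, f1)
    return metrics


y_true = ["Sitting", "Sitting", "Standing", "Walking", "Walking", "Walking"]
y_pred = ["Sitting", "Standing", "Standing", "Walking", "Walking", "Sitting"]
for cls, (p, r, f1) in per_class_metrics(y_true, y_pred).items():
    print(f"{cls}: P={p:.2f} R={r:.2f} F1={f1:.2f}")
# Sitting: P=0.50 R=0.50 F1=0.50
# Standing: P=0.50 R=1.00 F1=0.67
# Walking: P=1.00 R=0.67 F1=0.80
```

Because F1 is a harmonic mean, a class's F1 always lies between its precision and its recall, and it is pulled toward the lower of the two.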

| Sentiment | Metric | Sitting | Standing | Walking | Running | Laying |
|---|---|---|---|---|---|---|
| Happy/Joy | Precision (%) | 98.21 | 97.02 | 95.71 | 90.53 | 89.01 |
| | Recall (%) | 97.51 | 96.90 | 95.10 | 91.23 | 89.00 |
| | F1 Score (%) | 97.76 | 96.95 | 95.13 | 90.15 | 88.15 |
| Anger | Precision (%) | 95.53 | 90.20 | 94.23 | 89.13 | 80.52 |
| | Recall (%) | 94.12 | 89.12 | 94.12 | 88.07 | 80.12 |
| | F1 Score (%) | 94.70 | 90.01 | 93.14 | 89.11 | 80.32 |
| Fear | Precision (%) | 97.73 | 96.23 | 93.54 | 91.23 | 92.13 |
| | Recall (%) | 97.19 | 96.05 | 92.78 | 91.11 | 91.89 |
| | F1 Score (%) | 96.46 | 96.12 | 92.17 | 90.52 | 91.78 |
| Sad | Precision (%) | 98.57 | 95.79 | 93.56 | 90.52 | 95.02 |
| | Recall (%) | 98.23 | 95.36 | 92.19 | 89.19 | 94.56 |
| | F1 Score (%) | 98.17 | 95.11 | 93.38 | 90.27 | 94.12 |
| Neutral | Precision (%) | 98.11 | 94.34 | 89.10 | 85.12 | 78.12 |
| | Recall (%) | 96.62 | 93.03 | 88.91 | 84.43 | 77.79 |
| | F1 Score (%) | 95.15 | 93.5 | 88.56 | 82.45 | 77.34 |
| Disgust | Precision (%) | 80.12 | 80.56 | 78.78 | 77.12 | 90.56 |
| | Recall (%) | 79.34 | 80.22 | 78.01 | 77.34 | 90.12 |
| | F1 Score (%) | 78.56 | 79.45 | 78.12 | 77.05 | 90.27 |
| Surprise | Precision (%) | 94.01 | 90.12 | 81.12 | 79.01 | 85.45 |
| | Recall (%) | 93.98 | 89.45 | 79.05 | 78.56 | 85.01 |
| | F1 Score (%) | 93.56 | 89.01 | 79.10 | 78.89 | 84.89 |
| Relief/Relax | Precision (%) | 96.12 | 96.23 | 95.29 | 92.78 | 90.12 |
| | Recall (%) | 96.00 | 95.13 | 94.84 | 92.19 | 90.14 |
| | F1 Score (%) | 95.78 | 96.04 | 95.11 | 92.25 | 89.34 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| ResNet-50 | 89.61 | 86.32 | 85.18 | 85.63 |
| ResNet-101 | 90.01 | 87.79 | 86.84 | 86.43 |
| DenseNet | 85.77 | 79.39 | 78.53 | 78.20 |
| VGGNet (ImageNet) | 92.12 | 88.64 | 87.63 | 87.89 |
| VGGNet (Places) | 92.88 | 89.92 | 88.43 | 89.07 |
| Inception-v3 | 82.59 | 76.38 | 68.81 | 71.60 |
| EfficientNet | 91.31 | 87.00 | 86.94 | 86.70 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| ResNet-50 | 82.74 | 80.43 | 85.61 | 82.14 |
| ResNet-101 | 85.55 | 79.26 | 85.08 | 81.16 |
| DenseNet | 81.53 | 78.21 | 89.30 | 82.27 |
| VGGNet (ImageNet) | 82.56 | 80.25 | 84.51 | 81.80 |
| VGGNet (Places) | 89.88 | 88.92 | 88.43 | 89.07 |
| Inception-v3 | 82.30 | 79.90 | 84.18 | 81.60 |
| EfficientNet | 82.25 | 80.83 | 82.70 | 81.39 |
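The tables above evaluate each pre-trained backbone on its own, while the paper also evaluates models in combination. One simple way to combine classifiers is late fusion: average the class probabilities produced by each model and take the most probable class. The sketch below illustrates weighted probability averaging with made-up logits from three hypothetical models (`model_a`, `model_b`, `model_c`); it is an assumption about how a combination could be formed, not the fusion architecture proposed in the paper.

```python
import math
from typing import Dict, List, Optional

CLASSES = ["Positive", "Neutral", "Negative"]


def softmax(logits: List[float]) -> List[float]:
    """Convert one model's logits to probabilities (max-subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(
    model_logits: Dict[str, List[float]], weights: Optional[Dict[str, float]] = None
) -> List[float]:
    """Weighted average of per-model class probabilities (equal weights by default)."""
    if weights is None:
        weights = {name: 1.0 for name in model_logits}
    total_weight = sum(weights[name] for name in model_logits)
    fused = [0.0] * len(CLASSES)
    for name, logits in model_logits.items():
        for k, p in enumerate(softmax(logits)):
            fused[k] += weights[name] * p / total_weight
    return fused


outputs = {
    "model_a": [0.2, 0.1, 1.5],
    "model_b": [0.3, 1.2, 1.0],  # on its own, this model would predict Neutral
    "model_c": [0.1, 0.2, 1.1],
}
fused = late_fusion(outputs)
print(CLASSES[max(range(len(fused)), key=fused.__getitem__)])  # Negative
```

Averaging probabilities lets models that extract different features outvote a single disagreeing model; per-model weights could, for instance, be tuned on a validation split.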


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sadiq, A.M.; Ahn, H.; Choi, Y.B. Human Sentiment and Activity Recognition in Disaster Situations Using Social Media Images Based on Deep Learning. Sensors 2020, 20, 7115. https://doi.org/10.3390/s20247115
