Real-Time Parallel-Serial LiDAR-Based Localization Algorithm with Centimeter Accuracy for GPS-Denied Environments

Abstract

1. Introduction

1.1. State of the Art

- inertial sensors and a digital map of the mine [54];

- PF-based fusion of localization data from inertial sensors, gyroscopes, speed sensors and ultrasonic sensors [55];

- a neural network that takes a video stream as input [58];

- a combination of low-cost sensors, namely a UWB sensor, a beacon, an IMU and a magnetic field sensor, using Wi-Fi signals [59]; or

- IMU data, LiDAR data and color camera images [60].

1.2. Novelty

- a novel localization algorithm in which a 3D triangular mesh map is used as the reference for localization,

- robot pose correction calculations for each LiDAR measurement in the triangular mesh,

- serial and parallel-serial implementations of the algorithm, and

- an evaluation of the proposed algorithm on data obtained from cave and mine gallery environments.

2. Material and Methods

2.1. Localization

- prediction of the robot’s position and orientation based on inertial navigation sensors and prior knowledge concerning localization and

- updating of the robot’s position relative to the map triangle closest to each LiDAR scan point.
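The two stages listed above can be illustrated with a minimal sketch. It assumes a planar pose (x, y, heading), a simple dead-reckoning motion model, and a point-to-plane distance standing in for an exact point-to-triangle distance. The names `predict_pose`, `to_map_frame` and `plane_distance` are hypothetical and are not taken from the paper.

```python
import math

def predict_pose(pose, v, omega, dt):
    """Dead-reckoning prediction of a planar pose (x, y, heading)."""
    x, y, th = pose
    return (x + v * math.cos(th) * dt,
            y + v * math.sin(th) * dt,
            th + omega * dt)

def to_map_frame(pose, p_local):
    """Transform a scan point from the robot frame into the map frame."""
    x, y, th = pose
    px, py, pz = p_local
    return (x + px * math.cos(th) - py * math.sin(th),
            y + px * math.sin(th) + py * math.cos(th),
            pz)

def plane_distance(p, tri):
    """Signed distance from point p to the plane of triangle tri
    (assumption: a stand-in for the exact point-to-triangle distance)."""
    a, b, c = tri
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    # Triangle normal from the cross product of two edges.
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(k * k for k in n))
    return sum((p[i] - a[i]) * n[i] for i in range(3)) / norm

# Prediction from odometry, then the residual that the update step would reduce.
pose = predict_pose((0.0, 0.0, 0.0), v=1.0, omega=0.0, dt=0.5)
wall = ((2.0, -1.0, 0.0), (2.0, 1.0, 0.0), (2.0, 0.0, 1.0))  # triangle on the plane x = 2
residual = plane_distance(to_map_frame(pose, (1.4, 0.0, 0.2)), wall)
```

In this toy setup the scan point lands 0.1 m in front of the wall triangle, so the residual is about -0.1 m; the update stage would shift the pose estimate to shrink residuals of this kind.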

Map Search

- find the corresponding cell on the 2D surface,

- find the eight neighboring cells,

- find all mesh vertices within a radius R of point P,

- find the corresponding vertices of the 3D triangular mesh, and

- for each vertex within radius R of point P, take all triangles that have that vertex as one of their vertices and choose the closest among them.
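The search steps above can be sketched as a grid-bucketed lookup. This is an illustrative sketch, not the paper's triangleSearch method: the names `build_index` and `triangle_search` are hypothetical, the grid cell size is assumed to be at least R so that the cell and its eight neighbors cover the search radius, and the distance to a triangle's centroid is used as a simple stand-in for the exact point-to-triangle distance.

```python
import math
from collections import defaultdict

def build_index(vertices, triangles, cell):
    """Bucket mesh vertices into 2D grid cells and record incident triangles."""
    grid = defaultdict(list)      # (ix, iy) -> vertex ids
    incident = defaultdict(list)  # vertex id -> triangle ids
    for vid, (x, y, _z) in enumerate(vertices):
        grid[(math.floor(x / cell), math.floor(y / cell))].append(vid)
    for tid, tri in enumerate(triangles):
        for vid in tri:
            incident[vid].append(tid)
    return grid, incident

def triangle_search(p, vertices, triangles, grid, incident, cell, radius):
    """Return the id of the candidate triangle closest to p, or None."""
    cx, cy = math.floor(p[0] / cell), math.floor(p[1] / cell)
    candidates = set()
    # Visit the cell containing p and its eight neighbors.
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for vid in grid.get((cx + dx, cy + dy), ()):
                # Keep only vertices within the 3D search radius.
                if math.dist(p, vertices[vid]) <= radius:
                    candidates.update(incident[vid])
    if not candidates:
        return None

    def centroid_distance(tid):
        a, b, c = (vertices[i] for i in triangles[tid])
        centroid = tuple((a[k] + b[k] + c[k]) / 3.0 for k in range(3))
        return math.dist(p, centroid)

    return min(candidates, key=centroid_distance)

vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0), (5, 5, 0), (6, 5, 0), (5, 6, 0)]
triangles = [(0, 1, 2), (1, 3, 2), (4, 5, 6)]
grid, incident = build_index(vertices, triangles, cell=1.0)
hit = triangle_search((0.2, 0.2, 0.1), vertices, triangles, grid, incident, 1.0, 1.0)
miss = triangle_search((20.0, 20.0, 0.0), vertices, triangles, grid, incident, 1.0, 1.0)
```

Restricting the lookup to nine cells keeps the cost per scan point independent of the total mesh size, which is the main reason for indexing the mesh this way.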

Algorithm 1: triangleSearch method.

2.2. EKF Procedure

3. Experimental

- the first section introduces the serial algorithm, where our initial solution to the problem is discussed;

- the second section introduces the parallel algorithm, where our method inspired by [69] is discussed; and

- the third section introduces the parallel-serial algorithm, where our innovative approach for satisfying given time constraints is detailed.

3.1. Serial Algorithm

- prediction;

- map search;

- calculation of the innovation, derivative, and Kalman gain; and

- updating.
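The four steps above can be sketched as a sequential scalar-measurement Kalman update. The sketch makes several simplifying assumptions that are not from the paper: the state is a 2D position only, the map search is stubbed out (each scan entry already carries its associated map plane as a unit normal `n` and offset `d`), and the measurement is the point-to-plane distance, which should be zero. The 0.25 m gate mirrors the permissible innovation value listed in the Results section. The function name `serial_ekf_step` is hypothetical.

```python
def serial_ekf_step(x, P, u, q_var, scan, r=1e-4, gate=0.25):
    """One serial cycle: predict once, then update after every scan point.

    x: [x, y] position, P: 2x2 covariance, u: odometry displacement,
    scan: list of (q, n, d) with q the point offset in the robot frame and
    (n, d) the associated map plane n . p = d (map search result, stubbed).
    """
    # 1. Prediction.
    x = [x[0] + u[0], x[1] + u[1]]
    P = [[P[0][0] + q_var, P[0][1]], [P[1][0], P[1][1] + q_var]]
    for q, n, d in scan:
        # 2. Map search would select (n, d) here; it is given in this sketch.
        # 3. Innovation, derivative (H = n) and Kalman gain.
        h = n[0] * (x[0] + q[0]) + n[1] * (x[1] + q[1]) - d
        innovation = -h  # the expected point-to-plane distance is zero
        if abs(innovation) > gate:
            continue  # reject implausible associations
        PHt = [P[0][0] * n[0] + P[0][1] * n[1],
               P[1][0] * n[0] + P[1][1] * n[1]]
        S = n[0] * PHt[0] + n[1] * PHt[1] + r
        K = [PHt[0] / S, PHt[1] / S]
        # 4. Updating; the next point sees the corrected state.
        x = [x[0] + K[0] * innovation, x[1] + K[1] * innovation]
        P = [[P[i][j] - K[i] * PHt[j] for j in range(2)] for i in range(2)]
    return x, P

# True position is (1, 2); walls at x = 5 and y = 4; the last entry is an outlier.
scan = [((4.0, 0.0), (1.0, 0.0), 5.0),
        ((0.0, 2.0), (0.0, 1.0), 4.0),
        ((4.0, 0.0), (1.0, 0.0), 6.0)]
x_est, P_est = serial_ekf_step([1.1, 1.9], [[0.01, 0.0], [0.0, 0.01]],
                               u=(0.0, 0.0), q_var=0.0, scan=scan)
```

Because the state is corrected after every point, each later point is evaluated against an improved estimate, but the loop cannot be distributed across threads without changing its behavior.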

Algorithm 2: Serial Kalman method.

3.2. Parallel Algorithm

- prediction,

- map search, and

- the calculation of the innovation and derivative.
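One way to picture the parallel variant is to evaluate the innovation and derivative of every scan point independently against the same predicted state, and then fold all of them into a single batch update. The sketch below is an assumption about how such a scheme could look, not the paper's implementation: it uses a 2D position state, a stubbed map search (each point carries its plane `n`, `d`), a thread pool in place of GPU threads, and the information form of the Kalman update. The names `innovation_and_derivative` and `parallel_update` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def innovation_and_derivative(x, point):
    """Per-point work that needs no data from other points."""
    q, n, d = point
    h = n[0] * (x[0] + q[0]) + n[1] * (x[1] + q[1]) - d
    return -h, n  # innovation and measurement Jacobian H = n

def invert_2x2(m):
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]

def parallel_update(x, P, scan, r=1e-4, gate=0.25):
    # Parallel part: all points are evaluated against the same state x.
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda pt: innovation_and_derivative(x, pt), scan))
    # Single batch update in information form:
    # P_new^-1 = P^-1 + sum(H^T H) / r,  x_new = x + P_new * sum(H^T nu) / r
    info = invert_2x2(P)
    rhs = [0.0, 0.0]
    for innovation, H in results:
        if abs(innovation) > gate:
            continue  # same gating idea as in a serial filter
        for i in range(2):
            rhs[i] += H[i] * innovation / r
            for j in range(2):
                info[i][j] += H[i] * H[j] / r
    P_new = invert_2x2(info)
    dx = [P_new[0][0] * rhs[0] + P_new[0][1] * rhs[1],
          P_new[1][0] * rhs[0] + P_new[1][1] * rhs[1]]
    return [x[0] + dx[0], x[1] + dx[1]], P_new

scan = [((4.0, 0.0), (1.0, 0.0), 5.0),   # wall at x = 5
        ((0.0, 2.0), (0.0, 1.0), 4.0),   # wall at y = 4
        ((4.0, 0.0), (1.0, 0.0), 6.0)]   # outlier, rejected by the gate
x_par, P_par = parallel_update([1.1, 1.9], [[0.01, 0.0], [0.0, 0.01]], scan)
```

For a linear measurement model the batch result matches a point-by-point update. With a nonlinear model all points are linearized around the same prediction, so the result can differ from that of a filter that corrects the state after every point.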

Algorithm 3: Parallel algorithm.

3.3. Parallel-Serial Algorithm

Algorithm 4: Parallel-serial Kalman method.

4. Calculation

4.1. Hardware

- an NVIDIA Jetson TX2 and

- an NVIDIA Jetson Xavier AGX.

4.2. Testing Environments

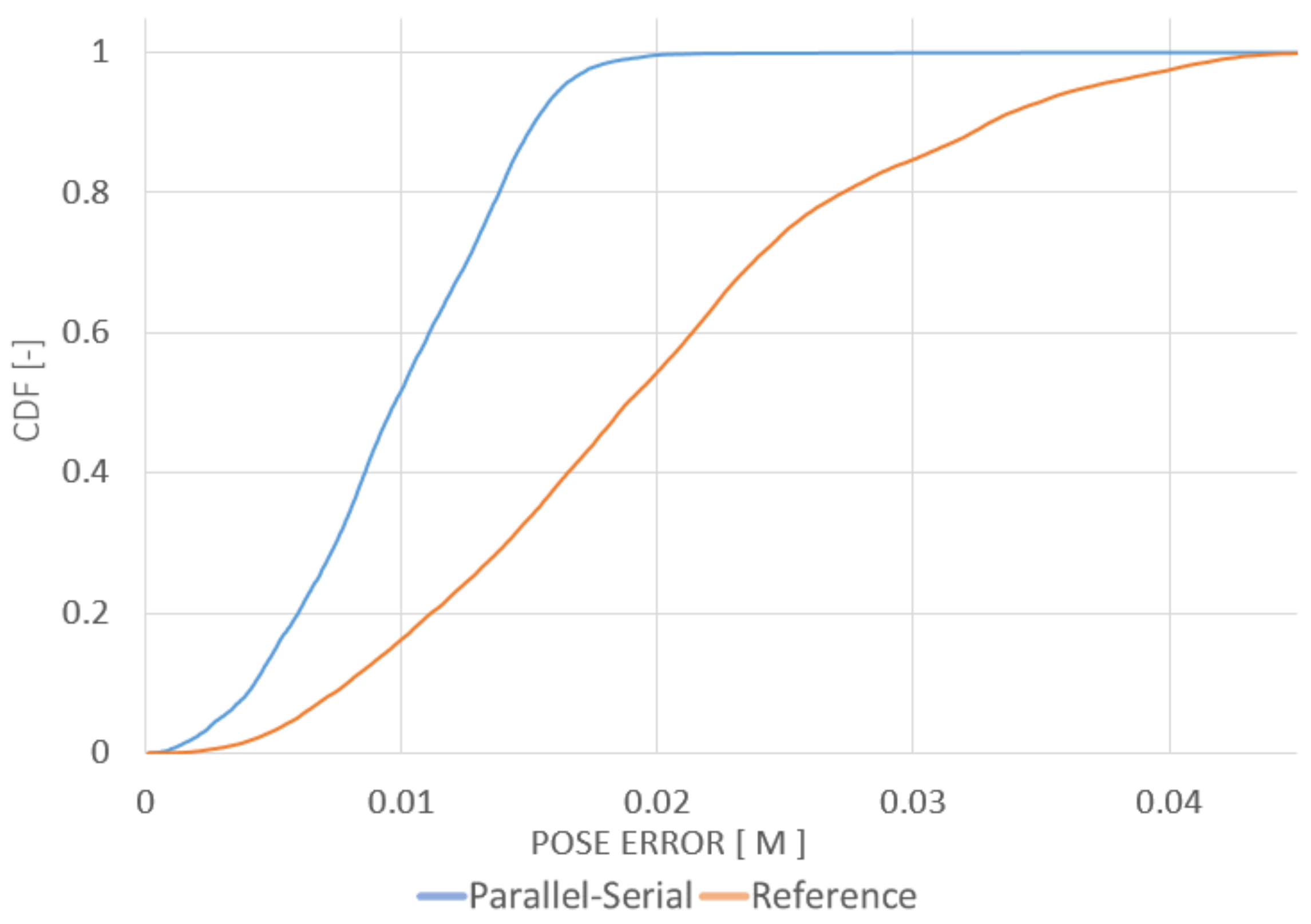

5. Results

- scanner angular speed: 600 rpm,

- scanner sampling frequency: 300,000 points per second,

- max. scan size per single registration: 10,000 points,

- linear resolution of registration: 1 mm,

- heading angular resolution of registration: 0.01 deg,

- innovation permissible value in EKF linear part: 0.25 m.
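The scanner parameters above imply a few derived quantities. The short calculation below is a sketch that assumes every registration consumes a full 10,000-point scan and that no points are dropped; the variable names are illustrative only.

```python
rpm = 600                   # scanner angular speed
points_per_second = 300_000 # scanner sampling frequency
max_scan_size = 10_000      # points per single registration

revolutions_per_second = rpm / 60                                # 10 rev/s
points_per_revolution = points_per_second / revolutions_per_second
registrations_per_second = points_per_second / max_scan_size
registrations_per_revolution = points_per_revolution / max_scan_size
time_budget_ms = 1000 / registrations_per_second                 # per registration
```

Under these assumptions one revolution yields 30,000 points, split into three registrations, and the localization has roughly 33 ms to process each registration if it is to keep pace with the scanner.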

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Lee, W.; Chung, W. Position estimation using multiple low-cost GPS receivers for outdoor mobile robots. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Goyang, Korea, 28–30 October 2015; pp. 460–461.

- Ackerman, E. DARPA Subterranean Challenge: Meet the First 9 Teams. IEEE Spectrum 2019. Available online: https://spectrum.ieee.org/automaton/robotics/robotics-hardware/darpa-subt-meet-the-first-nine-teams (accessed on 10 December 2020).

- Kok, M.; Hol, J.D.; Schön, T.B. Using Inertial Sensors for Position and Orientation Estimation. arXiv 2017, arXiv:1704.06053.

- Lee, N.; Ahn, S.; Han, D. AMID: Accurate Magnetic Indoor Localization Using Deep Learning. Sensors 2018, 18, 1598.

- Kohler, P.; Connette, C.; Verl, A. Vehicle tracking using ultrasonic sensors & joined particle weighting. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013.

- Valiente, D.; Payá, L.; Jiménez, L.; Sebastián, J.; Reinoso, Ó. Visual Information Fusion through Bayesian Inference for Adaptive Probability-Oriented Feature Matching. Sensors 2018, 18, 2041.

- Hay, S.; Harle, R. Bluetooth tracking without discoverability. In International Symposium on Location-and Context-Awareness; Springer: Berlin/Heidelberg, Germany, 2009; pp. 120–137.

- Uradzinski, M.; Guo, H.; Liu, X.; Yu, M. Advanced indoor positioning using zigbee wireless technology. Wirel. Pers. Commun. 2017, 97, 6509–6518.

- Salamah, A.H.; Tamazin, M.; Sharkas, M.A.; Khedr, M. An enhanced WiFi indoor localization system based on machine learning. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Alcala de Henares, Spain, 4–7 October 2016; pp. 1–8.

- Popleteev, A.; Osmani, V.; Mayora, O. Investigation of indoor localization with ambient FM radio stations. In Proceedings of the 2012 IEEE International Conference on Pervasive Computing and Communications, Lugano, Switzerland, 19–23 March 2012; pp. 171–179.

- González, J.; Blanco, J.L.; Galindo, C.; Ortiz-de Galisteo, A.; Fernández-Madrigal, J.A.; Moreno, F.A.; Martinez, J.L. Mobile robot localization based on ultra-wide-band ranging: A particle filter approach. Robot. Auton. Syst. 2009, 57, 496–507.

- Zafari, F.; Gkelias, A.; Leung, K.K. A Survey of Indoor Localization Systems and Technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- Dagefu, F.T.; Oh, J.; Sarabandi, K. A sub-wavelength RF source tracking system for GPS-denied environments. IEEE Trans. Antennas Propag. 2012, 61, 2252–2262.

- Munoz, F.I.I. Global Pose Estimation and Tracking for RGB-D Localization and 3D Mapping. Ph.D. Thesis, Université Côte d’Azur, Nice, France, 2018.

- Nam, T.; Shim, J.; Cho, Y. A 2.5D Map-Based Mobile Robot Localization via Cooperation of Aerial and Ground Robots. Sensors 2017, 17, 2730.

- Wang, L.; Zhang, Y.; Wang, J. Map-based localization method for autonomous vehicles using 3D-LIDAR. IFAC-PapersOnLine 2017, 50, 276–281.

- Kohlbrecher, S.; von Stryk, O.; Meyer, J.; Klingauf, U. A flexible and scalable SLAM system with full 3D motion estimation. In Proceedings of the 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan, 1–5 November 2011; pp. 155–160.

- Zhang, J.; Singh, S. Low-drift and Real-time Lidar Odometry and Mapping. Auton. Robot. 2017, 41, 401–416.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-Time Loop Closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

- Magnusson, M.; Vaskevicius, N.; Stoyanov, T.; Pathak, K.; Birk, A. Beyond points: Evaluating recent 3D scan-matching algorithms. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 3631–3637.

- Filipenko, M.; Afanasyev, I. Comparison of Various SLAM Systems for Mobile Robot in an Indoor Environment. In Proceedings of the 2018 International Conference on Intelligent Systems (IS), Funchal, Portugal, 25–27 September 2018.

- Chow, J.F.; Kocer, B.B.; Henawy, J.; Seet, G.; Li, Z.; Yau, W.Y.; Pratama, M. Toward Underground Localization: Lidar Inertial Odometry Enabled Aerial Robot Navigation. arXiv 2019, arXiv:1910.13085.

- Im, J.H.; Im, S.H.; Jee, G.I. Extended line map-based precise vehicle localization using 3D LIDAR. Sensors 2018, 18, 3179.

- Li, Y.; Ruichek, Y.; Cappelle, C. Extrinsic calibration between a stereoscopic system and a LIDAR with sensor noise models. In Proceedings of the 2012 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Hamburg, Germany, 13–15 September 2012; pp. 484–489.

- Yu, H.; Zhen, W.; Yang, W.; Scherer, S. Line-Based 2-D–3-D Registration and Camera Localization in Structured Environments. IEEE Trans. Instrum. Meas. 2020, 69, 8962–8972.

- Doumbia, M.; Cheng, X. Estimation and Localization Based on Sensor Fusion for Autonomous Robots in Indoor Environment. Computers 2020, 9, 84.

- Jiang, G.; Lei, Y.; Jin, S.; Tian, C.; Ma, X.; Ou, Y. A Simultaneous Localization and Mapping (SLAM) Framework for 2.5D Map Building Based on Low-Cost LiDAR and Vision Fusion. Appl. Sci. 2019, 9, 2105.

- Alexis, K.; Nikolakopoulos, G.; Tzes, A. Model predictive quadrotor control: Attitude, altitude and position experimental studies. IET Control Theory Appl. 2012, 6, 1812–1827.

- Xu, Z.; Guo, S.; Song, T.; Zeng, L. Robust Localization of the Mobile Robot Driven by Lidar Measurement and Matching for Ongoing Scene. Appl. Sci. 2020, 10, 6152.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35.

- Musoff, H.; Zarchan, P. Fundamentals of Kalman Filtering: A Practical Approach, 4th ed.; American Institute of Aeronautics and Astronautics, Inc.: Reston, VA, USA, 2015.

- Brida, P.; Machaj, J.; Benikovsky, J. A Modular Localization System as a Positioning Service for Road Transport. Sensors 2014, 14, 20274–20296.

- Rekleitis, I.; Bedwani, J.L.; Gingras, D.; Dupuis, E. Experimental Results for Over-the-Horizon Planetary exploration using a LIDAR sensor. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2009; pp. 65–77.

- Akyildiz, I.F.; Sun, Z.; Vuran, M.C. Signal propagation techniques for wireless underground communication networks. Phys. Commun. 2009, 2, 167–183.

- Konatowski, S.; Kaniewski, P.; Matuszewski, J. Comparison of Estimation Accuracy of EKF, UKF and PF Filters. Annu. Navig. 2016, 23, 69–87.

- Ko, N.Y.; Kim, T.G. Comparison of Kalman filter and particle filter used for localization of an underwater vehicle. In Proceedings of the 9th International Conference Ubiquitous Robots and Ambient Intelligence (URAI), Daejeon, Korea, 26–28 November 2012; pp. 350–352.

- LaViola, J.J. A comparison of unscented and extended Kalman filtering for estimating quaternion motion. In Proceedings of the 2003 American Control Conference, Denver, CO, USA, 4–6 June 2003.

- Luo, J.; Sun, L.; Jia, Y. A new FastSLAM algorithm based on the unscented particle filter. In Proceedings of the 2018 Chinese Control And Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 1259–1263.

- Grehl, S.; Sastuba, M.; Donner, M.; Ferber, M.; Schreiter, F.; Mischo, H.; Jung, B. Towards virtualization of underground mines using mobile robots–from 3D scans to virtual mines. In Proceedings of the 23rd International Symposium on Mine Planning & Equipment Selection, Johannesburg, South Africa, 9–11 November 2015.

- Huber, D.F.; Vandapel, N. Automatic 3D underground mine mapping. In Field and Service Robotics; Springer: Berlin/Heidelberg, Germany, 2003; pp. 497–506.

- Yin, H.; Berger, C. Mastering data complexity for autonomous driving with adaptive point clouds for urban environments. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017.

- Zhang, P. A Route Planning Algorithm for Ball Picking Robot with Maximum Efficiency. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; Volume 17, pp. 6.1–6.5.

- Kobbelt, L.P.; Botsch, M. An interactive approach to point cloud triangulation. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2000; Volume 19, pp. 479–487.

- Ruetz, F.; Hernandez, E.; Pfeiffer, M.; Oleynikova, H.; Cox, M.; Lowe, T.; Borges, P. OVPC Mesh: 3D Free-space Representation for Local Ground Vehicle Navigation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8648–8654.

- Fankhauser, P.; Hutter, M. A Universal Grid Map Library: Implementation and Use Case for Rough Terrain Navigation; Springer: Cham, Switzerland, 2016; Volume 625.

- Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 2013, 34, 189–206.

- Oleynikova, H.; Taylor, Z.; Fehr, M.; Siegwart, R.; Nieto, J. Voxblox: Incremental 3D Euclidean Signed Distance Fields for on-board MAV planning. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1366–1373.

- Snyder, J.; Sander, P.; Hoppe, H.; Gortler, S. Texture Mapping Progressive Meshes. In Proceedings of the ACM SIGGRAPH Conference on Computer Graphic, San Antonio, TX, USA, 21–26 July 2002; Volume 2001.

- Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

- Marton, Z.C.; Rusu, R.B.; Beetz, M. On Fast Surface Reconstruction Methods for Large and Noisy Datasets. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009.

- Park, Y.; Jeong, S.; Suh, I.H.; Choi, B.U. Map-building and localization by three-dimensional local features for ubiquitous service robot. In Proceedings of the International Conference on Ubiquitous Convergence Technology, Jeju Island, Korea, 5–6 December 2006; pp. 69–79.

- Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.J.; Davison, A.J. SLAM++: Simultaneous Localisation and Mapping at the Level of Objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1352–1359.

- Xuehe, Z.; Ge, L.; Gangfeng, L.; Jie, Z.; ZhenXiu, H. GPU based real-time SLAM of six-legged robot. Microprocess. Microsyst. 2016, 47, 104–111.

- Hawkins, W.; Daku, B.L.F.; Prugger, A.F. Vehicle localization in underground mines using a particle filter. In Proceedings of the Canadian Conference on Electrical and Computer Engineering, Saskatoon, SK, Canada, 1–4 May 2005.

- Hawkins, W.; Daku, B.L.F.; Prugger, A.F. Positioning in Underground Mines. In Proceedings of the IECON 2006—32nd Annual Conference on IEEE Industrial Electronics, Paris, France, 7–10 November 2006; pp. 3139–3143.

- Luo, R.; Guo, Y. Real-time stereo tracking of multiple moving heads. In Proceedings of the IEEE ICCV Workshop Recognition, Analysis and Tracking of Faces and Gestures in Real-Time Systems, Vancouver, BC, Canada, 13 July 2001; pp. 55–60.

- Kang, D.; Cha, Y.J. Autonomous UAVs for Structural Health Monitoring Using Deep Learning and an Ultrasonic Beacon System with Geo-Tagging. Comput. Aided Civ. Infrastruct. Eng. 2018.

- Zeng, F.; Jacobson, A.; Smith, D.; Boswell, N.; Peynot, T.; Milford, M. LookUP: Vision-Only Real-Time Precise Underground Localisation for Autonomous Mining Vehicles. arXiv 2019, arXiv:1903.08313.

- Errington, A.F.C.; Daku, B.L.F.; Prugger, A.F. Vehicle Positioning in Underground Mines. In Proceedings of the Canadian Conference on Electrical and Computer Engineering, Vancouver, BC, Canada, 22–26 April 2007; pp. 586–589.

- Asvadi, A.; Girão, P.; Peixoto, P.; Nunes, U. 3D object tracking using RGB and LIDAR data. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 1255–1260.

- Pomerleau, F.; Colas, F.; Siegwart, R.; Magnenat, S. Comparing ICP variants on real-world data sets. Auton. Robot. 2013.

- Pomerleau, F.; Colas, F.; Siegwart, R. A Review of Point Cloud Registration Algorithms for Mobile Robotics. Found. Trends Robot. 2015, 4, 1–104.

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

- Xu, Y.; Shmaliy, Y.S.; Li, Y.; Chen, X.; Guo, H. Indoor INS/LiDAR-Based Robot Localization with Improved Robustness Using Cascaded FIR Filter. IEEE Access 2019, 7, 34189–34197.

- Li, M.; Zhu, H.; You, S.; Wang, L.; Tang, C. Efficient Laser-Based 3D SLAM for Coal Mine Rescue Robots. IEEE Access 2019, 7, 14124–14138.

- Wolcott, R.W.; Eustice, R.M. Robust LIDAR localization using multiresolution Gaussian mixture maps for autonomous driving. Int. J. Robot. Res. 2017, 36, 292–319.

- Niewola, A.; Podsedkowski, L.; Niedzwiedzki, J. Point-to-Surfel-Distance- (PSD-) Based 6D Localization Algorithm for Rough Terrain Exploration Using Laser Scanner in GPS-Denied Scenarios. In Proceedings of the 2019 12th International Workshop on Robot Motion and Control (RoMoCo), Poznan, Poland, 8–10 July 2019; pp. 112–117.

- Jones, M. 3D Distance from a Point to a Triangle. In Technical Report CSR-5-95; Department of Computer Science, University of Wales Swansea: Swansea, UK, 1995.

- Weingarten, J.; Siegwart, R. EKF-based 3D SLAM for structured environment reconstruction. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 3834–3839.

- Jorge Othon Esparza-Jimenez, M.D.; Gordillo, J.L. Visual EKF-SLAM from Heterogeneous Landmarks. Sensors 2016, 16, 489.

- Zhang, T.; Wu, K.; Song, J.; Huang, S.; Dissanayake, G. Convergence and Consistency Analysis for a 3-D Invariant-EKF SLAM. IEEE Robot. Autom. Lett. 2017, 2, 733–740.

- Huang, M.; Wei, S.; Huang, B.; Chang, Y. Accelerating the Kalman Filter on a GPU. In Proceedings of the 2011 IEEE 17th International Conference on Parallel and Distributed Systems, Tainan, Taiwan, 7–9 December 2011; pp. 1016–1020.

| Dataset | Point Cloud Size | Triangle Mesh Size | GMM Size | Total Distance Travelled |
|---|---|---|---|---|
| Mine gallery | 360,150 kpoints (4121.5 MB) | 997,884 triangles, 500,466 points (10.3 MB) | 500,466 cells (3.8 MB) | 121.916 m |
| Cave | 144,060 kpoints (1648.6 MB) | 1,727,729 triangles, 865,824 points (19.2 MB) | 325,254 cells (2.5 MB) | 45.294 m |

| Dataset | Mine Gallery | | | | Cave | | | |
|---|---|---|---|---|---|---|---|---|
| Method | P-S | P | GMM | PSD | P-S | P | GMM | PSD |
| RMSE [cm] | 1.07 | 2.71 | 3.06 | 3.94 | 2.96 | 4.99 | 4.32 | 6.32 |
| Max. dev. [cm] | 3.89 | 4.37 | 8.09 | 9.75 | 5.94 | 12.29 | 7.84 | 12.66 |

| Machine | Parallel-Serial EKF (GPU) | Serial EKF (CPU) | GMM (CPU) |
|---|---|---|---|
| Jetson TX2 | 250,000 points/s | 80,000 points/s | 6000 points/s |
| Jetson Xavier | 450,000 points/s | 80,000 points/s | 6000 points/s |
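The throughput figures in this table can be related to the scanner sampling frequency of 300,000 points per second listed in the Results section. The snippet below is a small worked calculation, assuming that the two quantities are directly comparable; the dictionary layout and key names are illustrative.

```python
scanner_rate = 300_000  # points per second, from the scanner settings

# Throughput in points per second, copied from the table.
throughput = {
    "Jetson TX2": {"gpu": 250_000, "cpu": 80_000},
    "Jetson Xavier": {"gpu": 450_000, "cpu": 80_000},
}

summary = {
    name: {
        "speedup": t["gpu"] / t["cpu"],            # parallel-serial vs. serial EKF
        "keeps_up": t["gpu"] >= scanner_rate,      # can it consume the full stream?
        "usable_fraction": min(1.0, t["gpu"] / scanner_rate),
    }
    for name, t in throughput.items()
}
```

Under that assumption the GPU implementation is about 3.1 times faster than the serial CPU version on the Jetson TX2 and about 5.6 times faster on the Jetson Xavier, and only the Xavier exceeds the full scanner rate; the TX2 figure corresponds to roughly 83% of the point stream.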

| No. of measurement points/s (×10³) | 300 | 150 | 75 | 37.5 | 18.75 | 9.375 |
|---|---|---|---|---|---|---|
| Max. dev. [cm] | 5.94 | 5.85 | 6.92 | 7.81 | 9.08 | 79.83 |
| Avg. error [cm] | 2.75 | 2.76 | 2.77 | 2.83 | 3.66 | 13.75 |
| RMSE [cm] | 2.96 | 2.97 | 3.04 | 3.14 | 4.17 | 22.31 |

| Machine | GPU Efficiency | CPU Efficiency |
|---|---|---|
| Jetson TX2 | 16.7 points/mJ | 5.3 points/mJ |
| Jetson Xavier | 15.4 points/mJ | 4.1 points/mJ |
| PC | 11.3 points/mJ | 3.4 points/mJ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Niedzwiedzki, J.; Niewola, A.; Lipinski, P.; Swaczyna, P.; Bobinski, A.; Poryzala, P.; Podsedkowski, L. Real-Time Parallel-Serial LiDAR-Based Localization Algorithm with Centimeter Accuracy for GPS-Denied Environments. Sensors 2020, 20, 7123. https://doi.org/10.3390/s20247123
