Spatial Topological Relation Analysis for Cluttered Scenes

Abstract

1. Introduction

- We simplified the widely used model of spatial topological relations and proposed a dedicated formal definition of these relations, which improved the accuracy of spatial topological relation analysis in cluttered scenes.

- We proposed a method that determines the spatial topological relation between objects from an approximate representation of each object's boundary and the spatial relations of points on the cluttered objects. A deviation factor is employed to improve the robustness of the algorithm.

2. Related Works

3. Methods

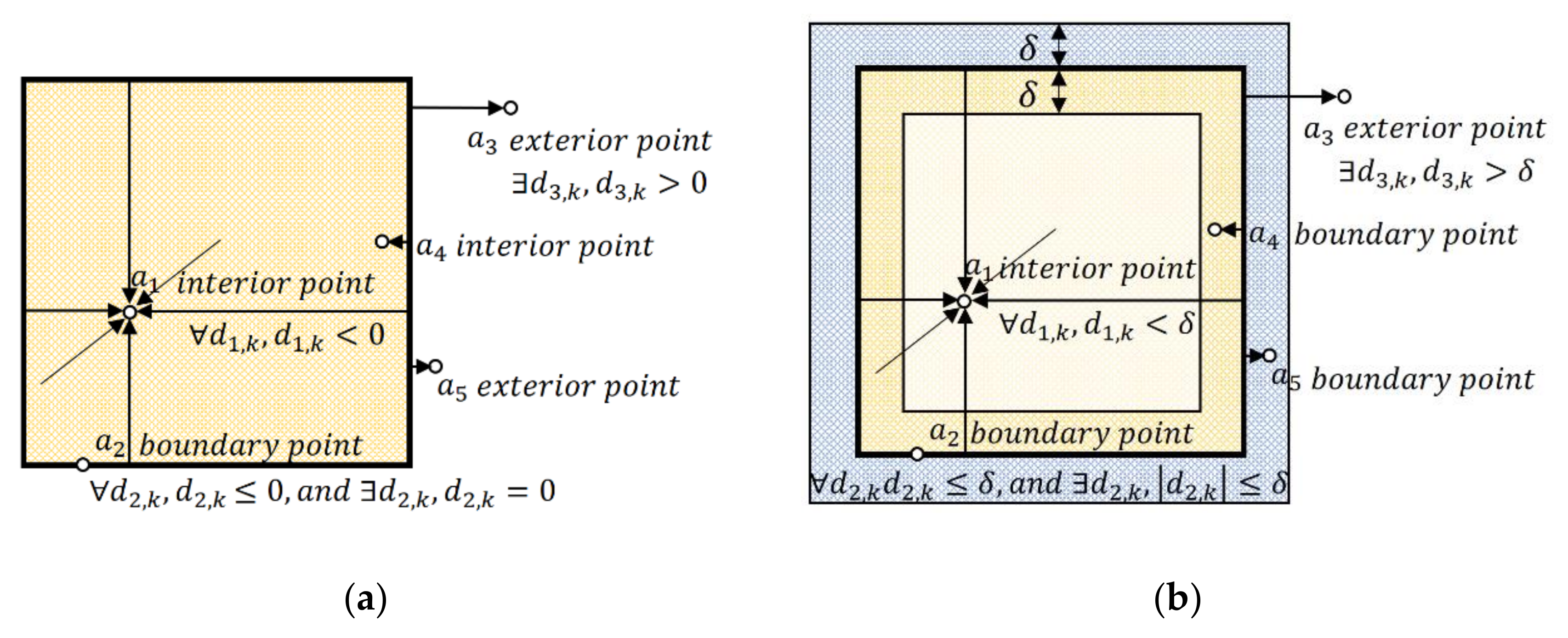

3.1. Definitions of Spatial Topological Relations

- (1) the parts of A located in the interior of B;

- (2) the parts of A located on the boundary of B;

- (3) the parts of A located in the exterior of B;

- (4) the parts of B located in the interior of A;

- (5) the parts of B located on the boundary of A;

- (6) the parts of B located in the exterior of A.
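The six sets above split each object's points by where they fall relative to the other object: its interior, its boundary, or its exterior. The following is a minimal sketch of one way to compute such a split when each object's boundary is approximated by a convex hull. It uses a generic half-space test and is not the paper's formula (4), which is not recoverable here. Several elements are assumptions:

- The tolerance `eps` is a hypothetical stand-in for boundary noise and is not the paper's deviation factor.
- The helper names (`relative_position`, `split_by_position`, `box_planes`) are illustrative.
- A box is used as the simplest possible convex hull.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
# A facet plane (nx, ny, nz, c) with a unit outward normal:
# points inside the hull satisfy nx*x + ny*y + nz*z + c <= 0.
Plane = Tuple[float, float, float, float]

INTERIOR, BOUNDARY, EXTERIOR = "interior", "boundary", "exterior"


def relative_position(p: Point, planes: Sequence[Plane], eps: float = 1e-3) -> str:
    """Label p as interior, boundary or exterior with respect to a convex hull.

    The largest signed distance over all facet planes decides the label; `eps`
    is a hypothetical tolerance band that absorbs noise near the boundary.
    """
    d = max(nx * p[0] + ny * p[1] + nz * p[2] + c for nx, ny, nz, c in planes)
    if d < -eps:
        return INTERIOR
    if d <= eps:
        return BOUNDARY
    return EXTERIOR


def split_by_position(points: Sequence[Point], planes: Sequence[Plane],
                      eps: float = 1e-3) -> Dict[str, List[Point]]:
    """Split one object's points into the parts lying in the interior,
    on the boundary, and in the exterior of the other object's hull."""
    parts: Dict[str, List[Point]] = {INTERIOR: [], BOUNDARY: [], EXTERIOR: []}
    for p in points:
        parts[relative_position(p, planes, eps)].append(p)
    return parts


def box_planes(lo: Point, hi: Point) -> List[Plane]:
    """Outward unit-normal planes of an axis-aligned box (a simple stand-in hull)."""
    return [
        (1.0, 0.0, 0.0, -hi[0]), (-1.0, 0.0, 0.0, lo[0]),
        (0.0, 1.0, 0.0, -hi[1]), (0.0, -1.0, 0.0, lo[1]),
        (0.0, 0.0, 1.0, -hi[2]), (0.0, 0.0, -1.0, lo[2]),
    ]
```

Calling `split_by_position(points_a, planes_b)` yields sets (1) to (3), and `split_by_position(points_b, planes_a)` yields sets (4) to (6). For real point clouds, the rows of `scipy.spatial.ConvexHull(points).equations` use the same `[normal, offset]` layout, with unit outward normals. Those rows could therefore be passed as `planes` in place of `box_planes`.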

3.2. Classification Criteria of Spatial Topological Relations

| Algorithm 1. Spatial Topological Relation Analysis Algorithm. |
|---|
| Input: 3D point cloud of each object |
| Output: The spatial topological relations between the objects |
| Initialize: Create convex hull and AABB of each object from point cloud |
| begin |
| for each object A do |
| for each object B do |
| for each point p in object A do |
| if is not in |
| then , and continue |
| Compute the relative position of p by formula (4) |
| Check , and for loop termination |
| end |
| end |
| end |
| for each object A do |
| for each object B do |
| if and then |
| else if |
| if then |
| else |
| else if |
| if then |
| else |
| else if , , and |
| then |
| else |
| end |
| end |
| end |
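The first loop of Algorithm 1 pairs a cheap axis-aligned bounding box (AABB) check with the more expensive per-point relative-position test. The following is a minimal sketch of that loop under several assumptions:

- The hull test is supplied by the caller as a callable, for example a half-space test against B's convex hull.
- The loop-termination check, whose condition is not recoverable from the pseudocode, is guessed. Here scanning stops once A has points in B's interior, on its boundary, and in its exterior.
- The names `make_aabb`, `in_aabb`, `count_positions` and `stop_early` are hypothetical.

```python
from typing import Callable, Dict, Sequence, Tuple

Point = Tuple[float, float, float]
AABB = Tuple[Point, Point]  # (min corner, max corner)


def make_aabb(points: Sequence[Point]) -> AABB:
    """Axis-aligned bounding box of a point cloud (the Initialize step)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def in_aabb(p: Point, box: AABB, eps: float = 1e-3) -> bool:
    """True if p lies inside the box enlarged by eps on every side."""
    lo, hi = box
    return all(lo[i] - eps <= p[i] <= hi[i] + eps for i in range(3))


def count_positions(points_a: Sequence[Point], box_b: AABB,
                    hull_test: Callable[[Point], str],
                    eps: float = 1e-3, stop_early: bool = True) -> Dict[str, int]:
    """Count points of A in the interior, on the boundary, and outside B.

    hull_test(p) is the (comparatively expensive) convex-hull classification
    of p against B and must return "interior", "boundary" or "exterior".
    """
    counts = {"interior": 0, "boundary": 0, "exterior": 0}
    for p in points_a:
        # Cheap rejection: B's hull lies inside its AABB, so a point outside
        # the enlarged box is outside the hull and the hull test is skipped.
        label = hull_test(p) if in_aabb(p, box_b, eps) else "exterior"
        counts[label] += 1
        # Hypothetical termination rule: once all three parts are non-empty,
        # further points cannot change which parts are empty. The counts are
        # then lower bounds rather than totals.
        if stop_early and all(n > 0 for n in counts.values()):
            break
    return counts
```

The design choice here is to run the hull test only for points that survive the AABB filter. In cluttered scenes, many point pairs belong to objects that are far apart, so most points never reach the hull test.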

4. Experimental Results

4.1. Pretreatment

4.1.1. IIIT RGBD Dataset

4.1.2. YCB Benchmarks

4.2. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| cross | * | * | * | * | ||

| within | * | * | * | |||

| partial within | * | * | * | |||

| contain | * | * | * | |||

| partial contain | * | * | * | |||

| touch | * | * | ||||

| disjoint | * | |||||

| * | ||||||

| * | |||||

| * | |||||

| Scene | Our Method Accuracy (%) | Our Method Time | Feature Extraction Accuracy (%) | Feature Extraction Time | Learning Method Accuracy (%) | Learning Method Time | AABB Method Accuracy (%) | AABB Method Time |
|---|---|---|---|---|---|---|---|---|
| 1 | 100 | 10.2 | 66.7 | 41.8 | 83.3 | 25.7 | 50.0 | 1.4 |
| 2 | 100 | 15.8 | 83.3 | 197.9 | 83.3 | 44.3 | 66.7 | 3.0 |
| 3 | 100 | 27.5 | 66.7 | 116.0 | 100 | 33.8 | 83.3 | 2.1 |
| 4 | 83.3 | 3.1 | 83.3 | 12.8 | 83.3 | 7.9 | 66.7 | 0.9 |
| 5 | 83.3 | 3.5 | 66.7 | 30.1 | 66.7 | 13.9 | 66.7 | 1.4 |
| 6 | 100 | 55.3 | 86.7 | 775.6 | 60.0 | 77.1 | 60.0 | 4.9 |
| 7 | 100 | 16.4 | 60.0 | 304.0 | 60.0 | 28.4 | 70.0 | 4.0 |

| Scene | Our Method Accuracy (%) | Our Method Time | Feature Extraction Accuracy (%) | Feature Extraction Time | Learning Method Accuracy (%) | Learning Method Time | AABB Method Accuracy (%) | AABB Method Time |
|---|---|---|---|---|---|---|---|---|
| 8 | 100.0 | 14.6 | 90.0 | 365.3 | 80.0 | 12.0 | 80.0 | 1.6 |
| 9 | 100.0 | 13.0 | 100.0 | 176.0 | 90.0 | 12.8 | 90.0 | 1.2 |
| 10 | 93.3 | 19.4 | 93.3 | 341.8 | 86.7 | 22.3 | 80.0 | 2.0 |
| 11 | 100.0 | 20.1 | 90.0 | 523.2 | 70.0 | 24.1 | 50.0 | 1.2 |
| 12 | 90.0 | 14.2 | 70.0 | 305.2 | 80.0 | 16.5 | 80.0 | 1.0 |
| 13 | 100.0 | 42.6 | 70.0 | 1033.9 | 70.0 | 78.1 | 70.0 | 3.2 |
| 14 | 80.0 | 7.8 | 80.0 | 257.0 | 70.0 | 9.8 | 80.0 | 0.7 |
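The two results tables report accuracy and time per scene for each method. Averaging them over a group of scenes gives a compact comparison. The following sketch copies the values from the tables and computes per-method means. The helper name `summarize` is illustrative, and the time values are used in whatever unit the tables report.

```python
from statistics import mean
from typing import Dict, Iterable, Tuple

METHODS = ("Our Method", "Feature Extraction", "Learning Method", "AABB Method")

# Per scene: (accuracy %, time) for each method, in METHODS order,
# copied from the two results tables.
RESULTS = {
    1: (100, 10.2, 66.7, 41.8, 83.3, 25.7, 50.0, 1.4),
    2: (100, 15.8, 83.3, 197.9, 83.3, 44.3, 66.7, 3.0),
    3: (100, 27.5, 66.7, 116.0, 100, 33.8, 83.3, 2.1),
    4: (83.3, 3.1, 83.3, 12.8, 83.3, 7.9, 66.7, 0.9),
    5: (83.3, 3.5, 66.7, 30.1, 66.7, 13.9, 66.7, 1.4),
    6: (100, 55.3, 86.7, 775.6, 60.0, 77.1, 60.0, 4.9),
    7: (100, 16.4, 60.0, 304.0, 60.0, 28.4, 70.0, 4.0),
    8: (100.0, 14.6, 90.0, 365.3, 80.0, 12.0, 80.0, 1.6),
    9: (100.0, 13.0, 100.0, 176.0, 90.0, 12.8, 90.0, 1.2),
    10: (93.3, 19.4, 93.3, 341.8, 86.7, 22.3, 80.0, 2.0),
    11: (100.0, 20.1, 90.0, 523.2, 70.0, 24.1, 50.0, 1.2),
    12: (90.0, 14.2, 70.0, 305.2, 80.0, 16.5, 80.0, 1.0),
    13: (100.0, 42.6, 70.0, 1033.9, 70.0, 78.1, 70.0, 3.2),
    14: (80.0, 7.8, 80.0, 257.0, 70.0, 9.8, 80.0, 0.7),
}


def summarize(scenes: Iterable[int]) -> Dict[str, Tuple[float, float]]:
    """Mean accuracy and mean time per method over the given scenes,
    rounded to one decimal place like the tables."""
    rows = [RESULTS[s] for s in scenes]
    return {
        name: (round(mean(r[2 * k] for r in rows), 1),
               round(mean(r[2 * k + 1] for r in rows), 1))
        for k, name in enumerate(METHODS)
    }
```

For example, `summarize(range(1, 8))` averages scenes 1 to 7. It gives a mean accuracy of 95.2% for the proposed method, compared with 66.2% for the AABB method.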


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fu, Y.; Li, M.; Zhang, X.; Zhang, S.; Wei, C.; Guo, W.; Cai, H.; Sun, L.; Wang, P.; Zha, F. Spatial Topological Relation Analysis for Cluttered Scenes. Sensors 2020, 20, 7181. https://doi.org/10.3390/s20247181
