1. Introduction

Sunburn is a major physiological disorder that adversely affects the productivity of fruits and vegetables, including apples, grapes, peppers, pumpkins and watermelons [1,2,3,4,5]. Fruit sunburn in apples has been reported in all major apple growing regions around the globe and causes 10–40% yield losses. Sunburn is caused by high-intensity sunlight, ultraviolet (UV) radiation and photosynthetically active radiation (PAR) that elevate the peel temperature of maturing fruit during field production. Sunburn necrosis, sunburn browning and photo-oxidative sunburn are the three major sunburn types [4,5]. Sunburn necrosis leads to thermal death of epidermal and sub-epidermal cells (peel) and takes place when fruit surface temperature (FST), a reliable indicator of sunburn, reaches 52 ± 1 °C. Sunburn browning occurs at a slightly lower FST, i.e., between 46 and 49 °C, whereas photo-oxidative sunburn occurs at a much lower FST because of PAR and the light shock caused by sudden sun exposure of previously shaded apples after management practices such as hand thinning, selective picking or pruning operations [2,3,4,5,6,7,8,9]. Apart from sunburn, FST also influences several physiological and biological processes in fruit, such as flavor and nutritional value, sugar and acid content, ripening, fruit quality, size, appearance, and susceptibility to insect/pest infestation.

Fruit sunburn forecasting has conventionally relied on air temperature data collected by open field weather stations. Such an approach is often highly unreliable and can result in untimely application of water for evaporative cooling of canopies and fruits. Excess evaporative cooling can be water and energy inefficient and may create food safety issues. A weather-data-based apple surface-temperature dynamics model [10] that uses parameters such as wind speed, humidity, solar intensity, and dew point temperature, apart from air temperature, could be useful for FST estimates. However, it requires on-site sensors to get reliable micro-climate weather data. Thermocouples, thermometers and thermal probes are also used for FST estimation. However, installation of such probes tends to be laborious, less accurate, data limited and destructive, which limits wide-scale adoption.

In recent years, portable miniaturized thermal imaging sensors have been explored in various crop-stress monitoring applications, including rapid apple FST monitoring [10]. During field use, thermal imagery alone can be inadequate because it is difficult to segment the regions of interest (fruits) from the background branches, stems and leaves [11]. However, when combined with RGB imagery data, thermal imagery can be used for apple fruit surface temperature monitoring [11]. Our team has developed and successfully tested such smart apple sunburn sensing systems [12]. Nevertheless, these orchard sensing systems need to be rugged, economically viable, and connected to a reliable cellular network that may not be available at many orchards. These challenges with existing systems raise the need for a user-friendly, low-cost, and portable sunburn prediction tool that will aid growers in real-time, accurate apple sunburn monitoring and pertinent decision-making.

Our team has envisioned such a mobile FST monitoring tool that leverages advances in low-cost commercial thermal-RGB imaging sensors and smartphone technologies that allow ease of integration. Smartphones are becoming commonly available to wider demographics and have opened a new horizon for smartphone-based applications that help in solving daily-life problems. Recently, researchers have taken advantage of the increased computing potential, accessibility, ease of operation and low cost of smartphones to develop pertinent use cases in medical diagnosis, environmental monitoring and food safety [13]. In agricultural applications, Wang et al. [14] utilized the smartphone built-in camera and developed an application for in-field fruit size estimation. Das et al. [15] developed a prototype smartphone-based spectrometer for rapid and non-destructive fruit ripeness testing.

Overall, smartphone-based applications integrated with compatible imaging and optical sensors have a huge potential for effective orchard crop stress monitoring. Therefore, this study focused on the development of a smartphone-enabled, thermal-RGB, and microclimate-sensing assisted, real-time apple sunburn prediction tool. The specific study objectives were to:

- (1) Develop a smartphone-enabled, miniaturized and portable apple fruit surface temperature monitoring tool with a compatible application (‘AppSense 1.0’) for real-time fruit surface temperature estimation.

- (2) Evaluate the performance of the developed tool in orchard conditions towards predicting sunburn susceptibility of four fresh market apple cultivars cv. ‘Gala’, ‘Fuji’, ‘Red Delicious’ and ‘Honeycrisp’.

2. Materials and Methods

2.1. Mobile Fruit Surface Temperature Monitoring Tool

The mobile FST monitoring tool (Figure 1) consists of a thermal-RGB sensor (FLIR One Pro, FLIR Systems, Inc., Wilsonville, OR, USA) integrated with an Android operating system (OS) based smartphone. A key design consideration was that the tool should work on any smartphone that supports USB On-The-Go (USB OTG) and runs ‘Android 8.0’ or a higher OS version. The tool uses an Android OS compatible application (version: 1.0; hereafter termed ‘AppSense 1.0’) that allows data acquisition, processing, management and sharing with end-users. The mobile FST tool enables real-time acquisition of in-field thermal-RGB images and geolocalized weather data, which are analyzed on board the smartphone to estimate apple FST. The imagery and weather-data-based FST estimates can be stored on board the smartphone and shared with end-users to support sunburn management decisions. The tool was designed so that a typical fruit grower or farm crew can install and use it with ease.

2.2. Smartphone Application Development

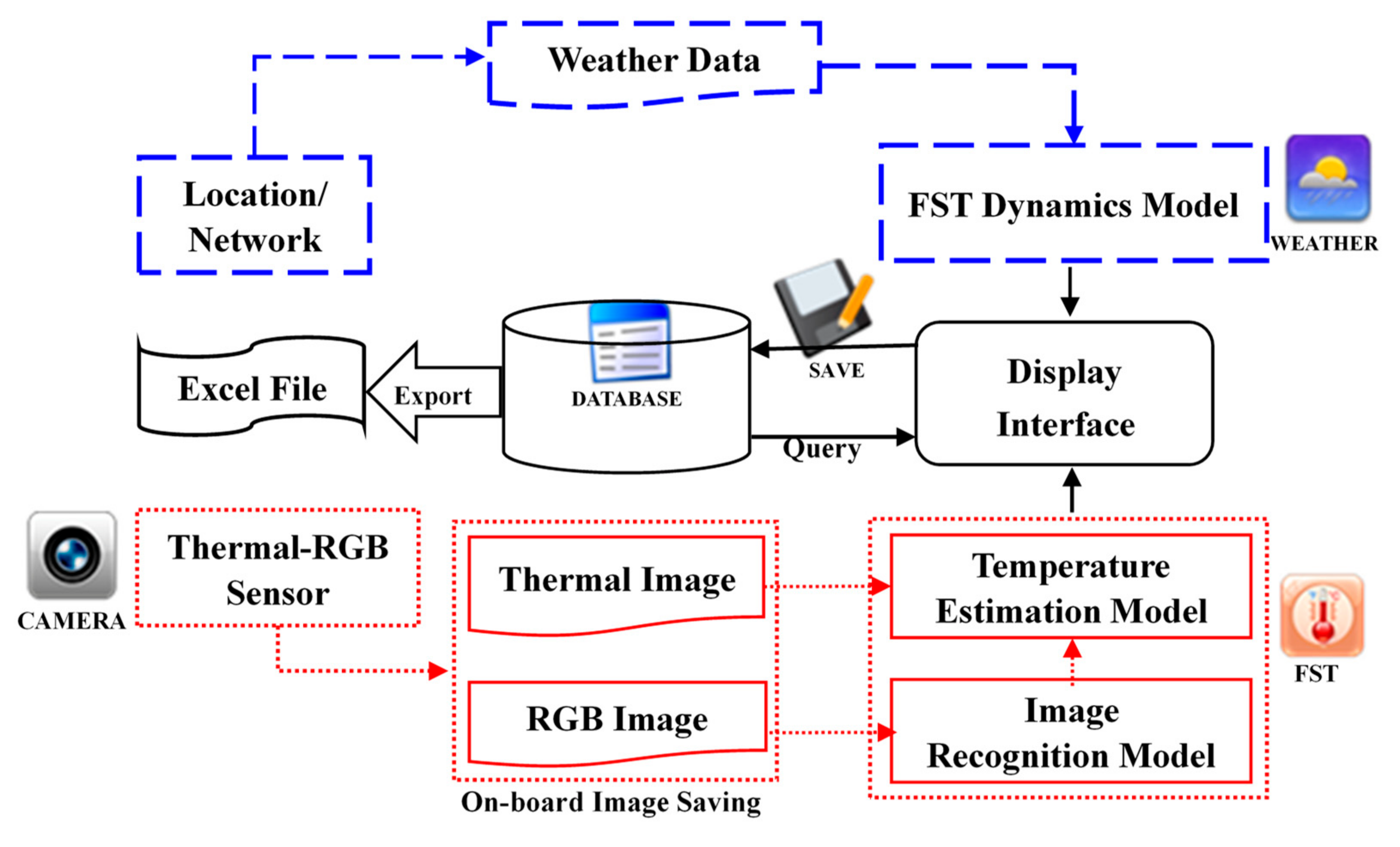

The ‘AppSense 1.0’ application was developed in the ‘Android Studio’ integrated development environment (IDE) (open source, version 3.4.2). The application was designed to collect weather data from the nearest open field weather station, acquire the thermal-RGB image, estimate the weather and imagery based FSTs, save and display the processed images and FST data, and export them in Excel format (‘*.xlsx’) (Figure 2). An open source computer vision (OpenCV, version 3.4.0) Java library was utilized for on-board image processing and FST estimation. The raw and processed images captured by ‘AppSense 1.0’ were stored in the internal memory of the smartphone, where they remain available for further analysis. The data analyzed by the tool are stored in a local ‘SQLite’ database and can be retrieved and managed at a later stage.

2.3. Weather Data Based FST Estimation

An apple surface temperature dynamics model by Li et al. [10] was utilized for weather data based FST estimation. The model was developed using an energy balance approach. The total input energy in the fruit system, which includes net short-wave radiation and net long-wave radiation, was balanced against the total output energy, which includes emitted fruit thermal radiation, energy loss by evapotranspiration, sensible heat loss or gain, and total heat transfer within the fruit. The derived model utilizes four major weather parameters (air temperature, dew point temperature, solar radiation and wind speed) and the surrounding ground temperature to predict the mean apple FST. The open field weather data were acquired in real time from the agricultural weather network of Washington State University (WSU AgWeatherNet, available online: http://weather.wsu.edu/index.php). This network includes 186 automated weather stations located mostly in the irrigated regions of eastern Washington State [16]. Typically, each station collects 10 different weather parameters at a frequency of 0.2 Hz and provides mean values every 15 min.

The process flow of weather based FST estimation is depicted in Figure 3. First, the ‘AppSense 1.0’ application acquires the longitude and latitude of all weather stations in the network and uses the smartphone global positioning system (GPS) coordinates (longitude and latitude) to locate the nearest weather station. The FST monitoring tool then downloads the weather parameters from that station and applies the apple surface temperature dynamics model to estimate the FST.
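The nearest-station lookup described above can be sketched as follows. This is a minimal illustration, not the ‘AppSense 1.0’ implementation: the use of the haversine great-circle distance is an assumption (the distance metric is not stated), and the station names and coordinates are hypothetical.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


def nearest_station(phone_lat, phone_lon, stations):
    """Return the station record closest to the smartphone GPS position."""
    return min(
        stations,
        key=lambda s: haversine_km(phone_lat, phone_lon, s["lat"], s["lon"]),
    )


# Hypothetical station list; names and coordinates are illustrative only.
STATIONS = [
    {"name": "station_a", "lat": 46.25, "lon": -119.74},
    {"name": "station_b", "lat": 46.60, "lon": -120.51},
    {"name": "station_c", "lat": 47.42, "lon": -120.31},
]

closest = nearest_station(46.30, -119.70, STATIONS)
print(closest["name"])
```

A linear scan is adequate here because the network contains fewer than two hundred stations.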

2.4. Thermal-RGB Imagery Based FST Estimation

A customized image-processing algorithm was developed for estimating thermal-RGB imagery data based FST (Figure 4). The smartphone-integrated thermal-RGB imaging sensor (measurement range: −20 to 400 °C, accuracy: ±3 °C or ±5%, focal length: 15 cm–infinity, thermal sensitivity: 70 mK) was capable of acquiring radiometric thermal (resolution: 160 × 120 pixels) and Red-Green-Blue (RGB) (resolution: 1440 × 1080 pixels) images (Figure 4). Radiometric thermal data consist of pixel-wise temperature information.

Calibration of the thermal-RGB sensor was conducted using water [17], which has an emissivity of 0.95–0.97, almost equal to that of plant leaves and apple surfaces. The water temperature was varied within a range of 10–50 °C to cover typical FSTs. The actual temperature was measured using a liquid-in-glass thermometer and served as the reference for the temperatures estimated by the thermal-RGB sensor. Five random circular spots were selected from the images and analyzed for their mean temperatures in the application provided by the sensor manufacturer (FLIR One, FLIR Systems Inc., Wilsonville, OR, USA). The developed calibration equations were then used to correct the temperature data.
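A calibration of this kind can be sketched as an ordinary least-squares fit of the reference thermometer readings against the sensor readings. The linear form is an assumption, since the form of the calibration equations is not given here, and the sample readings below are made-up values used only to exercise the code.

```python
def fit_linear_calibration(sensor_temps, reference_temps):
    """Least-squares fit of: reference = slope * sensor + intercept."""
    n = len(sensor_temps)
    if n < 2 or n != len(reference_temps):
        raise ValueError("need two equally long lists with at least two readings")
    mean_x = sum(sensor_temps) / n
    mean_y = sum(reference_temps) / n
    sxx = sum((x - mean_x) ** 2 for x in sensor_temps)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sensor_temps, reference_temps))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def correct_temperature(sensor_temp, slope, intercept):
    """Apply the fitted calibration to a raw sensor temperature."""
    return slope * sensor_temp + intercept


# Illustrative (not measured) readings in degrees Celsius.
sensor = [10.0, 20.0, 30.0, 40.0, 50.0]
thermometer = [11.0, 20.0, 29.0, 38.0, 47.0]

slope, intercept = fit_linear_calibration(sensor, thermometer)
print(correct_temperature(35.0, slope, intercept))
```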

In this study, the pertinent RGB imagery data was used to identify and segment the apple from the rest of the canopy. The RGB image was first resized to 160 × 120 pixels so that it could be overlaid on the thermal image for accurate pixel matching. The fruit color feature was used to separate the fruit from the stems, foliage, and background. For simplicity, fruit shape feature extraction was not employed, as the user is expected to point the sensor so that several fruits are prominent in the image.
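Because 1440 × 1080 and 160 × 120 differ by an integer factor of 9 in both dimensions, the resizing step can be illustrated with simple block averaging. The interpolation method actually used is not stated, so block averaging is an assumption made for this sketch; the image is represented as nested lists of (R, G, B) tuples to keep the example self-contained.

```python
FACTOR = 1440 // 160  # 9; the same ratio holds for the height, 1080 // 120


def downsample(image, factor):
    """Block-average an image (rows of (r, g, b) tuples) by an integer factor."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image size must be a multiple of the factor")
    out = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            # Collect every pixel of the factor x factor block.
            block = [
                image[y][x]
                for y in range(top, top + factor)
                for x in range(left, left + factor)
            ]
            # Average each color channel over the block.
            row.append(tuple(sum(p[c] for p in block) / len(block) for c in range(3)))
        out.append(row)
    return out


# Tiny 4 x 2 example reduced by a factor of 2 to a 2 x 1 image.
tiny = [
    [(0, 0, 0), (2, 2, 2), (10, 0, 0), (10, 0, 0)],
    [(2, 2, 2), (4, 4, 4), (10, 0, 0), (10, 0, 0)],
]
print(downsample(tiny, 2))
```

Applied to a full RGB frame, `downsample(rgb_image, FACTOR)` would yield one RGB value per thermal pixel.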

2.4.1. Color Space Conversion and Fruit Segmentation

The YUV color space is one of the closest to the color perception of human eyes, and its use may be more effective than the RGB color space [18]. In YUV, Y represents the brightness of the color space and is highly sensitive to illumination. Hence, only the U (red projection) and V (blue projection) color components were used for segmentation. Moreover, such color conversion keeps the algorithm simple in terms of computational resource use [19]. The RGB to YUV color transformation is given in Equation (1).

The resulting YUV color space was then used to segment the fruit using the logic in Equation (2).

where U and V are the chrominance components of the YUV color space, U1 and V1 are the lower thresholds, and U2 and V2 are the upper thresholds of U and V, respectively. The thresholds were set based on image analysis of more than a hundred mature apples of the selected cultivars. First, the apples were manually segmented using an image-editing tool (Photoshop CS6, Adobe Inc., San Jose, CA, USA). The mean YUV values of each of the segmented apples were then investigated, and the upper and lower thresholds of the chrominance components were determined. The resulting U1 and U2 values were set at −0.07 and 0.18, and the V1 and V2 values were set at −0.12 and 0.58, respectively.
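The thresholding logic can be sketched as below. The RGB to YUV coefficients are an assumption (the common BT.601-style definition with RGB scaled to 0–1), not necessarily the transformation of Equation (1); because the reported thresholds were derived with the authors' own transformation, they may not transfer directly to this assumed conversion. The inclusive comparison at the threshold boundaries is likewise assumed.

```python
# Chrominance thresholds reported in the text.
U1, U2 = -0.07, 0.18
V1, V2 = -0.12, 0.58


def rgb_to_yuv(r, g, b):
    """Convert 8-bit RGB to YUV using assumed BT.601-style coefficients."""
    r, g, b = r / 255.0, g / 255.0, b / 255.0
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


def in_threshold(r, g, b):
    """True when both chrominance components fall inside their thresholds."""
    _, u, v = rgb_to_yuv(r, g, b)
    return U1 <= u <= U2 and V1 <= v <= V2


def segment(image):
    """Binary mask (1 = candidate fruit pixel) for rows of (r, g, b) tuples."""
    return [[1 if in_threshold(*pixel) else 0 for pixel in row] for row in image]


# Two hypothetical pixels: a pinkish-red tone and a saturated green tone.
print(segment([[(200, 120, 110), (40, 160, 40)]]))
```

Only the chrominance components enter the decision, so the brightness component Y is computed but never thresholded, in line with the reasoning given above.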

Most of the segmented binary images (Figure 5b) had residual errors. Branches, stems and leaves in the frame were sometimes close in color to maturing apples, which can lead to such errors. A median blur filter (size = 25 pixels) was applied to remove these errors (Figure 5c). This filter eliminated the small unwanted pixels from the frame. However, since only the largest apple in the image was the region of interest for this study, the use of a fixed filter size was not completely effective and some parts of partially segmented apples remained in the region of interest. To eliminate such areas, the maximum connected pixel domain was identified and the rest were rejected (Figure 5d). Figure 5e depicts the resulting segmented apple blob. Finally, the segmentation accuracy was estimated for 10 randomly selected sample images. The largest apple in the frame was manually labeled for each image, and the segmentation accuracy of the algorithm was evaluated by dividing the area of the algorithm-segmented image by that of the manually segmented ground-truth image at each stage.
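The selection of the maximum connected pixel domain and the area-ratio accuracy measure can be sketched in plain Python as follows. The median blur step is not shown, and 4-connectivity is an assumption, as the connectivity rule is not stated.

```python
from collections import deque


def largest_component(mask):
    """Keep only the largest 4-connected region of 1-pixels in a binary mask."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    best = []
    for y0 in range(height):
        for x0 in range(width):
            if mask[y0][x0] != 1 or seen[y0][x0]:
                continue
            # Breadth-first flood fill of one connected region.
            queue = deque([(y0, x0)])
            seen[y0][x0] = True
            region = []
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) > len(best):
                best = region
    out = [[0] * width for _ in range(height)]
    for y, x in best:
        out[y][x] = 1
    return out


def area_ratio(segmented, ground_truth):
    """Algorithm-segmented area divided by manually labeled area."""
    return sum(map(sum, segmented)) / sum(map(sum, ground_truth))


mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 1],
]
manual = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
blob = largest_component(mask)
print(blob, area_ratio(blob, manual))
```

Note that an area ratio of this kind does not penalize pixels that are segmented outside the labeled region, so it should be read as a coverage measure rather than an overlap score.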

2.4.2. Image Overlapping and FST Estimation

A pixel offset was observed between the thermal and RGB images (Figure 5e,f). The eccentricity between the two sensors often results in such an offset. The pixel offset was found to be about 40 pixels when the image was captured from a typical imaging distance of 20 cm. Image registration was performed between the thermal and RGB images to eliminate this offset. Finally, arithmetic operations were performed on the segmented thermal image (Figure 5h) to estimate the mean and maximum apple FST.
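As a rough illustration of these two steps, the sketch below treats the registration as a pure translation of the fruit mask, which is an assumption (the registration method and the offset direction are not specified), and then computes the mean and maximum temperature of the thermal pixels under the shifted mask. The offset and temperature values in the example are made up.

```python
def shift_mask(mask, dx, dy):
    """Translate a binary mask by (dx, dy) pixels, padding with zeros."""
    height, width = len(mask), len(mask[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                ny, nx = y + dy, x + dx
                # Pixels shifted outside the frame are dropped.
                if 0 <= ny < height and 0 <= nx < width:
                    out[ny][nx] = 1
    return out


def fst_stats(thermal, mask):
    """Mean and maximum temperature of the thermal pixels selected by the mask."""
    values = [
        thermal[y][x]
        for y in range(len(mask))
        for x in range(len(mask[0]))
        if mask[y][x]
    ]
    if not values:
        raise ValueError("mask selects no pixels")
    return sum(values) / len(values), max(values)


# Illustrative 3 x 2 example with a hypothetical offset of one pixel in x and y.
rgb_mask = [[1, 1, 0], [0, 0, 0]]
thermal = [[30.0, 31.0, 40.0], [32.0, 42.0, 44.0]]
aligned = shift_mask(rgb_mask, 1, 1)
print(fst_stats(thermal, aligned))
```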

2.5. Field Tests

Field data were collected for four apple cultivars: cv. Honeycrisp, Fuji, Gala and Red Delicious. The orchards were at various locations within the state of Washington, USA (Table 1). First, 60 images were captured from an imaging distance of about 20 cm for cv. Honeycrisp using the thermal-RGB imaging sensor integrated with an Android OS smartphone (model: OnePlus 6, OnePlus Technology Co. Ltd., Shenzhen, China) (Figure 6a). Image acquisition and on-board processing were performed using the ‘AppSense 1.0’ application to estimate FST. In addition, the actual surface temperature (ground truth data) was collected using a thermal probe (model: Thermapen® Mk4, ThermoWorks, Lindon, UT, USA) with an accuracy of ±0.4 °C and a response time of 2–3 s. Five sun-exposed apples were randomly selected in the orchard block, and the thermal probe was inserted into the upper 3 mm of the apple surface at five places to measure the surface temperature (Figure 6b). A stabilization time of 3 s was used for reliable temperature measurement. These data were used to test and refine the mobile FST monitoring tool and the functionality of the developed application. The resulting tool was further tested to monitor the FST of cv. Fuji (50 fruits), Gala (75 fruits) and Red Delicious (20 fruits) apples.

2.6. Data Analysis

The thermal-RGB imagery derived FST (FSTi), weather data derived FST (FSTw), ground truth measured FST (FSTG), and ambient air temperature (Tair) data were first checked for normality as a primary step in the statistical analysis. A ‘Pearson product moment’ correlation test was then performed to test the relationship between FSTi, FSTG, and Tair. Moreover, a ‘two-sample t-test’ was performed to quantify the mean difference between FSTi and FSTw. A similar analysis was performed to estimate the mean difference between FSTi and FSTG, FSTi and Tair, as well as imagery based maximum FST (FSTi-max) and FSTG. The statistical analysis was performed in RStudio® (version 1.0.153–© 2009–2017, RStudio®, Boston, MA, USA) and significance was inferred at the 5% level.
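The two statistics named above can be illustrated with the short sketch below. It is not the R code used in the study: the Welch (unequal variance) form of the two-sample t-test is an assumption, only the test statistic and degrees of freedom are computed (no p-value), and the sample values are invented for demonstration.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient of two equal-length samples."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def welch_t(a, b):
    """Welch two-sample t statistic and its approximate degrees of freedom."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Invented temperatures in degrees Celsius, for demonstration only.
fst_imagery = [41.2, 43.5, 39.8, 44.1, 42.0]
fst_ground = [40.6, 43.9, 39.1, 44.8, 41.5]

print(pearson_r(fst_imagery, fst_ground))
print(welch_t(fst_imagery, fst_ground))
```

To infer significance at the 5% level, the t statistic would be compared against the critical value of the t distribution for the computed degrees of freedom.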