Capturing the shape of the blast furnace (BF) burden surface first requires a device that can image the burden surface. The sharpness of the raw burden surface images is then analyzed, and the CLEAR algorithm, which is based on micro-pixel luminance polarization, is proposed. The details are as follows.

2.1. Introduction of Burden Surface Imaging System Based on Industrial Endoscope

Considering the high-temperature, high-pressure, high-dust, and low-light environment inside a BF, a parallel low-light-loss backlit industrial endoscope was developed to capture video of the burden surface. A parallel low-light-loss backlit path and a low-temperature cold light source at the back end were used to overcome the poor lighting in the closed BF environment. To ensure that the equipment functions at high temperatures, the high-temperature-resistant lens was separated from the imaging chip, which cannot withstand high temperatures, following the principle of optical fiber guiding. Furthermore, an air- and water-cooled double-circuit structure was employed; in addition to cooling the equipment, it provides safety, ease of installation, and online maintenance.

The parallel low-light-loss backlit industrial endoscope used in this study consists of four main functional components: an imaging component, a backlight component, a protective cooling component, and a power component. Schematic diagrams and dimensions of the external and internal parts of the device are shown in Figure 2 and Figure 3, respectively. The imaging component consists mainly of an imaging tube and an imaging lens. The backlight component consists mainly of a backlit tube. The protective cooling component protects the sleeve with the double cooling circuit, as shown in Figure 4. The power component mainly includes the power supply and imaging drive circuits, as well as the lines for device power supply and video signal transmission. A photograph of the industrial endoscope used in this study is shown in Figure 5.

The endoscope works as follows. First, the cooling component ensures that the device can function in the high-temperature, high-pressure environment inside the BF. Then, the backlight component uses light from an external source to provide sufficient illumination in the furnace for the imaging component at the front end. Next, the imaging tube relays the captured burden surface images to the low-temperature region at the back end of the device, where the imaging chip and its driving circuit convert them into digital images. Finally, the digital video of the BF burden surface is transmitted through the video signal line interface of the power component, completing the workflow.

For the proposed burden surface imaging system, proper installation of the industrial endoscope is essential for long-term operation and high imaging quality. First, the equipment must be installed in a low-dust area to reduce the influence of dust on image quality; this study follows the BF top dust movement distribution outlined in [20] to locate the low-dust area. Second, the front end of the equipment should be located 1–1.5 m from the burden surface so that the backlight provides adequate brightness. Third, the device should be installed less than 1 m from the inner furnace wall, in a region through which no burden flows and with a sufficient safety margin, so that the device is not struck by the burden stream.

Figure 6 shows the installed industrial endoscope.

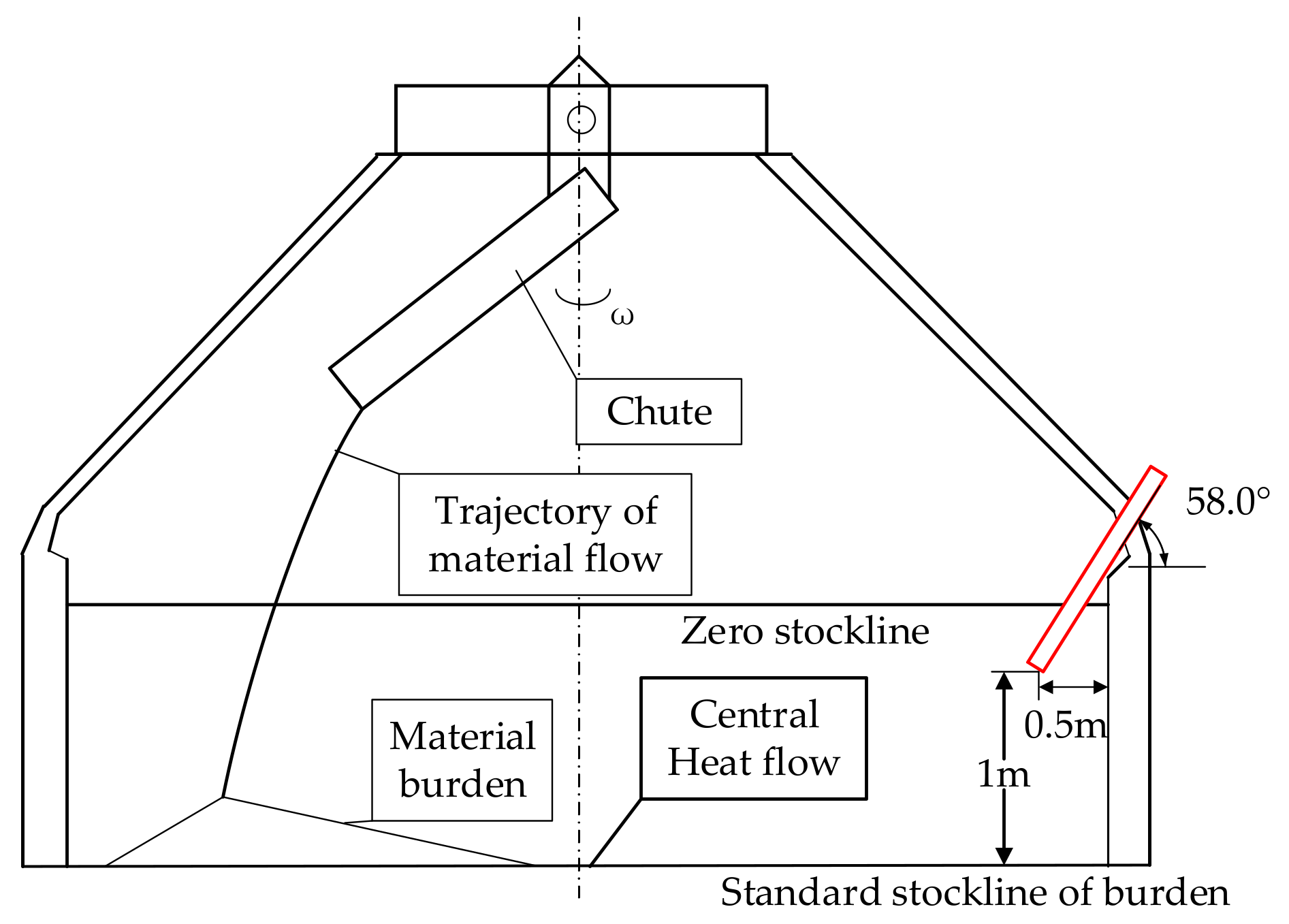

The device is installed at the position shown in Figure 7, inclined at 58° to the horizontal. The front end of the device is 1 m from the standard stock line and 0.5 m from the inner wall of the furnace, so the lens directly captures the central airflow and the burden surface. The part of the equipment outside the furnace is connected to the air- and water-cooling passages and to the power and data transmission line, so that video of the burden descent can be captured in real time.

2.2. Construction of Key Frame Classifier

Not every frame captured by the industrial endoscope is clear and rich in detail. Some low-quality frames, such as those shown in Figure 8, depict only a large flame in the center and little burden information; such burden surface images cannot be used for 3D reconstruction.

Figure 9 shows several burden surface images captured using the industrial endoscope. Figure 9a,b was captured when the burden surface was even and under average lighting, and Figure 9c,d was captured when the burden surface was tilted and poorly lit. The sharpness, brightness, and burden information of these images differ. In Figure 9a,b, texture details are unclear and edge strength is low. Figure 9c,d is characterized by low brightness and a low signal-to-noise ratio, and the graininess of the burden is unclear. Therefore, such images were filtered out during image processing.

The descent of the BF stock level is slow, so a video sequence captured during one descent typically contains more than 9000 highly overlapping frames. It is therefore necessary to judge whether each candidate frame can actually serve as a key frame. While the charge descends, the central airflow area in the video is overexposed and unstable, so key frames cannot be selected simply at a fixed interval. Image details are very important in videos captured inside the BF, and the central airflow area must not change rapidly between key frames, because rapid changes would destabilize feature tracking.

Images are commonly described with descriptors of target features, which represent the characteristics of the target image, and an important task in image analysis is to obtain quantized values of these features. Features for image recognition can be derived from edges, contours, textures, and regions of the image, all of which are intuitive grayscale features. Their physical meanings are clear, they are relatively easy to extract, and extraction algorithms can be designed for specific problems; the extracted features can then be used as inputs for training a classifier. Among the grayscale features, edge intensity, normalized luminance, and the amount of noise are used as image sharpness features. The key frame discriminator designed for the burden surface videos captured using the industrial endoscope is shown schematically in Figure 10.

(1) Edge intensity: edge intensity reflects image clarity, and a larger value indicates a sharper image. The image sharpness based on the Laplacian gradient function is defined as follows:

$$D(f)=\sum_{y}\sum_{x}\left|G(x,y)\right|$$

where $G(x,y)$ is the convolution of the Laplacian operator at pixel $(x,y)$.
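The Laplacian sharpness measure can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the common 4-neighbour Laplacian kernel and skipping border pixels; the paper does not state which kernel or border handling it uses, and `laplacian_sharpness` is a hypothetical name.

```python
import numpy as np

def laplacian_sharpness(img: np.ndarray) -> float:
    """D(f) = sum of |G(x, y)| over interior pixels.

    Assumes the 4-neighbour Laplacian kernel; border pixels are skipped.
    """
    f = img.astype(np.float64)
    # G(x, y) = f(x+1, y) + f(x-1, y) + f(x, y+1) + f(x, y-1) - 4 f(x, y)
    lap = (f[1:-1, 2:] + f[1:-1, :-2] + f[2:, 1:-1] + f[:-2, 1:-1]
           - 4.0 * f[1:-1, 1:-1])
    return float(np.abs(lap).sum())
```

A flat image scores zero, and stronger edges raise the score, which is the ordering the classifier needs.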

(2) Normalized luminance: the luminance values are normalized, and the luminance uniformity of the image is measured by the number of pixels above the high-luminance threshold plus the number of pixels below the low-luminance threshold. Superimposing the two counts gives a measure of how uniformly the luminance is distributed.
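A minimal sketch of the luminance feature follows, assuming 8-bit input normalized by 255. The threshold values 0.1 and 0.9 and the function name `luminance_outlier_count` are hypothetical; the paper does not give its thresholds.

```python
import numpy as np

def luminance_outlier_count(gray, low=0.1, high=0.9, max_value=255.0):
    """Count pixels outside the [low, high] band after normalizing to [0, 1].

    low, high and max_value (8-bit input) are hypothetical defaults.
    """
    norm = gray.astype(np.float64) / max_value      # normalized luminance
    n_bright = int(np.count_nonzero(norm > high))   # above the high threshold
    n_dark = int(np.count_nonzero(norm < low))      # below the low threshold
    return n_bright + n_dark                        # superimposed count
```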

(3) Noise: noise is a high-frequency component, and image edges are also high-frequency components with high gradient values. However, image edges have clear structural features, which allow noise to be separated from edges. In this study, the Tenenbaum gradient function, which convolves an image with Sobel operators and sums the squares of the gradient vector components, is modified so that the original vertical and horizontal gradients are replaced by gradients in four directions. After the image is convolved with the four directional Sobel operators, the minimum of the four directional gradients is taken as the final gradient of a pixel:

$$G(x,y)=\min_{k\in\{1,2,3,4\}}\left|(S_k * f)(x,y)\right|$$

where $G(x,y)$ is the gradient value, $f(x,y)$ is the pixel value at coordinates $(x,y)$, and $S_k$ is the direction operator for the $k$-th direction. Because an edge pixel has a small gradient along the edge direction, its minimum directional gradient stays small, whereas a noise pixel has large gradients in all directions. A pixel whose gradient exceeds a preset threshold is therefore classified as a noise pixel.
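The four-direction minimum-gradient test can be sketched as below. The four 3×3 kernels (0°, 45°, 90°, 135° Sobel-type) are an assumption, since the paper's operator definitions are not reproduced here, and the threshold is left to the caller. Only interior pixels are evaluated.

```python
import numpy as np

# Assumed Sobel-type direction operators S_k (hypothetical choice).
KERNELS = [
    np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64),   # d/dx
    np.array([[-2, -1, 0], [-1, 0, 1], [0, 1, 2]], dtype=np.float64),   # along main diagonal
    np.array([[-1, -2, -1], [0, 0, 0], [1, 2, 1]], dtype=np.float64),   # d/dy
    np.array([[0, -1, -2], [1, 0, -1], [2, 1, 0]], dtype=np.float64),   # along anti-diagonal
]

def correlate3x3(f, k):
    """Valid-mode 3x3 correlation (sign is irrelevant once |.| is taken)."""
    h, w = f.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * f[dy:dy + h - 2, dx:dx + w - 2]
    return out

def noise_mask(gray, thresh):
    """True where the minimum of the four directional gradients exceeds thresh."""
    f = gray.astype(np.float64)
    grads = np.stack([np.abs(correlate3x3(f, k)) for k in KERNELS])
    g_min = grads.min(axis=0)   # G(x, y) for each interior pixel
    return g_min > thresh
```

For a straight vertical or horizontal edge, one kernel responds with zero, so the edge is never flagged, while random texture produces large responses in every direction.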

(4) Image displacement: to ensure that the selected key frames can be used for 3D reconstruction, a certain baseline distance must be maintained between the key frames used in the calculation, so the displacement between a given frame and the previous frame must be considered. In this study, a relative frame-based optical flow method was developed to estimate the displacement of the burden surface in the images. The method sums the optical flow magnitudes of each frame pair and normalizes this sum by the total image displacement; it was adopted because it outperformed other optical flow methods in this application. The normalized pixel displacement is input to the classifier as the displacement feature, both to ensure that the total pixel displacement between key frames is not too small and to support threshold decisions.
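One reading of the normalized displacement feature is sketched below: each frame pair's flow magnitudes are summed, the sums are divided by their total over the sequence, and a frame becomes a key frame candidate once the displacement accumulated since the previous key frame reaches a threshold. The normalization reference, the accumulation rule, and the names `normalized_displacements` and `next_key_index` are assumptions, not the paper's exact definitions.

```python
import numpy as np

def normalized_displacements(flows):
    """flows: list of (H, W, 2) optical-flow fields, one per frame pair.

    Returns each pair's summed flow magnitude divided by the sum over all pairs.
    """
    sums = np.array([np.linalg.norm(f, axis=-1).sum() for f in flows])
    total = sums.sum()
    return sums / total if total > 0 else np.zeros_like(sums)

def next_key_index(norm_disp, start, min_shift):
    """First index >= start at which the accumulated normalized displacement
    reaches min_shift (a hypothetical baseline threshold); None if never."""
    acc = 0.0
    for i in range(start, len(norm_disp)):
        acc += norm_disp[i]
        if acc >= min_shift:
            return i
    return None
```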

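Assume that the local optical flow and gradient are constant, so that in a neighborhood of each pixel the image can be approximated by a quadratic polynomial:

$$E(X)\approx X^{T}AX+b^{T}X+c$$

where $E$ represents the input image, $X$ is a variable that represents the 2D image coordinates, $A$ is a symmetric $2\times2$ matrix, $b$ is a vector, and $c$ is a scalar. For the previous key frame, we thus have

$$E_1(X)=X^{T}A_1X+b_1^{T}X+c_1$$

Suppose that the displacement between the current frame and the previous key frame is $d$. Then, the current frame image can be expressed as follows:

$$E_2(X)=E_1(X-d)=X^{T}A_1X+\left(b_1-2A_1d\right)^{T}X+d^{T}A_1d-b_1^{T}d+c_1=X^{T}A_2X+b_2^{T}X+c_2$$

Equating coefficients gives $A_2=A_1$ and $b_2=b_1-2A_1d$. Thus, the pixel shift displacement can be calculated as

$$d=-\frac{1}{2}A_1^{-1}\left(b_2-b_1\right)$$

Because the image of the falling burden surface cannot simply be considered a bivariate polynomial, the true value of $A(X)$ is approximated by averaging:

$$A(X)=\frac{A_1(X)+A_2(X)}{2},\qquad \Delta b(X)=-\frac{1}{2}\left(b_2(X)-b_1(X)\right),\qquad A(X)\,d(X)=\Delta b(X)$$

Then, an objective function is constructed over the neighborhood to optimize the displacement:

$$e(X)=\sum_{i}w_i\left\|A_i\,d(X)-\Delta b_i\right\|^{2}$$

Thereafter, setting $\partial e/\partial d=0$, we have

$$d(X)=\Big(\sum_{i}w_iA_i^{T}A_i\Big)^{-1}\sum_{i}w_iA_i^{T}\Delta b_i$$

where $i$ denotes the index of each pixel in the neighborhood and $w_i$ is its weight.

The displacement vectors along the x and y directions can thus be obtained. In Equation (17), only the displacement $d$ is unknown. To solve for $d$, an a priori estimate $\tilde{d}$ of the true displacement is introduced, and the coefficients of the current frame are taken at the shifted position $\tilde{X}=X+\tilde{d}(X)$:

$$A(X)=\frac{A_1(X)+A_2(\tilde{X})}{2},\qquad \Delta b(X)=-\frac{1}{2}\left(b_2(\tilde{X})-b_1(X)\right)+A(X)\,\tilde{d}(X)$$

By combining Equations (20) and (14), we have

$$d(X)=\Big(\sum_{i}w_iA_i^{T}A_i\Big)^{-1}\sum_{i}w_iA_i^{T}\Delta b_i$$

with $A_i$ and $\Delta b_i$ now evaluated using the a priori estimate. First, $\tilde{d}$ is set to 0, and a new displacement value is calculated using Equation (22). This displacement is more accurate than the previous one, so it is substituted as the new value of $\tilde{d}$. After several iterations, an accurate displacement value is obtained.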
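The iterative displacement estimate can be sketched for a single neighborhood as follows. This mirrors the polynomial-expansion approach of Farnebäck's two-frame method: $A_1, b_1$ are the quadratic and linear coefficients of the previous key frame at the neighborhood pixels, $A_2, b_2$ those of the current frame sampled at the prior-shifted positions, and the weighted least-squares solution is fed back as the next prior, starting from zero. The callback `sample_frame2` and all function names are hypothetical; the polynomial-expansion step that produces the coefficients is not shown. For dense flow in practice, OpenCV provides `cv2.calcOpticalFlowFarneback`.

```python
import numpy as np

def solve_displacement(A1s, b1s, A2s, b2s, weights, d_prior=None):
    """Weighted least-squares displacement for one neighbourhood.

    A1s, A2s: (N, 2, 2) quadratic coefficients at the N pixels i;
    b1s, b2s: (N, 2) linear coefficients; weights: (N,) weights w_i.
    A2s and b2s must already be sampled at X + d_prior.
    """
    if d_prior is None:
        d_prior = np.zeros(2)
    A = 0.5 * (A1s + A2s)                           # averaged A(X)
    db = -0.5 * (b2s - b1s) + A @ d_prior           # delta b(X) with prior term
    G = np.einsum('n,nji,njk->ik', weights, A, A)   # sum_i w_i A_i^T A_i
    h = np.einsum('n,nji,nj->i', weights, A, db)    # sum_i w_i A_i^T delta b_i
    return np.linalg.solve(G, h)

def iterate_displacement(A1s, b1s, sample_frame2, weights, n_iter=3):
    """Start from a zero prior and feed each new estimate back as the prior.

    sample_frame2(d) is a hypothetical callback returning (A2s, b2s) of the
    current frame sampled at X + d.
    """
    d = np.zeros(2)
    for _ in range(n_iter):
        A2s, b2s = sample_frame2(d)
        d = solve_displacement(A1s, b1s, A2s, b2s, weights, d)
    return d
```

For an exactly translated quadratic signal, a single iteration already recovers the true displacement; on real images, the extra iterations matter because the coefficients are re-sampled closer to the true correspondence each time.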

The output of the proposed key frame classifier is shown in Figure 11. Compared with the burden surface images in Figure 9, the key frames have stronger edges, higher brightness, and a higher signal-to-noise ratio. Moreover, once the first key frame is selected, the second key frame selected by the discriminator shows a large pixel movement relative to it. Figure 11 thus shows that a certain baseline distance exists between a given key frame and the previous one.

2.3. Burden Surface Image Sharpening Algorithm Based on Micro-Pixel Brightness Polarization

2.3.1. Industrial Endoscope Image Clarity

During burden discharge, the light from the central gas flow is easily blocked by the burden peak. Consequently, the burden surface video is dark overall, while the central gas flow is easily overexposed. The image brightness is therefore polarized: the image contains both overexposed and overly dark regions. Owing to dust in the furnace, the image is also very noisy. In addition, the burden forms multiple burden rings, so the contours are distributed over more than one ring. The polarized areas of a burden surface image are shown in Figure 12. The area in Figure 12a is a polarized dark region on the burden, away from the highlight area, and the area in Figure 12b is a polarized bright region within the highlight area.

The characteristics of the BF burden surface video lead to the following difficulties in achieving the desired image clarity: (a) Noise makes the video harder to sharpen, and when the brightness is poor, it is difficult to filter out noise while preserving texture details. (b) Extremely bright and extremely dark areas are difficult to segment, and the edges of extremely bright areas are ambiguous; the central airflow is directed opposite to the burden surface and is disturbed by the airflow in the BF, so jitter is large. (c) The requirements for sharpening the extremely bright and extremely dark areas are contradictory: the algorithm must suppress brightness in the extremely bright areas but enhance detail and increase brightness in the extremely dark areas. Therefore, the image is sharpened subregion by subregion so that brightness and sharpness remain consistent throughout the burden descent sequence. This consistency is beneficial for tracking the depth features of the burden rings, which is a prerequisite for 3D reconstruction.

The highlight area is caused by the high-temperature airflow at the center of the BF. Because of this airflow and the splashing of the charge, some areas of the image are extremely bright, and image details in these areas are washed out. Therefore, suppressing the highlight areas is important for ensuring consistent surface reflection characteristics and sharpness.

A block diagram of the micro-pixel luminance polarization method developed in this study is shown in Figure 13. The proposed sharpening algorithm detects and suppresses highlights and increases the brightness of the surrounding dark regions by using micro-pixel luminance polarization. To detect the luminance polarization region, we propose a method that determines the polarization region from gray-level information. The method first computes the grayscale image, and an adaptive threshold for identifying the highlight region is then determined from the mean and standard deviation of the grayscale values.
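An adaptive threshold of the form mean plus a multiple of the standard deviation can be sketched as below. The multiplier `k = 2.0` and the ITU-R BT.601 luma weights used for the grayscale conversion are assumptions; the paper does not specify either.

```python
import numpy as np

def highlight_mask(rgb, k=2.0):
    """Highlight region where gray > mean + k * std of the grayscale image.

    k and the BT.601 luma weights are assumptions, not values from the paper.
    """
    gray = rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])
    t = gray.mean() + k * gray.std()   # adaptive threshold
    return gray > t, t
```

Because the threshold follows the statistics of each frame, a globally dark frame still yields a highlight region around the central airflow instead of an empty mask.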

2.3.2. Principle of Micro-Pixel Luminance Polarization

A micro-pixel is a set of pixels with morphological consistency and continuous brightness. The pixels of a micro-pixel share the same quantization step size and motion matrix, together forming a grayscale polarization region, and each micro-pixel covers a square area of pixels. When the BF burden surface image is divided by luminance polarization, image details are enhanced according to the characteristics of each micro-pixel. The polarization area is then tracked to ensure correct block matching. The micro-pixel quantization formula is given in Equation (23), where m is the average value over the square micro-pixel region.
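Partitioning an image into square micro-pixels and computing the mean m of each can be sketched with a reshape. The block size is a hypothetical parameter, edge remainders are simply cropped, and Equation (23) itself is not reproduced here.

```python
import numpy as np

def micro_pixel_means(gray, size=4):
    """Mean m of each non-overlapping size x size square (size is hypothetical).

    Rows/columns that do not fill a whole square are cropped.
    """
    h, w = gray.shape
    hh, ww = h - h % size, w - w % size
    blocks = gray[:hh, :ww].astype(np.float64).reshape(hh // size, size, ww // size, size)
    return blocks.mean(axis=(1, 3))   # one m per micro-pixel
```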

First, the average pixel value over consecutive frames is calculated. Then, the pixel movement between frames is computed and the moving speeds of the micro-pixels are summed, from which the predicted change in the velocity of the micro-pixel in the polarization region is obtained. This prediction involves the grayscale mean $\mu$ and standard deviation $\sigma$ of the grayscale image.

When a highlight area is detected, the number of saturated pixels can be reduced by replacing the highlight region with intensity values derived from combinations of adjacent pixel values. In this manner, the compensation value for the edge region of the highlight is obtained: an indicator determines whether each point in the highlight area requires compensation, and the compensation value is calculated from the values of the adjacent pixels. The compensation adjustment is computed over the red (R), green (G), and blue (B) channels when searching for the optimal matching compensation. The optimal match is the compensation micro-pixel whose average pixel value differs least from that of the highlighted micro-pixel, and the three color components of this match form the compensation block. The uneven illumination distribution about the image center is then processed with the radial gradient forced symmetry method: in this study, vignette correction strengthens the dark areas at the image periphery by forcing the radial gradient to be symmetric from the center toward the boundary.
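The block-matching compensation can be sketched as follows. For each micro-pixel that contains highlight pixels, nearby highlight-free micro-pixels are searched, the one whose mean R, G, B values differ least from the highlighted block is chosen, and only the highlighted pixels are replaced by the matched block's pixels. The block size, search radius, the requirement that candidates be highlight-free, and the function name are all assumptions made for illustration.

```python
import numpy as np

def compensate_highlights(rgb, mask, size=4, radius=2):
    """Replace highlighted pixels with those of the best-matching nearby block.

    rgb: (H, W, 3) image; mask: (H, W) bool highlight mask.
    size (micro-pixel side) and radius (search range in blocks) are hypothetical.
    """
    out = rgb.astype(np.float64).copy()
    by, bx = mask.shape[0] // size, mask.shape[1] // size

    def block(img, i, j):
        return img[i * size:(i + 1) * size, j * size:(j + 1) * size]

    for i in range(by):
        for j in range(bx):
            m = block(mask, i, j)
            if not m.any():
                continue                      # no highlight in this micro-pixel
            target = block(rgb, i, j).astype(np.float64).mean(axis=(0, 1))
            best, best_cost = None, np.inf
            for di in range(-radius, radius + 1):
                for dj in range(-radius, radius + 1):
                    ci, cj = i + di, j + dj
                    if (di, dj) == (0, 0) or not (0 <= ci < by and 0 <= cj < bx):
                        continue
                    if block(mask, ci, cj).any():
                        continue              # candidate must be highlight-free
                    cand = block(rgb, ci, cj).astype(np.float64)
                    # average difference of the mean R, G, B values
                    cost = np.abs(target - cand.mean(axis=(0, 1))).mean()
                    if cost < best_cost:
                        best, best_cost = cand, cost
            if best is not None:
                blk = block(out, i, j)        # view into out
                blk[m] = best[m]              # compensate highlighted pixels only
    return out
```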

First, background information is extracted by using the variance information of the scene. Then, the background gray distribution is approximated by a bivariate cubic polynomial, from which the correction factor over the entire image plane is calculated. The vignette effect is corrected by applying this processing to each image frame.
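Fitting a bivariate cubic polynomial to background pixels and turning it into a correction-factor map can be sketched with an ordinary least-squares fit. Normalizing coordinates to [-1, 1] and using the fitted maximum as the reference brightness are assumptions; the background mask is assumed to come from the variance-based extraction.

```python
import numpy as np

def cubic_design(x, y):
    """All monomials x^p * y^q with p + q <= 3 (10 terms)."""
    return np.stack([x ** p * y ** q for p in range(4) for q in range(4 - p)], axis=-1)

def vignette_correction(gray, bg_mask):
    """Fit the background with a bivariate cubic and flatten the image.

    Returns the corrected image and the correction-factor map.
    """
    h, w = gray.shape
    yy, xx = np.mgrid[0:h, 0:w]
    xn = 2.0 * xx / (w - 1) - 1.0   # normalized coordinates for conditioning
    yn = 2.0 * yy / (h - 1) - 1.0
    X = cubic_design(xn[bg_mask], yn[bg_mask])
    coef, *_ = np.linalg.lstsq(X, gray[bg_mask].astype(np.float64), rcond=None)
    bg = cubic_design(xn, yn) @ coef              # fitted background over the plane
    factor = bg.max() / np.maximum(bg, 1e-6)      # assumed reference: brightest point
    return gray * factor, factor
```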

Incident light beams parallel to the optical axis are focused at the center $O$ of the image plane. If the illuminance at this point is $E_0$, light beams that make an angle $\theta$ with the optical axis are focused at an off-axis image point whose illuminance is

$$E_{\theta}=K E_0\cos^{4}\theta$$

where $K$ is the fading coefficient.
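Assuming the off-axis falloff follows the cosine-fourth law $E_\theta = K E_0 \cos^4\theta$, a relative-illuminance map for the whole image can be sketched by mapping each pixel's distance from the image center to an angle through a focal length expressed in pixels. The focal-length parameter `f_px` and the function names are hypothetical.

```python
import numpy as np

def off_axis_illuminance(e0, theta, k=1.0):
    """E_theta = K * E0 * cos^4(theta) (assumed cosine-fourth form)."""
    return k * e0 * np.cos(theta) ** 4

def falloff_map(h, w, f_px, k=1.0):
    """Relative illuminance E_theta / E0 per pixel, with tan(theta) = r / f_px.

    f_px (focal length in pixels) is a hypothetical parameter.
    """
    yy, xx = np.mgrid[0:h, 0:w]
    r = np.hypot(xx - (w - 1) / 2.0, yy - (h - 1) / 2.0)
    theta = np.arctan(r / f_px)
    return off_axis_illuminance(1.0, theta, k)
```

Dividing an image by this map would give a purely optical model of the correction, in contrast to the data-driven polynomial fit.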

By analyzing the image histogram, we can determine whether the target and the background occupy separate gray-level intervals; if they do, a suitable threshold separates the target from the background. The specific procedure is as follows.

Step 1: Take a window of size k × k around each pixel, compute the mean of the window, and then compute the local variance of each pixel.

Step 2: Calculate the global threshold.

Step 3: Calculate the background pixels.

Step 4: Obtain the background gray distribution model by polynomial approximation.

Step 5: Compensate for the vignette effect.
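Steps 1–3 can be sketched as a local-variance test. Local means over a k × k window are computed with an integral image, the local variance is E[x²] − E[x]², and pixels whose variance does not exceed a global threshold are taken as background. Using the mean of the variance map as the global threshold, reflect padding at the borders, and the function names are assumptions; the paper does not specify them.

```python
import numpy as np

def box_mean(img, k):
    """Mean over a k x k window (k odd) via an integral image, reflect-padded."""
    r = k // 2
    p = np.pad(img.astype(np.float64), r, mode='reflect')
    s = np.pad(p.cumsum(0).cumsum(1), ((1, 0), (1, 0)))   # zero-led integral image
    h, w = img.shape
    return (s[k:k + h, k:k + w] - s[:h, k:k + w]
            - s[k:k + h, :w] + s[:h, :w]) / (k * k)

def background_mask(gray, k=5):
    """Steps 1-3: local variance, global threshold, background pixels."""
    g = gray.astype(np.float64)
    mean = box_mean(g, k)                                        # Step 1: local mean
    var = np.maximum(box_mean(g ** 2, k) - mean ** 2, 0.0)       # Step 1: local variance
    thresh = var.mean()                                          # Step 2 (assumed rule)
    return var <= thresh, var, thresh                            # Step 3: background
```

The resulting mask marks the smooth regions that are suitable for fitting the background gray distribution in Steps 4 and 5.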