Abstract

Remote sensing images have been widely used in many applications. However, the resolution of the acquired remote sensing images may not meet the increasing demands of some applications. Sparse representation-based super-resolution (SR) is one of the most popular approaches to this issue. However, traditional sparse representation-based SR methods do not fully exploit the complementary constraints of images, so they cannot accurately reconstruct the unknown high-resolution (HR) images. To address this issue, we propose a novel adaptive joint constraint (AJC) based on sparse representation for single remote sensing image SR. First, we construct a nonlocal constraint by using nonlocal self-similarity. Second, we propose a local structure filter based on the local gradient of the image and use it to construct a local constraint. Next, the nonlocal and local constraints are introduced into the sparse representation-based SR framework. Finally, the parameters of the joint constraint model are selected adaptively according to the image noise level. An alternating iterative algorithm is used to solve the minimization problem in AJC. Experimental results show that the proposed method preserves image details well and significantly improves the objective evaluation indices.

1. Introduction

With the continuous development of remote sensing technology in recent years, many remote sensing image applications have emerged, such as fine-grained classification [1,2], detailed land monitoring [3], and target recognition [4,5]. The performance of these applications is closely related to image quality. However, the resolution of remote sensing images is largely limited by the spatial resolution of the optical sensor. To improve the quality of remote sensing images, many super-resolution (SR) methods [6,7,8,9,10] have been proposed. They can be divided into multi-frame [11,12,13,14,15,16,17] and single-image SR methods [18,19,20,21,22,23].

The multi-frame SR methods aim to recover a high-resolution (HR) image from multiple low-resolution (LR) frames. A frequency domain-based multi-frame SR method was proposed in [13]. Although this method is fast, it produces serious visual artifacts [12]. To address this issue, many spatial domain-based multi-frame SR methods have been proposed [15,16]. Farsiu et al. [15] proposed an iterative method using the $\ell_1$-norm in both the fidelity and regularization terms. Patanavijit and Jitapunkul [16] proposed a stochastic regularization term based on Bayesian maximum a posteriori (MAP) estimation. Huang et al. [14] proposed a bidirectional recurrent convolutional network for multi-frame image SR. Although spatial domain-based multi-frame SR methods can achieve good SR performance, they either consume considerable memory or require long running times [15]. Moreover, in some cases only a single image is available, which makes the problem more challenging. Therefore, single-image SR methods are important in practical applications [23].

Generally, the single-image SR problem can be formulated as:

$$\mathbf{y} = \mathbf{D}\mathbf{H}\mathbf{x} + \mathbf{v}, \tag{1}$$

where $\mathbf{y}$ is the observed LR image, $\mathbf{x}$ denotes the original HR image, $\mathbf{H}$ represents the blurring operator, $\mathbf{D}$ denotes the downsampling operator, and $\mathbf{v}$ is additive white Gaussian noise with standard deviation $\sigma_n$. Single-image SR methods can be roughly divided into interpolation-based and prior-based methods. Interpolation-based methods [24,25] use adjacent pixels to estimate each unknown pixel. They have low computational complexity and can recover degraded images quickly, but they may generate severe staircase artifacts and smooth out image details.
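As a minimal sketch of the degradation model $\mathbf{y} = \mathbf{D}\mathbf{H}\mathbf{x} + \mathbf{v}$, the NumPy functions below blur an HR image with a Gaussian kernel (taking $\mathbf{H}$ to be a Gaussian blur, as in the experiments of Section 4.1), keep every `scale`-th pixel as $\mathbf{D}$, and add white Gaussian noise. The kernel size, the mirror boundary handling, and which pixels are kept during downsampling are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Normalized 2-D Gaussian kernel; `size` is assumed to be odd."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def blur(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Apply H: 2-D filtering with symmetric (mirror) boundary padding."""
    r = kernel.shape[0] // 2
    xp = np.pad(x, r, mode="symmetric")
    out = np.zeros(x.shape, dtype=float)
    for i in range(kernel.shape[0]):
        for j in range(kernel.shape[1]):
            out += kernel[i, j] * xp[i:i + x.shape[0], j:j + x.shape[1]]
    return out

def degrade(x, scale=3, ksize=7, sigma_blur=1.6, sigma_n=0.0, seed=0):
    """Simulate y = D H x + v.

    ksize=7 is a hypothetical, illustrative kernel size; sigma_blur=1.6 and
    scale=3 mirror the settings described in Section 4.1.
    """
    rng = np.random.default_rng(seed)
    hx = blur(np.asarray(x, dtype=float), gaussian_kernel(ksize, sigma_blur))
    y = hx[::scale, ::scale]                            # D: keep every scale-th pixel
    return y + sigma_n * rng.standard_normal(y.shape)   # v: white Gaussian noise
```

Because the kernel sums to one and the padding mirrors the border, a constant image passes through `blur` unchanged, which is a quick sanity check for the blur step.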

Many prior-based methods have been used to address the single-image SR problem. Tai et al. [26] exploited an edge prior and single-image detail synthesis to enhance the resolution of the LR image. Other researchers have proposed example learning-based methods that exploit priors derived from image similarity. Yang et al. [22] proposed a joint sparse dictionary (HR and LR dictionaries) to reconstruct the HR image. This method assumes that the sparse vector of an HR patch is the same as that of the corresponding LR patch. Timofte et al. [27] proposed a joint sparse dictionary-based anchored neighborhood regression (ANR) approach, in which regressors are anchored to the dictionary atoms. Peleg and Elad [28] introduced a joint dictionary-based statistical prediction model that captures the statistical relationships between the sparse coefficients of LR and HR patches. NPDDR [29] trained a joint dictionary to compensate for the missing HR components and employed improved nonlocal self-similarity and a local kernel to constrain the optimization process. Hou et al. [7] proposed a global joint dictionary model for single remote sensing image SR, including a local constraint within each patch and a nonlocal constraint between the HR and LR images. A single dictionary-based adaptive sparse representation scheme was presented in [30]. Dong et al. [31] proposed a single dictionary-based method that restores the HR image by exploiting the nonlocal self-similarity of the sparse vectors. Unlike joint dictionary methods, single dictionary methods do not require external training examples. Although the above methods enhance the resolution of the LR image, they are prone to generating artifacts. Recently, deep learning has been introduced into SR. For example, Dong et al. [32] proposed a deep convolutional neural network for image SR. Haut et al. [6] used a novel convolutional generator model to improve the quality of remote sensing images. Li et al. [33] applied spatial-spectral group sparsity and spectral mixture analysis to the SR problem. Tai et al. [34] proposed a deep recursive residual network (DRRN) that contains 52 convolutional layers. A generative adversarial network (GAN) for image SR was introduced in [35].

However, deep learning-based methods take a great deal of time to train on external datasets. Furthermore, they usually need to be retrained for each type of degradation, which further increases training time. In contrast, single dictionary-based sparse representation SR methods require neither external datasets nor retraining for each degradation. However, traditional single dictionary-based sparse representation SR methods ignore some complementary constraints of the image itself. Previous works show that sparse representation-based SR methods are effective in improving the quality of remote sensing images [7,8]. For these reasons, we focus on improving single dictionary-based sparse representation SR methods to enhance the quality of the reconstructed HR image.

Inspired by [7], we introduce a joint constraint into single dictionary-based sparse representation SR. The authors of [7,8] pointed out that the sparse representation model helps improve the quality of remote sensing images. However, this kind of method does not consider the continuity and correlation of an image’s local neighborhood, and its edge-preserving ability leaves room for improvement. To address this issue, we propose a local structure constraint based on the local gradient of the image, which further improves edge preservation. In sparse representation-based SR methods, it is usually assumed that the sparse coefficients of the LR image and the HR image are equal. However, because the LR image is degraded (e.g., by blurring and downsampling), there is a gap between the LR and HR sparse coefficients, i.e., sparse noise. In addition, artifacts tend to appear in the reconstructed images. Since sparse noise and artifacts can be well suppressed by exploiting overlapping nonlocal similar patches, as shown in [7,31], we use the nonlocal self-similarity of the image to construct a nonlocal constraint. By combining the nonlocal constraint with the proposed local structure constraint, we propose a novel adaptive joint constraint (AJC) for single remote sensing image SR. In the reconstruction phase, we adaptively select the regularization parameters of the AJC model according to the image noise level to obtain better reconstructed images. Our main contributions are summarized as follows:

(1) Since the human visual system is more sensitive to image edges, we propose a local structure filter based on the local gradient of the remote sensing image and then construct a local structural prior.

(2) Since local and nonlocal priors are complementary, fusing them can achieve higher SR performance. Based on this, we combine the nonlocal self-similarity prior with the local structural prior and propose a novel adaptive joint constraint (AJC) for single remote sensing image SR.

(3) To further improve the proposed AJC SR method, the regularization parameters are selected adaptively according to the noise level of the remote sensing image.

The organization of this paper is as follows. Section 1 is the introduction. Section 2 reviews related work on sparse coding-based single-image SR. Section 3 describes the proposed AJC algorithm in detail. Section 4 describes the parameter settings and presents the experimental results. Section 5 concludes the paper.

2. Related Work

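Single-image SR reconstruction methods aim to transform an LR image into an HR image. To achieve this goal, sparse priors have been proposed [22,27,30,31,36,37]. Researchers have found that an image $\mathbf{x}$ can be sparsely represented as $\mathbf{x} \approx \boldsymbol{\Phi}\boldsymbol{\alpha}$, where $\boldsymbol{\alpha}$ is a sparse coefficient vector and $\boldsymbol{\Phi}$ denotes an over-complete dictionary, such as a discrete cosine transform (DCT) or wavelet dictionary [30,38]. The sparse vector can be estimated via:

$$\boldsymbol{\alpha}_x = \arg\min_{\boldsymbol{\alpha}} \|\mathbf{x} - \boldsymbol{\Phi}\boldsymbol{\alpha}\|_2^2 + \lambda\|\boldsymbol{\alpha}\|_0, \tag{2}$$

where $\lambda$ represents a regularization parameter, $\mathbf{x}$ is an HR image, $\boldsymbol{\Phi}$ denotes the dictionary of $\mathbf{x}$, and $\boldsymbol{\alpha}_x$ represents the sparse vector of $\mathbf{x}$. Since $\ell_0$-minimization is NP-hard, $\ell_1$-minimization is generally used to approximate it. In the single-image SR problem, we want to restore the missing details of the LR image to enhance its spatial resolution. This problem can be addressed by:

$$\boldsymbol{\alpha}_y = \arg\min_{\boldsymbol{\alpha}} \|\mathbf{y} - \mathbf{D}\mathbf{H}\boldsymbol{\Phi}\boldsymbol{\alpha}\|_2^2 + \lambda\|\boldsymbol{\alpha}\|_1, \tag{3}$$

where $\boldsymbol{\alpha}_y$ denotes the estimated sparse vector of the LR image $\mathbf{y}$, and $\lambda$ is the regularization parameter of the sparse term. Assuming that the sparse vector of an HR patch is the same as that of the corresponding LR patch [22], the reconstructed HR image is obtained as $\hat{\mathbf{x}} = \boldsymbol{\Phi}\boldsymbol{\alpha}_y$.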
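The $\ell_1$-regularized SR problem, i.e., minimizing $\|\mathbf{y} - \mathbf{D}\mathbf{H}\boldsymbol{\Phi}\boldsymbol{\alpha}\|_2^2 + \lambda\|\boldsymbol{\alpha}\|_1$, can be solved by iterative shrinkage. The sketch below is a plain ISTA loop, which may differ in detail from the algorithm of [39]; it assumes that the product $\mathbf{D}\mathbf{H}\boldsymbol{\Phi}$ is available as an explicit matrix `A` (only practical for small problems) and uses a fixed iteration count instead of a convergence test. The function names are illustrative.

```python
import numpy as np

def soft_threshold(v: np.ndarray, t: float) -> np.ndarray:
    """Proximal operator of t * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(A: np.ndarray, y: np.ndarray, lam: float, n_iter: int = 500) -> np.ndarray:
    """Minimize ||y - A a||_2^2 + lam * ||a||_1 by iterative shrinkage."""
    # Step size 1/lc, where lc = 2 * sigma_max(A)^2 is the Lipschitz constant
    # of the gradient of the quadratic data term.
    lc = 2.0 * np.linalg.norm(A, 2) ** 2
    a = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = 2.0 * A.T @ (A @ a - y)                 # gradient of the data term
        a = soft_threshold(a - grad / lc, lam / lc)    # shrinkage step
    return a
```

With a dictionary matrix `Phi`, the HR estimate would then be `Phi @ ista(A, y, lam)`, mirroring $\hat{\mathbf{x}} = \boldsymbol{\Phi}\boldsymbol{\alpha}_y$.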

3. Proposed Method

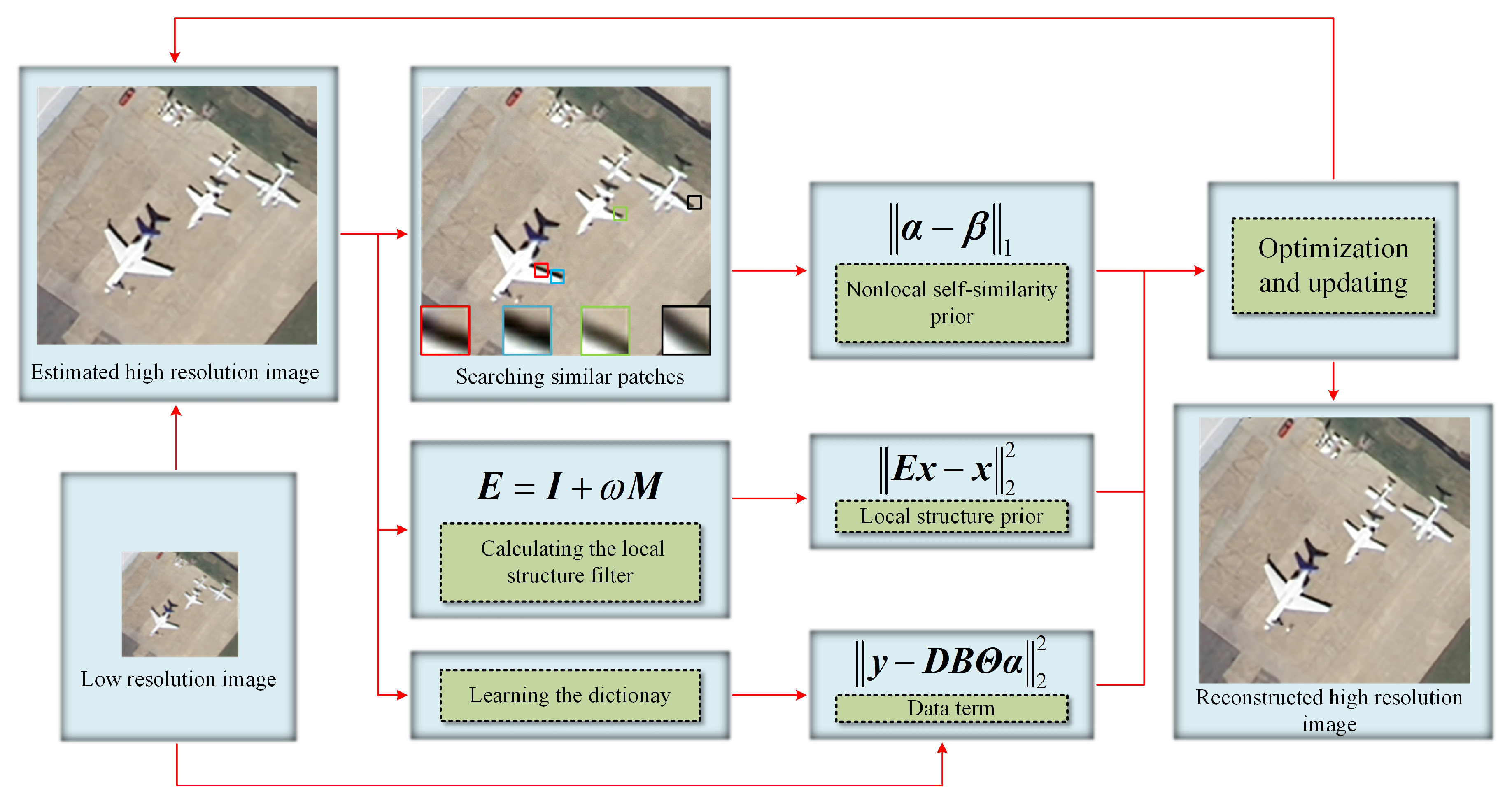

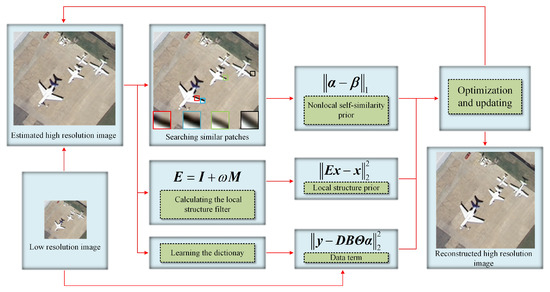

To remove artifacts and improve the quality of reconstructed HR images, we propose the AJC model, which combines nonlocal and local priors. The proposed framework is shown in Figure 1: the nonlocal prior is constructed by exploiting the nonlocal self-similarity of the sparse vectors, and the local prior is obtained from the proposed local structure filter. The proposed AJC model can be formulated as:

$$\boldsymbol{\alpha}_y = \arg\min_{\boldsymbol{\alpha}} \|\mathbf{y} - \mathbf{D}\mathbf{H}\boldsymbol{\Phi}\boldsymbol{\alpha}\|_2^2 + \lambda R_{NL}(\boldsymbol{\alpha}) + \eta R_{L}(\boldsymbol{\alpha}), \tag{4}$$

where the first term is the data term, $R_{NL}(\boldsymbol{\alpha})$ denotes the nonlocal constraint, $R_{L}(\boldsymbol{\alpha})$ represents the local prior, $\lambda$ is a parameter balancing the data term and the nonlocal constraint, and $\eta$ is a regularization parameter balancing the data term and the local structural constraint. To solve this model, we first train a compact dictionary by self-learning. Then, the nonlocal and local priors are constructed. Finally, we use the iterative shrinkage algorithm [39] to solve the SR reconstruction problem.

Figure 1.

The proposed framework.

3.1. The Compact Dictionary Learning

In sparse representation, it is important to select a suitable dictionary for the image. In this paper, we learn a compact dictionary for the image following [31]. Note that our dictionary learning takes only the LR image as training input. Dictionary learning first extracts patches $\{\mathbf{x}_i\}$ from the HR estimate (for the first reconstruction, the HR estimate is obtained from the LR image by bicubic interpolation). Considering that human vision is sensitive to edges, we remove extremely smooth patches from this set. Furthermore, to supplement the high-frequency details of the images, we learn the dictionary in the high-frequency feature space of the patches. Specifically, we adopt a rotationally symmetric Laplacian of Gaussian filter (size with standard deviation ), which improves the average PSNR of the test images by 0.11 dB in the SR application. The high-frequency features of the patches are denoted by $\{\mathbf{f}_i\}_{i=1}^{N}$, where $N$ represents the number of patches. Then, we adopt the K-means algorithm to divide $\{\mathbf{f}_i\}$ into $K$ clusters $\{C_k\}_{k=1}^{K}$. Next, the compact dictionary is learned from these clusters. Specifically, since PCA reduces dimensionality and decorrelates the data, we apply PCA to each cluster $C_k$, and the sub-dictionary $\boldsymbol{\Phi}_k$ corresponding to each cluster is obtained according to [31]. Finally, the compact dictionary of the reconstructed image is constructed as $\boldsymbol{\Phi} = \{\boldsymbol{\Phi}_k\}_{k=1}^{K}$. After learning $\boldsymbol{\Phi}$, we apply it to the proposed AJC model to obtain the reconstructed HR image $\hat{\mathbf{x}}$. Note that the estimated $\hat{\mathbf{x}}$ is used to update the dictionary $\boldsymbol{\Phi}$, and $K$ is updated in the dictionary learning phase.
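A simplified sketch of the clustering-and-PCA step follows. It is not the exact procedure of [31]: it assumes the high-frequency patch features are already extracted (smooth-patch removal and Laplacian of Gaussian filtering are omitted), uses plain Lloyd K-means with randomly chosen initial centroids, and keeps each full PCA basis as an orthonormal sub-dictionary. All function names are hypothetical.

```python
import numpy as np

def kmeans(F: np.ndarray, K: int, n_iter: int = 20, seed: int = 0):
    """Plain Lloyd K-means on the rows of F; returns (labels, centroids)."""
    rng = np.random.default_rng(seed)
    C = F[rng.choice(len(F), size=K, replace=False)].astype(float)
    for _ in range(max(n_iter, 1)):
        d = ((F[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)  # squared distances
        labels = d.argmin(axis=1)
        for k in range(K):
            if np.any(labels == k):        # keep the old centroid if a cluster empties
                C[k] = F[labels == k].mean(axis=0)
    return labels, C

def pca_subdictionaries(F: np.ndarray, labels: np.ndarray, K: int):
    """One orthonormal PCA basis per cluster (columns sorted by decreasing variance)."""
    subdicts = []
    for k in range(K):
        Fk = F[labels == k]
        if len(Fk) == 0:                   # empty cluster: fall back to the identity
            subdicts.append(np.eye(F.shape[1]))
            continue
        Fk = Fk - Fk.mean(axis=0)
        _, V = np.linalg.eigh(Fk.T @ Fk / len(Fk))  # eigenvalues in ascending order
        subdicts.append(V[:, ::-1])
    return subdicts

def select_subdictionary(f: np.ndarray, centroids: np.ndarray, subdicts):
    """Pick the sub-dictionary whose cluster centroid is closest to feature f."""
    k = int(((centroids - f) ** 2).sum(axis=1).argmin())
    return subdicts[k]
```

Because each sub-dictionary is orthonormal, coding a patch against its selected sub-dictionary reduces to a matrix-vector product, which is one reason PCA-based compact dictionaries are cheap to use.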

3.2. The Joint Priors

3.2.1. The Nonlocal Self-Similarity Prior

Remote sensing images contain many repetitive structures; that is, for a given image patch, similar patches can be found elsewhere in the image. We illustrate the nonlocal self-similarity of remote sensing images with the “airplane” image in Figure 1: the red patch is the target patch, and similar patches, such as the blue, green, and black patches, can be found elsewhere in the image. Hence, the nonlocal self-similarity of remote sensing images can guide SR reconstruction. According to [31], sparse vectors also exhibit nonlocal self-similarity. Thus, we exploit the nonlocal self-similarity of the sparse vectors to guide SR reconstruction, and formulate the nonlocal prior as:

$$R_{NL}(\boldsymbol{\alpha}) = \|\boldsymbol{\alpha} - \boldsymbol{\beta}\|_1 = \sum_{i} \|\boldsymbol{\alpha}_i - \boldsymbol{\beta}_i\|_1, \tag{5}$$

where $\boldsymbol{\alpha} = \{\boldsymbol{\alpha}_i\}$ collects the sparse vectors of all patches, $\boldsymbol{\beta} = \{\boldsymbol{\beta}_i\}$, and $\boldsymbol{\beta}_i$ is the estimate of $\boldsymbol{\alpha}_i$ obtained by exploiting the nonlocal self-similarity of $\boldsymbol{\alpha}_i$.

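Intuitively, the more similar two patches are, the larger their similarity weight should be, which can be achieved by Gaussian weighting. Thus, we estimate $\boldsymbol{\beta}_i$ as a Gaussian-weighted average of the nonlocal similar sparse vectors. For each patch $\hat{\mathbf{x}}_i$ of the current HR estimate, $\boldsymbol{\beta}_i$ is computed as:

$$\boldsymbol{\beta}_i = \sum_{s \in \Omega_i} w_{i,s}\,\boldsymbol{\alpha}_{i,s}, \qquad w_{i,s} = \frac{\exp\left(-\|\hat{\mathbf{x}}_i - \hat{\mathbf{x}}_{i,s}\|_2^2 / h\right)}{\sum_{s' \in \Omega_i} \exp\left(-\|\hat{\mathbf{x}}_i - \hat{\mathbf{x}}_{i,s'}\|_2^2 / h\right)}, \tag{6}$$

where $\Omega_i$ is the collection of similar patches of $\hat{\mathbf{x}}_i$, $\hat{\mathbf{x}}_{i,s}$ denotes the $s$-th similar patch of $\hat{\mathbf{x}}_i$, $h$ is a constant that controls the degree of decay, and $\boldsymbol{\alpha}_{i,s}$ represents the $s$-th similar vector of $\boldsymbol{\alpha}_i$, i.e., the sparse vector of $\hat{\mathbf{x}}_{i,s}$.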
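The Gaussian-weighted nonlocal estimate of a patch's sparse vector (written here as $\boldsymbol{\beta}_i$) can be sketched as follows. For simplicity, the sketch searches all patches instead of a local search window, counts the target patch itself among its similar patches, and uses a hypothetical fixed number of similar patches; these are assumptions rather than settings reported in the text.

```python
import numpy as np

def nonlocal_estimate(patches: np.ndarray, codes: np.ndarray, i: int,
                      n_similar: int = 10, h: float = 10.0) -> np.ndarray:
    """Estimate beta_i from the sparse vectors of the patches most similar to patch i.

    patches: (n, d) array of current HR patch estimates, one patch per row
    codes:   (n, m) array of the corresponding sparse vectors alpha
    h:       decay constant of the Gaussian weights
    """
    d2 = ((patches - patches[i]) ** 2).sum(axis=1)   # squared Euclidean distances
    idx = np.argsort(d2)[:n_similar]                  # includes patch i (distance 0)
    w = np.exp(-d2[idx] / h)
    w /= w.sum()                                      # normalized Gaussian weights
    return w @ codes[idx]                             # weighted average of the codes
```

A larger `h` flattens the weights toward a plain average, whereas a smaller `h` concentrates the estimate on the closest matches.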

3.2.2. The Local Structure Prior

However, the nonlocal prior alone cannot sufficiently constrain the local structures of remote sensing images. Therefore, we propose a local prior as a complementary prior. Several methods [7,22,40] exploit local features of images to constrain the solution of the SR problem. JIDM [7] and SRSC [22] employed local image patches as local features. K-SVD [40] ensures that the mean square error (MSE) never increases from one iteration to the next, which constrains the local structures. However, these methods do not sufficiently preserve edge energy while taking the relationship between adjacent pixels into account. To address this issue, we use the local gradient of the image to preserve local structures during SR reconstruction. Image gradients are sensitive to edges: large gradients correspond to sharp edges, whereas small gradients correspond to smooth regions. The objective function based on the local gradient of the image can be expressed as:

$$\min_{\mathbf{s}} \sum_{p=1}^{Q} \left[ (s_p - z_p)^2 + \mu \left( a_{h,p} (\partial_h \mathbf{s})_p^2 + a_{v,p} (\partial_v \mathbf{s})_p^2 \right) \right], \tag{7}$$

where $p$ is the pixel location in the image, $\mathbf{z}$ denotes the clean image written as a vector of length $Q$, $\mathbf{s}$ represents the smoothed version of $\mathbf{z}$, $Q$ is the number of pixels in the image, $\mathbf{a}_h$ and $\mathbf{a}_v$ are weight vectors with entries $a_{h,p}$ and $a_{v,p}$, and $(\partial_h \mathbf{s})_p^2$ and $(\partial_v \mathbf{s})_p^2$ are the squared gradients along the horizontal and vertical directions at the $p$-th position of $\mathbf{s}$, respectively. In Equation (7), the first term is the data term, which keeps $\mathbf{s}$ close to $\mathbf{z}$; the second term is a regularization term that makes the gradient of $\mathbf{s}$ as small as possible (except where $\mathbf{z}$ has significant gradients) and constrains the edge energy; and the parameter $\mu$ balances the two terms.

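Using matrix notation, Equation (7) can be rewritten as:

$$\min_{\mathbf{s}} (\mathbf{s} - \mathbf{z})^{T}(\mathbf{s} - \mathbf{z}) + \mu \left( \mathbf{s}^{T}\mathbf{D}_h^{T}\mathbf{A}_h\mathbf{D}_h\mathbf{s} + \mathbf{s}^{T}\mathbf{D}_v^{T}\mathbf{A}_v\mathbf{D}_v\mathbf{s} \right), \tag{8}$$

where $\mathbf{A}_h = \mathrm{diag}(\mathbf{a}_h)$, $\mathbf{A}_v = \mathrm{diag}(\mathbf{a}_v)$, and $\mathbf{D}_h$ and $\mathbf{D}_v$ are the discrete difference operators along the h and v directions, respectively, which are defined as follows: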

where c and r are the column and row numbers of and , respectively, , and is the value of r in the case of . Minimizing Equation (8), we can acquire the following formula:

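$$\mathbf{s} = \left( \mathbf{I} + \mu \mathbf{G} \right)^{-1} \mathbf{z} = \mathbf{E}\mathbf{z}, \tag{10}$$

where $\mathbf{G} = \mathbf{D}_h^{T}\mathbf{A}_h\mathbf{D}_h + \mathbf{D}_v^{T}\mathbf{A}_v\mathbf{D}_v$, $\mathbf{I}$ denotes an identity matrix, and we call $\mathbf{E} = (\mathbf{I} + \mu\mathbf{G})^{-1}$ the local structure filter. Inspired by [41], we calculate the weight vectors $\mathbf{a}_h$ and $\mathbf{a}_v$ by: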

where is a constant for mathematical stability, and . Since is unknown, and are estimated by exploiting the image . The calculation of the local structure filter is summarized in Algorithm 1.

| Algorithm 1 The calculation of the local structure filter E. |

| Input: Z |

| Output: E |

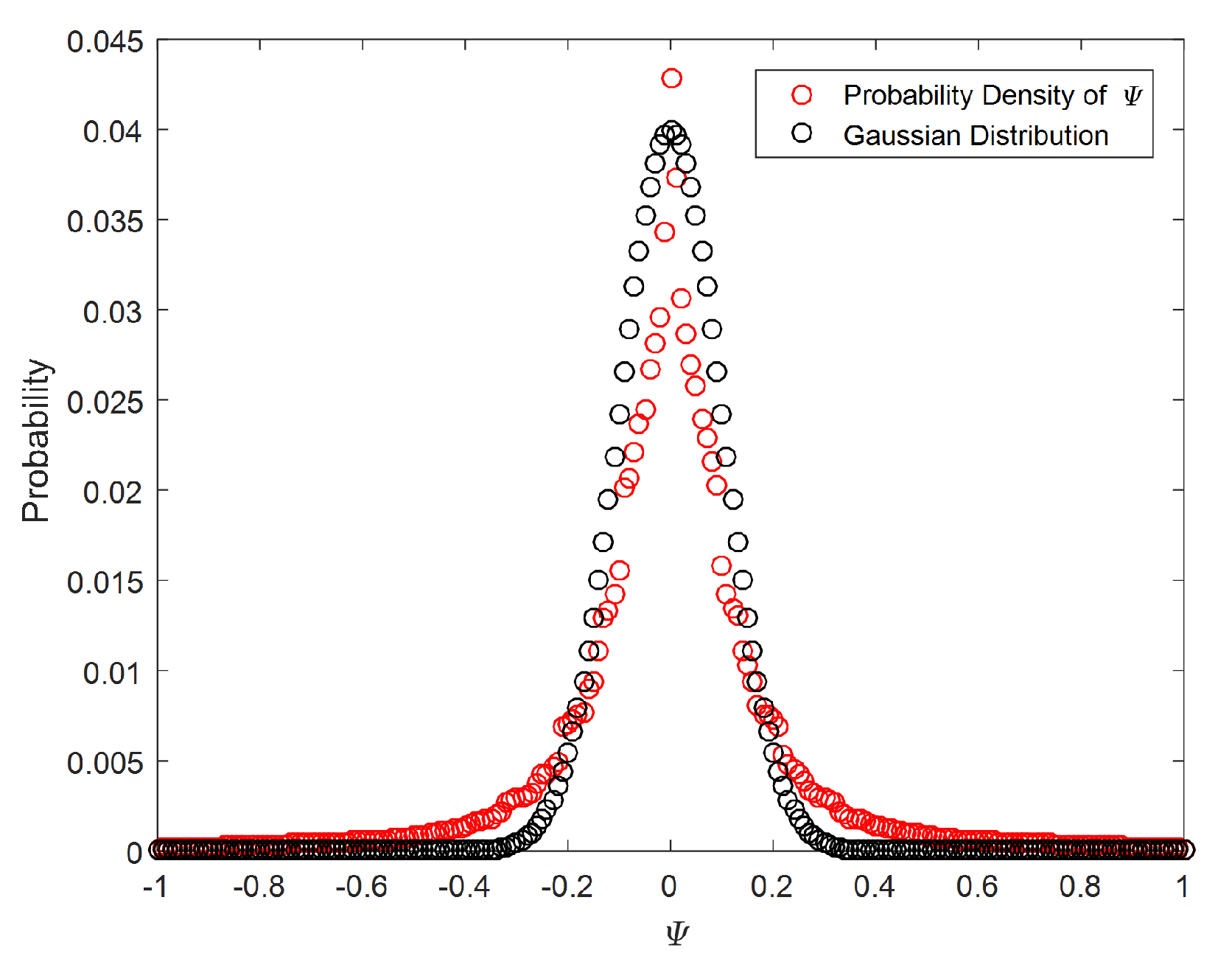

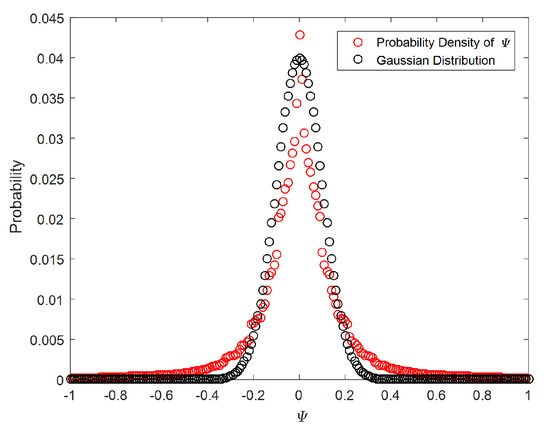
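The following NumPy sketch computes a local structure filter of the form $(\mathbf{I} + \mu\mathbf{G})^{-1}$ obtained by minimizing Equation (8), with $\mathbf{G}$ built from weighted difference operators, for a small image. Because the difference-operator definitions and the exact weight formula are not reproduced in this text, it assumes forward differences that are zero at the last row and column, and weights $a = 1/(|\partial z| + \varepsilon)$ computed from $\mathbf{z}$, in the spirit of weighted-least-squares smoothing [41]; `mu`, `eps` and the function names are illustrative. It forms the filter densely, which is only feasible for tiny images; a practical implementation would solve the sparse system $(\mathbf{I} + \mu\mathbf{G})\mathbf{s} = \mathbf{z}$ instead.

```python
import numpy as np

def diff_ops(rows: int, cols: int):
    """Forward-difference matrices D_h, D_v acting on a row-major vectorized image.

    Differences that would cross the image border are set to zero (assumption).
    """
    Q = rows * cols
    Dh = np.zeros((Q, Q))
    Dv = np.zeros((Q, Q))
    for r in range(rows):
        for c in range(cols):
            p = r * cols + c
            if c + 1 < cols:                 # horizontal neighbour to the right
                Dh[p, p], Dh[p, p + 1] = -1.0, 1.0
            if r + 1 < rows:                 # vertical neighbour below
                Dv[p, p], Dv[p, p + cols] = -1.0, 1.0
    return Dh, Dv

def local_structure_filter(z: np.ndarray, mu: float = 1.0, eps: float = 1e-4) -> np.ndarray:
    """Return E = (I + mu*G)^{-1}, G = Dh^T A_h Dh + Dv^T A_v Dv, weights from z."""
    rows, cols = z.shape
    Dh, Dv = diff_ops(rows, cols)
    zv = z.reshape(-1).astype(float)
    a_h = 1.0 / (np.abs(Dh @ zv) + eps)      # small weights across strong edges
    a_v = 1.0 / (np.abs(Dv @ zv) + eps)
    G = Dh.T @ np.diag(a_h) @ Dh + Dv.T @ np.diag(a_v) @ Dv
    return np.linalg.inv(np.eye(rows * cols) + mu * G)
```

Usage: `s = (local_structure_filter(z) @ z.ravel()).reshape(z.shape)`. Flat regions receive large weights and are smoothed strongly, while pixels across a strong edge are only weakly coupled, so a step edge survives filtering largely intact.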

Because the proposed local structure filter takes the local gradient of the image into account, it captures strong local correlation. Moreover, remote sensing images usually depict terrain and targets, such as airplanes, harbors, parking lots, rivers, and mountains, which exhibit local correlation. To describe this local correlation, we construct a local prior for remote sensing images. To explore this local property, we perform a statistical analysis on a large number of remote sensing images. Since can describe the local continuity of the image, we analyze its statistical properties. Taking the image “Building” as an example, the distribution curves are shown in Figure 2. The probability density is close to a Gaussian distribution (note that each entry is normalized to [−1, 1] in this test). According to these results, we obtain the following formula:

where is the probability density of , and denotes a standard deviation. Then the local constraint can be constructed according to in the MAP estimate, and the mathematical expression is as follows:

Figure 2.

The statistic property of .
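The step from a Gaussian density to a quadratic constraint can be illustrated numerically. For a zero-mean Gaussian with standard deviation $\sigma$, the negative log-density of a vector $\mathbf{r}$ with $Q$ entries is $\|\mathbf{r}\|_2^2/(2\sigma^2) + Q\log(\sigma\sqrt{2\pi})$; the second part does not depend on $\mathbf{r}$, so MAP estimation keeps only the squared $\ell_2$ term. In the sketch, `r` is a stand-in for the statistic analysed in Figure 2 (which is not identified in this text), $\sigma$ is fitted by maximum likelihood, and scaling by the largest magnitude is one possible way to map entries to [−1, 1], not necessarily the one used for Figure 2.

```python
import math
import numpy as np

def normalize_unit(r: np.ndarray) -> np.ndarray:
    """Map entries to [-1, 1] by dividing by the largest magnitude (r must be nonzero)."""
    return r / np.max(np.abs(r))

def fit_sigma(r: np.ndarray) -> float:
    """Maximum-likelihood standard deviation of a zero-mean Gaussian."""
    return float(np.sqrt(np.mean(r ** 2)))

def gaussian_nll(r: np.ndarray, sigma: float) -> float:
    """-log p(r) for i.i.d. zero-mean Gaussian entries with standard deviation sigma."""
    q = r.size
    quadratic = np.sum(r ** 2) / (2.0 * sigma ** 2)      # the part MAP estimation keeps
    constant = q * math.log(sigma * math.sqrt(2.0 * math.pi))
    return float(quadratic + constant)
```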

3.3. Regularization Parameter Settings

Properly chosen parameters improve SR reconstruction performance, so we adopt an adaptive parameter selection scheme. Since the regularization parameters are related to the noise level [23], in this paper both $\lambda$ and $\eta$ are selected adaptively according to the noise level, which can be estimated by [42]. Let , and assume that and are independent. The MAP estimation considering both and is a multi-prior estimation. Multi-prior estimation methods have been widely used in image processing [43,44]. The MAP estimation of and can be expressed as:

Since is a pre-calculated matrix and , is only related to . We can obtain .

and are generally characterized by the Gaussian distribution [43,44]:

where and are assumed to be independent according to [31]. Since is pre-calculated and , .

can be obtained according to [31,45]:

where is the g-th entry of , and represents a standard deviation of the error corresponding to the Laplace distribution.

Substituting Equations (13), (16), (17) and (18) into Equation (15), and ignoring constant terms, we can get:

where denotes the g-th entry of , and represents the g-th entry of .

Comparing Equation (4) with Equation (19), we obtain the parameters $\lambda$ and $\eta$. Furthermore, to ensure numerical stability, we add additional parameters and in the calculation of $\lambda$ and $\eta$, respectively.

where denotes the evaluated noise level from during each iteration.
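The detailed parameter formulas are not reproduced in this text, so the following is only a sketch under assumptions borrowed from the closely related NCSR model [31]: a Gaussian likelihood with noise standard deviation $\sigma_n$, a Laplacian prior on the entries $\theta_g$ of $\boldsymbol{\alpha} - \boldsymbol{\beta}$ with standard deviations $\sigma_g$, and a zero-mean Gaussian local prior with standard deviation $\sigma_l$. Multiplying the negative log-posterior by $2\sigma_n^2$ then gives $\lambda_g = 2\sqrt{2}\,\sigma_n^2/\sigma_g$ and $\eta = \sigma_n^2/\sigma_l^2$, to which stabilizing constants `eps1` and `eps2` (hypothetical names and values) are added. The noise estimator is Donoho's median-absolute-deviation rule on Haar diagonal coefficients, a swapped-in technique that is not necessarily the method of [42].

```python
import numpy as np

def estimate_noise_mad(img: np.ndarray) -> float:
    """Noise estimate median(|HH|) / 0.6745 on one-level Haar diagonal coefficients.

    The orthonormal Haar transform keeps the standard deviation of white noise,
    and 0.6745 is the median of |N(0, 1)|.
    """
    h, w = (img.shape[0] // 2) * 2, (img.shape[1] // 2) * 2
    x = np.asarray(img, dtype=float)[:h, :w]
    hh = (x[0::2, 0::2] - x[0::2, 1::2] - x[1::2, 0::2] + x[1::2, 1::2]) / 2.0
    return float(np.median(np.abs(hh)) / 0.6745)

def adaptive_parameters(sigma_n, sigma_g, sigma_l, eps1=1e-6, eps2=1e-6):
    """Assumed NCSR-style rule:
    lambda_g = 2*sqrt(2)*sigma_n^2 / (sigma_g + eps1), eta = sigma_n^2 / (sigma_l^2 + eps2).
    """
    sigma_g = np.asarray(sigma_g, dtype=float)
    lam = 2.0 * np.sqrt(2.0) * sigma_n ** 2 / (sigma_g + eps1)
    eta = sigma_n ** 2 / (sigma_l ** 2 + eps2)
    return lam, eta
```

Under this rule both weights grow with the estimated noise level, so noisier inputs are regularized more strongly, which matches the qualitative behaviour the section describes.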

Finally, we solve the AJC model with the iterative shrinkage algorithm [39]; the details of the solution are given in Algorithm 2.

| Algorithm 2 Details of solution. |

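The steps of Algorithm 2 are not reproduced in this text. As a rough, hypothetical sketch only, an iterative-shrinkage loop for an objective with the structure of Equation (4) can alternate a gradient step on the quadratic terms with a soft-thresholding step centred on $\boldsymbol{\beta}$. The sketch assumes a quadratic local term $\|\mathbf{B}\boldsymbol{\alpha}\|_2^2$ with a hypothetical matrix `B` (for instance, $(\mathbf{I}-\mathbf{E})\boldsymbol{\Phi}$), an explicit matrix `A` standing for $\mathbf{D}\mathbf{H}\boldsymbol{\Phi}$, a scalar $\lambda$, and a fixed $\boldsymbol{\beta}$; in a full solver, $\boldsymbol{\beta}$, the dictionary and the parameters would be refreshed in outer iterations (Section 4.1 reports J = 160 inner and L = 5 outer iterations).

```python
import numpy as np

def soft_threshold(v: np.ndarray, t: float) -> np.ndarray:
    """Element-wise shrinkage, the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ajc_shrinkage(A, B, y, beta, lam, eta, n_iter=300):
    """Minimize ||y - A a||^2 + lam*||a - beta||_1 + eta*||B a||^2 by proximal gradient."""
    # Lipschitz constant of the gradient of the two quadratic terms.
    lc = 2.0 * np.linalg.norm(A, 2) ** 2 + 2.0 * eta * np.linalg.norm(B, 2) ** 2
    a = np.array(beta, dtype=float)          # start from the nonlocal estimate
    for _ in range(n_iter):
        grad = 2.0 * A.T @ (A @ a - y) + 2.0 * eta * B.T @ (B @ a)
        v = a - grad / lc
        a = beta + soft_threshold(v - beta, lam / lc)   # prox of lam*||. - beta||_1
    return a
```

Shrinking toward $\boldsymbol{\beta}$ rather than toward zero is what lets the nonlocal estimate pull each sparse vector toward the consensus of its similar patches.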

4. Experimental Results

4.1. Experimental Setting

In this subsection, we describe the datasets and parameter settings in detail. The test images are shown in Figure 3. They come from three remote sensing image datasets: UCMLUD [46], USC-SIPI-aerials [47] and NWPU-RESISC45 [48]. UCMLUD contains 21 classes of remote sensing images, and each class contains 100 images of size 256 × 256 pixels. The spatial resolution of this dataset is 0.3 m/pixel. The spatial resolution of USC-SIPI-aerials is 1 m/pixel. NWPU-RESISC45 contains more categories than UCMLUD: it has 45 categories, and each category has 700 images of size 256 × 256 pixels. The spatial resolution of this dataset ranges from 0.2 m/pixel to 30 m/pixel.

Figure 3.

Experimental test images, including the following images: aerial, airplane, residential, parking-lot, terrace, farmland, mountain, industrial-area, river, building, meadow, island, runway, storage-tank, harbor.

The basic parameter settings of the proposed AJC method are as follows: the number of inner iterations J is 160, the number of outer iterations L is 5, is set to , and . In addition, to generate the test LR images, each HR image is first blurred by a Gaussian blur kernel with size and standard deviation 1.6, and the blurred image is then downsampled by a scale factor of 3 [7,49].

4.2. Parameters Setting

The patch size and the number of clusters (K) have an important impact on SR performance. Too few clusters blur the differences between classes, whereas too many clusters make the sub-dictionaries less representative and reliable. Therefore, we determine an optimal K by the method of [30]: the training patches are first divided into a predefined number of clusters, and clusters containing only a few patches are then merged into their nearest neighboring clusters. Table 1 reports the impact of the patch size and the predefined number of clusters on the average peak signal-to-noise ratio (PSNR) of all test images. The average PSNR varies with the patch size, and a patch size of 5 gives the best average PSNR. For the same patch size, the PSNRs are close once the predefined number of clusters exceeds 10, which shows that the cluster-number selection of [30] is robust. Furthermore, more clusters require more running time. To obtain a high PSNR in reasonable time, we set the patch size to 5 and the predefined number of clusters to 50.
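The cluster-merging rule described above can be sketched as follows. The minimum cluster size and the use of centroid-to-centroid distance to define the nearest neighboring cluster are assumptions; [30] may define both differently. The function name is hypothetical.

```python
import numpy as np

def merge_small_clusters(labels: np.ndarray, centroids: np.ndarray, min_size: int):
    """Merge clusters with fewer than min_size members into the nearest large cluster.

    Returns relabelled assignments in 0..K_eff-1 and the effective number of clusters K_eff.
    """
    sizes = np.bincount(labels, minlength=len(centroids))
    keep = np.flatnonzero(sizes >= min_size)       # ids of sufficiently large clusters
    if keep.size == 0:                             # nothing is large enough: one cluster
        return np.zeros_like(labels), 1
    new_labels = labels.copy()
    for k in np.flatnonzero(sizes < min_size):
        d = ((centroids[keep] - centroids[k]) ** 2).sum(axis=1)
        new_labels[labels == k] = keep[d.argmin()]  # nearest large cluster centroid
    # keep is sorted, so searchsorted maps surviving ids to 0..K_eff-1.
    return np.searchsorted(keep, new_labels), int(keep.size)
```

Because merging only ever reduces the number of clusters, the effective K stays close to the data's natural grouping once the predefined number is large enough, which is consistent with the plateau in PSNR reported above.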

Table 1.

Average PSNR (Peak Signal-to-noise Ratio) of Test Images with Different Parameters (Scale Factor = 3, Noise = 0).

4.3. Comparison with Different Traditional Methods

To demonstrate the SR performance of the proposed AJC algorithm, we compare it with other SR methods, including Bicubic, SRSC [22], ASDS [30], MSEPLL [43], NARM [50] and LANR-NLM [51]. PSNR, the structural similarity index (SSIM) [52] and the erreur relative globale adimensionnelle de synthèse (ERGAS) [53] are used as objective evaluation indices. Higher PSNR/SSIM and lower ERGAS indicate better reconstruction quality.
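For reference, PSNR and a commonly used form of ERGAS can be computed as below. The 8-bit peak value of 255 and the resolution ratio $h/l = 1/\text{scale}$ are assumptions (ERGAS definitions vary in how this ratio is set), and SSIM is omitted because it requires the windowed statistics of [52].

```python
import numpy as np

def psnr(ref: np.ndarray, est: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB (infinite if the images are identical)."""
    mse = np.mean((ref.astype(float) - est.astype(float)) ** 2)
    return float(10.0 * np.log10(peak ** 2 / mse))

def ergas(ref: np.ndarray, est: np.ndarray, scale: int = 3) -> float:
    """ERGAS = 100 * (h/l) * sqrt(mean_k (RMSE_k / mean_k)^2), bands on the last axis."""
    ref = ref.astype(float)
    est = est.astype(float)
    if ref.ndim == 2:                       # treat a grayscale image as one band
        ref, est = ref[..., None], est[..., None]
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=(0, 1)))   # per-band RMSE
    mu = np.mean(ref, axis=(0, 1))                            # per-band mean of the reference
    return float(100.0 / scale * np.sqrt(np.mean((rmse / mu) ** 2)))
```

For example, a uniform error of one gray level on an image with mean 100 gives a PSNR of about 48.13 dB and, with a scale factor of 3, an ERGAS of 1/3.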

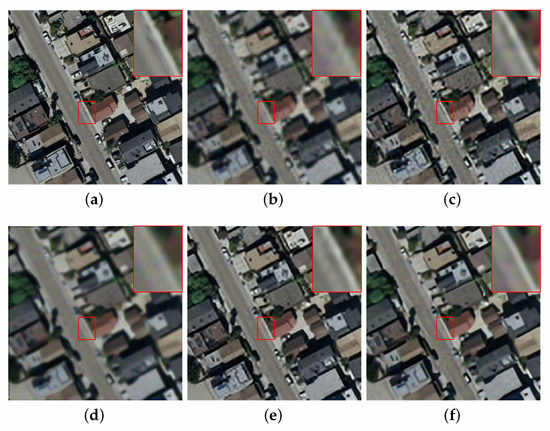

4.3.1. Noiseless Remote Sensing Images

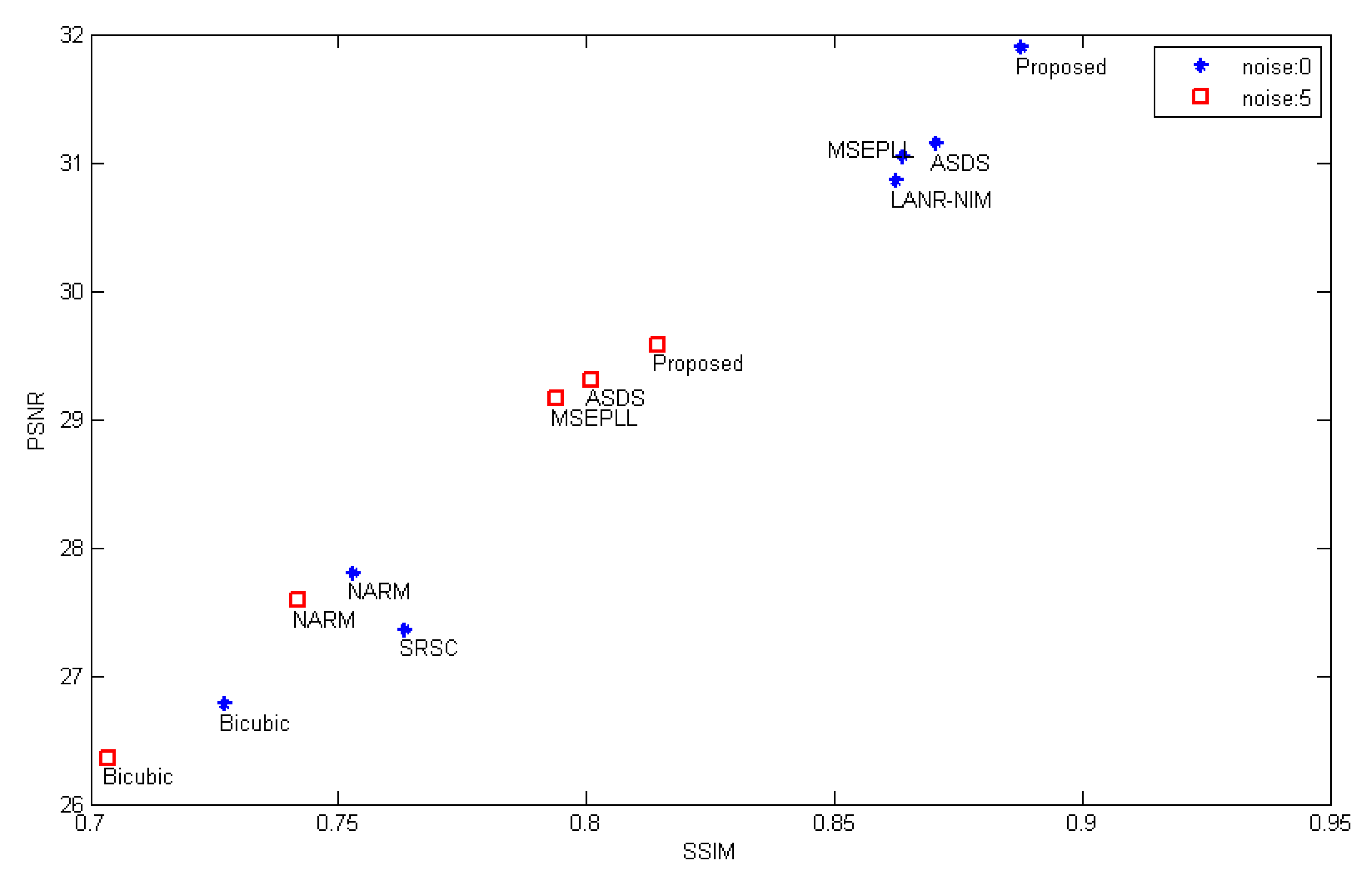

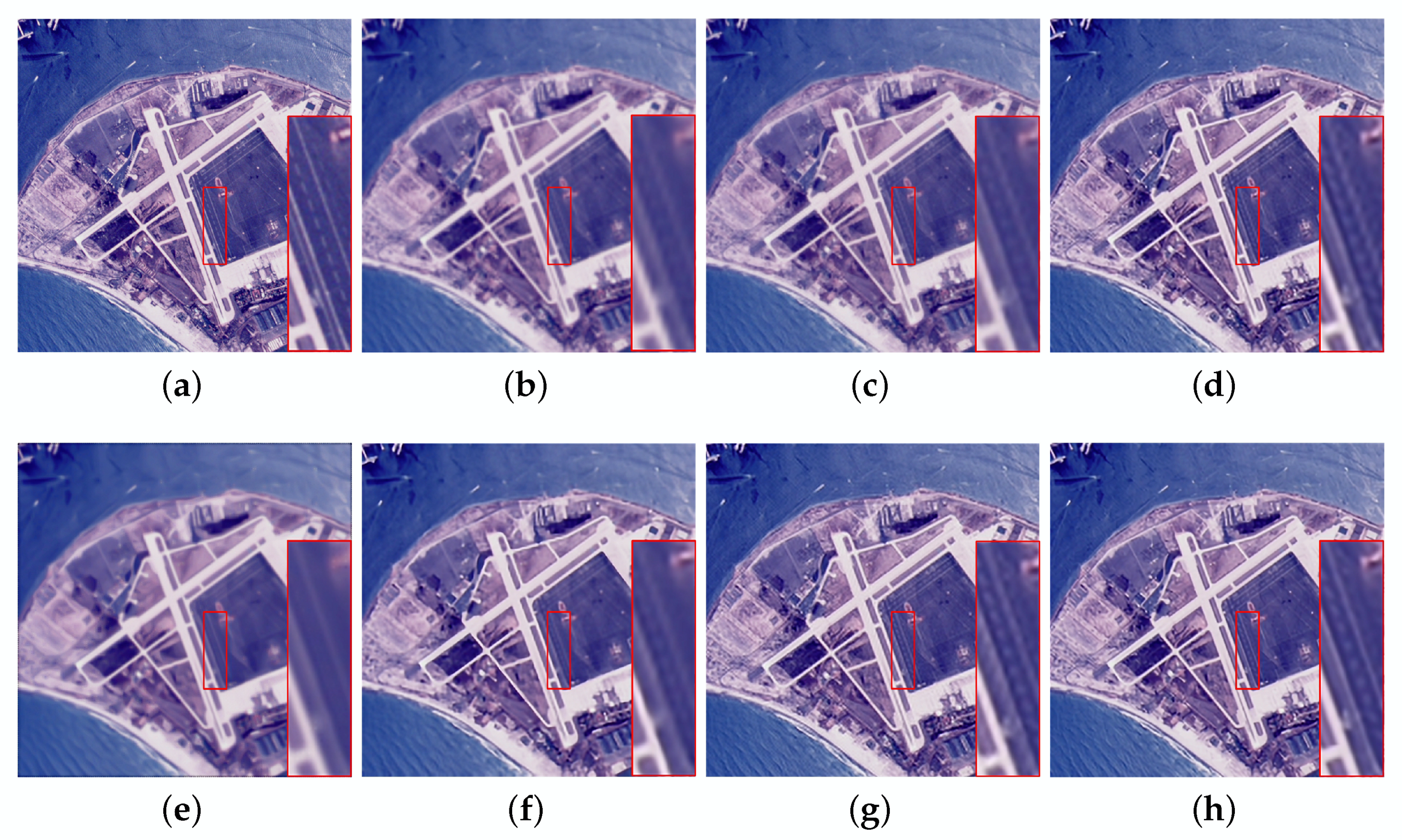

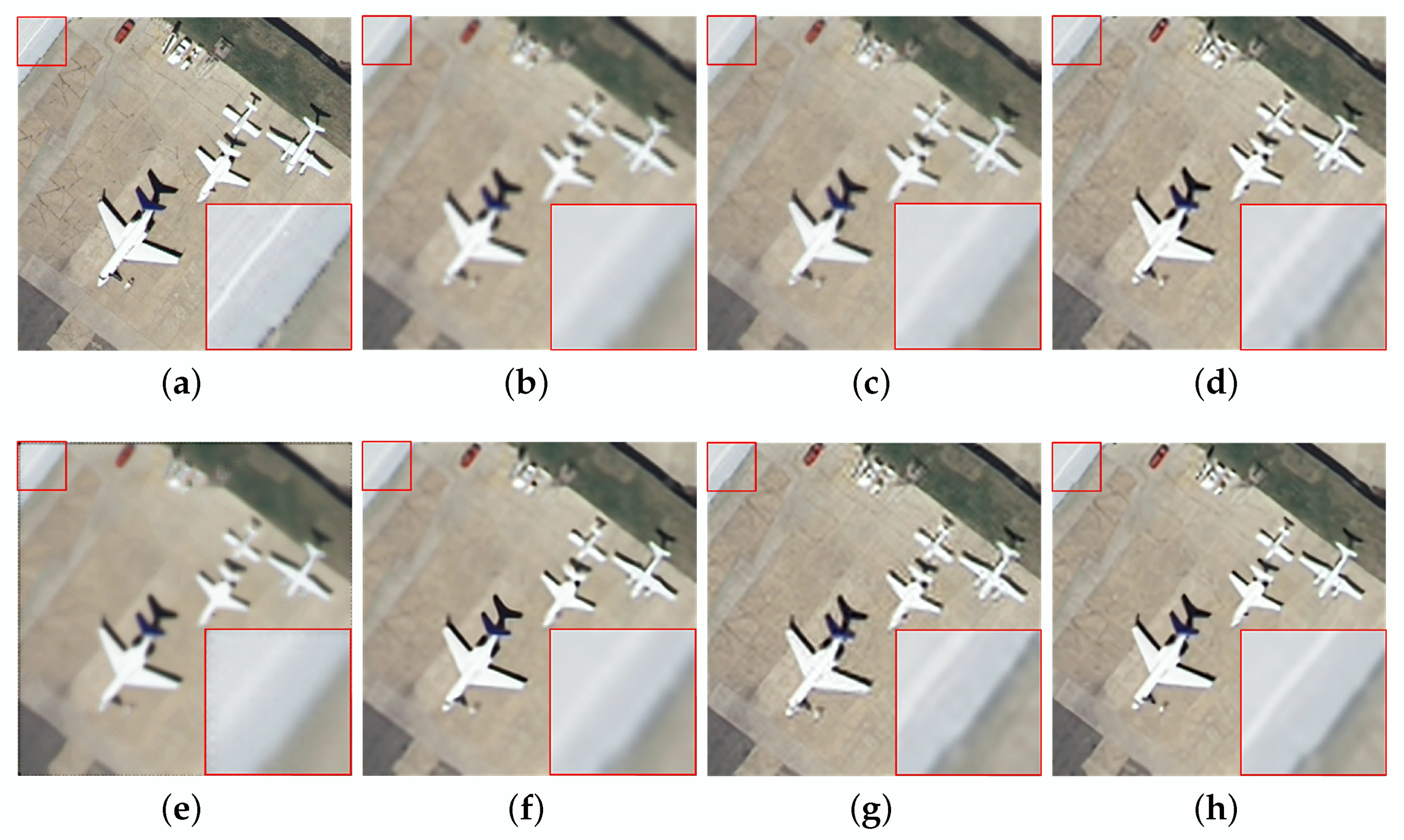

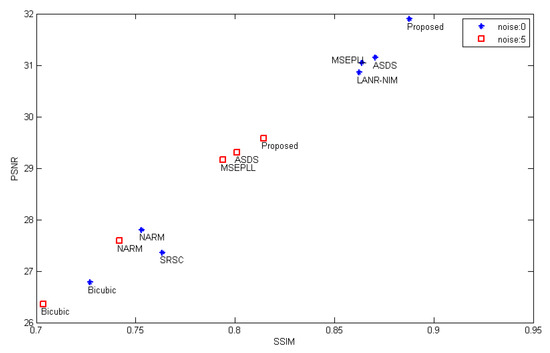

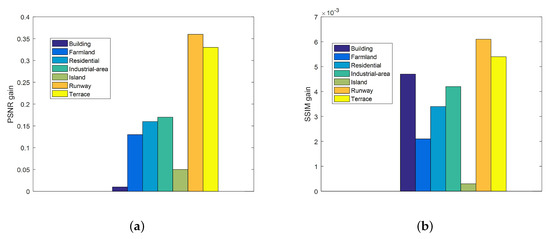

In the noiseless case, the reconstruction results of different methods are shown in Table 2. For “Island”, the LANR-NLM method achieves better objective evaluation indices. However, over all the test images, our algorithm achieves superior objective indices: its average PSNR, SSIM and ERGAS are 31.90 dB, 0.8876 and 2.2912, respectively. In addition, Figure 4 plots the results provided in Table 2, from which it can be observed that our method achieves better objective indices than the other methods. To compare visual quality, Figure 5 and Figure 6 show the reconstructed images. Bicubic interpolation performs worst. NARM produces overly smooth images. As shown in Figure 5f and Figure 6f, the MSEPLL method also smooths out many image details. As shown in Figure 5, compared with the other SR methods, the proposed method reconstructs the HR image with fewer artifacts and clearer edges.

Table 2.

PSNR, SSIM (Structural Similarity Index) and ERGAS (Erreur Relative Globale Adimensionnelle De synthèse) Results of Reconstruction for Test Images (Scale Factor = 3, Noise = 0).

Figure 4.

The average objective indices for the test images in the case of noise = 0 and noise = 5.

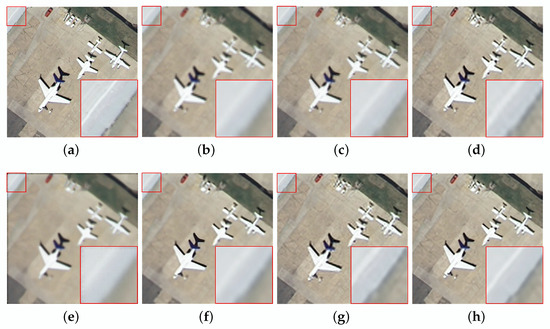

Figure 5.

Visual comparisons of the proposed method and other methods on the aerial image (noise = 0). (a) original image. (b) Bicubic interpolation (PSNR:24.67, SSIM:0.6537, ERGAS:3.9962). (c) SRSC (PSNR:25.10, SSIM:0.6925, ERGAS:3.8693). (d) ASDS (PSNR:29.24, SSIM:0.8242, ERGAS:2.3713). (e) NARM (PSNR:25.97, SSIM:0.6757, ERGAS:3.6780). (f) MSEPLL (PSNR:29.09, SSIM:0.8138, ERGAS:2.4803). (g) LANR-NLM (PSNR:29.03, SSIM:0.8220, ERGAS:2.4152). (h) Ours (PSNR:29.61, SSIM:0.8355, ERGAS:2.2616).

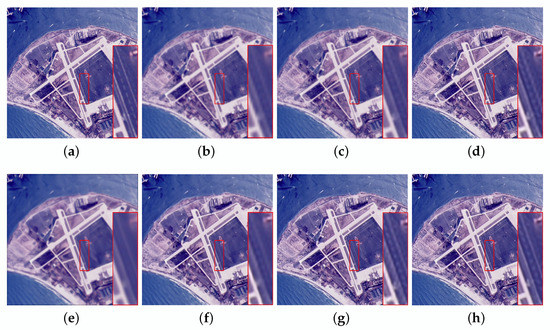

Figure 6.

Visual comparisons of the proposed method and other methods on the airplane image (noise = 0). (a) original image. (b) Bicubic interpolation (PSNR:26.27, SSIM:0.7989, ERGAS:2.6212). (c) SRSC (PSNR:26.95, SSIM:0.8205, ERGAS:2.4746). (d) ASDS (PSNR:30.95, SSIM:0.8949, ERGAS:1.4973). (e) NARM (PSNR:27.20, SSIM:0.8108, ERGAS:2.6506). (f) MSEPLL (PSNR:31.45, SSIM:0.8944, ERGAS:1.5388). (g) LANR-NLM (PSNR:30.69, SSIM:0.8919, ERGAS:1.5706). (h) Ours (PSNR:32.19, SSIM:0.9078, ERGAS:1.3277).

4.3.2. Noisy Remote Sensing Images

To demonstrate the effectiveness of our method in the noisy case, we add noise with a standard deviation of 5 to the degraded image. The results are shown in Table 3.

Table 3.

PSNR/SSIM/ERGAS Results of Reconstruction for Test Images (Scale Factor = 3, Noise = 5).

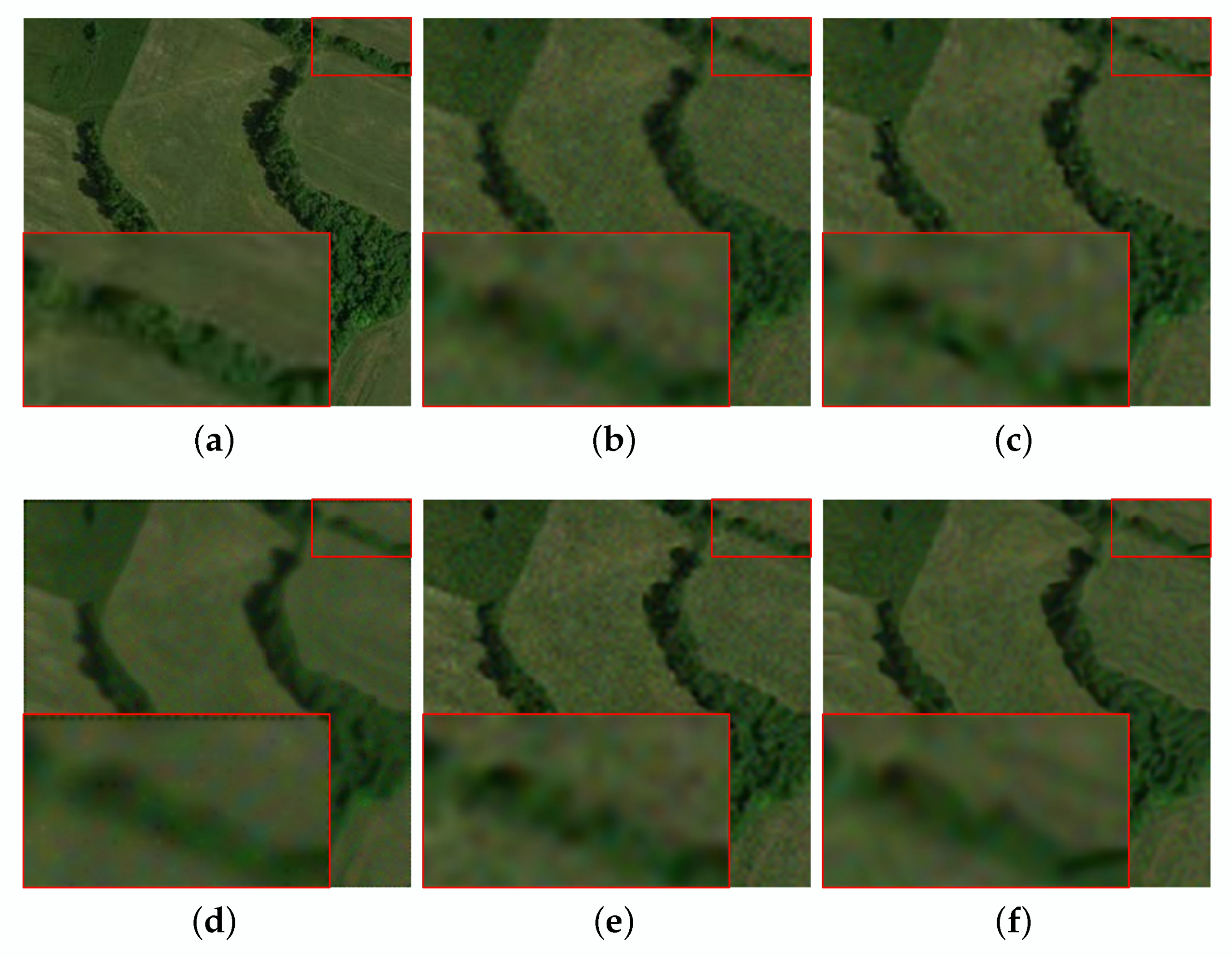

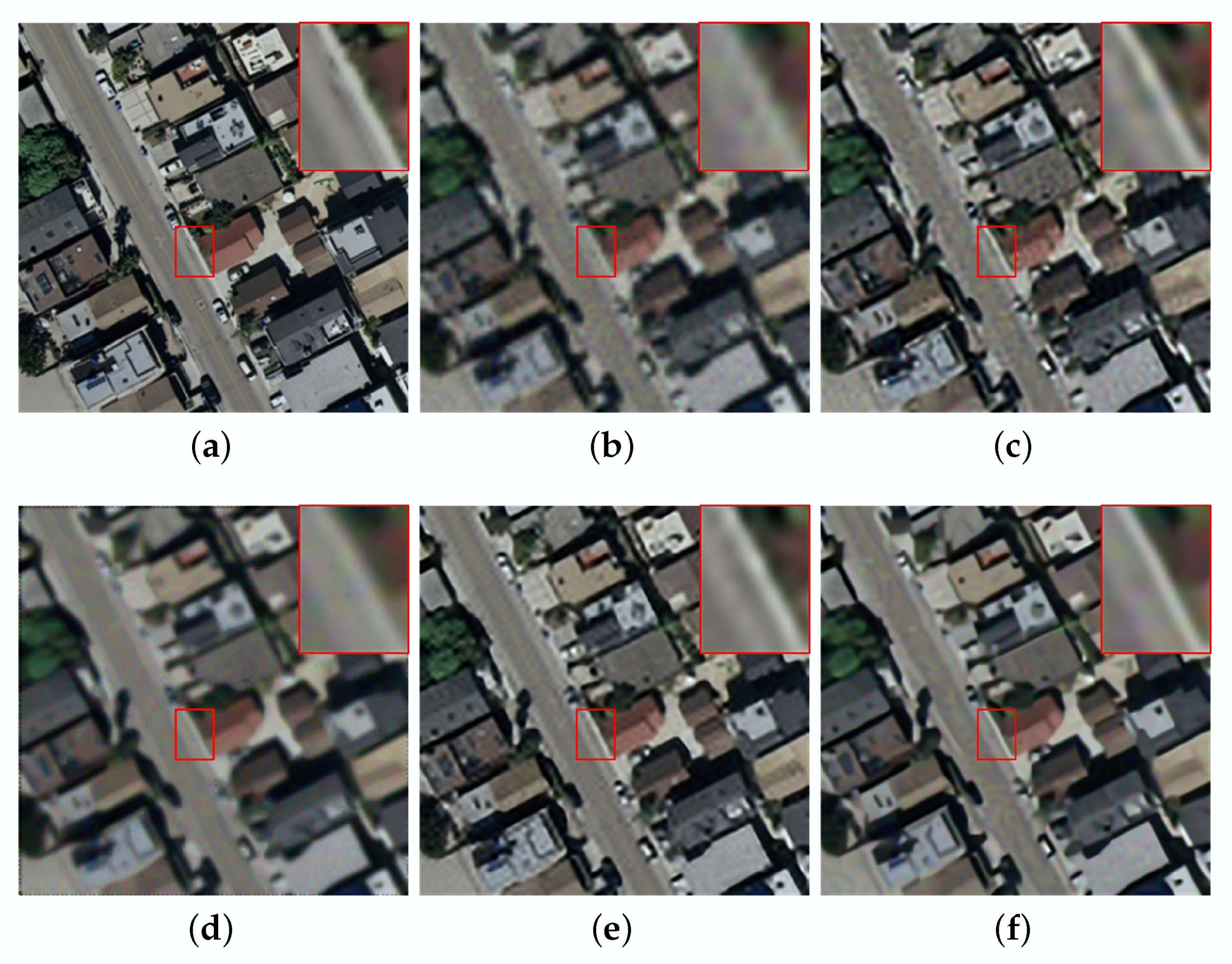

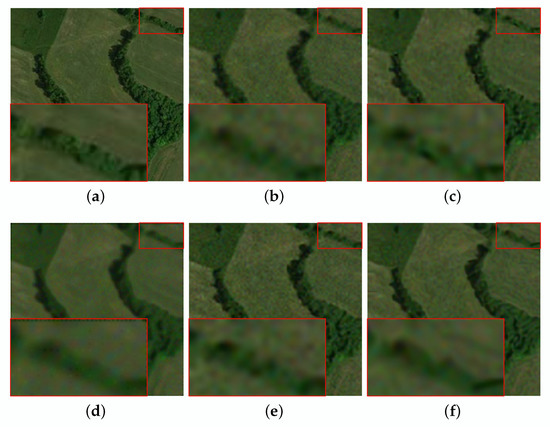

On the “Aerial” image, the ASDS method achieves better SR results than the other methods. However, our algorithm achieves the best average PSNR, SSIM and ERGAS among these SR methods, namely 29.58 dB, 0.8144 and 2.8257, respectively. In addition, to reflect the performance of our method more intuitively, the results provided in Table 3 are also plotted in Figure 4. Furthermore, Figure 7 and Figure 8 show visual comparisons, from which we can see that the proposed method also performs better in suppressing image noise and preserving details and edges.

Figure 7.

Reconstruction of the noisy meadow image by different methods (noise = 5). (a) original image. (b) bicubic interpolation (PSNR:32.19, SSIM:0.7914, ERGAS:2.6744). (c) ASDS (PSNR:33.39, SSIM:0.8274, ERGAS:2.3056). (d) NARM (PSNR:32.56, SSIM:0.7993, ERGAS:2.7643). (e) MSEPLL (PSNR:33.42, SSIM:0.8260, ERGAS:2.2176). (f) ours (PSNR:33.65, SSIM:0.8302, ERGAS:2.2605).

Figure 8.

Reconstruction of the noisy residential image by different methods (noise = 5). (a) original image. (b) bicubic interpolation (PSNR:22.05, SSIM:0.6359, ERGAS:6.6547). (c) ASDS (PSNR:26.16, SSIM:0.7958, ERGAS:4.1301). (d) NARM (PSNR:23.61, SSIM:0.6981, ERGAS:5.6835). (e) MSEPLL (PSNR:26.21, SSIM:0.8071, ERGAS:4.0817). (f) ours (PSNR:26.47, SSIM:0.8209, ERGAS:3.9976).

In summary, the experiments on the test images show that the proposed method achieves the best average SR results in both the noiseless and noisy cases.

4.4. Comparison with Different Deep Learning Methods

For a fair comparison with deep learning methods, we chose the classic deep learning methods SRCNN [32] and SRGAN [35], and LGCnet [54], a recently proposed method for remote sensing images. We retrain and fine-tune their models and then use them for SR reconstruction. The training data come from three remote sensing image datasets: UCMLUD [46], USC-SIPI-aerials [47] and NWPU-RESISC45 [48]. All the training images are first blurred and then downsampled to obtain the LR images. Next, the LR images and their original versions are collected as training pairs to train SRCNN [32], SRGAN [35], and LGCnet [54]. When the training error stops decreasing, we reduce their initial learning rates to fine-tune the models so that they reach their best performance. The results are shown in Table 4. For “Runway”, LGCnet achieves better results. However, over all the test images, our method outperforms SRCNN, SRGAN and LGCnet, with average PSNR/SSIM gains of 0.84 dB/0.0215, 1.64 dB/0.0778 and 0.20 dB/0.0048, respectively. In terms of average ERGAS, our method is 0.1984, 0.0394 and 0.04 better than SRCNN, SRGAN and LGCnet, respectively. To show that our method does not need large amounts of external data, Table 5 lists the training time and the number of training images of each method. Compared with the other methods, the proposed approach needs only one training image and saves a large amount of training time. The experimental results show that our method achieves better SR performance in the noiseless case.

Table 4.

PSNR/SSIM/ERGAS Results for Test Images (Scale Factor = 3, Noise = 0).

Table 5.

Comparison for the number of training images and training time.

4.5. Comparison with Different Methods on Datasets

To further verify the performance of our method, we apply different methods to entire test databases, including the “Airplane” and “Storage-tank” sub-databases of UCMLUD and the “Island” sub-database of NWPU-RESISC45. The results are shown in Table 6. Apart from our approach, ASDS is the best traditional method, and among the deep learning methods LGCnet outperforms SRCNN. For the “Airplane” sub-database, our method outperforms ASDS and LGCnet, with average PSNR/SSIM gains of 0.81 dB/0.0106 and 0.32 dB/0.0026, respectively; it also achieves the best ERGAS (1.7553). For the “Storage-tank” sub-database, the PSNR/SSIM of our algorithm is 0.56 dB/0.0119 higher than that of ASDS, and our ERGAS is 0.1194 better. Compared with LGCnet, our approach obtains a higher PSNR, although LGCnet has better SSIM and ERGAS. For the “Island” sub-database, the PSNR/SSIM of our method is 0.34 dB/0.0046 higher than that of ASDS and 0.08 dB/0.0019 higher than that of LGCnet. These results show that our method generally achieves better reconstruction performance.

Table 6.

PSNR/SSIM/ERGAS Results on Databases (Scale Factor = 3, Noise = 0).

The above experimental analysis shows the overall performance of the proposed method. Specifically, for images that satisfy the joint constraint (i.e., images with repeated structures and many edges), the proposed method performs very well, whereas for images with highly complex textures it is less effective. The proposed approach relies on a local gradient constraint and nonlocal similarity. Because images with repeated structures and many edges satisfy these two properties well, they can be reconstructed with high quality. Thus, in most cases, this approach performs better than many other SR methods. However, for images with highly complex textures, these two properties are not reliable, so reconstructions based on them are less satisfactory. In fact, many SR approaches do not work well under such extreme conditions.

4.6. The Effectiveness of Joint Constraint

Images often contain repetitive structures, i.e., self-similarity, and researchers often exploit the nonlocal self-similarity of images for SR reconstruction. However, nonlocal self-similarity alone is not enough to improve the resolution of the input image. To address this problem, we construct a local constraint from the proposed filter; with the resulting joint constraint, the quality of the reconstructed image is further improved. To demonstrate the performance of the joint constraint, we compare it with the nonlocal constraint alone. The results are shown in Table 7. For all the test images, the joint constraint achieves better objective quality. Specifically, the average PSNR and SSIM gains of the joint constraint over the nonlocal constraint alone are 0.38 dB and 0.0071, respectively, and the average ERGAS of the joint constraint is 0.097 better. These results demonstrate that the complementary joint constraint effectively improves image quality.

Table 7.

PSNR/SSIM/ERGAS Results of Nonlocal and Joint Constraints (Scale Factor = 3, Noise = 0).

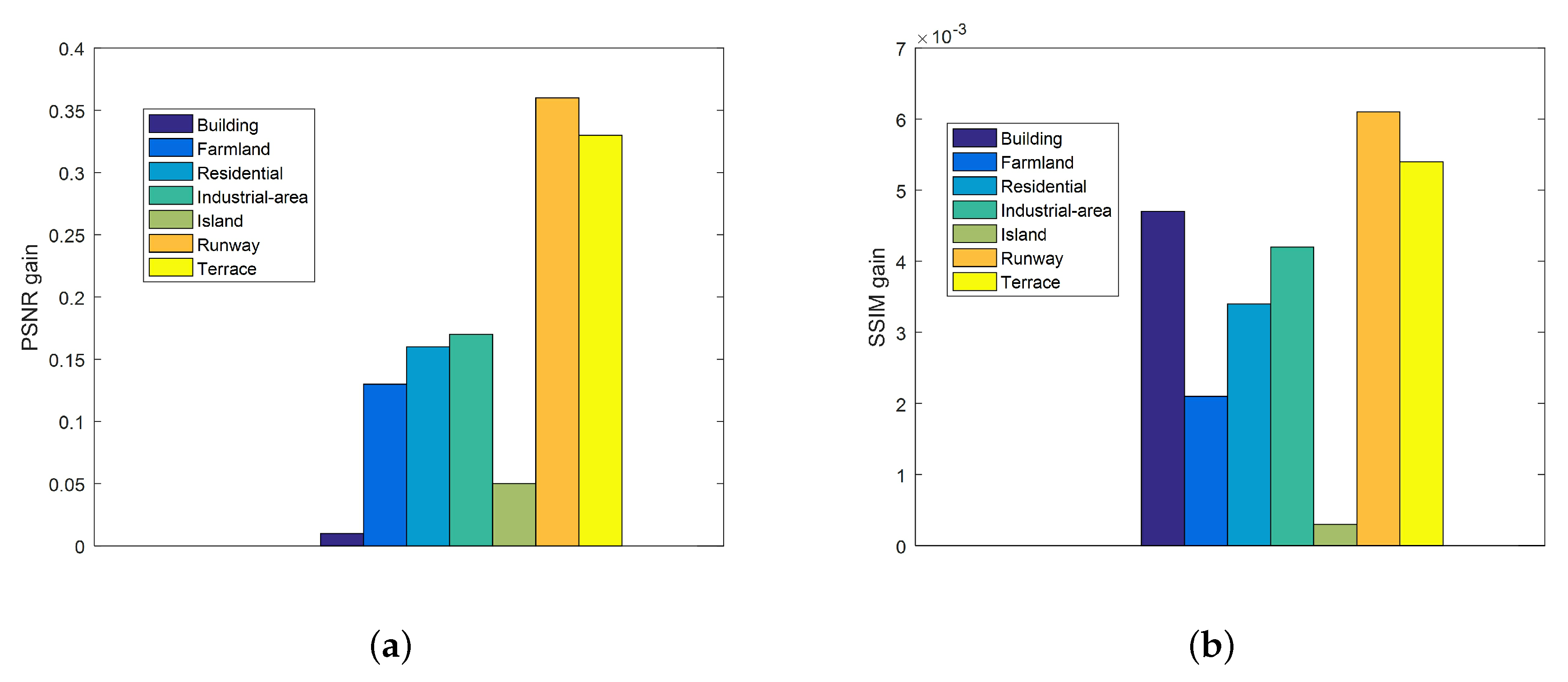
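To make the reported gains concrete, the following Python sketch computes PSNR and ERGAS between a ground-truth HR image and a reconstruction. It is an illustration under stated assumptions, not the evaluation code used for Table 7: it assumes 8-bit intensities (peak value 255), no border cropping, and Wald's ERGAS definition with the resolution ratio h/l taken as 1/scale. The helper names `psnr` and `ergas` are hypothetical. Higher PSNR and lower ERGAS indicate better reconstructions, which is why an ERGAS improvement is a reduction. SSIM is omitted for brevity; scikit-image provides it as `skimage.metrics.structural_similarity`.

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB (assumes intensities in [0, peak])."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


def ergas(reference, estimate, scale):
    """ERGAS (lower is better), assuming Wald's definition with h/l = 1/scale.

    A 2-D array is treated as a single band; multi-band images are
    expected with shape (H, W, bands).
    """
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    if ref.ndim == 2:
        ref, est = ref[..., None], est[..., None]
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=(0, 1)))  # per-band RMSE
    mean = np.mean(ref, axis=(0, 1))                         # per-band mean
    return 100.0 / scale * np.sqrt(np.mean((rmse / mean) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    hr = rng.uniform(0.0, 255.0, size=(64, 64))  # stand-in ground truth
    sr = np.clip(hr + rng.normal(0.0, 5.0, hr.shape), 0.0, 255.0)
    print(f"PSNR = {psnr(hr, sr):.2f} dB, ERGAS = {ergas(hr, sr, scale=3):.4f}")
```

A "gain" as reported above is then simply the difference between the metric values of two methods on the same ground truth, averaged over the test images.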

4.7. The Effectiveness of Adaptive Parameters

In the classic sparse coding problem, the choice of the regularization parameters is critical. However, most methods use fixed parameters. In this paper, we select the parameters adaptively according to the noise level. To demonstrate the effectiveness of the adaptive parameters, we compare the results obtained with fixed parameters with those obtained with adaptive parameters, as shown in Figure 9. In the fixed-parameter case, the two regularization parameters are set to 0.33 and 0.001, respectively. In Figure 9, the largest PSNR gain, 0.36 dB, is obtained for the image “Runway”, which also has the largest SSIM gain (0.0061). For “Runway”, the ERGAS with adaptive parameters is 0.0408 lower (better) than that with fixed parameters. These results indicate that the adaptive parameters improve SR performance.

Figure 9.

Peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) gains of the method with adaptive parameters over the method with fixed parameters. (a) PSNR gains. (b) SSIM gains.
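The adaptive scheme depends on an estimate of the noise level of the input image. This section does not specify the estimator (the reference list includes a dedicated single-image noise level estimation method by Liu et al.), so the Python sketch below substitutes Immerkær's simpler fast estimator for additive white Gaussian noise as a stand-in. It filters the image with a 3 × 3 mask that cancels locally linear content and rescales the mean absolute response to the noise standard deviation σ. The function name `estimate_noise_sigma` is hypothetical, and the rule that maps σ to the two regularization parameters is not reproduced, because it is not given in this section.

```python
import numpy as np


def estimate_noise_sigma(img):
    """Immerkaer's fast estimate of the AWGN standard deviation (stand-in).

    Applies the 3x3 mask [[1,-2,1],[-2,4,-2],[1,-2,1]], which cancels
    locally linear image content, and rescales the mean absolute response
    so that it is unbiased for Gaussian noise: sigma = sqrt(pi/2) * mean|r| / 6.
    """
    x = np.asarray(img, dtype=np.float64)
    h, w = x.shape
    # Valid-region response of the (symmetric) mask, built from shifted slices.
    r = (x[:-2, :-2] - 2 * x[:-2, 1:-1] + x[:-2, 2:]
         - 2 * x[1:-1, :-2] + 4 * x[1:-1, 1:-1] - 2 * x[1:-1, 2:]
         + x[2:, :-2] - 2 * x[2:, 1:-1] + x[2:, 2:])
    return np.sqrt(np.pi / 2.0) * np.abs(r).sum() / (6.0 * (w - 2) * (h - 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = np.tile(np.linspace(0.0, 255.0, 256), (256, 1))  # smooth ramp
    noisy = clean + rng.normal(0.0, 10.0, clean.shape)
    print(estimate_noise_sigma(noisy))  # approximately 10
```

On strongly textured images, texture also passes through the mask, so this simple estimator tends to overestimate σ; this is a known limitation compared with more robust patch-based estimators.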

4.8. Complexity Analysis

Our method spends most of its computation on three parts: sub-dictionary learning, the computation of the local structure filter, and the nonlocal search. In sub-dictionary learning, the core procedure is K-means clustering, whose computational complexity is . Computing the local structure filter takes . The cost of the nonlocal search depends on the patch size, the search window size, and the number of images; it takes . Taking the “Airplane” image with size as the test image, LANR-NLM takes 40.82 s, LGCnet takes 0.74 s, NARM and ASDS take 68.02 s and 107.92 s, respectively, and MSEPLL and our method take 215.88 s and 297.91 s, respectively. The traditional SR methods were implemented in MATLAB 2014a on a computer with an Intel Core i7-7700K CPU @ 4.20 GHz, 16.0 GB of RAM, and the 64-bit Windows 7 operating system. Our method is slower than the compared methods because it learns the dictionary online from the input image and applies adaptive filtering to the HR image estimate in every iteration. However, it does not require external training examples, which saves a large amount of training time. In conclusion, our approach achieves the best visual and objective quality with a reasonable running time.
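The dependence of the nonlocal search cost on the patch size and the search window size can be seen in a brute-force block-matching sketch. The Python code below is an illustration under assumptions, not the authors' implementation: it searches a single grayscale image, uses the squared Euclidean distance between patches, clips a square search window at the image borders, and assumes each window contains at least `k` candidates; the function name `nonlocal_search` and its parameters `patch`, `window`, and `k` are hypothetical. For each reference patch it compares up to `window`² candidates at `patch`² operations each, so one pass over an image with N pixels costs on the order of N · `window`² · `patch`² operations, and searching over several images multiplies this by their number.

```python
import numpy as np


def nonlocal_search(img, patch=5, window=11, k=8):
    """Brute-force block matching (illustrative sketch).

    For every patch position, find the k most similar patches (squared L2
    distance) whose top-left corners lie inside a square search window
    centred on the reference position. Returns an int array of shape
    (H', W', k, 2) with the (row, col) of each match, where
    H' = H - patch + 1 and W' = W - patch + 1.
    """
    x = np.asarray(img, dtype=np.float64)
    hp, wp = x.shape[0] - patch + 1, x.shape[1] - patch + 1
    half = window // 2
    out = np.zeros((hp, wp, k, 2), dtype=np.int64)
    for i in range(hp):
        for j in range(wp):
            ref = x[i:i + patch, j:j + patch]
            cands = []
            # Scan every candidate position in the window, clipped at borders:
            # about window**2 candidates, each costing patch**2 operations.
            for u in range(max(0, i - half), min(hp, i + half + 1)):
                for v in range(max(0, j - half), min(wp, j + half + 1)):
                    d = np.sum((x[u:u + patch, v:v + patch] - ref) ** 2)
                    cands.append((d, u, v))
            cands.sort(key=lambda c: c[0])  # most similar first
            for n, (_, u, v) in enumerate(cands[:k]):
                out[i, j, n] = (u, v)
    return out
```

For images without exactly repeated patches, the first match at each position is the reference patch itself, because its distance to itself is zero.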

5. Conclusions

In this paper, we propose a novel SR scheme based on sparse representation for single remote sensing images. First, we use a single-dictionary method to learn a compact dictionary that exploits only the information contained in the input remote sensing image itself. Compared with double-dictionary methods and deep learning methods, this approach has the advantage of not requiring external training samples. Second, we propose a local structure filter based on the local image gradient and use it to construct a local structure prior. We then construct a joint prior that combines the local structure prior with the nonlocal self-similarity prior, which effectively improves the recovery of fine structures. Finally, the HR image is reconstructed using the iterative shrinkage algorithm. The results show that the proposed local structure prior achieves superior edge preservation and that the complementary joint prior improves SR performance. Compared with other methods, the HR images reconstructed by our scheme have better visual quality and better scores on the objective evaluation indices. In the future, we will extend the proposed method to other image processing applications.
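The iterative shrinkage step can be illustrated on the plain ℓ1-regularized sparse coding problem min_α ½‖y − Dα‖²₂ + λ‖α‖₁, solved with the iterative shrinkage-thresholding algorithm (ISTA) of Daubechies et al. from the reference list. This Python sketch is a generic illustration, not the AJC solver: it omits the nonlocal and local constraint terms and the adaptive parameters, and the names `soft_threshold` and `ista` as well as the fixed iteration count are assumptions. The step size 1/L, with L the largest eigenvalue of DᵀD, is the standard choice that guarantees convergence.

```python
import numpy as np


def soft_threshold(v, t):
    """Elementwise soft-thresholding (shrinkage) operator."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista(D, y, lam, n_iter=500):
    """ISTA for min_a 0.5 * ||y - D a||_2^2 + lam * ||a||_1 (generic sketch)."""
    # Lipschitz constant of the data-term gradient: largest singular value of D, squared.
    L = np.linalg.norm(D, 2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - y)                   # gradient of the quadratic data term
        a = soft_threshold(a - grad / L, lam / L)  # gradient step, then shrinkage
    return a
```

With an orthonormal dictionary (e.g., D equal to the identity), ISTA reaches the closed-form solution, soft-thresholding of y by λ, after a single iteration, which is a convenient sanity check.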

Author Contributions

L.F. wrote this manuscript. L.F., C.R., X.H., X.W. and Z.W. contributed to the writing, direction, and content, and revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grant 61801316, the National Postdoctoral Program for Innovative Talents of China under Grant BX201700163, and the Chengdu Industrial Cluster Collaborative Innovation Project under Grant 2016-XT00-00015-GX.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lu, X.; Zheng, X.; Yuan, Y. Remote sensing scene classification by unsupervised representation learning. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5148–5157.

- Zhu, Q.; Zhong, Y.; Wu, S.; Zhang, L.; Li, D. Scene Classification Based on the Sparse Homogeneous–Heterogeneous Topic Feature Model. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2689–2703.

- Belward, A.S.; Skøien, J.O. Who launched what, when and why; trends in global land-cover observation capacity from civilian earth observation satellites. ISPRS J. Photogramm. Remote Sens. 2015, 103, 115–128.

- Duan, H.; Gan, L. Elitist chemical reaction optimization for contour-based target recognition in aerial images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2845–2859.

- Pei, J.; Huang, Y.; Huo, W.; Zhang, Y.; Yang, J.; Yeo, T.S. SAR Automatic Target Recognition Based on Multiview Deep Learning Framework. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2196–2210.

- Haut, J.M.; Fernandez-Beltran, R.; Paoletti, M.E.; Plaza, J.; Plaza, A.; Pla, F. A new deep generative network for unsupervised remote sensing single-image super-resolution. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6792–6810.

- Hou, B.; Zhou, K.; Jiao, L. Adaptive Super-Resolution for Remote Sensing Images Based on Sparse Representation With Global Joint Dictionary Model. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2312–2327.

- Feng, R.; Zhong, Y.; Xu, X.; Zhang, L. Adaptive sparse subpixel mapping with a total variation model for remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2855–2872.

- Li, F.; Jia, X.; Fraser, D.; Lambert, A. Super resolution for remote sensing images based on a universal hidden Markov tree model. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1270–1278.

- Sadjadi, F.A. Seeing through degraded visual environment. In Proceedings of the Automatic Target Recognition XXII. International Society for Optics and Photonics, Baltimore, MD, USA, 11 May 2012; Volume 8391, p. 839106.

- Chen, J.; Nunez-Yanez, J.; Achim, A. Video super-resolution using low rank matrix completion. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 1376–1380.

- Liu, X.; Chen, L.; Wang, W.; Zhao, J. Robust Multi-Frame Super-Resolution Based on Spatially Weighted Half-Quadratic Estimation and Adaptive BTV Regularization. IEEE Trans. Image Process. 2018, 27, 4971–4986.

- Tsai, R. Multiframe image restoration and registration. Adv. Comput. Vis. Image Process. 1984, 1, 317–339.

- Huang, Y.; Wang, W.; Wang, L. Bidirectional recurrent convolutional networks for multi-frame super-resolution. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 235–243.

- Farsiu, S.; Robinson, M.D.; Elad, M.; Milanfar, P. Fast and robust multiframe super resolution. IEEE Trans. Image Process. 2004, 13, 1327–1344.

- Patanavijit, V.; Jitapunkul, S. A robust iterative multiframe super-resolution reconstruction using a Huber Bayesian approach with Huber-Tikhonov regularization. In Proceedings of the International Symposium on Intelligent Signal Processing and Communications, Tottori, Japan, 12–15 December 2006; pp. 13–16.

- Wang, W.; Ren, C.; He, X.; Chen, H.; Qing, L. Video Super-Resolution via Residual Learning. IEEE Access 2018, 6, 23767–23777.

- Ren, C.; He, X.; Nguyen, T.Q. Single image super-resolution via adaptive high-dimensional non-local total variation and adaptive geometric feature. IEEE Trans. Image Process. 2017, 26, 90–106.

- Ren, C.; He, X.; Nguyen, T.Q. Adjusted Non-Local Regression and Directional Smoothness for Image Restoration. IEEE Trans. Multimed. 2019, 21, 731–745.

- Ren, C.; He, X.; Teng, Q.; Wu, Y.; Nguyen, T.Q. Single image super-resolution using local geometric duality and non-local similarity. IEEE Trans. Image Process. 2016, 25, 2168–2183.

- Ren, C.; He, X.; Pu, Y. Nonlocal Similarity Modeling and Deep CNN Gradient Prior for Super Resolution. IEEE Signal Process. Lett. 2018, 25, 916–920.

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image super-resolution via sparse representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

- Huang, S.; Sun, J.; Yang, Y.; Fang, Y.; Lin, P.; Que, Y. Robust Single-Image Super-Resolution Based on Adaptive Edge-Preserving Smoothing Regularization. IEEE Trans. Image Process. 2018, 27, 2650–2663.

- Li, X.; Orchard, M.T. New edge-directed interpolation. IEEE Trans. Image Process. 2001, 10, 1521–1527.

- Zhang, Y.; Fan, Q.; Bao, F.; Liu, Y.; Zhang, C. Single-Image Super-Resolution Based on Rational Fractal Interpolation. IEEE Trans. Image Process. 2018, 27, 3782–3797.

- Tai, Y.W.; Liu, S.; Brown, M.S.; Lin, S. Super resolution using edge prior and single image detail synthesis. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2400–2407.

- Timofte, R.; De Smet, V.; Van Gool, L. Anchored neighborhood regression for fast example-based super-resolution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 1920–1927.

- Peleg, T.; Elad, M. A statistical prediction model based on sparse representations for single image super-resolution. IEEE Trans. Image Process. 2014, 23, 2569–2582.

- Gou, S.; Liu, S.; Yang, S.; Jiao, L. Remote sensing image super-resolution reconstruction based on nonlocal pairwise dictionaries and double regularization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4784–4792.

- Dong, W.; Zhang, L.; Shi, G.; Wu, X. Image deblurring and super-resolution by adaptive sparse domain selection and adaptive regularization. IEEE Trans. Image Process. 2011, 20, 1838–1857.

- Dong, W.; Zhang, L.; Shi, G.; Li, X. Nonlocally centralized sparse representation for image restoration. IEEE Trans. Image Process. 2013, 22, 1620–1630.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Li, J.; Yuan, Q.; Shen, H.; Meng, X.; Zhang, L. Hyperspectral Image Super-Resolution by Spectral Mixture Analysis and Spatial-Spectral Group Sparsity. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1250–1254.

- Tai, Y.; Yang, J.; Liu, X. Image super-resolution via deep recursive residual network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3147–3155.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G.; Zisserman, A. Non-local sparse models for image restoration. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2272–2279.

- Zeyde, R.; Elad, M.; Protter, M. On single image scale-up using sparse-representations. In Proceedings of the International Conference on Curves and Surfaces, Avignon, France, 24–30 June 2010; pp. 711–730.

- Vera-Candeas, P.; Ruiz-Reyes, N.; Rosa-Zurera, M.; Martinez-Munoz, D.; López-Ferreras, F. Transient modeling by matching pursuits with a wavelet dictionary for parametric audio coding. IEEE Signal Process. Lett. 2004, 11, 349–352.

- Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Lischinski, D.; Farbman, Z.; Uyttendaele, M.; Szeliski, R. Interactive local adjustment of tonal values. ACM Trans. Graph. (TOG) 2006, 25, 646–653.

- Liu, X.; Tanaka, M.; Okutomi, M. Single-image noise level estimation for blind denoising. IEEE Trans. Image Process. 2013, 22, 5226–5237.

- Papyan, V.; Elad, M. Multi-scale patch-based image restoration. IEEE Trans. Image Process. 2016, 25, 249–261.

- Zhang, K.; Gao, X.; Tao, D.; Li, X. Single image super-resolution with non-local means and steering kernel regression. IEEE Trans. Image Process. 2012, 21, 4544–4556.

- Li, Y.; Dong, W.; Shi, G.; Xie, X. Learning parametric distributions for image super-resolution: Where patch matching meets sparse coding. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 450–458.

- Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

- The USC-SIPI Image Database. Available online: http://sipi.usc.edu/database/database.php (accessed on 26 February 2020).

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Li, F.; Xin, L.; Guo, Y.; Gao, D.; Kong, X.; Jia, X. Super-resolution for GaoFen-4 remote sensing images. IEEE Geosci. Remote Sens. Lett. 2017, 15, 28–32.

- Dong, W.; Zhang, L.; Lukac, R.; Shi, G. Sparse representation based image interpolation with nonlocal autoregressive modeling. IEEE Trans. Image Process. 2013, 22, 1382–1394.

- Jiang, J.; Ma, X.; Chen, C.; Lu, T.; Wang, Z.; Ma, J. Single image super-resolution via locally regularized anchored neighborhood regression and nonlocal means. IEEE Trans. Multimed. 2017, 19, 15–26.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error measurement to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Veganzones, M.A.; Simoes, M.; Licciardi, G.; Yokoya, N.; Bioucas-Dias, J.M.; Chanussot, J. Hyperspectral super-resolution of locally low rank images from complementary multisource data. IEEE Trans. Image Process. 2016, 25, 274–288.

- Lei, S.; Shi, Z.; Zou, Z. Super-resolution for remote sensing images via local–global combined network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1243–1247.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).