Multi-Sensor Orientation Tracking for a Façade-Cleaning Robot

Abstract

1. Introduction

- Encoders (locomotion systems)

- Time-of-Flight sensors (ToF)

- Sonar beacons

- Vision systems (cameras)

- Inertial measurement units (IMUs)

2. Hardware Description of the Façade-Cleaning Robot: Mantis

2.1. Overview of the Mantis v2 Robot

2.2. Locomotion Mechanism

2.3. Rotational Mechanism

2.4. Transition Mechanism

2.5. Suction System

2.6. Mantis’s Sensors

3. Orientation Estimation

3.1. Locomotive Orientation
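
As a rough illustration of encoder-based (odometric) heading, the sketch below assumes a differential-drive layout. That layout, the wheel radius and the track width are all hypothetical placeholders. Only the 64-pulse encoder resolution comes from the results summary. Heading is an open-loop sum of wheel increments, so any slip during displacement accumulates uncorrected.

```python
import math

PULSES_PER_REV = 64     # encoder resolution listed in the results summary
WHEEL_RADIUS_M = 0.03   # hypothetical wheel radius
TRACK_WIDTH_M = 0.25    # hypothetical spacing between left and right wheels


def ticks_to_distance_m(ticks: int) -> float:
    """Arc length rolled by a wheel for a given number of encoder ticks."""
    return 2.0 * math.pi * WHEEL_RADIUS_M * ticks / PULSES_PER_REV


def update_heading_deg(theta_deg: float, left_ticks: int, right_ticks: int) -> float:
    """Differential-drive heading update: dtheta = (d_right - d_left) / track width.

    Assumes no wheel slip; any slip is silently added to the heading.
    """
    d_left = ticks_to_distance_m(left_ticks)
    d_right = ticks_to_distance_m(right_ticks)
    return theta_deg + math.degrees((d_right - d_left) / TRACK_WIDTH_M)


def heading_resolution_deg() -> float:
    """Heading change registered by a single tick on one wheel."""
    return math.degrees(ticks_to_distance_m(1) / TRACK_WIDTH_M)


if __name__ == "__main__":
    print(f"one tick = {heading_resolution_deg():.3f} deg of heading")
```

With these placeholder dimensions a single tick already corresponds to 0.675 deg of heading. Quantization therefore limits resolution even before slip is considered.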

Problems with the Encoder’s Readings during Displacement

3.2. Orientation Using Time-of-Flight (ToF) Sensors
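
One common way to obtain a heading from ToF sensors is to use two parallel beams a known distance apart, both aimed at the same flat reference surface. The heading is then the arctangent of the range difference over the spacing. This setup is assumed here: the sensor spacing is hypothetical, and only the 2 m range comes from the results summary. The helper returns None when a reading is missing or out of range.

```python
import math
from typing import Optional

TOF_MAX_RANGE_M = 2.0      # range quoted for the ToF sensors in the results summary
SENSOR_BASELINE_M = 0.20   # hypothetical spacing between the two ToF sensors


def tof_heading_deg(d1_m: Optional[float], d2_m: Optional[float]) -> Optional[float]:
    """Heading relative to a flat surface from two parallel ToF beams.

    For beams spaced b apart and rotated by theta, the ranges differ by
    b * tan(theta), so theta = atan((d1 - d2) / b). The sign convention
    (positive when sensor 1 reads farther) is an assumption.
    """
    for d in (d1_m, d2_m):
        if d is None or not (0.0 < d <= TOF_MAX_RANGE_M):
            return None  # missing or out-of-range reading: no estimate
    return math.degrees(math.atan2(d1_m - d2_m, SENSOR_BASELINE_M))


if __name__ == "__main__":
    print(tof_heading_deg(0.70, 0.50))
```

Each beam's length grows as 1/cos θ, so at larger rotations one reading can leave the usable range and the function yields no estimate.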

Problems with ToF

3.3. Orientation Based on Sonar Beacons
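
Suppose two mobile beacons (Bm) are mounted at known points on the robot. A heading then follows from the bearing of the vector between their reported positions. The sketch assumes both positions are already expressed in a common 2D frame defined by the stationary beacons (Bs). It also assumes the rear-to-front beacon axis coincides with the robot's forward axis, up to a hypothetical mounting offset.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def wrap_deg(angle: float) -> float:
    """Wrap an angle to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def beacon_heading_deg(rear: Point, front: Point, mount_offset_deg: float = 0.0) -> float:
    """Heading from two robot-mounted beacon positions in a common frame.

    rear, front: (x, y) positions reported for the two mobile beacons.
    mount_offset_deg: hypothetical angle between the beacon axis and the
    robot's forward axis.
    """
    dx = front[0] - rear[0]
    dy = front[1] - rear[1]
    return wrap_deg(math.degrees(math.atan2(dy, dx)) - mount_offset_deg)


if __name__ == "__main__":
    print(beacon_heading_deg((0.0, 0.0), (0.3, 0.3)))
```

The bearing comes from the difference of two noisy positions, so its angular error shrinks as the beacon separation grows.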

Problems with the Beacon-Based Orientation Method

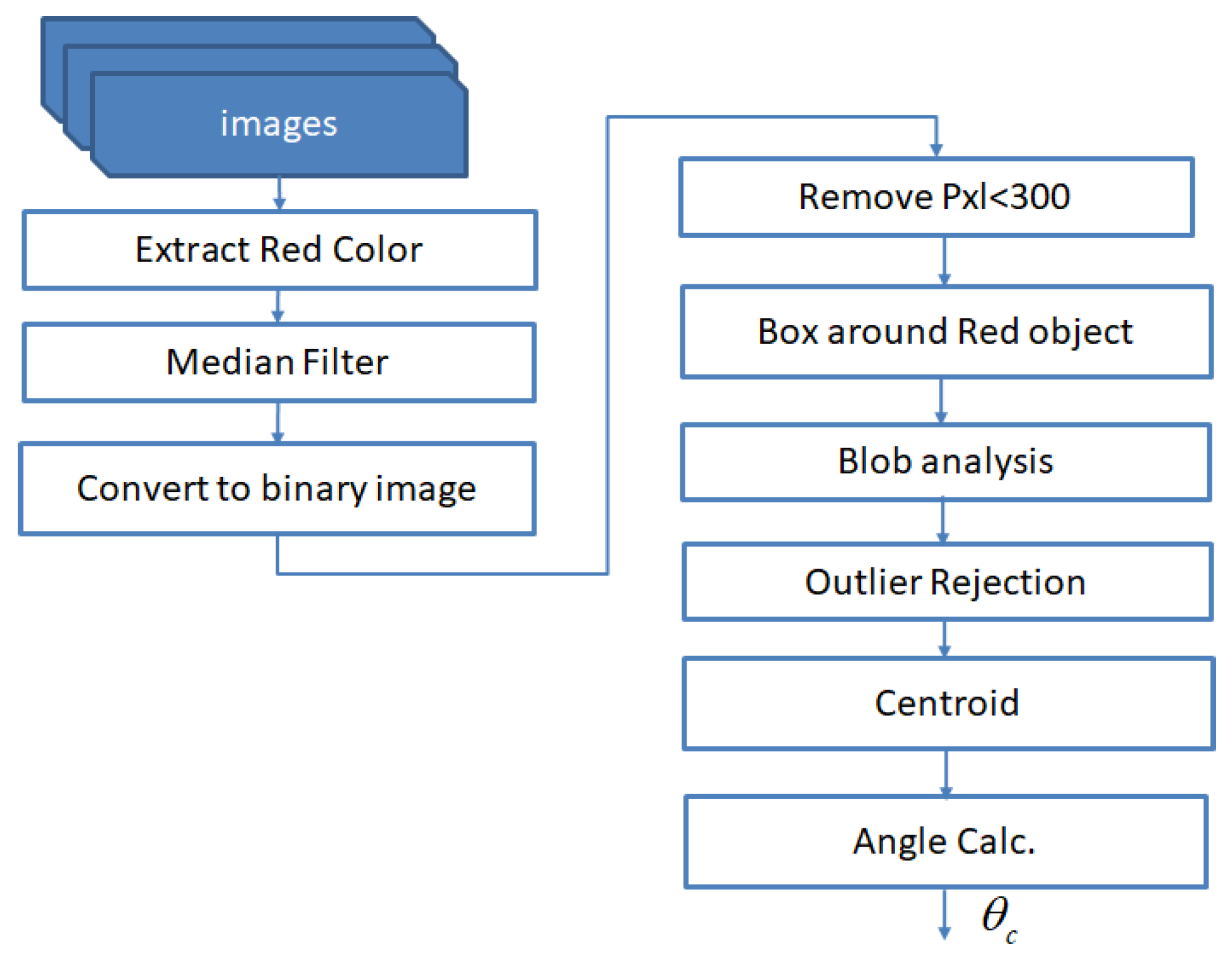

3.4. Vision-Based Orientation

Problems with Vision-Based Orientation Tracking

3.5. Orientation Based on IMU

3.5.1. The Problem with Accelerometers
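
A usual way to get tilt from an accelerometer is to treat the measured specific force as gravity and take arctangents of its components. The sketch uses the common roll/pitch formulas with an x-forward, y-left, z-up convention, which is an assumption rather than the axis layout of this robot. It illustrates a commonly cited weakness: linear acceleration cannot be distinguished from a change in the gravity direction.

```python
import math
from typing import Tuple

G = 9.81  # m/s^2


def accel_roll_pitch_deg(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Roll and pitch from an accelerometer, assuming it senses only gravity.

    At rest and level the sensor reads (0, 0, +G) in this convention.
    roll = atan2(ay, az), pitch = atan2(-ax, sqrt(ay^2 + az^2)).
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


if __name__ == "__main__":
    print(accel_roll_pitch_deg(0.0, 0.0, G))  # level and static
    print(accel_roll_pitch_deg(1.0, 0.0, G))  # level, accelerating forward at 1 m/s^2
```

In the second call the robot is still level, yet the reported pitch is about -5.8 deg. A 1 m/s² forward acceleration alone produces that error.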

3.5.2. The Problem with Gyroscopes
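
A gyroscope measures angular rate, so an angle has to be obtained by integrating over time. Any constant bias is integrated as well, and the error grows linearly. The sketch integrates a motionless gyro at the 400 Hz rate listed for the IMU. The 0.05 deg/s bias is a hypothetical value.

```python
from typing import Iterable

IMU_RATE_HZ = 400      # IMU update rate listed in the results summary
GYRO_BIAS_DPS = 0.05   # hypothetical constant gyro bias, deg/s


def integrate_gyro_deg(rates_dps: Iterable[float], dt_s: float, theta0_deg: float = 0.0) -> float:
    """Integrate angular-rate samples (deg/s) into an angle with the Euler rule."""
    theta = theta0_deg
    for rate in rates_dps:
        theta += rate * dt_s
    return theta


def stationary_drift_deg(seconds: float) -> float:
    """Angle reported by a motionless gyro whose output is pure bias."""
    n = int(round(seconds * IMU_RATE_HZ))
    return integrate_gyro_deg([GYRO_BIAS_DPS] * n, 1.0 / IMU_RATE_HZ)


if __name__ == "__main__":
    for t in (1, 10, 60, 600):
        print(f"after {t:>4} s: {stationary_drift_deg(t):.2f} deg")
```

The error here is 3 deg per minute and keeps growing however still the robot is. The integrated gyro angle therefore needs an absolute reference.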

3.5.3. Sensor Fusion

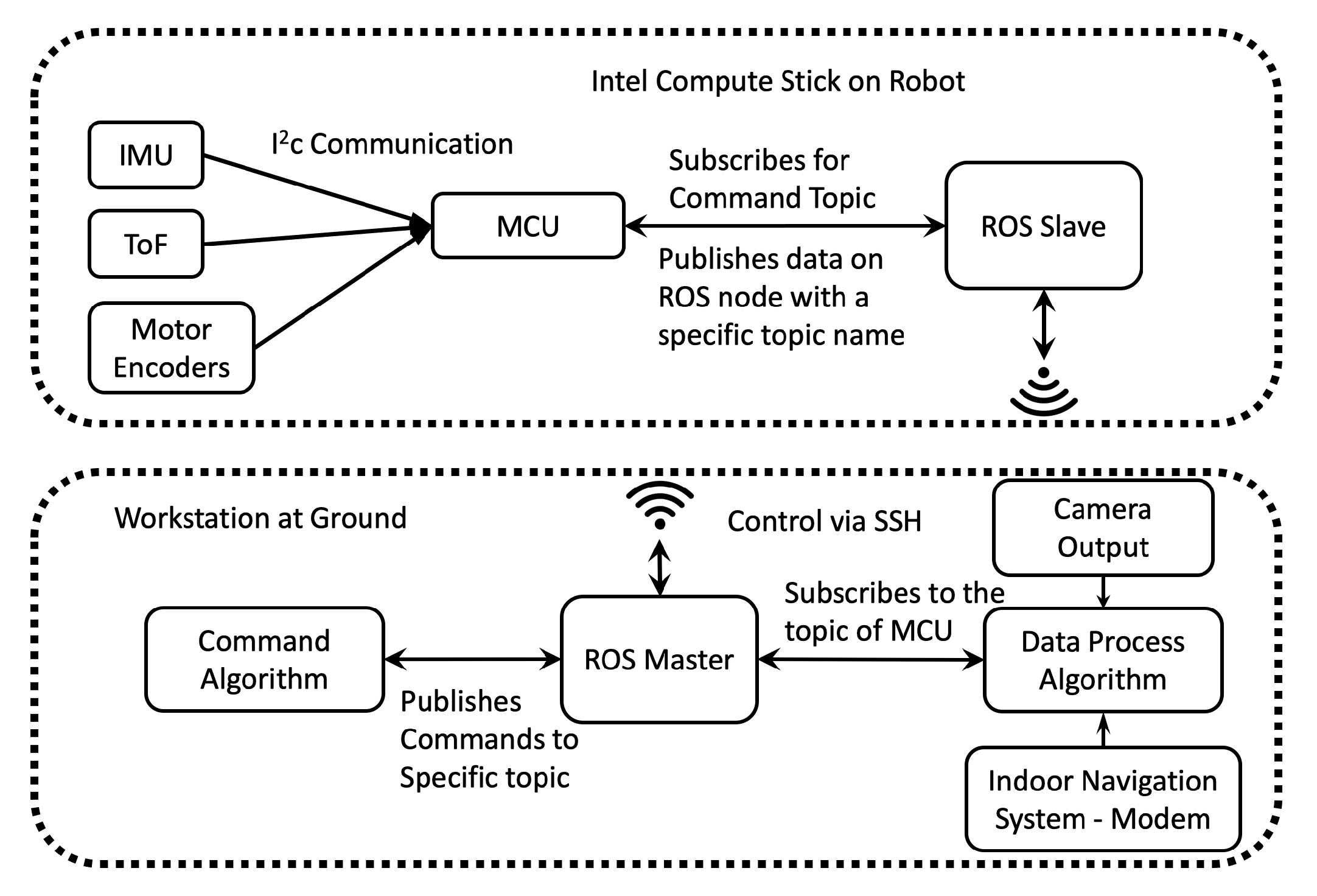

4. System Integration Using the Robot Operating System (ROS)

5. Experimental Setup and Result Discussion

5.1. Test Bed Experiments

5.2. Static Tests

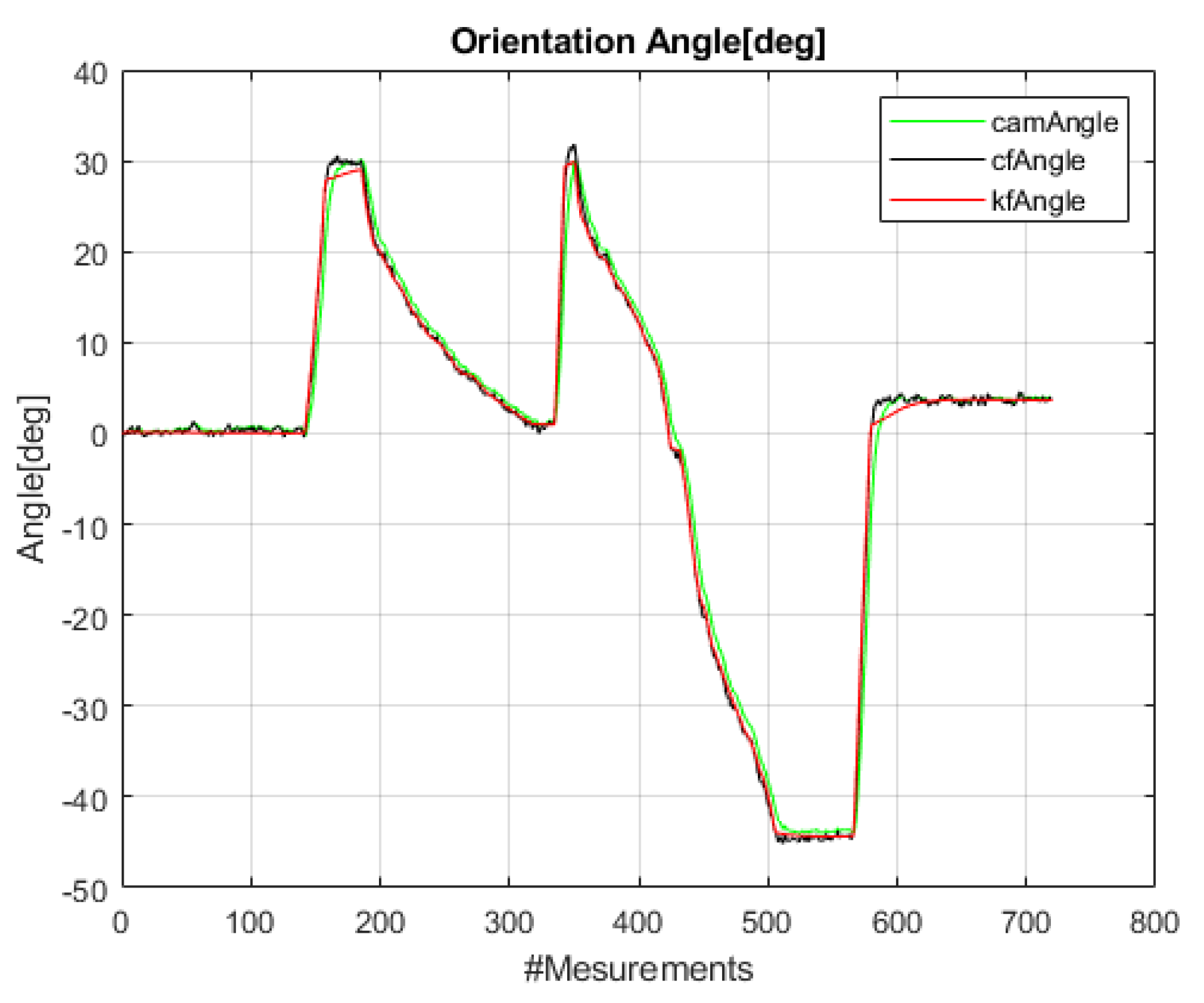

5.3. Dynamic Tests

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ROS | Robot Operating System |
| IMU | Inertial measurement unit |
| ToF | Time-of-Flight |
| KF | Kalman filter |
| CF | Complementary filter |
| AHRS | Attitude and heading reference system |
| PLA | Polylactic acid |
| TPU | Thermoplastic polyurethane elastomer |
| Bm | Mobile beacons |
| Bs | Stationary beacons |

Appendix A

Appendix A.1. Complementary Filter
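
A complementary filter blends two angle sources. It trusts the integrated gyroscope rate at high frequency and the accelerometer angle at low frequency. The one-axis sketch below uses the textbook form θ_k = α(θ_{k−1} + ω_k Δt) + (1 − α)θ_acc,k. The blending factor α = 0.98 and the 400 Hz step are illustrative assumptions, not the values used in this work.

```python
from typing import Iterable, List, Tuple


def complementary_filter(
    samples: Iterable[Tuple[float, float]],
    dt_s: float,
    alpha: float = 0.98,
    theta0_deg: float = 0.0,
) -> List[float]:
    """One-axis complementary filter.

    samples: (gyro_rate_dps, accel_angle_deg) pairs.
    alpha: weight on the gyro-propagated angle (hypothetical default).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    theta = theta0_deg
    out = []
    for rate, acc_angle in samples:
        # high-pass the gyro path, low-pass the accelerometer path
        theta = alpha * (theta + rate * dt_s) + (1.0 - alpha) * acc_angle
        out.append(theta)
    return out


if __name__ == "__main__":
    # motionless robot tilted 10 deg, gyro reporting only a 0.05 deg/s bias
    est = complementary_filter([(0.05, 10.0)] * 4000, 1.0 / 400)
    print(f"{est[-1]:.4f} deg")
```

With α = 0.98 and Δt = 2.5 ms the crossover time constant α·Δt/(1 − α) is about 0.12 s. A constant gyro bias b now produces a bounded offset αbΔt/(1 − α), about 0.006 deg for 0.05 deg/s, instead of unbounded drift.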

Appendix A.2. One-Dimensional Kalman Filter
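
For a single angle, the Kalman filter reduces to a scalar predict/update pair. The sketch assumes a simple model in which the gyro rate drives the prediction. An absolute angle from another sensor (accelerometer, ToF, beacons or vision) serves as the measurement. The noise variances q and r are hypothetical tuning values, not ones reported for this system.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Kalman1D:
    """Scalar Kalman filter for one orientation angle (degrees)."""
    q: float = 0.001   # process noise variance per step (hypothetical)
    r: float = 0.5     # measurement noise variance (hypothetical)
    x: float = 0.0     # state estimate
    p: float = 1.0     # estimate variance

    def predict(self, rate_dps: float, dt_s: float) -> None:
        # x_k^- = x_{k-1} + omega * dt ;  P_k^- = P_{k-1} + Q
        self.x += rate_dps * dt_s
        self.p += self.q

    def update(self, z_deg: float) -> float:
        # K = P^- / (P^- + R) ;  x = x^- + K (z - x^-) ;  P = (1 - K) P^-
        k = self.p / (self.p + self.r)
        self.x += k * (z_deg - self.x)
        self.p *= 1.0 - k
        return self.x


def run_kalman(samples: Iterable[Tuple[float, float]], dt_s: float, kf: Kalman1D) -> List[float]:
    """Run predict/update for each (gyro_rate_dps, measured_angle_deg) pair."""
    out = []
    for rate, z in samples:
        kf.predict(rate, dt_s)
        out.append(kf.update(z))
    return out


if __name__ == "__main__":
    kf = Kalman1D()
    est = run_kalman([(0.0, 10.0)] * 2000, 1.0 / 400, kf)
    print(f"estimate {est[-1]:.4f} deg, variance {kf.p:.5f}")
```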

| ID | ID1 | ID2 | ID3 | ID4 | ID5 | ID6 |
|---|---|---|---|---|---|---|
| ID1 | - | 2.75 | - | - | 1.77 | 3.27 |
| ID2 | 2.75 | - | | | 3.27 | 1.77 |
| ID3 | - | | | | | |
| ID4 | - | | | | | |
| ID5 | 1.77 | 3.27 | | | - | 2.75 |
| ID6 | 3.27 | 1.77 | | | 2.75 | - |

| Ref. Angle (deg.) | Odometry | ToF | Beacons | Vision | Integration |
|---|---|---|---|---|---|
| 0 | NA | 0.799 | 0.196 | 0.31 | 0.137 |
| 10 | NA | 0.391 | 0.501 | 0.772 | 0.333 |
| 20 | NA | 1.25 | 0.814 | 0.73 | 0.559 |
| 30 | NA | 2.071 | 1.011 | 0.025 | 0.217 |
| 40 | NA | NA | 0.971 | 0.854 | 0.023 |
| 45 | NA | NA | 2.772 | 0.145 | 0.583 |
| 60 | NA | NA | 0.076 | 0.061 | 0.003 |
| −10 | NA | 0.162 | 0.731 | 0.4 | 0.098 |
| −20 | NA | 0.387 | 1.763 | 1.662 | 0.607 |
| −30 | NA | 0.884 | 1.669 | 1.98 | 0.239 |
| −40 | NA | 1.731 | 0.527 | 0.082 | 0.468 |
| −45 | NA | 0.427 | 0.102 | 0.373 | 0.031 |
| −60 | NA | NA | 0.843 | 0.132 | 0.142 |
| Nav. (30 to −20) | 2.093 | 0.124 | 1.533 | 0.722 | 0.956 |
| Flat Move | 2.634 | 5.588 | 0.944 | 0.543 | 1.347 |
| Transition | 17.506 | 3.035 | 0.091 | 0.382 | 0.203 |
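
As a consistency check, the sketch averages each column of the error table above over the thirteen static reference angles, skipping NA entries. The results match the ToF (0.90), beacon (0.92) and camera (0.58) figures in the summary table to two decimals. The Integration column matches the IMU row (0.26). That the summary was computed this way is an inference from the numbers, not something the text states. It does not account for the encoder figure, since the Odometry column has only dynamic entries.

```python
from statistics import mean
from typing import Dict, Optional, Tuple

NA = None
COLUMNS = ("ToF", "Beacons", "Vision", "Integration")

# Static rows of the error table: reference angle (deg.) -> column values.
# Odometry is NA for every static row, so it is left out.
STATIC_ERRORS: Dict[int, Tuple[Optional[float], ...]] = {
    0: (0.799, 0.196, 0.31, 0.137),
    10: (0.391, 0.501, 0.772, 0.333),
    20: (1.25, 0.814, 0.73, 0.559),
    30: (2.071, 1.011, 0.025, 0.217),
    40: (NA, 0.971, 0.854, 0.023),
    45: (NA, 2.772, 0.145, 0.583),
    60: (NA, 0.076, 0.061, 0.003),
    -10: (0.162, 0.731, 0.4, 0.098),
    -20: (0.387, 1.763, 1.662, 0.607),
    -30: (0.884, 1.669, 1.98, 0.239),
    -40: (1.731, 0.527, 0.082, 0.468),
    -45: (0.427, 0.102, 0.373, 0.031),
    -60: (NA, 0.843, 0.132, 0.142),
}


def column_means(rows: Dict[int, Tuple[Optional[float], ...]]) -> Dict[str, float]:
    """Mean of each column, ignoring NA entries."""
    return {
        name: mean(r[i] for r in rows.values() if r[i] is not None)
        for i, name in enumerate(COLUMNS)
    }


if __name__ == "__main__":
    for name, m in column_means(STATIC_ERRORS).items():
        print(f"{name}: {m:.2f} deg.")
```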

| Orientation System | Properties | Achieved Results (deg.) |
|---|---|---|
| Encoder | 64-pulse encoder resolution | 10.562 |
| ToF | Range = 2 m, resolution = 1 mm, accuracy = 3% | 0.90 |
| Sonar Beacon Sys. | High precision (2 cm), range = 50 m, location update @ 25 Hz | 0.92 |
| Camera | 1920 × 1080 pixels @ 30 fps, 78 deg FoV | 0.58 |
| IMU | 0.5/1.0 deg. static/dynamic pitch and roll @ 400 Hz | 0.26 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Vega-Heredia, M.; Muhammad, I.; Ghanta, S.; Ayyalusami, V.; Aisyah, S.; Elara, M.R. Multi-Sensor Orientation Tracking for a Façade-Cleaning Robot. Sensors 2020, 20, 1483. https://doi.org/10.3390/s20051483
