Passive Detection of Ship-Radiated Acoustic Signal Using Coherent Integration of Cross-Power Spectrum with Doppler and Time Delay Compensations

Abstract

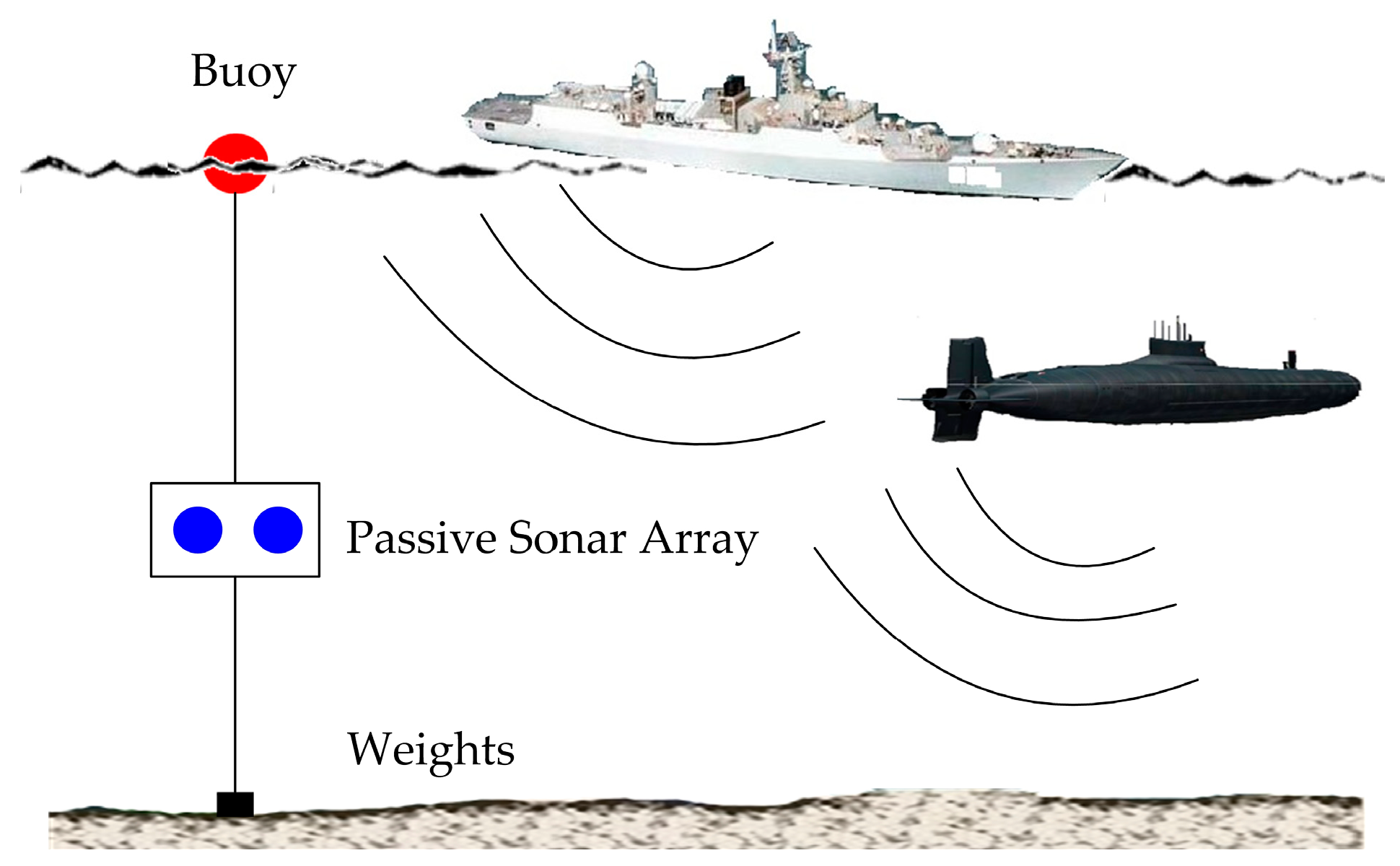

1. Introduction

2. Theory of Coherent Integration for the Cross-Power Spectrum with Doppler and Time Delay Compensations

2.1. Signal Model and Cross-Power Spectrum Coherent Integration

2.2. Estimations and Compensations of Doppler Factor and Time Delay for Cross-Power Spectrum

2.2.1. Methods for Doppler Factor and Time Delay Estimations

2.2.2. Compensations of Doppler Factor and Time Delay for Cross-Power Spectrum

2.3. Implementation of Coherent Integration Using the Compensated Cross-Power Spectrum and Analysis of Integration Gain

3. Performance of Different Algorithms for Discrete Spectral Estimation in Simulation

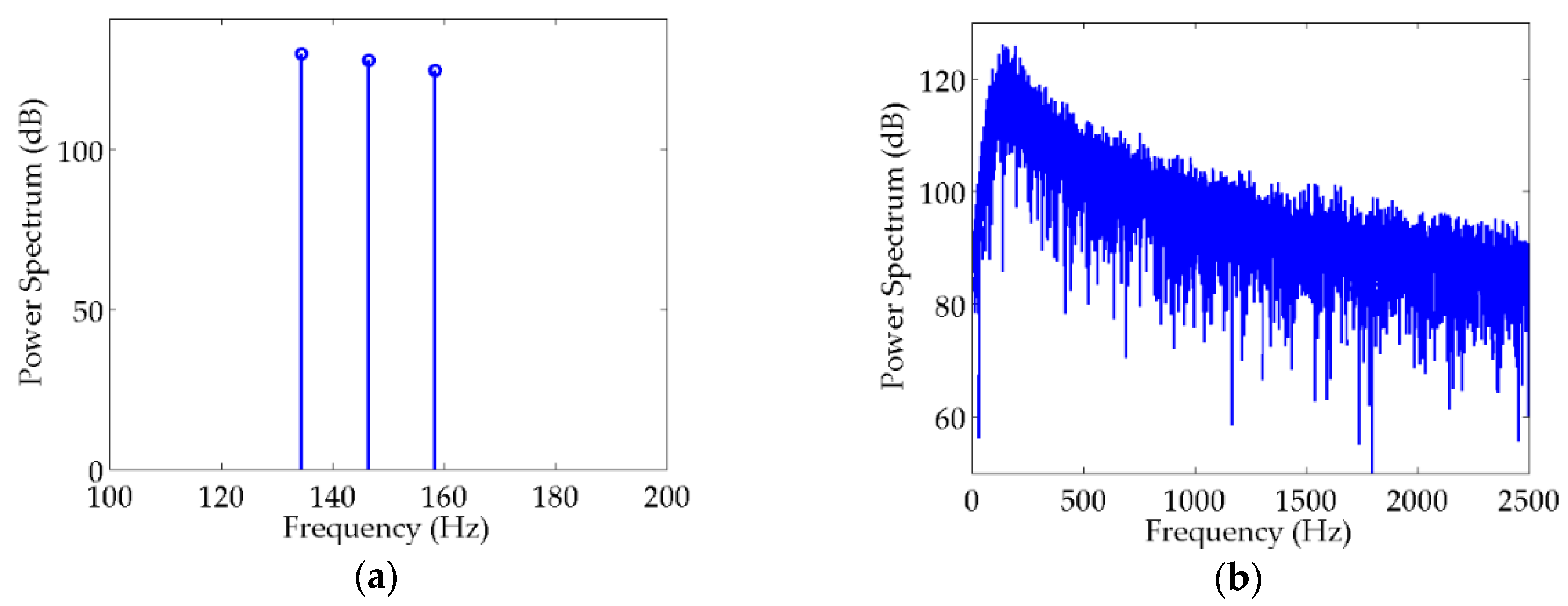

3.1. Simulated Signals

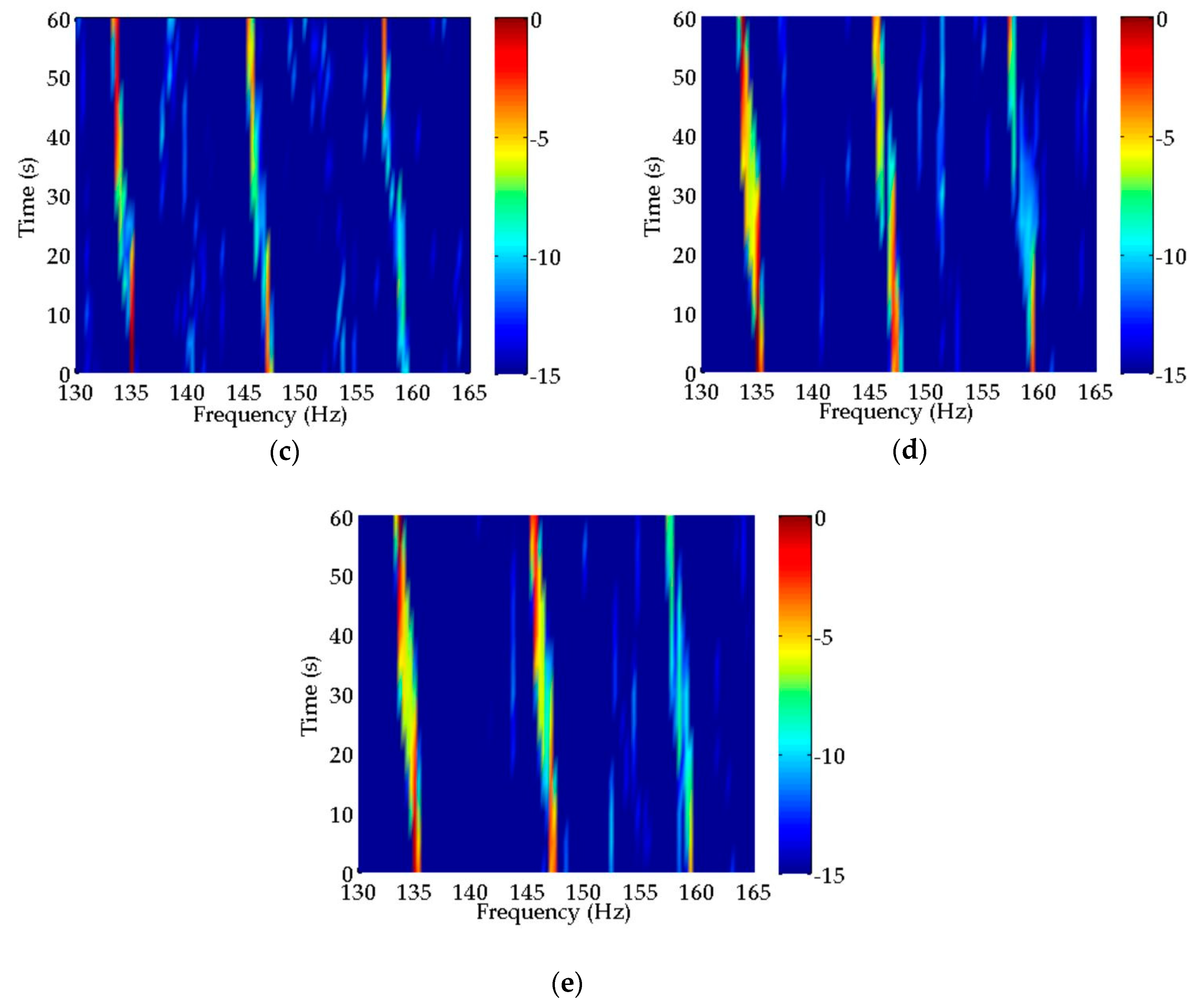

3.2. Analysis of Narrowband Discrete Spectral Estimation Results Obtained from Different Methods

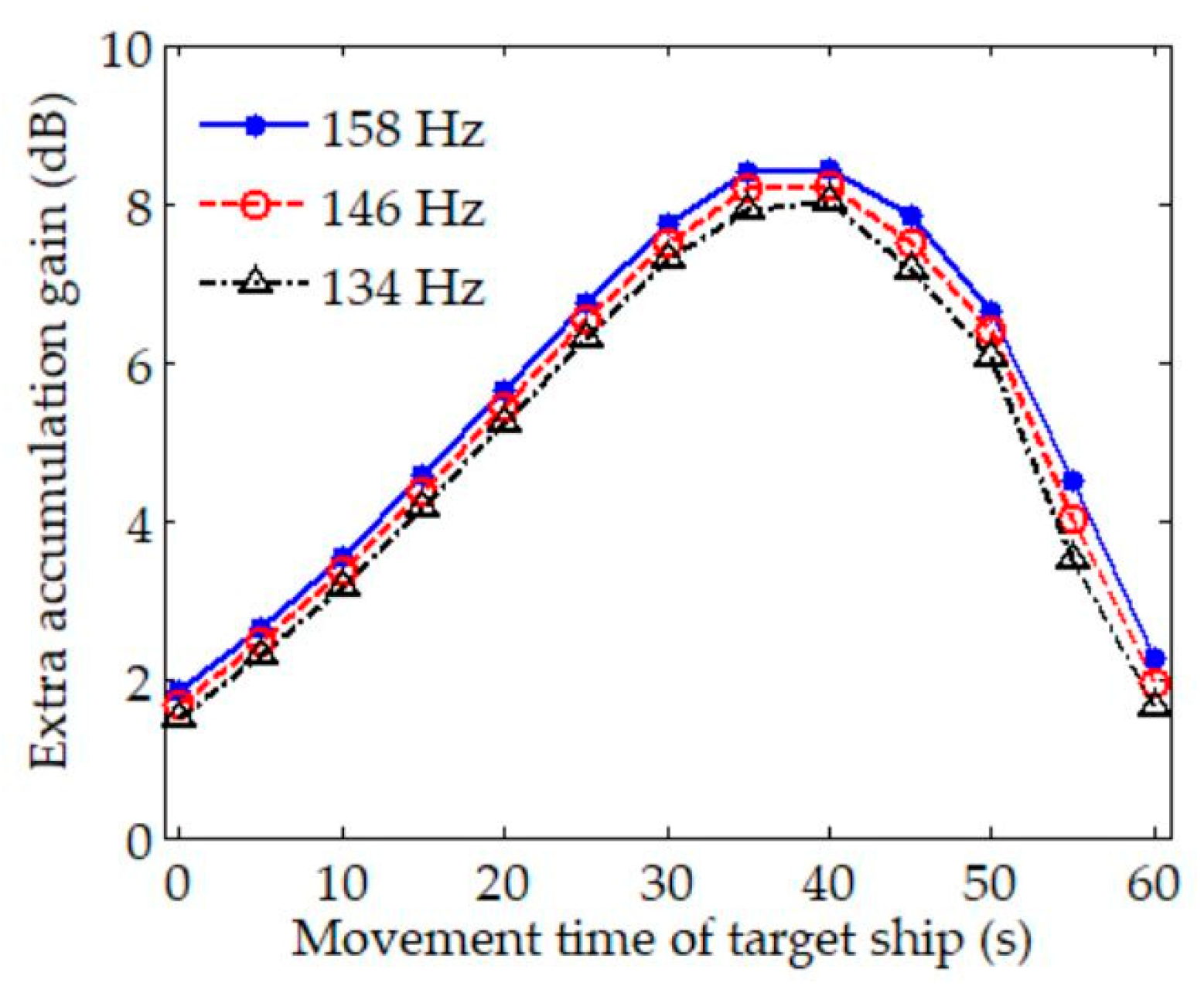

3.3. Extra Integration Gain

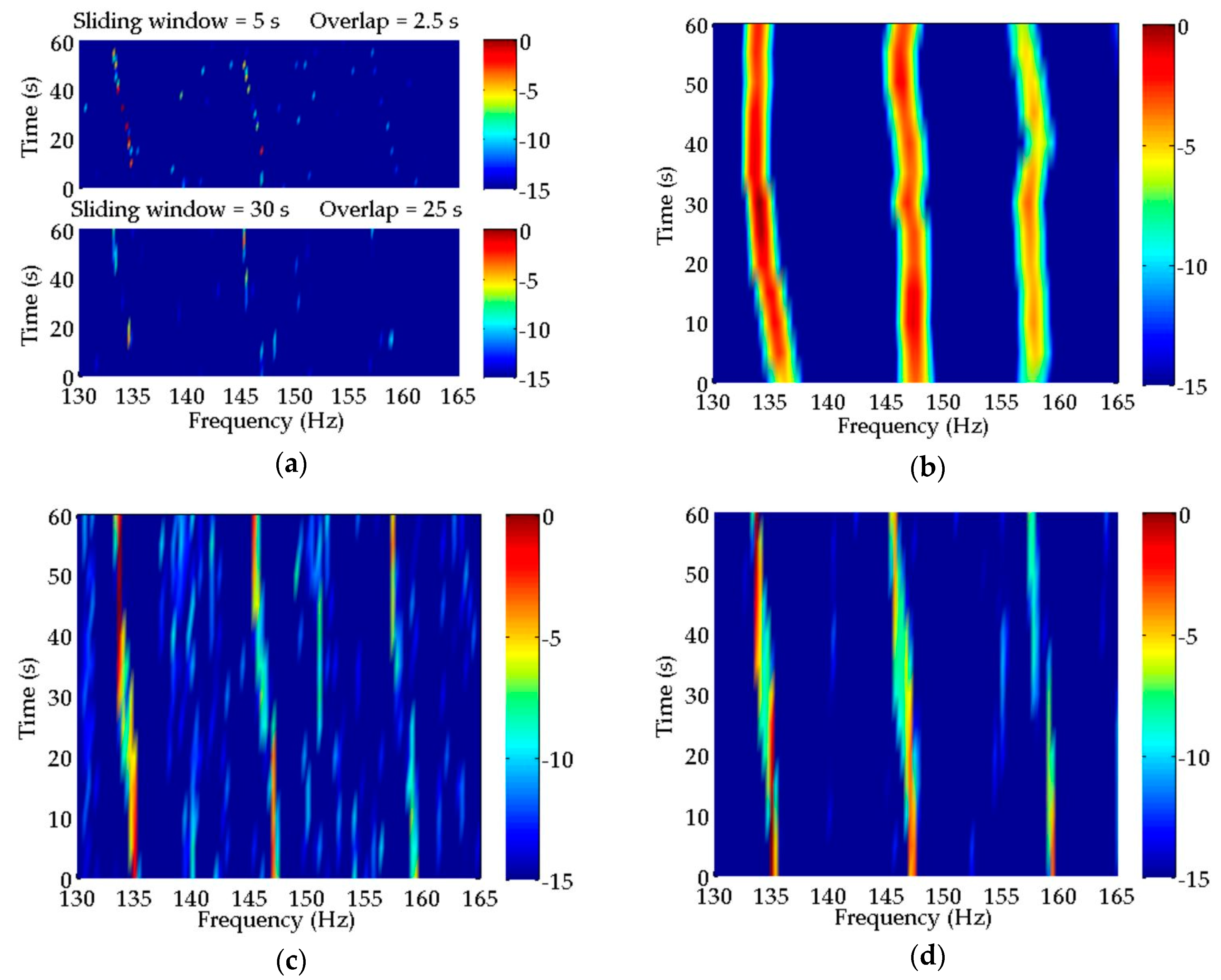

4. Analysis of Experimental Data Processing Using Different Methods

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Theory and Implementation of a Two-step Method for Time Scale Factor and Time Delay Estimations

Appendix B. Parameter settings of the Methods for Spectral Estimation

Appendix B.1. Simulated Signal Processing

- The dimension of the covariance matrix calculated by 299,000 snapshots in MUSIC [35] is . The number of discrete spectra to be estimated is set to three;

- For CPSCI, CPSII, and CCPSCI, the sliding window length of LOFAR is equal to the total integration length for one incoherent or coherent integration. The number of integrations is 10, and the time length of each integration is 3 s, i.e., there is no overlap between any two integrations during each incoherent or coherent integration. The search range of time scale factor in CCPSCI is with a search step size of . The search range can be set according to the maximum speed of the moving target;

- To reduce the influence of pronounced fluctuations in the continuous spectrum, the continuous spectrum in every estimation result is flattened by detrending, which is implemented through polynomial fitting. The received signals are band-pass filtered to the frequency range shown in the LOFAR results before spectral estimation, so the polynomial fit is carried out over a narrow band. In general, the continuous spectrum of a sonar signal within a narrow band is approximately linear or quadratic and can therefore be captured by polynomial fitting [36,37];

- The final spectral estimation result of each method is normalized and then displayed by LOFAR.
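The processing chain described in the list above (ten non-overlapping 3 s integrations, coherent or incoherent combination, polynomial detrending, normalization) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the Hann window, the 1 kHz sampling rate, the quadratic fit in the dB domain, and the normalization to a 0 dB peak are all choices made here for the example, and the Doppler and time delay compensation of CCPSCI is not included.

```python
import numpy as np


def segment_cross_spectra(x1, x2, fs, seg_seconds=3.0, n_segments=10):
    """Cross-power spectrum of each non-overlapping segment (hypothetical helper)."""
    n = int(round(seg_seconds * fs))
    if min(len(x1), len(x2)) < n * n_segments:
        raise ValueError("signals are shorter than n_segments * seg_seconds")
    window = np.hanning(n)  # window choice is an assumption of this sketch
    spectra = []
    for k in range(n_segments):
        s = slice(k * n, (k + 1) * n)
        X1 = np.fft.rfft(window * x1[s])
        X2 = np.fft.rfft(window * x2[s])
        spectra.append(X1 * np.conj(X2))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, np.array(spectra)


def coherent_integration(spectra):
    """Sum the complex cross-spectra first, then take the magnitude."""
    return np.abs(spectra.sum(axis=0))


def incoherent_integration(spectra):
    """Take the magnitude of each cross-spectrum first, then sum."""
    return np.abs(spectra).sum(axis=0)


def detrend_and_normalize_db(level, freqs, band, order=2):
    """Remove a low-order polynomial trend (in dB) inside `band`, peak set to 0 dB."""
    lo, hi = band
    mask = (freqs >= lo) & (freqs <= hi)
    f = freqs[mask]
    level_db = 10.0 * np.log10(level[mask] + np.finfo(float).tiny)
    u = (f - f.mean()) / (f.max() - f.min())  # rescale for a well-conditioned fit
    trend = np.polyval(np.polyfit(u, level_db, order), u)
    flat = level_db - trend
    return f, flat - flat.max()


# Synthetic two-channel example: one 146 Hz tone, fixed inter-sensor delay, white noise.
rng = np.random.default_rng(0)
fs = 1000.0
t = np.arange(int(30 * fs)) / fs
delay = 0.002  # seconds, illustrative value
x1 = np.sin(2 * np.pi * 146.0 * t) + rng.standard_normal(t.size)
x2 = np.sin(2 * np.pi * 146.0 * (t - delay)) + rng.standard_normal(t.size)

freqs, spectra = segment_cross_spectra(x1, x2, fs)
band = (120.0, 170.0)
f_band, coh_db = detrend_and_normalize_db(coherent_integration(spectra), freqs, band)
_, inc_db = detrend_and_normalize_db(incoherent_integration(spectra), freqs, band)
print("coherent peak at", f_band[np.argmax(coh_db)], "Hz")
print("median background, coherent vs incoherent:", np.median(coh_db), np.median(inc_db))
```

In this toy case the phase of the cross-power spectrum at the tone is fixed by the constant delay, so the complex sum adds in phase while the noise bins add with random phase. With a moving source the phase drifts from segment to segment, which is the situation the compensation step is meant to address.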

Appendix B.2. Experimental Data Processing

- The lasso technique is used to perform the minimization in CS;

- The dimension of the covariance matrix calculated by 292,000 snapshots in MUSIC is . The number of discrete spectra to be estimated is set to eight;

- The implementation for obtaining the incoherent and coherent integration of CPSCI, CPSII, and CCPSCI in experimental data processing is the same as in the simulation. The search range of time scale factor in CCPSCI is with a search step size of ;

- All of the detection results are detrended by polynomial fitting to remove the fluctuating background noise;

- The final spectral estimation result of each method is normalized and then displayed by LOFAR.
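To make the lasso step in the list above concrete, the following sketch recovers line frequencies from randomly subsampled data by solving an l1-regularized least-squares problem. It is only an illustration under assumptions introduced here: the cosine/sine dictionary on a 1 Hz grid, the random subsampling, the regularization weight `lam`, and the plain ISTA solver are not taken from the paper, which does not state its dictionary, solver, or parameters in this appendix.

```python
import numpy as np


def fourier_dictionary(times, freqs):
    """Unit-norm cosine and sine atoms evaluated at the sampling instants."""
    arg = 2.0 * np.pi * np.outer(times, freqs)
    atoms = np.hstack([np.cos(arg), np.sin(arg)])
    return atoms / np.linalg.norm(atoms, axis=0)


def soft_threshold(v, tau):
    """Proximal operator of tau * ||v||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def lasso_ista(A, y, lam, n_iter=500):
    """Minimize 0.5 * ||y - A x||_2^2 + lam * ||x||_1 with plain ISTA."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        x = soft_threshold(x - step * (A.T @ (A @ x - y)), step * lam)
    return x


# Synthetic example: two tones observed at 200 random instants within one second.
rng = np.random.default_rng(1)
fs = 1000.0
keep = np.sort(rng.choice(1000, size=200, replace=False))
times = keep / fs
y = (np.cos(2 * np.pi * 212.0 * times + 0.3)
     + 0.8 * np.cos(2 * np.pi * 251.0 * times + 1.1)
     + 0.1 * rng.standard_normal(times.size))

grid = np.arange(190.0, 311.0, 1.0)  # candidate line frequencies in Hz (assumed grid)
A = fourier_dictionary(times, grid)
x = lasso_ista(A, y, lam=1.0)

# Combine the cosine and sine coefficients into one amplitude per grid frequency.
amp = np.hypot(x[:grid.size], x[grid.size:])
print("two strongest lines (Hz):", np.sort(grid[np.argsort(amp)[-2:]]))
```

The l1 penalty drives most coefficients to exactly zero, so only a few grid frequencies keep a non-zero amplitude. A larger `lam` suppresses more noise but also shrinks weak lines, so in practice the weight would need tuning to the data.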

References

1. Ainslie, M.A. Introduction. In Principles of Sonar Performance Modelling; Springer: Chichester, UK, 2010; pp. 1–25.

2. Jafri, S.M.R.; TBt, A.P. Noise effects on surface ship passive sonar and possible ASW solution. IJTNR 2014, 2, 29–39.

3. Komari Alaie, H.; Farsi, H.J. Passive sonar target detection using statistical classifier and adaptive threshold. Appl. Sci. 2018, 8, 61.

4. Waite, A.D.; Waite, A. Passive Sonar. In Sonar for Practising Engineers, 3rd ed.; Wiley: New York, NY, USA, 2002; pp. 125–159.

5. Lourens, J. Passive sonar detection of ships with spectrograms. In Proceedings of the IEEE South African Symposium on Communications and Signal Processing, Johannesburg, South Africa, 29–29 June 1990; IEEE: Piscataway, NJ, USA, 2002; pp. 147–151.

6. Masters, B.R. Signals and Systems. In Handbook for Digital Signal Processing; Madisetti, V.K., Williams, D.B., Eds.; CRC Press: Boca Raton, FL, USA, 1999; pp. 8–36.

7. Gröchenig, K. Time-Frequency Analysis and the Uncertainty Principle. In Foundations of Time-Frequency Analysis; Springer Science & Business Media: New York, NY, USA, 2001; pp. 21–33.

8. Yan, J.; Sun, H.; Chen, H.; Junejo, N.U.R.; Cheng, E. Resonance-Based Time-Frequency Manifold for Feature Extraction of Ship-Radiated Noise. Sensors 2018, 18, 936.

9. Prokopenko, I.; Churina, A. Spectral estimation by the model of Autoregressive Moving Average and its resolution power. In Proceedings of the 2008 Microwaves, Radar and Remote Sensing Symposium, Kiev, Ukraine, 22–24 September 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 162–165.

10. Firat, U.; Akgül, T. Spectral estimation of cavitation related narrow-band ship radiated noise based on fractional lower order statistics and multiple signal classification. In Proceedings of the 2013 OCEANS-San Diego, San Diego, CA, USA, 23–27 September 2013; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6.

11. Huang, S.; Sun, H.; Zhang, H.; Yu, L.J. Line Spectral Estimation Based on Compressed Sensing with Deterministic Sub-Nyquist Sampling. Circuits Syst. Signal Process. 2018, 37, 1777–1788.

12. Yecai, G.; Junwei, Z.; Huawei, C. Special algorithm of enhancing underwater target-radiated dynamic line spectrum. J. Syst. Eng. Electron. 2005, 16, 797–801.

13. Guo, Y.; Zhao, J.; Chen, H. A novel algorithm for underwater moving-target dynamic line enhancement. Appl. Acoust. 2003, 64, 1159–1169.

14. Hao, Y.; Chi, C.; Qiu, L.H.; Liang, G.L. Sparsity-based adaptive line enhancer for passive sonars. IET Radar Sonar Navig. 2019, 13, 1796–1804.

15. Yecai, G.; Longqing, H.; Yanping, Z. Coherent Accumulation Algorithm Based Multilevel Switching Adaptive Line Enhancer. In Proceedings of the 2007 8th International Conference on Electronic Measurement and Instruments, Xi’an, China, 16–18 August 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 2-357–2-362.

16. Farley, D.T. Coherent integration. Int. Counc. Sci. Unions Middle Atmos. Program 1995, 9, 507–508.

17. Li, X.; Cui, G.; Yi, W.; Kong, L.J. Sequence-reversing transform-based coherent integration for high-speed target detection. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 1573–1580.

18. Rao, X.; Tao, H.; Xie, J.; Su, J.; Li, W. Long-time coherent integration detection of weak manoeuvring target via integration algorithm, improved axis rotation discrete chirp-Fourier transform. IET Radar Sonar Navig. 2015, 9, 917–926.

19. Lin, L.; Sun, G.; Cheng, Z.; He, Z.S. Long-Time Coherent Integration for Maneuvering Target Detection Based on ITRT-MRFT. IEEE Sensors J. 2019. (Early Access).

20. Wang, Z.; Zheng, X.; Chang, X.L. Long-time coherent accumulation algorithm based on acceleration blind estimation. J. Eng. 2019, 2019, 7440–7443.

21. Yang, H.; Shen, S.; Yao, X.; Sheng, M.; Wang, C. Competitive deep-belief networks for underwater acoustic target recognition. Sensors 2018, 18, 952.

22. Yang, H.; Li, J.; Shen, S.; Xu, G. A deep convolutional neural network inspired by auditory perception for underwater acoustic target recognition. Sensors 2019, 19, 1104.

23. Shen, S.; Yang, H.; Yao, X.; Li, J.; Xu, G.; Sheng, M. Ship Type Classification by Convolutional Neural Networks with Auditory-Like Mechanisms. Sensors 2020, 20, 253.

24. Moschas, F.; Stiros, S. Experimental evaluation of the performance of arrays of MEMS accelerometers. Mech. Syst. Signal Process. 2019, 116, 933–942.

25. Fang, E.Z.; Hong, L.J.; Yang, D.S. Self-noise analysis of the MEMS hydrophone. J. Harbin Eng. Univ. 2014, 35, 285–288.

26. Lo, K.W.; Ferguson, B.G. Diver detection and localization using passive sonar. In Proceedings of the Acoustics, Fremantle, Australia, 21–23 November 2012; Springer: Berlin, Germany, 2012; pp. 1–8.

27. Sun, T.; Shan, X.; Chen, J. Parameters estimation of LFM echoes based on relationship of time delay and Doppler shift. In Proceedings of the 2012 5th International Congress on Image and Signal Processing, Chongqing, China, 16–18 October 2012; IEEE: Piscataway, NJ, USA, 2013; pp. 1489–1494.

28. Ouahabi, A.; Kouame, D. Fast techniques for time delay and Doppler estimation. In Proceedings of the ICECS 2000 7th IEEE International Conference on Electronics, Circuits and Systems (Cat. No. 00EX445), Jounieh, Lebanon, 17–20 December 2000; IEEE: Piscataway, NJ, USA, 2002; pp. 337–340.

29. Guo, W.; Piao, S.C.; Li, N.S.; Han, X.; Fu, J.S. Cross-spectral time delay estimation method by Doppler estimation in frequency domain. Acta Acust. 2019. (Under review).

30. Qin, L.; Bingcheng, Y.; Wenjuan, Z. Modeling of ship-radiated noise and its implement of simulator. Ship Sci. Technol. 2010, 32, 24–26.

31. Gupta, P.; Kar, S. MUSIC and improved MUSIC algorithm to estimate direction of arrival. In Proceedings of the 2015 International Conference on Communications and Signal Processing (ICCSP), Melmaruvathur, India, 2–4 April 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 0757–0761.

32. Ioannopoulos, G.A.; Anagnostou, D.; Chryssomallis, M.T. A survey on the effect of small snapshots number and SNR on the efficiency of the MUSIC algorithm. In Proceedings of the 2012 IEEE International Symposium on Antennas and Propagation, Chicago, IL, USA, 8–14 July 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–2.

33. Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288.

34. Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

35. Stoica, P.; Soderstrom, T. Statistical analysis of MUSIC and subspace rotation estimates of sinusoidal frequencies. IEEE Trans. Signal Process. 1991, 39, 1836–1847.

36. Müller, W.G. Optimal design for local fitting. J. Stat. Plan. Inference 1996, 55, 389–397.

37. Abdelsalam, D.; Baek, B.J. Curvature measurement using phase shifting in-line interferometry, single shot off-axis geometry and Zernike’s polynomial fitting. Optik 2012, 123, 422–427.

| Algorithm | Abbreviation |
|---|---|
| Compressed Sensing | CS |
| Multiple Signal Classification | MUSIC |
| Cross-Power Spectrum Coherent Integration | CPSCI |
| Cross-Power Spectrum Incoherent Integration | CPSII |
| Compensated Cross-Power Spectrum Coherent Integration | CCPSCI |

| Algorithm | 134 Hz (SNR = 20 dB) | 146 Hz (SNR = 20 dB) | 158 Hz (SNR = 20 dB) | 134 Hz (SNR = −10 dB) | 146 Hz (SNR = −10 dB) | 158 Hz (SNR = −10 dB) |
|---|---|---|---|---|---|---|
| CPSCI/dB | −6.902 | −8.037 | −12.865 | −9.707 | −12.515 | −11.155 |
| CPSII/dB | −6.297 | −8.406 | −11.055 | −6.262 | −8.168 | −11.165 |
| CCPSCI/dB | −6.571 | −6.455 | −8.275 | −6.331 | −7.423 | −9.267 |

| Algorithm | 199 Hz | 212 Hz | 225 Hz | 238 Hz | 251 Hz | 262 Hz | 282 Hz | 301 Hz |
|---|---|---|---|---|---|---|---|---|
| CPSCI/dB | −4.378 | −4.378 | −6.545 | −10.885 | −2.201 | −8.716 | −6.535 | −2.185 |
| CPSII/dB | −4.374 | −4.378 | −6.546 | −8.711 | −0.032 | −13.055 | −4.371 | −2.182 |
| CCPSCI/dB | −0.033 | −0.041 | −2.222 | −6.568 | −0.027 | −4.381 | −0.035 | −0.007 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Guo, W.; Piao, S.; Guo, J.; Lei, Y.; Iqbal, K. Passive Detection of Ship-Radiated Acoustic Signal Using Coherent Integration of Cross-Power Spectrum with Doppler and Time Delay Compensations. Sensors 2020, 20, 1767. https://doi.org/10.3390/s20061767
