Structural Health Monitoring for Jacket-Type Offshore Wind Turbines: Experimental Proof of Concept

Abstract

1. Introduction

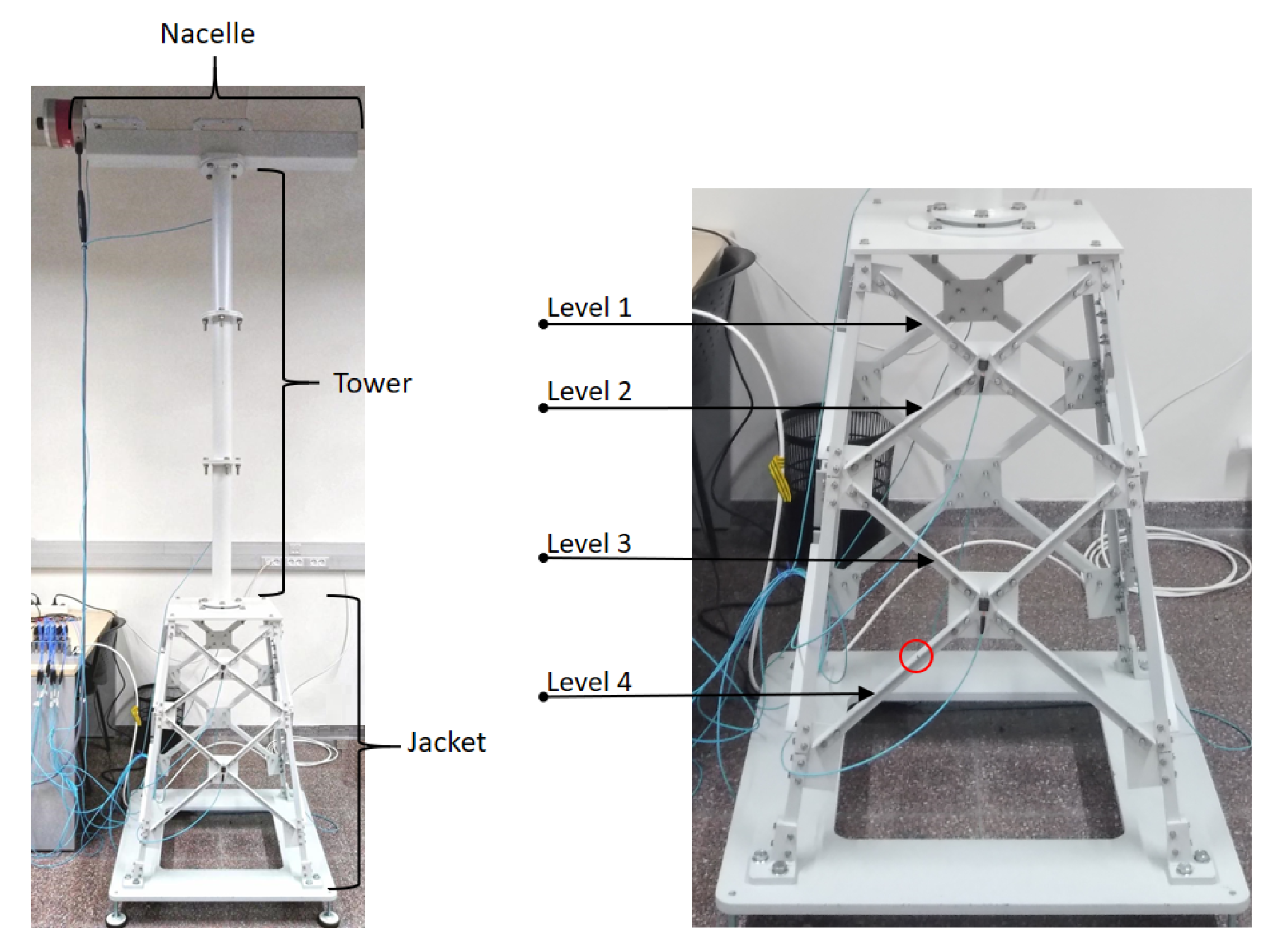

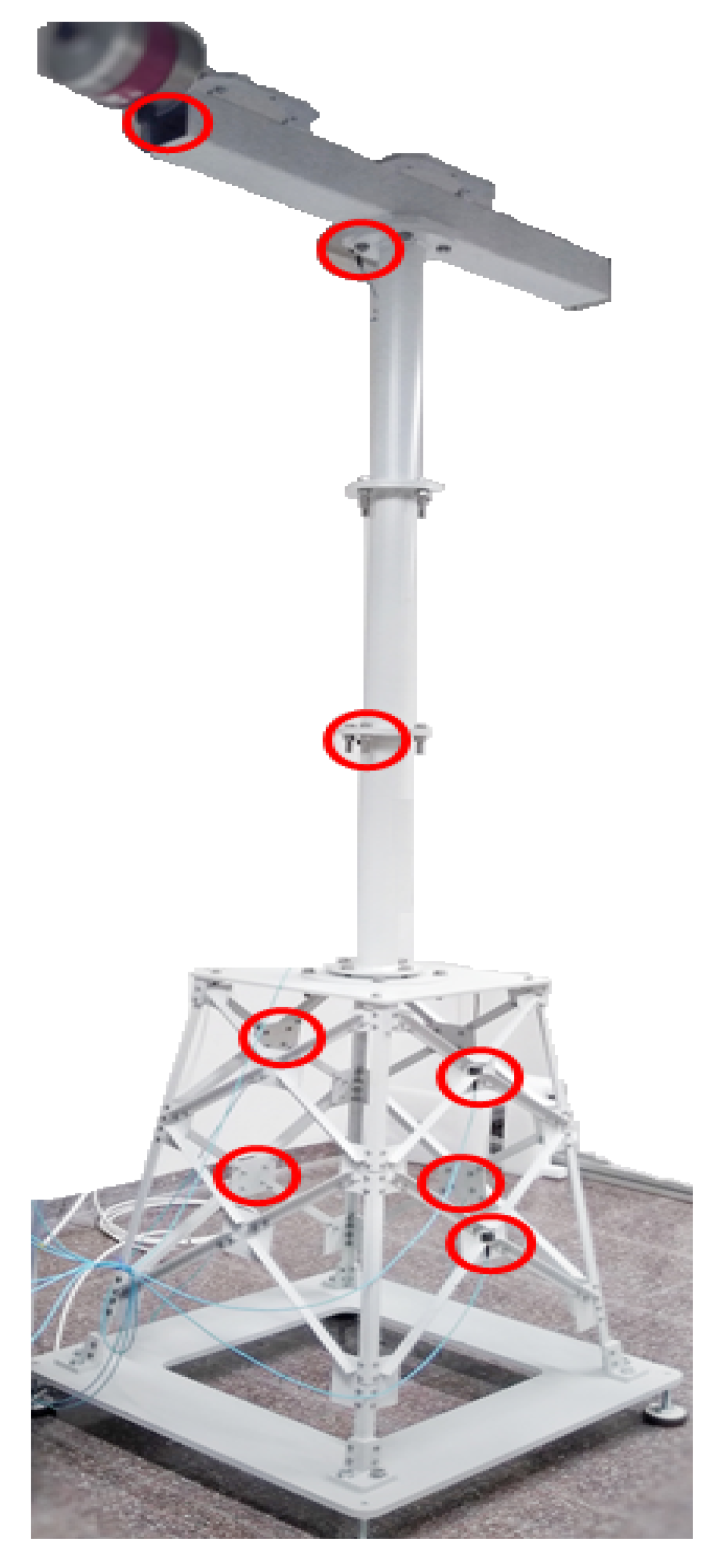

2. Experimental Testbed

2.1. Function Generator

2.2. Amplifier and Shaker

2.3. Laboratory Tower Structure and Studied Types of Damage

2.4. Sensors

2.5. Data Acquisition System

3. Damage Detection Methodology

3.1. Data Collection and Reshape

- $i = 1, \ldots, n_j$ represents the $i$-th experimental trial, while $n_j$ is the number of observations or experimental trials per structural state;

- $j = 1, \ldots, J$ is the structural state that is being measured, while $J$ is the number of different structural states;

- $k = 1, \ldots, K$ indicates the sensor that is measuring, while $K$ is the total number of sensors;

- $l = 1, \ldots, L$ identifies the time stamp, while $L$ is the number of time stamps per experiment.
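As a minimal sketch of the arrangement these indices describe, the following Python snippet unfolds measurements indexed by structural state, trial, sensor, and time stamp into a two-dimensional matrix with one row per trial and K·L columns. The nested-list layout, the function name `unfold`, and the sensor-major column order are assumptions made for illustration, not necessarily the authors' implementation.

```python
from typing import List, Tuple

# data[state][trial][sensor][time stamp] (hypothetical layout for illustration).
Measurements = List[List[List[List[float]]]]


def unfold(data: Measurements) -> Tuple[List[List[float]], List[int]]:
    """Return (rows, labels): one row of K*L values per trial, plus its state index."""
    rows, labels = [], []
    for state, trials in enumerate(data):
        for trial in trials:
            # Concatenate the L samples of the first sensor, then the second, and so on.
            row = [sample for sensor in trial for sample in sensor]
            rows.append(row)
            labels.append(state)
    return rows, labels


# Toy data: 2 states with 2 and 1 trials, K = 2 sensors, L = 3 time stamps.
toy = [
    [[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]],
    [[[13, 14, 15], [16, 17, 18]]],
]
rows, labels = unfold(toy)
```

Each row then holds every sensor's full time history for one trial, so the number of columns is fixed by K·L, while the number of rows grows with the number of trials and structural states.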

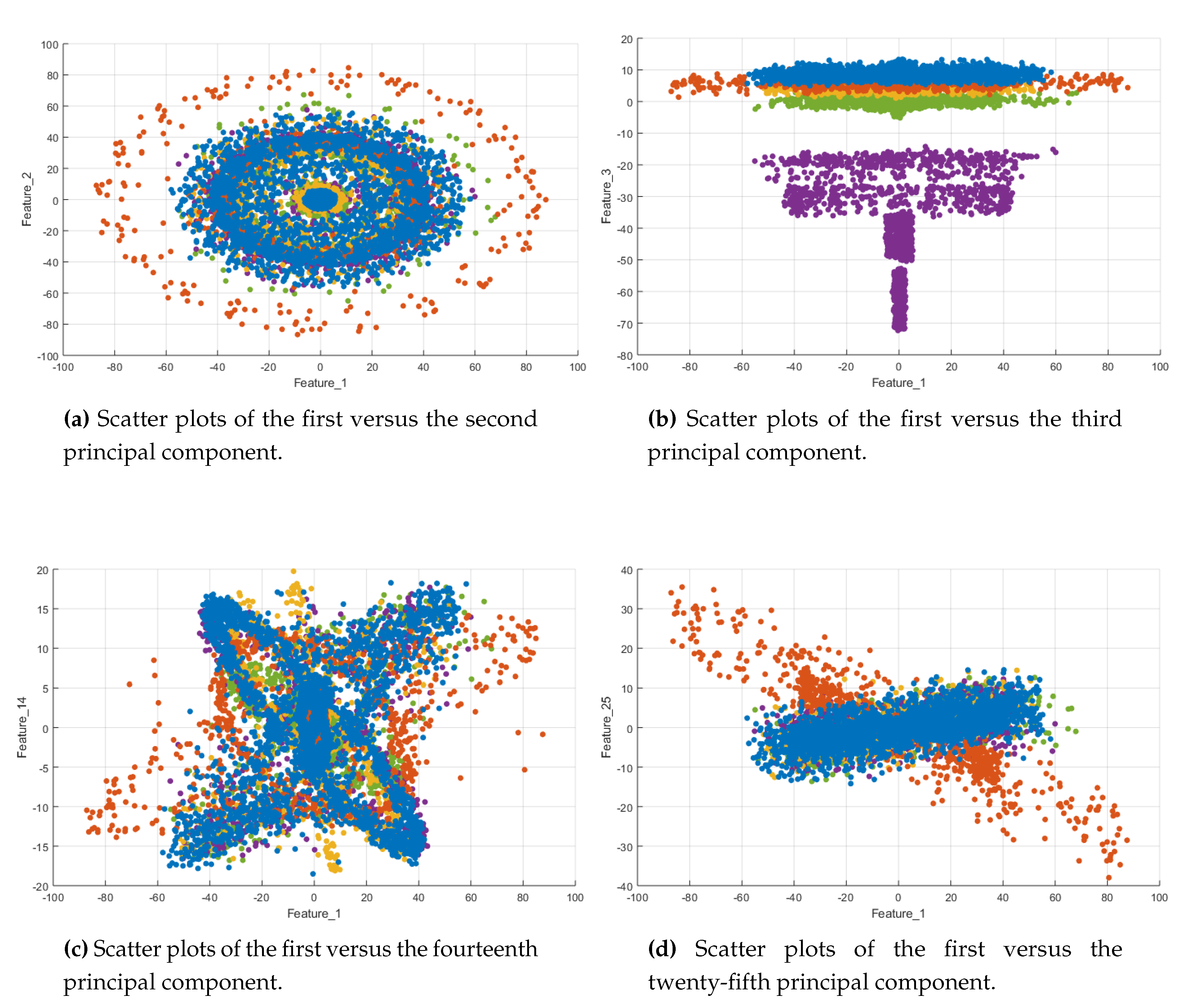

3.2. Column-Scaling and Principal Component Analysis (PCA)

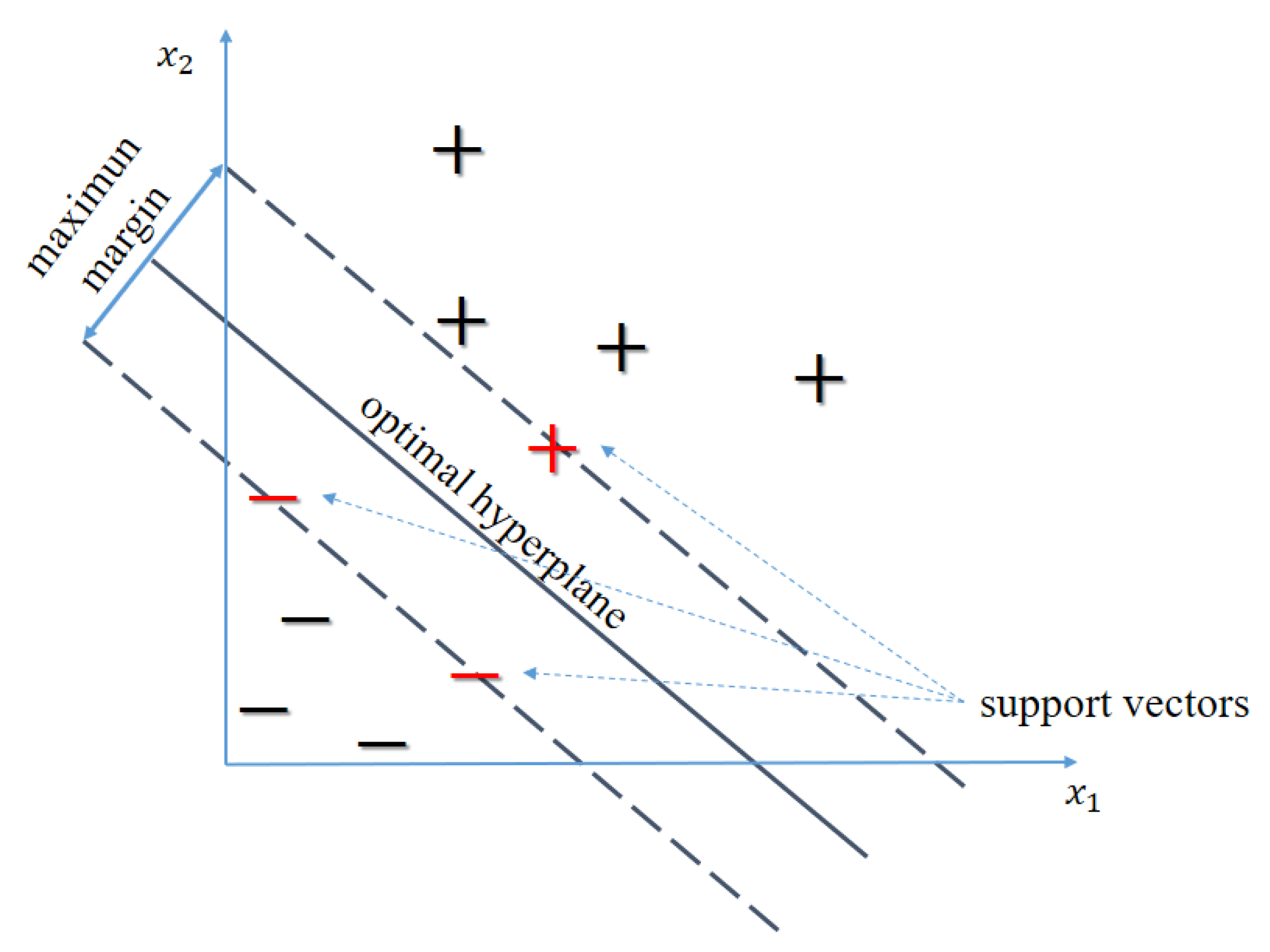

3.3. Machine-Learning Classifiers

3.3.1. k-Nearest Neighbor (k-NN)

3.3.2. Support Vector Machine (SVM)

3.4. k-Fold Cross-Validation
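The fold-based cross-validation named in this heading can be sketched as follows. The function name `k_fold_indices`, the shuffling seed, and the number of folds used in the example are hypothetical choices for illustration; the settings used in the experiments are not stated here.

```python
import random


def k_fold_indices(n_samples, n_folds, seed=0):
    """Yield (train, test) index lists; every sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into n_folds groups of nearly equal size.
    folds = [indices[f::n_folds] for f in range(n_folds)]
    for f in range(n_folds):
        test = folds[f]
        train = [i for g, fold in enumerate(folds) if g != f for i in fold]
        yield train, test


# Example: 10 samples split into 5 folds (illustrative sizes only).
splits = list(k_fold_indices(10, 5))
```

A classifier would be trained on each `train` list and scored on the matching `test` list, and the scores averaged over the folds.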

4. Results

4.1. Experimental Set-Up

- (i) 1245 tests with the original healthy bar for each amplitude.

- (ii) 415 tests with damage located at level 1 for each amplitude.

- (iii) 415 tests with damage located at level 2 for each amplitude.

- (iv) 415 tests with damage located at level 3 for each amplitude.

- (v) 415 tests with damage located at level 4 for each amplitude.

- (i) if we retain 85% of the variance, the first 443 principal components are needed out of the total number of columns. This represents a data reduction, leading to reduced memory requirements.

- (ii) if we retain 90% of the variance, the first 887 principal components are needed, which again reduces the data.

- (iii) if we retain 95% of the variance, the first 1770 principal components are needed. This still represents a significant reduction.
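The link between a retained-variance target and the number of principal components can be illustrated with a short sketch. It assumes the PCA eigenvalues (the variance explained by each component) are already available; the helper names and the toy spectrum are made up for illustration and are not the values from these experiments.

```python
from itertools import accumulate


def components_for_variance(eigenvalues, target):
    """Smallest number of leading components whose cumulative variance share >= target."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    for count, cumulative in enumerate(accumulate(ordered), start=1):
        if cumulative / total >= target:
            return count
    return len(ordered)


def reduction(n_components, n_columns):
    """Fraction of the original columns discarded by keeping n_components."""
    return 1.0 - n_components / n_columns


# Toy spectrum (made-up values): 5 columns, keep at least 85% of the variance.
spectrum = [5.0, 3.0, 1.0, 0.5, 0.5]
kept = components_for_variance(spectrum, 0.85)
saved = reduction(kept, len(spectrum))
```

In this toy case three components reach the target, so two of the five columns are discarded. The same calculation applied to the real eigenvalue spectrum gives the component counts listed above.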

4.2. Metrics for Evaluating Classification Models

- True positive (TP) is the number of positive cases that were correctly identified.

- False positive (FP) is the quantity of negative cases that were incorrectly classified as positive.

- True negative (TN) is defined as the sum of negative cases that were correctly classified.

- False negative (FN) is the total of positive cases that were incorrectly classified as negative.

- the green region is related to the true positive ($\mathrm{TP}_B$);

- the magenta region is related to the false positive ($\mathrm{FP}_B$). More precisely, $\mathrm{FP}_B$ is the sum of the elements in the second column except $BB$, i.e., $\mathrm{FP}_B = AB + CB + DB + EB$;

- the orange region is related to the false negative ($\mathrm{FN}_B$). More precisely, $\mathrm{FN}_B$ is the sum of the elements in the second row except $BB$, i.e., $\mathrm{FN}_B = BA + BC + BD + BE$; and finally,

- the cyan region is related to the true negative ($\mathrm{TN}_B$). More precisely, $\mathrm{TN}_B$ is the sum of all the elements of the confusion matrix except the ones in the second row and the second column.
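The four regions described above can be computed for any class of a multiclass confusion matrix with a few sums. The sketch below assumes rows are actual classes and columns are predicted classes; the function name and the 3-class matrix are hypothetical illustrations, not data from this study.

```python
def one_vs_rest_counts(confusion, index):
    """Return (TP, FP, FN, TN) for the class at position `index`.

    confusion[r][c] counts samples of actual class r predicted as class c.
    """
    n = len(confusion)
    tp = confusion[index][index]
    fp = sum(confusion[r][index] for r in range(n)) - tp  # rest of the column
    fn = sum(confusion[index]) - tp  # rest of the row
    total = sum(sum(row) for row in confusion)
    tn = total - tp - fp - fn  # everything outside that row and column
    return tp, fp, fn, tn


# Made-up 3-class matrix; index 1 plays the role of the second class.
toy = [
    [5, 1, 0],
    [2, 6, 1],
    [0, 3, 7],
]
tp, fp, fn, tn = one_vs_rest_counts(toy, 1)
```

For the second class of this toy matrix, the column remainder gives the false positives and the row remainder gives the false negatives, mirroring the magenta and orange regions.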

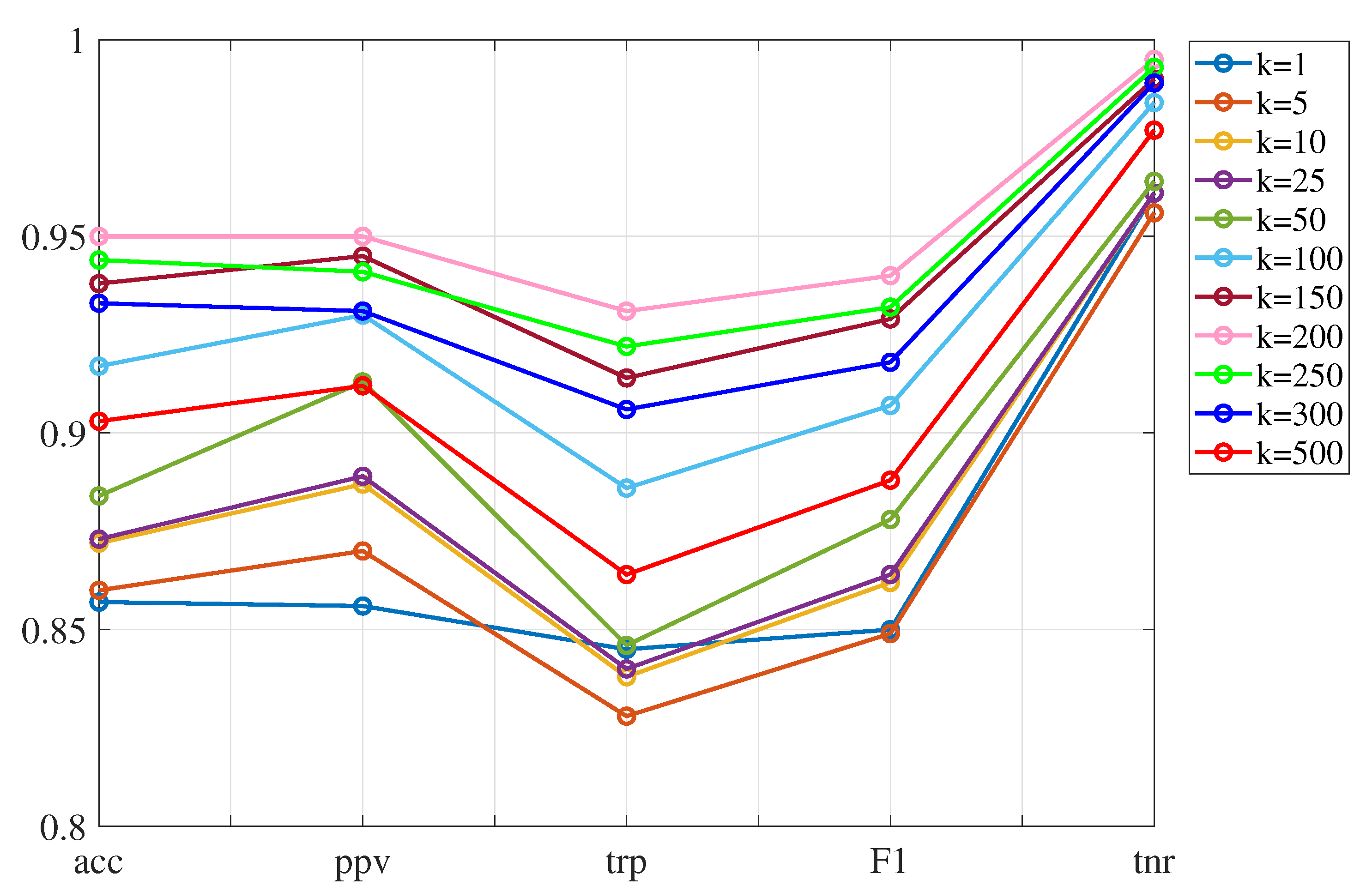

4.3. Results of k-NN Classification Method

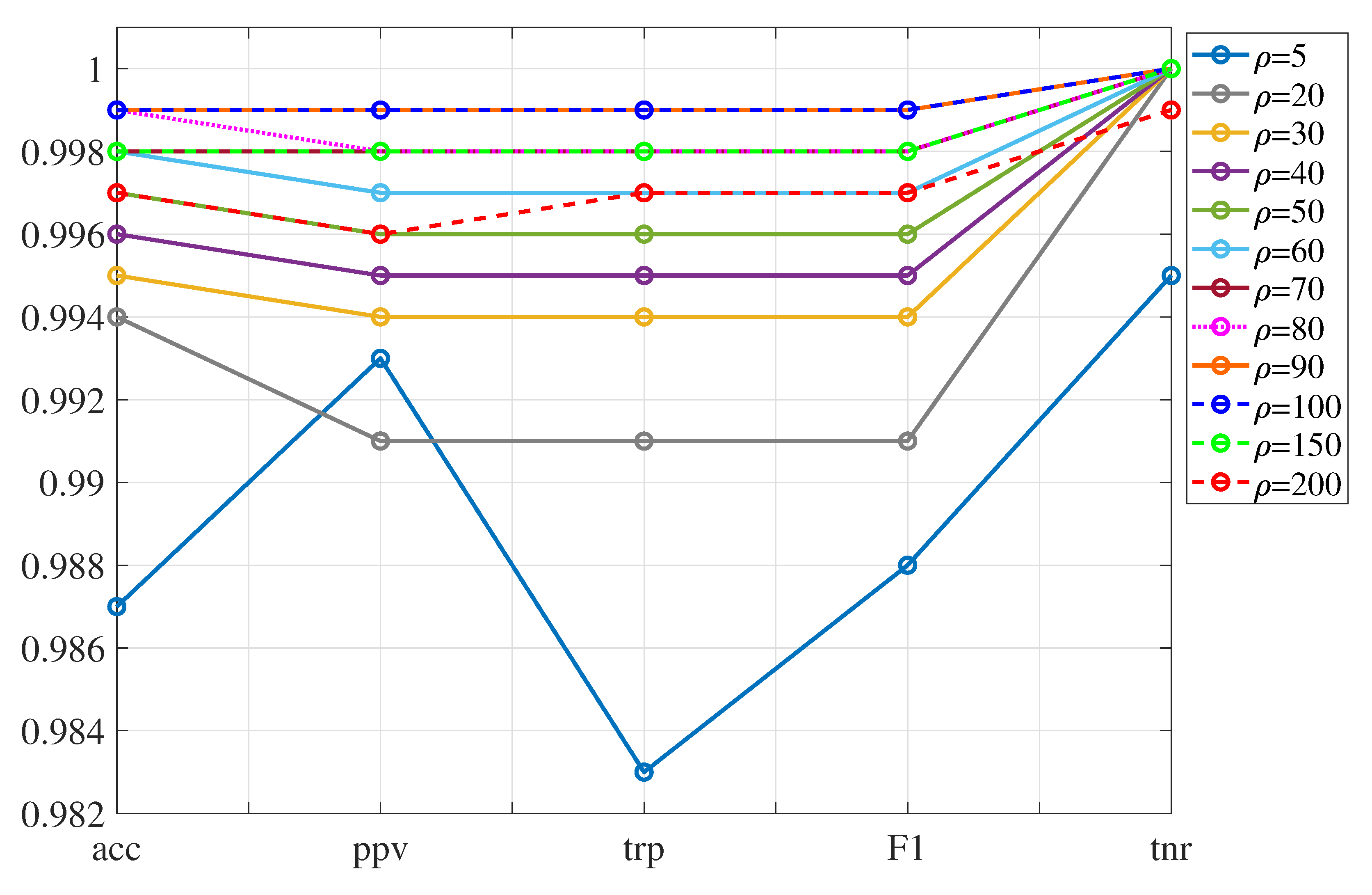

4.4. Results of SVM Classification Method

5. Conclusions

- (i) A vibration-response-only methodology has been conceived and a satisfactory experimental proof of concept has been conducted. However, future work is needed to validate the technology in a more realistic environment that takes into account varying environmental and operational conditions.

- (ii) The contribution of this work resides in how three-dimensional data (coming from different times, sensors, and experiments) are collected, arranged, scaled, transformed, and dimension-reduced following the general framework stated in Sections 3.1 and 3.2, and afterwards particularized for the specific application in Section 4.1.

- (iii) The damage detection and localization methodology with the quadratic SVM classifier, kernel scale 90, box constraint $C = 1$, and 443 principal components (85% of variance kept) performs very close to ideal, achieving in all indicators a result equal to or higher than 99.9%, with a very fast prediction speed (1700 obs/s) and a short training time (76 s).

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CS | column-scaling |
| FN | false negative |
| FP | false positive |
| k-NN | k nearest neighbors |
| IEPE | integrated electronic piezoelectric sensors |
| MAC | modal assurance criterion |
| PC | principal component |
| PCA | principal component analysis |
| SHM | structural health monitoring |
| SVM | support vector machine |
| TN | true negative |
| TP | true positive |
| WT | wind turbine |


| | | Predicted class | |
|---|---|---|---|
| | | Positive | Negative |
| Actual class | Positive | True positive (TP) | False negative (FN) |
| | Negative | False positive (FP) | True negative (TN) |

| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{\mathrm{TP}+\mathrm{TN}}{\mathrm{TP}+\mathrm{FP}+\mathrm{FN}+\mathrm{TN}}$ | The number of correct predictions made by the model divided by the total number of records. The best accuracy is 100%, which indicates that all predictions are correct. Accuracy alone does not tell the full story when working with a class-imbalanced data set. |
| Precision | $\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FP}}$ | Evaluates the quality of the positive predictions: the proportion of cases predicted as positive that are actually positive. |
| Sensitivity/Recall | $\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}}$ | The fraction of the positive cases that the model has been able to identify as positive (true positive rate). |
| F1-Score | $2\,\frac{\mathrm{Precision}\cdot\mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}}$ | The harmonic mean of precision and sensitivity. The score reaches its best value at 1 (perfect precision and sensitivity) and its worst at 0. |
| Specificity | $\frac{\mathrm{TN}}{\mathrm{TN}+\mathrm{FP}}$ | The specificity, or true negative rate, is the number of correct negative predictions divided by the total number of cases that are actually negative. It is the counterpart of sensitivity for the negative class: the proportion of negative cases predicted correctly. |

| Metric | Formula |
|---|---|
| Average Accuracy | |
| Average Precision | |
| Average Sensitivity/Recall | |
| Average F1-Score | |
| Average Specificity | |
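The averaged metrics listed above can be illustrated with a short sketch. It assumes that each average is the unweighted (macro) mean of the per-class one-vs-rest values; that choice is an assumption, since the formulas are not reproduced here. The function names and the 2-class matrix are hypothetical.

```python
def per_class_metrics(tp, fp, fn, tn):
    """Standard binary metrics computed from one-vs-rest counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "specificity": tn / (tn + fp),
    }


def macro_average(confusion):
    """Unweighted mean of each metric over classes (assumed averaging scheme).

    confusion[r][c] counts samples of actual class r predicted as class c.
    Assumes every class occurs and is predicted at least once (no zero division).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    sums = {}
    for c in range(n):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n)) - tp
        fn = sum(confusion[c]) - tp
        tn = total - tp - fp - fn
        for name, value in per_class_metrics(tp, fp, fn, tn).items():
            sums[name] = sums.get(name, 0.0) + value
    return {name: value / n for name, value in sums.items()}


# Made-up 2-class matrix for illustration.
averages = macro_average([[8, 2], [1, 9]])
```

With class-imbalanced data, a macro average weights every class equally, so a poorly detected minority class lowers the score more than it would in a sample-weighted average.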

| | | Predicted class | | | | |
|---|---|---|---|---|---|---|
| | | Class A | Class B | Class C | Class D | Class E |
| Actual class | Class A | AA | AB | AC | AD | AE |
| | Class B | BA | BB | BC | BD | BE |
| | Class C | CA | CB | CC | CD | CE |
| | Class D | DA | DB | DC | DD | DE |
| | Class E | EA | EB | EC | ED | EE |

| Variance | Number of PCs | Neighbors k | Accuracy | Precision | Recall | F1 score | Specificity |
|---|---|---|---|---|---|---|---|
| 85% | 443 | 1 | 0.857 | 0.860 | 0.846 | 0.853 | 0.950 |
| | | 5 | 0.856 | 0.869 | 0.820 | 0.844 | 0.953 |
| | | 10 | 0.867 | 0.891 | 0.827 | 0.858 | 0.956 |
| | | 25 | 0.868 | 0.898 | 0.828 | 0.862 | 0.955 |
| | | 50 | 0.874 | 0.912 | 0.831 | 0.870 | 0.958 |
| | | 100 | 0.911 | 0.928 | 0.879 | 0.903 | 0.981 |
| | | 150 | 0.933 | 0.942 | 0.908 | 0.925 | 0.988 |
| | | 200 | 0.946 | 0.947 | 0.924 | 0.936 | 0.993 |
| | | 250 | 0.940 | 0.938 | 0.917 | 0.927 | 0.991 |
| | | 300 | 0.930 | 0.929 | 0.903 | 0.915 | 0.988 |
| | | 500 | 0.899 | 0.909 | 0.859 | 0.884 | 0.976 |
| 90% | 887 | 1 | 0.857 | 0.856 | 0.845 | 0.850 | 0.961 |
| | | 5 | 0.860 | 0.870 | 0.828 | 0.849 | 0.956 |
| | | 10 | 0.872 | 0.887 | 0.838 | 0.862 | 0.961 |
| | | 25 | 0.873 | 0.889 | 0.840 | 0.864 | 0.961 |
| | | 50 | 0.884 | 0.913 | 0.846 | 0.878 | 0.964 |
| | | 100 | 0.917 | 0.930 | 0.886 | 0.907 | 0.984 |
| | | 150 | 0.938 | 0.945 | 0.914 | 0.929 | 0.990 |
| | | 200 | 0.950 | 0.950 | 0.931 | 0.940 | 0.995 |
| | | 250 | 0.944 | 0.941 | 0.922 | 0.932 | 0.993 |
| | | 300 | 0.933 | 0.931 | 0.906 | 0.918 | 0.989 |
| | | 500 | 0.903 | 0.912 | 0.864 | 0.888 | 0.977 |
| 95% | 1770 | 1 | 0.854 | 0.853 | 0.842 | 0.847 | 0.960 |
| | | 5 | 0.858 | 0.865 | 0.829 | 0.847 | 0.956 |
| | | 10 | 0.872 | 0.885 | 0.841 | 0.862 | 0.962 |
| | | 25 | 0.873 | 0.888 | 0.841 | 0.864 | 0.962 |
| | | 50 | 0.885 | 0.911 | 0.848 | 0.879 | 0.965 |
| | | 100 | 0.918 | 0.930 | 0.888 | 0.908 | 0.985 |
| | | 150 | 0.938 | 0.945 | 0.915 | 0.930 | 0.990 |
| | | 200 | 0.950 | 0.950 | 0.930 | 0.940 | 0.995 |
| | | 250 | 0.945 | 0.942 | 0.923 | 0.932 | 0.993 |
| | | 300 | 0.934 | 0.931 | 0.908 | 0.919 | 0.989 |
| | | 500 | 0.904 | 0.913 | 0.866 | 0.889 | 0.978 |

| Variance | Accuracy | Prediction Speed (obs/s) | Training Time (s) |
|---|---|---|---|
| 85% | 94.6% | 210 | 329 |
| 90% | 95.0% | 110 | 638 |
| 95% | 95.0% | 55 | 1251 |

| | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 0 | | | | | |
| 1 | | | | | |
| 2 | | | | | |
| 3 | | | | | |
| 4 | | | | | |

| Variance/Number of Components | Box Constraint C | Kernel Scale | Accuracy | Precision | Recall | F1 Score | Specificity |
|---|---|---|---|---|---|---|---|
| 85%/443 | 1 | 5 | 0.987 | 0.993 | 0.983 | 0.988 | 0.995 |
| | | 20 | 0.994 | 0.991 | 0.991 | 0.991 | 1.000 |
| | | 30 | 0.995 | 0.994 | 0.994 | 0.994 | 1.000 |
| | | 40 | 0.996 | 0.995 | 0.995 | 0.995 | 1.000 |
| | | 50 | 0.997 | 0.996 | 0.996 | 0.996 | 1.000 |
| | | 60 | 0.998 | 0.997 | 0.997 | 0.997 | 1.000 |
| | | 70 | 0.998 | 0.998 | 0.998 | 0.998 | 1.000 |
| | | 80 | 0.999 | 0.998 | 0.998 | 0.998 | 1.000 |
| | | 90 | 0.999 | 0.999 | 0.999 | 0.999 | 1.000 |
| | | 100 | 0.999 | 0.999 | 0.999 | 0.999 | 1.000 |
| | | 150 | 0.998 | 0.998 | 0.998 | 0.998 | 1.000 |
| | | 200 | 0.997 | 0.996 | 0.997 | 0.997 | 0.999 |
| | | 300 | 0.882 | 0.926 | 0.835 | 0.878 | 0.954 |
| 90%/887 | 1 | 5 | 0.987 | 0.993 | 0.982 | 0.988 | 0.995 |
| | | 20 | 0.991 | 0.988 | 0.988 | 0.988 | 1.000 |
| | | 30 | 0.995 | 0.993 | 0.993 | 0.993 | 1.000 |
| | | 40 | 0.996 | 0.995 | 0.995 | 0.995 | 1.000 |
| | | 50 | 0.997 | 0.997 | 0.997 | 0.997 | 1.000 |
| | | 60 | 0.998 | 0.997 | 0.997 | 0.997 | 1.000 |
| | | 70 | 0.998 | 0.997 | 0.997 | 0.997 | 1.000 |
| | | 80 | 0.998 | 0.997 | 0.997 | 0.997 | 1.000 |
| | | 90 | 0.998 | 0.998 | 0.998 | 0.998 | 1.000 |
| | | 100 | 0.998 | 0.998 | 0.998 | 0.998 | 1.000 |
| | | 150 | 0.998 | 0.998 | 0.998 | 0.998 | 0.999 |
| | | 200 | 0.997 | 0.996 | 0.996 | 0.996 | 0.999 |
| | | 300 | 0.883 | 0.927 | 0.837 | 0.880 | 0.955 |
| 95%/1770 | 1 | 5 | 0.986 | 0.993 | 0.981 | 0.987 | 0.994 |
| | | 20 | 0.990 | 0.987 | 0.986 | 0.986 | 1.000 |
| | | 30 | 0.994 | 0.992 | 0.992 | 0.992 | 1.000 |
| | | 40 | 0.996 | 0.994 | 0.994 | 0.994 | 1.000 |
| | | 50 | 0.997 | 0.996 | 0.996 | 0.996 | 1.000 |
| | | 60 | 0.997 | 0.997 | 0.997 | 0.997 | 1.000 |
| | | 70 | 0.998 | 0.997 | 0.997 | 0.997 | 1.000 |
| | | 80 | 0.998 | 0.997 | 0.998 | 0.998 | 1.000 |
| | | 90 | 0.998 | 0.998 | 0.998 | 0.998 | 1.000 |
| | | 100 | 0.998 | 0.997 | 0.998 | 0.998 | 1.000 |
| | | 150 | 0.998 | 0.997 | 0.998 | 0.997 | 0.999 |
| | | 200 | 0.996 | 0.996 | 0.996 | 0.996 | 0.999 |
| | | 300 | 0.997 | 0.997 | 0.997 | 0.997 | 0.999 |

| Variance | Kernel Scale | Accuracy | Prediction Speed (obs/s) | Training Time (s) |
|---|---|---|---|---|
| 85% | 90 | 0.999 | 1700 | 76 |
| | 100 | 0.999 | 1600 | 79 |
| 90% | 90 | 0.998 | 320 | 256 |
| | 100 | 0.998 | 580 | 364 |
| 95% | 90 | 0.998 | 100 | 676 |

| | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 0 | | | | | |
| 1 | | | | | |
| 2 | | | | | |
| 3 | | | | | |
| 4 | | | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vidal, Y.; Aquino, G.; Pozo, F.; Gutiérrez-Arias, J.E.M. Structural Health Monitoring for Jacket-Type Offshore Wind Turbines: Experimental Proof of Concept. Sensors 2020, 20, 1835. https://doi.org/10.3390/s20071835
