End-to-End Deep Learning Fusion of Fingerprint and Electrocardiogram Signals for Presentation Attack Detection

Abstract

1. Introduction

- Proposal of a novel end-to-end neural fusion architecture for fingerprints and ECG signals.

- A novel application of state-of-the-art EfficientNets for fingerprint PAD.

- Proposal of a 2D-convolutional neural network (2D-CNN) architecture for converting 1D ECG features into 2D images, yielding a better representation for ECG features compared to standard models based on fully-connected layers (FC) and 1D-convolutional neural networks (1D-CNNs).
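The idea in the last contribution, turning a 1D ECG feature vector into a 2D image that convolutional blocks can process, can be illustrated with a small dependency-free sketch. It is not the authors' implementation. The layer widths 128 and 1024 are borrowed from the "2 fc = (128, 1024)" entries in the configuration table later in the paper; the input length of 64, the 32 × 32 image shape, the ReLU activation, and the random weights are all assumptions made only for illustration.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense_relu(x, weights, bias):
    """Fully connected layer followed by ReLU (activation is an assumption)."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def to_image(vector, side):
    """Reshape a flat vector of length side*side into a side x side grid."""
    if len(vector) != side * side:
        raise ValueError("vector length must equal side * side")
    return [vector[r * side:(r + 1) * side] for r in range(side)]


def ecg_to_image(features, layers, side=32):
    """Project 1D ECG features through FC layers, then reshape to 2D."""
    x = features
    for weights, bias in layers:
        x = dense_relu(x, weights, bias)
    return to_image(x, side)


rng = random.Random(0)
ecg_features = [rng.gauss(0.0, 1.0) for _ in range(64)]  # hypothetical length
layers = [init_layer(64, 128, rng), init_layer(128, 1024, rng)]
image = ecg_to_image(ecg_features, layers)  # 32 x 32, assuming 1024 = 32 * 32
print(len(image), len(image[0]))
```

In a trained model the resulting grid would be passed to the 2D convolutional blocks; here it only shows how a 1024-element vector maps onto a square image.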

2. Proposed Methodology

2.1. Fingerprint Branch

2.2. ECG Branch

2.3. Fusion Module

2.4. Network Optimization

3. Experiments

3.1. Datasets

3.2. Experiment Setup

4. Results and Discussions

4.1. Experiments Using Fingerprint Modality Only

4.2. Fusion of Fingerprints and ECGs

4.3. Sensitivity Analysis of the Number of Training Subjects

4.4. Sensitivity with Respect to the Pre-Trained CNN

4.5. Sensitivity of the ECG Network Architecture

4.6. Classification Time

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Sensor | Resolution (dpi) | Image Size (pixel) | Training Live | Training Fake | Testing Live | Testing Fake |
|---|---|---|---|---|---|---|
| Green Bit | 500 | 500 × 500 | 1000 | 1000 | 1000 | 1500 |
| Biometrika (Hi Scan) | 1000 | 1000 × 1000 | 1000 | 1000 | 1000 | 1500 |
| Digital Persona | 500 | 252 × 324 | 1000 | 1000 | 1000 | 1500 |
| Crossmatch | 500 | 640 × 480 | 1500 | 1500 | 1500 | 1448 |

| Sensor | Training | Testing |

|---|---|---|

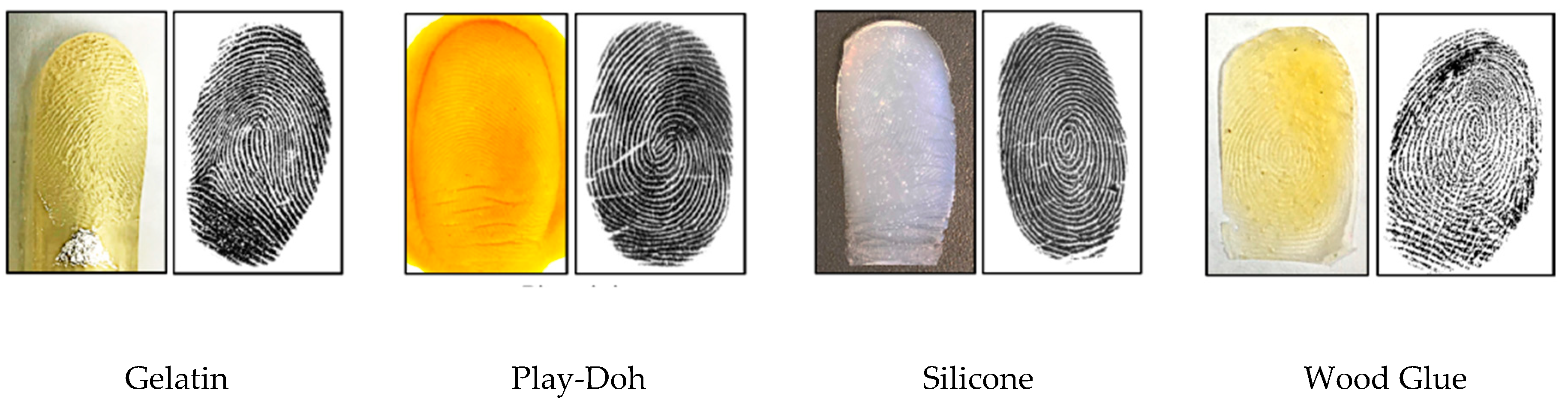

| Green Bit | Ecoflex, gelatin, latex, wood glue | Ecoflex, gelatin, latex, wood glue, Liquid Ecoflex, RTV |

| Biometrika | ||

| Digital Persona | ||

| Crossmatch | Body Double, Ecoflex, PlayDoh | Body Double, Ecoflex, PlayDoh, OOMOO, gelatin |

| | Fingerprint (Bona Fide) | Fingerprint (Artefact) | ECG (Bona Fide) |
|---|---|---|---|
| Number of samples per subject | 10 | 12 | 10 |
| Total number of samples | 700 | 840 | 700 |

| Algorithm | Green Bit | Biometrika | Digital Persona | Crossmatch | Overall |
|---|---|---|---|---|---|
| Nogueira (first place winner) | 95.40 | 94.36 | 93.72 | 98.10 | 95.51 |
| Proposed | 94.68 | 95.12 | 91.96 | 97.29 | 94.87 |
| Unina (second place winner) | 95.80 | 95.20 | 85.44 | 96.00 | 93.92 |
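The Overall column is not the plain mean of the four sensor accuracies. One reading that reproduces it for the Nogueira and Proposed rows is an average weighted by the number of test images per sensor (live plus fake, from the dataset table). The sketch below works through that arithmetic. The weighting is an assumption rather than something the tables state, and under the same assumption the Unina row comes out near 93.23 instead of the listed 93.92, so treat this as an illustration only.

```python
# Test-set sizes (live + fake) per sensor, taken from the dataset table.
TEST_SAMPLES = {
    "Green Bit": 1000 + 1500,
    "Biometrika": 1000 + 1500,
    "Digital Persona": 1000 + 1500,
    "Crossmatch": 1500 + 1448,
}


def weighted_accuracy(per_sensor_accuracy, sample_counts=TEST_SAMPLES):
    """Average the per-sensor accuracies, weighted by test-set size."""
    total = sum(sample_counts[s] for s in per_sensor_accuracy)
    weighted = sum(acc * sample_counts[s]
                   for s, acc in per_sensor_accuracy.items())
    return weighted / total


nogueira = {"Green Bit": 95.40, "Biometrika": 94.36,
            "Digital Persona": 93.72, "Crossmatch": 98.10}
proposed = {"Green Bit": 94.68, "Biometrika": 95.12,
            "Digital Persona": 91.96, "Crossmatch": 97.29}

print(f"{weighted_accuracy(nogueira):.2f}")  # 95.51
print(f"{weighted_accuracy(proposed):.2f}")  # 94.87
```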

| Biometric Modality | ECG Architecture | Average Accuracy % |
|---|---|---|
| Fingerprint | (No fusion) | 92.98 |
| Fingerprint + ECG | FC | 94.99 |
| Fingerprint + ECG | 1D-CNN | 94.84 |
| Fingerprint + ECG | 2D-CNN | 95.32 |

| ECG Architecture / Percentage of Subjects Used for Training | 20% | 30% | 50% | 70% | 80% |
|---|---|---|---|---|---|
| FC | 89.71 | 93.90 | 94.49 | 93.92 | 96.17 |
| 1D-CNN | 89.31 | 92.45 | 94.26 | 93.36 | 96.95 |
| 2D-CNN | 90.79 | 94.08 | 95.32 | 95.61 | 97.10 |
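Because the table varies the percentage of subjects used for training, the split is presumably made per subject, not per sample. A minimal sketch of such a subject-disjoint split follows. The count of 70 subjects is inferred from the sample table (700 samples at 10 per subject); the random shuffling, the seed, and the function name `split_subjects` are assumptions for illustration, not the authors' protocol.

```python
import random


def split_subjects(subject_ids, train_fraction, seed=0):
    """Split subject IDs into disjoint training and testing sets."""
    ids = sorted(set(subject_ids))
    rng = random.Random(seed)
    rng.shuffle(ids)
    n_train = round(len(ids) * train_fraction)
    return set(ids[:n_train]), set(ids[n_train:])


subjects = range(70)  # 700 samples / 10 samples per subject
for fraction in (0.2, 0.3, 0.5, 0.7, 0.8):
    train, test = split_subjects(subjects, fraction)
    # No subject contributes samples to both sets.
    assert train.isdisjoint(test)
    print(f"{fraction:.0%}: {len(train)} training, {len(test)} testing subjects")
```

Splitting by subject keeps every sample of a given person on one side, so test accuracy reflects performance on unseen individuals.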

| CNN Model | Architecture | #Parameters | Average Accuracy % |
|---|---|---|---|
| EfficientNet-B3 | FC | 10 M | 94.99 |
| EfficientNet-B3 | 1D-CNN | 10 M | 94.84 |
| EfficientNet-B3 | 2D-CNN | 10 M | 95.32 |
| Inception-v3 | FC | 21 M | 92.80 |
| Inception-v3 | 1D-CNN | 21 M | 94.32 |
| Inception-v3 | 2D-CNN | 21 M | 95.20 |
| DenseNet-169 | FC | 12 M | 91.28 |
| DenseNet-169 | 1D-CNN | 12 M | 92.92 |
| DenseNet-169 | 2D-CNN | 12 M | 93.29 |
| ResNet-50 | FC | 23 M | 93.56 |
| ResNet-50 | 1D-CNN | 23 M | 93.68 |
| ResNet-50 | 2D-CNN | 23 M | 94.00 |

| Configuration | Configuration Description | Accuracy % |
|---|---|---|
| 1 | 2 fc = (1024), 2 blocks MBConv (64, 168), fc = 128 | 91.90 |
| 2 | 2 fc = (128, 4096), 2 blocks MBConv (64), fc = 128 | 93.56 |
| 3 | 2 fc = (128, 1024), 1 block MBConv (32), fc = 128 | 94.82 |
| 4 | 2 fc = (128, 1024), 1 block MBConv (64), fc = 128 | 95.58 |
| 5 | 2 fc = (128, 1024), 1 block MBConv (128), fc = 128 | 95.07 |
| 6 | 2 fc = (128, 1024), 3 blocks MBConv (64), fc = 128 | 94.68 |
| 7 | 2 fc = (128, 1024), 3 blocks MBConv (64, 128, 128), fc = 128 | 95.20 |
| 8 (Proposed) | 2 fc = (128, 1024), 2 blocks MBConv (64, 128), fc = 128 | 95.32 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Citation: Jomaa, R.M.; Mathkour, H.; Bazi, Y.; Islam, M.S. End-to-End Deep Learning Fusion of Fingerprint and Electrocardiogram Signals for Presentation Attack Detection. Sensors 2020, 20, 2085. https://doi.org/10.3390/s20072085
