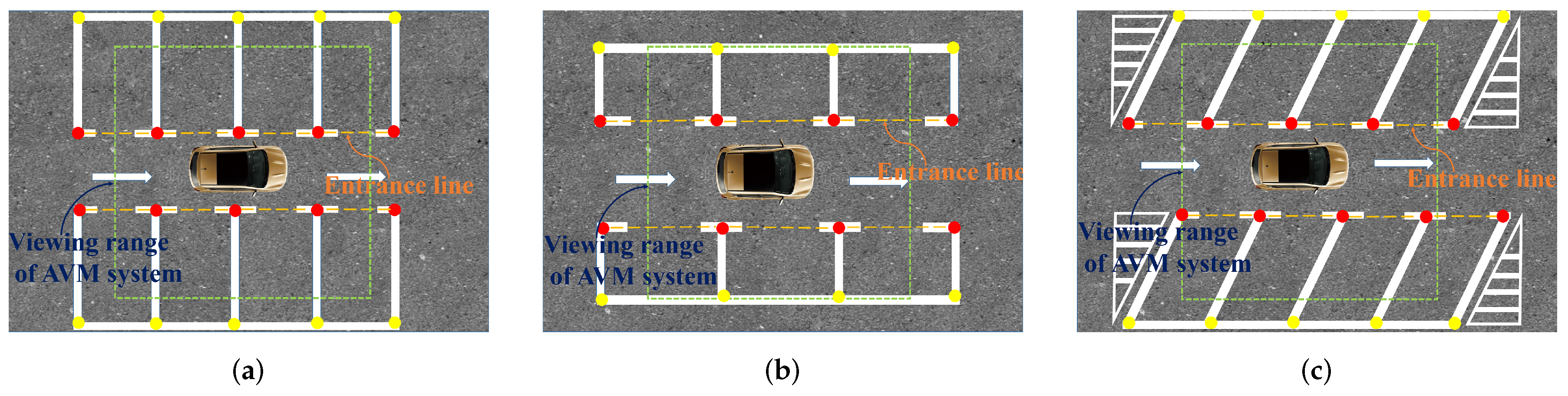

Figure 1.

Three typical kinds of parking slots. (a) perpendicular parking slots; (b) parallel parking slots; (c) slanted parking slots. A parking slot consists of four vertices, of which the paired marking points of the entrance line are marked with red dots, and the other two invisible vertices are marked with yellow dots. The entrance lines and the viewing range of an AVM system are also marked out.

Figure 2.

Overview of VPS-Net, which contains two modules: parking slot detection and occupancy classification. It takes an around-view image as input and outputs the positions of vacant parking slots to the decision module of the PAS.

Figure 3.

Marking points and parking slot heads. (a) shows the geometric relationship between the paired marking points and the parking slot head. Paired marking points are marked with green dots, and the parking slot head is marked with the red rectangle; (b) shows a variety of deformations of “T-shaped” or “L-shaped” marking points; (c) shows three kinds of the parking slot head belonging to classes “right-angled head”, “obtuse-angled head”, and “acute-angled head” respectively.

Figure 4.

The bounding boxes of the parking slot head and marking points. Each bounding box consists of three parts: coordinates of the center point, width, and height.

Figure 5.

The relationship between two marking points and the bounding box of the parking slot head. Panels (a)–(d) show the four cases of this relationship.

Figure 6.

Complete parking slot inference. (a–d) show the perpendicular parking slot, the parallel parking slot, the slanted parking slot with an acute angle, and the slanted parking slot with an obtuse angle, respectively. The depth and the parking angle depend on the slot type. Two vertices are the visible paired marking points, and the other two are invisible vertices.

Figure 7.

The orientation of the parking slot when the vehicle is around it. Two rectangular boxes formed by the entrance line with a depth d are marked with red and orange dotted lines. The rectangular box formed by the car model is marked with green dotted lines. The red arrow indicates the orientation of the parking slot.

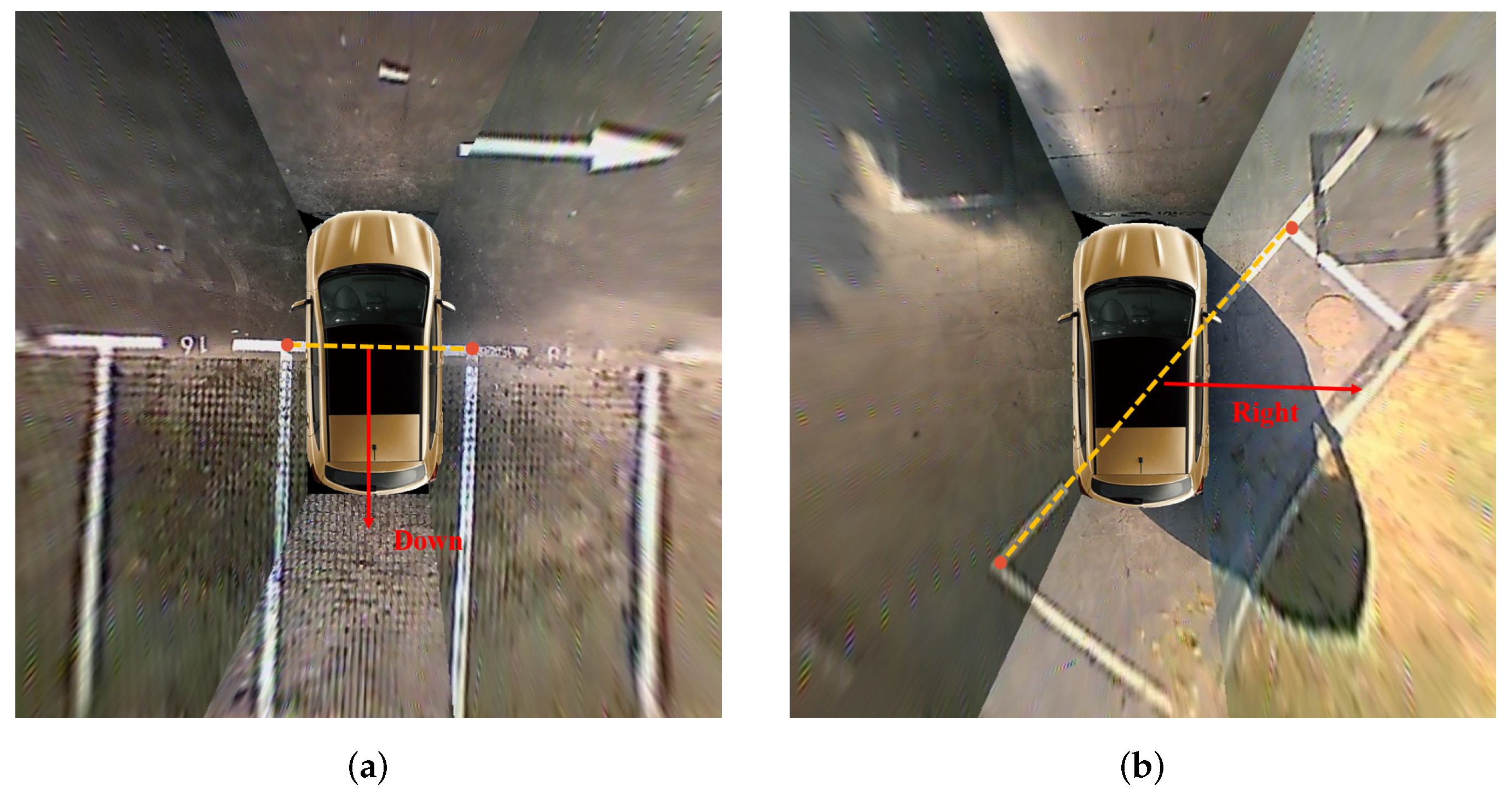

Figure 8.

The orientation of the parking slot when the vehicle is parking into it. (a) shows the orientation of the vertical parking slot. (b) shows the orientation of the parallel parking slot. The red arrow indicates the orientation of the parking slot. The yellow dotted line indicates the entrance line.

Figure 9.

Training samples for vacant parking slot classification. (a) a negative sample: a non-vacant regularized parking slot. (b) a positive sample: a vacant regularized parking slot.

Figure 10.

Examples from the datasets used in evaluation. Rows 1 and 2 show the annotations labeled for the ps2.0 and PSV datasets. Green indicates a vacant parking slot, and red indicates a non-vacant parking slot. Rows 3 and 4 show parking slot samples that were cropped and warped according to the annotations.

Figure 11.

AP histograms by three kinds of DCNN-based detectors.

Figure 12.

Detection results of the YOLOv3-based detector. The green, blue, and yellow bounding boxes indicate the “right-angled head”, the “acute-angled head”, and the “obtuse-angled head”, respectively. The red dot indicates the “marking point”.

Figure 13.

(a,b) show representative images in the ps2.0 test dataset where the vehicle is across parking slots.

Figure 14.

Precision-recall curves of different methods for parking slot occupancy classification.

Figure 15.

VPS-Net detection results. Green indicates a vacant parking slot, and red indicates a non-vacant parking slot. The rows show the three kinds of parking slots under various imaging conditions: “indoor”, “outdoor daylight”, “outdoor rainy”, “outdoor shadow”, “outdoor slanted”, and “outdoor street light”.

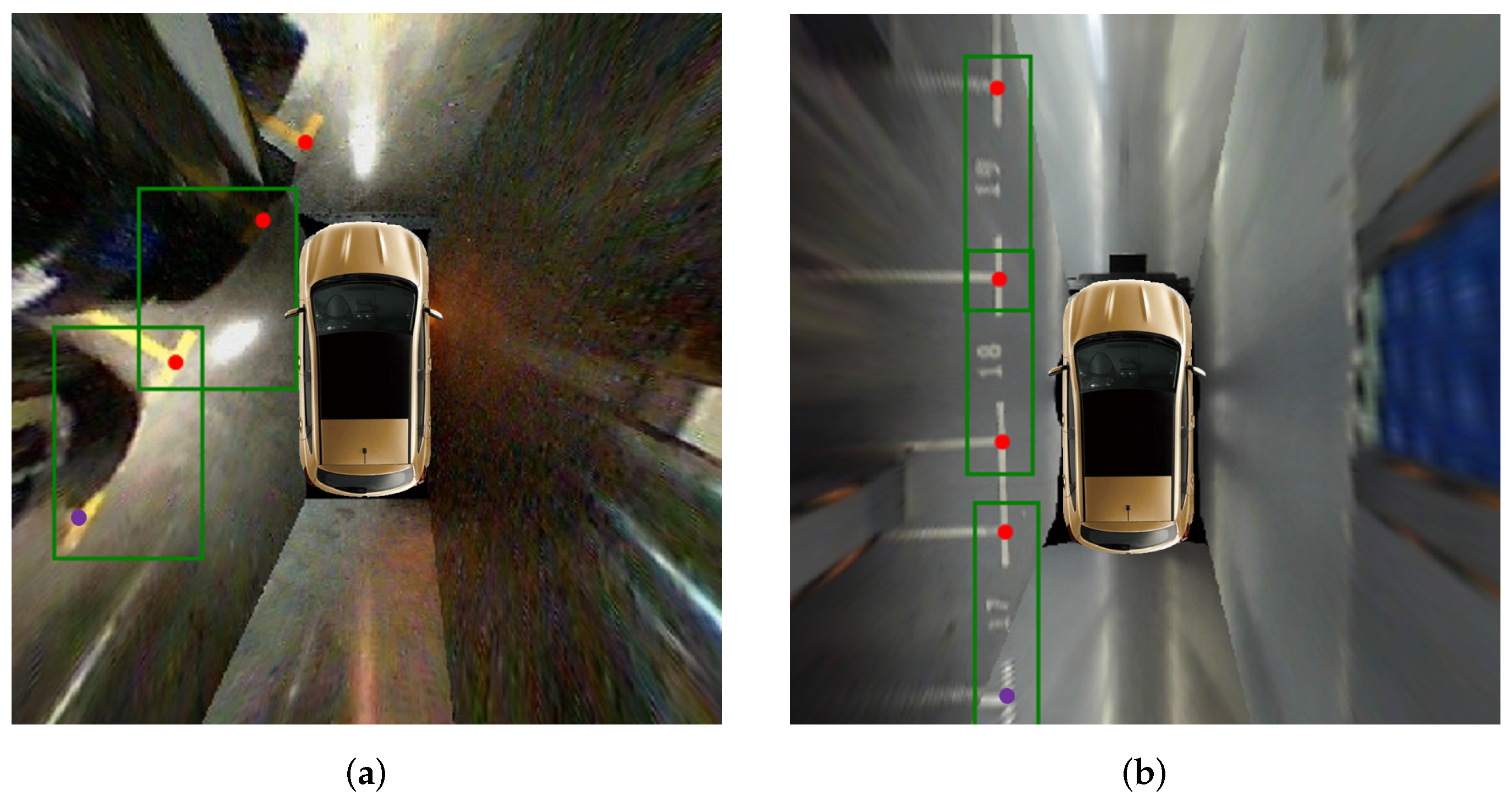

Figure 16.

Representative images from the PSV dataset in which the image quality of marking points is degraded. (a) shows a marking point that is far from the cameras. (b) shows a marking point that lies on the stitching lines. The green bounding box indicates the parking slot head. The red dot indicates the detected marking point, and the purple dot indicates the marking point inferred from the parking slot head.

Table 1.

Detailed description of the customized DCNN for parking slot occupancy classification.

| Layer Name | Kernel | Padding | Stride | Output (C × H × W) |
|---|---|---|---|---|
| Input | - | - | - | 3 × 46 × 120 |
| Conv1 | [3, 9] | [0, 0] | [1, 2] | 40 × 44 × 56 |
| Maxpool1 | [3, 3] | [0, 0] | [2, 2] | 40 × 21 × 27 |
| Conv2 | [3, 5] | [1, 0] | [1, 1] | 80 × 21 × 23 |
| Maxpool2 | [3, 3] | [1, 0] | [2, 2] | 80 × 11 × 11 |
| Conv3 | [3, 3] | [1, 1] | [1, 1] | 120 × 11 × 11 |
| Conv4 | [3, 3] | [1, 1] | [1, 1] | 160 × 11 × 11 |
| Maxpool3 | [3, 3] | [0, 0] | [2, 2] | 160 × 5 × 5 |
| Fc1 | - | - | - | 512 × 1 × 1 |
| Fc2 | - | - | - | 2 × 1 × 1 |
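The output sizes in Table 1 can be checked with the usual convolution and pooling size formula, out = floor((in + 2 × padding − kernel) / stride) + 1, applied separately to height and width. The sketch below is only a sanity check of the table's arithmetic, not the authors' implementation. It assumes floor rounding, assumes that kernel, padding, and stride are listed as [height, width], and labels the last pooling layer `Maxpool3` for readability.

```python
def out_size(size, kernel, padding, stride):
    """Output length along one axis of a conv/pool layer (floor rounding assumed)."""
    return (size + 2 * padding - kernel) // stride + 1

# (name, kernel [h, w], padding [h, w], stride [h, w], output channels);
# output channels is None for pooling layers, which keep the channel count.
LAYERS = [
    ("Conv1",    (3, 9), (0, 0), (1, 2), 40),
    ("Maxpool1", (3, 3), (0, 0), (2, 2), None),
    ("Conv2",    (3, 5), (1, 0), (1, 1), 80),
    ("Maxpool2", (3, 3), (1, 0), (2, 2), None),
    ("Conv3",    (3, 3), (1, 1), (1, 1), 120),
    ("Conv4",    (3, 3), (1, 1), (1, 1), 160),
    ("Maxpool3", (3, 3), (0, 0), (2, 2), None),
]

def trace_shapes(channels=3, height=46, width=120):
    """Return (name, C, H, W) after each layer, starting from the 3 x 46 x 120 input."""
    shapes = []
    for name, kernel, padding, stride, out_channels in LAYERS:
        if out_channels is not None:
            channels = out_channels
        height = out_size(height, kernel[0], padding[0], stride[0])
        width = out_size(width, kernel[1], padding[1], stride[1])
        shapes.append((name, channels, height, width))
    return shapes

for name, c, h, w in trace_shapes():
    print(f"{name:9s} {c} x {h} x {w}")
```

Under these assumptions the traced shapes agree with the Output column, ending at 160 × 5 × 5 before the two fully connected layers.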

Table 2.

Setting for hyper-parameters of VPS-Net.

| Parameter | Value (pixels) | Parameter | Value (pixels) |
|---|---|---|---|
| | 48 | | 67 |
| | 44 | | 129 |
| | 40 | | 250 |
| | 60 | | 125 |
| t | 190 | | 240 |
| | 90 | d | 250 |

Table 3.

Localization error of marking points and running time of three kinds of DCNN-based detectors.

| Method | Localization Error (pixels) | Localization Error (cm) | Running Time (ms) |
|---|---|---|---|
| Faster-RCNN [46] | 3.67 ± 2.32 | 6.12 ± 3.87 | 45 |
| SSD [43] | 1.51 ± 1.17 | 2.52 ± 1.95 | 26 |
| YOLOv3-based | 1.03 ± 0.65 | 1.72 ± 1.09 | 18 |
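The two error columns in Table 3 differ by a constant factor of about 1.67 cm per pixel. The sketch below reproduces the mean errors under the assumption that 600 pixels span 10 m in the around-view image. That scale is not stated in this section and is used here only because it matches the table. The name `CM_PER_PIXEL` and the helper function are illustrative.

```python
# Assumed scale (not stated in this section): 600 pixels span 10 m = 1000 cm.
CM_PER_PIXEL = 1000.0 / 600.0

def pixels_to_cm(pixels):
    """Convert a length in around-view image pixels to centimetres."""
    return pixels * CM_PER_PIXEL

# Mean localization errors in pixels, copied from Table 3.
mean_errors_px = {"Faster-RCNN": 3.67, "SSD": 1.51, "YOLOv3-based": 1.03}

for method, err_px in mean_errors_px.items():
    print(f"{method}: {err_px:.2f} px is about {pixels_to_cm(err_px):.2f} cm")
```

Because the table entries are already rounded, converting the reported standard deviations this way can differ from the printed centimetre values in the last digit.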

Table 4.

Parking slot detection performance of different methods in the ps2.0 test set.

| Method | #GT | #TP | #FP | Precision Rate | Recall Rate |
|---|---|---|---|---|---|
| PSD_L [10] | 2173 | 1845 | 27 | 98.55% | 84.89% |
| DeepPS [11] | 2173 | 2143 | 5 | 99.77% | 98.62% |
| VPS-Net | 2173 | 2157 | 9 | 99.58% | 99.26% |
| DeepPS (no across) | 2166 | 2137 | 5 | 99.77% | 98.66% |
| VPS-Net (no across) | 2166 | 2157 | 2 | 99.91% | 99.58% |
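The precision and recall rates in Table 4 appear to follow the standard definitions, precision = #TP / (#TP + #FP) and recall = #TP / #GT. This section does not state the formulas, so the sketch below treats them as an assumption and recomputes three rows from the listed counts.

```python
def precision_recall(num_gt, num_tp, num_fp):
    """Standard detection metrics from ground-truth, true-positive, and false-positive counts."""
    precision = num_tp / (num_tp + num_fp)
    recall = num_tp / num_gt
    return precision, recall

# (#GT, #TP, #FP) copied from Table 4.
rows = {
    "DeepPS": (2173, 2143, 5),
    "VPS-Net": (2173, 2157, 9),
    "VPS-Net (no across)": (2166, 2157, 2),
}

for method, counts in rows.items():
    p, r = precision_recall(*counts)
    print(f"{method}: precision {p:.2%}, recall {r:.2%}")
```

For these rows the recomputed rates match the table to two decimal places.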

Table 5.

Parking slot detection performance of different methods in the ps2.0 sub-test sets.

| Sub-Test Set | DeepPS [11] | VPS-Net | VPS-Net (No Across) |
|---|---|---|---|
| Indoor | p: 100.00%; r: 97.67% | p: 99.71%; r: 98.54% | p: 99.71%; r: 98.54% |
| Outdoor normal | p: 99.87%; r: 98.85% | p: 100.00%; r: 99.74% | p: 100.00%; r: 99.74% |
| Street light | p: 100.00%; r: 100.00% | p: 100.00%; r: 100.00% | p: 100.00%; r: 100.00% |
| Outdoor shadow | p: 99.86%; r: 99.14% | p: 100.00%; r: 99.86% | p: 100.00%; r: 99.86% |
| Outdoor rainy | p: 100.00%; r: 99.42% | p: 100.00%; r: 100.00% | p: 100.00%; r: 100.00% |
| Slanted | p: 96.15%; r: 92.59% | p: 90.12%; r: 90.12% | p: 98.65%; r: 98.65% |

Table 6.

Localization error of parking slots of different methods in the ps2.0 test set.

| Method | Localization Error (pixels) | Localization Error (cm) |
|---|---|---|
| PSD_L [10] | 3.64 ± 1.85 | 6.07 ± 3.09 |
| DeepPS [11] | 1.55 ± 1.04 | 2.58 ± 1.74 |
| VPS-Net | 1.03 ± 0.64 | 1.72 ± 1.07 |

Table 7.

Performance of parking slot occupancy classification of different methods in the self-annotated testing dataset.

| Method | Accuracy | Running Time (ms) | Model Size (MB) |
|---|---|---|---|
| HOG+SVM [37] | 92.54% | 2.13 | 0.04 |
| AlexNet [49] | 99.67% | 1.75 | 228.1 |
| VGG-16 [50] | 99.62% | 2.15 | 537.1 |
| ResNet-50 [51] | 98.55% | 5.10 | 44.8 |
| MobileNetV3-Small [52] | 98.55% | 6.21 | 5.1 |
| Customized DCNN | 99.48% | 0.81 | 9.4 |

Table 8.

Overall performance of VPS-Net in the ps2.0 test set.

| Step | Running Time (ms) | Precision Rate | Recall Rate |
|---|---|---|---|
| Marking points and heads detection | 18 | - | - |
| Complete parking slot inference | 0.5 | 99.91% | 99.58% |
| Parking slot occupancy classification | 2 | 99.86% | 99.62% |
| Total | 20.5 | 99.63% | 99.31% |

Table 9.

Parking slot detection performance of different methods in the PSV test set.

| Method | #GT | #TP | #FP | Precision Rate | Recall Rate |
|---|---|---|---|---|---|
| DeepPS [11] | 1593 | 1396 | 63 | 95.68% | 87.63% |
| VPS-Net (no Algorithm 1) | 1593 | 1483 | 50 | 96.73% | 93.09% |
| VPS-Net | 1593 | 1507 | 54 | 96.54% | 94.60% |