Choosing the Best Sensor Fusion Method: A Machine-Learning Approach

Abstract

1. Introduction

2. State of the Art

3. Method

3.1. Finding the Best Fusion Method

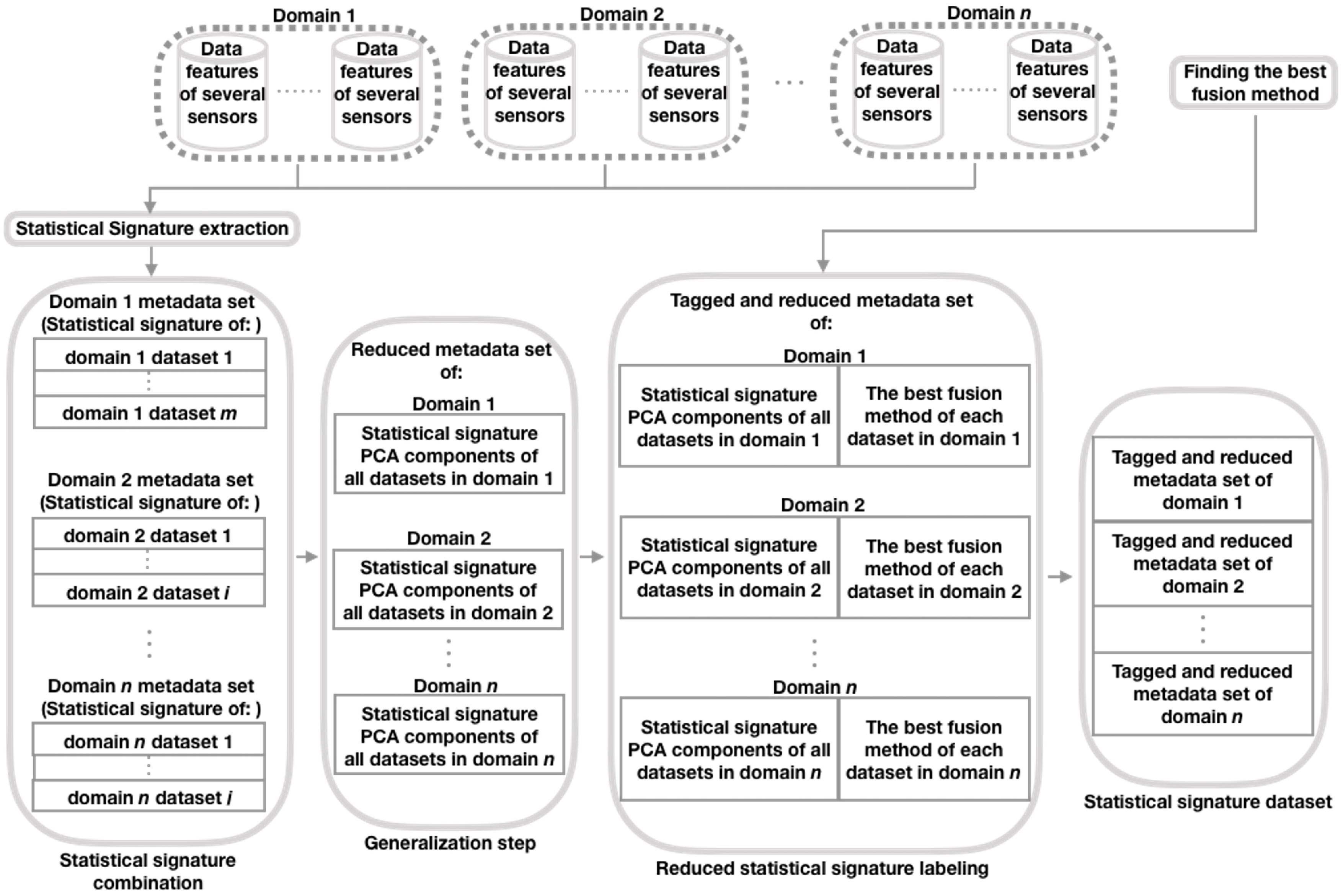

3.2. Statistical Signature Dataset

3.3. Prediction of the Best Fusion Method

4. Experimental Methodology and Results

4.1. Data Sets

4.1.1. SHA Data Sets

- The University of Texas at Dallas Multimodal Human Action Data set (UTD-MHAD) [51] was collected using a Microsoft Kinect camera and a wearable inertial sensor with a 3-axis accelerometer and a 3-axis gyroscope. This data set includes 27 actions performed by 8 subjects with 4 repetitions for each action. The actions were: 1:swipe left, 2:swipe right, 3:wave, 4:clap, 5:throw, 6:cross arm, 7:basketball shoot, 8:draw X mark, 9:draw circle CW, 10:draw circle CCW, 11:draw triangle, 12:bowling, 13:boxing, 14:baseball swing, 15:tennis swing, 16:arm curl, 17:tennis serve, 18:push, 19:knock, 20:catch, 21:pickup throw, 22:jog, 23:walk, 24:sit to stand, 25:stand to sit, 26:lunge, and 27:squat. Because this data set has just one gyroscope and one accelerometer (besides the Kinect, which we discarded), it contributed just one data set to the collection of derived data sets.

- The OPPORTUNITY Activity Recognition data set [52] (hereafter, Opportunity) is composed of daily activities recorded with multimodal sensors. It contains recordings of 4 subjects’ activities, including: 1:stand, 2:walk, 3:sit, and 4:lie. It has 2477 instances. Data was recorded by 5 Inertial Measurement Units (IMUs) placed on several parts of the subjects’ bodies: Back (Ba), Right Lower Arm (Rl), Right Upper Arm (Ru), Left Upper Arm (Lu), and Left Lower Arm (Ll). The 10 data sets derived from this original one are presented in Table 1.

- The Physical Activity Monitoring for Aging People Version 2 (PAMAP2) data set [53] was built from the data of three Colibri wireless IMUs: one IMU on the wrist of the dominant arm (Ha), one on the chest (Ch) and one on the dominant side’s ankle (An). Additionally, this data set included data from a Heart Rate monitor: BM-CS5SR from BM innovations GmbH. The data set considers 18 actions performed by nine subjects, including 1:lying, 2:sitting, 3:standing, 4:walking, 5:running, 6:cycling, 7:Nordic walking, 8:TV watching, 9:computer work, 10:car driving, 11:climbing stairs, 12:descending stairs, 13:vacuum cleaning, 14:ironing, 15:laundry folding, 16:house cleaning, 17:soccer playing, and 18:rope jumping. For the derived data sets, we considered the accelerometer and gyroscope data corresponding to the three IMUs for eight actions (1–4, 6, 7, 16, 17) performed by nine subjects. Taking pairs of sensors, we created seven new data sets (see Table 2); we did not use all nine possible combinations, for data set balancing reasons explained later.

- The Mobile Health (MHealth) data set [54] contains body motion and vital sign recordings from ten subjects performing 12 physical activities. The activities are 1:standing still, 2:sitting and relaxing, 3:lying down, 4:walking, 5:climbing stairs, 6:waist bends forward, 7:arms frontal elevation, 8:knees bending (crouching), 9:cycling, 10:jogging, 11:running, and 12:jump front and back. Raw data was collected using three Shimmer2 [55] wearable sensors. The sensors were placed on the subject’s chest (Ch), the right lower arm (Ra) and the left ankle (La), and they were fixed using elastic straps. For data set generation purposes, we took the acceleration and gyroscope data from the Ra sensor and the La sensor for the first eleven activities, giving us four new data sets (see Table 3).

- The Daily and Sports Activities (DSA) data set [56] is composed of motion sensor data captured from 19 daily and sports activities, each one carried out by eight subjects for 5 minutes. The sensors used were five Xsens MTx units placed on the Torso (To), Right Arm (Ra), Left Arm (La), Right Leg (Rl), and Left Leg (Ll). The activities were 1:sitting, 2:standing, 3:lying on back, 4:lying on right side, 5:climbing stairs, 6:descending stairs, 7:standing still in an elevator, 8:moving around in an elevator, 9:walking in a parking lot, 10:walking on a treadmill with a speed of 4 km/h in a flat position, 11:walking on a treadmill with a speed of 4 km/h in a 15 deg inclined position, 12:running on a treadmill with a speed of 8 km/h, 13:exercising on a stepper, 14:exercising on a cross trainer, 15:cycling on an exercise bike in a horizontal position, 16:cycling on an exercise bike in a vertical position, 17:rowing, 18:jumping, and 19:playing basketball. For data set generation purposes, we took some combinations of the accelerometer and gyroscope data corresponding to the five Xsens MTx units, giving us 17 new data sets (see Table 4).

- The Human Activities and Postural Transitions (HAPT) data set [57] is composed of motion sensor data of 12 daily activities, each one performed by 30 subjects wearing a smartphone (Samsung Galaxy S II) on the waist. The daily activities are 1:walking, 2:walking upstairs, 3:walking downstairs, 4:sitting, 5:standing, 6:laying, 7:stand to sit, 8:sit to stand, 9:sit to lie, 10:lie to sit, 11:stand to lie, and 12:lie to stand. For data set generation, we took the accelerometer and gyroscope data to create one new data set.
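The way derived data sets are obtained by pairing an accelerometer with a gyroscope across sensor placements can be illustrated with a short sketch. It is not the authors' code: the function name `derived_data_set_names` is hypothetical, and the naming pattern is inferred from the data set names used in this paper (for example, PAMAP2HaAccGy and PAMAP2AnAccHaGy). It only enumerates candidate pairings; which of them were kept is decided by the balancing criteria mentioned above.

```python
from itertools import product


def derived_data_set_names(prefix, placements):
    """Name every accelerometer/gyroscope pairing across sensor placements."""
    names = []
    for acc, gyro in product(placements, repeat=2):
        if acc == gyro:
            # Both signals come from the same unit, e.g. "PAMAP2HaAccGy".
            names.append(f"{prefix}{acc}AccGy")
        else:
            # Accelerometer and gyroscope come from different units.
            names.append(f"{prefix}{acc}Acc{gyro}Gy")
    return names


# Three PAMAP2 placements give nine candidate pairings; two MHealth
# placements give four.
pamap2 = derived_data_set_names("PAMAP2", ["Ha", "Ch", "An"])
mhealth = derived_data_set_names("MHealth", ["Ra", "La"])
print(len(pamap2), len(mhealth))
```

For PAMAP2 this enumerates the nine possible combinations mentioned above, of which seven were kept; for MHealth it yields exactly the four derived data sets.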

4.1.2. Gas Data Sets

4.1.3. GFE Data Sets

4.2. Feature Extraction

4.2.1. SHA Features

4.2.2. Gas Features

4.2.3. GFE Features

4.3. Finding the Best Configuration of the Fusion Methods

- We wrote Python code on the Jupyter Notebook platform [69] to create functions that implement the fusion strategy configurations studied here. These functions used three-fold cross-validation.

- For each of the data sets considered here, we obtained 24 accuracy samples for each configuration of the fusion strategies by executing each of the functions created in the previous step 24 times. Where a fusion configuration shuffles features, each of its 24 accuracies was the best accuracy obtained from 33 executions of the function that implements it, with a different combination of features attempted at each run. We used 33 runs to approximate the best accuracy that this fusion configuration can achieve.

- We performed the Friedman test [42] with the accuracy samples obtained in the previous step to see if there are significant differences (with a confidence level of 95%) between some pairs of these configurations.

- We performed the Holm test [43] with the results of the previous step to see if there is a significant difference (with a confidence level of 95%) between the Aggregation configuration (considered here the baseline for comparison purposes) and some other configuration.

- We identified the best configuration of the fusion methods for each data set considered here, based on the results of the previous step. Specifically, for each data set, from the configurations that presented a statistically significant difference (according to the results of the Holm test of the previous step), we identified the one that achieved the greatest of these differences. We consider this configuration to be the best. In Table 8, Table 9 and Table 10, we present the best configurations of the fusion methods for each of the data sets considered here.
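The selection rule of the last two steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `holm_reject` and `best_configuration` are hypothetical names, the p-values are assumed to come from pairwise comparisons of each configuration against Aggregation (the text does not specify how they were computed), and all numbers are invented for the example.

```python
def holm_reject(p_values, alpha=0.05):
    """Return, per hypothesis, whether Holm's step-down procedure rejects it."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is compared
        # against alpha / (m - k + 1).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # Once one hypothesis is retained, all larger p-values are too.
    return reject


def best_configuration(mean_accuracy, p_vs_baseline,
                       baseline="Aggregation", alpha=0.05):
    """Pick the configuration with the largest significant gain over the baseline."""
    names = list(p_vs_baseline)
    rejected = holm_reject([p_vs_baseline[n] for n in names], alpha)
    gains = {
        name: mean_accuracy[name] - mean_accuracy[baseline]
        for name, is_rejected in zip(names, rejected)
        if is_rejected and mean_accuracy[name] > mean_accuracy[baseline]
    }
    # With no significant improvement, the baseline is kept as the best option.
    return max(gains, key=gains.get) if gains else baseline


# Invented example values (assumptions, not results from the paper).
mean_accuracy = {"Aggregation": 0.90, "Voting": 0.93,
                 "MultiViewStacking": 0.95, "AdaBoost": 0.91}
p_vs_baseline = {"Voting": 0.020, "MultiViewStacking": 0.001, "AdaBoost": 0.400}
best = best_configuration(mean_accuracy, p_vs_baseline)
print(best)
```

Falling back to the baseline when nothing is rejected mirrors the labeling rule used later, where data sets without a significant difference are tagged with Aggregation.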

4.4. Recognition of the Best Configuration of the Fusion Strategies

- We created the Statistical signature data set following the procedure defined in Section 3.2. First, for each of the features (described in Section 4.2) of the data sets (presented in Section 4.1), we extracted its Statistical signature (defined in Section 3.2): the mean, the standard deviation, the maximum value, the minimum value, and the 25th, 50th, and 75th percentiles. Then, we created three meta-data sets, one for each domain considered here (SHA, Gas, and GFE), in which each row corresponds to the Statistical signature of one data set of that domain. Next, we used PCA [48] to reduce each of these three meta-data sets to 15 columns. We chose this number so that every domain has the same number of PCA components [48] and the cumulative explained variance of those components is at least 90% per domain (as indicated in Section 3.2): 98% for the SHA domain, 99% for the Gas domain, and 92% for the GFE domain. After that, each row of each of these three meta-data sets was labeled with the MultiviewStacking, MultiViewStackingNotShuffle, Voting, or AdaBoost tag, taken from the results in Table 8, Table 9 and Table 10. We chose these tables because they show the best configuration of the fusion strategies for each data set described in Section 4.1, that is, the configuration with the greatest significant difference with respect to the Aggregation configuration. Where no configuration differed significantly from Aggregation, we took Aggregation as the best option and tagged the data set with the Aggregation string. Finally, we combined the three meta-data sets by row, forming our Statistical signature data set (the generalized meta-data set). We present the final dimension and class distribution of the Statistical signature data set in Table 11.

- We balanced the Statistical signature data set because the number of observations in each class was different (class imbalance condition). This circumstance would bias a classifier towards the majority class. Even though there are different approaches to address the problem of class imbalance [60], we chose the up-sampling strategy, which increases the number of minority class samples by replicating existing minority class samples. In particular, we used an up-sampling implementation for Python: the Scikit-Learn resampling module [61]. This module was configured to resample the minority class with replacement so that the number of samples for this class matches that of the majority class. In Table 12, we present the new dimension and the even class distribution of the Statistical signature data set.

- To learn to recognize the best fusion configuration for a given data set that belongs to one of the domains considered here, we trained and validated the RFC classifier on the Statistical signature data set using a three-fold cross-validation strategy [50]. We measured the performance of this classifier in terms of accuracy, precision, recall, f1-score [70], and support. We summarize the performance of this classifier in Table 13 and Table 14.
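The first two steps above (extracting a Statistical signature and balancing the classes) can be sketched with the Python standard library. This is an illustrative sketch, not the authors' code, which relied on Scikit-Learn: the function names are hypothetical, the sample standard deviation and the inclusive quantile method are assumptions, and every class smaller than the largest one is treated as a minority class.

```python
import random
import statistics
from collections import Counter


def statistical_signature(values):
    """Seven summary statistics of one feature column."""
    q25, q50, q75 = statistics.quantiles(values, n=4, method="inclusive")
    return [
        statistics.mean(values),
        statistics.stdev(values),  # sample standard deviation (an assumption)
        max(values),
        min(values),
        q25,
        q50,
        q75,
    ]


def upsample(rows, labels, seed=0):
    """Resample each smaller class with replacement up to the majority size."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for label, count in counts.items():
        pool = [row for row, lab in zip(rows, labels) if lab == label]
        # Draw the missing samples from the class's own rows, with replacement.
        extra = rng.choices(pool, k=target - count)
        out_rows.extend(extra)
        out_labels.extend([label] * len(extra))
    return out_rows, out_labels


# Invented toy inputs (assumptions, not data from the paper).
signature = statistical_signature([1, 2, 3, 4, 5])
rows, labels = upsample([[0.1], [0.2], [0.3], [0.4]],
                        ["Voting", "Voting", "Voting", "AdaBoost"])
print(signature)
print(Counter(labels))
```

In the procedure described above, the signatures of all features of a data set form one row of the meta-data set, which is then reduced with PCA before labeling and balancing.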

4.5. Experimental Results and Discussion

5. Conclusions

Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 68–80.

2. Hall, D.L.; Llinas, J. An introduction to multisensor data fusion. Proc. IEEE 1997, 85, 6–23.

3. Bosse, E.; Roy, J.; Grenier, D. Data fusion concepts applied to a suite of dissimilar sensors. In Proceedings of the 1996 Canadian Conference on Electrical and Computer Engineering, Calgary, AB, Canada, 26–29 May 1996; Volume 2, pp. 692–695.

4. Lantz, B. Machine Learning with R; Packt Publishing Ltd.: Birmingham, UK, 2015.

5. Müller, A.C.; Guido, S. Introduction to Machine Learning with Python: A Guide for Data Scientists; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2016.

6. Liggins, M.E.; Hall, D.L.; Llinas, J. Handbook of Multisensor Data Fusion: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2009.

7. Garcia-Ceja, E.; Galván-Tejada, C.E.; Brena, R. Multi-view stacking for activity recognition with sound and accelerometer data. Inf. Fusion 2018, 40, 45–56.

8. Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

9. Lam, L.; Suen, S. Application of majority voting to pattern recognition: An analysis of its behavior and performance. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 1997, 27, 553–568.

10. Aguileta, A.A.; Brena, R.F.; Mayora, O.; Molino-Minero-Re, E.; Trejo, L.A. Multi-Sensor Fusion for Activity Recognition—A Survey. Sensors 2019, 19, 3808.

11. Aguileta, A.A.; Brena, R.F.; Mayora, O.; Molino-Minero-Re, E.; Trejo, L.A. Virtual Sensors for Optimal Integration of Human Activity Data. Sensors 2019, 19, 2017.

12. Huynh, T.; Fritz, M.; Schiele, B. Discovery of activity patterns using topic models. In Proceedings of the 10th International Conference on Ubiquitous Computing, Seoul, Korea, 21–24 September 2008; pp. 10–19.

13. Vergara, A.; Vembu, S.; Ayhan, T.; Ryan, M.A.; Homer, M.L.; Huerta, R. Chemical gas sensor drift compensation using classifier ensembles. Sens. Actuators B Chem. 2012, 166, 320–329.

14. De Almeida Freitas, F.; Peres, S.M.; de Moraes Lima, C.A.; Barbosa, F.V. Grammatical facial expressions recognition with machine learning. In Proceedings of the Twenty-Seventh International Flairs Conference, Pensacola Beach, FL, USA, 21–23 May 2014.

15. Friedman, N. Seapower as Strategy: Navies and National Interests. Def. Foreign Aff. Strateg. Policy 2002, 30, 10.

16. Li, W.; Wang, Z.; Wei, G.; Ma, L.; Hu, J.; Ding, D. A survey on multisensor fusion and consensus filtering for sensor networks. Discret. Dyn. Nat. Soc. 2015, 2015, 683701.

17. Atrey, P.K.; Hossain, M.A.; El Saddik, A.; Kankanhalli, M.S. Multimodal fusion for multimedia analysis: A survey. Multimed. Syst. 2010, 16, 345–379.

18. Wang, T.; Wang, X.; Hong, M. Gas Leak Location Detection Based on Data Fusion with Time Difference of Arrival and Energy Decay Using an Ultrasonic Sensor Array. Sensors 2018, 18, 2985.

19. Schuldhaus, D.; Leutheuser, H.; Eskofier, B.M. Towards big data for activity recognition: A novel database fusion strategy. In Proceedings of the 9th International Conference on Body Area Networks, London, UK, 29 September–1 October 2014; pp. 97–103.

20. Lai, X.; Liu, Q.; Wei, X.; Wang, W.; Zhou, G.; Han, G. A survey of body sensor networks. Sensors 2013, 13, 5406–5447.

21. Rad, N.M.; Kia, S.M.; Zarbo, C.; Jurman, G.; Venuti, P.; Furlanello, C. Stereotypical motor movement detection in dynamic feature space. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), Barcelona, Spain, 12–15 December 2016; pp. 487–494.

22. Kjærgaard, M.B.; Blunck, H. Tool support for detection and analysis of following and leadership behavior of pedestrians from mobile sensing data. Pervasive Mob. Comput. 2014, 10, 104–117.

23. Chen, C.; Jafari, R.; Kehtarnavaz, N. A survey of depth and inertial sensor fusion for human action recognition. Multimed. Tools Appl. 2017, 76, 4405–4425.

24. Yang, G.Z.; Yang, G. Body Sensor Networks; Springer: Berlin, Germany, 2006; Volume 1.

25. Huang, C.W.; Narayanan, S. Comparison of feature-level and kernel-level data fusion methods in multi-sensory fall detection. In Proceedings of the 2016 IEEE 18th International Workshop on Multimedia Signal Processing (MMSP), Montreal, QC, Canada, 21–23 September 2016; pp. 1–6.

26. Ling, J.; Tian, L.; Li, C. 3D human activity recognition using skeletal data from RGBD sensors. In International Symposium on Visual Computing; Springer: Berlin, Germany, 2016; pp. 133–142.

27. Guiry, J.J.; Van de Ven, P.; Nelson, J. Multi-sensor fusion for enhanced contextual awareness of everyday activities with ubiquitous devices. Sensors 2014, 14, 5687–5701.

28. Adelsberger, R.; Tröster, G. Pimu: A wireless pressure-sensing imu. In Proceedings of the 2013 IEEE Eighth International Conference on Intelligent Sensors, Sensor Networks and Information Processing, Melbourne, Australia, 2–5 April 2013; pp. 271–276.

29. Ravi, D.; Wong, C.; Lo, B.; Yang, G.Z. A deep learning approach to on-node sensor data analytics for mobile or wearable devices. IEEE J. Biomed. Health Inform. 2017, 21, 56–64.

30. Altini, M.; Penders, J.; Amft, O. Energy expenditure estimation using wearable sensors: A new methodology for activity-specific models. In Proceedings of the Conference on Wireless Health, San Diego, CA, USA, 23–25 October 2012; p. 1.

31. John, D.; Liu, S.; Sasaki, J.; Howe, C.; Staudenmayer, J.; Gao, R.; Freedson, P.S. Calibrating a novel multi-sensor physical activity measurement system. Physiol. Meas. 2011, 32, 1473.

32. Bernal, E.A.; Yang, X.; Li, Q.; Kumar, J.; Madhvanath, S.; Ramesh, P.; Bala, R. Deep Temporal Multimodal Fusion for Medical Procedure Monitoring Using Wearable Sensors. IEEE Trans. Multimed. 2018, 20, 107–118.

33. Liu, S.; Gao, R.X.; John, D.; Staudenmayer, J.W.; Freedson, P.S. Multisensor data fusion for physical activity assessment. IEEE Trans. Biomed. Eng. 2012, 59, 687–696.

34. Zappi, P.; Stiefmeier, T.; Farella, E.; Roggen, D.; Benini, L.; Troster, G. Activity recognition from on-body sensors by classifier fusion: Sensor scalability and robustness. In Proceedings of the 2007 3rd International Conference on Intelligent Sensors, Sensor Networks and Information, Melbourne, Australia, 3–6 December 2007; pp. 281–286.

35. Banos, O.; Damas, M.; Guillen, A.; Herrera, L.J.; Pomares, H.; Rojas, I.; Villalonga, C. Multi-sensor fusion based on asymmetric decision weighting for robust activity recognition. Neural Process. Lett. 2015, 42, 5–26.

36. Kolter, J.Z.; Maloof, M.A. Dynamic weighted majority: An ensemble method for drifting concepts. J. Mach. Learn. Res. 2007, 8, 2755–2790.

37. Fatima, I.; Fahim, M.; Lee, Y.K.; Lee, S. A genetic algorithm-based classifier ensemble optimization for activity recognition in smart homes. KSII Trans. Internet Inf. Syst. (TIIS) 2013, 7, 2853–2873.

38. Nguyen, T.D.; Ranganath, S. Facial expressions in American sign language: Tracking and recognition. Pattern Recognit. 2012, 45, 1877–1891.

39. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

40. Hosmer, D.W., Jr.; Lemeshow, S.; Sturdivant, R.X. Applied Logistic Regression; John Wiley & Sons: Hoboken, NJ, USA, 2013; Volume 398.

41. Murthy, S.K. Automatic construction of decision trees from data: A multi-disciplinary survey. Data Min. Knowl. Discov. 1998, 2, 345–389.

42. Friedman, M. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 1937, 32, 675–701.

43. Holm, S. A simple sequentially rejective multiple test procedure. Scand. J. Stat. 1979, 6, 65–70.

44. Fisher, R.A. Statistical Methods and Scientific Inference, 2nd ed.; Hafner Publishing Co.: New York, NY, USA, 1959.

45. Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30.

46. Hommel, G. A stagewise rejective multiple test procedure based on a modified Bonferroni test. Biometrika 1988, 75, 383–386.

47. Holland, B. On the application of three modified Bonferroni procedures to pairwise multiple comparisons in balanced repeated measures designs. Comput. Stat. Q. 1991, 6, 219–231.

48. Jolliffe, I. Principal Component Analysis; Springer: Berlin/Heidelberg, Germany, 2011.

49. Tipping, M.E.; Bishop, C.M. Mixtures of probabilistic principal component analyzers. Neural Comput. 1999, 11, 443–482.

50. Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification; John Wiley & Sons: Hoboken, NJ, USA, 2012.

51. Chen, C.; Jafari, R.; Kehtarnavaz, N. Utd-mhad: A multimodal dataset for human action recognition utilizing a depth camera and a wearable inertial sensor. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 168–172.

52. Roggen, D.; Calatroni, A.; Rossi, M.; Holleczek, T.; Förster, K.; Tröster, G.; Lukowicz, P.; Bannach, D.; Pirkl, G.; Ferscha, A.; et al. Collecting complex activity datasets in highly rich networked sensor environments. In Proceedings of the 2010 Seventh International Conference on Networked Sensing Systems (INSS), Kassel, Germany, 15–18 June 2010; pp. 233–240.

53. Reiss, A.; Stricker, D. Introducing a new benchmarked dataset for activity monitoring. In Proceedings of the 2012 16th International Symposium on Wearable Computers, Newcastle, UK, 18–22 June 2012; pp. 108–109.

54. Banos, O.; Villalonga, C.; Garcia, R.; Saez, A.; Damas, M.; Holgado-Terriza, J.A.; Lee, S.; Pomares, H.; Rojas, I. Design, implementation and validation of a novel open framework for agile development of mobile health applications. Biomed. Eng. Online 2015, 14, S6.

55. Burns, A.; Greene, B.R.; McGrath, M.J.; O’Shea, T.J.; Kuris, B.; Ayer, S.M.; Stroiescu, F.; Cionca, V. SHIMMER™—A wireless sensor platform for noninvasive biomedical research. IEEE Sensors J. 2010, 10, 1527–1534.

56. Altun, K.; Barshan, B.; Tunçel, O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010, 43, 3605–3620.

57. Reyes-Ortiz, J.L.; Oneto, L.; Samà, A.; Parra, X.; Anguita, D. Transition-aware human activity recognition using smartphones. Neurocomputing 2016, 171, 754–767.

58. Figaro USA Inc. Available online: http://www.figarosensor.com (accessed on 7 January 2020).

59. Dua, D.; Graff, C. UCI Machine Learning Repository. 2017. Available online: http://archive.ics.uci.edu/ml (accessed on 10 February 2020).

60. Tan, P.N.; Steinbach, M.; Kumar, V. Introduction to Data Mining; Pearson Addison-Wesley: Boston, MA, USA, 2005.

61. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

62. Banos, O.; Galvez, J.M.; Damas, M.; Pomares, H.; Rojas, I. Window size impact in human activity recognition. Sensors 2014, 14, 6474–6499.

63. Dernbach, S.; Das, B.; Krishnan, N.C.; Thomas, B.L.; Cook, D.J. Simple and complex activity recognition through smart phones. In Proceedings of the 2012 Eighth International Conference on Intelligent Environments, Guanajuato, Mexico, 26–29 June 2012; pp. 214–221.

64. Zhang, M.; Sawchuk, A.A. Motion Primitive-based Human Activity Recognition Using a Bag-of-features Approach. In Proceedings of the 2nd ACM SIGHIT International Health Informatics Symposium; ACM: New York, NY, USA, 2012; pp. 631–640.

65. Llobet, E.; Brezmes, J.; Vilanova, X.; Sueiras, J.E.; Correig, X. Qualitative and quantitative analysis of volatile organic compounds using transient and steady-state responses of a thick-film tin oxide gas sensor array. Sens. Actuators B Chem. 1997, 41, 13–21.

66. Muezzinoglu, M.K.; Vergara, A.; Huerta, R.; Rulkov, N.; Rabinovich, M.I.; Selverston, A.; Abarbanel, H.D. Acceleration of chemo-sensory information processing using transient features. Sens. Actuators B Chem. 2009, 137, 507–512.

67. Zhao, J.; Xie, X.; Xu, X.; Sun, S. Multi-view learning overview: Recent progress and new challenges. Inf. Fusion 2017, 38, 43–54.

68. Sun, S. A survey of multi-view machine learning. Neural Comput. Appl. 2013, 23, 2031–2038.

69. Kluyver, T.; Ragan-Kelley, B.; Pérez, F.; Granger, B.E.; Bussonnier, M.; Frederic, J.; Kelley, K.; Hamrick, J.B.; Grout, J.; Corlay, S.; et al. Jupyter Notebooks-a publishing format for reproducible computational workflows. In ELPUB; IOS Press: Amsterdam, The Netherlands, 2016; pp. 87–90.

70. Müller, H.; Müller, W.; Squire, D.M.; Marchand-Maillet, S.; Pun, T. Performance evaluation in content-based image retrieval: Overview and proposals. Pattern Recognit. Lett. 2001, 22, 593–601.

Table 1. Data sets derived from the Opportunity data set.

| Name | Sensors |
|---|---|
| OpportunityRlAccGy | Accelerometer and Gyroscope of the Rl |
| OpportunityBaAccLlGy | Ba Accelerometer and Ll Gyroscope |
| OpportunityBaAccLuGy | Ba Accelerometer and Lu Gyroscope |
| OpportunityBaAccRlGy | Ba Accelerometer and Rl Gyroscope |
| OpportunityBaAccRuGy | Ba Accelerometer and Ru Gyroscope |
| OpportunityLlAccBaGy | Ll Accelerometer and Ba Gyroscope |
| OpportunityLlAccGy | Accelerometer and Gyroscope of the Ll |
| OpportunityRuAccGy | Accelerometer and Gyroscope of the Ru |
| OpportunityRuAccLlGy | Ru Accelerometer and Ll Gyroscope |
| OpportunityRuAccLuGy | Ru Accelerometer and Lu Gyroscope |

Table 2. Data sets derived from the PAMAP2 data set.

| Name | Sensors |
|---|---|
| PAMAP2HaAccGy | Accelerometer and Gyroscope of the Ha |
| PAMAP2AnAccGy | Accelerometer and Gyroscope of the An |
| PAMAP2AnAccHaGy | An Accelerometer and Ha Gyroscope |
| PAMAP2ChAccGy | Accelerometer and Gyroscope of the Ch |
| PAMAP2ChAccHaGy | Ch Accelerometer and Ha Gyroscope |
| PAMAP2HaAccAnGy | Ha Accelerometer and An Gyroscope |
| PAMAP2HaAccChGy | Ha Accelerometer and Ch Gyroscope |

Table 3. Data sets derived from the MHealth data set.

| Name | Sensors |
|---|---|
| MHealthRaAccGy | Accelerometer and Gyroscope of the Ra |
| MHealthLaAccGy | Accelerometer and Gyroscope of the La |
| MHealthLaAccRaGy | La Accelerometer and Ra Gyroscope |
| MHealthRaAccLaGy | Ra Accelerometer and La Gyroscope |

Table 4. Data sets derived from the DSA data set.

| Name | Sensors |
|---|---|
| DSALaAccLlGy | La Accelerometer and Ll Gyroscope |
| DSALaAccRlGy | La Accelerometer and Rl Gyroscope |
| DSALlAccLaGy | Ll Accelerometer and La Gyroscope |
| DSALlAccRaGy | Ll Accelerometer and Ra Gyroscope |
| DSALlAccRlGy | Ll Accelerometer and Rl Gyroscope |
| DSARaAccRlGy | Ra Accelerometer and Rl Gyroscope |
| DSARlAccLaGy | Rl Accelerometer and La Gyroscope |
| DSARlAccLlGy | Rl Accelerometer and Ll Gyroscope |
| DSARlAccRaGy | Rl Accelerometer and Ra Gyroscope |
| DSARlAccToGy | Rl Accelerometer and To Gyroscope |
| DSARaAccGy | Accelerometer and Gyroscope of the Ra |
| DSALaAccGy | Accelerometer and Gyroscope of the La |
| DSALlAccGy | Accelerometer and Gyroscope of the Ll |
| DSARlAccGy | Accelerometer and Gyroscope of the Rl |
| DSAToAccGy | Accelerometer and Gyroscope of the To |
| DSAToAccLlGy | To Accelerometer and Ll Gyroscope |
| DSAToAccRaGy | To Accelerometer and Ra Gyroscope |

| Name | Sensors |
|---|---|
| S7S8gas | Gas sensors 7 and 8 |
| S6S16gas | Gas sensors 6 and 16 |
| S12S15gas | Gas sensors 12 and 15 |
| S10S15gas | Gas sensors 10 and 15 |
| S5S15gas | Gas sensors 5 and 15 |
| S1S2gas | Gas sensors 1 and 2 |
| S3S16gas | Gas sensors 3 and 16 |
| S9S15gas | Gas sensors 9 and 15 |
| S2S15gas | Gas sensors 2 and 15 |
| S13S15gas | Gas sensors 13 and 15 |
| S8S15gas | Gas sensors 8 and 15 |
| S3S15gas | Gas sensors 3 and 15 |
| S13S16gas | Gas sensors 13 and 16 |
| S4S16gas | Gas sensors 4 and 16 |
| S5S6gas | Gas sensors 5 and 6 |
| S10S16gas | Gas sensors 10 and 16 |
| S11S16gas | Gas sensors 11 and 16 |
| S1S16gas | Gas sensors 1 and 16 |
| S7S16gas | Gas sensors 7 and 16 |
| S8S16gas | Gas sensors 8 and 16 |
| S11S15gas | Gas sensors 11 and 15 |
| S9S10gas | Gas sensors 9 and 10 |
| S11S12gas | Gas sensors 11 and 12 |
| S14S15gas | Gas sensors 14 and 15 |
| S13S14gas | Gas sensors 13 and 14 |
| S1S15gas | Gas sensors 1 and 15 |
| S4S15gas | Gas sensors 4 and 15 |
| S3S4gas | Gas sensors 3 and 4 |
| S5S16gas | Gas sensors 5 and 16 |
| S14S16gas | Gas sensors 14 and 16 |
| S2S16gas | Gas sensors 2 and 16 |
| S15S16gas | Gas sensors 15 and 16 |
| S12S16gas | Gas sensors 12 and 16 |
| S7S15gas | Gas sensors 7 and 15 |
| S9S16gas | Gas sensors 9 and 16 |
| S6S15gas | Gas sensors 6 and 15 |

| Group Name | Facial Points |
|---|---|
| V1 | 17, 27, 10, 89, 2, 39, 57, 51, 48, 54, 12 |
| V2 | 16, 36, 1, 41, 9, 42, 69, 40, 43, 85, 50, 75, 25, 37, 21, 72, 58, 48, 77, 54 |
| V3 | 95, 31, 96, 32, 88, 14, 11, 13, 61, 67, 51, 58, 97, 98, 27, 10, 12, 15, 62, 83, 66 |
| V4 | 91, 3, 18, 73, 69, 39, 42, 44, 49, 59, 56, 86, 90, 68, 6, 70, 63, 80, 78 |
| V5 | 24, 32, 46, 28, 33, 80, 39, 44, 61, 63, 59, 55, 92, 20, 23, 74, 41, 49, 89, 53 |

Names of the data sets created with each group of points:

| V1 | V2 | V3 | V4 | V5 |
|---|---|---|---|---|
| affirmativeV1 | affirmativeV2 | affirmativeV3 | affirmativeV4 | affirmativeV5 |
| conditionalV1 | conditionalV2 | conditionalV3 | conditionalV4 | conditionalV5 |
| doubts_questionV1 | doubts_questionV2 | doubts_questionV3 | doubts_questionV4 | doubts_questionV5 |
| emphasisV1 | emphasisV2 | emphasisV3 | emphasisV4 | emphasisV5 |
| relativeV1 | relativeV2 | relativeV3 | relativeV4 | relativeV5 |
| topicsV1 | topicsV2 | topicsV3 | topicsV4 | topicsV5 |
| Wh_questionsV1 | Wh_questionsV2 | Wh_questionsV3 | Wh_questionsV4 | Wh_questionsV5 |
| yn_questionsV1 | yn_questionsV2 | yn_questionsV3 | yn_questionsV4 | yn_questionsV5 |

| SHA | Voting (Shuffled Features) | Voting | Voting All Features CART-LR-RFC | Multi-View Stacking (Shuffle) | Multi-View Stacking | Multi-View Stacking All Features CART-LR-RFC | AdaBoost |

|---|---|---|---|---|---|---|---|

| DSARlAccRaGy | ✔ | ||||||

| PAMAP2HaAccGy | |||||||

| OpportunityLlAccGy | |||||||

| PAMAP2HaAccAnGy | |||||||

| OpportunityRlAccGy | |||||||

| DSALaAccRlGy | ✔ | ||||||

| DSALlAccLaGy | ✔ | ||||||

| DSALlAccRaGy | ✔ | ||||||

| OpportunityRuAccLuGy | ✔ | ||||||

| DSARlAccToGy | ✔ | ||||||

| DSALlAccRlGy | ✔ | ||||||

| DSARaAccRlGy | ✔ | ||||||

| DSARlAccLlGy | ✔ | ||||||

| DSALaAccGy | |||||||

| HAPT | ✔ | ||||||

| DSALlAccGy | ✔ | ||||||

| MHealthLaAccRaGy | ✔ | ||||||

| DSARaAccGy | ✔ | ||||||

| OpportunityBaAccLuGy | ✔ | ||||||

| OpportunityRuAccLlGy | |||||||

| MHealthRaAccLaGy | ✔ | ||||||

| OpportunityLlAccBaGy | |||||||

| DSARlAccGy | |||||||

| MHealthRaAccGy | ✔ | ||||||

| DSALaAccLlGy | ✔ | ||||||

| DSAToAccRaGy | ✔ | ||||||

| OpportunityBaAccLlGy | |||||||

| OpportunityBaAccRlGy | ✔ | ||||||

| PAMAP2ChAccHaGy | |||||||

| OpportunityBaAccRuGy | ✔ | ||||||

| PAMAP2AnAccHaGy | ✔ | ||||||

| OpportunityRuAccGy | |||||||

| PAMAP2ChAccGy | |||||||

| DSAToAccLlGy | ✔ | ||||||

| MHealthLaAccGy | ✔ | ||||||

| PAMAP2HaAccChGy | ✔ | ||||||

| PAMAP2AnAccGy | |||||||

| UTD-MHAD | |||||||

| DSARlAccLaGy | ✔ | ||||||

| DSAToAccGy | ✔ |

| Gas | Voting (Shuffled Features) | Voting | Voting All Features CART-LR-RFC | Multi-View Stacking (Shuffle) | Multi-View Stacking | Multi-View Stacking All Features CART-LR-RFC | AdaBoost |
|---|---|---|---|---|---|---|---|
| S7S8gas | ✔ | | | | | | |
| S6S16gas | | | | | | | |
| S12S15gas | | | | | | | |
| S10S15gas | | | | | | | |
| S5S15gas | | | | | | | |
| S1S2gas | | | | | | | |
| S3S16gas | | | | | | | |
| S9S15gas | | | | | | | |
| S2S15gas | | | | | | | |
| S13S15gas | ✔ | | | | | | |
| S8S15gas | ✔ | | | | | | |
| S3S15gas | ✔ | | | | | | |
| S13S16gas | ✔ | | | | | | |
| S4S16gas | ✔ | | | | | | |
| S5S6gas | | | | | | | |
| S10S16gas | | | | | | | |
| S11S16gas | ✔ | | | | | | |
| S1S16gas | | | | | | | |
| S7S16gas | | | | | | | |
| S8S16gas | ✔ | | | | | | |
| S11S15gas | ✔ | | | | | | |
| S9S10gas | ✔ | | | | | | |
| S11S12gas | | | | | | | |
| S14S15gas | ✔ | | | | | | |
| S13S14gas | | | | | | | |
| S1S15gas | | | | | | | |
| S4S15gas | ✔ | | | | | | |
| S3S4gas | | | | | | | |
| S5S16gas | ✔ | | | | | | |
| S14S16gas | ✔ | | | | | | |
| S2S16gas | | | | | | | |
| S15S16gas | ✔ | | | | | | |
| S12S16gas | ✔ | | | | | | |
| S7S15gas | ✔ | | | | | | |
| S9S16gas | | | | | | | |
| S6S15gas | | | | | | | |

| GFE | Voting (Shuffled Features) | Voting | Voting All Features CART-LR-RFC | Multi-View Stacking (Shuffle) | Multi-View Stacking | Multi-View Stacking All Features CART-LR-RFC | AdaBoost |
|---|---|---|---|---|---|---|---|
| emphasisV4 | | | | | | | |
| yn_questionV1 | | | | | | | |
| wh_questionV3 | ✔ | | | | | | |
| wh_questionV2 | ✔ | | | | | | |
| yn_questionV5 | | | | | | | |
| doubt_questionV1 | ✔ | | | | | | |
| wh_questionV5 | ✔ | | | | | | |
| emphasisV3 | | | | | | | |
| conditionalV5 | ✔ | | | | | | |
| conditionalV1 | ✔ | | | | | | |
| emphasisV1 | | | | | | | |
| conditionalV3 | ✔ | | | | | | |
| emphasisV5 | | | | | | | |
| relativeV3 | ✔ | | | | | | |
| topicsV4 | ✔ | | | | | | |
| topicsV5 | ✔ | | | | | | |
| doubt_questionV5 | ✔ | | | | | | |
| wh_questionV1 | ✔ | | | | | | |
| affirmativeV3 | | | | | | | |
| yn_questionV3 | | | | | | | |
| topicsV2 | ✔ | | | | | | |
| doubt_questionV2 | ✔ | | | | | | |
| emphasisV2 | | | | | | | |
| doubt_questionV3 | ✔ | | | | | | |
| relativeV5 | ✔ | | | | | | |
| yn_questionV4 | | | | | | | |
| relativeV2 | ✔ | | | | | | |
| topicsV3 | ✔ | | | | | | |
| topicsV1 | ✔ | | | | | | |
| doubt_questionV4 | ✔ | | | | | | |
| relativeV1 | ✔ | | | | | | |
| affirmativeV5 | ✔ | | | | | | |
| yn_questionV2 | | | | | | | |
| affirmativeV1 | | | | | | | |
| wh_questionV4 | ✔ | | | | | | |
| conditionalV4 | ✔ | | | | | | |
| affirmativeV2 | | | | | | | |
| relativeV4 | ✔ | | | | | | |
| conditionalV2 | ✔ | | | | | | |
| affirmativeV4 | | | | | | | |

Class Distribution (five rightmost columns)

| Dataset | Dimensions (Rows, Columns) | Aggregation | Multiview Stacking | Voting | Multiview Stacking NotShuffle | Adaboost |
|---|---|---|---|---|---|---|
| Statistical signature | (116, 16) | 47 | 37 | 17 | 14 | 1 |

Class Distribution (five rightmost columns)

| Dataset | Dimensions (Rows, Columns) | Aggregation | Multiview Stacking | Voting | Multiview Stacking NotShuffle | Adaboost |
|---|---|---|---|---|---|---|
| Statistical signature | (235, 16) | 47 | 47 | 47 | 47 | 47 |
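The two class-distribution tables show the Statistical signature data set growing from 116 rows (47, 37, 17, 14, and 1 samples per class) to 235 rows with 47 samples in every class. The tables themselves do not state which balancing technique produced these counts. As an illustration only, the sketch below assumes plain random oversampling with replacement up to the majority-class size; the function name `oversample_to_majority` and the seed are hypothetical.

```python
import random
from collections import Counter

# Class counts copied from the first class-distribution table (116 rows in total).
ORIGINAL_COUNTS = {
    "Aggregation": 47,
    "MultiViewStacking": 37,
    "Voting": 17,
    "MultiViewStackingNotShuffle": 14,
    "Adaboost": 1,
}


def oversample_to_majority(labels, seed=0):
    """Return row indices that balance `labels` by random oversampling.

    ASSUMPTION: random duplication with replacement; the source tables
    only report the counts, not the technique that was actually used.
    """
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    target = max(len(indices) for indices in by_class.values())
    selected = []
    for indices in by_class.values():
        selected.extend(indices)  # keep every original row
        # Draw the missing rows from the same class, with replacement.
        selected.extend(rng.choices(indices, k=target - len(indices)))
    return selected


if __name__ == "__main__":
    labels = [name for name, n in ORIGINAL_COUNTS.items() for _ in range(n)]
    balanced = oversample_to_majority(labels)
    print(len(balanced), Counter(labels[i] for i in balanced))
```

Under this assumption the single Adaboost row is duplicated 46 times, which is worth keeping in mind when reading the per-class scores for that label.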

| Label | Adaboost | Aggregation | Multiview Stacking | Multiview Stacking NotShuffle | Voting |
|---|---|---|---|---|---|
| Adaboost | 47 | 0 | 0 | 0 | 0 |
| Aggregation | 0 | 41 | 4 | 2 | 0 |
| MultiViewStacking | 0 | 5 | 38 | 3 | 1 |
| MultiViewStackingNotShuffle | 0 | 1 | 0 | 43 | 3 |
| Voting | 0 | 0 | 1 | 0 | 46 |

| Label | Precision | Recall | f1-Score | Support |
|---|---|---|---|---|
| Adaboost | 1.00 | 1.00 | 1.00 | 47 |
| Aggregation | 0.87 | 0.87 | 0.87 | 47 |
| MultiViewStacking | 0.88 | 0.81 | 0.84 | 47 |
| MultiViewStackingNotShuffle | 0.90 | 0.91 | 0.91 | 47 |
| Voting | 0.92 | 0.98 | 0.95 | 47 |
| avg/total | 0.91 | 0.91 | 0.91 | 235 |
| accuracy | | | 0.91 | 235 |
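The per-class scores in the table above can be recomputed from the confusion matrix. The sketch below assumes that matrix rows are true labels and columns are predicted labels, an orientation that matches the reported precision and recall values. The helper names `per_class_metrics` and `accuracy` are illustrative and not taken from the paper.

```python
# Confusion matrix copied from the table above.
# ASSUMPTION: rows are true labels, columns are predicted labels.
LABELS = [
    "Adaboost",
    "Aggregation",
    "MultiViewStacking",
    "MultiViewStackingNotShuffle",
    "Voting",
]
CONFUSION = [
    [47, 0, 0, 0, 0],
    [0, 41, 4, 2, 0],
    [0, 5, 38, 3, 1],
    [0, 1, 0, 43, 3],
    [0, 0, 1, 0, 46],
]


def per_class_metrics(matrix):
    """Return (precision, recall, f1, support) for each class index."""
    n = len(matrix)
    results = []
    for k in range(n):
        true_pos = matrix[k][k]
        predicted_k = sum(matrix[i][k] for i in range(n))  # column sum
        support = sum(matrix[k])                           # row sum
        precision = true_pos / predicted_k if predicted_k else 0.0
        recall = true_pos / support if support else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1, support))
    return results


def accuracy(matrix):
    """Fraction of samples on the diagonal of the confusion matrix."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


if __name__ == "__main__":
    for label, (p, r, f1, s) in zip(LABELS, per_class_metrics(CONFUSION)):
        print(f"{label:<28} {p:.2f} {r:.2f} {f1:.2f} {s}")
    print(f"accuracy {accuracy(CONFUSION):.2f}")
```

For example, Voting is predicted 50 times (0 + 0 + 1 + 3 + 46) and 46 of those are correct, giving a precision of 46/50 = 0.92, while its recall is 46/47 ≈ 0.98.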

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Brena, R.F.; Aguileta, A.A.; Trejo, L.A.; Molino-Minero-Re, E.; Mayora, O. Choosing the Best Sensor Fusion Method: A Machine-Learning Approach. Sensors 2020, 20, 2350. https://doi.org/10.3390/s20082350
