Abstract

Because the fuzzy local information C-means (FLICM) segmentation algorithm cannot account for the different influence of individual features on clustering segmentation results, a local fuzzy clustering segmentation algorithm based on a feature selection Gaussian mixture model was proposed. First, a membership-degree constraint on the spatial distance was added to the local information function. Second, feature saliency was introduced into the objective function, and the optimal iterative expressions of the objective function were derived using the Lagrange multiplier method. Neighborhood weighting information was then added to the iterative expression of the classification membership degree to obtain a local fuzzy clustering algorithm based on feature selection. The improved FLICM algorithm, the fuzzy C-means with spatial constraints (FCM_S) algorithm, and the original FLICM algorithm were each used to cluster and segment images corrupted by Gaussian noise, salt-and-pepper noise, multiplicative noise, and mixed noise. The segmentation results were compared in terms of peak signal-to-noise ratio (PSNR) and error rate, and the iteration time and number of iterations needed for the objective function to converge were also compared. The results show that the improved algorithm significantly improved noise suppression under strong noise interference and improved computational efficiency, which benefits the segmentation of remote sensing images captured under strong noise and promotes the development of robust anti-noise fuzzy clustering algorithms.

1. Introduction

1.1. Image Segmentation Algorithms

Existing image segmentation methods fall mainly into three categories: edge-based methods, region-based methods, and methods based on specific theories. Cluster segmentation, a typical unsupervised segmentation method, has attracted the attention of many scholars and has been widely used and studied in many fields [1,2].

Clustering algorithms can be divided into hard partition clustering algorithms and soft partition clustering algorithms. When hard partition clustering algorithms are used for image segmentation, the image is divided directly according to the similarity of pixels in terms of properties such as gray level, color, and texture. The optimal partition is obtained by minimizing an objective function, as in the H-means algorithm [3,4], the global K-means algorithm, and the K-means algorithm [5,6]. These algorithms offer fast segmentation, a clear structure, and good usability [7,8], but they are prone to falling into local minima while optimizing the partition. Soft partition clustering algorithms instead partition pixels indirectly through membership degrees or probabilities, searching for an optimal decomposition by minimizing the objective function or maximizing the likelihood of the model parameters [9,10]. For example, Dunn [4] proposed the fuzzy C-means clustering algorithm in 1973. In 1981, Bezdek [5] compared the measurement theory of hard and fuzzy mean clustering, proved the convergence of the fuzzy C-means algorithm, and established fuzzy clustering theory, making fuzzy C-means clustering an important branch of fuzzy theory. Introducing fuzzy theory into clustering improves the adaptability of the algorithm, and such methods have been widely used [11,12].
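To make the hard/soft distinction concrete, the sketch below runs standard fuzzy C-means (fuzzifier m) on one-dimensional gray values; taking the argmax of each membership row recovers a hard, K-means-style partition. It is a minimal NumPy illustration under stated assumptions, not the implementation used in this paper; the function name `fcm` and its defaults are hypothetical.

```python
import numpy as np


def fcm(x, c, m=2.0, eps=1e-5, max_iter=300, seed=0):
    """Standard fuzzy C-means on 1-D gray values (illustrative sketch).

    Returns (u, v): u has shape (N, c) with each row summing to 1 (soft
    partition); v has shape (c,) and holds the cluster centers.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float).ravel()
    u = rng.random((x.size, c))
    u /= u.sum(axis=1, keepdims=True)                 # enforce sum_j u_ij = 1
    v = np.zeros(c)
    for _ in range(max_iter):
        um = u ** m
        v = um.T @ x / um.sum(axis=0)                 # v_j = sum_i u_ij^m x_i / sum_i u_ij^m
        d2 = (x[:, None] - v[None, :]) ** 2 + 1e-12   # squared distances (avoid division by 0)
        inv = d2 ** (-1.0 / (m - 1.0))
        u_new = inv / inv.sum(axis=1, keepdims=True)  # u_ij = 1 / sum_k (d_ij/d_ik)^(1/(m-1))
        converged = np.max(np.abs(u_new - u)) < eps
        u = u_new
        if converged:
            break
    return u, v


pixels = np.array([0, 1, 2, 100, 101, 102], dtype=float)
u, v = fcm(pixels, c=2)
hard_labels = u.argmax(axis=1)   # hard (K-means-style) partition from soft memberships
```

The soft memberships keep information about how ambiguous each pixel is, which is exactly what the spatial and feature-selection extensions discussed later build on.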

1.2. Fuzzy Clustering Algorithm Based on Feature Selection

At present, research on cluster analysis focuses on the scalability of clustering methods, the validity of clustering for complex shapes and data types, high-dimensional clustering techniques, and clustering methods for mixed data. Among these, high-dimensional data clustering is a particularly difficult problem that traditional clustering algorithms handle poorly. For example, high-dimensional sample spaces contain many features that are irrelevant to clustering, and the FCM algorithm uses the Euclidean distance as its distance measure [13], which cannot take into account the correlations among features in a high-dimensional space. High-dimensional data are currently handled mainly through feature transformation and feature selection. Feature selection effectively reduces dimensionality and has been widely applied. For example, a subspace-based clustering image segmentation method has been proposed that screens features that are effective for clustering by defining search strategies and evaluation criteria, and then clusters the original data set in different subspaces to reduce storage and computation costs [14].

Existing supervised feature selection methods achieve dimensionality reduction but lower operational efficiency. To achieve clustering segmentation with adaptive feature selection, a similarity measure for high-dimensional data has been proposed that accounts for the correlations between features in high-dimensional space and effectively reduces the impact of the “curse of dimensionality”. However, there is little theoretical guidance on how to choose the similarity measurement criteria. To avoid combinatorial search and to make feature selection applicable to unsupervised learning, the concept of feature saliency has been proposed, in which the Gaussian mixture model is used for cluster analysis while accounting for the influence of different features on the clustering results, thereby improving the performance of the algorithm [15].

The fuzzy Gaussian mixture model (FGMM) algorithm replaces the Euclidean distance of the FCM algorithm with a Gaussian mixture model, which fits multimodal data more accurately and segments noise-free complex images better. Traditional fuzzy C-means cluster analysis treats all features of the samples equally and ignores the important influence of key features on the clustering results, which leads to discrepancies between the clustering segmentation results and the true classification. Following feature selection theory, the concept of feature saliency assumes that the saliency of each sample feature obeys a probability distribution, and the cluster analysis is then carried out using the Gaussian mixture model. Ju and Liu [16] proposed an online feature selection method based on fuzzy clustering (OFSBFCM) together with its application, and a fuzzy C-means clustering method that combines a Gaussian mixture model with feature selection using Kullback–Leibler (KL) divergence (FSFCM) has also been proposed [16,17].
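A minimal sketch of the feature-saliency idea, assuming the widely used formulation in which each feature l of a sample is drawn either from a cluster-specific Gaussian (with probability equal to its saliency ρ_l) or from a single cluster-independent density. The function names and the choice of one common Gaussian for irrelevant features are assumptions of this sketch, not this paper's model.

```python
import numpy as np


def gauss(x, mu, var):
    """Univariate Gaussian density, applied elementwise."""
    return np.exp(-0.5 * (x - mu) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def saliency_posterior(X, alpha, mu, var, rho, mu0, var0):
    """Cluster posteriors under a feature-saliency Gaussian mixture (sketch).

    X: (N, D) samples; alpha: (C,) mixing weights; mu, var: (C, D) per-cluster
    feature means and variances; rho: (D,) saliencies in [0, 1];
    mu0, var0: (D,) cluster-independent density used for irrelevant features.
    """
    rel = gauss(X[:, None, :], mu[None, :, :], var[None, :, :])           # (N, C, D)
    irr = gauss(X[:, None, :], mu0[None, None, :], var0[None, None, :])   # (N, 1, D)
    per_feature = rho * rel + (1.0 - rho) * irr       # relevant vs. irrelevant mixture per feature
    lik = alpha[None, :] * per_feature.prod(axis=2)   # (N, C), features assumed independent
    return lik / lik.sum(axis=1, keepdims=True)


X = np.array([[0.0, 5.0], [0.2, -3.0], [4.0, 0.5]])
mu = np.array([[0.0, 0.0], [4.0, 0.0]])
var = np.ones((2, 2))
alpha = np.array([0.5, 0.5])
rho = np.array([1.0, 0.0])               # feature 0 salient, feature 1 irrelevant
mu0, var0 = X.mean(axis=0), X.var(axis=0) + 1.0
post = saliency_posterior(X, alpha, mu, var, rho, mu0, var0)
```

A feature with ρ_l = 0 contributes the same factor to every cluster, so it cancels in the normalization and has no effect on the posterior; this is how saliency lets key features dominate without an explicit combinatorial search over feature subsets.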

In short, the advantages of the GMM-based fuzzy clustering algorithm with feature selection are as follows:

- (1) By using the Gaussian mixture model as the distance measure, which fits multimodal data accurately, the algorithm can handle data sets with more complex structures than the FCM algorithm can.

- (2) The Gaussian mixture model with feature selection assumes that different features of the samples play different roles in pattern analysis and that some features play a decisive role. This overcomes a limitation of the FCM algorithm, which treats all features equally in cluster analysis and ignores the important influence of key features on the clustering results, leading to a gap between the clustering results and the true classification.

- (3) KL divergence regularized clustering can be widely applied to the cluster analysis of class-imbalanced data.
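One standard form of KL divergence regularized fuzzy clustering adds λ Σ_i Σ_j u_ij ln(u_ij / π_j) to Σ_i Σ_j u_ij d_ij, penalizing how far each pixel's memberships drift from the class priors π_j. Solving with a Lagrange multiplier for the constraint Σ_j u_ij = 1 gives a softmax closed form. The sketch below assumes the dissimilarities d_ij are already computed and is not claimed to be the exact regularizer of FSFCM [16,17]; the function names are hypothetical.

```python
import numpy as np


def kl_memberships(d, pi, lam):
    """Minimize sum u*d + lam * sum u*log(u/pi) subject to rows summing to 1.

    d: (N, C) dissimilarities; pi: (C,) class priors; lam > 0.
    Closed form: u_ij = pi_j exp(-d_ij/lam) / sum_k pi_k exp(-d_ik/lam).
    """
    logits = np.log(pi)[None, :] - d / lam
    logits -= logits.max(axis=1, keepdims=True)   # softmax shift for numerical stability
    u = np.exp(logits)
    return u / u.sum(axis=1, keepdims=True)


def update_priors(u):
    """pi_j = mean membership of class j, so small classes keep small priors."""
    return u.mean(axis=0)
```

Because the priors are re-estimated from the memberships, a minority class is not forced toward an equal share of pixels, which is one way to read the claim about class-imbalanced data. As λ shrinks, the memberships approach a hard assignment.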

The drawbacks of the GMM-based fuzzy clustering algorithm with feature selection are as follows:

- (1) Several parameters must be tuned, which increases the running time of the algorithm.

- (2) Like the FCM algorithm, it clusters each pixel independently without considering the influence of spatial neighborhood pixels on the central pixel, so it is not robust against different types of image noise.

2. Algorithm Analysis

2.1. FLICM Algorithm

The FCM algorithm constructs its objective function from fuzzy membership degrees and a dissimilarity measure, and it classifies samples by iteratively finding the membership degrees and cluster centers that minimize this objective function. Its structure is simple, it is easy to implement, and it converges quickly. However, it does not consider the influence of neighborhood information on the central pixel, so its segmentation of noisy images is unsatisfactory. To improve the noise robustness of the algorithm, Chen et al. [17] proposed the neighborhood-mean and neighborhood-median fuzzy C-means algorithms FCM_S1 and FCM_S2. Later, the Greek scholars Krinidis et al. [18,19] proposed the fuzzy local information C-means segmentation algorithm (FLICM), which combines neighborhood pixel spatial information, gray-level information, and fuzzy classification information to improve the anti-noise performance of the algorithm. Its objective function is as follows [20,21]:

Here, each sample corresponds to a pixel, and its components represent the different attributes of that sample. C is the number of clusters, the fuzzy membership denotes the degree to which a pixel belongs to the jth category, and each category is represented by its clustering center. The spatial Euclidean distance is measured between the position of a pixel and that of each neighboring pixel, and the neighborhood is the set of spatial neighbors of that pixel within a square window. The optimal iteration expressions of the classification membership degree and the clustering center are as follows [22,23]:
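For reference, the standard FLICM updates published by Krinidis and Chatzis can be sketched in NumPy. The fuzzy factor G_ki sums, over the neighbors j of pixel i (excluding i itself), the term (1 − u_kj)^m ||x_j − v_k||² / (d_ij + 1); the membership update replaces the FCM distance with ||x_i − v_k||² + G_ki; and the center update is the FCM one. The function name, the 3 × 3 window, and the zero contribution of neighbors outside the image are assumptions of this sketch, and it does not include the modifications proposed in this paper.

```python
import numpy as np


def flicm_step(img, u, v, m=2.0, win=1):
    """One iteration of standard FLICM on a gray image (sketch).

    img: (H, W) floats; u: (C, H, W) memberships; v: (C,) centers.
    win=1 gives a 3x3 window. Neighbors outside the image contribute nothing.
    """
    C, H, W = u.shape
    d2 = (img[None, :, :] - v[:, None, None]) ** 2          # ||x_j - v_k||^2 for every pixel
    term = (1.0 - u) ** m * d2                               # per-neighbor term before weighting
    padded = np.pad(term, ((0, 0), (win, win), (win, win)))  # zero padding outside the image
    G = np.zeros_like(u)
    for dy in range(-win, win + 1):
        for dx in range(-win, win + 1):
            if dy == 0 and dx == 0:
                continue                                     # G excludes the center pixel
            w = 1.0 / (np.hypot(dy, dx) + 1.0)               # 1 / (d_ij + 1), spatial distance
            G += w * padded[:, win + dy:win + dy + H, win + dx:win + dx + W]
    D = d2 + G + 1e-12
    inv = D ** (-1.0 / (m - 1.0))
    u_new = inv / inv.sum(axis=0, keepdims=True)             # u_ki = 1 / sum_j (D_ki/D_ji)^(1/(m-1))
    um = u_new ** m
    v_new = (um * img[None]).sum(axis=(1, 2)) / um.sum(axis=(1, 2))  # FCM-style center update
    return u_new, v_new


img = np.zeros((8, 8))
img[:, 4:] = 100.0
rng = np.random.default_rng(0)
u = rng.random((2, 8, 8))
u /= u.sum(axis=0, keepdims=True)
v = np.array([10.0, 90.0])
for _ in range(20):
    u, v = flicm_step(img, u, v)
labels = u.argmax(axis=0)
```

Note that G is recomputed from the previous memberships and then held fixed inside the membership update, which is one concrete way to see why these updates are not an exact Lagrangian minimizer of the FLICM objective.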

2.2. Local Neighborhood Robust Fuzzy Clustering Image Segmentation Algorithm Based on an Adaptive Feature Selection Gaussian Mixture Model

2.2.1. Improved FLICM Algorithm

The FLICM algorithm does not strictly follow the Lagrange multiplier method when solving for the optimal expression of its objective function. Furthermore, it runs for a long time and tends to fall into local minima. To solve these problems, the unconstrained form of the objective function is constructed using the Lagrange multiplier method as follows [24,25]:

Taking the partial derivatives of the Lagrangian with respect to the membership degree and the clustering center and setting them to zero gives:

By solving Equations (6) and (7), the following solution is obtained:

Compared with the iteration expressions in the literature, the iteration formula for the clustering centers derived here depends not only on the central pixel values but also on the neighborhood pixels and their classification membership degrees. To capture the influence of the neighborhood pixels on the central pixel more accurately, this section uses the neighborhood classification membership to constrain the spatial Euclidean distance between the central pixel and each neighboring pixel, and redefines the fuzzy factor as [26,27]:
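The Lagrange step used in this section follows a generic pattern. The derivation below is a sketch for an objective of the form Σ_i Σ_j u_ij^m D_ij with D_ij treated as fixed, followed by the exact cluster-center condition when the standard FLICM fuzzy factor G_ij is kept inside the objective. The symbols D_ij, G_ij, w_ik, and λ_i are assumptions of this sketch, and the expressions are not the paper's own equations.

```latex
\begin{aligned}
&\mathcal{L}_i = \sum_{j=1}^{C} u_{ij}^{m} D_{ij} - \lambda_i\Big(\sum_{j=1}^{C} u_{ij} - 1\Big),
\qquad
\frac{\partial \mathcal{L}_i}{\partial u_{ij}} = m\,u_{ij}^{m-1} D_{ij} - \lambda_i = 0
\;\Rightarrow\;
u_{ij} = \Big(\frac{\lambda_i}{m\,D_{ij}}\Big)^{\frac{1}{m-1}},\\
&\sum_{j=1}^{C} u_{ij} = 1
\;\Rightarrow\;
u_{ij} = \Bigg[\sum_{k=1}^{C}\Big(\frac{D_{ij}}{D_{ik}}\Big)^{\frac{1}{m-1}}\Bigg]^{-1},\\[4pt]
&J = \sum_{i=1}^{N}\sum_{j=1}^{C}\Big[u_{ij}^{m}\lVert x_i - v_j\rVert^{2} + G_{ij}\Big],
\qquad
G_{ij} = \sum_{k \in N_i,\,k \neq i} w_{ik}\,(1 - u_{kj})^{m}\,\lVert x_k - v_j\rVert^{2},
\qquad
w_{ik} = \frac{1}{d_{ik} + 1},\\
&\frac{\partial J}{\partial v_j} = 0
\;\Rightarrow\;
v_j = \frac{\sum_{i} u_{ij}^{m} x_i + \sum_{i}\sum_{k \in N_i,\,k \neq i} w_{ik}(1 - u_{kj})^{m} x_k}
{\sum_{i} u_{ij}^{m} + \sum_{i}\sum_{k \in N_i,\,k \neq i} w_{ik}(1 - u_{kj})^{m}}.
\end{aligned}
```

With D_ij = ||x_i − v_j||² + G_ij, the first result reproduces the familiar FLICM membership form. Unlike the plain FCM center, the exact stationarity condition for v_j contains neighbor terms weighted by their memberships, consistent with the remark above that the center update should involve the neighborhood pixels.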

2.2.2. Local Neighborhood Robust Fuzzy Clustering Algorithm Based on an Adaptive Feature Selection Gaussian Mixture Model

The FLICM algorithm introduces neighborhood spatial information into its objective function to enhance its anti-noise performance. However, it treats all features of the samples equally in cluster analysis and ignores the important impact of key features on the clustering results, which leads to unsatisfactory segmentation. In this section, the idea of feature selection is introduced into the improved FLICM algorithm, with KL divergence as a regularization term that imposes the feature selection constraints, giving the following new objective function [28,29]:

Here, the distance term is the weighted Euclidean distance between a sample and the center of class j, and the spatial term is the Euclidean distance between the positions of two pixel points. The remaining quantities are the degree of influence of each feature attribute on the jth class for a given sample, the feature value corresponding to the mean of all samples, the weight factor of each feature attribute of the sample, and the fuzzy factor.

In the literature, the membership degree is derived strictly with the Lagrange multiplier method from the unconstrained form of the objective function, but the clustering center is computed directly from the traditional fuzzy C-means cluster-center expression rather than being derived with the Lagrange method, so Equation (4) is inconsistent with the clustering objective function. In this section, the clustering objective function is optimized strictly with the Lagrange multiplier method, and the iterative optimization expressions are derived as follows [30,31]:

Taking the partial derivative of the objective function with respect to :

Setting the partial derivative to zero gives:

The unconstrained form of the objective function obtained with the Lagrange multiplier method is . Taking its partial derivative with respect to gives:

Substituting the local fuzzy factor into this expression and setting it equal to zero gives:

The membership degrees satisfy the constraint .

The iterative expression for the membership degree is obtained by substituting Equation (15) into Equation (17):

Therefore:

Taking the partial derivative of the objective function with respect to gives:

where .

Taking the partial derivative of the objective function with respect to gives:

Setting the partial derivative to zero gives the expression for :

Taking the partial derivative of the objective function with respect to and setting it to zero gives the following iterative expression:

Using the Lagrange multiplier method, the partial derivative of the objective function with respect to is set to 0:

The iterative expression of is obtained from the above formula:

2.2.3. Postprocessing Method of the Clustering Membership Degree

To further enhance robustness against noise, neighborhood weighting information is added to the iterative expression of the membership degree. Drawing on the idea of the non-Markov random field (MRF) spatially constrained Gaussian model in the literature, this section constructs a neighborhood weighting function from the classification membership degree and uses it to post-process the clustering membership degree. The function sorts the classification membership degrees of the neighborhood pixels in ascending order and takes the median as the corresponding probability, which is expressed as follows [32,33]:

Here, the classification membership degrees of the neighborhood pixels are collected over the neighborhood window, and the resulting set contains these membership degrees. Following Bayes' theorem, the weight factor of the neighborhood information function is added to Equation (18), and the new expression for the membership degree is given in Equation (27):

In this equation, is the weight factor, whose selection range is , and a value of 2.0 is usually chosen. Its role is similar to that of the fuzzy weighting exponent m in the traditional fuzzy C-means objective function.
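The post-processing step can be sketched as follows. The median over the window follows the description above (sort the neighborhood memberships in ascending order and take the middle value). The way the median h_ij is combined with the membership, u'_ij ∝ u_ij · h_ij^α with `alpha` = 2.0, is a hypothetical form chosen because it satisfies the two properties listed next (memberships still sum to 1 and increase with the neighborhood probability); it is not the paper's Equation (27), and `median_prior` and `weighted_membership` are hypothetical names.

```python
import numpy as np


def median_prior(u, win=1):
    """h[j, y, x]: median of u[j] over the (2*win+1)^2 window centred at (y, x).

    Border windows use edge-replicated padding (an assumption of this sketch).
    """
    C, H, W = u.shape
    p = np.pad(u, ((0, 0), (win, win), (win, win)), mode="edge")
    shifted = [p[:, win + dy:win + dy + H, win + dx:win + dx + W]
               for dy in range(-win, win + 1) for dx in range(-win, win + 1)]
    return np.median(np.stack(shifted, axis=0), axis=0)   # ascending sort, middle value


def weighted_membership(u, alpha=2.0, win=1):
    """Hypothetical weighting: u'_j proportional to u_j * h_j**alpha, renormalized per pixel."""
    h = median_prior(u, win) + 1e-12
    w = u * h ** alpha
    return w / w.sum(axis=0, keepdims=True)


u0 = np.full((5, 5), 0.9)
u0[2, 2] = 0.2                        # one noisy pixel that leans toward class 1
u = np.stack([u0, 1.0 - u0])          # C = 2 classes
u_post = weighted_membership(u)
```

In this toy case the isolated pixel's membership in class 0 rises from 0.2 to about 0.95, because the median of its neighborhood strongly favors class 0; using the median rather than the mean keeps a single outlier in the window from dominating the weight.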

The improved membership degree of the sample classification in this section has the following properties [34,35]:

- (1) The neighborhood-weighted membership still satisfies the constraints .

- (2) The membership degree of the current pixel in class j is proportional to the probability that the neighborhood pixels belong to class j.

As this probability increases, the membership degree increases. Conversely, as the probability that the neighborhood pixels belong to class j tends to zero, the membership degree of the current pixel in class j decreases.

In addition, , such that:

The derivative is obtained, as follows:

This shows that the weighted neighborhood membership degree is determined by the neighborhood information.

Because the weighted membership is a monotonically increasing function of the neighborhood probability, using it to constrain the classification membership degree improves sample classification to some extent and enhances the robustness of the algorithm against noise. To achieve image segmentation, the local fuzzy clustering algorithm based on feature selection in this section solves the iterative optimization expressions. The detailed steps are as follows [36,37]:

Step 1: Transform the image pixel values into sample feature vectors , where , N is the total number of pixels, and C is the number of clusters.

The termination condition threshold is , the maximum iteration number is , the regularization parameter is , and the feature selection parameter is .

Step 2: Initialize the feature attribute weight coefficients and the prior probabilities of the sample classes.

Step 3: Obtain the class centers of the sample classification using FCM clustering, where . The class variance matrix is , the sample feature mean vector is , and the feature variance matrix is . Given the improved adaptive spatial neighborhood information, the initial values of the Gaussian mixture fuzzy clustering algorithm in this section are selected as follows: .

Step 4: Compute the adaptive spatial neighborhood information function using Equation (26).

Step 5: Use Equation (15) to calculate the feature weight function .

Step 6: Calculate the membership function using Equation (28).

Step 7: Update using Equations (20) to (26).

Step 8: If the number of iterations is or the convergence condition is satisfied, the iteration will stop; otherwise, the iteration returns to step 4.

Step 9: The image pixels are classified and segmented according to the principle of the maximum membership degree using the values obtained when the algorithm’s iterations have been completed.
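Steps 1, 8, and 9 are generic and can be sketched independently of the specific update equations. In the sketch below, `update` stands for one pass of Steps 4 to 7 (a hypothetical callable supplied by the caller), the membership array has one row per pixel, and using the largest membership change as the convergence test is an assumption of this sketch.

```python
import numpy as np


def image_to_samples(img):
    """Step 1 (gray image): one feature vector per pixel, shape (N, 1)."""
    return np.asarray(img, dtype=float).reshape(-1, 1)


def iterate(update, u0, eps=1e-5, max_iter=300):
    """Step 8: repeat u <- update(u) until max |change| < eps or max_iter is reached."""
    u = u0
    for it in range(1, max_iter + 1):
        u_new = update(u)
        if np.max(np.abs(u_new - u)) < eps:
            return u_new, it
        u = u_new
    return u, max_iter


def max_membership_labels(u, shape):
    """Step 9: label each pixel with the class of largest membership."""
    return np.argmax(u, axis=1).reshape(shape)


img = np.array([[10.0, 200.0], [12.0, 190.0]])
X = image_to_samples(img)                      # N = 4 samples
target = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]])


def toy_update(u):
    """Stand-in for Steps 4 to 7: moves memberships halfway toward a fixed target."""
    return 0.5 * (u + target)


u, n_iter = iterate(toy_update, np.full((4, 2), 0.5))
labels = max_membership_labels(u, img.shape)
```

Keeping the loop separate from the update makes it easy to swap in FCM, FLICM, or the feature-selection update while reusing the same stopping rule and defuzzification.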

3. Experimental Results and Analysis

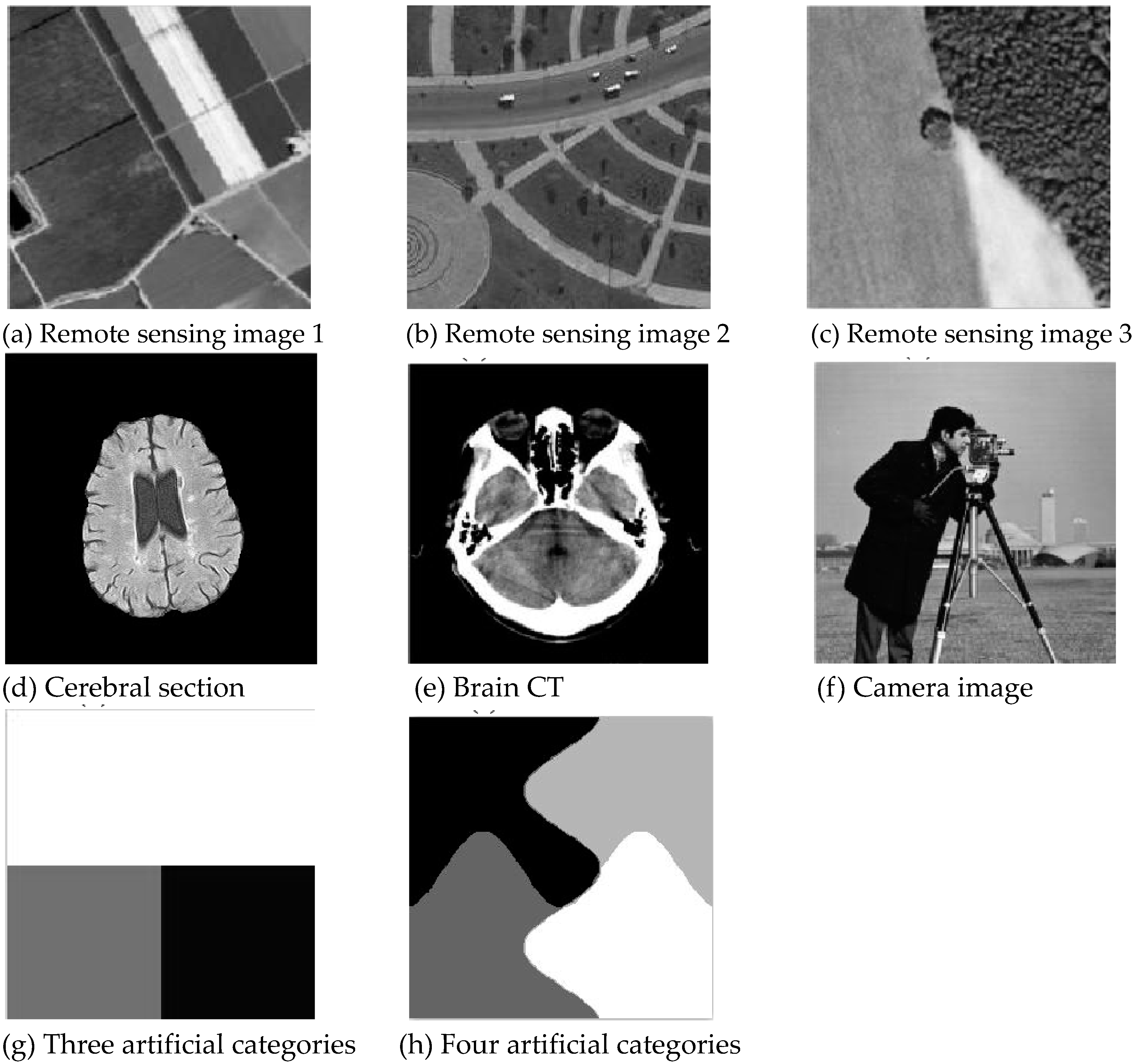

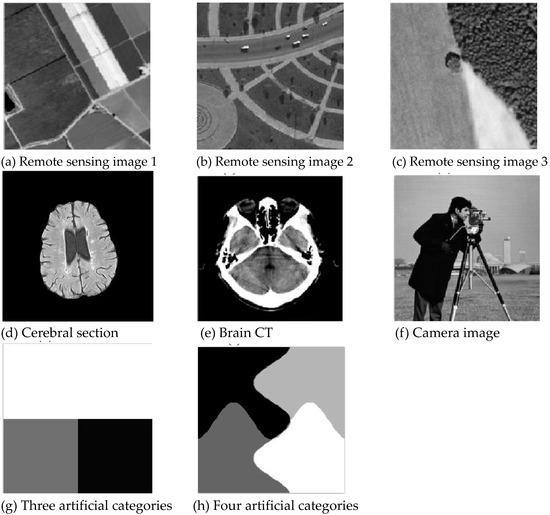

To verify the segmentation performance and anti-noise ability of the improved algorithm, high-resolution remote sensing images containing common ground objects (such as forest, farmland, bare land, and grassland), synthetic images, standard images, and high-resolution medical images were selected, as shown in Figure 1. The improved algorithm and the FCM_S, FLICM, kernel-weighted FLICM (KWFLICM), and local data and membership relative entropy-based FCM (LDMREFCM) algorithms were used to segment grayscale images corrupted by different types of noise [36,37]. The peak signal-to-noise ratio (PSNR) and the misclassification rate (MCR) were used to compare the segmentation and anti-noise performance of the algorithms [38,39]. The MCR is commonly used to quantitatively evaluate the performance of segmentation algorithms and is defined as:
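A minimal sketch of both metrics, assuming the usual definitions: MCR is the percentage of pixels whose label differs from the ground truth, and PSNR is 10 log10(peak² / MSE) with peak = 255. Because cluster indices are arbitrary, the sketch takes the best label permutation before counting errors; whether the experiments matched labels this way, and which pair of images the PSNR is computed between, are assumptions.

```python
import itertools

import numpy as np


def mcr(labels, ground_truth, n_classes):
    """Misclassification rate (%) under the best relabeling of cluster indices."""
    labels = np.asarray(labels)
    gt = np.asarray(ground_truth)
    errors = min(
        np.count_nonzero(np.array(perm)[labels] != gt)   # map cluster l -> perm[l]
        for perm in itertools.permutations(range(n_classes))
    )
    return 100.0 * errors / gt.size


def psnr(image, reference, peak=255.0):
    """Peak signal-to-noise ratio in dB."""
    mse = np.mean((np.asarray(image, float) - np.asarray(reference, float)) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)


seg = np.array([[0, 0], [1, 1]])
gt = np.array([[1, 1], [0, 1]])
print(mcr(seg, gt, n_classes=2))   # 25.0
```

Enumerating permutations is only practical for the small cluster counts used here (C ≤ 4 gives at most 24 candidates); larger C would call for a Hungarian-style assignment instead.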

Figure 1.

Original images.

The efficiency of the algorithms was compared using the running time until convergence and the number of iterations n. The evaluation platform was a Dell OptiPlex 360 (Intel Core 4, 8 GB of memory) running Windows 7 with the MATLAB 2013a (MathWorks, Natick, MA, USA) programming environment. The maximum number of iterations was set to 300. The number of clusters C was chosen from 2, 3, and 4 depending on the image. The regularization parameter and the feature selection parameter were set to and , respectively. The iteration threshold was , and the neighborhood window size was set to .

3.1. Image Segmentation Test with Gaussian Noise

3.1.1. Segmentation Performance Test

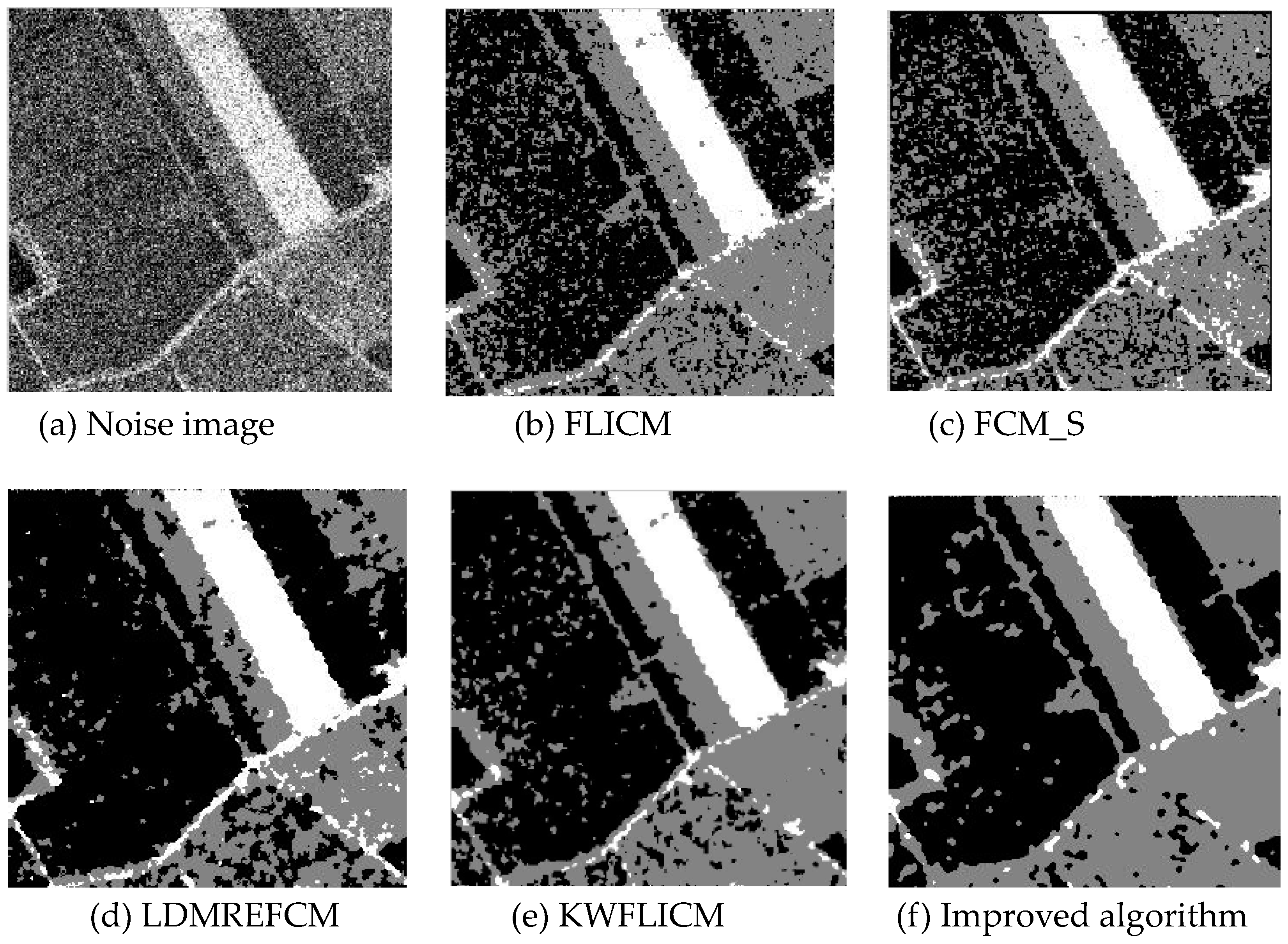

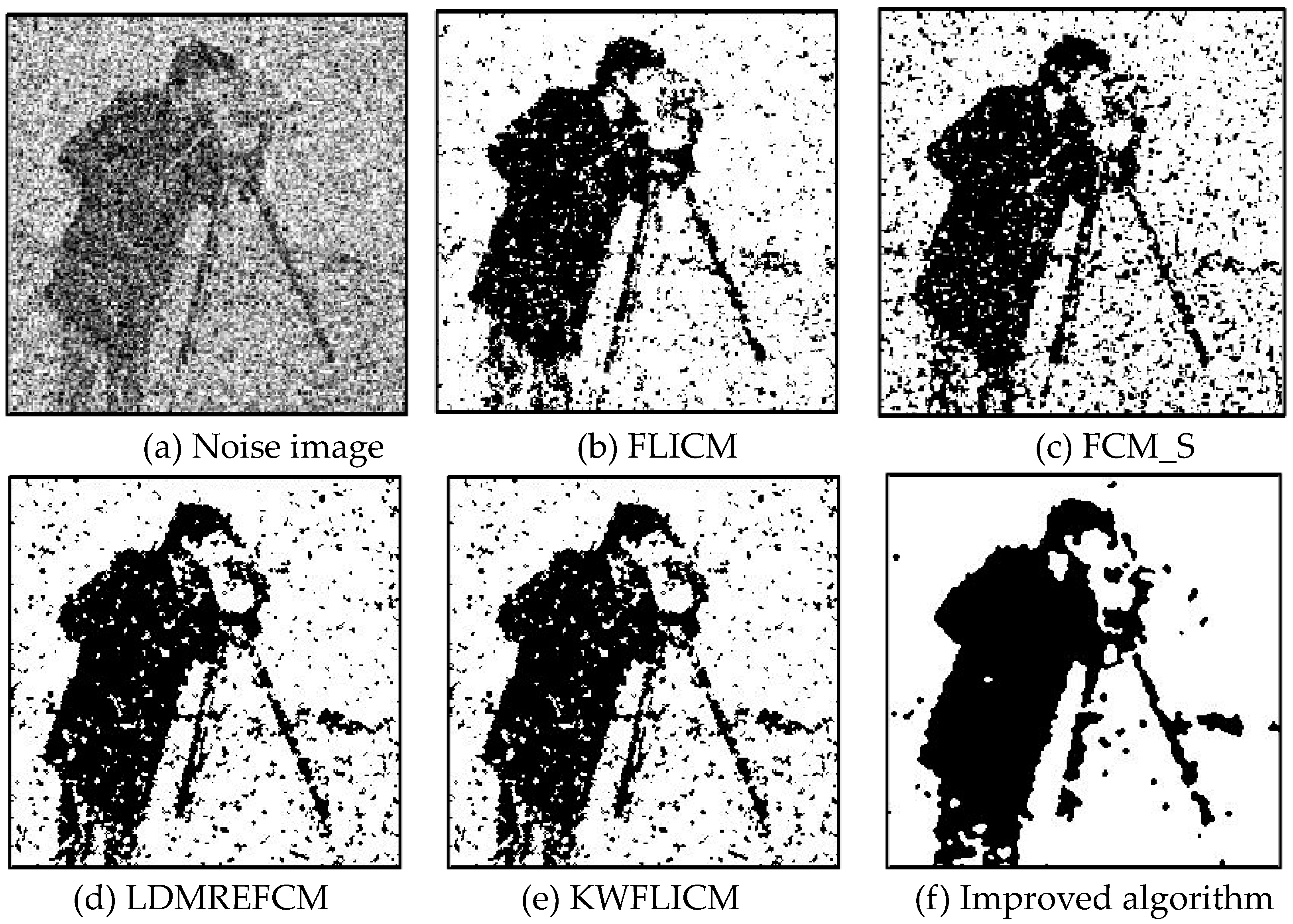

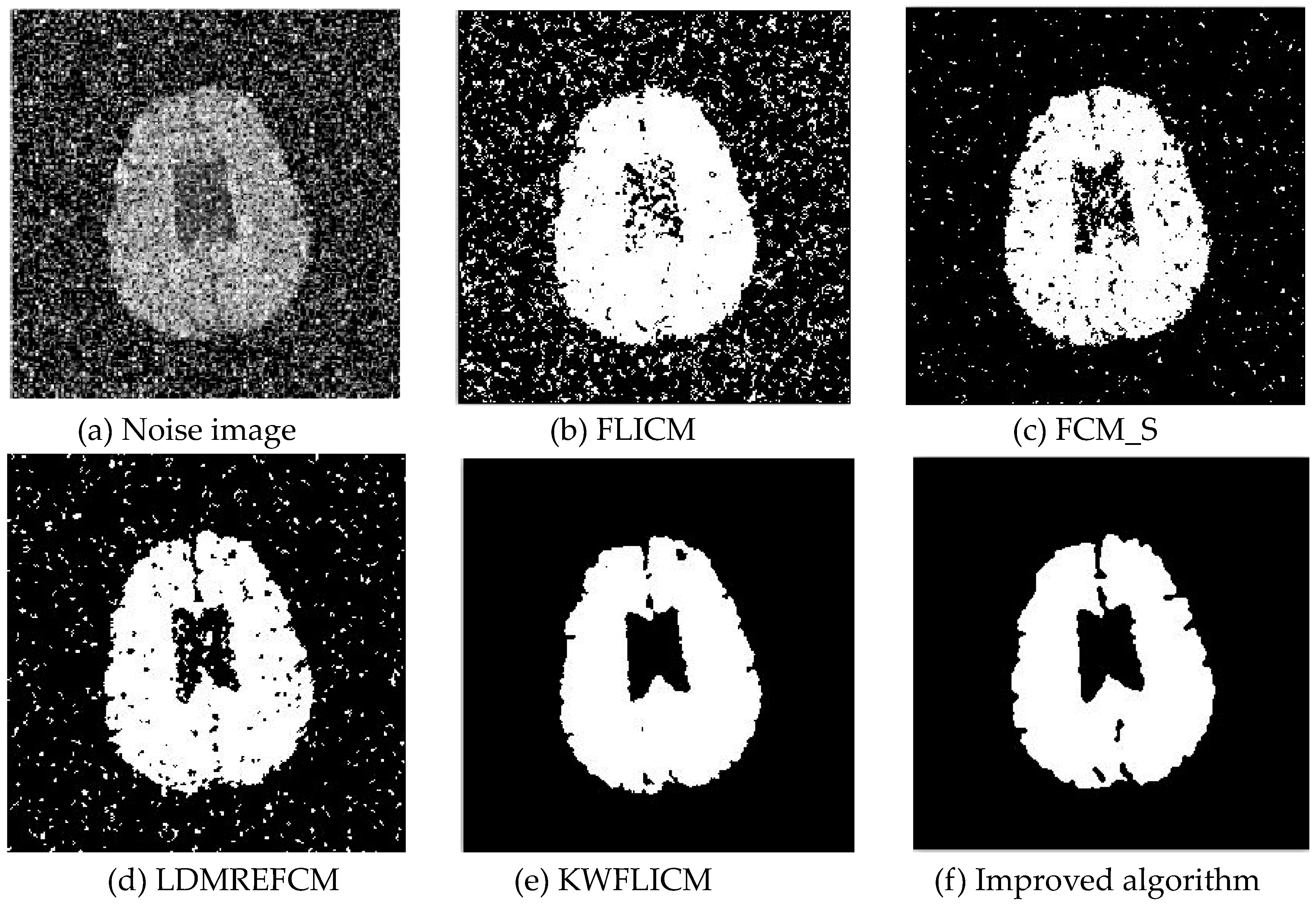

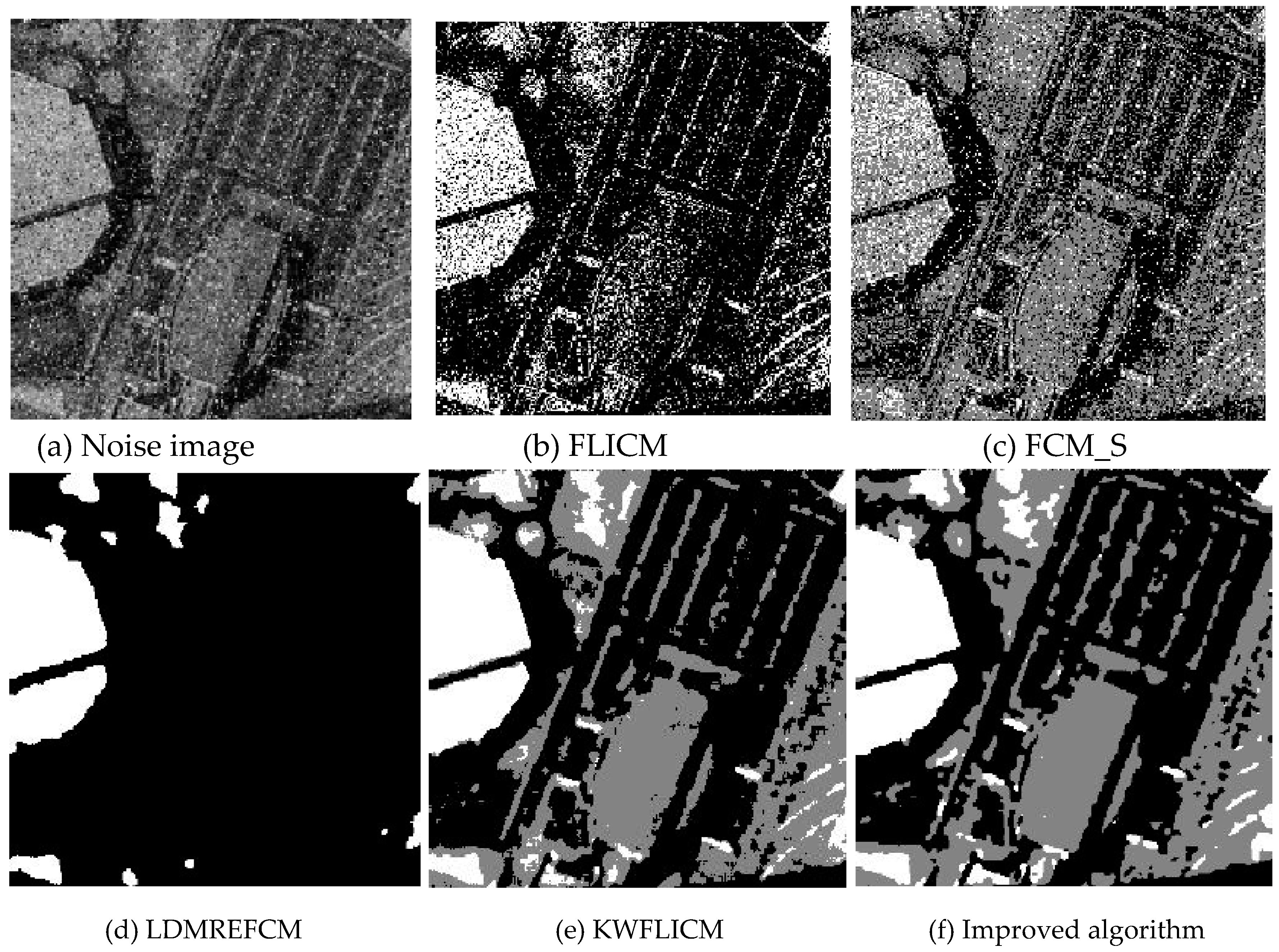

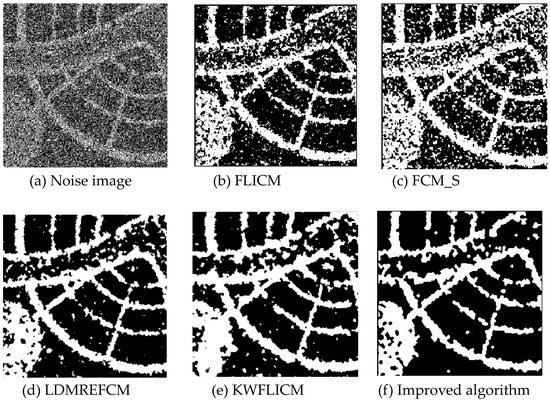

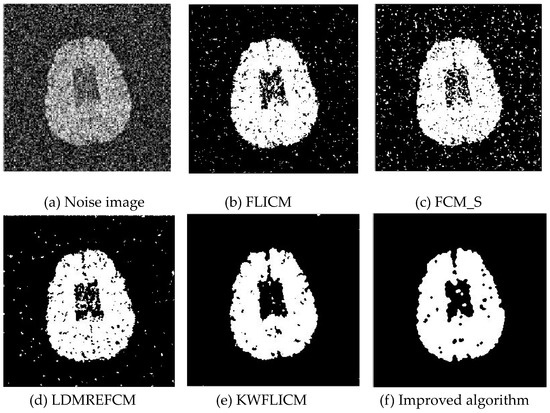

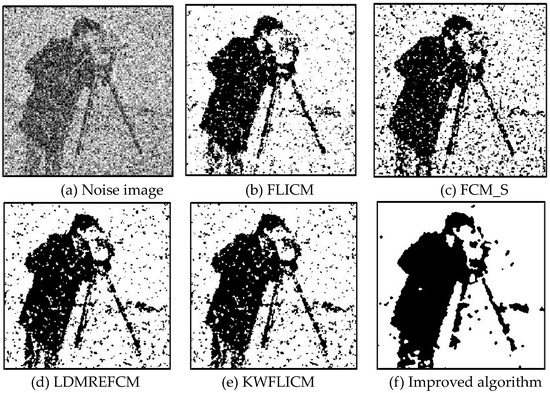

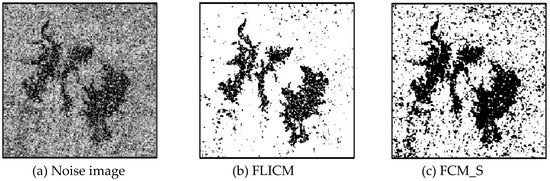

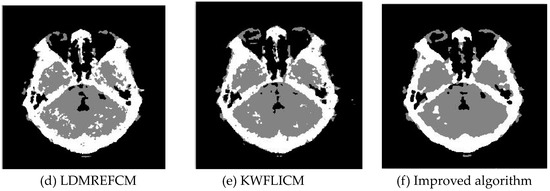

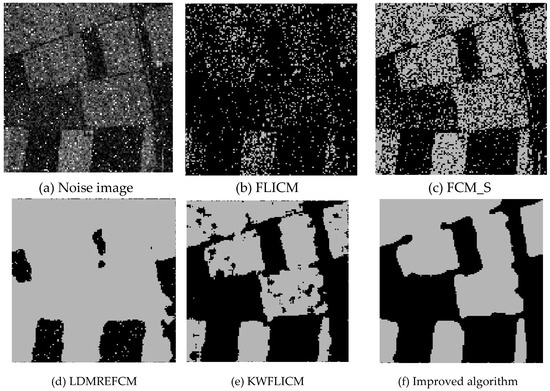

Gaussian noise with a mean value of 0 and mean variances of 57 and 80 was added to the two remote sensing images, and Gaussian noise with a mean value of 0 and mean variances of 140 and 161 was added to the image containing four artificial categories, the brain CT (computed tomography) image, and the camera image. The numbers of clusters were set to 3, 4, 2, and 2. The results of the FLICM, FCM_S, LDMREFCM, and KWFLICM algorithms were compared with those of the improved algorithm. The original images are shown in Figure 1, and the experimental results are shown in Figure 2, Figure 3, Figure 4 and Figure 5 (b–f). The PSNR and error rate of the segmentation results are shown in Table 1 and Table 2, respectively, and the iteration time and the number of iterations are shown in Table 3 [40,41].
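A sketch of how such test images can be generated, assuming 8-bit gray levels and reading the reported "mean variance" as the variance of zero-mean Gaussian noise; the exact noise generator used in the experiments is not specified, so both choices are assumptions. Results are clipped back to [0, 255].

```python
import numpy as np


def add_gaussian_noise(img, var, mean=0.0, rng=None):
    """Add N(mean, var) noise to an 8-bit gray image and clip to [0, 255]."""
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.normal(mean, np.sqrt(var), size=np.shape(img))   # scale is the std, sqrt(var)
    return np.clip(np.asarray(img, dtype=float) + noise, 0.0, 255.0)


clean = np.full((200, 200), 128.0)
noisy = add_gaussian_noise(clean, var=57, rng=np.random.default_rng(1))
```

Passing a seeded generator makes the noisy test images reproducible across algorithms, so every method is evaluated on exactly the same corrupted input.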

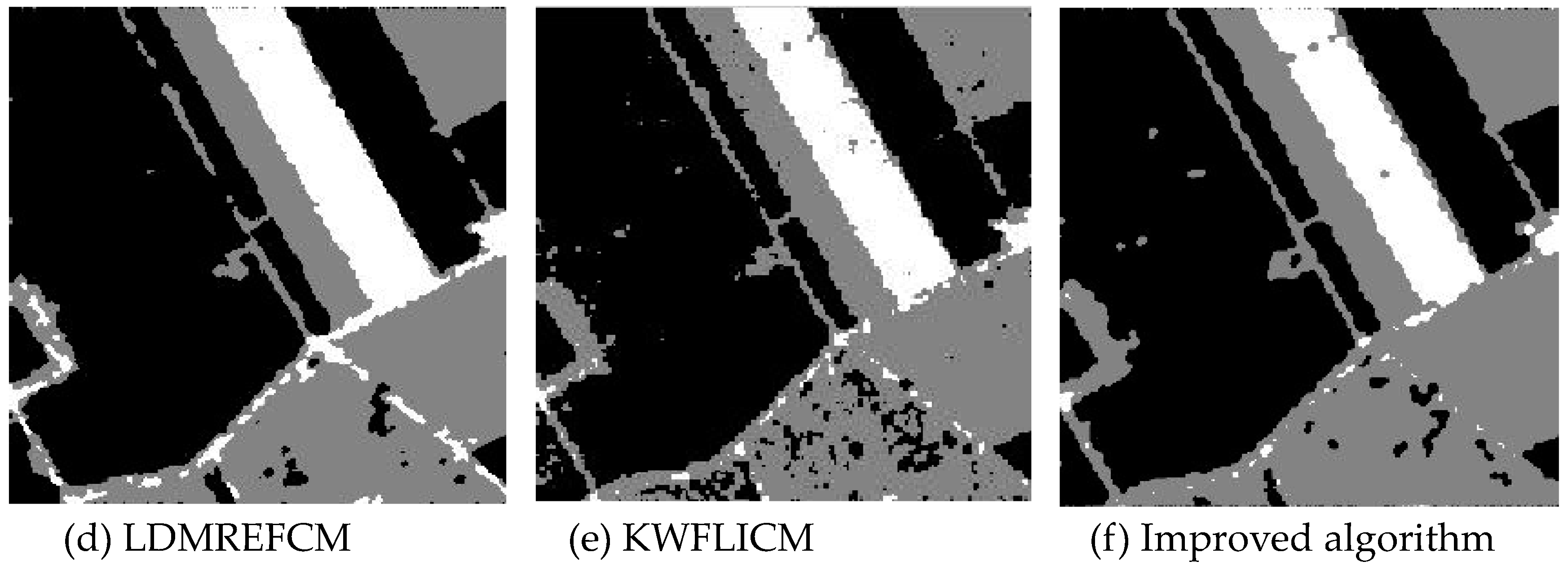

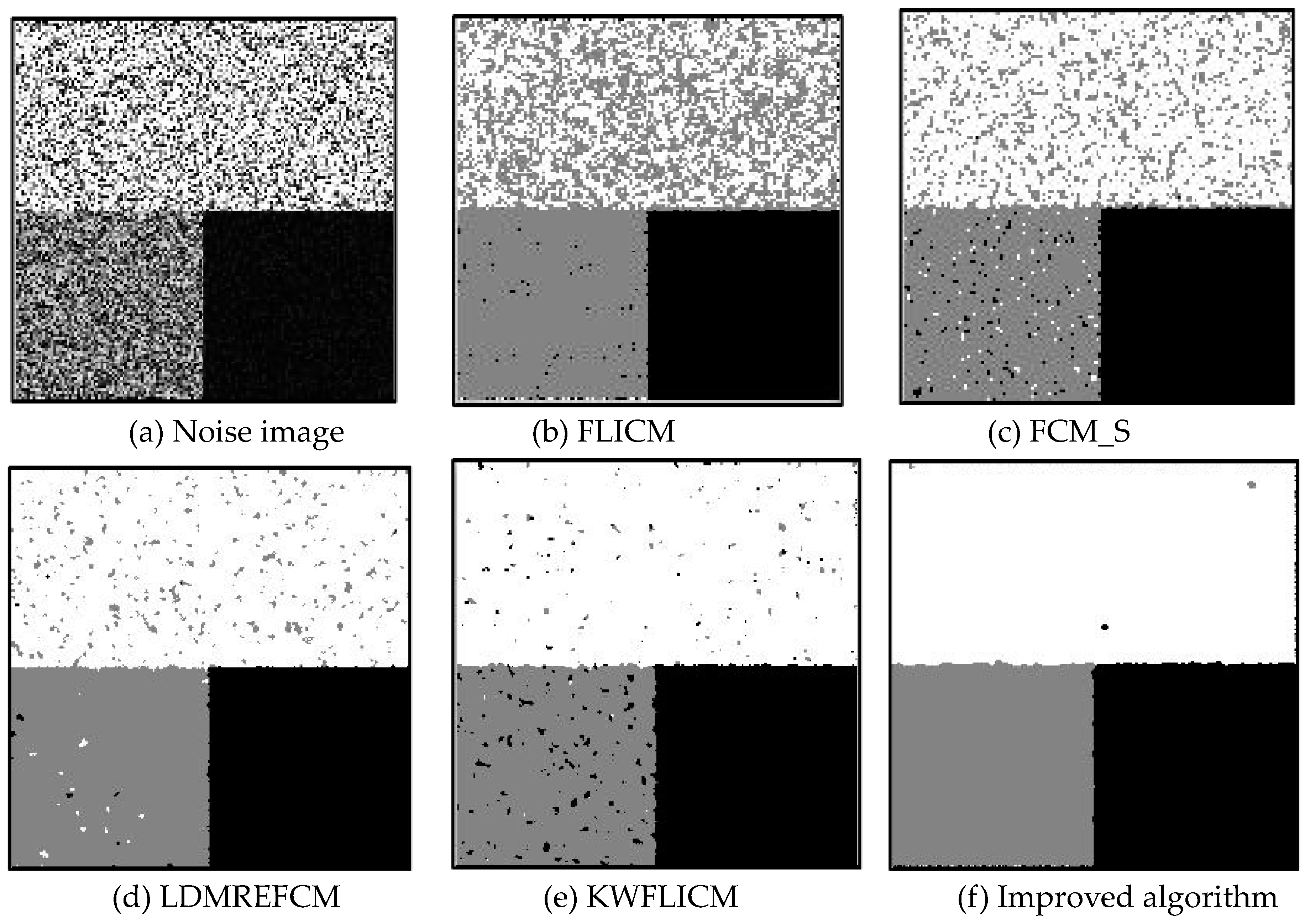

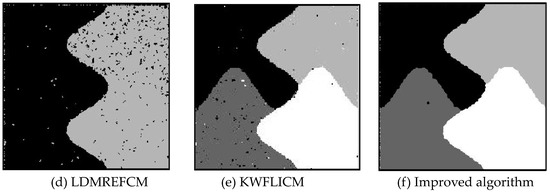

Figure 2.

Gaussian noise disturbing remote sensing image 1 (a) and the segmentation results (b–f). FLICM: Fuzzy local information C-means, FCM_S: Fuzzy C-means with spatial constraints, LDMREFCM: Local data and membership relative entropy-based FCM, KWFLICM: Kernel-weighted FLICM, and Improved algorithm.

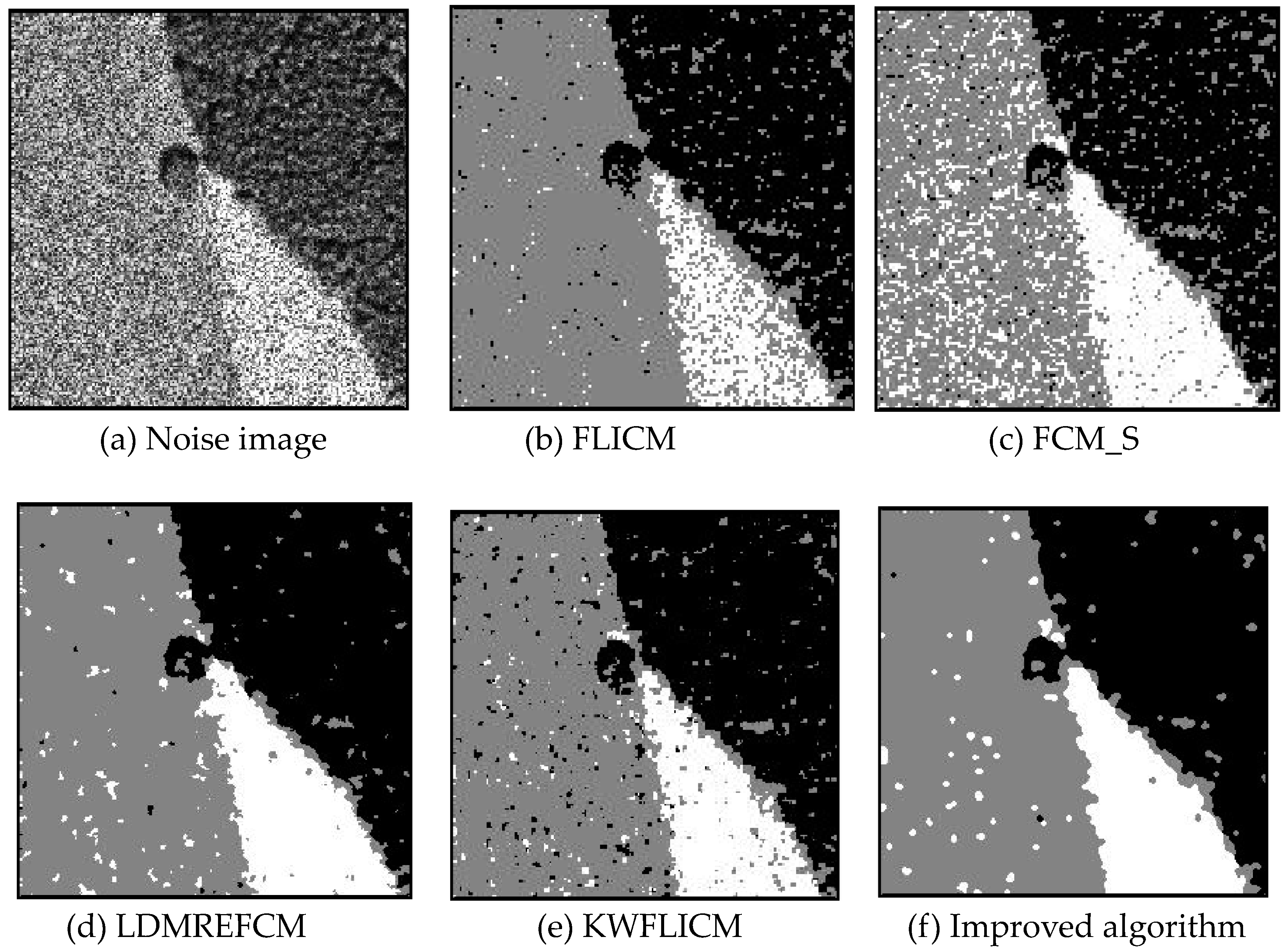

Figure 3.

Gaussian noise disturbing remote sensing image 2 (a) and the segmentation results (b–f).

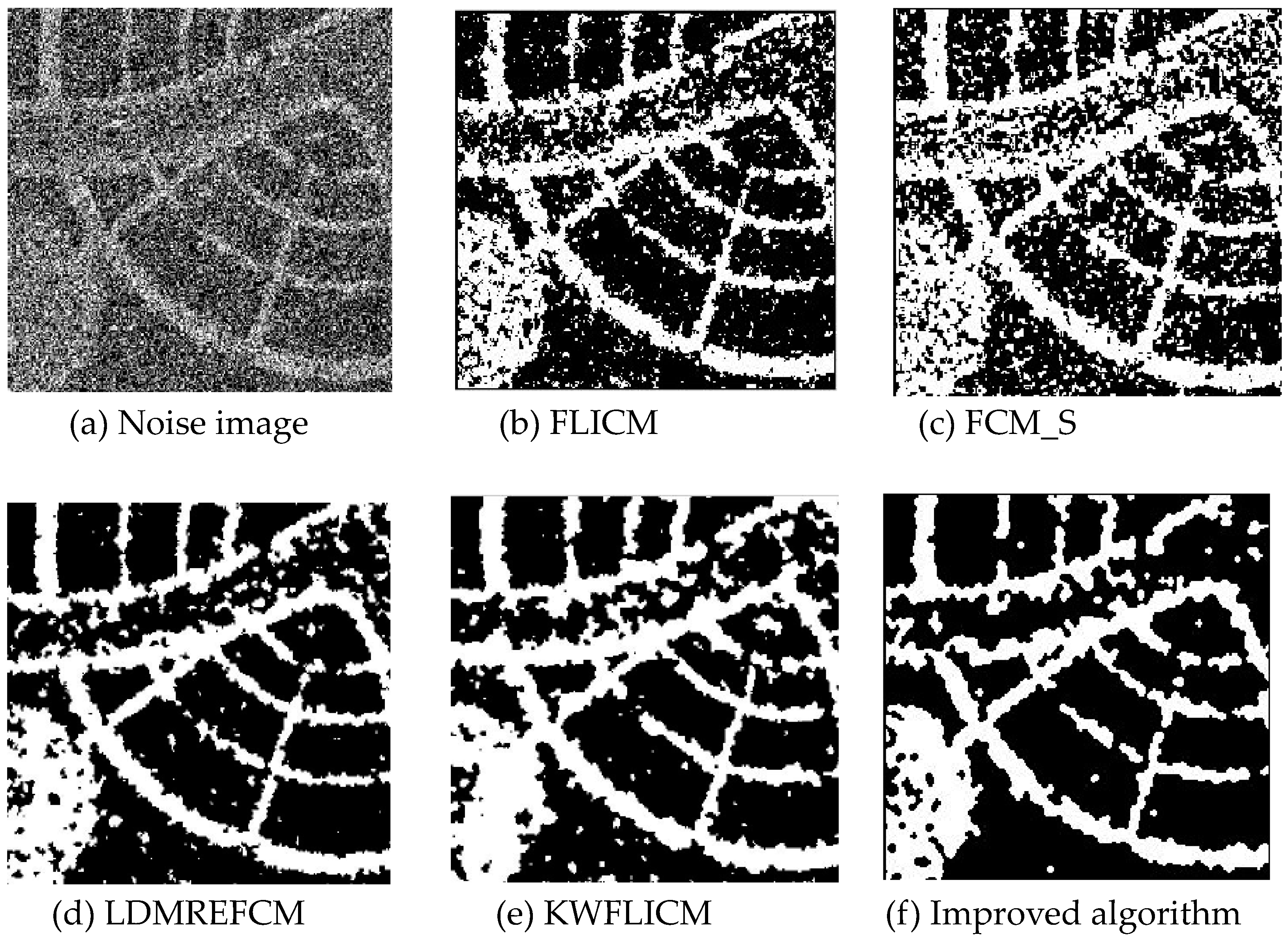

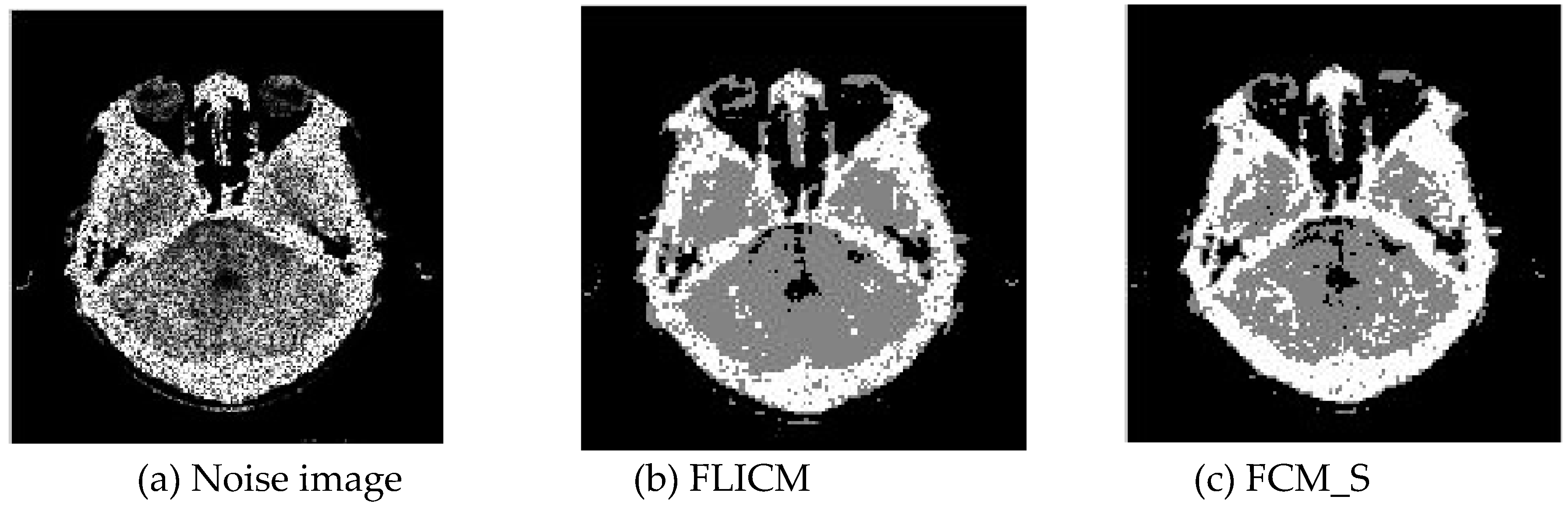

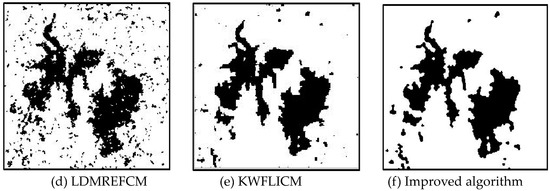

Figure 4.

Gaussian noise interfering with the brain slice image (a) and the segmentation results (b–f).

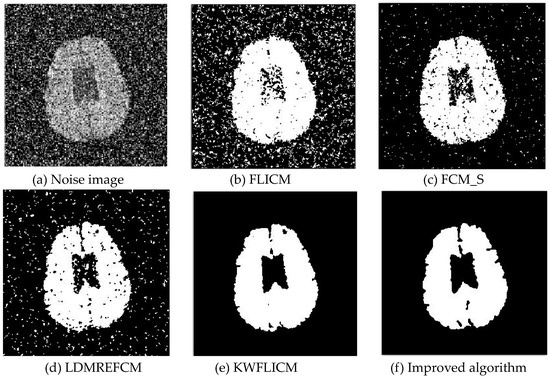

Figure 5.

Gaussian noise disturbing the camera image (a) and the segmentation results (b–f).

Table 1.

Comparison of the peak signal-to-noise ratio (PSNR) (dB) of each algorithm on images with Gaussian noise.

Table 2.

Comparison of the misclassification rate (MCR) (%) of each algorithm on images with Gaussian noise.

Table 3.

Comparison of the iteration times and number of iterations.

3.1.2. Test Result

Comparing the segmentation results of the five algorithms in Figure 2, Figure 3, Figure 4 and Figure 5 for four images with different degrees of Gaussian noise interference, we can see that the segmentation results of the FCM_S, FLICM, and LDMREFCM algorithms still contained many noise points, the results of the KWFLICM algorithm contained fewer, and the results of the improved algorithm contained the fewest. Table 1 shows that the improved algorithm had the highest PSNR of the five algorithms, indicating the strongest resistance to Gaussian noise. Table 2 shows that the MCR of the improved algorithm was the lowest of all the algorithms, indicating that its segmentation results were closest to the ideal segmentation and that its segmentation performance was better. Comparing the PSNR and iteration time of each algorithm in Table 3, the average PSNR of the improved algorithm was 0.7 dB higher than that of the KWFLICM algorithm, and its average iteration time was 500 s shorter [42,43]. The FCM_S and FLICM algorithms had the shortest iteration times, but their PSNRs were 2–5 dB lower than that of the improved algorithm, reflecting the poor anti-noise ability of the FLICM and FCM_S methods. Taking both the PSNR results and the iteration time into account, the improved algorithm had better segmentation performance under Gaussian noise.

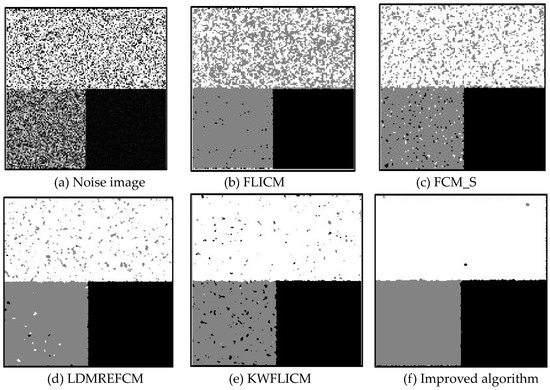

3.2. Image Segmentation Test of Salt-and-Pepper Noise

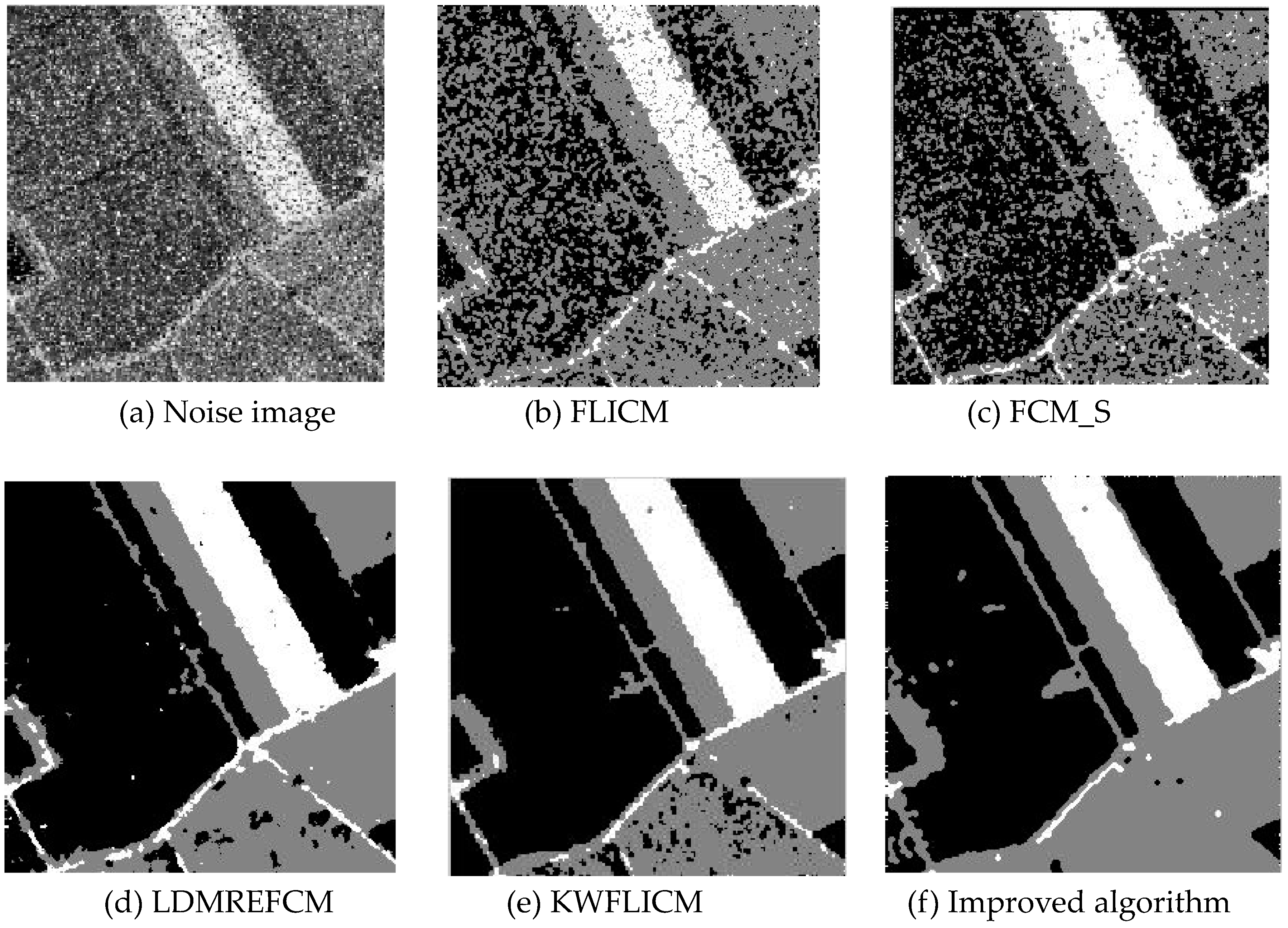

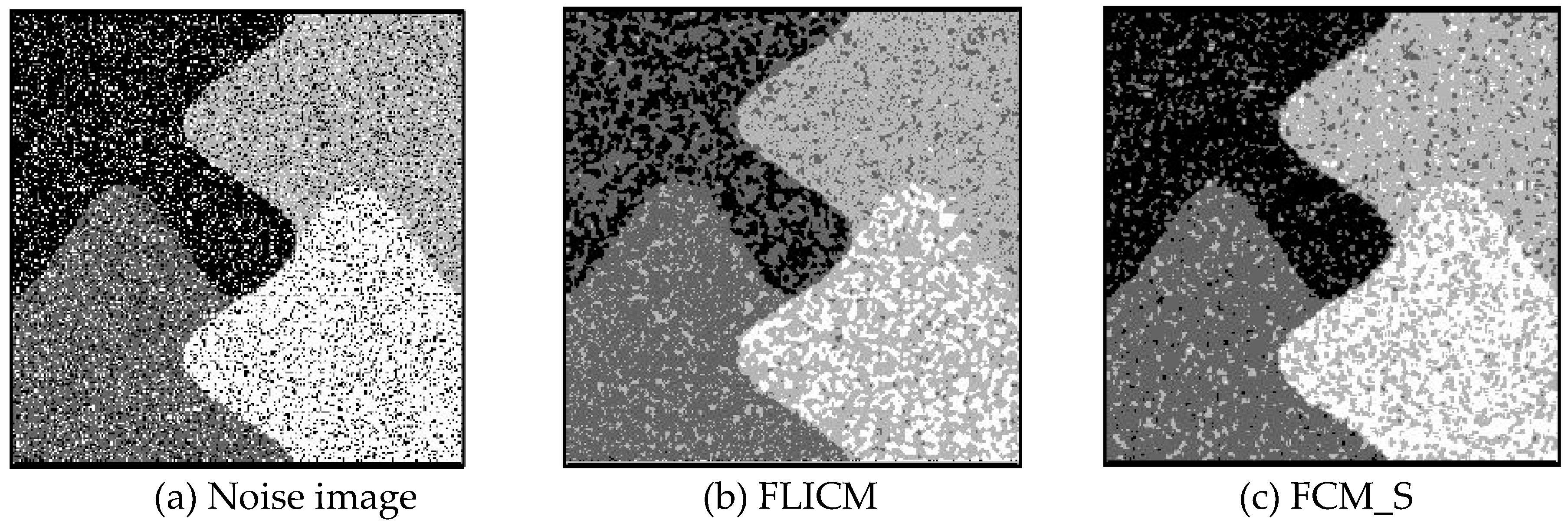

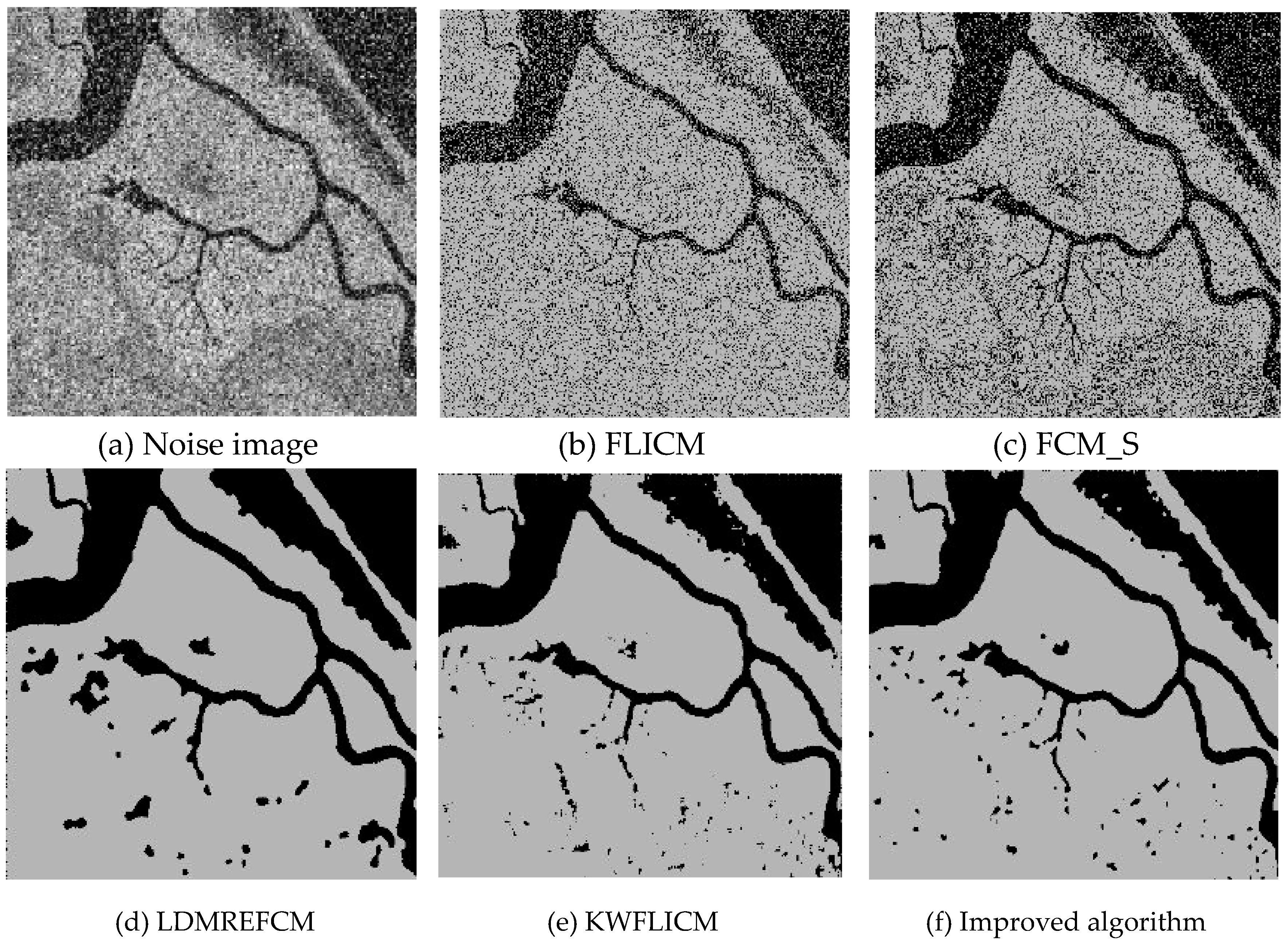

3.2.1. Segmentation Performance Test

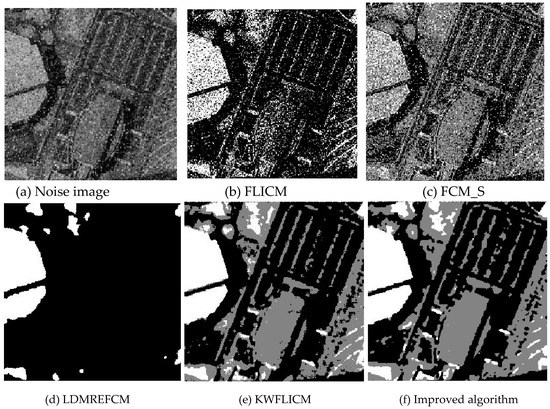

In this experiment, salt-and-pepper noise with densities of 20% and 40% was added to the two remote sensing images, respectively, while salt-and-pepper noise with densities of 40% and 30% was added to the brain CT image and the image containing four artificial categories, respectively. The experimental results are shown in Figure 6, Figure 7, Figure 8 and Figure 9. The numbers of clusters were set to 3, 4, 2, and 2. The PSNRs and error rates are shown in Table 4 and Table 5, respectively, and the iteration time and number of iterations are shown in Table 6.
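Salt-and-pepper noise at a given density can be sketched as follows, under the common convention that a fraction `density` of pixels is replaced, on average half with 0 (pepper) and half with 255 (salt). The even split and the 8-bit range are assumptions of this sketch.

```python
import numpy as np


def add_salt_pepper(img, density, rng=None):
    """Replace about `density` of the pixels: half with 0, half with 255 on average."""
    rng = np.random.default_rng() if rng is None else rng
    out = np.array(img, dtype=float, copy=True)
    r = rng.random(out.shape)                        # one uniform draw per pixel
    out[r < density / 2] = 0.0                       # pepper
    out[(r >= density / 2) & (r < density)] = 255.0  # salt
    return out


clean = np.full((200, 200), 128.0)
noisy = add_salt_pepper(clean, 0.20, rng=np.random.default_rng(2))
```

Unlike Gaussian noise, corrupted pixels carry no information about the original value, which is why median-based neighborhood statistics tend to handle this noise type well.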

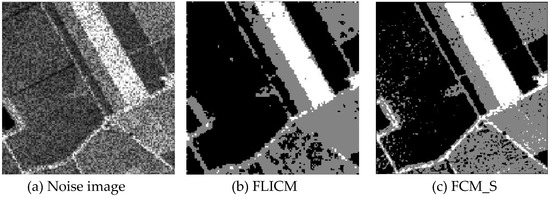

Figure 6.

Disturbance of salt-and-pepper noise on remote sensing image 1 (a) and the segmentation results (b–f).

Figure 7.

Remote sensing image 4 disturbed by salt-and-pepper noise (a) and the segmentation results (b–f).

Figure 8.

Salt-and-pepper noise interfering with brain slice images (a) and the segmentation results (b–f).

Figure 9.

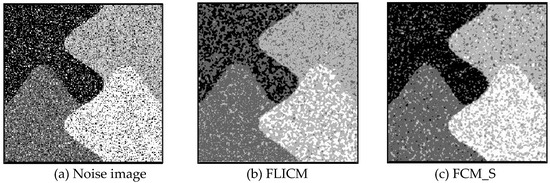

Images containing four artificial categories disturbed by salt-and-pepper noise (a) and the segmentation results (b–f).

Table 4.

Comparison of the PSNR (dB) for algorithms applied to images disturbed by salt-and-pepper noise.

Table 5.

Comparison of the MCR (%) for algorithms applied to images disturbed by salt-and-pepper noise.

Table 6.

Operation time and number of iterations for each algorithm.

3.2.2. Test Result

Comparing the segmentation results for salt-and-pepper noise in Figure 6, Figure 7, Figure 8 and Figure 9, we can see that the FCM_S and FLICM algorithms, which take neighborhood information into account, suppressed some of the salt-and-pepper noise, but under strong noise their segmentation results still contained much more noise than those of the improved algorithm. The segmentation results in Figure 6, Figure 7, Figure 8 and Figure 9 also show that the LDMREFCM algorithm produced false segmentation. The KWFLICM algorithm and the improved algorithm removed a large number of noise points. The PSNR and error rate (ERR) results in Table 4 and Table 5, together with the iteration times in Table 6, show that the LDMREFCM, KWFLICM, and improved algorithms suppressed noise significantly better than the FCM_S and FLICM algorithms. Table 6 shows that the iteration time of the improved algorithm was the shortest. Although the PSNR of the improved algorithm was 0.7 dB lower than that of the KWFLICM algorithm [44,45], its iteration time was 300 s shorter; similarly, for the brain CT image, the PSNR of the improved algorithm in Table 6 was 0.7 dB lower than that of the KWFLICM algorithm, but its iteration time was 45 s shorter. In summary, compared with the FCM_S, FLICM, KWFLICM, and LDMREFCM algorithms, the proposed algorithm suppressed most of the salt-and-pepper noise while iterating faster, giving superior overall performance.

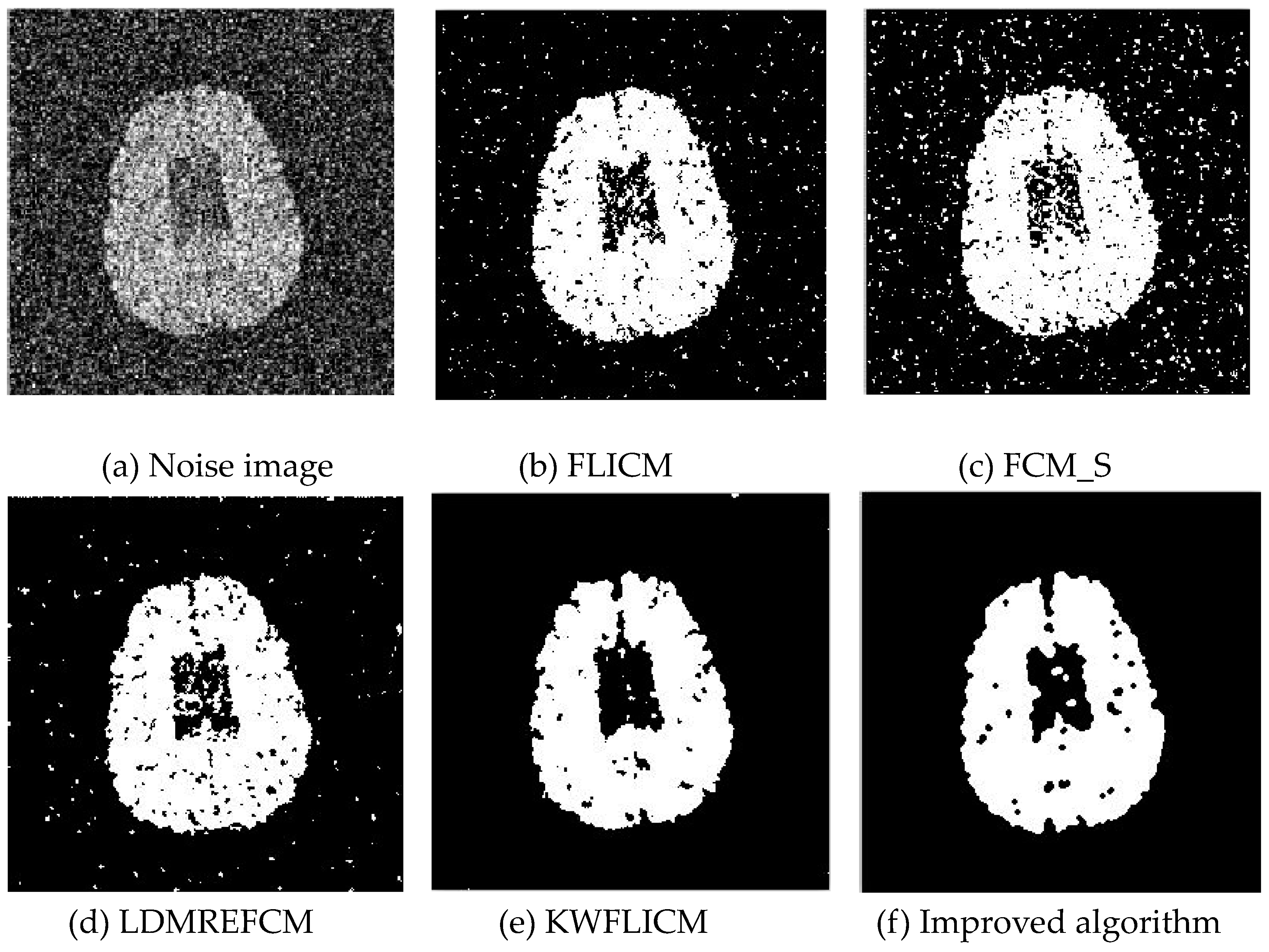

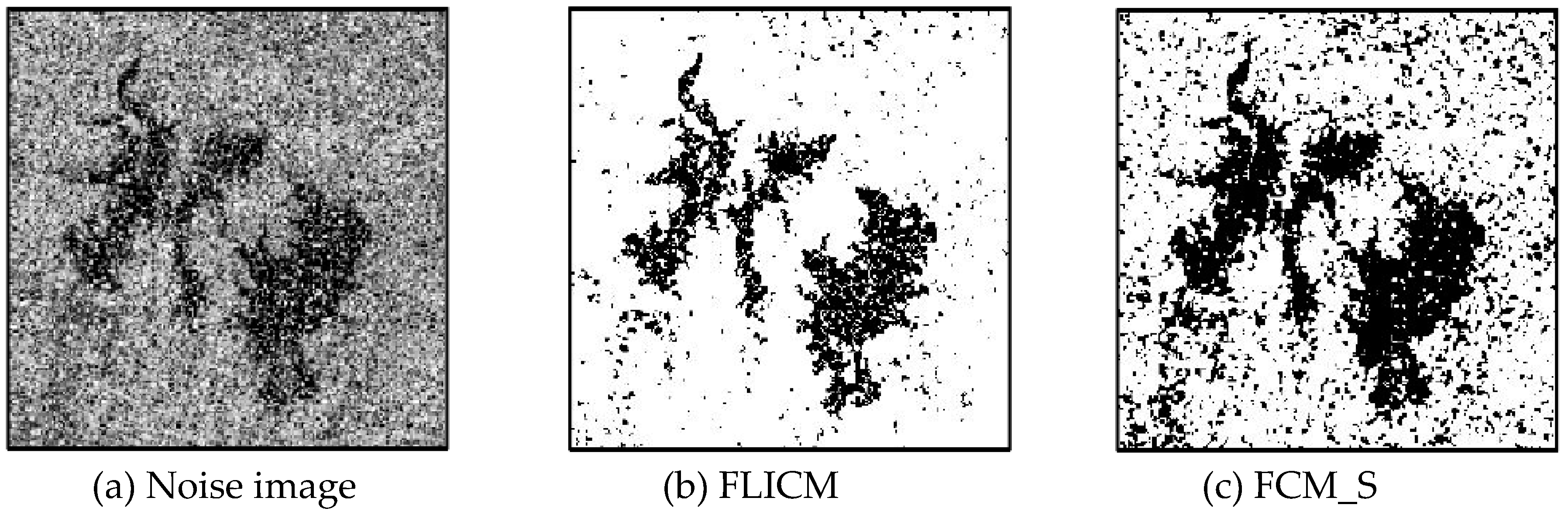

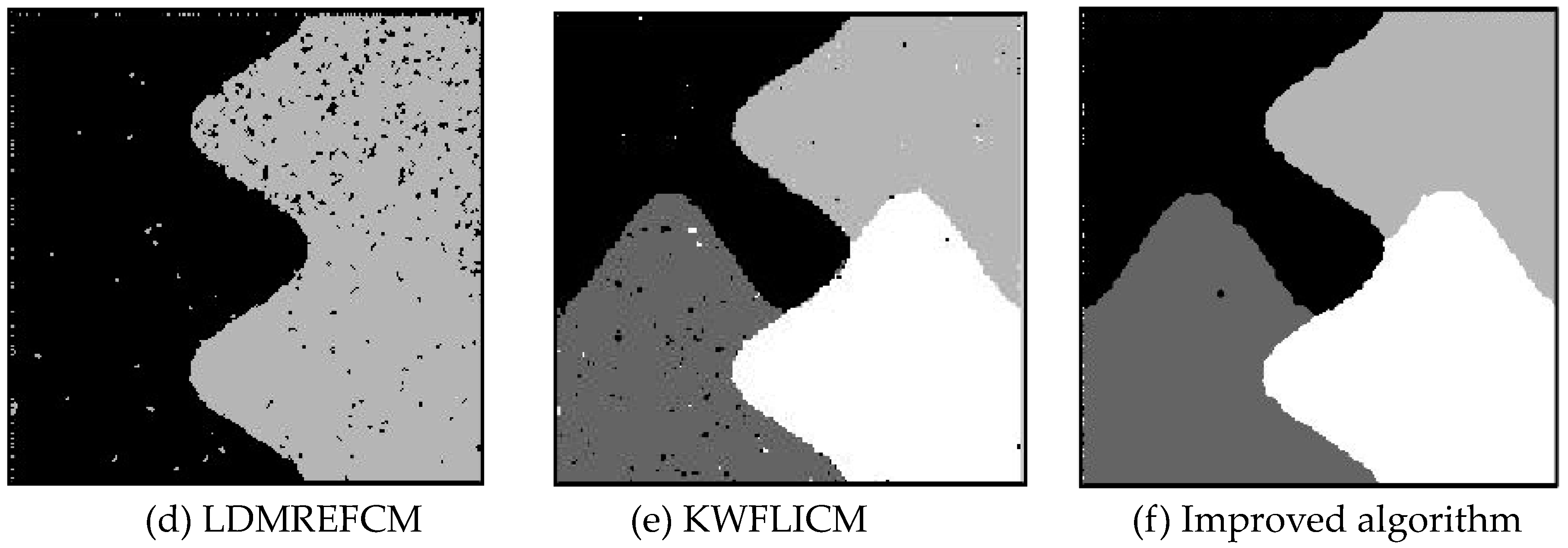

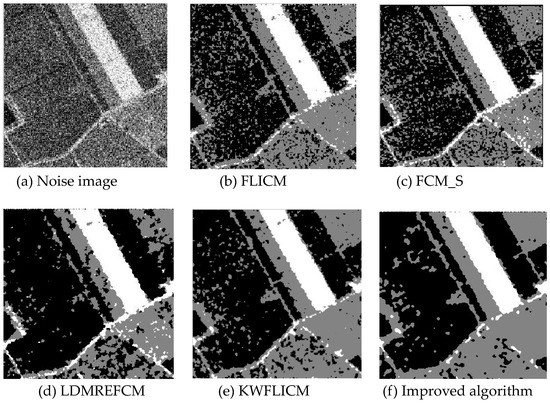

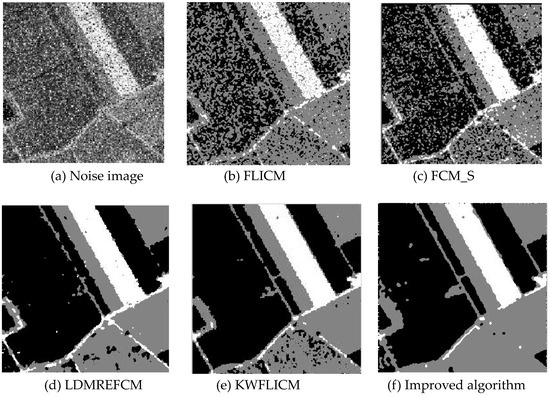

3.3. Image Segmentation Test with Multiplicative Noise

3.3.1. Segmentation Performance Test

Multiplicative noise with a mean value of 0 and mean variances of 80, 114, 140, and 161 was added to the remote sensing images, the medical image, and the artificial image. The numbers of clusters were set to 3, 4, 2, and 2. The experimental results are shown in Figure 10, Figure 11, Figure 12 and Figure 13. The PSNR and error rate of the segmentation results are shown in Table 7 and Table 8, respectively, and the iteration time and number of iterations of the algorithms are shown in Table 9 [46,47,48].
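Multiplicative (speckle-type) noise can be sketched as J = I + n·I with zero-mean noise n. How the reported variances (80 to 161) map onto the variance of n is not stated, so the sketch assumes Gaussian n and an image scaled to [0, 1] with a small illustrative variance; both choices are assumptions.

```python
import numpy as np


def add_multiplicative_noise(img, var, rng=None):
    """J = I + n * I with n ~ N(0, var); img is assumed to lie in [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    img = np.asarray(img, dtype=float)
    n = rng.normal(0.0, np.sqrt(var), size=img.shape)
    return np.clip(img + n * img, 0.0, 1.0)


clean = np.zeros((200, 200))
clean[:, 100:] = 0.5                  # dark half and mid-gray half
noisy = add_multiplicative_noise(clean, var=0.01, rng=np.random.default_rng(3))
```

The noise amplitude scales with intensity: the dark half stays exactly 0, while the mid-gray half fluctuates with a standard deviation of about 0.5 × 0.1 = 0.05. This intensity dependence is what distinguishes multiplicative noise from the additive Gaussian case.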

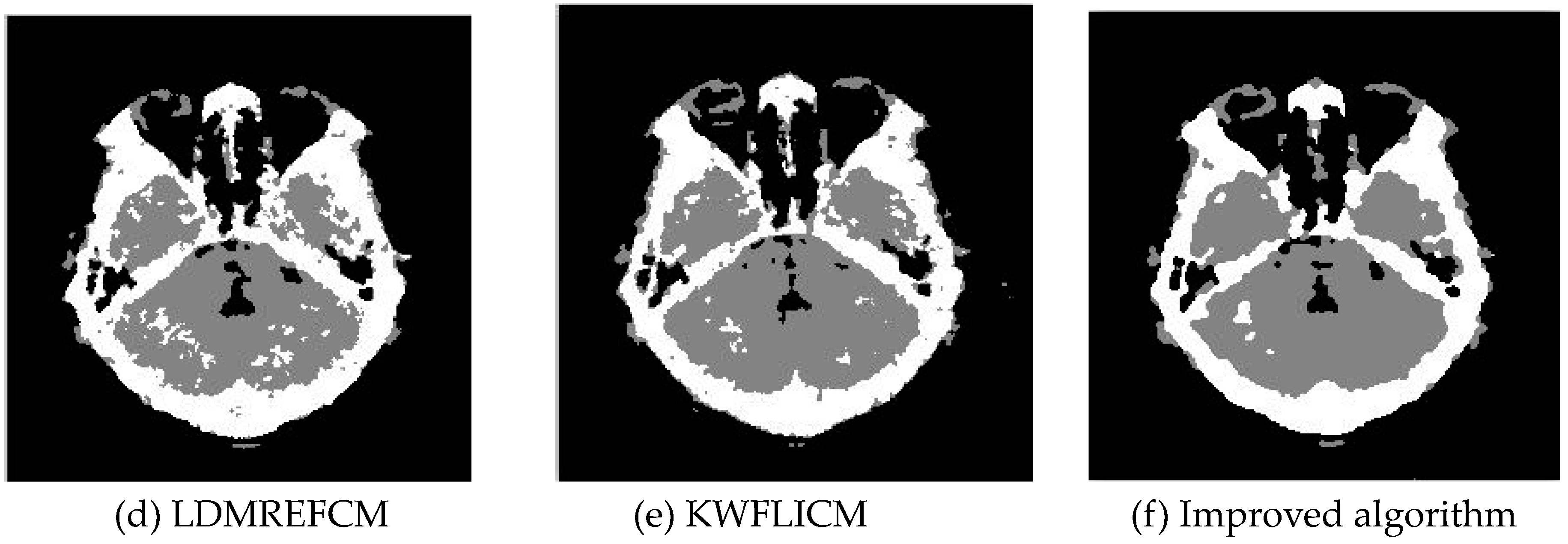

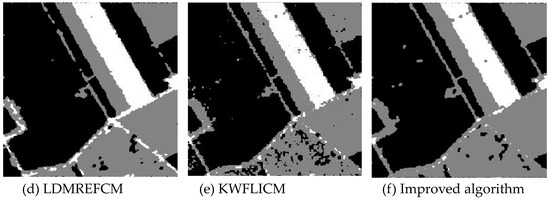

Figure 10.

Multiplicative noise disturbing remote sensing image 1 (a) and the segmentation results (b–f).

Figure 11.

Multiplicative noise disturbing remote sensing image 3 (a) and the segmentation results (b–f).

Figure 12.

Multiplicative noise disturbing brain CT images (a) and the segmentation results (b–f).

Figure 13.

Multiplicative noise interfering with three types of artificial images (a) and the segmentation results (b–f).

Table 7.

Comparison of the PSNR (dB) of each algorithm on images with multiplicative noise.

Table 8.

Comparison of the MCR (%) of each algorithm on images with multiplicative noise.

Table 9.

Iteration time and number of iterations for each algorithm.

3.3.2. Test Result

Comparing the segmentation results for multiplicative noise in Figure 10, Figure 11, Figure 12 and Figure 13, we can see that the FCM_S and FLICM algorithms, which take neighborhood information into account, suppressed part of the multiplicative noise, while the KWFLICM and LDMREFCM algorithms removed a large number of noise points. The results of the improved algorithm contained the fewest noise points, and the edges of its segmentation results were continuous and smooth [49]. As Table 7 shows, the improved algorithm had the highest PSNR, indicating better robustness against multiplicative noise. The error rates in Table 8 show that the segmentation results of the improved algorithm were closer to the ideal segmentation and that its segmentation performance was better. Together with the iteration times in Table 9, these results show that the KWFLICM algorithm had lower segmentation performance and PSNR than the improved algorithm, and a much longer iteration time. In conclusion, the improved algorithm not only maintained good robustness against noise but also reduced the iteration time and improved the operational efficiency of the algorithm.

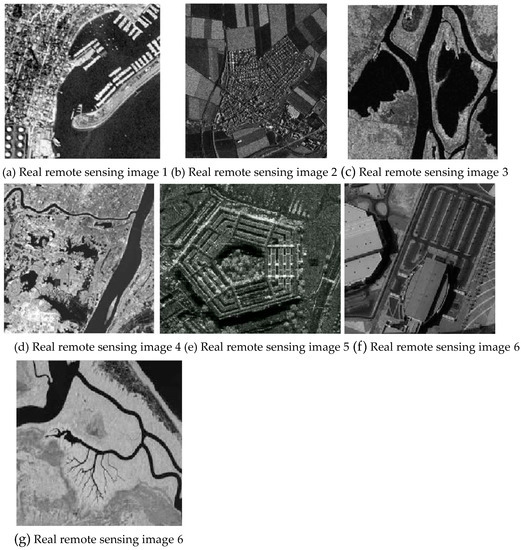

3.4. Segmentation Efficiency Test

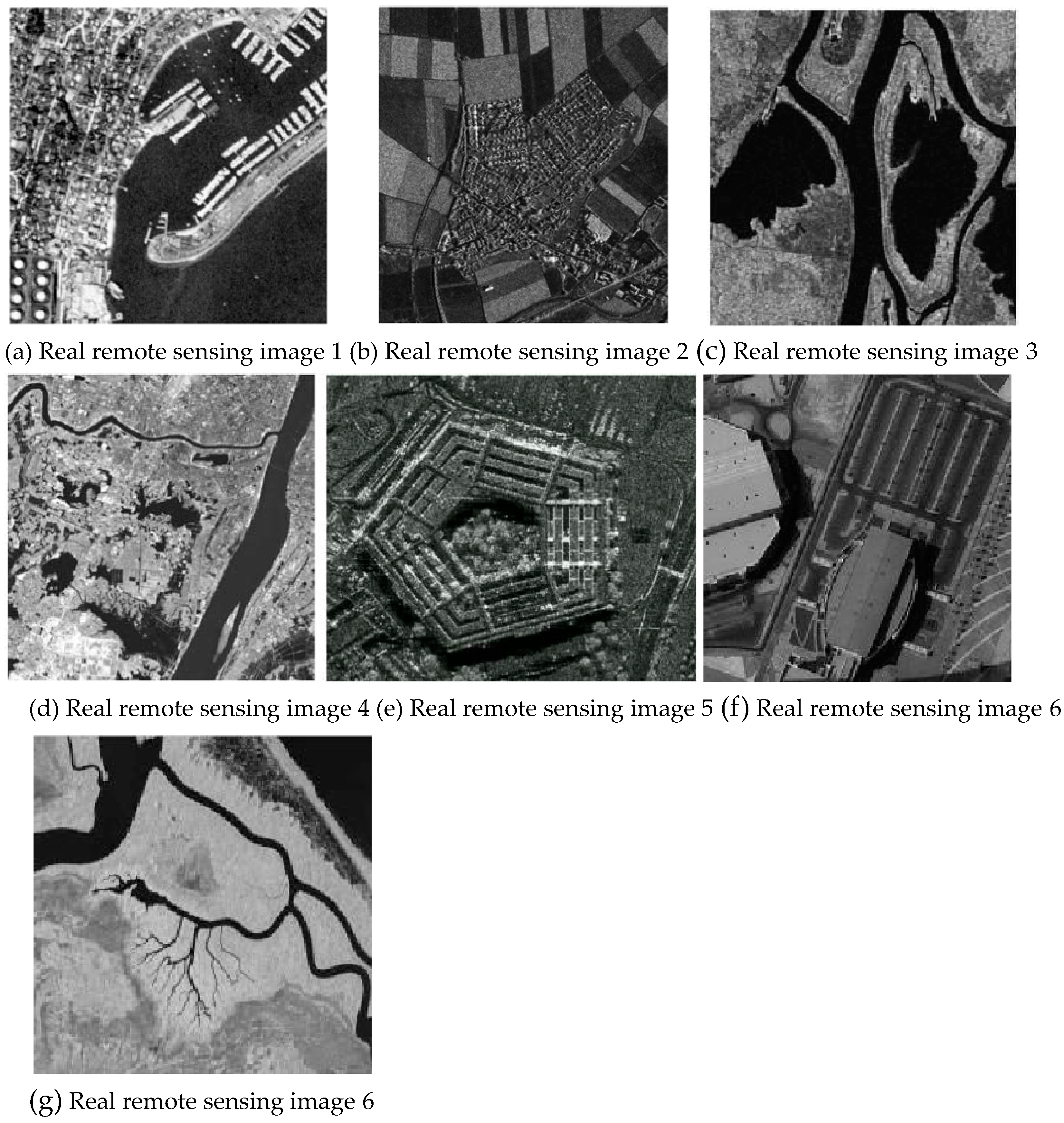

To test the segmentation efficiency of the algorithms, several real remote sensing images of different sizes were selected for segmentation. Table 10 compares the segmentation times for seven real remote sensing images of different sizes (Figure 14a–g, with sizes of 256 × 256, 532 × 486, 350 × 290, 500 × 500, 590 × 490, 700 × 680, and 1024 × 768, respectively); bold values indicate the best result. The four comparison algorithms had lower segmentation efficiency on every real remote sensing image, and their segmentation time grew with image size, whereas the improved algorithm required less segmentation time for images of all sizes and was much more efficient than the other algorithms. This analysis shows that the proposed algorithm is efficient and has practical significance and reference value for large-scale remote sensing image processing.

Table 10.

Segmentation time of real remote sensing images with different sizes by each algorithm.

Figure 14.

Real remote sensing images.

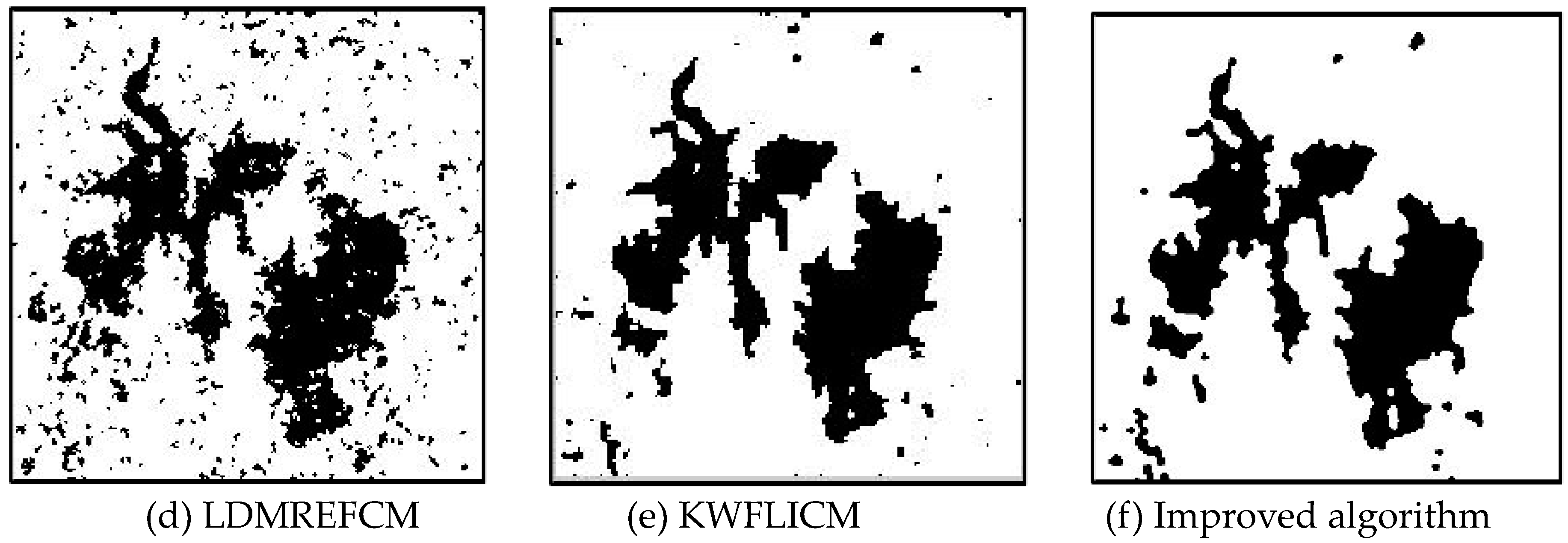

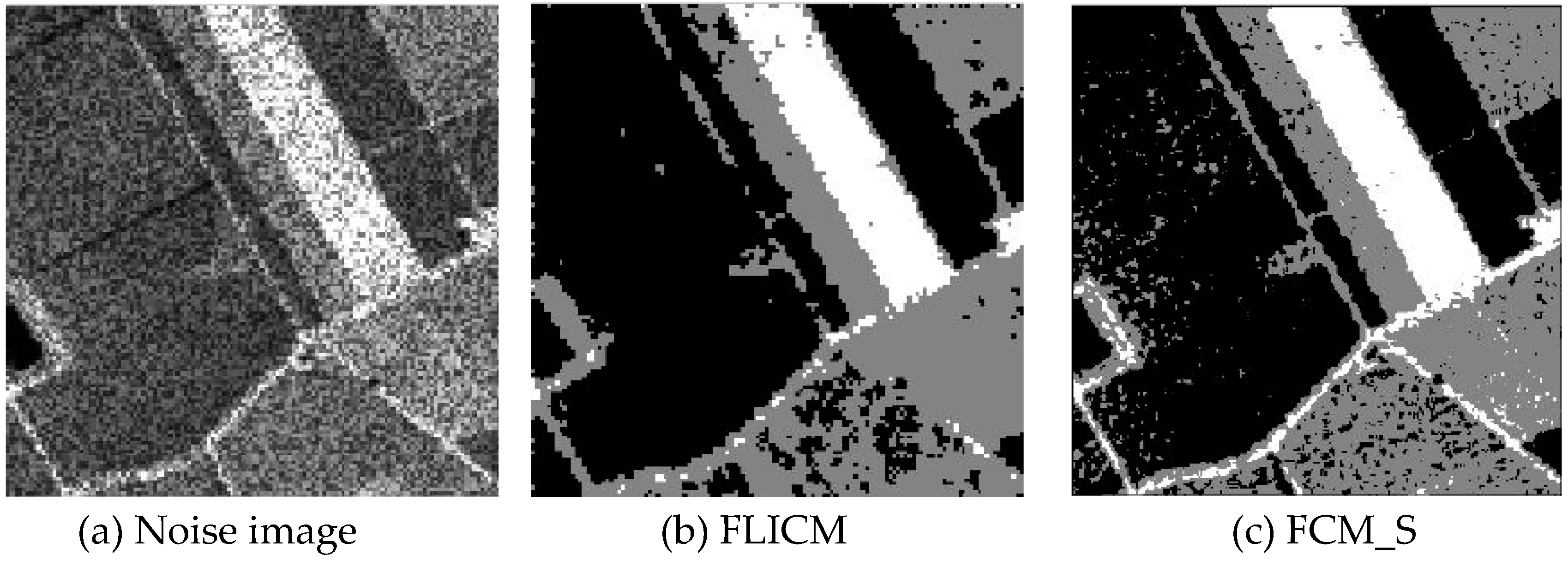

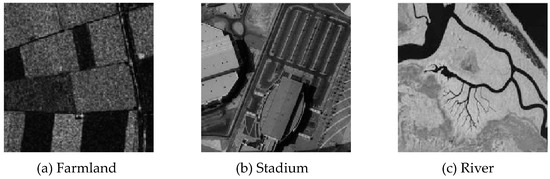

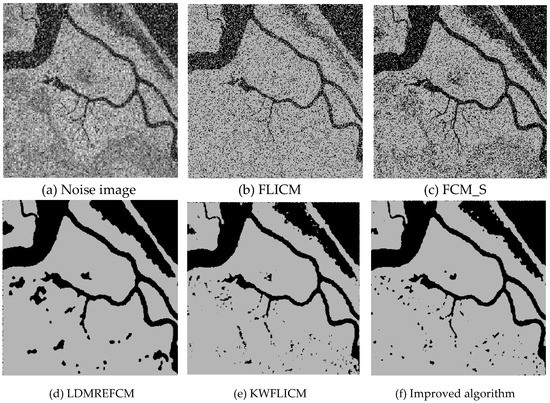

3.5. Segmentation Test of Remote Sensing Images Disturbed Using Mixed Noise

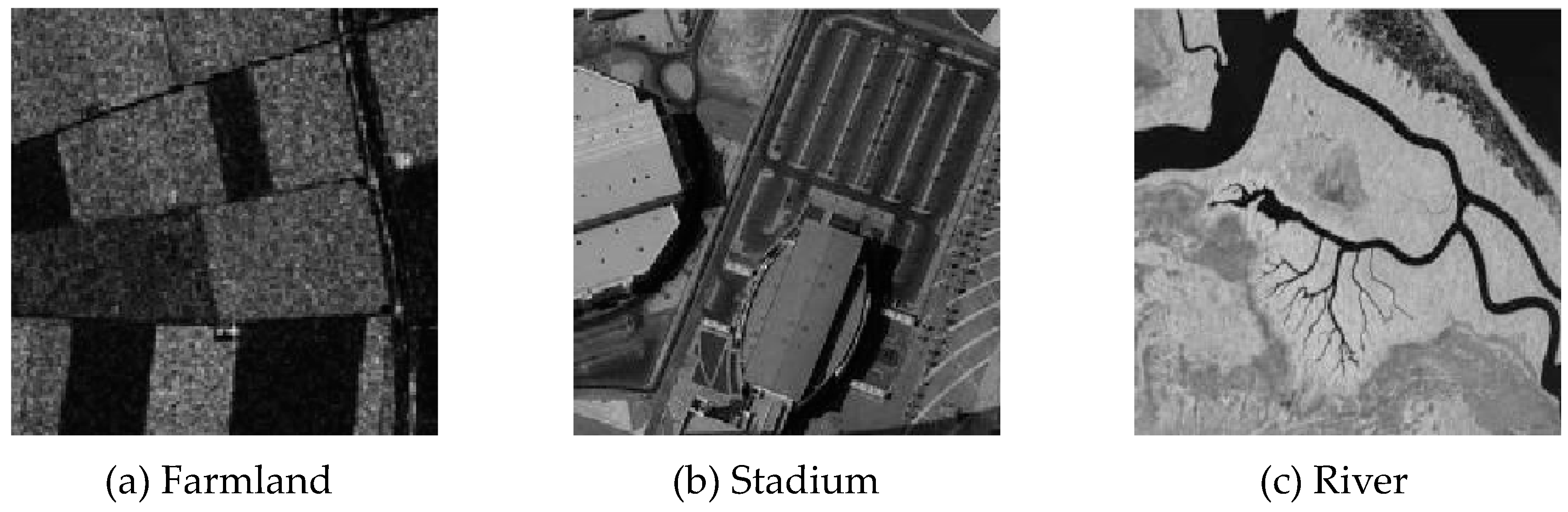

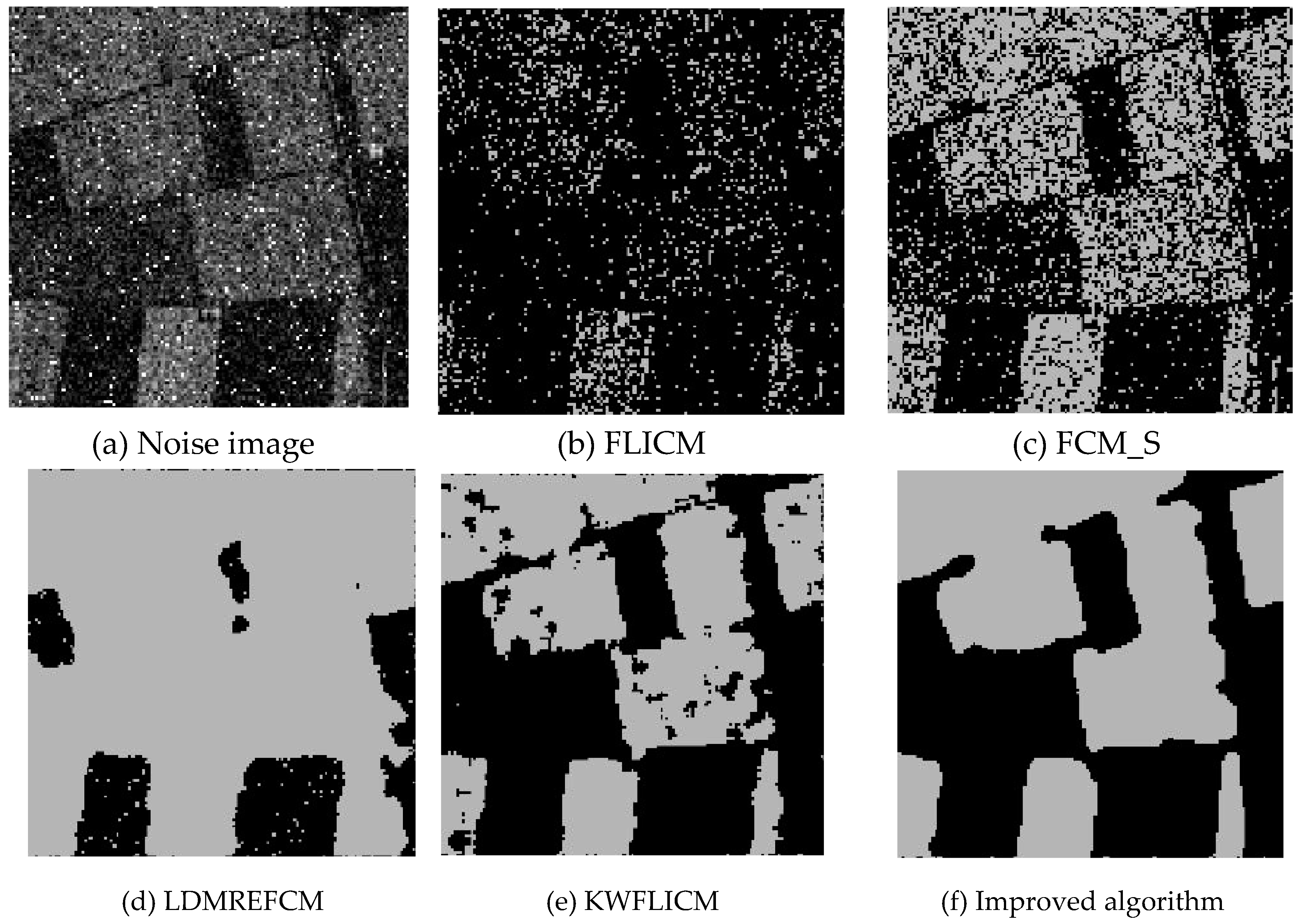

Three remote sensing images, showing farmland, a stadium, and a river (Figure 15), were segmented after adding Gaussian noise (mean value 0, mean square deviation 25) and salt-and-pepper noise of different intensities (5%, 10%, and 30%). The numbers of clusters were set to 2, 3, and 2, and the segmentation results are shown in Figure 16, Figure 17 and Figure 18.
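The mixed-noise images can be sketched by applying zero-mean Gaussian noise and then salt-and-pepper noise. Reading "mean square deviation 25" as a standard deviation of 25 gray levels, and applying the Gaussian component first, are assumptions of this sketch.

```python
import numpy as np


def add_mixed_noise(img, sigma=25.0, density=0.10, rng=None):
    """Gaussian noise (std sigma), then salt-and-pepper noise of the given density."""
    rng = np.random.default_rng() if rng is None else rng
    out = np.clip(np.asarray(img, dtype=float)
                  + rng.normal(0.0, sigma, size=np.shape(img)), 0.0, 255.0)
    r = rng.random(out.shape)
    out[r < density / 2] = 0.0                        # pepper
    out[(r >= density / 2) & (r < density)] = 255.0   # salt
    return out


clean = np.full((200, 200), 128.0)
noisy = {d: add_mixed_noise(clean, 25.0, d, np.random.default_rng(4))
         for d in (0.05, 0.10, 0.30)}
```

Applying salt-and-pepper last guarantees that the impulse pixels take exactly the extreme values, so the impulse density matches the nominal percentage regardless of the Gaussian component.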

Figure 15.

Original remote sensing images.

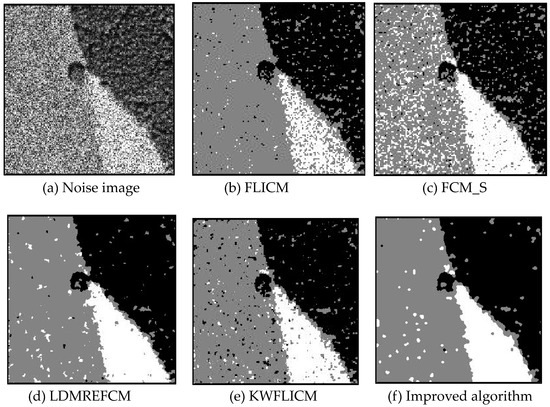

Figure 16.

Interference of mixed noise on the farmland image (a) and the segmentation results (b–f).

Figure 17.

Interference of mixed noise on the stadium image (a) and the segmentation results (b–f).

Figure 18.

Interference of mixed noise on the river image (a) and the segmentation results (b–f).

As Table 11 and Table 12 show, the improved algorithm handled images corrupted by mixed salt-and-pepper and Gaussian noise better than the comparison algorithms.

Table 11.

PSNR (dB) comparison of the algorithms under mixed noise.

Table 12.

MCR (%) comparison of the algorithms under mixed noise.
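Tables 11 and 12 evaluate the results with PSNR and MCR. A minimal sketch of both metrics follows, assuming the common definitions: PSNR = 10·log10(255² / MSE) between a reference image and the segmentation result, and MCR as the percentage of pixels whose label disagrees with the ground truth after the best one-to-one matching of cluster labels (cluster indices from a clustering algorithm are arbitrary). The exact reference images and label-matching procedure used in the paper are not given here, so `psnr` and `mcr` are illustrative helpers.

```python
from itertools import permutations

import numpy as np


def psnr(reference, result, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    mse = np.mean((reference.astype(float) - result.astype(float)) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)


def mcr(truth_labels, pred_labels):
    """Misclassification rate (%) after the best one-to-one relabeling of clusters.

    Brute force over label permutations, so only suitable for a few clusters.
    """
    truth = np.asarray(truth_labels).ravel()
    pred = np.asarray(pred_labels).ravel()
    classes = np.unique(np.concatenate([truth, pred]))   # sorted label set
    idx = np.searchsorted(classes, pred)                  # position of each predicted label
    best = 0
    for perm in permutations(classes):
        remapped = np.asarray(perm)[idx]                  # apply candidate relabeling
        best = max(best, int(np.sum(remapped == truth)))
    return 100.0 * (1.0 - best / truth.size)
```

Trying all c! permutations is cheap for the 2 or 3 clusters used in these tests; for many clusters, the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`) is the usual replacement.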

4. Conclusions

The FLICM algorithm combines the spatial information, gray-level information, and fuzzy classification information of neighborhood pixels, which improves its anti-noise performance. However, it does not take into account the impact of different features on clustering. Additionally, FLICM does not minimize its objective function strictly by the Lagrange multiplier method, so it easily falls into local optima and iterates slowly. In this study, the FLICM algorithm was improved as follows. First, the membership degree was introduced into the local constraint information of the FLICM algorithm. Second, to account for the influence of features on clustering, the feature saliency was introduced into the objective function. Finally, a neighborhood weighting function was constructed from the classification membership degree and used to process the membership degree, yielding a local fuzzy clustering algorithm based on feature selection. The improved algorithm was compared with existing robust clustering segmentation algorithms in clustering segmentation tests on noisy images, and the segmentation results were compared objectively in terms of the PSNR and error rate. These comparisons demonstrated the effectiveness and practicability of the proposed algorithm.
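For reference, the baseline FLICM iteration of Krinidis and Chatzis [18], which the improved algorithm builds on, can be sketched for a grayscale image with a 3 × 3 neighborhood. Each pixel's squared distance to a cluster center is augmented by the fuzzy factor G_ki = sum over neighbors j ≠ i of (1 − u_kj)^m ||x_j − v_k||² / (d_ij + 1), where d_ij is the spatial distance between pixels i and j. This is not the proposed feature-selection algorithm, whose feature saliency and neighborhood weighting terms are not reproduced here; `flicm_gray` is a hypothetical name, and the random initialization and convergence tolerance are assumptions.

```python
import numpy as np


def _neighbor(a, dy, dx):
    """out[..., y, x] = a[..., y + dy, x + dx]; zero where that neighbor is outside the image."""
    h, w = a.shape[-2:]
    out = np.zeros_like(a)
    out[..., max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)] = \
        a[..., max(0, dy):h - max(0, -dy), max(0, dx):w - max(0, -dx)]
    return out


def flicm_gray(img, c, m=2.0, max_iter=100, eps=1e-5, seed=0):
    """Baseline FLICM on a grayscale image; returns (labels, centers, memberships)."""
    x = img.astype(float)
    rng = np.random.default_rng(seed)
    u = rng.random((c,) + x.shape)
    u /= u.sum(axis=0, keepdims=True)
    offsets = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]
    for _ in range(max_iter):
        um = u ** m
        v = (um * x).sum(axis=(1, 2)) / um.sum(axis=(1, 2))   # cluster centers v_k
        d2 = (x[None] - v[:, None, None]) ** 2                # ||x_i - v_k||^2, shape (c, h, w)
        # Fuzzy factor: neighbors weighted by 1 / (spatial distance + 1).
        term = (1.0 - u) ** m * d2
        g = np.zeros_like(d2)
        for dy, dx in offsets:
            g += _neighbor(term, dy, dx) / (np.hypot(dy, dx) + 1.0)
        # Membership update: u_ki proportional to (d2 + G)^(-1/(m-1)), normalized over k.
        inv = (d2 + g + 1e-12) ** (-1.0 / (m - 1.0))
        u_new = inv / inv.sum(axis=0, keepdims=True)
        converged = np.abs(u_new - u).max() < eps
        u = u_new
        if converged:
            break
    um = u ** m
    v = (um * x).sum(axis=(1, 2)) / um.sum(axis=(1, 2))       # centers for the final memberships
    return u.argmax(axis=0), v, u
```

Border pixels simply have fewer neighbors, because out-of-image positions contribute nothing to G_ki.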

Author Contributions

All authors contributed to the article. H.R. conceived and designed the simulations under the supervision of T.H. H.R. performed the experiments, analyzed the data, and wrote the paper. T.H. reviewed the manuscript and provided valuable suggestions. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Fundamental Research Funds for the Central Universities (No. 2412019FZ037).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Vrooman, H.A.; Cocosco, C.A.; Lijn, F.v.d.; Stokking, R.; Ikram, M.A.; Vernooij, M.W.; Breteler, M.M.B.; Niessen, W.J. Multi-spectral brain tissue segmentation using automatically trained k-nearest-neighbor classification. NeuroImage 2007, 37, 71–81.

- Kim, S.; Yoo, C.D.; Nowozin, S.; Kohli, P. Image segmentation using higher-order correlation clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1761–1774.

- Pereyra, M.; McLaughlin, S. Fast unsupervised Bayesian image segmentation with adaptive spatial regularisation. IEEE Trans. Image Process. 2017, 26, 2577–2587.

- Dunn, J.C. A fuzzy relative of the ISODATA process and its use in detecting compact well-separated clusters. J. Cybern. 1973, 3, 32–57.

- Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Plenum Press: New York, NY, USA, 1981.

- Herman, G.T.; Carvalho, B.M. Multiseeded segmentation using fuzzy connectedness. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 460–474.

- Rueda, S.; Knight, C.L.; Papageorghiou, A.T.; Noble, J.A. Feature-based fuzzy connectedness segmentation of ultrasound images with an object completion step. Med. Image Anal. 2015, 26, 30–46.

- Dokur, Z.; Olmez, T. Segmentation of ultrasound images by using a hybrid neural network. Pattern Recognit. Lett. 2002, 23, 1824–1836.

- Seyedhosseini, M.; Tasdizen, T. Multi-class multi-scale series contextual model for image segmentation. IEEE Trans. Image Process. 2013, 22, 4486–4496.

- Vese, L.A.; Chan, T.F. A multiphase level set framework for image segmentation using the Mumford and Shah model. Int. J. Comput. Vis. 2002, 50, 271–293.

- Cai, W.; Chen, S.; Zhang, D. Fast and robust fuzzy c-means clustering algorithms incorporating local information for image segmentation. Pattern Recognit. 2007, 40, 825–838.

- Nguyen, T.M.; Wu, Q.M.J. Gaussian mixture model based spatial neighborhood relationships for pixel labeling problem. IEEE Trans. Syst. Man Cybern. 2012, 42, 193–202.

- Li, C.; Kao, C.Y.; Gore, J.C.; Ding, Z. Minimization of region-scalable fitting energy for image segmentation. IEEE Trans. Image Process. 2008, 17, 1940–1949.

- Wang, X.; Min, H.; Zou, L.; Zhang, Y.-G. A novel level set method for image segmentation by incorporating local statistical analysis and global similarity measurement. Pattern Recognit. 2015, 48, 189–204.

- Wang, X.; Tang, Y.; Masnou, S.; Chen, L. A global/local affinity graph for image segmentation. IEEE Trans. Image Process. 2015, 24, 1399–1411.

- Ju, Z.; Liu, H. Fuzzy Gaussian mixture models. Pattern Recognit. 2012, 45, 1146–1158.

- Chen, S.M.; Chang, Y.C. Multivariable fuzzy forecasting based on fuzzy clustering and fuzzy rule interpolation techniques. Inf. Sci. 2010, 180, 4772–4783.

- Krinidis, S.; Chatzis, V. A robust fuzzy local information C-means clustering algorithm. IEEE Trans. Image Process. 2010, 19, 1328–1337.

- Zhang, X.; Zhang, C.; Tang, W.; Wei, Z. Medical image segmentation using improved FCM. Sci. China Inf. Sci. 2012, 55, 1052–1061.

- Zhao, X.; Li, Y.; Zhao, Q. Mahalanobis distance based on fuzzy clustering algorithm for image segmentation. Digit. Signal Process. 2015, 3, 8–16.

- Sikka, K.; Sinha, N.; Singh, P.K.; Mishra, A.K. A fully automated algorithm under modified FCM framework for improved brain MR image segmentation. Magn. Reson. Imaging 2009, 27, 994–1004.

- Benaichouche, A.N.; Oulhadj, H.; Siarry, P. Improved spatial fuzzy c-means clustering for image segmentation using PSO initialization, Mahalanobis distance and post-segmentation correction. Digit. Signal Process. 2013, 23, 1390–1400.

- Kandwal, R.; Kumar, A.; Bhargava, S. Review: Existing image segmentation techniques. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2014, 4, 153–156.

- Khan, A.M.; Ravi, S. Segmentation methods: A comparative study. Int. J. Soft Comput. Eng. 2013, 4, 84–92.

- Shivhare, P.; Gupta, V. Review of image segmentation techniques including pre & post processing operations. Int. J. Eng. Adv. Technol. 2015, 4, 153–157.

- Dass, R.; Devi, S. Image segmentation techniques. Graph. Models Image Process. 2012, 29, 100–132.

- Marr, D.; Hildreth, E. Theory of edge detection. Proc. R. Soc. Lond. B 1980, 207, 187–217.

- Kuang, Y.H. Applications of an enhanced cluster validity index method based on the fuzzy C-means and rough set theories to partition and classification. Expert Syst. Appl. 2010, 37, 8757–8769.

- Vandenbroucke, N.; Macaire, L.; Postaire, J.G. Color image segmentation by supervised pixel classification in a color texture feature space: Application to soccer image segmentation. In Proceedings of the 15th International Conference on Pattern Recognition, Barcelona, Spain, 3–7 September 2000.

- Hou, X.; Zhang, T.; Xiong, G.; Lu, Z.; Xie, K. A novel steganalysis framework of heterogeneous images based on GMM clustering. Signal Process. Image Commun. 2014, 29, 385–399.

- Zhao, B.; Zhong, Y.; Ma, A.; Zhang, L. A spatial Gaussian mixture model for optical remote sensing image clustering. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1–12.

- Yang, M.S.; Lai, C.Y.; Lin, C.Y. A robust EM clustering algorithm for Gaussian mixture models. Pattern Recognit. 2012, 45, 3950–3961.

- Lin, P.L.; Huang, P.W.; Kuo, C.H.; Lai, Y.H. A size-insensitive integrity-based fuzzy C-means method for data clustering. Pattern Recognit. 2014, 47, 2042–2056.

- Chen, S.; Zhang, D. Robust image segmentation using FCM with spatial constraints based on new kernel-induced distance measure. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2004, 34, 1907–1916.

- Hussain, R.; Alican Noyan, M.; Woyessa, G.; Marín, R.R.R.; Martinez, P.A.; Mahdi, F.M.; Finazzi, V.; Hazlehurst, T.A.; Hunter, T.H.; Coll, T.; et al. An ultra-compact particle size analyser using a CMOS image sensor and machine learning. Light Sci. Appl. 2020, 9, 21.

- Wei, M.; Xing, F.; You, Z. A real-time detection and positioning method for small and weak targets using a 1D morphology-based approach in 2D images. Light Sci. Appl. 2018, 7, 18006.

- Rivenson, Y.; Liu, T.; Wei, Z.; Zhang, Y.; de Haan, K.; Ozcan, A. PhaseStain: The digital staining of label-free quantitative phase microscopy images using deep learning. Light Sci. Appl. 2019, 8, 23.

- Zhang, D.; Lan, L.; Bai, Y.; Majeed, H.; Kandel, M.E.; Popescu, G.; Cheng, J.-X. Bond-selective transient phase imaging via sensing of the infrared photothermal effect. Light Sci. Appl. 2019, 8, 116.

- Yao, R.; Ochoa, M.; Yan, P.; Intes, X. Net-FLICS: Fast quantitative wide-field fluorescence lifetime imaging with compressed sensing–a deep learning approach. Light Sci. Appl. 2019, 8, 26.

- Liu, C.; Dong, W.-f.; Jiang, K.-m.; Zhou, W.-p.; Zhang, T.; Li, H.-w. Recognition of dense fluorescent droplets using an improved watershed segmentation algorithm. Chin. Opt. 2019, 12, 783–790.

- Hu, H.-r.; Dan, X.-z.; Zhao, Q.-h.; Sun, F.-y.; Wang, Y.-h. Automatic extraction of speckle area in digital image correlation. Chin. Opt. 2019, 12, 1329–1337.

- Wang, X.-s.; Guo, S.; Xu, X.-j.; Li, A.-z.; He, Y.-g.; Guo, W.; Liu, R.-b.; Zhang, W.-j.; Zhang, T.-l. Fast recognition and classification of tetrazole compounds based on laser-induced breakdown spectroscopy and Raman spectroscopy. Chin. Opt. 2019, 12, 888–895.

- Cai, H.-y.; Zhang, W.-q.; Chen, X.-d.; Liu, S.-s.; Han, X.-y. Image processing method for ophthalmic optical coherence tomography. Chin. Opt. 2019, 12, 731–740.

- Wang, J.; He, X.; Wei, Z.-h.; Mu, Z.-y.; Lv, Y.; He, J.-w. Restoration method for blurred star images based on region filters. Chin. Opt. 2019, 12, 321–331.

- Liu, D.-m.; Chang, F.-l. Active contour model for image segmentation based on Retinex correction and saliency. Opt. Precis. Eng. 2019, 27, 1593–1600.

- Lu, B.; Hu, T.; Liu, T. Variable exponential chromaticity filtering for microscopic image segmentation of wire harness terminals. Opt. Precis. Eng. 2019, 27, 1894–1900.

- Deng, J.; Li, J.; Feng, H.; Zeng, Z.-m. Three-dimensional depth segmentation technique utilizing discontinuities of wrapped phase sequence. Opt. Precis. Eng. 2019, 27, 2459–2466.

- Wei, T.; Zhou, Y.-h. Blind sidewalk image location based on machine learning recognition and marked watershed segmentation. Opt. Precis. Eng. 2019, 27, 201–210.

- Zhang, K.-h.; Tan, Z.-h.; Li, B. Automated image segmentation based on pulse coupled neural network with particle swarm optimization and comprehensive evaluation. Opt. Precis. Eng. 2018, 26, 962–970.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).