Real-Time Stripe Width Computation Using Back Propagation Neural Network for Adaptive Control of Line Structured Light Sensors

Abstract

1. Introduction

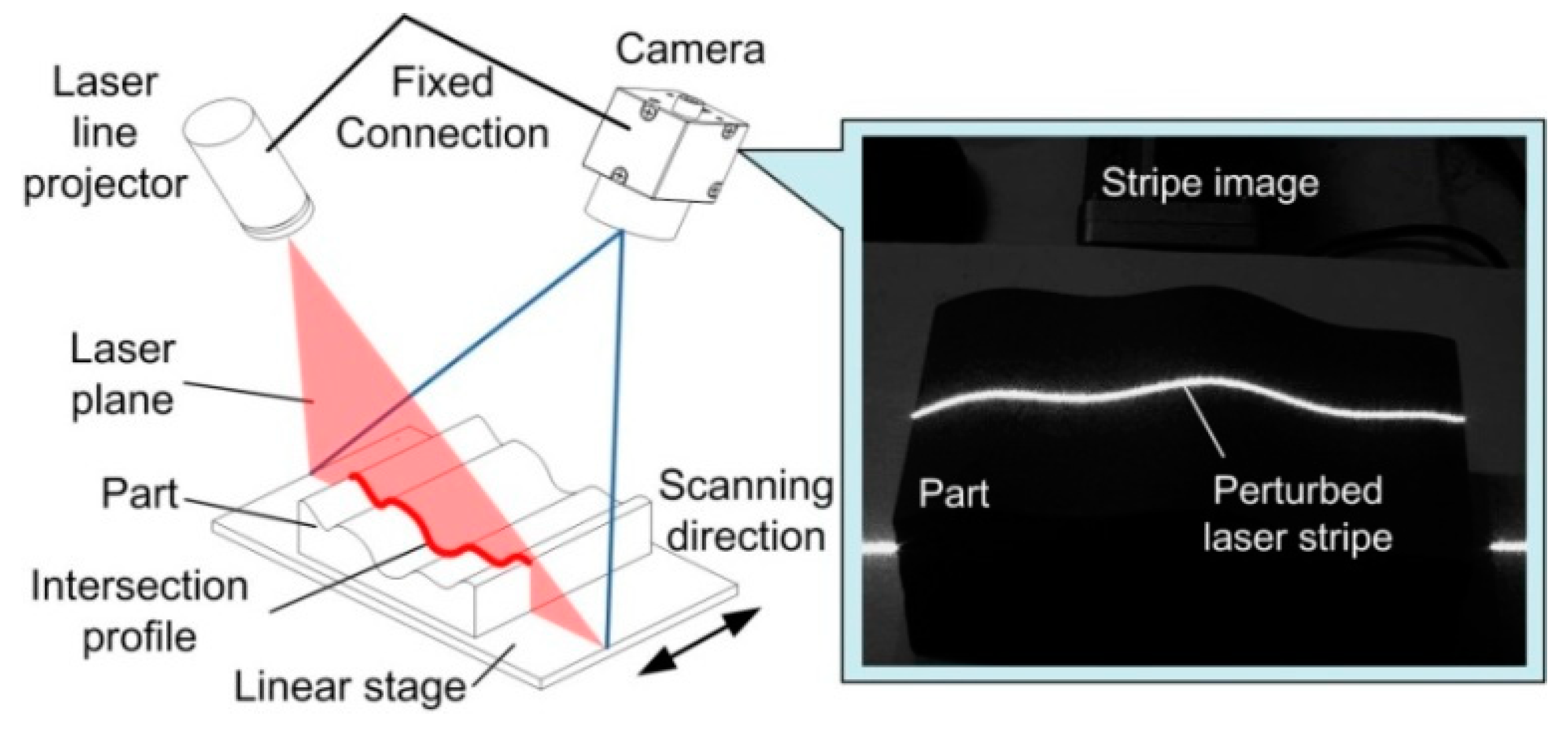

2. Measurement Principle

3. Computation of ASW Using BPNN

3.1. Computation Principle Using BPNN

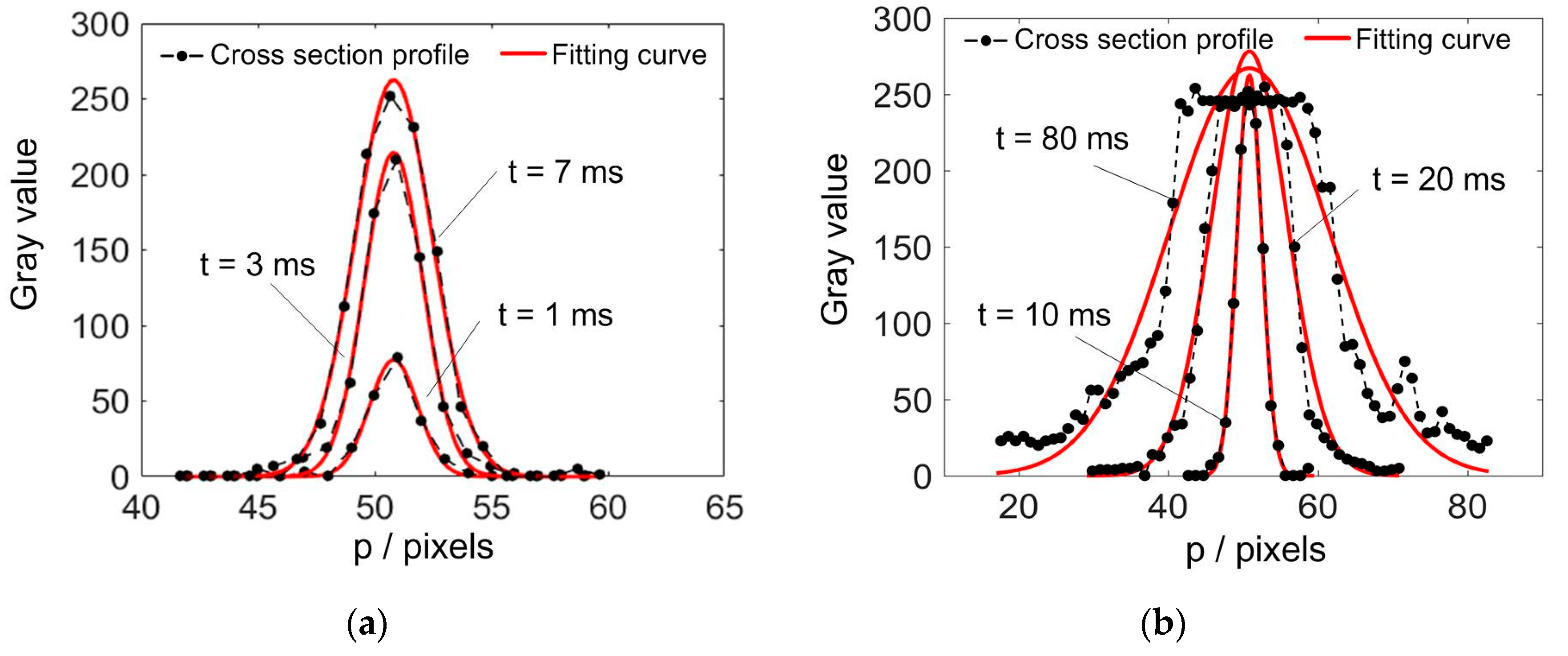

3.2. Compute Reference Cross Section Width Using Gaussian Fitting
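This section computes a reference cross-section width by Gaussian fitting, but the fitting procedure and the exact width definition are not reproduced here. The following is a minimal stdlib sketch that assumes a log-parabola (Caruana-style) least-squares fit. It also assumes the width is reported as the full width at half maximum (FWHM), which may differ from the authors' definition. The names `fit_gaussian_profile` and `fwhm`, and the background threshold `floor`, are hypothetical. This is not the authors' code.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, rhs):
    """Solve m @ v = rhs for a 3x3 system by Cramer's rule."""
    d = _det3(m)
    sol = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        sol.append(_det3(mc) / d)
    return sol


def fit_gaussian_profile(xs, ys, floor=1.0):
    """Fit y = A*exp(-(x - mu)^2 / (2*sigma^2)) to one stripe cross section.

    Least squares on ln(y) = a + b*u + c*u^2, with u = x - x0.
    Samples at or below `floor` (a hypothetical background level) are ignored.
    Needs at least three distinct x positions above the floor.
    Returns (A, mu, sigma).
    """
    pts = [(x, y) for x, y in zip(xs, ys) if y > floor]
    if len(pts) < 3:
        raise ValueError("need at least three samples above the floor")
    x0 = sum(x for x, _ in pts) / len(pts)  # center x for better conditioning
    u = [x - x0 for x, _ in pts]
    ly = [math.log(y) for _, y in pts]
    s = [sum(ui ** k for ui in u) for k in range(5)]  # s[k] = sum of u^k
    t = [sum((ui ** k) * li for ui, li in zip(u, ly)) for k in range(3)]  # sum of u^k * ln y
    a, b, c = _solve3([[s[0], s[1], s[2]],
                       [s[1], s[2], s[3]],
                       [s[2], s[3], s[4]]], t)
    if c >= 0:
        raise ValueError("profile is not peaked; Gaussian fit undefined")
    sigma = math.sqrt(-1.0 / (2.0 * c))
    mu = x0 - b / (2.0 * c)
    amplitude = math.exp(a - b * b / (4.0 * c))
    return amplitude, mu, sigma


def fwhm(sigma):
    """Full width at half maximum of a Gaussian: 2*sqrt(2 ln 2)*sigma."""
    return 2.0 * math.sqrt(2.0 * math.log(2.0)) * sigma


# Usage on a synthetic, noise-free cross section (hypothetical values).
xs = list(range(40, 61))
ys = [200.0 * math.exp(-((x - 50.3) ** 2) / (2.0 * 2.0 ** 2)) for x in xs]
amp, mu, sigma = fit_gaussian_profile(xs, ys)
print(f"mu={mu:.3f} sigma={sigma:.3f} FWHM={fwhm(sigma):.3f}")
```

A log-domain fit weights every sample equally, so noisy tails and saturated (clipped) peaks bias the result. On real stripe images, a weighted fit or a nonlinear least-squares refinement is the usual remedy.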

3.3. Training of BPNN

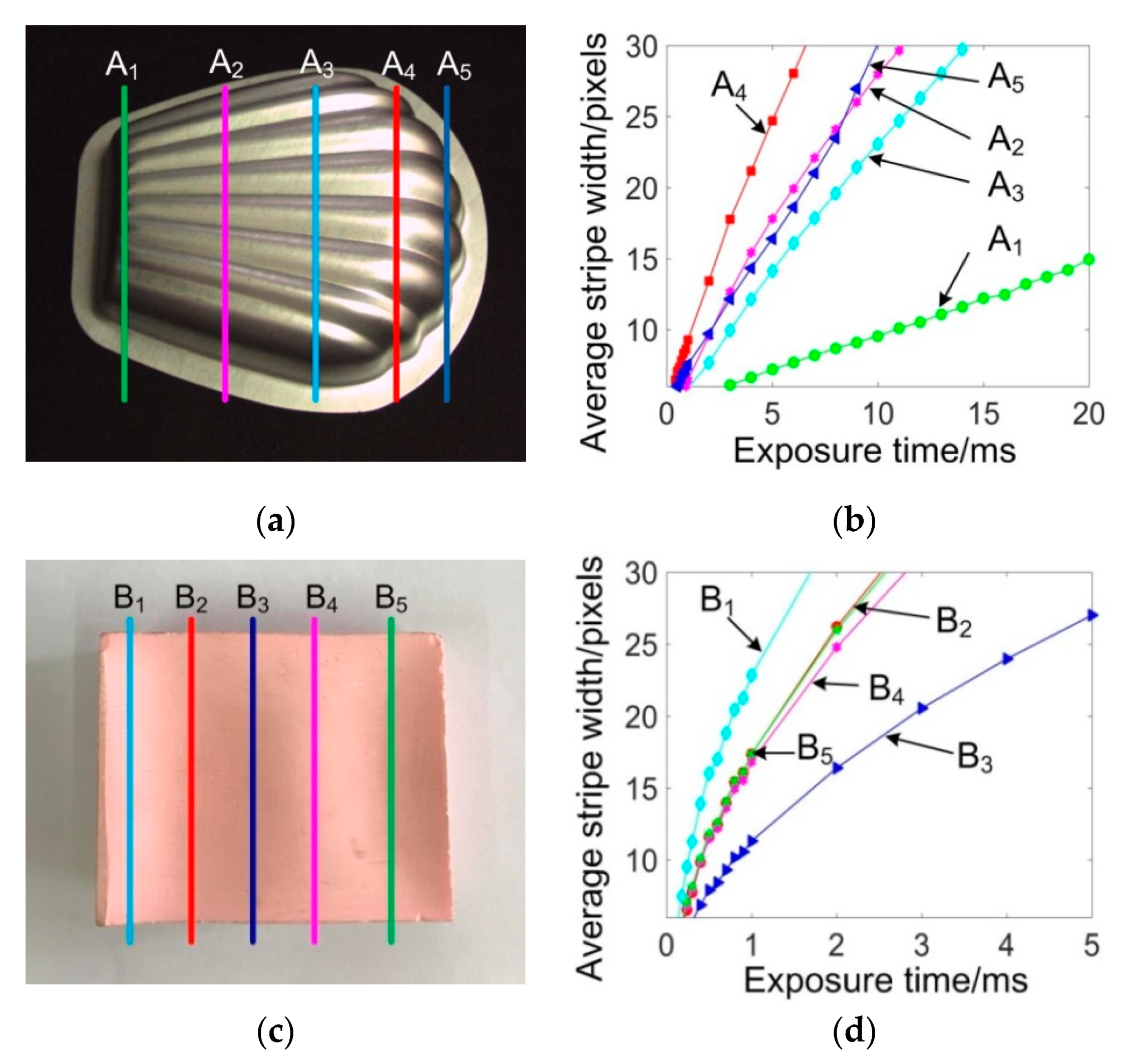

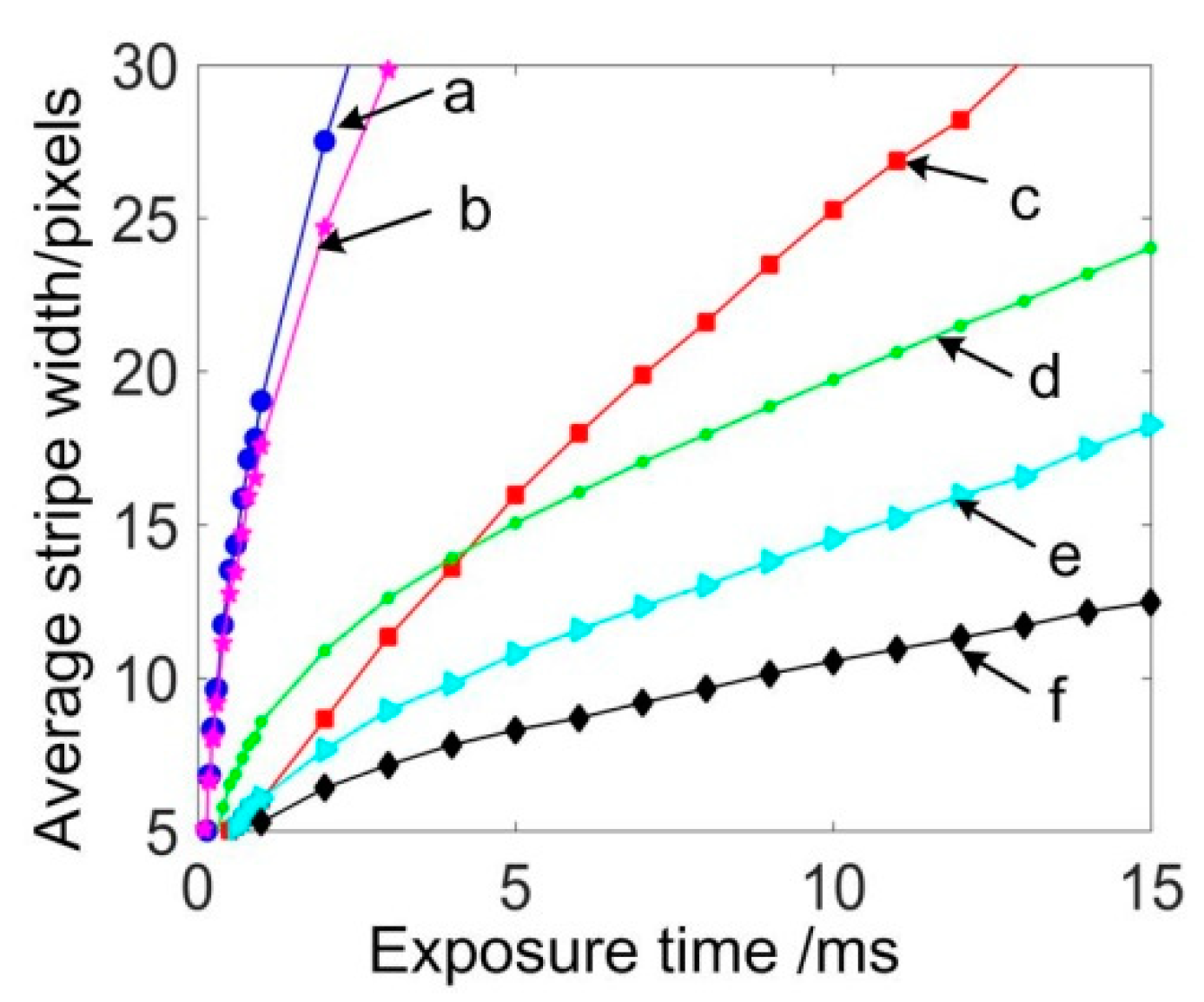

4. Relationship between ASW and Exposure Time

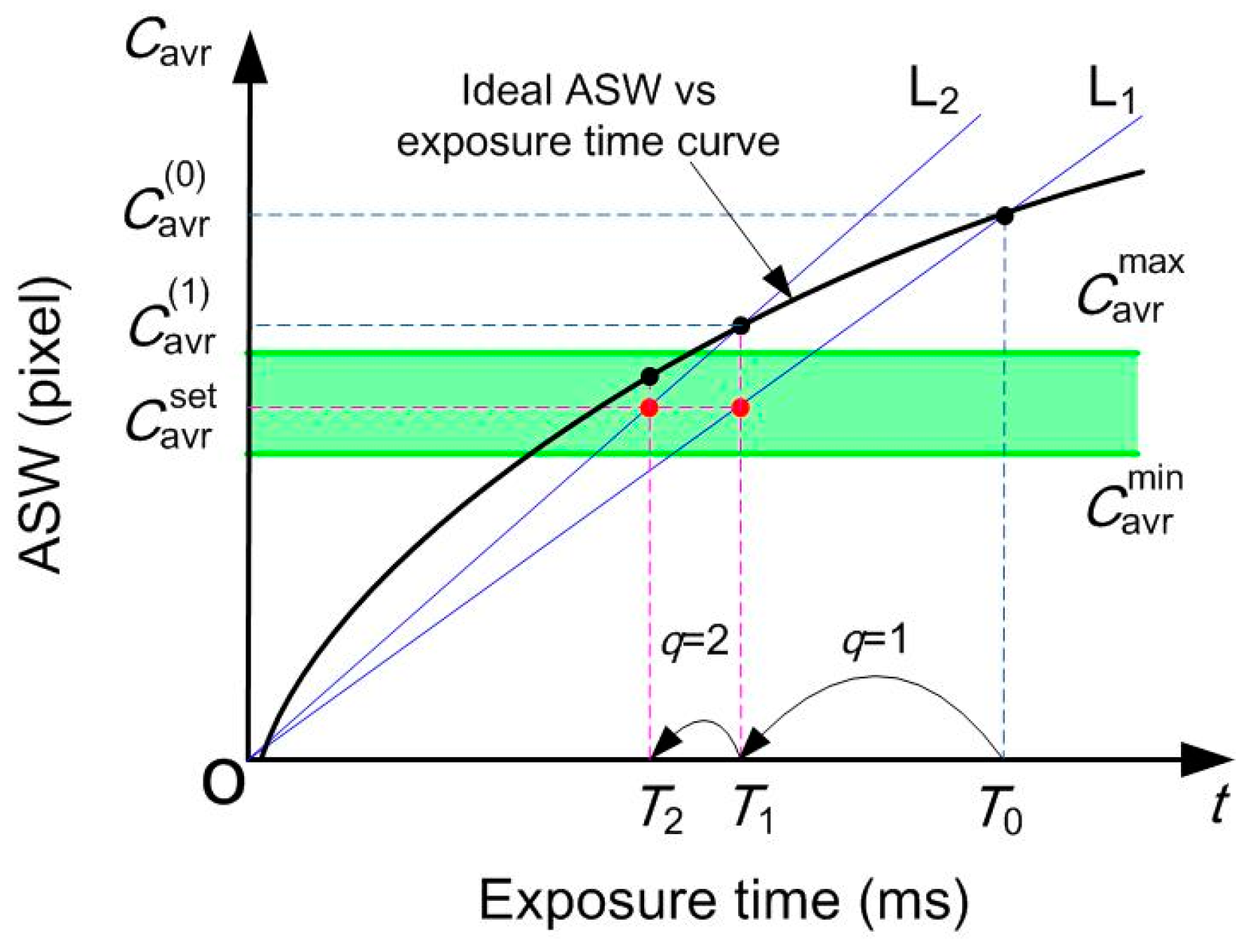

5. Adaptive Control of Exposure Time
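Sections 4 and 5 relate ASW to exposure time and adjust exposure adaptively, and Section 6.5 refers to a linear iteration. The actual relationship and update rule are not reproduced here. The following is a hedged sketch only. It assumes that ASW increases monotonically with exposure time and is close to linear between two successive frames. Under that assumption, a secant-style linear iteration can steer exposure toward a target width. The names `measure_width`, `target_width`, the exposure limits, and `synthetic_width` are all hypothetical.

```python
from typing import Callable


def adjust_exposure(measure_width: Callable[[float], float],
                    target_width: float,
                    t0: float, t1: float,
                    tol: float = 0.05,
                    t_min: float = 0.1, t_max: float = 100.0,
                    max_iter: int = 20) -> float:
    """Secant-style linear iteration on exposure time (hypothetical sketch).

    measure_width(t) stands in for capturing a frame at exposure t and
    returning its stripe width; t0 and t1 are two initial exposures.
    """
    w0, w1 = measure_width(t0), measure_width(t1)
    for _ in range(max_iter):
        if abs(w1 - target_width) <= tol:
            return t1
        if w1 == w0:  # flat response: the local linear model is undefined
            break
        # Linear interpolation through the last two (exposure, width) pairs.
        t_next = t1 + (target_width - w1) * (t1 - t0) / (w1 - w0)
        t_next = min(max(t_next, t_min), t_max)  # respect exposure limits
        t0, w0 = t1, w1
        t1, w1 = t_next, measure_width(t_next)
    return t1


# Synthetic, hypothetical sensor response: width saturates as exposure grows.
def synthetic_width(t: float) -> float:
    return 2.0 + 10.0 * t / (t + 5.0)


t_best = adjust_exposure(synthetic_width, target_width=8.0, t0=1.0, t1=2.0)
print(f"exposure={t_best:.3f} width={synthetic_width(t_best):.3f}")
```

The secant update needs no model of the full width-exposure curve, only the last two measurements. Clamping keeps each step within the camera's exposure range.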

6. Experiments and Analysis

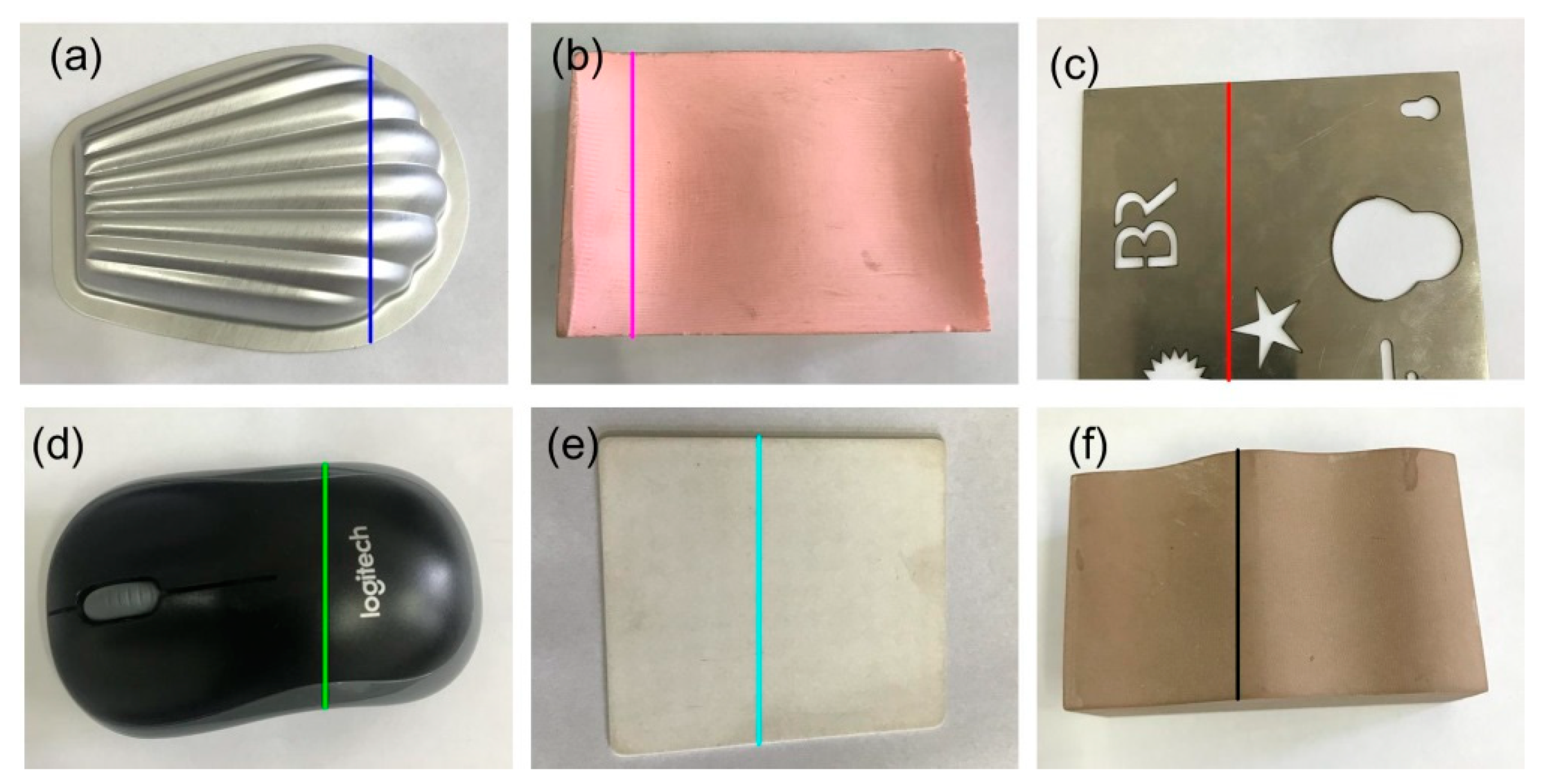

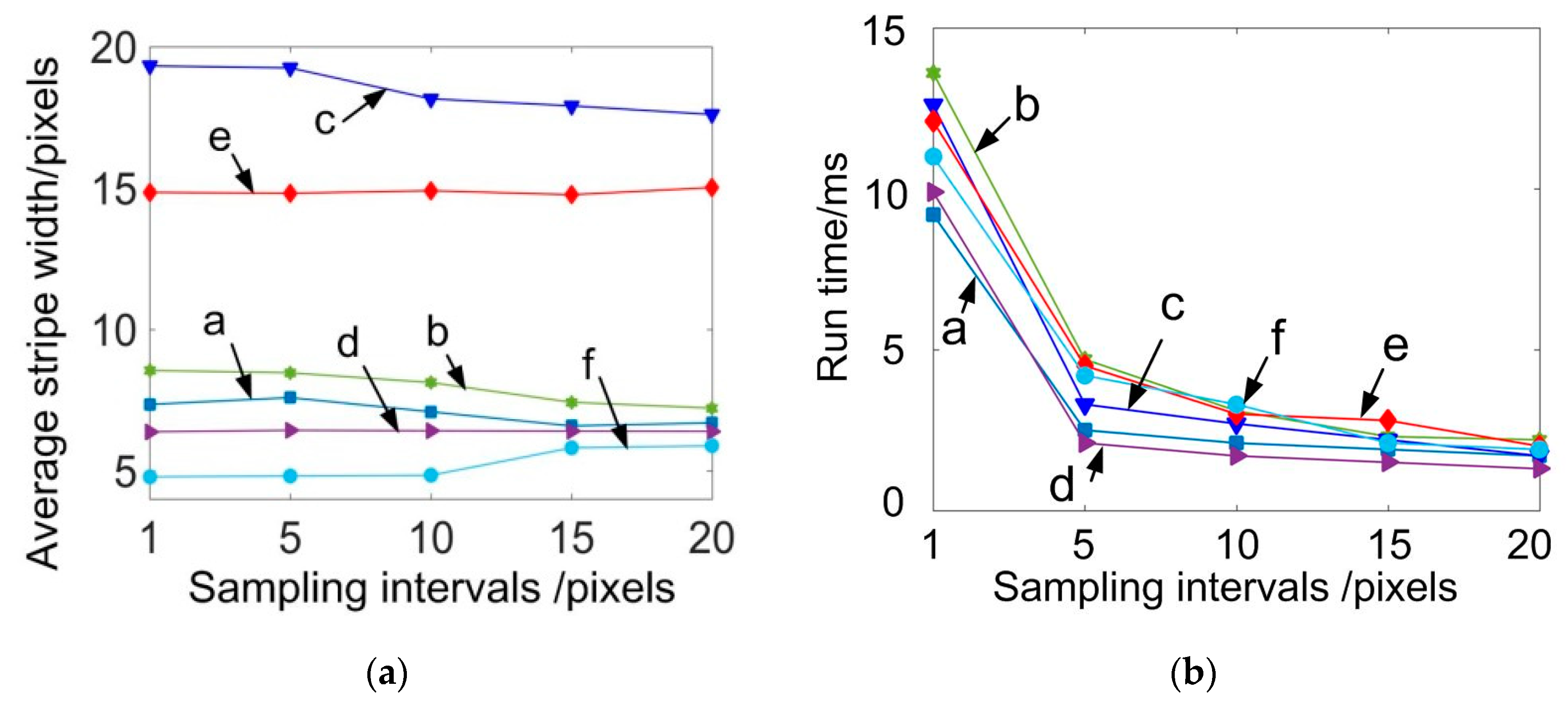

6.1. Real-Time Computation of ASW Using BPNN

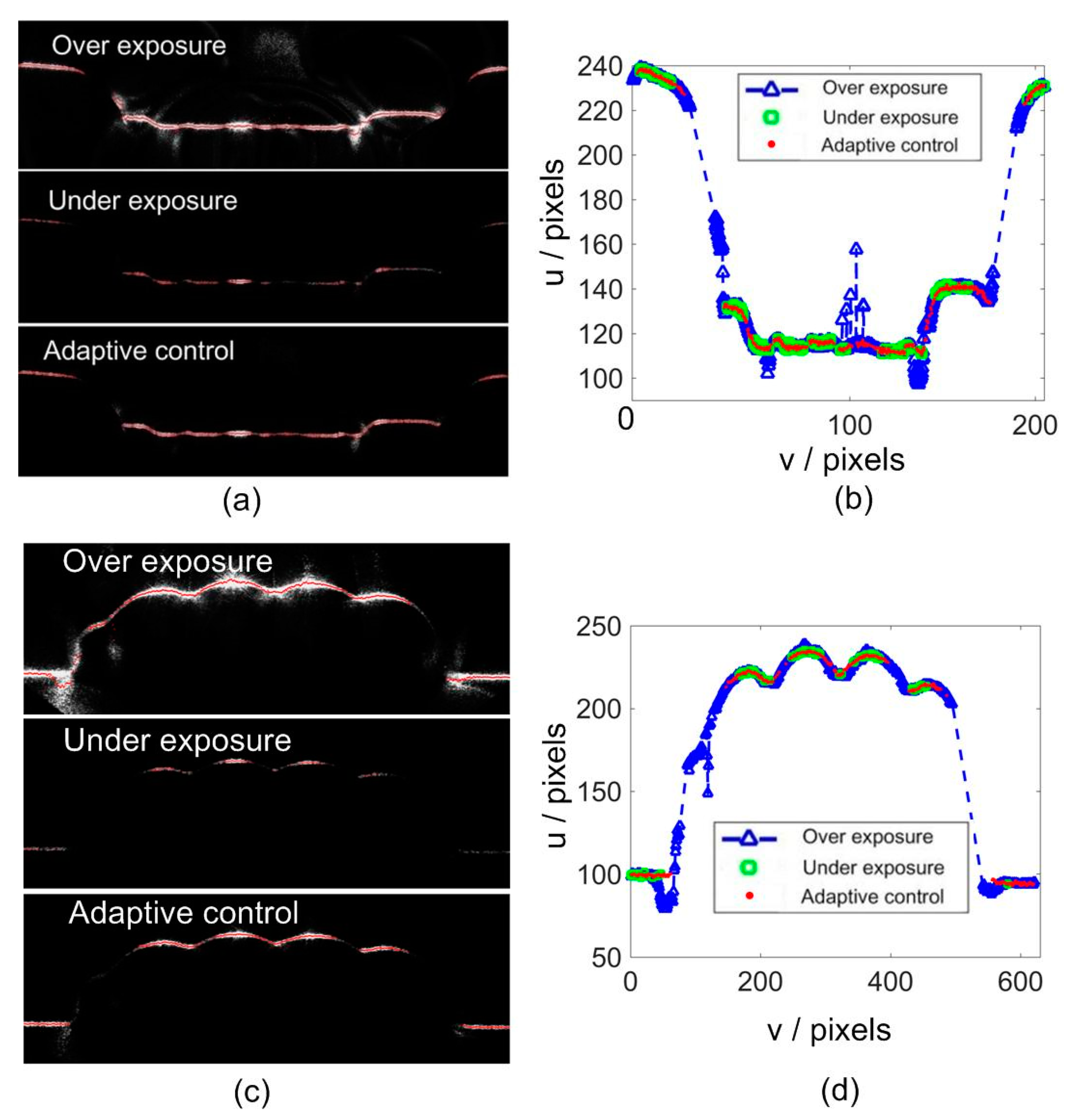

6.2. Adaptive Control for a Single Intersection Profile

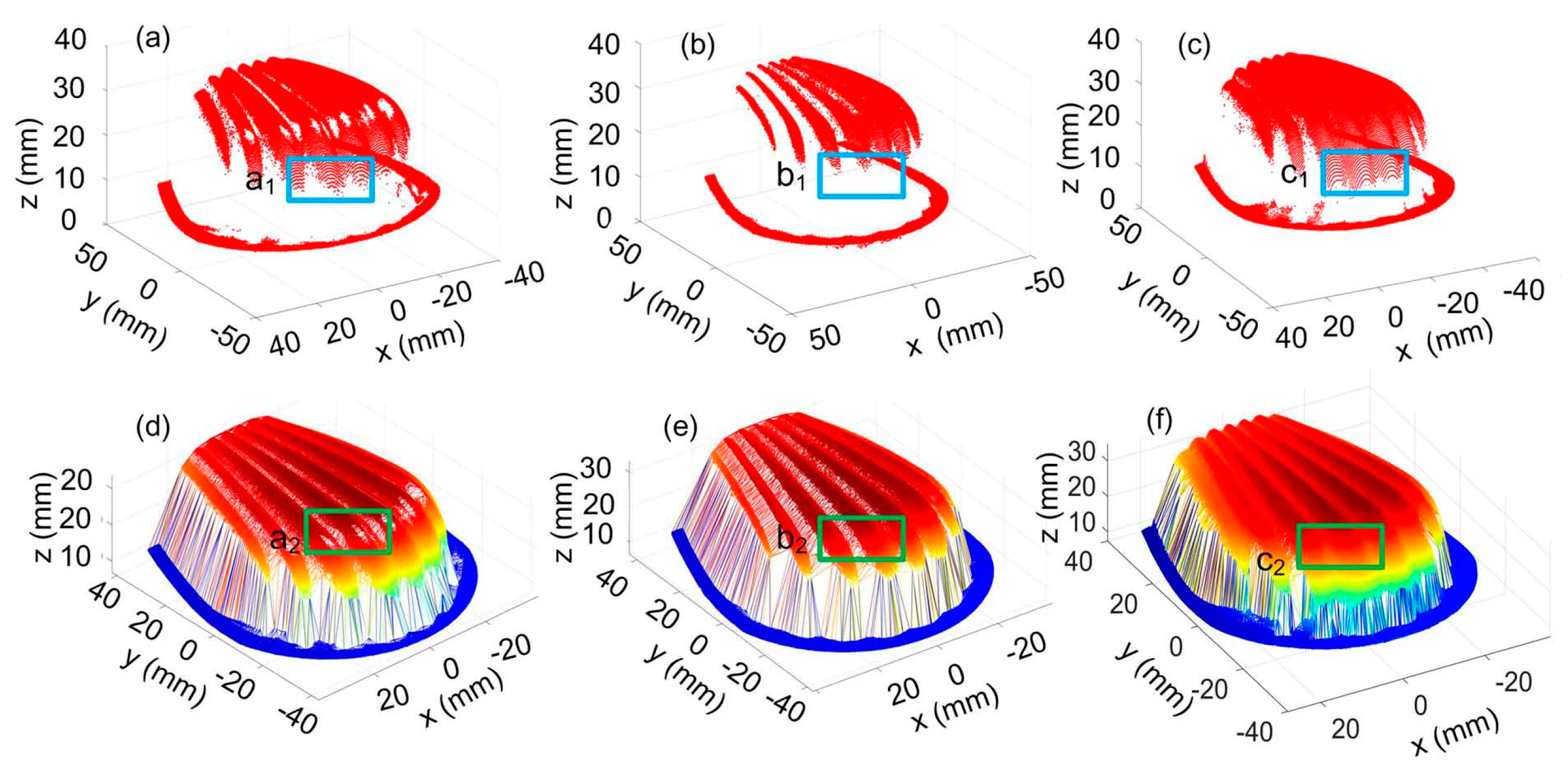

6.3. Adaptive Control for Part Scanning

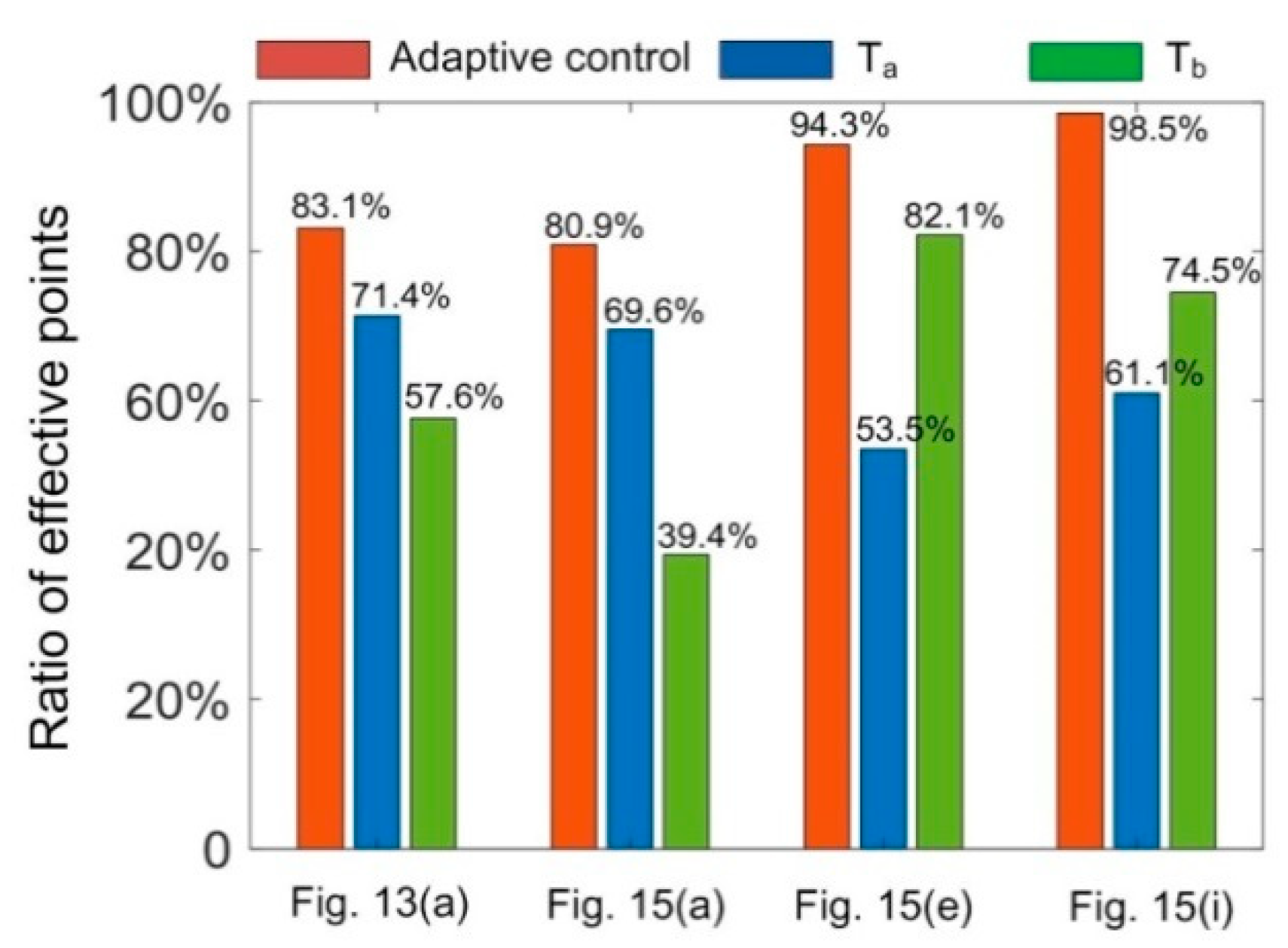

6.4. Comparative Analysis of Effective Points

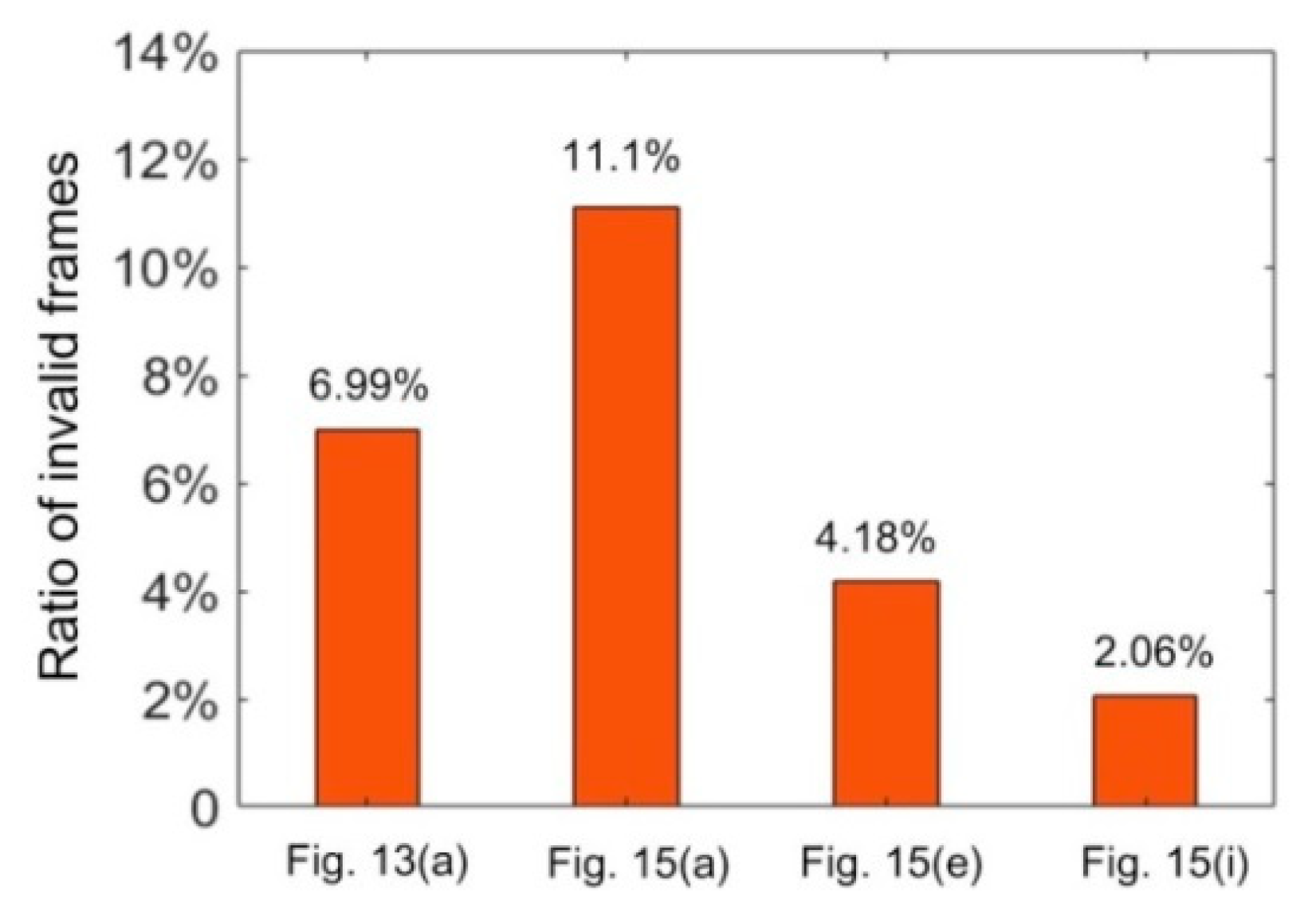

6.5. Effective Analysis of Linear Iteration

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


Comparison of ASW and computation time between Gaussian fitting (GF) and BPNN for cases (a) to (f).

| Quantity | Method | (a) | (b) | (c) | (d) | (e) | (f) |
|---|---|---|---|---|---|---|---|
| ASW (pixels) | GF | 7.0721 | 8.0803 | 19.1964 | 6.2689 | 15.1558 | 4.6605 |
| ASW (pixels) | BPNN | 6.8747 | 7.9025 | 19.3939 | 6.0165 | 15.2291 | 4.4732 |
| Deviation (relative to GF) | BPNN | 0.0279 | 0.0220 | 0.0103 | 0.0403 | 0.0048 | 0.0402 |
| Time (s) | GF | 1.2209 | 2.8378 | 1.7788 | 1.1309 | 2.8270 | 1.1546 |
| Time (s) | BPNN | 0.0092 | 0.0136 | 0.0126 | 0.0099 | 0.0121 | 0.0110 |
| Relative percentage (BPNN time / GF time) | | 0.75% | 0.48% | 0.71% | 0.88% | 0.43% | 0.95% |
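The Deviation and Relative percentage rows are derived quantities. The following short check assumes Deviation = |ASW_BPNN - ASW_GF| / ASW_GF and Relative percentage = BPNN time / GF time. These definitions are inferred by recomputing the printed values and are not stated explicitly in the text. Under them, the check reproduces both rows to the printed precision.

```python
# Values transcribed from the table, columns (a) to (f).
gf_width = [7.0721, 8.0803, 19.1964, 6.2689, 15.1558, 4.6605]
bpnn_width = [6.8747, 7.9025, 19.3939, 6.0165, 15.2291, 4.4732]
gf_time = [1.2209, 2.8378, 1.7788, 1.1309, 2.8270, 1.1546]
bpnn_time = [0.0092, 0.0136, 0.0126, 0.0099, 0.0121, 0.0110]


def relative_deviation(reference, estimate):
    """Element-wise |estimate - reference| / reference."""
    return [abs(e - r) / r for r, e in zip(reference, estimate)]


def time_ratio_percent(baseline, fast):
    """Element-wise run time of `fast` as a percentage of `baseline`."""
    return [100.0 * f / b for b, f in zip(baseline, fast)]


deviation = relative_deviation(gf_width, bpnn_width)
ratio = time_ratio_percent(gf_time, bpnn_time)

for label, d, p in zip("abcdef", deviation, ratio):
    print(f"({label}) deviation={d:.4f} time ratio={p:.2f}%")
```

Read this way, the BPNN widths stay within about 4% of the Gaussian-fitting reference. They are computed in under 1% of the fitting time for every case in the table.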

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cite as: Zhou, J.; Pan, L.; Li, Y.; Liu, P.; Liu, L. Real-Time Stripe Width Computation Using Back Propagation Neural Network for Adaptive Control of Line Structured Light Sensors. Sensors 2020, 20(9), 2618. https://doi.org/10.3390/s20092618

Authors: Jingbo Zhou, Laisheng Pan, Yuehua Li, Peng Liu, Lijian Liu.