Unsupervised Human Activity Recognition Using the Clustering Approach: A Review

Abstract

1. Introduction

1.1. Focus of this Survey

- Phase 1. Feature Extraction or Selection: In this phase, it is necessary to define the characteristics or similarities to be analyzed. Features can be either selected or extracted: in selection, a subset of the original features is chosen [1], whereas in extraction, the original features are transformed by different techniques to produce new ones [2]. The main purpose of this phase is to obtain features that distinguish the patterns belonging to different clusters, are robust to noise, and are easy to analyze and interpret [3,4].

- Phase 2. Clustering Algorithm Selection: After extracting the features, it is necessary to define the clustering algorithm to be applied. In addition to this selection, defining a corresponding proximity measure and constructing a criterion function are also indispensable. Once the proximity measure and the criterion function are defined, clustering becomes an optimization problem, for which there are several case studies in the literature [5]. The clustering approach is now applicable to many different areas; for this reason, it is very important to understand the characteristics of the problem in order to choose the correct algorithm for solving it.

- Phase 3. Cluster Validation: For a given dataset, different clustering algorithms produce different partitions. The main difficulty is assessing the quality of these results, which is measured by clustering quality metrics [6]. These metrics are divided into two groups: internal and external. The most useful internal metrics are cohesion and separation [7], SSW (Sum of Squares Within) [8], SSB (Sum of Squares Between) [7], Sum-of-Squares-based indexes [6], Davies-Bouldin [9], the Silhouette coefficient [10], and the Dunn index [11]. The most useful external metrics are Precision [12], Recall [13], F-Measure [14], Entropy [15], Purity [16], Mutual Information [17,18], and the Rand index [19].

- Phase 4. Result Interpretation: The purpose of clustering is to reveal new information extracted from the original data in order to solve the initial problem. On some occasions, understanding the results requires consulting a domain expert who can explain the characteristics of the resulting clusters. Further experiments can also be carried out to explain and confirm the extracted knowledge.
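
The four phases above can be illustrated with a minimal sketch in plain Python. Everything in it is an assumption made for illustration and is not taken from the surveyed works: the synthetic "still" and "walking" signal windows, the choice of mean and standard deviation as extracted features, k-means with squared Euclidean distance as the algorithm and proximity measure, and all function names. SSW and SSB stand in for the internal metrics, and Purity and the Rand index for the external ones.

```python
import math
import random
from itertools import combinations

def extract_features(window):
    """Phase 1 (extraction): reduce a raw signal window to (mean, std)."""
    mean = sum(window) / len(window)
    var = sum((x - mean) ** 2 for x in window) / len(window)
    return (mean, math.sqrt(var))

def sq_dist(a, b):
    """Phase 2 proximity measure: squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def centroid(points):
    return tuple(sum(axis) / len(points) for axis in zip(*points))

def kmeans(points, k, iters=100, seed=0):
    """Phase 2: Lloyd's k-means, whose criterion function is SSW."""
    centers = random.Random(seed).sample(points, k)
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
        updated = []
        for j in range(k):
            members = [p for p, l in zip(points, labels) if l == j]
            updated.append(centroid(members) if members else centers[j])
        if updated == centers:
            break
        centers = updated
    return labels, centers

def ssw(points, labels, centers):
    """Phase 3 (internal): sum of squares within clusters (cohesion)."""
    return sum(sq_dist(p, centers[l]) for p, l in zip(points, labels))

def ssb(points, labels, centers):
    """Phase 3 (internal): sum of squares between clusters (separation)."""
    overall = centroid(points)
    return sum(labels.count(j) * sq_dist(c, overall) for j, c in enumerate(centers))

def purity(labels, truth):
    """Phase 3 (external): fraction of points in their cluster's majority class."""
    total = 0
    for j in set(labels):
        members = [t for l, t in zip(labels, truth) if l == j]
        total += max(members.count(t) for t in set(members))
    return total / len(labels)

def rand_index(labels, truth):
    """Phase 3 (external): fraction of point pairs on which both groupings agree."""
    pairs = list(combinations(range(len(labels)), 2))
    agree = sum((labels[i] == labels[j]) == (truth[i] == truth[j]) for i, j in pairs)
    return agree / len(pairs)

# Synthetic data (assumption): low-variance "still" and high-variance "walking" windows.
rng = random.Random(42)
windows, truth = [], []
for _ in range(20):
    windows.append([rng.gauss(0.0, 0.05) for _ in range(100)])
    truth.append("still")
    windows.append([rng.gauss(0.0, 2.0) for _ in range(100)])
    truth.append("walking")

features = [extract_features(w) for w in windows]
labels, centers = kmeans(features, k=2)

print("SSW:", ssw(features, labels, centers))
print("SSB:", ssb(features, labels, centers))
print("Purity:", purity(labels, truth))
print("Rand index:", rand_index(labels, truth))
print("Cluster centers (mean, std):", centers)  # starting point for Phase 4
```

The external metrics require ground-truth labels, which are available here only because the data are synthetic; in a truly unsupervised setting only the internal metrics can be computed, and inspecting the cluster centers is a first step toward the interpretation described in Phase 4.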

1.2. The Big Picture: Human Activity Recognition Using Learning Techniques Approach

1.3. Outline

2. Taxonomy

3. Conceptual Information

3.1. Clustering Techniques

- Underlying structure: to gain insight into the data, generate hypotheses, detect anomalies, and identify the most prominent characteristics.

- Natural classification: to identify the degree of similarity between the forms of organisms (phylogenetic relationship).

- Compression: as a method to organize data and summarize it through cluster prototypes.
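
The compression purpose can be made concrete with a small sketch: each reading is replaced by the index of its nearest cluster prototype, so only the prototypes and one small code per reading need to be stored. The scalar readings, the quantile-based initialization, and the function name `kmeans_1d` are assumptions chosen for illustration.

```python
def kmeans_1d(values, k, iters=100):
    """Lloyd's algorithm on scalars; initial prototypes are evenly spaced quantiles."""
    ordered = sorted(values)
    protos = [ordered[(2 * j + 1) * len(ordered) // (2 * k)] for j in range(k)]
    for _ in range(iters):
        codes = [min(range(k), key=lambda j: abs(v - protos[j])) for v in values]
        updated = []
        for j in range(k):
            members = [v for v, c in zip(values, codes) if c == j]
            updated.append(sum(members) / len(members) if members else protos[j])
        if updated == protos:
            break
        protos = updated
    # Final encoding: index of the nearest prototype for every value.
    codes = [min(range(k), key=lambda j: abs(v - protos[j])) for v in values]
    return protos, codes

# Hypothetical sensor readings that fall into three natural groups.
readings = [0.9, 1.1, 1.0, 5.2, 4.8, 5.0, 9.1, 8.9, 9.0, 1.05, 4.95, 9.05]

protos, codes = kmeans_1d(readings, k=3)
reconstructed = [protos[c] for c in codes]
max_error = max(abs(r, ) if False else abs(r - q) for r, q in zip(readings, reconstructed))

print("prototypes:", protos)
print("codes:", codes)
print("largest reconstruction error:", max_error)
```

Twelve floating-point readings are thus summarized by three prototypes plus twelve small integer codes, at the cost of a bounded reconstruction error.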

3.1.1. Clustering Methods

3.1.2. Clustering Methods Descriptions

3.2. Human Activity Recognition

3.2.1. Activities

3.2.2. Type of Sensor

3.2.3. Dataset for Human Activity Recognition

3.2.4. Supervised and Unsupervised

3.2.5. Single or Multioccupancy

4. Type of Clustering Methods for Human Activity Recognition

5. Methodology

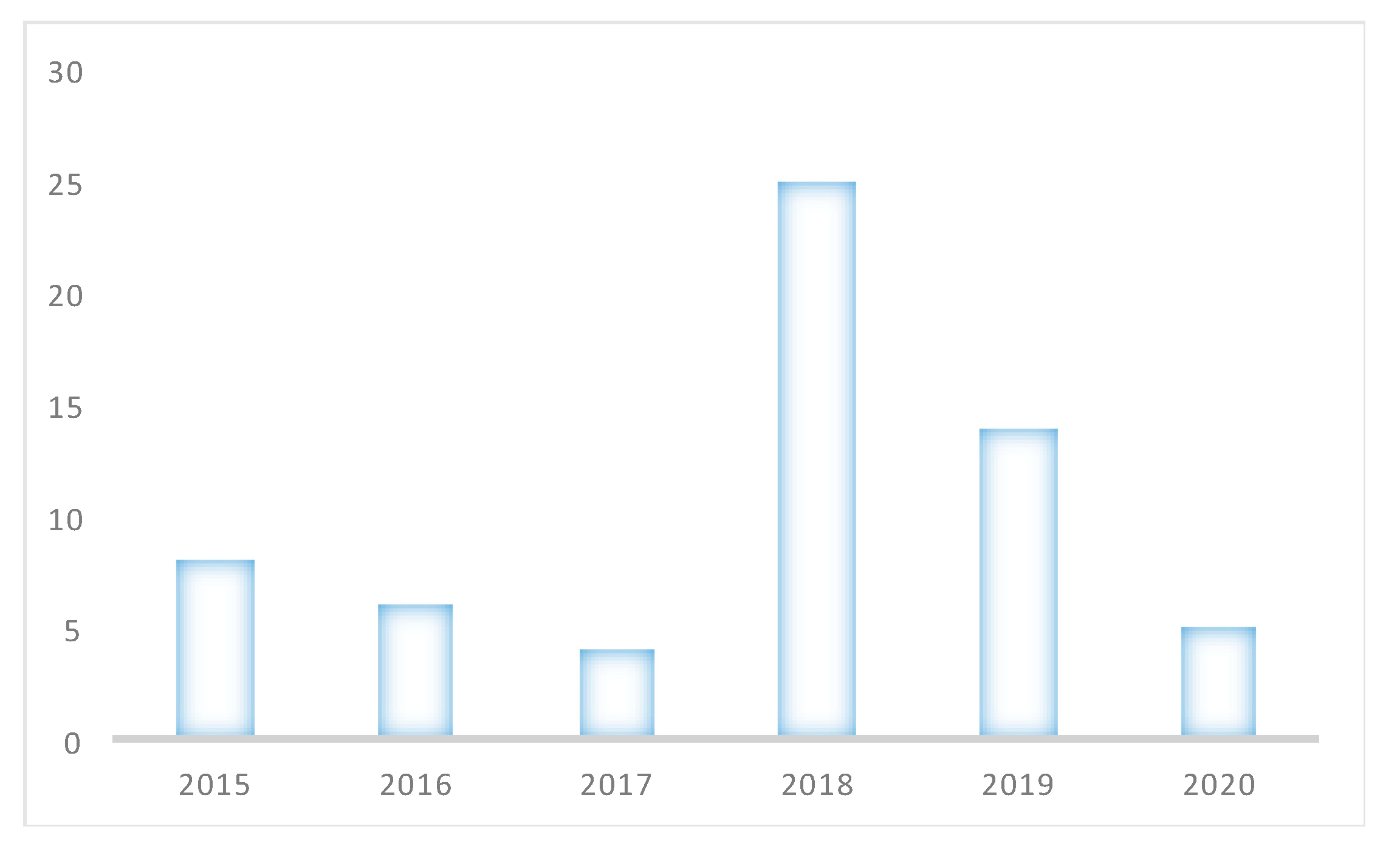

6. Scientometric Analysis

7. Technical Analysis

8. Conclusions

9. Future Works

- Use of clustering techniques in conjunction with other techniques or algorithms, such as hidden Markov models (HMM), to support the unsupervised detection of activities of daily living.

- Development and use of new techniques for spatio-temporal analysis to improve the identification of activities of daily living.

- Another challenge in applying clustering is the behavioral analysis of each of the generated groups. This can be addressed with multiclustering methods, which create multiple groupings and then combine them into a single result (see Figure 14).

- Exploration of different experimentation scenarios with multi-level applications that include the behavior of unidentified activities.
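
The idea of creating multiple groupings and combining them into a single result can be sketched as follows. The combination rule used here (a co-association matrix thresholded by majority vote, followed by connected components) is one common choice and is an assumption, since the text does not prescribe a specific rule; the three base partitions and the function names are likewise hypothetical.

```python
def co_association(partitions):
    """Fraction of base partitions in which each pair of items shares a cluster."""
    n = len(partitions[0])
    co = [[0.0] * n for _ in range(n)]
    for labels in partitions:
        for i in range(n):
            for j in range(n):
                if labels[i] == labels[j]:
                    co[i][j] += 1.0 / len(partitions)
    return co

def consensus(partitions, threshold=0.5):
    """Link items that co-occur in more than `threshold` of the partitions,
    then return the connected components as the combined clustering."""
    co = co_association(partitions)
    n = len(co)
    result = [-1] * n
    next_label = 0
    for start in range(n):
        if result[start] != -1:
            continue
        result[start] = next_label
        stack = [start]
        while stack:
            i = stack.pop()
            for j in range(n):
                if result[j] == -1 and co[i][j] > threshold:
                    result[j] = next_label
                    stack.append(j)
        next_label += 1
    return result

# Three hypothetical base groupings of the same six sensor events.
# Label values are arbitrary; only co-membership matters.
p1 = [0, 0, 0, 1, 1, 1]
p2 = [1, 1, 0, 0, 0, 0]
p3 = [0, 0, 0, 1, 1, 2]

combined = consensus([p1, p2, p3])
print("combined grouping:", combined)
```

Because the combination depends only on how often two items appear together, the base groupings may come from different algorithms, different parameter settings, or different feature subsets.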

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jain, A.; Duin, R.; Mao, J. Statistical pattern recognition: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 4–37. [Google Scholar] [CrossRef]

- Jain, A.; Murty, M.; Flynn, P. Data clustering: A review. ACM Comput. Surv. 1999, 31, 264–323. [Google Scholar] [CrossRef]

- Bishop, C. Neural Networks for Pattern Recognition; Oxford University Press: New York, NY, USA, 1995. [Google Scholar]

- Sklansky, J.; Siedlecki, W. Large-scale feature selection. In Handbook of Pattern Recognition and Computer Vision; Chen, C., Pau, L., Wang, P., Eds.; World Scientific: Singapore, 1993; pp. 61–124. [Google Scholar]

- Kleinberg, J. An impossibility theorem for clustering. In Proceedings of the 2002 15th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 9–14 December 2002; Volume 15, pp. 463–470. [Google Scholar]

- Jain, A.; Dubes, R. Algorithms for Clustering Data; Prentice-Hall: Englewood Cliffs, NJ, USA, 1988. [Google Scholar]

- Gordon, A. Cluster validation. In Data Science, Classification, and Related Methods; Hayashi, C., Ohsumi, N., Yajima, K., Tanaka, Y., Bock, H., Baba, Y., Eds.; Springer: New York, NY, USA, 1998; pp. 22–39. [Google Scholar]

- Dubes, R. Cluster analysis and related issues. In Handbook of Pattern Recognition and Computer Vision; Chen, C., Pau, L., Wang, P., Eds.; World Scientific: Singapore, 1993; pp. 3–32. [Google Scholar]

- Bandyopadhyay, S.; Maulik, U. Nonparametric genetic clustering: Comparison of validity indices. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2001, 31, 120–125. [Google Scholar] [CrossRef]

- Bezdek, J.; Pal, N. Some new indexes of cluster validity. IEEE Trans. Syst. Man Cybern. B Cybern. 1998, 28, 301–315. [Google Scholar] [CrossRef]

- Dunn, J.C. A fuzzy relative of the ISODATA process and its use in detecting compact well-separated clusters. J. Cybern. 1973, 3, 32–57. [Google Scholar] [CrossRef]

- Halkidi, M.; Batistakis, Y.; Vazirgiannis, M. Cluster validity methods: Part I & II. SIGMOD Rec. 2002, 31, 40–45. [Google Scholar]

- Leung, Y.; Zhang, J.; Xu, Z. Clustering by scale-space filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1396–1410. [Google Scholar] [CrossRef]

- Levine, E.; Domany, E. Resampling method for unsupervised estimation of cluster validity. Neural Comput. 2001, 13, 2573–2593. [Google Scholar] [CrossRef] [PubMed]

- Davé, R.; Krishnapuram, R. Robust clustering methods: A unified view. IEEE Trans. Fuzzy Syst. 1997, 5, 270–293. [Google Scholar] [CrossRef]

- Geva, A. Hierarchical unsupervised fuzzy clustering. IEEE Trans. Fuzzy Syst. 1999, 7, 723–733. [Google Scholar] [CrossRef]

- Hammah, R.; Curran, J. Validity measures for the fuzzy cluster analysis of orientations. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1467–1472. [Google Scholar] [CrossRef]

- Rand, W.M. Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 1971, 66, 846–850. [Google Scholar] [CrossRef]

- Lane, N.; Miluzzo, E.; Lu, H.; Peebles, D.; Choudhury, T.; Campbell, A. A survey of mobile phone sensing. IEEE Commun. Mag. 2010, 48, 140–150. [Google Scholar] [CrossRef]

- bin Abdullah, M.F.A.; Negara, A.F.P.; Sayeed, M.S.; Choi, D.J.; Muthu, K.S. Classification algorithms in human activity recognition using smartphones. World Acad. Sci. Eng. Technol. 2012, 68, 422–430. [Google Scholar]

- Stikic, M.; Schiele, B. Activity Recognition from Sparsely Labeled Data Using Multi-Instance Learning. In Proceedings of the 4th International Symposium Location and Context Awareness, Tokyo, Japan, 7–8 May 2009; Volume 5561, pp. 156–173. [Google Scholar]

- Chen, L.; Nugent, C.D. Ontology-based activity recognition in intelligent pervasive environments. Int. J. Web Inf. Syst. 2009, 5, 410–430. [Google Scholar] [CrossRef]

- Palmes, P.; Pung, H.K.; Gu, T.; Xue, W.; Chen, S. Object relevance weight pattern mining for activity recognition and segmentation. Pervasive Mob. Comput. 2010, 6, 43–57. [Google Scholar] [CrossRef]

- Chen, L.; Nugent, C.D.; Wang, H. A Knowledge-Driven Approach to Activity Recognition in Smart Homes. IEEE Trans. Knowl. Data Eng. 2011, 24, 961–974. [Google Scholar] [CrossRef]

- Ye, J.; Stevenson, G.; Dobson, S. A top-level ontology for smart environments. Pervasive Mob. Comput. 2011, 7, 359–378. [Google Scholar] [CrossRef]

- Jain, A.K.; Flynn, P. Image segmentation using clustering. In Advances in Image Understanding; IEEE Computer Society Press: Piscataway, NJ, USA, 1996. [Google Scholar]

- Van Kasteren, T.L.M.; Englebienne, G.; Kröse, B.J.A. Activity recognition using semi-Markov models on real world smart home datasets. J. Ambient Intell. Smart Environ. 2010, 2, 311–325. [Google Scholar] [CrossRef]

- Cook, D.; Crandall, A.S.; Thomas, B.L.; Krishnan, N.C. CASAS: A smart home in a box. Computer 2013, 46, 62–69. [Google Scholar] [CrossRef]

- Cook, D. Learning setting-generalized activity models for smart spaces. IEEE Intell. Syst. 2012, 27, 32–38. [Google Scholar] [CrossRef] [PubMed]

- Singla, G.; Cook, D.J.; Schmitter-Edgecombe, M. Recognizing independent and joint activities among multiple residents in smart environments. J. Ambient Intell. Hum. Comput. 2010, 1, 57–63. [Google Scholar] [CrossRef] [PubMed]

- Almaslukh, B.; AlMuhtadi, J.; Artoli, A. An effective deep autoencoder approach for online smartphone-based human activity recognition. Int. J. Comput. Sci. Netw. Secur. 2017, 17, 160–165. [Google Scholar]

- Ordóñez, F.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115. [Google Scholar] [CrossRef] [PubMed]

- Ha, S.; Choi, S. Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 381–388. [Google Scholar]

- Frigui, H.; Krishnapuram, R. A robust competitive clustering algorithm with applications in computer vision. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 450–465. [Google Scholar] [CrossRef]

- Shi, J.; Malik, J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 888–905. [Google Scholar]

- Iwayama, M.; Tokunaga, T. Cluster-based text categorization: A comparison of category search strategies. In Proceedings of the 18th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Seattle, WA, USA, 9–13 July 1995; pp. 273–280. [Google Scholar]

- Sahami, M. Using Machine Learning to Improve Information Access. Ph.D. Thesis, Stanford University, Stanford, CA, USA, 15 December 1998. [Google Scholar]

- Bhatia, S.K.; Deogun, J.S. Conceptual clustering in information retrieval. IEEE Trans. Syst. Man Cybern. Part B Cybern. 1998, 28, 427–436. [Google Scholar] [CrossRef]

- Hubert, L.; Arabie, P. The analysis of proximity matrices through sums of matrices having (anti-) Robinson forms. Br. J. Math. Stat. Psychol. 1994, 47, 1–40. [Google Scholar] [CrossRef]

- Hu, W.Y.; Scott, J.S. Behavioral obstacles in the annuity market. Financ. Anal. J. 2007, 63, 71–82. [Google Scholar] [CrossRef]

- Hung, S.P.; Baldi, P.; Hatfield, G.W. Global gene expression profiling in Escherichia coli K12: The effects of leucine-responsive regulatory protein. J. Biol. Chem. 2002, 277, 40309–40323. [Google Scholar] [CrossRef]

- De-La-Hoz-Franco, E.; Ariza-Colpas, P.; Quero, J.M.; Espinilla, M. Sensor-based datasets for human activity recognition—A systematic review of literature. IEEE Access 2018, 6, 59192–59210. [Google Scholar] [CrossRef]

- Rawassizadeh, R.; Dobbins, C.; Akbari, M.; Pazzani, M. Indexing multivariate mobile data through spatio-temporal event detection and clustering. Sensors 2019, 19, 448. [Google Scholar] [CrossRef]

- Bouchard, K.; Lapalu, J.; Bouchard, B.; Bouzouane, A. Clustering of human activities from emerging movements. J. Ambient Intell. Hum. Comput. 2019, 10, 3505–3517. [Google Scholar] [CrossRef]

- Jain, A.K. Data clustering: 50 years beyond K-means. Pattern Recognit. Lett. 2010, 31, 651–666. [Google Scholar] [CrossRef]

- Drineas, P.; Frieze, A.M.; Kannan, R.; Vempala, S.; Vinay, V. Clustering in Large Graphs and Matrices. In Proceedings of the Symposium on Discrete Algorithms (SODA), Baltimore, MD, USA, 17–19 January 1999; Volume 99, pp. 291–299. [Google Scholar]

- Gonzalez, T.F. Clustering to minimize the maximum intercluster distance. Theor. Comput. Sci. 1985, 38, 293–306. [Google Scholar] [CrossRef]

- Fisher, D.H. Knowledge acquisition via incremental conceptual clustering. Mach. Learn. 1987, 2, 139–172. [Google Scholar] [CrossRef]

- Gennari, J.H.; Langley, P.; Fisher, D. Models of incremental concept formation. Artif. Intell. 1989, 40, 11–61. [Google Scholar] [CrossRef]

- Aguilar-Martin, J.; De Mantaras, R.L. The Process of Classification and Learning the Meaning of Linguistic Descriptors of Concepts. Approx. Reason. Decis. Anal. 1982, 1982, 165–175. [Google Scholar]

- Omran, M.G.; Engelbrecht, A.P.; Salman, A. An overview of clustering methods. Intell. Data Anal. 2007, 11, 583–605. [Google Scholar] [CrossRef]

- Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Plenum Publishing Corporation: New York, NY, USA, 1981. [Google Scholar]

- Tamayo, P.; Slonim, D.; Mesirov, J.; Zhu, Q.; Kitareewan, S.; Dmitrovsky, E.; Lander, E.S.; Golub, T.R. Interpreting patterns of gene expression with self-organizing maps: Methods and application to hematopoietic differentiation. Proc. Natl. Acad. Sci. USA 1999, 96, 2901–2912. [Google Scholar] [CrossRef]

- Toronen, P.; Kolehmainen, M.; Wong, G.; Castrén, E. Analysis of gene expression data using self-organizing maps. FEBS Lett. 1999, 451, 142–146. [Google Scholar] [CrossRef]

- Kohonen, T. Self-Organizing Maps; Springer: Berlin, Germany, 1997. [Google Scholar]

- Goldberg, D. Genetic Algorithms in Search, Optimization, and Machine Learning; Addison Wesley: Reading, MA, USA, 1989. [Google Scholar]

- Holland, J.H. Hidden Order: How Adaptation Builds Complexity; Helix Books: Totowa, NJ, USA, 1995. [Google Scholar]

- Fogel, L.J.; Owens, A.J.; Walsh, M.J. Artificial Intelligence through Simulated Evolution; Wiley: Chichester, WS, UK, 1966. [Google Scholar]

- Fogel, D.B. Evolutionary Computation: Toward a New Philosophy of Machine Intelligence; John Wiley & Sons: Hoboken, NJ, USA, 2006. [Google Scholar]

- Holland, J. Genetic algorithms. Sci. Am. 1992, 267, 66–73. [Google Scholar] [CrossRef]

- Davis, L. (Ed.) Handbook of Genetic Algorithms; Van Nostrand Reinhold: New York, NY, USA, 1991; p. 385. [Google Scholar]

- Chung, F.R.K. Spectral Graph Theory, CBMS Regional Conference Series in Mathematics; American Mathematical Society: Providence, RI, USA, 1997; Volume 92. [Google Scholar]

- Fiedler, M. Algebraic connectivity of graphs. Czechoslov. Math. J. 1973, 23, 298–305. [Google Scholar]

- Schölkopf, B.; Smola, A.J.; Müller, K.R. Nonlinear component analysis as a kernel eigenvalue problem. Neural Comput. 1998, 10, 1299–1319. [Google Scholar] [CrossRef]

- Girolami, M. Mercer kernel based clustering in feature space. IEEE Trans. Neural Netw. 2002, 13, 780–784. [Google Scholar] [CrossRef]

- Ng, A.Y.; Jordan, M.I.; Weiss, Y. On Spectral Clustering: Analysis and an algorithm. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2001. [Google Scholar]

- Rechenberg, I. Evolution strategy. In Computational Intelligence: Imitating Life; Zurada, J.M., Marks, R.J., Robinson, C., Eds.; IEEE Press: Piscataway, NJ, USA, 1994. [Google Scholar]

- Schwefel, H.-P. Evolution and Optimum Seeking; Wiley: New York, NY, USA, 1995. [Google Scholar]

- Koza, J.R. Genetic Programming: On the Programming of Computers by Means of Natural Selection; MIT Press: Cambridge, MA, USA, 1992. [Google Scholar]

- Von Luxburg, U. A tutorial on spectral clustering. Stat. Comput. 2007, 17, 395–416. [Google Scholar] [CrossRef]

- Kohonen, T. The self-organizing map. Proc. IEEE 1990, 78, 1464–1480. [Google Scholar] [CrossRef]

- Howedi, A.; Lotfi, A.; Pourabdollah, A. Exploring Entropy Measurements to Identify Multi-Occupancy in Activities of Daily Living. Entropy 2019, 21, 416. [Google Scholar] [CrossRef]

- Cook, D.; Schmitter-Edgecombe, M. Assessing the quality of activities in a smart environment. Methods Inf. Med. 2009, 48, 480–485. [Google Scholar]

- Singla, G.; Cook, D.; Schmitter-Edgecombe, M. Tracking activities in complex settings using smart environment technologies. Int. J. BioSci. Psychiatry Technol. 2009, 1, 25–35. [Google Scholar]

- Cook, D.J.; Youngblood, M.; Das, S.K. A multi-agent approach to controlling a smart environment. In Designing Smart Homes; Springer: Berlin/Heidelberg, Germany, 2006; pp. 165–182. [Google Scholar]

- Dernbach, S.; Das, B.; Krishnan, N.C.; Thomas, B.L.; Cook, D.J. Simple and complex activity recognition through smart phones. In Proceedings of the 2012 Eighth International Conference on Intelligent Environments, Guanajuato, Mexico, 26–29 June 2012. [Google Scholar]

- Sahaf, Y. Comparing Sensor Modalities for Activity Recognition. Master’s Thesis, Washington State University, Pullman, WA, USA, August 2011. [Google Scholar]

- Rawassizadeh, R.; Keshavarz, H.; Pazzani, M. Ghost imputation: Accurately reconstructing missing data of the off period. IEEE Trans. Knowl. Data Eng. 2019. [Google Scholar] [CrossRef]

- Wilson, D.H. Assistive Intelligent Environments for Automatic Health Monitoring. Ph.D. Thesis, Robotics Institute, Carnegie Mellon University, Pittsburgh, PA, USA, September 2005. [Google Scholar]

- Singla, G.; Cook, D.J.; Schmitter-Edgecombe, M. Incorporating temporal reasoning into activity recognition for smart home residents. In Proceedings of the AAAI Workshop on Spatial and Temporal Reasoning, Chicago, IL, USA, 13 July 2008; pp. 53–61. [Google Scholar]

- Wren, C.R.; Tapia, E.M. Hierarchical Processing in Scalable and Portable Sensor Networks for Activity Recognition. U.S. Patent No. 7359836, 15 April 2008. [Google Scholar]

- Van Kasteren, T.; Noulas, A.; Englebienne, G.; Kröse, B. Accurate activity recognition in a home setting. In Proceedings of the 10th International Conference on Ubiquitous Computing, Seoul, Korea, 21–24 September 2008; pp. 1–9. [Google Scholar]

- Philipose, M.; Fishkin, K.P.; Perkowitz, M.; Patterson, D.J.; Fox, D.; Kautz, H.; Hahnel, D. Inferring activities from interactions with objects. IEEE Pervasive Comput. 2004, 3, 50–57. [Google Scholar] [CrossRef]

- Patterson, D.J.; Fox, D.; Kautz, H.; Philipose, M. Fine-grained activity recognition by aggregating abstract object usage. In Proceedings of the Ninth IEEE International Symposium on Wearable Computers (ISWC’05), Osaka, Japan, 18–21 October 2005; pp. 44–51. [Google Scholar]

- Hodges, M.R.; Newman, M.W.; Pollack, M.E. Object-Use Activity Monitoring: Feasibility for People with Cognitive Impairments. In Proceedings of the AAAI Spring Symposium: Human Behavior Modeling, Stanford, CA, USA, 23–25 March 2009; pp. 13–18. [Google Scholar]

- Fang, F.; Aabith, S.; Homer-Vanniasinkam, S.; Tiwari, M.K. High-resolution 3D printing for healthcare underpinned by small-scale fluidics. In 3D Printing in Medicine; Woodhead Publishing: Cambridge, MA, USA, 2017; pp. 167–206. [Google Scholar]

- Veltink, P.H.; Bussmann, H.J.; De Vries, W.; Martens, W.J.; Van Lummel, R.C. Detection of static and dynamic activities using uniaxial accelerometers. IEEE Trans. Rehab. Eng. 1996, 4, 375–385. [Google Scholar] [CrossRef] [PubMed]

- Mathie, M.J.; Coster, A.C.F.; Lovell, N.H.; Celler, B.G. Detection of daily physical activities using a triaxial accelerometer. Med. Biol. Eng. Comput. 2003, 41, 296–301. [Google Scholar] [CrossRef] [PubMed]

- Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In Proceedings of the International Conference on Pervasive Computing, Linz/Vienna, Austria, 21–23 April 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1–17. [Google Scholar]

- Chambers, G.S.; Venkatesh, S.; West, G.A.; Bui, H.H. Hierarchical recognition of intentional human gestures for sports video annotation. In Object Recognition Supported by User Interaction for Service Robots; IEEE: Quebec, PQ, Canada, 2002; pp. 1082–1085. [Google Scholar]

- Lester, J.; Choudhury, T.; Borriello, G. A practical approach to recognizing physical activities. In Proceedings of the 4th International Conference on Pervasive Computing, Dublin, Ireland, 7–10 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–16. [Google Scholar]

- Mantyjarvi, J.; Himberg, J.; Seppanen, T. Recognizing human motion with multiple acceleration sensors. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Tucson, AZ, USA, 7–10 October 2001; pp. 747–752. [Google Scholar]

- Al-Ani, T.; Le Ba, Q.T.; Monacelli, E. On-line automatic detection of human activity in home using wavelet and hidden Markov models Scilab toolkits. In Proceedings of the 2007 IEEE International Conference on Control Applications, Singapore, 1–3 October 2007; pp. 485–490. [Google Scholar]

- Zheng, Y.; Liu, Q.; Chen, E.; Ge, Y.; Zhao, J.L. Time series classification using multi-channels deep convolutional neural networks. In Proceedings of the International Conference on Web-Age Information Management, Macau, China, 16–18 June 2014; Springer: Cham, Switzerland, 2014; pp. 298–310. [Google Scholar]

- Jiang, W.; Yin, Z. Human activity recognition using wearable sensors by deep convolutional neural networks. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 1307–1310. [Google Scholar]

- Alsheikh, M.A.; Selim, A.; Niyato, D.; Doyle, L.; Lin, S.; Tan, H.P. Deep activity recognition models with triaxial accelerometers. In Proceedings of the Workshops at the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–13 February 2016. [Google Scholar]

- Hu, D.H.; Yang, Q. CIGAR: Concurrent and Interleaving Goal and Activity Recognition. In Proceedings of the Twenty-Third AAAI Conference on Artificial Intelligence, Chicago, IL, USA, 13–17 July 2008; Volume 8, pp. 1363–1368. [Google Scholar]

- Zhang, L.; Wu, X.; Luo, D. Recognizing human activities from raw accelerometer data using deep neural networks. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 9–11 December 2015; pp. 865–870. [Google Scholar]

- Using the Multicom Domus Dataset 2011. Available online: https://hal.archives-ouvertes.fr/hal-01473142/ (accessed on 25 January 2020).

- Zhang, S.; McCullagh, P.; Nugent, C.; Zheng, H. Activity monitoring using a smart phone’s accelerometer with hierarchical classification. In Proceedings of the 2010 Sixth International Conference on Intelligent Environments, Kuala Lumpur, Malaysia, 19–21 July 2010; pp. 158–163. [Google Scholar]

- Espinilla, M.; Martínez, L.; Medina, J.; Nugent, C. The experience of developing the UJAmI Smart lab. IEEE Access 2018, 6, 34631–34642. [Google Scholar] [CrossRef]

- Kitchenham, B.; Brereton, O.P.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering—A systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15. [Google Scholar] [CrossRef]

- Fahad, L.G.; Ali, A.; Rajarajan, M. Learning models for activity recognition in smart homes. In Information Science and Applications; Springer: Berlin/Heidelberg, Germany, 2015; pp. 819–826. [Google Scholar]

- Nguyen, D.; Le, T.; Nguyen, S. An Algorithmic Method of Calculating Neighborhood Radius for Clustering In-home Activities within Smart Home Environment. In Proceedings of the 2016 7th International Conference on Intelligent Systems, Modelling and Simulation (ISMS), Bangkok, Thailand, 25–27 January 2016; pp. 42–47. [Google Scholar]

- Nguyen, D.; Le, T.; Nguyen, S. A Novel Approach to Clustering Activities within Sensor Smart Homes. Int. J. Simul. Syst. Sci. Technol. 2016, 17. [Google Scholar] [CrossRef]

- Sukor, A.S.A.; Zakaria, A.; Rahim, N.A.; Setchi, R. Semantic knowledge base in support of activity recognition in smart home environments. Int. J. Eng. Technol. 2018, 7, 67–72. [Google Scholar] [CrossRef]

- Jänicke, M.; Sick, B.; Tomforde, S. Self-adaptive multi-sensor activity recognition systems based on Gaussian mixture models. Informatics 2018, 5, 38. [Google Scholar] [CrossRef]

- Honarvar, A.R.; Zaree, T. Frequent sequence pattern based activity recognition in smart environment. Intell. Decis. Technol. 2018, 12, 349–357. [Google Scholar] [CrossRef]

- Chen, W.H.; Chen, Y. An ensemble approach to activity recognition based on binary sensor readings. In Proceedings of the 2017 IEEE 19th International Conference on e-Health Networking, Applications and Services (Healthcom), Dalian, China, 12–15 October 2017; pp. 1–5. [Google Scholar]

- Khan, M.A.A.H.; Roy, N. Transact: Transfer learning enabled activity recognition. In Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kona, HI, USA, 13–17 March 2017; pp. 545–550. [Google Scholar]

- Fahad, L.G.; Tahir, S.F.; Rajarajan, M. Feature selection and data balancing for activity recognition in smart homes. In Proceedings of the 2015 IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015; pp. 512–517. [Google Scholar]

- Fahad, L.G.; Khan, A.; Rajarajan, M. Activity recognition in smart homes with self verification of assignments. Neurocomputing 2015, 149, 1286–1298. [Google Scholar] [CrossRef]

- Bota, P.; Silva, J.; Folgado, D.; Gamboa, H. A Semi-Automatic Annotation Approach for Human Activity Recognition. Sensors 2019, 19, 501. [Google Scholar] [CrossRef] [PubMed]

- Zhang, S.; Ng, W.W.; Zhang, J.; Nugent, C.D.; Irvine, N.; Wang, T. Evaluation of radial basis function neural network minimizing L-GEM for sensor-based activity recognition. J. Ambient Intell. Hum. Comput. 2019. [Google Scholar] [CrossRef]

- Wen, J.; Zhong, M. Activity discovering and modelling with labelled and unlabelled data in smart environments. Expert Syst. Appl. 2015, 42, 5800–5810. [Google Scholar] [CrossRef]

- Fahad, L.G.; Rajarajan, M. Integration of discriminative and generative models for activity recognition in smart homes. Appl. Soft Comput. 2015, 37, 992–1001. [Google Scholar] [CrossRef] [Green Version]

- Ihianle, I.; Naeem, U.; Islam, S.; Tawil, A.R. A hybrid approach to recognising activities of daily living from object use in the home environment. Informatics 2018, 5, 6. [Google Scholar] [CrossRef]

- Chua, S.L.; Foo, L.K. Sensor selection in smart homes. Procedia Comput. Sci. 2015, 69, 116–124. [Google Scholar] [CrossRef]

- Shahi Soozaei, A. Human Activity Recognition in Smart Homes. Ph.D. Thesis, University of Otago, Dunedin, New Zealand, January 2019. [Google Scholar]

- Caldas, T.V. From Binary to Multi-Class Divisions: Improvements on Hierarchical Divisive Human Activity Recognition. Master’s Thesis, Universidade do Porto, Oporto, Portugal, July 2019. [Google Scholar]

- Fang, L.; Ye, J.; Dobson, S. Discovery and recognition of emerging human activities using a hierarchical mixture of directional statistical models. IEEE Trans. Knowl. Data Eng. 2019. [Google Scholar] [CrossRef]

- Guo, J.; Li, Y.; Hou, M.; Han, S.; Ren, J. Recognition of Daily Activities of Two Residents in a Smart Home Based on Time Clustering. Sensors 2020, 20, 1457. [Google Scholar] [CrossRef]

- Kavitha, R.; Binu, S. Performance Evaluation of Area-Based Segmentation Technique on Ambient Sensor Data for Smart Home Assisted Living. Procedia Comput. Sci. 2019, 165, 314–321. [Google Scholar] [CrossRef]

- Akter, S.S. Improving Sensor Network Predictions through the Identification of Graphical Features. Ph.D. Thesis, Washington State University, Pullman, WA, USA, August 2019. [Google Scholar]

- Oukrich, N. Daily Human Activity Recognition in Smart Home based on Feature Selection, Neural Network and Load Signature of Appliances. Ph.D. Thesis, Mohammed V University In Rabat, Rabat, Morocco, April 2019. [Google Scholar]

- Yala, N. Contribution aux Méthodes de Classification de Signaux de Capteurs dans un Habitat Intelligent. Ph.D. Thesis, The University of Science and Technology—Houari Boumediene, Bab-Ezzouar, Algeria, October 2019. [Google Scholar]

- Lyu, F.; Fang, L.; Xue, G.; Xue, H.; Li, M. Large-Scale Full WiFi Coverage: Deployment and Management Strategy Based on User Spatio-Temporal Association Analytics. IEEE Internet Things J. 2019, 6, 9386–9398. [Google Scholar] [CrossRef]

- Chetty, G.; White, M. Body sensor networks for human activity recognition. In Proceedings of the 2016 3rd International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 11–12 February 2016; pp. 660–665. [Google Scholar]

- Singh, T.; Vishwakarma, D.K. Video benchmarks of human action datasets: A review. Artif. Intell. Rev. 2019, 52, 1107–1154. [Google Scholar] [CrossRef]

- Senda, M.; Ha, D.; Watanabe, H.; Katagiri, S.; Ohsaki, M. Maximum Bayes Boundary-Ness Training for Pattern Classification. In Proceedings of the 2019 2nd International Conference on Signal Processing and Machine Learning, Hangzhou, China, 27–29 November 2019; pp. 18–28. [Google Scholar]

- Petrovich, M.; Yamada, M. Fast local linear regression with anchor regularization. arXiv 2020, arXiv:2003.05747. [Google Scholar]

- Yadav, A.; Kumar, E. A Literature Survey on Cyber Security Intrusion Detection Based on Classification Methods of Supervised Machine Learning; Bloomsbury: New Delhi, India, 2019. [Google Scholar]

- Marimuthu, P.; Perumal, V.; Vijayakumar, V. OAFPM: Optimized ANFIS using frequent pattern mining for activity recognition. J. Supercomput. 2019, 75, 5347–5366. [Google Scholar] [CrossRef]

- Raeiszadeh, M.; Tahayori, H.; Visconti, A. Discovering varying patterns of Normal and interleaved ADLs in smart homes. Appl. Intell. 2019, 49, 4175–4188. [Google Scholar] [CrossRef]

- Hossain, H.S.; Roy, N. Active Deep Learning for Activity Recognition with Context Aware Annotator Selection. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1862–1870. [Google Scholar]

- Kong, D.; Bao, Y.; Chen, W. Collaborative learning based on centroid-distance-vector for wearable devices. Knowl.-Based Syst. 2020. [Google Scholar] [CrossRef]

- Lentzas, A.; Vrakas, D. Non-intrusive human activity recognition and abnormal behavior detection on elderly people: A review. Artif. Intell. Rev. 2020, 53, 1975–2021. [Google Scholar] [CrossRef]

- Arya, M. Automated Detection of Acute Leukemia Using K-Means Clustering Algorithm. Master’s Thesis, North Dakota State University, Fargo, ND, USA, May 2019. [Google Scholar]

- Chetty, G.; Yamin, M. Intelligent human activity recognition scheme for eHealth applications. Malays. J. Comput. Sci. 2015, 28, 59–69. [Google Scholar]

- Soulas, J.; Lenca, P.; Thépaut, A. Unsupervised discovery of activities of daily living characterized by their periodicity and variability. Eng. Appl. Artif. Intell. 2015, 45, 90–102. [Google Scholar] [CrossRef]

- Rojlertjanya, P. Customer Segmentation Based on the RFM Analysis Model Using K-Means Clustering Technique: A Case of IT Solution and Service Provider in Thailand. Master’s Thesis, Bangkok University, Bangkok, Thailand, 16 August 2019. [Google Scholar]

- Zhao, B.; Shao, B. Analysis the Consumption Behavior Based on Weekly Load Correlation and K-means Clustering Algorithm. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics, Cairo, Egypt, 26–28 October 2019; Springer: Cham, Switzerland, 2019; pp. 70–81.

- Zahi, S.; Achchab, B. Clustering of the population benefiting from health insurance using K-means. In Proceedings of the 4th International Conference on Smart City Applications, Casablanca, Morocco, 2–4 October 2019; pp. 1–6.

- Dana, R.D.; Dikananda, A.R.; Sudrajat, D.; Wanto, A.; Fasya, F. Measurement of health service performance through machine learning using clustering techniques. J. Phys. Conf. Ser. 2019, 1360, 012017.

- Baek, J.W.; Kim, J.C.; Chun, J.; Chung, K. Hybrid clustering based health decision-making for improving dietary habits. Technol. Health Care 2019, 27, 459–472.

- Rashid, J.; Shah, A.; Muhammad, S.; Irtaza, A. A novel fuzzy k-means latent semantic analysis (FKLSA) approach for topic modeling over medical and health text corpora. J. Intell. Fuzzy Syst. 2019, 37, 6573–6588.

- Lütz, E. Unsupervised Machine Learning to Detect Patient Subgroups in Electronic Health Records. Master’s Thesis, KTH Royal Institute of Technology, Stockholm, Sweden, January 2019.

- Maturo, F.; Ferguson, J.; Di Battista, T.; Ventre, V. A fuzzy functional k-means approach for monitoring Italian regions according to health evolution over time. Soft Comput. 2019.

- Wang, S.; Li, M.; Hu, N.; Zhu, E.; Hu, J.; Liu, X.; Yin, J. K-means clustering with incomplete data. IEEE Access 2019, 7, 69162–69171.

- Long, J.; Sun, W.; Yang, Z.; Raymond, O.I. Asymmetric Residual Neural Network for Accurate Human Activity Recognition. Information 2019, 10, 203.

- Yuan, C.; Yang, H. Research on K-value selection method of K-means clustering algorithm. J. Multidiscip. Sci. J. 2019, 2, 226–235.

- Wang, P.; Shi, H.; Yang, X.; Mi, J. Three-way k-means: Integrating k-means and three-way decision. Int. J. Mach. Learn. Cybern. 2019, 10, 2767–2777.

- Sadeq, S.; Yetkin, G. Semi-Supervised Sparse Data Clustering Performance Investigation. In Proceedings of the International Conference on Data Science, Machine Learning and Statistics, Van, Turkey, 26–29 June 2019; p. 463.

- Boddana, S.; Talla, H. Performance Examination of Hard Clustering Algorithm with Distance Metrics. Int. J. Innov. Technol. Explor. Eng. 2019, 9.

- Xiao, Y.; Chang, Z.; Liu, B. An efficient active learning method for multi-task learning. Knowl.-Based Syst. 2020, 190, 105137.

- Yao, L.; Nie, F.; Sheng, Q.Z.; Gu, T.; Li, X.; Wang, S. Learning from less for better: Semi-supervised activity recognition via shared structure discovery. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 13–24.

- Tax, N.; Sidorova, N.; van der Aalst, W.M. Discovering more precise process models from event logs by filtering out chaotic activities. J. Intell. Inf. Syst. 2019, 52, 107–139.

- Artavanis-Tsakonas, K.; Karpiyevich, M.; Adjalley, S.; Mol, M.; Ascher, D.; Mason, B.; van der Heden van Noort, G.; Laman, H.; Ovaa, H.; Lee, M. Nedd8 hydrolysis by UCH proteases in Plasmodium parasites. PLoS Pathog. 2019, 15, e1008086.

- Koole, G. An Introduction to Business Analytics; MG Books: Amsterdam, The Netherlands, 2019.

- Oh, H.; Jain, R. Detecting Events of Daily Living Using Multimodal Data. arXiv 2019, arXiv:1905.09402.

- Caleb-Solly, P.; Gupta, P.; McClatchey, R. Tracking changes in user activity from unlabelled smart home sensor data using unsupervised learning methods. Neural Comput. Appl. 2020.

- Patel, A.; Shah, J. Sensor-based activity recognition in the context of ambient assisted living systems: A review. J. Ambient Intell. Smart Environ. 2019, 11, 301–322.

- Leotta, F.; Mecella, M.; Sora, D. Visual process maps: A visualization tool for discovering habits in smart homes. J. Ambient Intell. Hum. Comput. 2019.

- Ferilli, S.; Angelastro, S. Activity prediction in process mining using the WoMan framework. J. Intell. Inf. Syst. 2019, 53, 93–112.

- Wong, W. Combination Clustering: Evidence Accumulation Clustering for Dubious Feature Sets. OSF Prepr. 2019.

- Wong, W.; Tsuchiya, N. Evidence Accumulation Clustering Using Combinations of Features; Center for Open Science: Victoria, Australia, 2019.

- Zhao, W.; Li, P.; Zhu, C.; Liu, D.; Liu, X. Defense Against Poisoning Attack via Evaluating Training Samples Using Multiple Spectral Clustering Aggregation Method. CMC-Comput. Mater. Cont. 2019, 59, 817–832.

- Yang, Y.; Zheng, K.; Wu, C.; Niu, X.; Yang, Y. Building an effective intrusion detection system using the modified density peak clustering algorithm and deep belief networks. Appl. Sci. 2019, 9, 238.

- Cuzzocrea, A.; Gaber, M.M.; Fadda, E.; Grasso, G.M. An innovative framework for supporting big atmospheric data analytics via clustering-based spatio-temporal analysis. J. Ambient Intell. Hum. Comput. 2019, 10, 3383–3398.

| Method | Algorithm |
|---|---|
| Partitional Method | K-means algorithm [46,47] |
| Hierarchical Method [48] | COBWEB [49,50] |
| Diffuse Method [13,51] | Fuzzy C Means [52,53] |
| Method Based on Neural Networks [54,55] | SOM [56] |
| Evolutionary Methods [57,58,59] | Genetic Algorithms [57,58,59,60,61,62] |
| Kernel-Based Methods [63,64] | Kernel K-means Algorithms [65,66] |
| Spectral Methods [36] | Standard Spectral Clustering [59] |
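To make the partitional row of the table above concrete, the following is a minimal sketch of the standard k-means (Lloyd) iteration in plain Python. It is an illustration only and not the implementation used in [46,47]; the function name `kmeans`, its parameters, and the toy points are assumptions introduced here.

```python
import random


def kmeans(points, k, iterations=100, seed=0):
    """Cluster a list of equal-length numeric tuples with Lloyd's algorithm.

    Hypothetical helper for illustration; returns (labels, centroids).
    """
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k data points chosen at random
    labels = None
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        # (squared Euclidean distance).
        new_labels = [
            min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the centroids are stable
        labels = new_labels
        # Update step: each centroid becomes the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Toy data: two well-separated groups of 2-D feature vectors (made up).
toy_points = [(0.0, 0.0), (0.3, 0.1), (0.1, 0.4),
              (10.0, 10.0), (10.2, 9.7), (9.6, 10.1)]
toy_labels, toy_centroids = kmeans(toy_points, k=2)
print(toy_labels)
```

In an activity recognition setting the tuples would be feature vectors extracted from sensor windows, and `k` would have to be chosen or estimated, which is the problem several of the K-means references above address.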

| # | Activity Name | Description |
|---|---|---|
| 1 | Make a phone call [74] | The participant moves to the phone in the dining room, looks up a specific number in the phone book, dials the number, and listens to the message. |
| 2 | Wash hands [74] | The participant moves to the kitchen sink and washes his/her hands in the sink, using hand soap and drying their hands with a paper towel. |
| 3 | Cook [74] | The participant cooks using a pot. |
| 4 | Eat [74] | The participant goes to the dining room and eats the food. |
| 5 | Clean [74] | The participant takes all the dishes to the sink and cleans them with water and dish soap in the kitchen. |
| 6 | Fill medication dispenser [32] | The participant retrieves a pill dispenser and bottle of pills. |
| 7 | Watch DVD [32] | The participant moves to the living room, puts a DVD in the player, and watches a news clip on TV. |
| 8 | Water plants [32] | The participant retrieves a watering can from the kitchen supply closet and waters three plants. |
| 9 | Answer the phone [32] | The phone rings, and the participant answers it. |
| 10 | Prepare birthday card [32] | The participant fills out a birthday card with a check to a friend and addresses the envelope. |
| 11 | Prepare soup [32] | The participant moves to the kitchen and prepares a cup of noodle soup in the microwave. |
| 12 | Choose outfit [32] | The participant selects an outfit from the clothes closet that their friend will wear for a job interview. |
| 13 | Hang up clothes in the hallway closet [75] | The clothes are laid out on the couch in the living room. |
| 14 | Move the couch and coffee table to the other side of the living room [75] | Request help from another person in multioccupancy experimentation. |
| 15 | Sit on the couch and read a magazine [75] | The participant sits down in the living room and reads a magazine. |
| 16 | Sweep the kitchen floor [75] | Sweep the kitchen floor using the broom and dustpan located in the kitchen closet. |
| 17 | Play a game [75] | Play a game of checkers for a maximum of five minutes in a multioccupancy context. |
| 18 | Simulate paying an electric bill [75] | Retrieve a check, a pen, and an envelope from the cupboard underneath the television in the living room. |
| 19 | Walking [76] | Using body sensors, define if the participant is performing the walking action. |
| 20 | Sitting [76] | Using body sensors, define if the participant is performing the sitting action. |
| 21 | Sleeping [76] | Using body sensors, define if the participant is performing the sleeping action. |
| 22 | Using a computer [76] | The participant is in the position of use of the computer for a certain time. |
| 23 | Showering [76] | Detection of environmental sensors of the participant’s stay in the shower. |
| 24 | Toileting [76] | Detection of environmental sensors of the participant’s stay in the bathroom. |
| 25 | Oral hygiene [76] | Using the object and body sensors, the oral hygiene action is identified. |
| 26 | Making coffee [76] | Detection of objects and environmental sensors of the action of making coffee by the participant. |
| 27 | Walking upstairs [76] | The participant performs the action of climbing the stairs, being detected by the body sensors. |
| 28 | Walking downstairs [76] | The participant performs the action of going down the stairs, being detected by the body sensors. |

| # | Type of Sensor | Sensor | Type of Activities | Reference |
|---|---|---|---|---|
| 1 | Environmental and Object sensors | Motion detectors, break-beam, pressure mats, contact switches, water flow, and wireless object movement | Eat, drink, housework, toileting, cooking, using a computer, watching TV, and call by phone | [80] |
| 2 | Environmental and Object sensors | Motion, temperature and humidity sensors, contact switches in the doors, and item sensors on key items | Phone call, cooking, wash hands, and clean up | [81] |
| 3 | Environmental and Object sensors | Binary sensors on doors and objects | Toileting, bathing, and grooming | [82] |
| 4 | Object sensors | Shake sensors | Leaving, toileting, showering, sleeping, drinking, and eating | [83] |
| 5 | Object sensors | Radio frequency identification (RFID) | Toileting, oral hygiene, washing, telephone use, taking medication, etc. | [84] |
| 6 | Object sensors | Radio frequency identification (RFID) | Using bathroom, making meals/drinks, telephone use, set/clean table, eat, and take out trash | [85] |
| 7 | Object sensors | Radio frequency identification (RFID) | Making coffee | [86] |

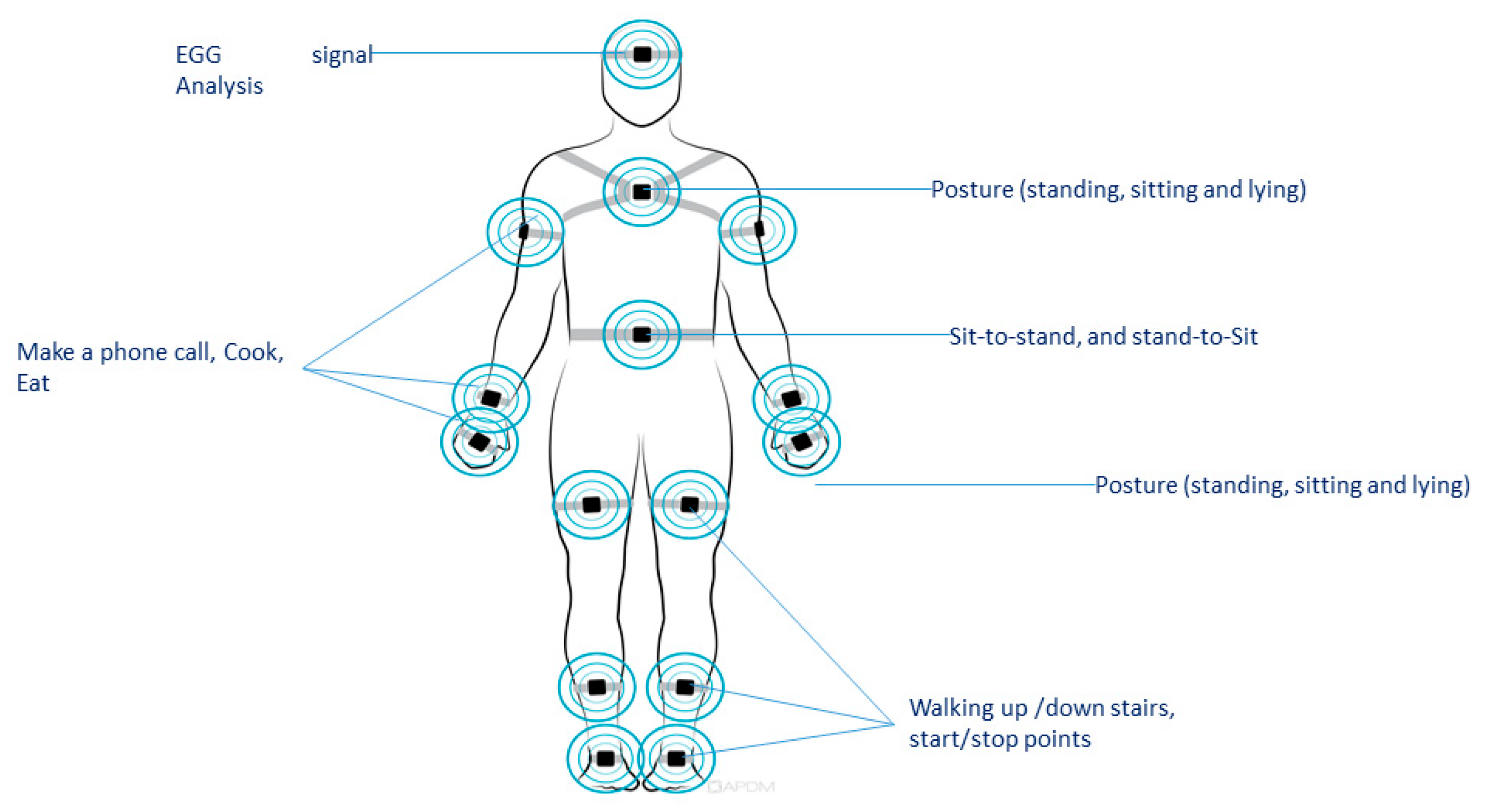

| Number | Sensor Location | Type of Activities |
|---|---|---|
| 1 | Chest [88] | Standing, sitting and lying. |
| 2 | Waist [89] | Sit-to-stand, stand-to-sit, walking. |
| 3 | Upper arm, wrist, thigh and ankle [90] | Posture and some ADLs. |
| 4 | Wrist [91] | Sport movement. |
| 5 | Wrist, waist, and shoulder [92] | Riding elevator, walking up stairs. |
| 6 | On the belt [93,94] | Walking upstairs, walking downstairs, start or stop points. |

| Number | Dataset Name | Occupancy | # Subjects | # Activities | Sensor Type |
|---|---|---|---|---|---|
| 1 | Van Kasteren [28] | Single | 1 | 8 | E |
| 2 | Opportunity [33] | Multioccupancy | 4 | 16 | O, A |
| 3 | CASAS Daily Life Kyoto [29] | Single | 1 | 10 | O, A |
| 4 | UCI SmartPhone [32] | Multioccupancy | 30 | 6 | A, G |
| 5 | CASAS Aruba [30] | Single | 1 | 11 | E, O |
| 6 | PAMAP2 [95] | Multioccupancy | 9 | 18 | A, G, M |
| 7 | CASAS Multiresident [31] | Multioccupancy | 2 | 8 | A, O, E |
| 8 | USC-HAD [96] | Multioccupancy | 14 | 12 | A, G |
| 9 | mHealth [34] | Multioccupancy | 10 | 12 | A, G |
| 10 | WISDM [97] | Multioccupancy | 29 | 6 | A |
| 11 | MIT PlaceLab [98] | Single | 1 | 10 | A, O, G |
| 12 | DSADS [99] | Multioccupancy | 8 | 19 | A, G, M |
| 13 | DOMUS [100] | Single | 1 | 15 | A, G, O |
| 14 | Smart Environment - Ulster University [101] | Single | 1 | 9 | A, G, M |
| 15 | UJAmI SmartLab [102] | Single | 1 | 7 | O, E |

| Identifier | Year | Paper Title | Journal | ISSN | Proceedings or Book | Quartile | Journal Country | First Author's Country | University |
|---|---|---|---|---|---|---|---|---|---|
| Art1 | 2015 | Towards unsupervised physical activity recognition using Smartphone accelerometers | Multimedia Tools and Applications | 1380-7501 | Book Series | Q1 | Netherlands | China | Lanzhou University |

| Identifier | Dataset | Type | Methods | Accuracy | Precision | Recall | F-Measure | Approach |
|---|---|---|---|---|---|---|---|---|
| Art1 | Kasteren | Real | Calculating neighborhood radius | 86 | 76 | 80 | 76 | Unsupervised |
| Art2 | WISDM | Real | MCODE-Based | 85 | 77 | 83 | 77 | Unsupervised |
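The four metrics reported in the table above can be computed for a clustering result once each cluster is mapped to an activity label. The sketch below assumes a majority-vote mapping and macro-averaging over classes; the reviewed papers may use other conventions, and the function name `evaluate_clustering` and the toy labels are hypothetical.

```python
from collections import Counter


def evaluate_clustering(true_labels, cluster_ids):
    """Score a clustering against ground truth (illustrative helper).

    Each cluster is mapped to the most frequent true label among its
    members; precision, recall and F-measure are macro-averaged.
    """
    # Majority vote: the most common true label inside each cluster.
    majority = {}
    for c in set(cluster_ids):
        members = [t for t, cid in zip(true_labels, cluster_ids) if cid == c]
        majority[c] = Counter(members).most_common(1)[0][0]
    predicted = [majority[c] for c in cluster_ids]
    pairs = list(zip(true_labels, predicted))

    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions, recalls, f_scores = [], [], []
    for cls in sorted(set(true_labels)):
        tp = sum(t == cls and p == cls for t, p in pairs)
        fp = sum(t != cls and p == cls for t, p in pairs)
        fn = sum(t == cls and p != cls for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f_score = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f_scores.append(f_score)

    n = len(precisions)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f_measure": sum(f_scores) / n,
    }


# Toy example (made-up labels): one "walk" sample falls into the "sit" cluster.
truth = ["walk", "walk", "walk", "sit", "sit", "sit"]
clusters = [0, 0, 1, 1, 1, 1]
scores = evaluate_clustering(truth, clusters)
print(scores)
```

Macro-averaging weights every activity equally, which matters for smart home datasets where some activities are much rarer than others; a micro-averaged or per-class report would give different numbers for the same clustering.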

| References | Dataset | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| [104] | Van Kasteren [28] | 97.2% | 88.25% | 83.66% | 84% |
| [116] | Van Kasteren [28] | 96.67% | 97.33% | 96.67% | 97% |
| [117] | Van Kasteren [28] | 93.55% | 92.97% | 91.3% | 91% |
| [109] | Van Kasteren [28] | -- | 95% | 100% | 97% |
| [118] | Van Kasteren [28] | 88.14% | -- | -- | -- |
| [107] | Van Kasteren [28] | 97% | -- | -- | -- |
| [119] | Van Kasteren [28] | 92% | -- | -- | -- |
| [120] | Van Kasteren [28] | 78.9% | -- | -- | -- |
| [121] | Van Kasteren [28] | 84% | -- | -- | -- |
| [122] | Van Kasteren [28] | 89.5% | -- | -- | -- |
| [123] | Van Kasteren [28] | 82% | -- | -- | -- |
| [104] | Casas Aruba [30] | 98.14% | 74.73% | 76.29% | 72% |
| [123] | Casas Aruba [30] | 77.10% | -- | -- | -- |
| [124] | Casas Aruba [30] | 74% | -- | -- | -- |
| [125] | Casas Aruba [30] | 78% | -- | -- | -- |
| [126] | Casas Aruba [30] | 98.93% | -- | -- | -- |
| [127] | Casas Aruba [30] | 73.44% | -- | -- | -- |
| [104] | Casas Kyoto [29] | 98.14% | 74.73% | 76.29% | 72% |
| [116] | Casas Kyoto [29] | 94.21% | 90.10% | 93.11% | 91% |
| [117] | Casas Kyoto [29] | 94.62% | 93.21% | 94.62% | 93% |
| [128] | Casas Kyoto [29] | 91% | -- | -- | -- |
| [129] | Casas Kyoto [29] | 89% | -- | -- | -- |
| [130] | Casas Kyoto [29] | 81.1% | -- | -- | -- |
| [131] | Casas Kyoto [29] | -- | 83.26% | -- | -- |
| [132] | Casas Kyoto [29] | 87.45% | 86.12% | -- | -- |
| [125] | Casas Kyoto [29] | 78% | -- | -- | -- |
| [104] | Casas Tulum [67] | 86.15% | 59.18% | 57.12% | 57% |
| [131] | Casas Tulum [67] | -- | -- | -- | 72% |
| [132] | Casas Tulum [67] | -- | -- | -- | 74% |
| [125] | Casas Tulum [67] | -- | 65.3% | 82% | -- |
| [104] | Casas Tulum [67] | 75.45% | -- | 78% | -- |
| [105] | Hh102 [68] | 66% | -- | -- | 53% |
| [105] | Hh104 [68] | 78% | -- | -- | 60% |
| [115] | UCI Human Activity Recognition (HAR) [90] | 71% | -- | -- | -- |
| [107] | MIT PlaceLab [97] | 94.5% | -- | -- | -- |
| [118] | PAMAP2 [92] | 62% | -- | -- | -- |

| References | Dataset | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| [46] | VanKasteren [28] | -- | 88.6% | 95.48% | 91.91% |
| [133] | VanKasteren [28] | 87.21% | -- | -- | -- |
| [134] | VanKasteren [28] | 82% | -- | -- | -- |
| [135] | VanKasteren [28] | -- | -- | 72% | 85% |
| [136] | VanKasteren [28] | -- | -- | -- | 82.78% |
| [137] | VanKasteren [28] | -- | 76.23% | -- | -- |
| [138] | VanKasteren [28] | | | | |
| [139] | WISDM [96] | 71% | -- | -- | -- |
| [140] | Liara [93] | 86% | -- | -- | -- |
| [109] | Opportunity [33] | 79% | -- | -- | -- |
| [128] | Opportunity [33] | 80% | -- | -- | -- |
| [115] | Opportunity [33] | 86.8% | -- | -- | -- |
| [138] | Opportunity [33] | -- | 79.67% | -- | -- |
| [141] | Opportunity [33] | -- | 82.45% | -- | -- |
| [142] | Opportunity [33] | -- | -- | 75.45% | -- |
| [143] | Opportunity [33] | -- | -- | -- | 87.32% |
| [144] | Opportunity [33] | -- | -- | -- | 85.45% |
| [139] | MHealth [34] | 71.66% | -- | -- | -- |
| [112] | MHealth [34] | 71% | -- | -- | -- |
| [145] | MHealth [34] | 78.45% | -- | -- | -- |
| [146] | MHealth [34] | -- | -- | -- | 78.56% |
| [147] | MHealth [34] | -- | -- | -- | 77.56% |
| [148] | MHealth [34] | 73.45% | -- | -- | -- |
| [149] | MHealth [34] | 78.63% | -- | -- | -- |
| [150] | UCI HAR [32] | 52.1% | -- | -- | -- |
| [151] | UCI HAR [32] | 76.32% | -- | -- | -- |
| [152] | UCI HAR [32] | -- | -- | -- | 77.22% |
| [153] | UCI HAR [32] | -- | -- | -- | 78.45% |
| [154] | UCI HAR [32] | 79.37% | -- | -- | -- |
| [155] | UCI HAR [32] | 75.31% | -- | -- | -- |

| References | Dataset | Accuracy |
|---|---|---|
| [156] | VanKasteren [28] | 94.3% |
| [157] | VanKasteren [28] | 78.5% |
| [158] | VanKasteren [28] | 75.42% |
| [159] | VanKasteren [28] | 81.65% |
| [160] | VanKasteren [28] | 86.32% |
| [161] | VanKasteren [28] | 89.45% |
| [156] | Casas Aruba [30] | 91.88% |
| [161] | Casas Aruba [30] | 88.32% |
| [162] | Casas Aruba [30] | 89.78% |
| [163] | Casas Aruba [30] | 87.67% |
| [164] | Casas Aruba [30] | 86.43% |
| [165] | Casas Aruba [30] | 89.12% |
| [156] | Casas Kyoto [29] | 96.67% |
| [166] | Casas Kyoto [29] | 86.32% |
| [167] | Casas Kyoto [29] | 76.45% |
| [168] | Casas Kyoto [29] | 89.12% |
| [169] | Casas Kyoto [29] | 85.34% |
| [156] | Casas Tulum [67] | 99.28% |
| [156] | Milan [68] | 95.20% |
| [156] | Cairo [68] | 94.17% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ariza Colpas, P.; Vicario, E.; De-La-Hoz-Franco, E.; Pineres-Melo, M.; Oviedo-Carrascal, A.; Patara, F. Unsupervised Human Activity Recognition Using the Clustering Approach: A Review. Sensors 2020, 20, 2702. https://doi.org/10.3390/s20092702
