Identification of Flying Insects in the Spatial, Spectral, and Time Domains with Focus on Mosquito Imaging

Abstract

1. Introduction

1.1. Reflectance, Depolarization, and Fluorescence Spectroscopy

1.2. Wing-Beat Frequency Analysis

2. Mosquito Sampling Method

2.1. Common Sampling Methods

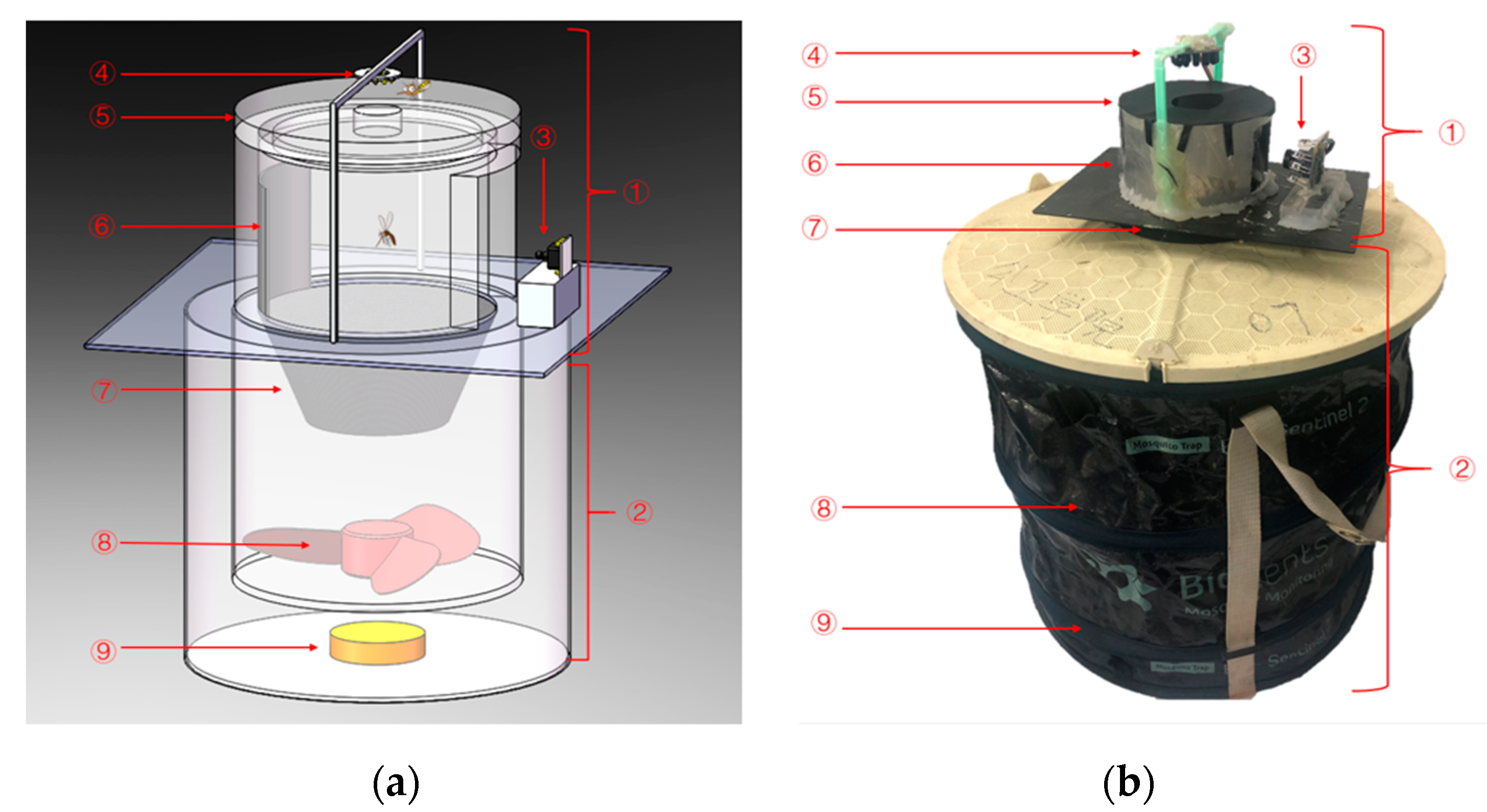

2.2. Present System Description

3. Insect Imaging

3.1. Detection and Classification

- Step one: Convert the image (pixels: 1280 × 960) into grayscale.

- Step two: Cut out the template (pixels: 200 × 144) from one image sample, as shown in Figure 3a, and apply a padding operation to another image sample. Padding enlarges the original image by the width and height of the template, giving a new image (pixels: 1480 × 1104), as shown in Figure 3b. Without padding, targets near the image boundary would be missed by the algorithm.

- Step three: Move the template one pixel to the right and repeat the correlation calculation of Step two until the template reaches the far right.

- Step four: When the template reaches the far right, move it one pixel down and repeat Step two and Step three, starting from the far left.

- Step five: After Step three and Step four, we obtain a new matrix of dimension (1480 − 200 + 1) × (1104 − 144 + 1), that is, 1281 × 961, composed of the calculated correlation coefficient values $r_{\mathrm{ccoeff}}$. These values lie between −1 and 1; the higher the correlation value, the better the match. The result is shown in Figure 4b, where the vertical scale, showing the correlation, has been multiplied by 255 for clarity. We then extract the maximum value, the minimum value, and the position of the maximum from the matrix.

- Step six: An area of the same size as the template (pixels: 200 × 144) is placed in the original image (pixels: 1280 × 960), centered on the coordinates of the maximum. Normalization, binarization, and morphological processing are applied to this region to obtain the contour of the object. We then calculate the area and perimeter of the contour and divide the perimeter by the area to obtain their ratio.

- Step seven: A covering procedure is performed on the matrix: the area found in Step six is replaced with the minimum value from Step five. We then repeat Step five and Step six until the maximum value is smaller than the threshold.
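The padding, sliding, and covering steps above can be sketched in a few lines of dependency-free Python. This is only an illustration on a tiny synthetic image, not the authors' implementation. The function names, the even split of the padding border, the use of the image mean as the padding value, and the score of 0 for a flat patch are all assumptions made here. The contour analysis of Step six is not included. For images of the size quoted above, a library routine such as OpenCV's `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED` would be the practical choice.

```python
import math


def pad(image, th, tw, fill):
    """Step two: enlarge the image by the template height and width."""
    h, w = len(image), len(image[0])
    top, left = th // 2, tw // 2  # assumption: border split evenly
    padded = [[fill] * (w + tw) for _ in range(h + th)]
    for y in range(h):
        padded[y + top][left:left + w] = image[y]
    return padded


def ccoeff(window, template):
    """Correlation coefficient of two equally sized patches, in [-1, 1]."""
    a = [v for row in window for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    return num / den if den > 0 else 0.0  # assumption: flat patch = no match


def score_matrix(padded, template):
    """Steps three to five: slide the template one pixel at a time."""
    th, tw = len(template), len(template[0])
    rows, cols = len(padded) - th + 1, len(padded[0]) - tw + 1
    return [[ccoeff([r[x:x + tw] for r in padded[y:y + th]], template)
             for x in range(cols)] for y in range(rows)]


def detect(image, template, threshold):
    """Steps five and seven: take the maximum, cover it, repeat."""
    th, tw = len(template), len(template[0])
    fill = sum(map(sum, image)) / (len(image) * len(image[0]))  # assumed fill
    scores = score_matrix(pad(image, th, tw, fill), template)
    floor = min(map(min, scores))
    hits = []
    while True:
        best, by, bx = max((v, y, x) for y, row in enumerate(scores)
                           for x, v in enumerate(row))
        if best < threshold or best <= floor:
            break
        hits.append((by, bx))  # window centre in original-image coordinates
        # Covering: overwrite a template-sized area around the maximum.
        for y in range(max(0, by - th // 2), min(len(scores), by + th // 2 + 1)):
            for x in range(max(0, bx - tw // 2), min(len(scores[0]), bx + tw // 2 + 1)):
                scores[y][x] = floor
    return hits


# Tiny synthetic example: two copies of a 3 x 3 template in an 8 x 8 image.
template = [[0, 1, 0], [1, 2, 1], [0, 1, 0]]
image = [[0] * 8 for _ in range(8)]
for cy, cx in [(2, 2), (5, 5)]:
    for dy in range(3):
        for dx in range(3):
            image[cy - 1 + dy][cx - 1 + dx] = template[dy][dx]

hits = detect(image, template, threshold=0.9)
print(sorted(hits))  # [(2, 2), (5, 5)]
```

With the border split evenly, an index in the score matrix coincides with the centre of the matched window in the original image. This is why the covering step can be centred directly on the coordinates of the maximum.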

3.2. Result Evaluation

3.2.1. Detection Algorithm Evaluation

3.2.2. Classification Method Evaluation

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Algorithm Type | Template Matching | Covering | Padding | Detection Rate |
|---|---|---|---|---|
| Single template matching algorithm | √ | × | × | 64% |
| Multi-target template matching algorithm | √ | √ | × | 84% |
| Our proposed algorithm | √ | √ | √ | 92% |

| Methods | TP | FN | FP | Recall | Precision | F-Measure |
|---|---|---|---|---|---|---|
| No Classification | 86 | 14 | 13 | 86.0% | 86.8% | 86.4% |
| Classification | 93 | 6 | 7 | 93.9% | 93.0% | 93.5% |
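The recall, precision, and F-measure columns can be recomputed from the TP, FN, and FP counts in the table. The sketch below assumes the standard definitions, with the F-measure taken as the balanced harmonic mean of precision and recall. The function name `detection_metrics` is introduced here for illustration only.

```python
def detection_metrics(tp, fn, fp):
    """Return (recall, precision, F-measure) from raw detection counts."""
    recall = tp / (tp + fn)        # share of true targets that were found
    precision = tp / (tp + fp)     # share of detections that were correct
    # Assumption: balanced F-measure (harmonic mean of the two).
    f_measure = 2 * precision * recall / (precision + recall)
    return recall, precision, f_measure


rows = [("No Classification", (86, 14, 13)), ("Classification", (93, 6, 7))]
for name, counts in rows:
    r, p, f = detection_metrics(*counts)
    print(f"{name}: recall={r:.1%} precision={p:.1%} F={f:.1%}")
```

Under these definitions the recomputed values agree with the tabulated ones to within 0.1 percentage point. The only visible difference is the precision without classification, 86/99, which rounds to 86.9% against the tabulated 86.8%.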

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, Y.; Lin, Y.; Zhao, G.; Svanberg, S. Identification of Flying Insects in the Spatial, Spectral, and Time Domains with Focus on Mosquito Imaging. Sensors 2021, 21, 3329. https://doi.org/10.3390/s21103329
