Implementation of Lightweight Convolutional Neural Networks via Layer-Wise Differentiable Compression

Abstract

:1. Introduction

- The proposed approach addresses the compression problem of CNNs from a fresh perspective, which replaces the original bulky operators with lightweight ones directly instead of pruning units from original redundant operators.

- Most of the existing approaches [15,16,17,18,19] require specifying the compression rate for each layer or require a threshold that is used to determine which structural units to prune. Our proposed approach does not require any such input and can automatically search for the best lightweight operator in each layer to replace the original redundant operator, thereby reducing the number of hyperparameters.

- The proposed approach is end-to-end trainable, which can compress and train CNNs simultaneously using gradient descent in one go. We can obtain various compressed lightweight CNNs with different architectures, which also inspires the future design of CNNs.

2. Related Works

3. Methodology

3.1. Overview

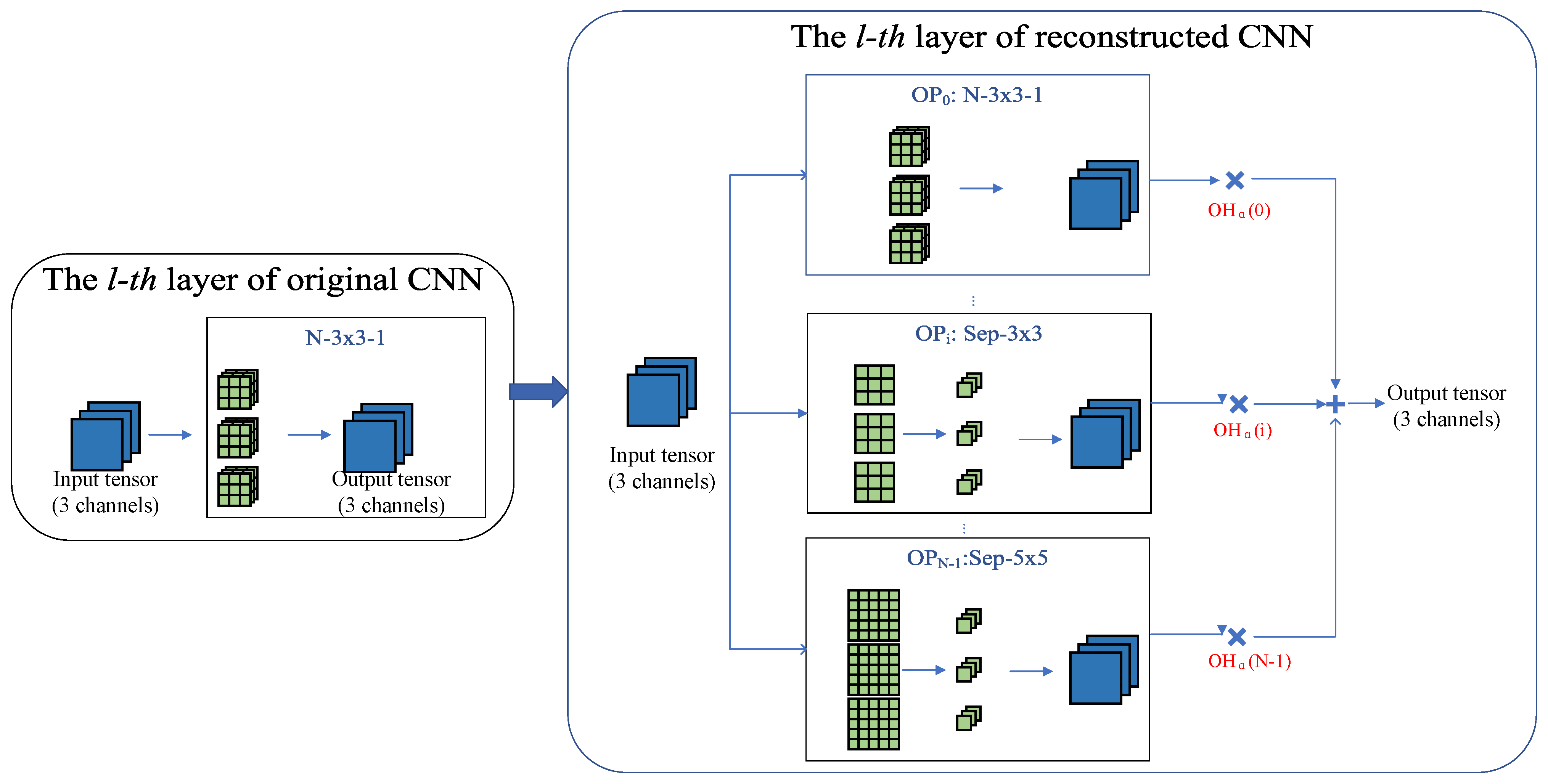

3.2. The Reconstructing Stage

3.3. The Searching Stage

3.3.1. Trainable Gate Function

3.3.2. Continuous Approximation for Discrete One-Hot Vector

3.3.3. Resource-Constrained Objective Function

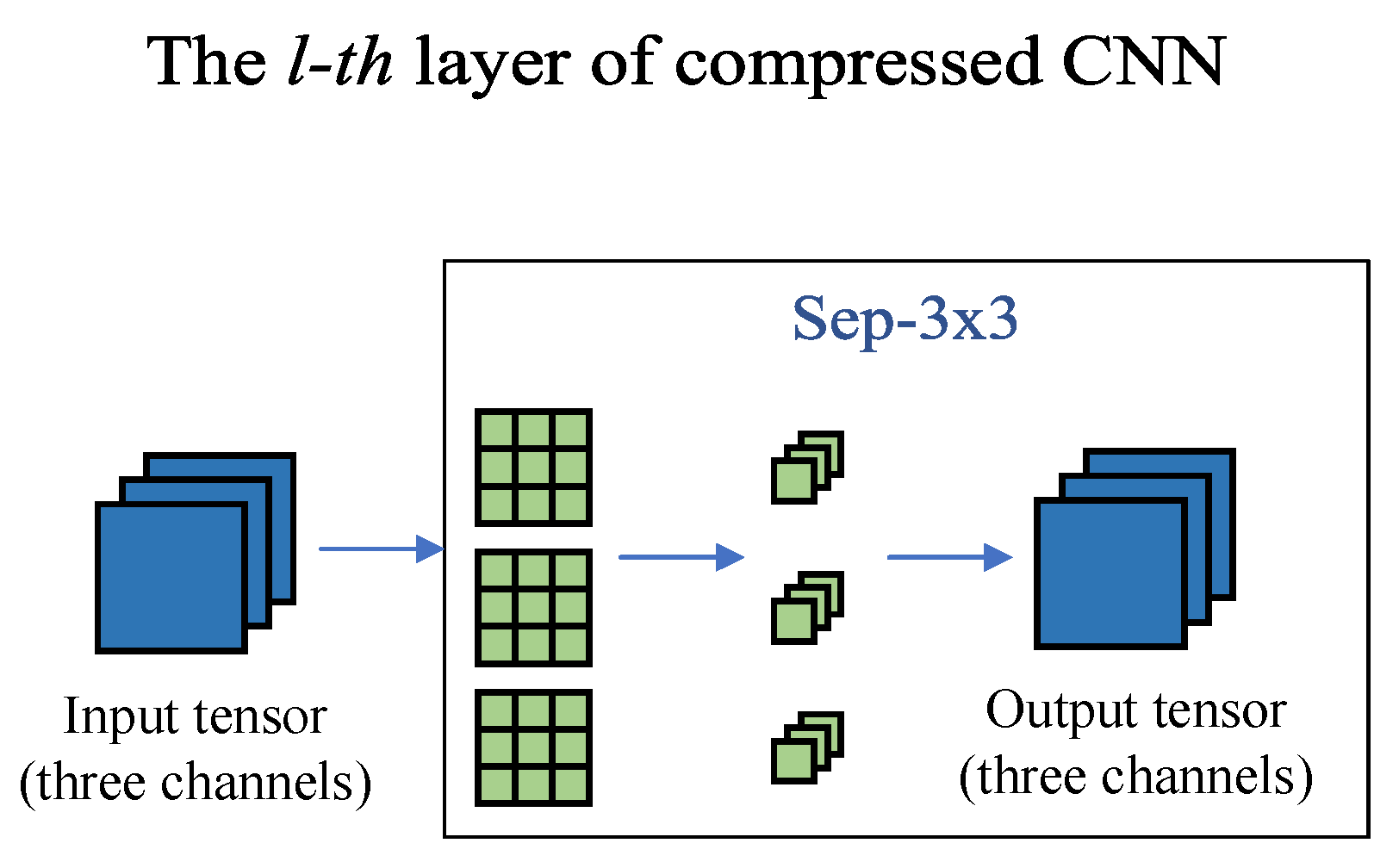

3.4. The Fine-Tuning Stage

4. Experiments

4.1. Dataset

4.2. Evaluation Metrics

4.3. Results on Cifar

4.4. Results on ImageNet

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Operators

| Name | S | P | G | d | A | k | PS | Flops | ||

|---|---|---|---|---|---|---|---|---|---|---|

| N_3 × 3_g | S | 1 | g | 1 | ReLU | 3 × 3 | ||||

| N_1 × 1_g | S | 1 | g | 1 | ReLU | 1 × 1 | ||||

| skip_connect | - | - | - | - | - | - | 0 | 0 | ||

| avg_pool_3 × 3 | S | 1 | 1 | 1 | ReLU | 3 × 3 | 0 | |||

| max_pool_3 × 3 | S | 1 | 1 | 1 | ReLU | 3 × 3 | 0 | |||

| Sep_3 × 3 | S | 1 | 1 | ReLU | 3 × 3 | |||||

| Sep_5 × 5 | S | 2 | 1 | ReLU | 5 × 5 | |||||

| Sep_7 × 7 | S | 3 | 1 | ReLU | 7 × 7 | |||||

| Dil_3 × 3 | S | 2 | 2 | ReLU | 3 × 3 | |||||

| Dil_5 × 5 | S | 4 | 2 | ReLU | 5 × 5 | |||||

| C_3 × 3_g | S | 1 | g | 1 | CReLU | 3 × 3 | ||||

| Fire | S | 1 | 1 | 1 | ReLU | 3 × 3 | ||||

| Sep_res_3 × 3_g | S | 1 | g | 1 | ReLU | 3 × 3 | ||||

| Sep_res_5 × 5_g | S | 1 | g | 1 | ReLU | 5 × 5 | ||||

| Dil_res_3 × 3_g | S | 2 | g | 2 | ReLU | 3 × 3 | ||||

| Dil_res_5 × 5_g | S | 1 | g | 1 | ReLU | 5 × 5 |

Appendix B. More Detailed Experimental Results

Appendix C. Different Sets of Operators in the Branches of Reconstructed CNN (SOP)

| SOP1 | ||||

| N_3 × 3 | N_3 × 3_2 | N_3 × 3_4 | N_3 × 3_8 | Sep_3 × 3 |

| C_3 × 3 | C_3 × 3_2 | C_3 × 3_4 | Dil_5 × 5 | Sep_5 × 5 |

| SOP2 | ||||

| N_3 × 3 | N_3 × 3_2 | N_3 × 3_4 | Sep_5 × 5 | Dil_3 × 3 |

| N_3 × 3_8 | N_3 × 3_16 | Sep_3 × 3 | Sep_7x7 | Dil_5 × 5 |

| SOP3 | ||||

| N_3 × 3 | N_3 × 3_2 | N_3 × 3_4 | Sep_res_3 × 3 | Sep_3 × 3 |

| Fire | C_3 × 3 | C_3 × 3_2 | Sep_res_5 × 5 | Sep_5 × 5 |

| SOP4 | ||||

| Fire | C_3 × 3_2 | Sep_res_3 × 3_4 | Sep_res_5 × 5_4 | |

| C_3 × 3_4 | N_3 × 3 | Dil_res_3 × 3_4 | Dil_res_5 × 5_4 | |

| SOP5 | ||||

| N_3 × 3 | N_3 × 3_2 | N_3 × 3_4 | Sep_res_3 × 3 | Dil_res_3 × 3 |

| Fire | C_3 × 3 | C_3 × 3_2 | Sep_res_5 × 5 | Dil_res_5 × 5 |

| SOP6 | ||||

| Fire | C_3 × 3_16 | Sep_res_5 × 5_8 | Sep_5 × 5_8 | |

| C_3 × 3_8 | N_3 × 3_16 | Dil_res_5 × 5_8 | Dil_5 × 5_8 | |

| SOP7 | ||||

| N_3 × 3 | N_3 × 3_2 | N_3 × 3_4 | Dil_res_5 × 5 | |

| Fire | C_3 × 3 | C_3 × 3_2 | Sep_res_5 × 5 | |

| SOP8 | ||||

| N_3 × 3 | N_3 × 3_2 | N_3 × 3_4 | Dil_res_5 × 5_8 | |

| Fire | C_3 × 3_4 | C_3 × 3_8 | Sep_res_5 × 5_8 | |

| SOP9 | ||||

| N_3 × 3 | N_3 × 3_2 | N_3 × 3_4 | Dil_3 × 3 | |

| Fire | Dil_5 × 5 | Sep_3 × 3 | Sep_5 × 5 | |

| SOP10 | ||||

| N_1 × 1 | N_1 × 1_2 | N_1 × 1_4 | N_1 × 1_8 | |

| C_3 × 3_4 | N_3 × 3_4 | N_3 × 3_8 | Fire | |

References

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. Squeezenet: Alexnet-level accuracy with 50x fewer parameters and <0.5 mb model size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520. [Google Scholar]

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 1314–1324. [Google Scholar]

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131. [Google Scholar]

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856. [Google Scholar]

- Wang, P.; Gao, C.; Wang, Y.; Li, H.; Gao, Y. Mobilecount: An efficient encoder-decoder framework for real-time crowd counting. Neurocomputing 2020, 407, 292–299. [Google Scholar] [CrossRef]

- Ntakolia, C.; Diamantis, D.E.; Papandrianos, N.; Moustakidis, S.; Papageorgiou, E.I. A lightweight convolutional neural network architecture applied for bone metastasis classification in nuclear medicine: A case study on prostate cancer patients. Healthcare 2020, 8, 493. [Google Scholar] [CrossRef]

- Khaki, S.; Safaei, N.; Pham, H.; Wang, L. Wheatnet: A lightweight convolutional neural network for high-throughput image-based wheat head detection and counting. arXiv 2021, arXiv:2103.09408. [Google Scholar]

- Liu, H.; Simonyan, K.; Yang, Y. Darts: Differentiable architecture search. arXiv 2018, arXiv:1806.09055. [Google Scholar]

- Xie, S.; Zheng, H.; Liu, C.; Lin, L. Snas: Stochastic neural architecture search. arXiv 2018, arXiv:1812.09926. [Google Scholar]

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710. [Google Scholar]

- Dai, X.; Yin, H.; Jha, N.K. Nest: A neural network synthesis tool based on a grow-and-prune paradigm. IEEE Trans. Comput. 2019, 68, 1487–1497. [Google Scholar] [CrossRef] [Green Version]

- Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Regularized evolution for image classifier architecture search. Proc. AAAI Conf. Artif. 2019, 33, 4780–4789. [Google Scholar] [CrossRef] [Green Version]

- Ding, X.; Ding, G.; Han, J.; Tang, S. Auto-balanced filter pruning for efficient convolutional neural networks. AAAI 2018, 3, 7. [Google Scholar]

- He, Y.; Kang, G.; Dong, X.; Fu, Y.; Yang, Y. Soft filter pruning for accelerating deep convolutional neural networks. arXiv 2018, arXiv:1808.06866. [Google Scholar]

- He, Y.; Zhang, X.; Sun, J. Channel pruning for accelerating very deep neural networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1389–1397. [Google Scholar]

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning filters for efficient convnets. arXiv 2016, arXiv:1608.08710. [Google Scholar]

- Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv 2015, arXiv:1510.00149. [Google Scholar]

- Guo, Y.; Yao, A.; Chen, Y. Dynamic network surgery for efficient dnns. arXiv 2016, arXiv:1608.04493. [Google Scholar]

- Hu, H.; Peng, R.; Tai, Y.W.; Tang, C.K. Network trimming: A data-driven neuron pruning approach towards efficient deep architectures. arXiv 2016, arXiv:1607.03250. [Google Scholar]

- Luo, J.H.; Wu, J.; Lin, W. Thinet: A filter level pruning method for deep neural network compression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5058–5066. [Google Scholar]

- Singh, P.; Kadi, V.S.; Verma, N.; Namboodiri, V.P. Stability based filter pruning for accelerating deep cnns. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1166–1174. [Google Scholar]

- He, Y.; Liu, P.; Wang, Z.; Hu, Z.; Yang, Y. Filter pruning via geometric median for deep convolutional neural networks acceleration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4340–4349. [Google Scholar]

- Wen, W.; Wu, C.; Wang, Y.; Chen, Y.; Li, H. Learning structured sparsity in deep neural networks. arXiv 2016, arXiv:1608.03665. [Google Scholar]

- Yoon, J.; Hwang, S.J. Combined group and exclusive sparsity for deep neural networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 3958–3966. [Google Scholar]

- Zhang, T.; Ye, S.; Zhang, K.; Tang, J.; Wen, W.; Fardad, M.; Wang, Y. A systematic dnn weight pruning framework using alternating direction method of multipliers. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 184–199. [Google Scholar]

- Ma, Y.; Chen, R.; Li, W.; Shang, F.; Yu, W.; Cho, M.; Yu, B. A unified approximation framework for compressing and accelerating deep neural networks. In Proceedings of the IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; pp. 376–383. [Google Scholar]

- Ye, S.; Feng, X.; Zhang, T.; Ma, X.; Lin, S.; Li, Z.; Xu, K.; Wen, W.; Liu, S.; Tang, J.; et al. Progressive dnn compression: A key to achieve ultra-high weight pruning and quantization rates using admm. arXiv 2019, arXiv:1903.09769. [Google Scholar]

- Gusak, J.; Kholiavchenko, M.; Ponomarev, E.; Markeeva, L.; Blagoveschensky, P.; Cichocki, A.; Oseledets, I. Automated multi-stage compression of neural networks. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019. [Google Scholar]

- Swaminathan, S.; Garg, D.; Kannan, R.; Andres, F. Sparse low rank factorization for deep neural network compression. Neurocomputing 2020, 398, 185–196. [Google Scholar] [CrossRef]

- Ruan, X.; Liu, Y.; Yuan, C.; Li, B.; Hu, W.; Li, Y.; Maybank, S. Edp: An efficient decomposition and pruning scheme for convolutional neural network compression. IEEE Trans. Neural Netw. Learn. Syst. 2020. [Google Scholar] [CrossRef]

- He, Y.; Lin, J.; Liu, Z.; Wang, H.; Li, L.J.; Han, S. Amc: Automl for model compression and acceleration on mobile devices. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 784–800. [Google Scholar]

- Zhu, M.; Gupta, S. To prune, or not to prune: Exploring the efficacy of pruning for model compression. arXiv 2017, arXiv:1710.01878. [Google Scholar]

- Liu, N.; Ma, X.; Xu, Z.; Wang, Y.; Tang, J.; Ye, J. Autocompress: An automatic dnn structured pruning framework for ultra-high compression rates. AAAI 2020, 34, 4876–4883. [Google Scholar] [CrossRef]

- Boyd, S.; Parikh, N.; Chu, E. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers; Now Publishers Inc.: Delft, The Netherlands, 2011. [Google Scholar]

- Shang, W.; Sohn, K.; Almeida, D.; Lee, H. Understanding and improving convolutional neural networks via concatenated rectified linear units. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2217–2225. [Google Scholar]

- Bhardwaj, K.; Suda, N.; Marculescu, R. Dream distillation: A data-independent model compression framework. arXiv 2019, arXiv:1905.07072. [Google Scholar]

- Koratana, A.; Kang, D.; Bailis, P.; Zaharia, M. Lit: Learned intermediate representation training for model compression. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 3509–3518. [Google Scholar]

- Wang, J.; Bao, W.; Sun, L.; Zhu, X.; Cao, B.; Philip, S.Y. Private model compression via knowledge distillation. Proc. AAAI Conf. Artif. 2019, 33, 1190–1197. [Google Scholar] [CrossRef]

- Wang, Z.; Lin, S.; Xie, J.; Lin, Y. Pruning blocks for cnn compression and acceleration via online ensemble distillation. IEEE Access 2019, 7, 175703–175716. [Google Scholar] [CrossRef]

- Wu, M.C.; Chiu, C.T. Multi-teacher knowledge distillation for compressed video action recognition based on deep learning. J. Syst. Archit. 2020, 103, 101695. [Google Scholar] [CrossRef]

- Prakosa, S.W.; Leu, J.S.; Chen, Z.H. Improving the accuracy of pruned network using knowledge distillation. Pattern Anal. Appl. 2020, 1–12. [Google Scholar] [CrossRef]

- Ahmed, W.; Zunino, A.; Morerio, P.; Murino, V. Compact cnn structure learning by knowledge distillation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Kim, J.; Park, C.; Jung, H.J.; Choe, Y. Plug-in, trainable gate for streamlining arbitrary neural networks. AAAI 2020, 34, 4452–4459. [Google Scholar] [CrossRef]

- Qin, H.; Gong, R.; Liu, X.; Shen, M.; Wei, Z.; Yu, F.; Song, J. Forward and backward information retention for accurate binary neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2250–2259. [Google Scholar]

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; University of Toronto: Toronto, ON, Canada, 2009. [Google Scholar]

- Chrabaszcz, P.; Loshchilov, I.; Hutter, F. A downsampled variant of imagenet as an alternative to the cifar datasets. arXiv 2017, arXiv:1707.08819. [Google Scholar]

- Dong, X.; Yang, Y. Nas-bench-201: Extending the scope of reproducible neural architecture search. arXiv 2020, arXiv:2001.00326. [Google Scholar]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Dutchess County, NY, USA, 2019; pp. 8024–8035. [Google Scholar]

- Duggal, R.; Xiao, C.; Vuduc, R.; Sun, J. Cup: Cluster pruning for compressing deep neural networks. arXiv 2019, arXiv:1911.08630. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar]

| ResNet20 | ||||

| Conv | conv1-6 | conv7-12 | conv13-18 | |

| Channel | 16 | 32 | 64 | |

| ResNet56 | ||||

| Conv | conv1-18 | conv19-36 | conv37-54 | |

| Channel | 16 | 32 | 64 | |

| VGG16 | ||||

| Conv | conv1-2 | conv3-4 | conv5-7 | conv8-13 |

| Channel | 64 | 128 | 256 | 512 |

| ResNet18 | ||||

| Conv | conv1-4 | conv5-8 | conv9-12 | conv13-16 |

| Channel | 64 | 128 | 256 | 512 |

| Model | Method | SOP | PCR | FCR | Paras | TOP1 (%) | MA | |

|---|---|---|---|---|---|---|---|---|

| ResNet20 | He. [45] | - | - | 0 | 0 | 0.27 M | 91.25 | - |

| Ours | SOP2 | 0 | 2.87 | 2.56 | 0.11 M | 91.6 | C.1 | |

| SOP1 | 4.91 | 4.19 | 0.06 M | 90.35 | C.2 | |||

| SOP2 | 6.48 | 5.61 | 0.047 M | 90.15 | C.3 | |||

| ResNet56 | He. [45] | - | - | 0 | 0 | 0.85 M | 93.03 | - |

| Li. [18] | - | - | 1.16 | 1.38 | - | 93.06 | - | |

| Dug. [52] | - | - | - | 2.12 | - | 92.72 | - | |

| Ours | SOP3 | 0 | 3.51 | 3.09 | 0.24 M | 93.75 | C.7 | |

| SOP1 | 0 | 4.4 | 3.37 | 0.19 M | 92.5 | C.6 | ||

| SOP1 | 5.25 | 5.96 | 0.17 M | 91.96 | C.5 | |||

| SOP2 | 6.59 | 7.94 | 0.14 M | 91.22 | C.4 | |||

| VGG16 | Simon. [53] | - | - | 0 | 0 | 16.3 M | 93.25 | - |

| Li [18] | - | - | 2.78 | 1.52 | - | 93.4 | - | |

| Dug. [52] | - | - | 17.12 | 3.15 | - | 92.85 | - | |

| Ours | SOP5 | 0 | 2.82 | 3.71 | 5.79 M | 94.65 | C.8 | |

| SOP4 | 0 | 3.85 | 2.61 | 4.23 M | 93.95 | C.9 | ||

| SOP6 | 15.1 | 15.6 | 1.08 M | 92.35 | C.10 | |||

| ResNet18 | He. [45] | - | 0 | 0 | 11 M | 75.05 | - | |

| Ours | SOP7 | 0 | 2.39 | 2.23 | 4.61 M | 74.5 | C.11 | |

| SOP7 | 2.44 | 2.31 | 4.5 M | 74.2 | C.12 | |||

| SOP8 | 0 | 5.27 | 2.97 | 2.08 M | 74.85 | C.13 | ||

| SOP8 | 4.66 | 3.98 | 2.36 M | 73.6 | C.14 |

| Layers | Output Size | Stride | Densenet121 |

|---|---|---|---|

| conv | 16 × 16 | 1 | 3 × 3 conv |

| Dense Block (1) | 16 × 16 | 1 | [3 × 3 conv] × 6 |

| Transition Layer (1) | 16 × 16 | 1 | 1 × 1 conv 2 × 2 average pool |

| Dense Block (2) | 16 × 16 | 1 | [3 × 3 conv] × 12 |

| Transition Layer (2) | 16 × 16 | 1 | 1 × 1 conv 2 × 2 average pool |

| Dense Block (3) | 16 × 16 | 1 | [3 × 3 conv] × 24 |

| Transition Layer (3) | 8 × 8 | 2 | 1 × 1 conv 2 × 2 average pool |

| Dense Block (4) | 8 × 8 | 1 | [3 × 3 conv] × 16 |

| Classification Layer | 1 × 1 | - | 8 × 8 average pool 120D fully-connected |

| Layers | Output Size | Stride | Channels | Expansion Ratio | MobilenetV2 |

|---|---|---|---|---|---|

| conv | 16 × 16 | 1 | 32 | - | 3 × 3 conv |

| Block (1) | 16 × 16 | 1 | 16 | 1 | bottleneck × 1 |

| Block (2) | 16 × 16 | 1 | 24 | 6 | bottleneck × 2 |

| Block (3) | 16 × 16 | 1 | 32 | 6 | bottleneck × 3 |

| Block (4) | 16 × 16 | 1 | 64 | 6 | bottleneck × 4 |

| Block (5) | 16 × 16 | 1 | 96 | 6 | bottleneck × 3 |

| Block (6) | 8 × 8 | 2 | 160 | 6 | bottleneck × 3 |

| Block (7) | 8 × 8 | 1 | 320 | 6 | bottleneck × 1 |

| conv | 8 × 8 | 1 | 1280 | - | 1 × 1 conv |

| Classification Layer | 1 × 1 | - | - | - | 8 × 8 average pool 120Dfully-connected |

| Model | Method | SOP | PCR | Paras | TOP1 (%) | MA | |

|---|---|---|---|---|---|---|---|

| DenseNet121 | Huang. [54] | - | - | 0 | 9.84 M | 48.83 | - |

| Ours | SOP9 | 0 | 3.42 | 2.88 M | 48.6 | C.15 | |

| SOP9 | 5.13 | 1.92 M | 48.73 | C.16 | |||

| SOP9 | 5.50 | 1.79 M | 47.92 | C.17 | |||

| SOP9 | 5.96 | 1.65 M | 47.9 | C.18 | |||

| SOP9 | 6.0 | 1.64 M | 47.83 | C.19 | |||

| SOP9 | 6.09 | 1.617 M | 47.67 | C.20 | |||

| SOP9 | 6.31 | 1.56 M | 47.5 | C.21 | |||

| SOP9 | 6.47 | 1.52 M | 47.2 | C.22 | |||

| MobileNetV2 | sandler. [3] | - | - | 0 | 2.21 M | 49.2 | - |

| Ours | SOP10 | 0 | 0.45 | 4.89 M | 49.3 | C.23 | |

| SOP10 | 0.71 | 3.12 M | 49.4 | C.24 | |||

| SOP10 | 1.48 | 1.49 M | 49.87 | C.25 | |||

| SOP10 | 2.48 | 0.89 M | 49.06 | C.26 | |||

| SOP10 | 2.91 | 0.76 M | 48.95 | C.27 | |||

| SOP10 | 2.83 | 0.78 M | 48.96 | C.28 | |||

| SOP10 | 3.05 | 0.725 M | 48.75 | C.29 | |||

| SOP10 | 3.06 | 0.723 M | 48.68 | C.30 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Diao, H.; Hao, Y.; Xu, S.; Li, G. Implementation of Lightweight Convolutional Neural Networks via Layer-Wise Differentiable Compression. Sensors 2021, 21, 3464. https://doi.org/10.3390/s21103464

Diao H, Hao Y, Xu S, Li G. Implementation of Lightweight Convolutional Neural Networks via Layer-Wise Differentiable Compression. Sensors. 2021; 21(10):3464. https://doi.org/10.3390/s21103464

Chicago/Turabian StyleDiao, Huabin, Yuexing Hao, Shaoyun Xu, and Gongyan Li. 2021. "Implementation of Lightweight Convolutional Neural Networks via Layer-Wise Differentiable Compression" Sensors 21, no. 10: 3464. https://doi.org/10.3390/s21103464