Video Slice: Image Compression and Transmission for Agricultural Systems

Abstract

1. Introduction

2. Case Study

3. Related Work

3.1. The Importance of Remote Monitoring System and Transmitted Data Reduction

3.2. Remote Monitoring System

3.3. Image Data Reduction

4. Proposed Method

4.1. Basic Concept

4.2. Prototype

5. Evaluation

5.1. Condition of Communication and Image Files

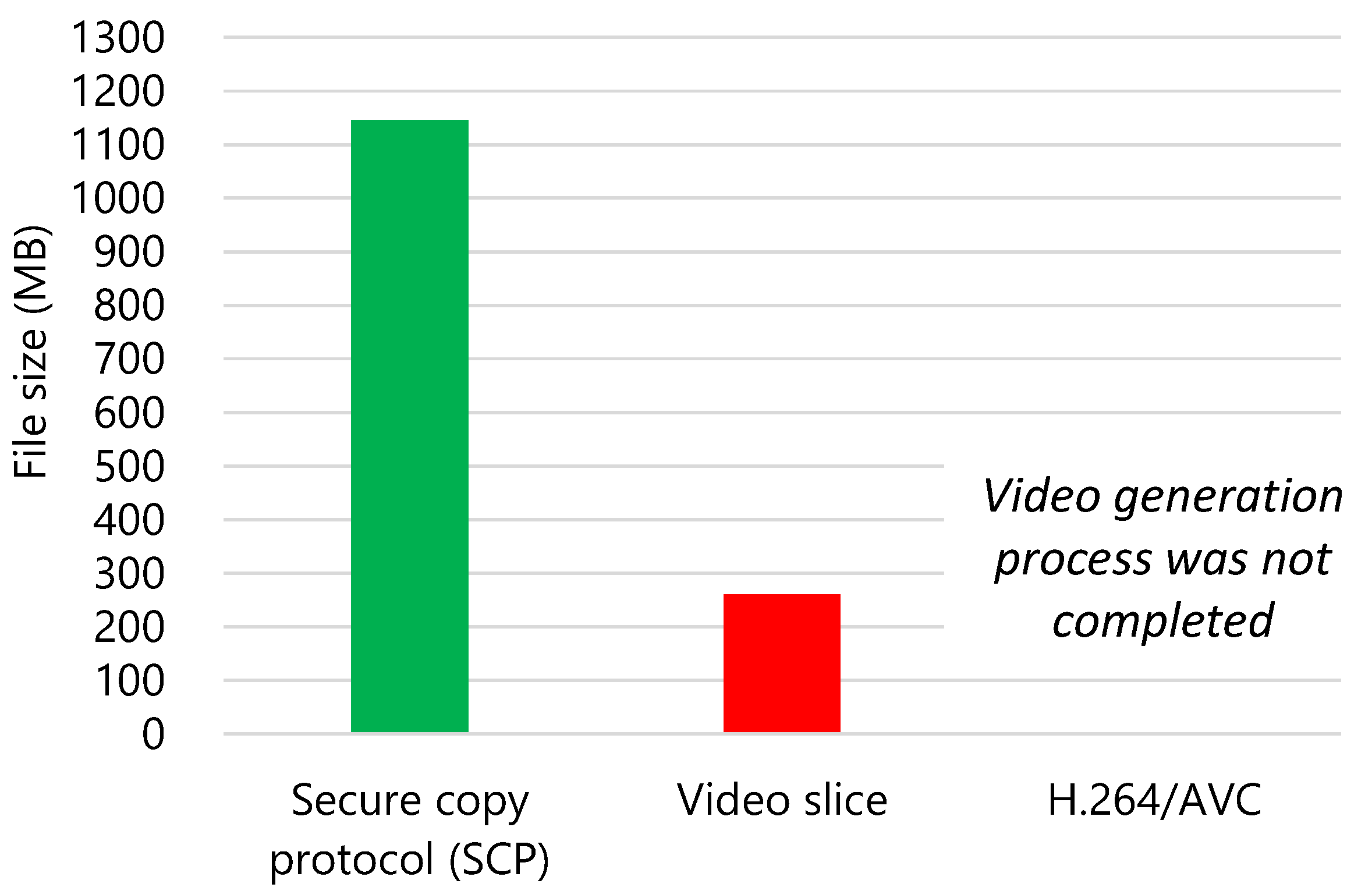

5.2. Reduced Data Amount

5.3. Transmission Processing Time

5.4. Image Degradation

5.5. Worst Case Evaluation

6. Discussion

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| Requirement | Transmitting Only Meaningful Data | Transmitting Only When a Significant Change Appears | Transmitting Difference Data | Compressing One Image | Proposal |
|---|---|---|---|---|---|
| Adaptivity for agriculture | Yes | No | Yes | Yes | Yes |
| General versatility | No | Yes | Yes | Yes | Yes |
| Robustness to loss of data | Yes | Yes | No | Yes | Yes |
| Ease of implementation | Yes | Yes | Yes | No | Yes |

| Item | Raspberry Pi Zero W | Raspberry Pi 4 Model B |
|---|---|---|
| Graphics processing unit (GPU) | VideoCore IV 250 MHz | VideoCore IV 500 MHz |
| Central processing unit (CPU) | ARM1176JZF-S (ARMv6), 1 GHz, 32-bit, single core | ARM Cortex-A72 (ARMv8), 1.5 GHz, 64-bit, quad core |
| Random access memory (RAM) | 1 GB | 8 GB |
| Storage (microSD card) | 128 GB | 128 GB |
| Operating system | Raspberry Pi OS (32-bit), release 2 December 2020, kernel version 5.4, no graphical user interface (GUI) | Raspberry Pi OS (32-bit), release 2 December 2020, kernel version 5.4, with GUI |
| Python 3 version | 3.7.3 | 3.7.3 |
| OpenCV version | 4.4.0 | 4.4.0 |

| Peak Signal-to-Noise Ratio (PSNR) | Structural Similarity (SSIM) | Subjective Appraisal |
|---|---|---|
| Over 40 dB | Over 0.98 | The original and compressed images are indistinguishable. |
| Over 30 dB, up to 40 dB | Over 0.90, up to 0.98 | The degradation is noticeable when the image is enlarged. |
| 30 dB or lower | 0.90 or lower | The image is obviously degraded. |
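
The PSNR bands in the table above can be checked with a few lines of Python. The following is a minimal sketch, not the evaluation code used in the paper: it assumes 8-bit pixel values (peak value 255), uses the standard definition PSNR = 10 log10(MAX² / MSE), and works on flat sequences of pixel values so that it needs only the standard library. The function names `psnr` and `appraise_psnr` are hypothetical, and SSIM is not covered here.

```python
import math
from typing import Sequence


def psnr(original: Sequence[int], degraded: Sequence[int], max_value: int = 255) -> float:
    """Return the peak signal-to-noise ratio in dB for two equal-length pixel sequences."""
    if len(original) != len(degraded) or len(original) == 0:
        raise ValueError("both images must be non-empty and have the same number of pixels")
    # Mean squared error over all pixel pairs.
    mse = sum((a - b) ** 2 for a, b in zip(original, degraded)) / len(original)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


def appraise_psnr(value_db: float) -> str:
    """Map a PSNR value to the subjective band listed in the table above."""
    if value_db > 40.0:
        return "indistinguishable"
    if value_db > 30.0:
        return "noticeable when enlarged"
    return "obviously degraded"


# Synthetic example (assumed data): every pixel is off by 5 grey levels, so MSE = 25.
reference = [100, 100, 100, 100]
compressed = [105, 95, 105, 95]
value = psnr(reference, compressed)
print(f"{value:.2f} dB -> {appraise_psnr(value)}")
```

For real image files, the pixel sequences would come from a decoded image (for example, a flattened array loaded with an image library); that loading step is left out to keep the sketch self-contained.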

| Evaluation Index | Average | Minimum |
|---|---|---|
| PSNR (dB) | 36.11 | 34.20 |
| SSIM | 0.97 | 0.93 |

| Evaluation Index | Result |
|---|---|
| Data transmitted and received with the secure copy protocol (SCP) | 1156.7 MB |
| Data transmitted and received with video slice | 217.9 MB |
| Processing time for one image transmission (average over all images) | 4.1 s |
| Processing time for one image transmission (average for the worst-case image) | 4.0 s |
| Worst PSNR | 34.20 dB |
| Worst SSIM | 0.93 |
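
The two data amounts in the table above imply a relative saving that is easy to work out. The short sketch below does only that arithmetic: the two constants are copied from the table, while the helper name `reduction_percent` is a hypothetical choice for illustration.

```python
# Data amounts taken from the table above (in MB).
SCP_MB = 1156.7          # secure copy protocol baseline
VIDEO_SLICE_MB = 217.9   # video slice


def reduction_percent(baseline_mb: float, reduced_mb: float) -> float:
    """Return how much smaller reduced_mb is than baseline_mb, in percent."""
    if baseline_mb <= 0:
        raise ValueError("baseline must be positive")
    return (1.0 - reduced_mb / baseline_mb) * 100.0


saving = reduction_percent(SCP_MB, VIDEO_SLICE_MB)
factor = SCP_MB / VIDEO_SLICE_MB
print(f"video slice moves {saving:.1f}% less data, about {factor:.1f}x smaller than SCP")
```

Under these figures, video slice transfers roughly one fifth of the data that the secure copy protocol does for the same image set.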

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kawai, T. Video Slice: Image Compression and Transmission for Agricultural Systems. Sensors 2021, 21, 3698. https://doi.org/10.3390/s21113698