Abstract

In a 3D scanning system that uses a camera and a line laser, it is critical to obtain the exact geometrical relationship between the camera and the laser for precise 3D reconstruction. With existing depth cameras, it is difficult to scan a large object or multiple objects in a wide area because only a limited area can be scanned at a time. We developed a 3D scanning system with a rotating line laser and a wide-angle camera for large-area reconstruction. To obtain 3D information of an object using a rotating line laser, we must know the plane of the line laser with respect to the camera coordinates at every rotation angle. This is done by estimating the rotation axis during calibration and then rotating the laser by a predefined angle. Therefore, accurate calibration is crucial for 3D reconstruction. In this study, we propose a calibration method to estimate the geometrical relationship between the rotation axis of the line laser and the camera. Using the proposed method, we could accurately estimate the center of the cone or cylinder shape generated while the line laser was rotating. A simulation study was conducted to evaluate the accuracy of the calibration. In the experiment, we compared the results of 3D reconstruction using our system and a commercial depth camera. The results show that the precision of our system is approximately 65% higher for plane reconstruction, and the scanning quality is also much better than that of the depth camera.

1. Introduction

Today, many application fields require technology for the 3D modeling of real-world objects, such as design and construction in augmented reality (AR), quality verification, restoration of lost CAD data, heritage scanning, surface deformation tracking, and 3D reconstruction for object recognition [1,2,3,4,5,6,7]. Among non-contact scanning methods, the method using a camera and a line laser is widely used because the scanning speed is relatively fast and the object is not damaged [8,9]. There are two methods for measuring distance with a laser: the laser triangulation (LT) method and the time-of-flight (ToF) method. The LT method is slower but more precise than the ToF method [10]. However, both methods have relatively small measurable zones: they scan a point, a line, or at most a narrow region at a time. A laser range finder (LRF) was developed to overcome this limitation; it extends the scan area by rotating the light source [11]. The scan area can be further increased by rotating the LRF itself. Recently, various methods for estimating the rotation axis of the LRF have been studied to obtain a 3D depth map of the environment [12,13,14,15,16,17,18,19,20,21,22].

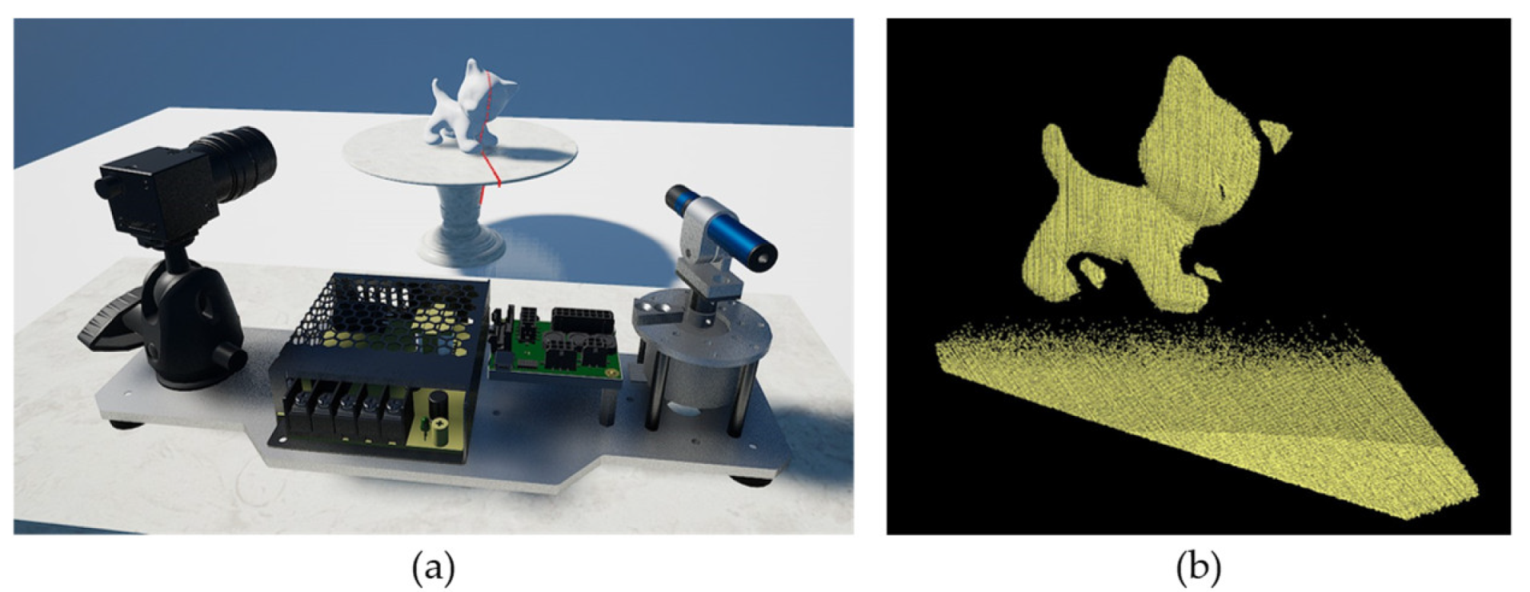

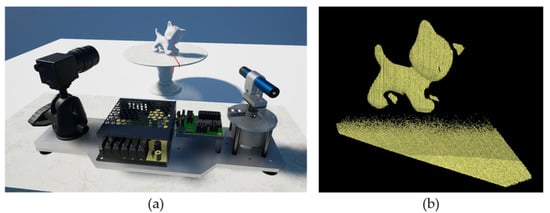

In this study, we propose an LT system with a rotating line laser and a wide-angle camera, as shown in Figure 1. We rotate only the line laser, not the entire system, to scan a wide area. Rotating the laser is easy because the line laser is light, which keeps the system simple. The camera does not need to rotate because it already has a wide field of view with a proper lens (e.g., a fish-eye lens).

Figure 1.

Three-dimensional (3D) reconstruction using our system: (a) setup of the system; (b) reconstruction results.

With this setup, the first step is to precisely estimate the rotation axis of the line laser with respect to the camera coordinates, so that the depth of an object can be measured by triangulation [23]. For various reasons, such as fabrication errors of mechanical parts, misalignment of the line laser with the rotation axis, and the optical properties of the line laser, the actual rotation axis of the line laser differs somewhat from the ideal CAD design. Therefore, this axis must be measured precisely to obtain accurate 3D information with the LT method. In this paper, we propose a novel calibration method between the camera coordinate system and the rotating line laser coordinate system using a cone model. Finding the axis of rotation with respect to the camera coordinate system is the key to accurate calibration. We noticed that, because the alignment between the line laser and the rotation axis is inevitably imperfect, the plane of the line laser always sweeps out a cone as it rotates about the rotation axis of the motor. Therefore, we estimate the axis of rotation using the cone model, because the central axis of the cone coincides with the rotation axis of the motor. The cone model enables us to estimate the rotation axis accurately, which improves the calibration results. In summary, our contribution is a cone model for accurate calibration between the camera and the rotating line laser, resulting in an accurate, wide-area 3D scanning system.

The rest of Section 1 defines the notation and coordinate system, and Section 2 introduces the schematic diagram of the system and the calibration process. Section 3 describes the triangulation method based on line-plane intersection and explains the calibration between the fixed line laser and the camera and between the rotating line laser and the camera. In Section 4, the calibration accuracy is evaluated as a function of the angle between the rotation axis and the line laser plane, and the 3D reconstruction results of an RGB-D camera and our system are compared. The accuracy of the 3D depth estimation is then analyzed using a planar model. Finally, Section 5 presents the conclusions.

2. Overall System

2.1. Hardware

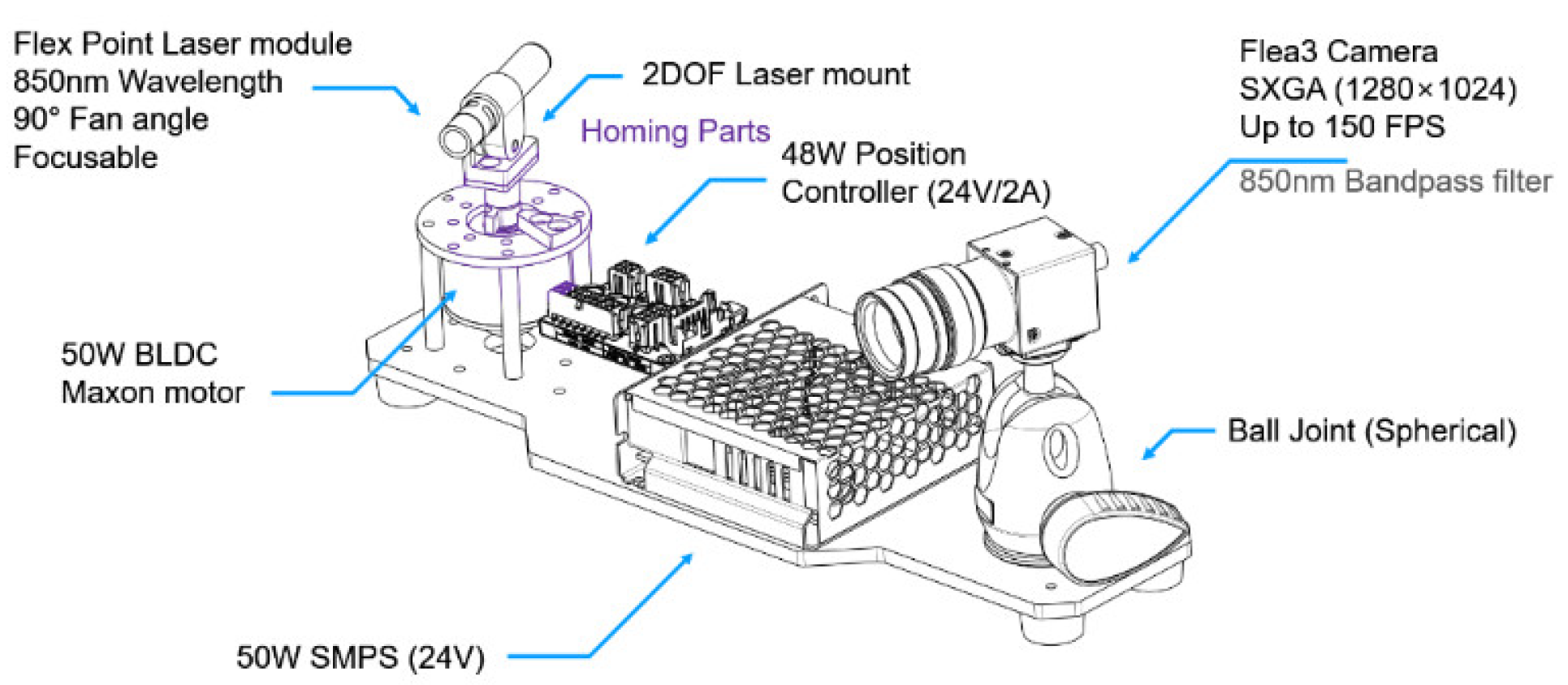

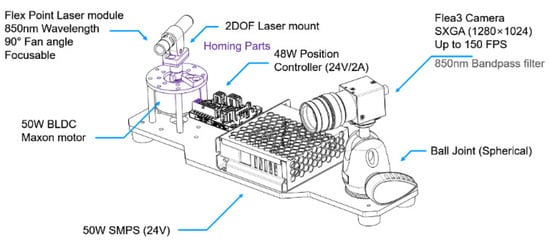

Our scanning system consists of a line laser fixed to a motor and a camera with an infrared (IR) filter, as shown in Figure 2. The line laser has a 90° fan angle at a wavelength of 850 nm (FLEXPOINT MVnano, Laser Components, Bedford, NH, USA). We designed a mount that holds the line laser and allows its orientation to be adjusted. The mount has two rotational degrees of freedom, about the pitch and roll axes; the effect of the roll angle is examined by simulation. Our brushless direct current (BLDC) motor has an incremental encoder with a resolution of approximately 0.08°. To obtain the absolute angle θ about the motor axis, we computed the angle from the incremental encoder counts relative to the home position. We used a camera (FLEA3, FLIR Systems, Wilsonville, OR, USA) with a wide-angle lens and an 850 nm infrared bandpass filter so that only IR light is detected. With this lens, the camera has vertical and horizontal fields of view of approximately 57° and 44°, respectively. The camera resolution is 1280 × 1080 pixels, and its acquisition speed is 150 frames per second.
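As an illustration of the encoder step, the sketch below converts an incremental count into the absolute angle θ. It assumes (this is not stated in the text) that the count is referenced to the home position and that one count equals the quoted ~0.08° resolution, i.e. 4500 counts per revolution; a real encoder may count edges differently, for example with quadrature decoding. `absolute_angle_deg` and `COUNTS_PER_REV` are hypothetical names.

```python
# Minimal sketch (assumptions, not from the paper): the encoder count is referenced to the
# home position and one count equals the ~0.08 deg resolution, i.e. 4500 counts/rev.
COUNTS_PER_REV = 4500                  # hypothetical: 360 / 0.08
DEG_PER_COUNT = 360.0 / COUNTS_PER_REV


def absolute_angle_deg(count: int, home_count: int = 0) -> float:
    """Absolute laser angle theta about the motor axis, in degrees within [0, 360)."""
    # Wrap in integer counts first so that whole turns map exactly to 0.
    steps = (count - home_count) % COUNTS_PER_REV
    return steps * DEG_PER_COUNT
```

Wrapping in integer counts before scaling avoids floating-point drift over many revolutions; negative counts (rotation past home in the other direction) also map into [0°, 360°).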

Figure 2.

Scanning system with a line laser fixed to a motor and infrared camera.

2.2. Process of Scanning System

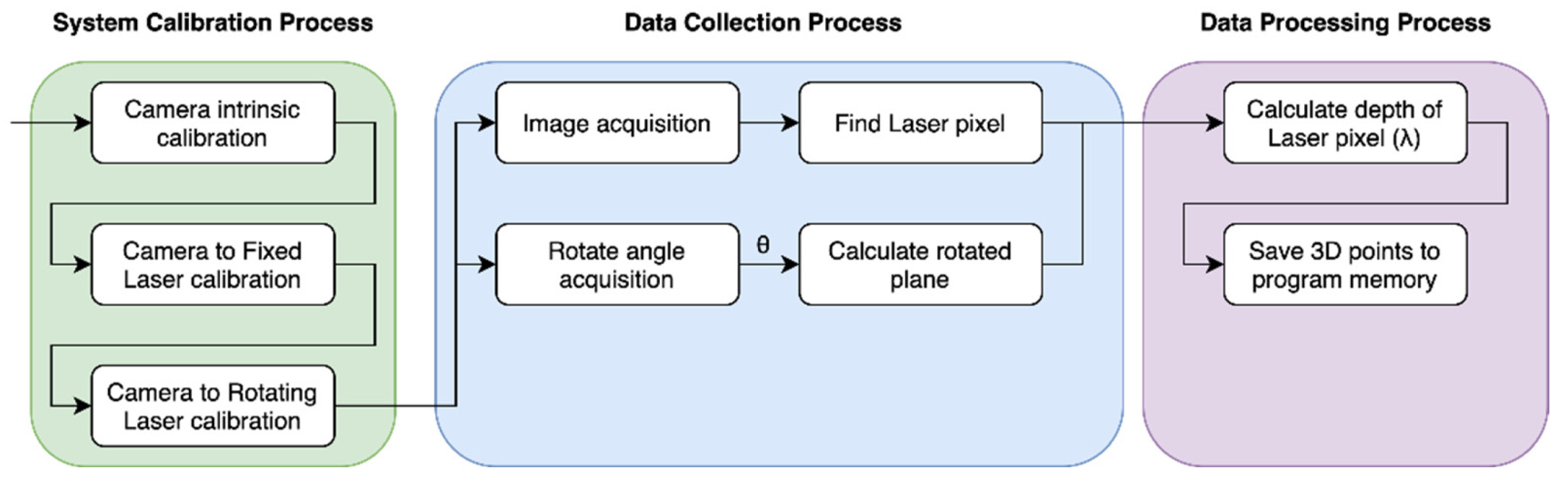

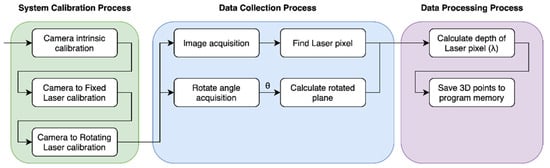

As shown in Figure 3, we executed three processes to acquire 3D scan data. Because our scanning system consists of three components, we determined the transformation relationship between the camera and other devices via calibration methods.

Figure 3.

Flowchart of our scanning process.

Because every point of the 3D scan data is acquired from the image, and the area illuminated by the laser is narrow relative to the field of view of the sensor, we rotated the laser with the motor and recorded a camera image at every motor angle. Using the calibration data and the motor angle, we calculated 3D points from each recorded image. The 3D points are kept in program memory and can be visualized or saved to the PC's storage.

2.3. Camera Calibration

In digital imaging, a camera is commonly described by the pinhole camera model, which projects any point in 3D space into image coordinates. The model combines the intrinsic parameters with the extrinsic parameters, which describe the rigid-body transform to a world coordinate system.

These parameters are estimated through camera calibration. Currently, most intrinsic and extrinsic parameters are estimated using a checkerboard printed on a flat plane [24]. If the intrinsic parameters of the camera are known, we can calculate where any point expressed in the camera reference frame is projected in the image coordinate system. Furthermore, given at least four 3D-to-2D point correspondences and the identified intrinsic parameters, we can estimate the rigid-body transform of the target with respect to the camera coordinate system.
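The projection and its inverse, back-projecting a pixel to a camera ray (which the line-plane triangulation needs), can be sketched as follows. This assumes an ideal pinhole with intrinsic matrix `K` and pixel coordinates that are already undistorted; a wide-angle or fish-eye lens needs a distortion model applied first. The function names and the `K` values in the test are hypothetical.

```python
import numpy as np


def project(K, R, t, X_w):
    """Project (N,3) world points to (N,2) pixels: s [u, v, 1]^T = K (R X + t)."""
    X_c = X_w @ R.T + t                # world -> camera coordinates
    uvw = X_c @ K.T                    # apply the intrinsic matrix
    return uvw[:, :2] / uvw[:, 2:3]    # perspective division by depth


def back_project(K, uv):
    """Unit direction vectors (N,3) of the camera rays through (N,2) pixels.

    Every ray starts at the camera origin, which is why the line point can be
    taken as the zero vector in the triangulation.
    """
    uv1 = np.column_stack([uv, np.ones(len(uv))])
    rays = uv1 @ np.linalg.inv(K).T
    return rays / np.linalg.norm(rays, axis=1, keepdims=True)
```

For example, with focal length 800 px and principal point (640, 540), the point (0.1, −0.05, 2.0) in camera coordinates projects to pixel (680, 520), and back-projecting that pixel returns the unit vector pointing at the same point.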

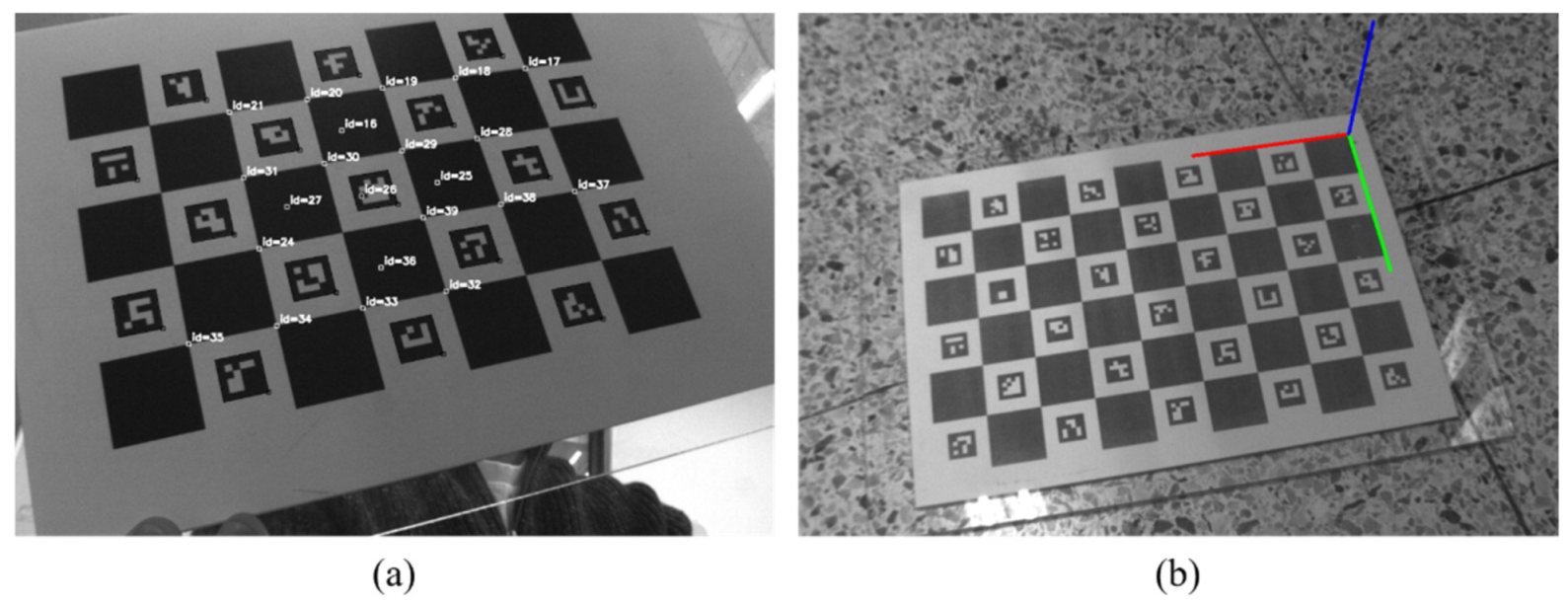

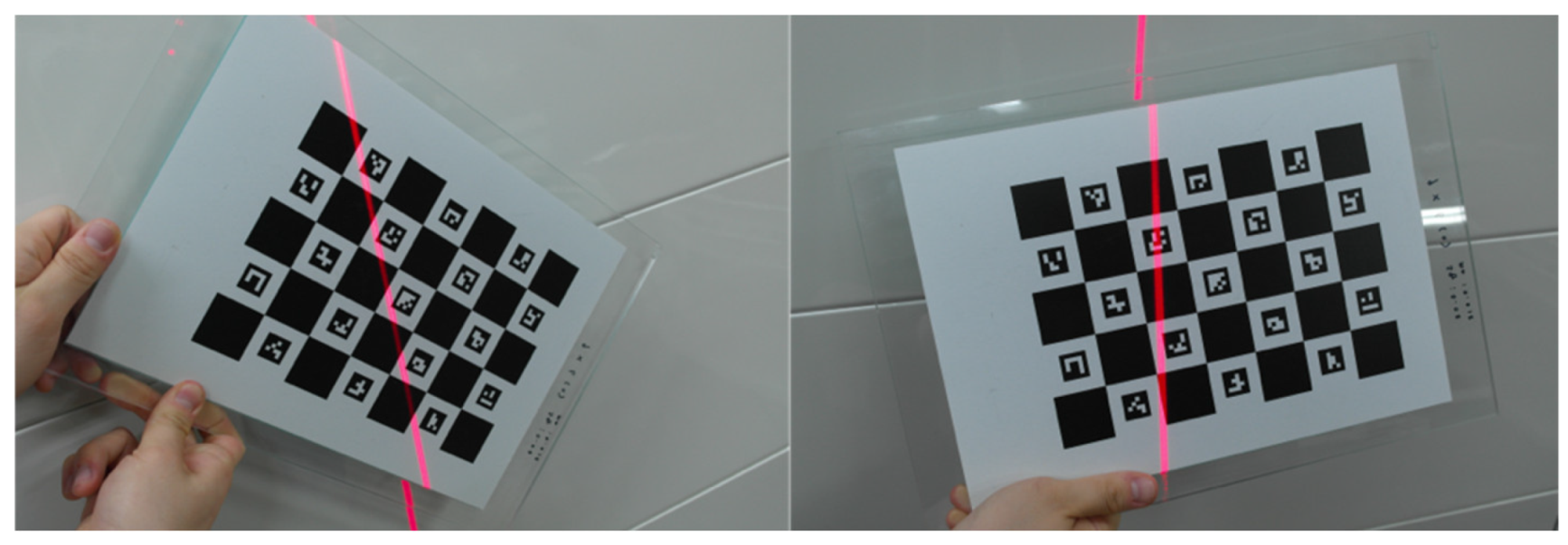

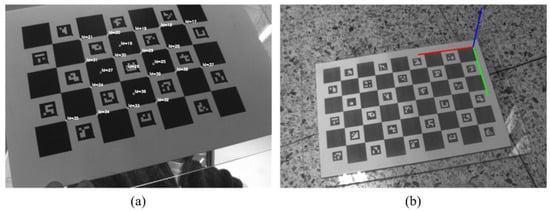

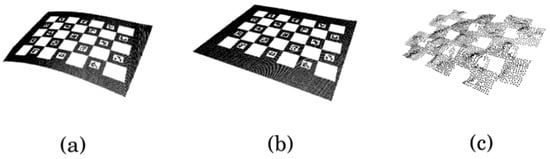

For the experiments, we used a ChArUco board (a checkerboard combined with ArUco AR markers) to estimate the rigid-body transform, as shown in Figure 4. The estimated board pose was also used to identify the laser plane in the camera reference coordinate system.

Figure 4.

(a) Intrinsic calibration and (b) Extrinsic calibration.

3. Solving Extrinsic Calibration

Our scanning system estimates 3D depth information by triangulating pixel points in the image coordinate system. Therefore, to calculate depth, it is necessary to know the camera rays in the camera coordinate system and the parameters of the line laser plane, such as its normal vector. Our scanning system uses a rotating line laser and a fixed camera, so the first step is a calibration that determines the geometrical relationship between the camera and the laser rotation axis. We performed this calibration using a cone model, because the multiple planes generated by rotating the line laser form a cone. The following subsections explain this process in detail. First, the triangulation method is introduced; then, the process of obtaining the relationship between the camera and the plane generated by the line laser is explained. After that, the calibration that finds the transformation between the rotation axis of the line laser and the camera is described. Finally, we describe how the depth map is obtained from the encoder value of the motor and the calibrated relationship between the line laser and the camera.

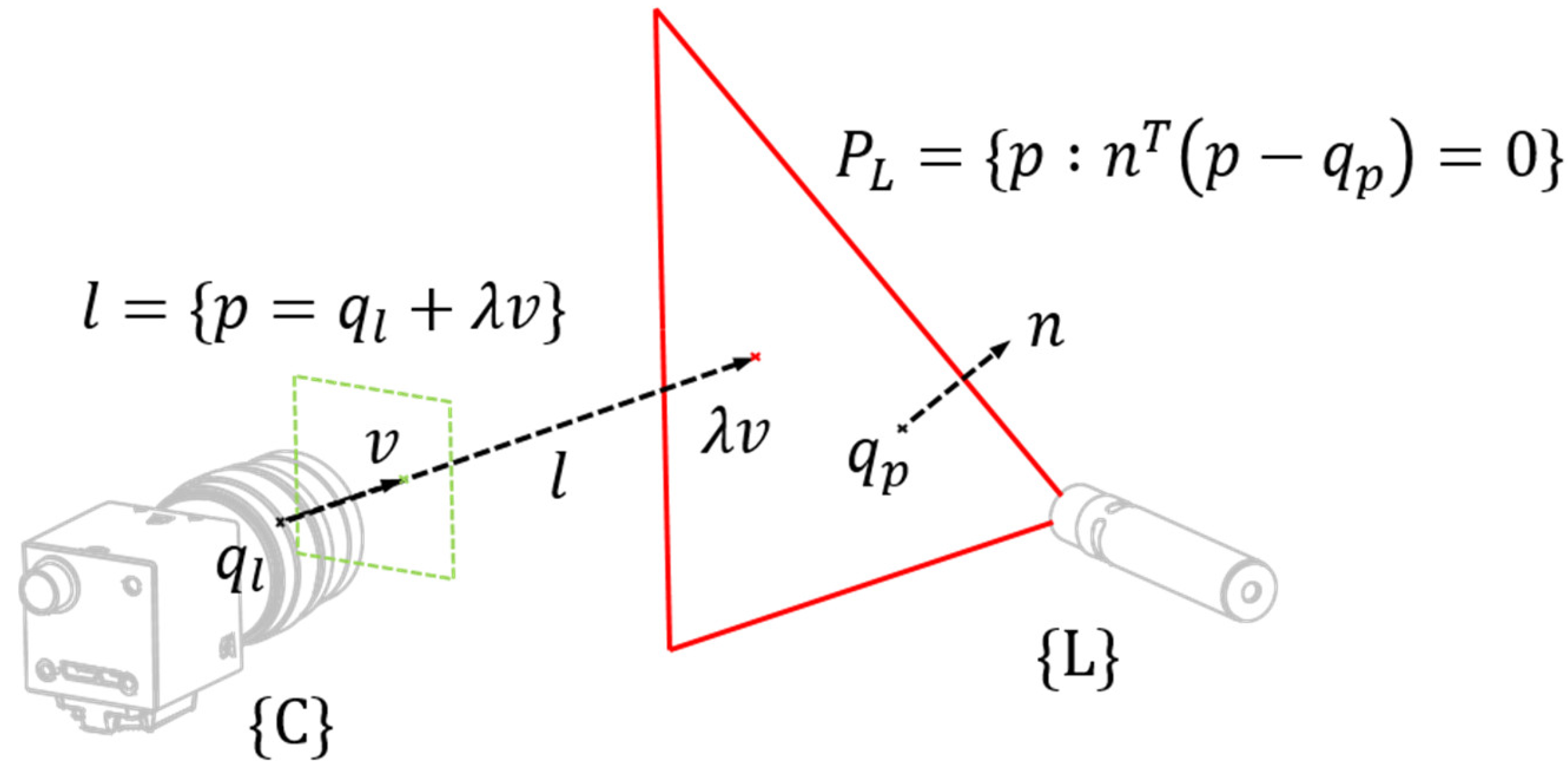

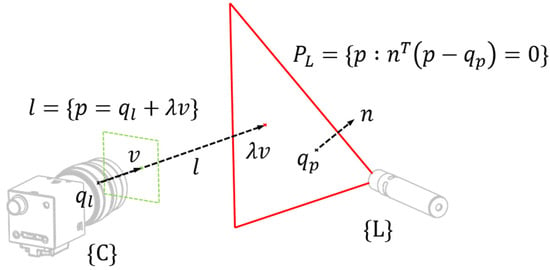

3.1. Triangulation

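Triangulation is the process of calculating the depth of a point observed in common by two sensors, given the transformation between them (here, a camera and a line laser). In 3D space, such a common point is obtained from a line-line or line-plane intersection. Here, the line is a camera ray and the plane is the line laser plane, so our system corresponds to a line-plane intersection, as shown in Figure 5. The intersection point $\mathbf{p}$ between a plane $\Pi$ and a line $L$ is given by

$$\mathbf{p} = \mathbf{l}_0 + \frac{(\mathbf{p}_0 - \mathbf{l}_0)\cdot\mathbf{n}}{\mathbf{l}\cdot\mathbf{n}}\,\mathbf{l} \tag{1}$$

where $\mathbf{n}$, $\mathbf{p}_0$, $\mathbf{l}_0$, and $\mathbf{l}$ are the normal vector of $\Pi$, a point on $\Pi$, a point on $L$, and the direction vector of $L$, respectively.

Figure 5.

Line-plane intersection.

$\mathbf{l}_0$ becomes the zero vector when the line passes through the origin of the camera coordinate system, which holds for every camera ray. Therefore, the depth $\lambda$ along the ray can be calculated as

$$\lambda = \frac{\mathbf{p}_0\cdot\mathbf{n}}{\mathbf{l}\cdot\mathbf{n}}, \qquad \mathbf{p} = \lambda\,\mathbf{l} \tag{2}$$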
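The ray-plane intersection for rays through the camera origin reduces to one dot-product ratio per ray, λ = (p0·n)/(l·n), with the 3D point λ·l. A minimal vectorized sketch (the name `triangulate` is hypothetical) that also flags rays parallel to the laser plane, where l·n = 0 and no intersection exists:

```python
import numpy as np


def triangulate(rays, n, p0, eps=1e-12):
    """Intersect camera rays through the origin with the laser plane n . (x - p0) = 0.

    rays: (N,3) direction vectors l; n: (3,) plane normal; p0: (3,) point on the plane.
    Returns (N,3) points lambda * l with lambda = (p0 . n) / (l . n);
    rows are NaN where a ray is (nearly) parallel to the plane.
    """
    denom = rays @ n
    with np.errstate(divide="ignore", invalid="ignore"):
        lam = np.where(np.abs(denom) > eps, (p0 @ n) / denom, np.nan)
    return lam[:, None] * rays
```

If the rays come from normalized image coordinates (third component equal to 1), λ is directly the z-depth; with unit-length rays it is the Euclidean distance from the camera center.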

3.2. Camera to Fixed Laser Calibration

To calculate the relationship between the fixed line laser and the camera, laser points on the plane acquired from multiple board poses are required, as indicated in Figure 6. Because the transformation from the camera coordinate system $\{C\}$ to the world coordinate system $\{W\}$ can be obtained from the AR marker, the depth of the laser points on the checkerboard can be estimated by triangulation, intersecting each camera ray with the known board plane. A point $\mathbf{x} = (x, y, z)^\top$ on the estimated laser plane satisfies the implicit equation

$$a x + b y + c z + d = 0 \tag{3}$$

Figure 6.

Calibration of the laser plane with respect to the camera coordinates.

The $N$ points collected from $k$ poses and the plane parameters are combined by matrix multiplication as follows:

$$\begin{bmatrix} x_1 & y_1 & z_1 & 1 \\ \vdots & \vdots & \vdots & \vdots \\ x_N & y_N & z_N & 1 \end{bmatrix} \begin{bmatrix} a \\ b \\ c \\ d \end{bmatrix} = \mathbf{0} \tag{4}$$

Because the set of points acquired through the camera inevitably contains noise, the left-hand side of Equation (4) is not exactly zero, so the plane parameters are found by the linear least-squares method. In this way, the equation of the plane generated by the line laser is estimated with respect to the camera coordinates. In addition, we normalized the normal vector $(a, b, c)$ of the plane to unit length to simplify the geometric analysis in the next section.
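A least-squares plane fit over the laser points stacked from all board poses can be sketched as below. This sketch uses the centroid-plus-SVD (total least-squares) variant, which minimizes perpendicular point-to-plane distances and returns a unit normal directly; it is closely related to, but not identical with, solving the homogeneous system in Equation (4). The name `fit_plane` is hypothetical; the returned `(n, d)` corresponds to (a, b, c) = n in the plane equation a x + b y + c z + d = 0.

```python
import numpy as np


def fit_plane(points):
    """Fit n . x + d = 0 to (N,3) points, N >= 3; returns (n, d) with |n| = 1."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least spread of the centred points, i.e. the plane normal.
    _, _, Vt = np.linalg.svd(points - centroid, full_matrices=False)
    n = Vt[-1]
    return n, -n @ centroid
```

In use, the triangulated laser points from every board pose would be concatenated, e.g. with `np.vstack`, before a single call; the sign of `n` is arbitrary.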

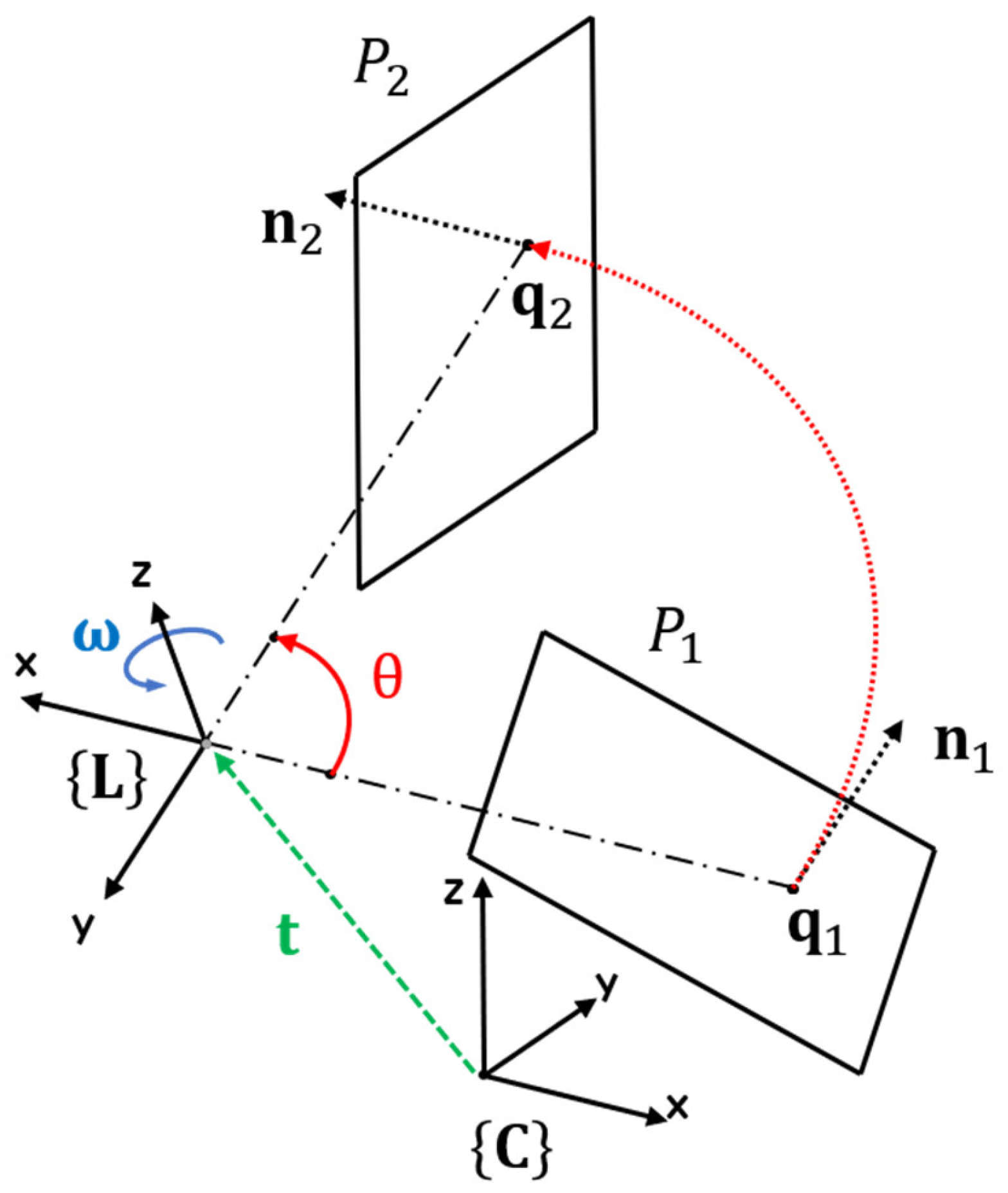

3.3. Camera to Rotating Laser Calibration

When the line laser rotates about the rotation axis of the motor, the plane created by the line laser rotates about the same axis. This means that the equation of the laser plane $\Pi(\theta)$ in the camera coordinates changes with the motor angle θ. The equation of $\Pi(\theta)$ depends on the direction $\boldsymbol{\omega}$ of the rotation axis and on the translation vector $\mathbf{t}$ of the line laser (a point on the axis), both expressed in the camera coordinates. The angle θ is obtained from the incremental encoder of the motor. If the line laser were fixed, the parameters of the plane equation would be constant; in our system, however, they depend on $\boldsymbol{\omega}$, $\mathbf{t}$, and θ because the line laser rotates about the rotation axis of the motor.

3.3.1. Find a Point on Rotating Axis

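To estimate the translation vector $\mathbf{t}$, the laser plane must be calculated at several rotation angles θ. The implicit equations of the set of planes obtained from $m$ poses can be written as Equation (5):

$$\mathbf{n}_i^\top(\mathbf{x} - \mathbf{p}_i) = 0, \qquad i = 1, \dots, m \tag{5}$$

where $\mathbf{n}_i$ is the unit normal vector and $\mathbf{p}_i$ is a point of the $i$-th plane.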

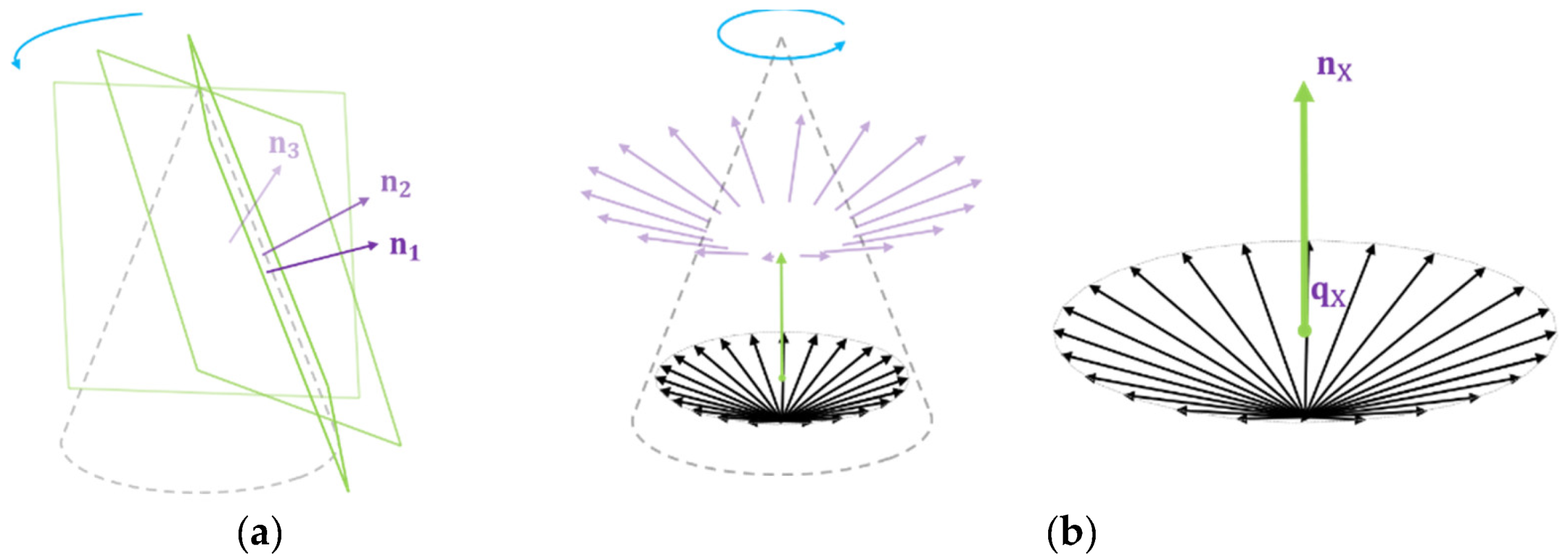

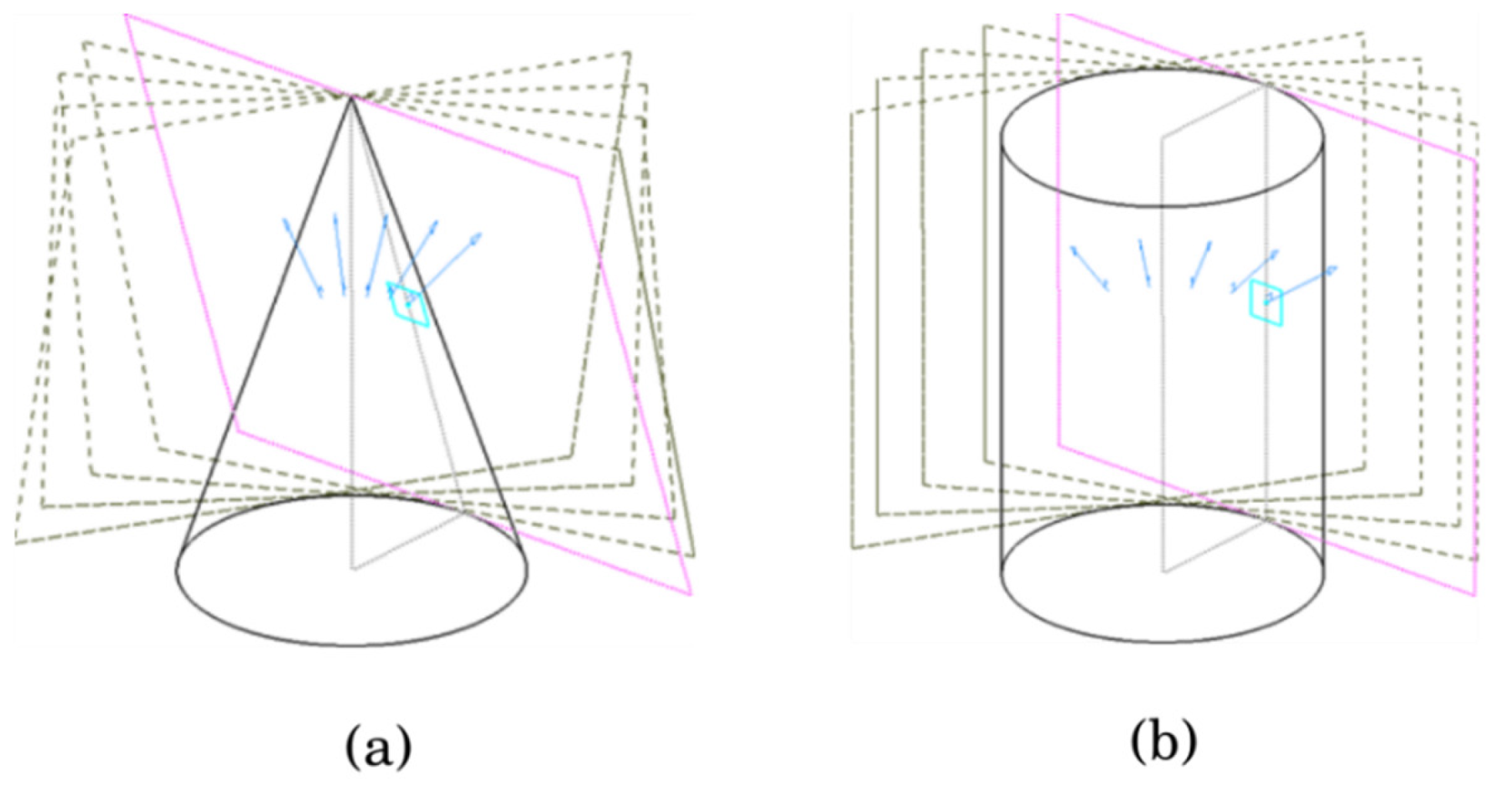

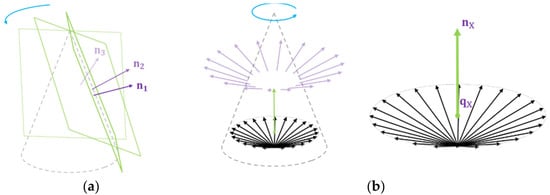

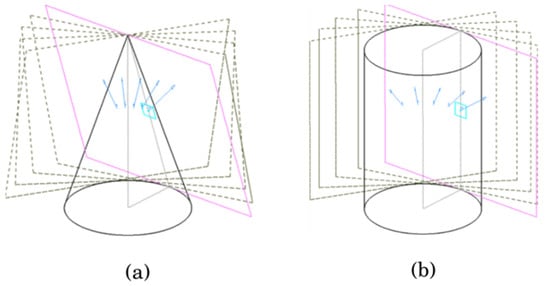

If a plane rotates about an arbitrary axis in 3D space, all of the rotated planes pass through a common intersection point, except in a specific case (Figure 7a): the point where the axis crosses the plane is left unchanged by the rotation. The exception is a plane parallel to the axis, which we discuss with the simulation in Section 4.1. This intersection point therefore also lies on the rotation axis of the motor. Because the plane parameters are noisy, we obtained the position vector $\mathbf{t}$ by the least-squares method, as represented by Equation (6):

$$\mathbf{t} = \arg\min_{\mathbf{x}} \sum_{i=1}^{m} \left(\mathbf{n}_i^\top(\mathbf{x} - \mathbf{p}_i)\right)^2 \tag{6}$$

Figure 7.

(a) A laser plane inclined to the rotation axis sweeps out a cone. (b) The normal vectors of the rotating plane form an inverted cone when their starting points are moved to a common point on the rotation axis.

This position vector corresponds to the translation from the camera coordinates to the laser coordinates. Geometrically, Equation (6) finds the common point that minimizes the distances to all planes: because each $\mathbf{n}_i$ is a unit vector, $|\mathbf{n}_i^\top(\mathbf{x} - \mathbf{p}_i)|$ is the length of the perpendicular from $\mathbf{x}$ to the $i$-th plane, which is the shortest distance from the point to that plane.
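Because each residual n_i·(x − p_i) is linear in x, the minimization in Equation (6) is an ordinary linear least-squares problem, N x = b with rows n_i and b_i = n_i·p_i. A minimal sketch (the name `common_point` is hypothetical):

```python
import numpy as np


def common_point(normals, points):
    """Least-squares common point of m planes n_i . (x - p_i) = 0.

    normals: (m,3) unit plane normals n_i; points: (m,3) one point p_i per plane.
    The normals must span 3D; they do not when every plane is parallel to the
    rotation axis (the cylinder case), and the system becomes ill-conditioned
    as the planes approach that case.
    """
    b = np.einsum("ij,ij->i", normals, points)   # b_i = n_i . p_i
    x, *_ = np.linalg.lstsq(normals, b, rcond=None)
    return x
```

The conditioning remark is the numerical counterpart of the degenerate case mentioned in the text: as the planes become parallel to the axis, their normals approach a single plane and the apex becomes poorly determined.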

3.3.2. Find Rotating Orientation

Similar to estimating the translation of the rotation axis, we used Equation (5) to estimate the direction $\boldsymbol{\omega}$ of the rotation axis. The parameters of each plane equation are $\mathbf{n}_i$ and $\mathbf{p}_i$, where $\mathbf{n}_i$ is the normal vector of the plane and $\mathbf{p}_i$ is a point on the plane. Because $\mathbf{n}_i$ is a free vector, its starting point can be moved freely. If the starting points of the normal vectors are moved to a common point on the rotation axis, the moved normal vectors form an inverted cone, as shown in Figure 7b.

As shown in Figure 7b, one end of each normal vector lies at the apex, and the other end lies on the base of the cone in 3D space. The shape of the cone varies with the position of the rotation axis and of the laser, but the central axis of the cone always coincides with the rotation axis of the motor.

The central axis of the cone is always parallel to the normal vector of the base of the cone. Finding the normal vector of the base is therefore equivalent to finding the direction vector of the motor's rotation axis. Let us denote the base plane of the inverted cone as $\Pi_b$, with unit normal $\boldsymbol{\omega}$ and offset $e$.

The rotation-axis direction is found from the collected planes as follows. We form a matrix whose rows are the normal vectors $\mathbf{n}_i^\top$ of the collected planes. Because all normals have unit length and make the same angle with the rotation axis, the tip of each normal vector lies on the base of the cone and therefore satisfies the equation of $\Pi_b$. With $m$ normal vectors, this can be expressed as

$$\boldsymbol{\omega}^\top \mathbf{n}_i + e = 0, \qquad i = 1, \dots, m$$

In matrix form, this becomes

$$\mathbf{A}\mathbf{w} = \begin{bmatrix} \mathbf{n}_1^\top & 1 \\ \vdots & \vdots \\ \mathbf{n}_m^\top & 1 \end{bmatrix} \begin{bmatrix} \boldsymbol{\omega} \\ e \end{bmatrix} = \mathbf{0}$$

Because the data are noisy, this equation holds only approximately, and an optimal solution is obtained as $\hat{\mathbf{w}} = \arg\min_{\mathbf{w}} \|\mathbf{A}\mathbf{w}\|^2$ subject to the constraint $\|\mathbf{w}\| = 1$. This minimizer is the right singular vector of $\mathbf{A}$ associated with its smallest singular value, and $\boldsymbol{\omega}$ is obtained by normalizing the first three components of $\hat{\mathbf{w}}$.
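The constrained least-squares step for the axis direction can be sketched with one SVD, assuming unit-length plane normals as input and the unit-norm constraint on the stacked vector w = (ω, e) described above. The name `axis_direction` is hypothetical, and the sign of the returned direction is arbitrary, since ω and −ω describe the same axis.

```python
import numpy as np


def axis_direction(normals):
    """Unit rotation-axis direction from (m,3) unit laser-plane normals, m >= 3.

    With their tails at a common point, the normal tips lie on the cone base plane
    omega . n + e = 0, so each row [n_i, 1] of A satisfies A @ [omega, e] ~ 0.
    The minimiser of ||A w|| with ||w|| = 1 is the last right singular vector of A.
    """
    A = np.column_stack([normals, np.ones(len(normals))])
    _, _, Vt = np.linalg.svd(A)     # full Vt (4 x 4), so the null-space row is present
    w = Vt[-1]
    return w[:3] / np.linalg.norm(w[:3])
```

The full (not reduced) SVD is used deliberately: with only three normals, the reduced form would drop exactly the row that spans the null space. The formulation also still works when every plane is parallel to the axis, because then e = 0 and the normals simply lie in the plane through the origin perpendicular to ω.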

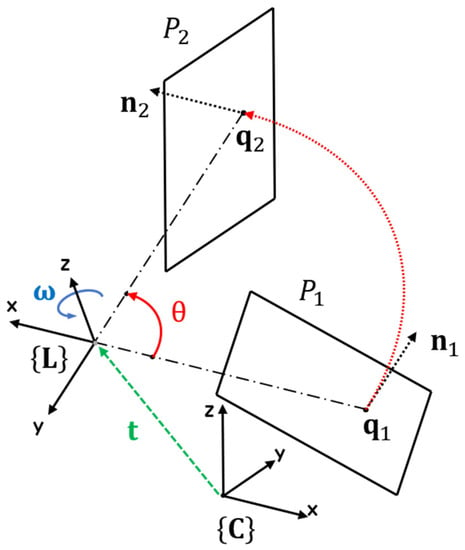

3.4. Rotation of Line Laser Plane around an Axis

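The plane created when the line laser rotates can also be determined from the transformation between the camera and the rotation axis. Because it is impractical to calibrate the camera against the laser plane every time the motor rotates by θ, the rotated laser plane is instead computed about the calibrated axis. As shown in Figure 8, plane $\Pi'$ is the new plane created when plane $\Pi$ rotates about an arbitrary axis of rotation. The plane is expressed by a normal vector $\mathbf{n}$ and a point $\mathbf{p}$ on the plane. Therefore, both the normal vector and the point on the plane must be rotated about the Z-axis of the laser coordinate system (i.e., the rotation axis of the motor). A vector in $\mathbb{R}^3$ can be rotated with Rodrigues' rotation formula. Applying the formula to $\mathbf{n}$ and to $\mathbf{p}$ (taken relative to the point $\mathbf{t}$ on the axis), we obtain the plane rotated by θ:

$$\mathbf{n}' = \mathbf{R}(\theta)\,\mathbf{n}, \qquad \mathbf{p}' = \mathbf{R}(\theta)\,(\mathbf{p} - \mathbf{t}) + \mathbf{t}$$

where

$$\mathbf{R}(\theta) = \mathbf{I} + \sin\theta\,[\boldsymbol{\omega}]_\times + (1 - \cos\theta)\,[\boldsymbol{\omega}]_\times^2$$

and $[\boldsymbol{\omega}]_\times$ is the skew-symmetric cross-product matrix of the unit axis direction $\boldsymbol{\omega}$.

Figure 8.

The shape and trajectory of the plane rotating by θ about an arbitrary axis (red).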
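A sketch of the plane update using Rodrigues' formula in matrix form, R = I + sin θ K + (1 − cos θ) K², where K is the cross-product matrix of the unit axis. The normal is rotated directly; the point is rotated about the axis through the calibrated point t (for example the cone apex). `rodrigues` and `rotate_plane` are hypothetical names, and θ is assumed to be measured relative to the encoder angle at which the reference plane (n, p) was calibrated.

```python
import numpy as np


def rodrigues(omega, theta):
    """Rotation matrix for angle theta (rad) about the unit axis omega."""
    kx, ky, kz = omega
    K = np.array([[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]])  # [omega]_x
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def rotate_plane(n, p, omega, t, theta):
    """Laser plane after the motor turns by theta about the axis (direction omega through t).

    n, p: normal and point of the reference plane in camera coordinates.
    Returns the rotated normal n' = R n and point p' = R (p - t) + t.
    """
    R = rodrigues(omega, theta)
    return R @ n, R @ (p - t) + t
```

During scanning, each frame's plane would come from one `rotate_plane` call with that frame's encoder angle, after which the illuminated pixels are back-projected and intersected with the plane as in Section 3.1.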

4. Results

To evaluate the calibration described in the previous section, we performed simulations and experiments. First, we evaluated the calibration accuracy in simulation as a function of α, the angle between the rotation axis of the line laser and the line laser plane. Then, we present two experimental results in a real environment. For comparison, we used a commercial depth camera (RealSense SR305, Intel, Santa Clara, CA, USA), which offers relatively high quality at short range. In the first experiment, we scanned a planar checkerboard and measured how far the resulting points were from that plane. In the second experiment, we show the scan results of various objects obtained with the two scanning systems.

4.1. Simulation

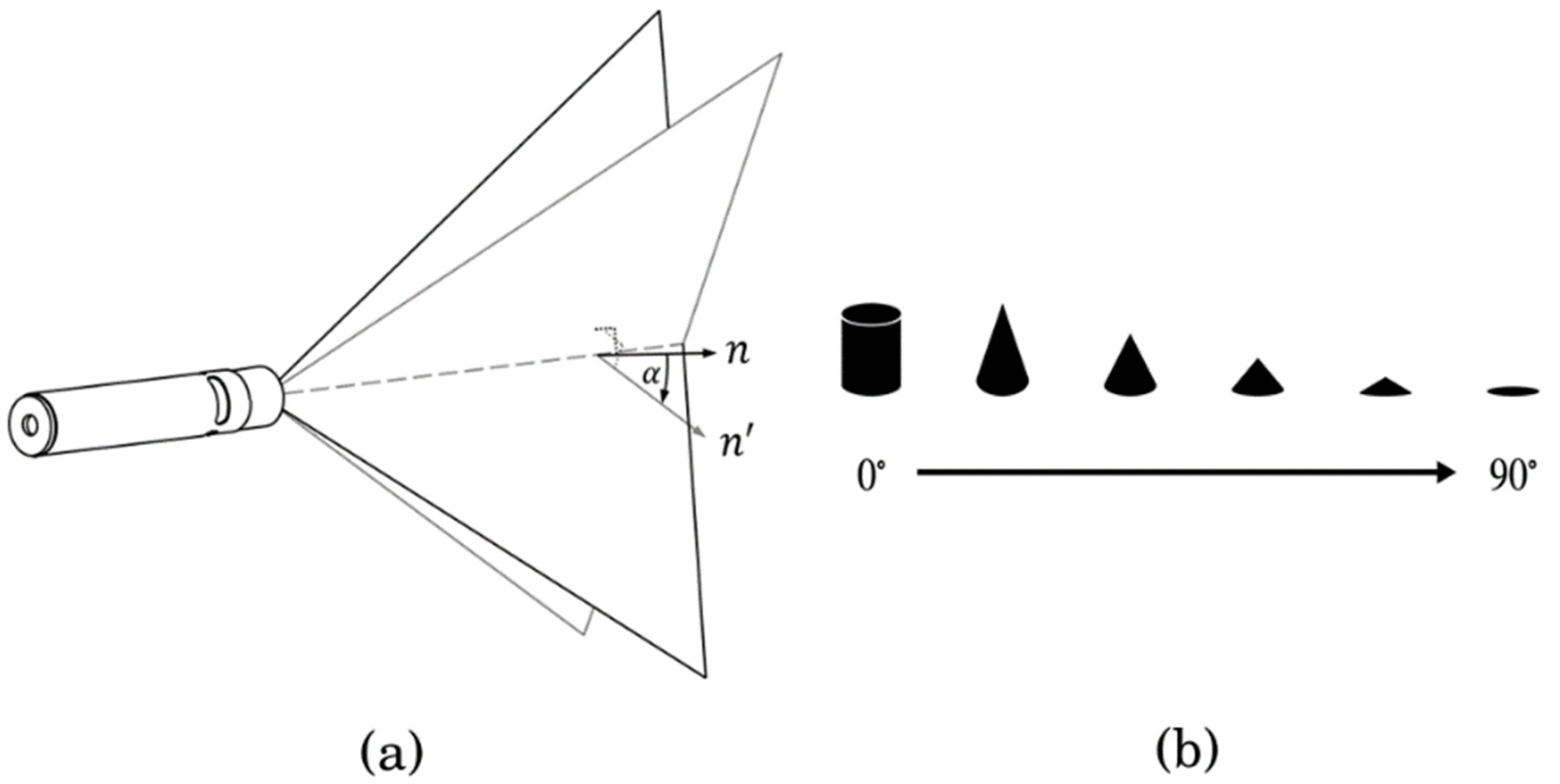

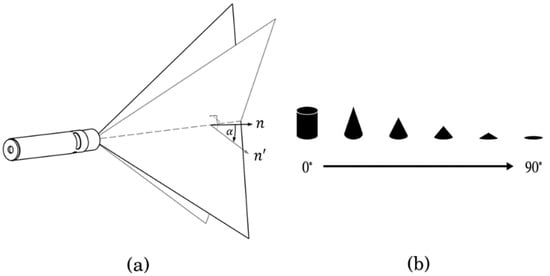

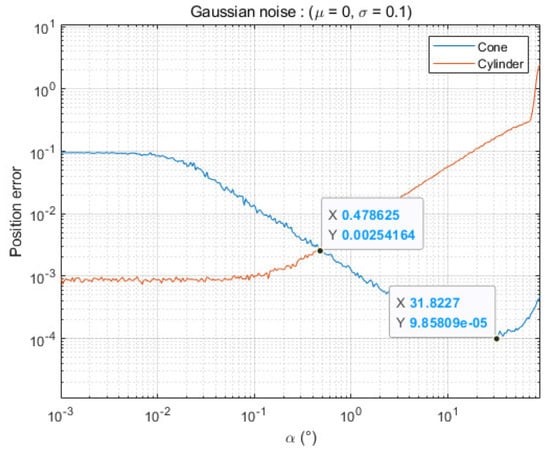

To obtain a depth map of the camera view, the line laser is rotated about the rotation axis. As shown in Figure 9, α determines the 3D shape to which the collected laser planes are tangent. For α less than 90°, this tangent shape is either a cone or a cylinder.

Figure 9.

Simulation conditions: (a) angle α between the axis of rotation and the plane generated by the line laser; (b) change of the shape as the angle increases.

As α approaches 0°, the cone becomes steeper. Therefore, for a constant base area, the intersection point (the apex) moves farther from the base as α approaches 0°. When α is 0°, the slope of the cone becomes infinite, which means that the intersection point cannot be determined. For this reason, we prepared a separate calibration method for models with very steep slopes, such as cylinders.
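For a concrete sense of this trend, assume the laser planes are tangent to a cone whose half-angle is α (the angle between each plane and the axis) and whose base circle has a fixed radius r. The height h from the base to the apex then follows from elementary trigonometry:

$$\tan\alpha = \frac{r}{h} \quad\Longrightarrow\quad h = \frac{r}{\tan\alpha}$$

With a hypothetical r = 10 mm, this gives h ≈ 17.3 mm at α = 30° but h ≈ 573 mm at α = 1°, and h grows without bound as α → 0°, which is why the apex cannot be determined in the cylinder case.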

If α is 0°, the laser planes envelop a cylinder instead of a cone as the line laser rotates about the axis of rotation. For this cylindrical case, shown in Figure 10b, the following method is used. Unlike in the cone case, the normal vectors of planes tangent to a cylinder lie in a 2D subspace perpendicular to the axis of rotation. We estimated this plane of normal vectors using principal component analysis (PCA) and computed the lines along which the collected laser planes intersect it. These lines are tangent to a circle whose center lies on the axis, and the center was estimated from them: because the center of a circle lies on the angle bisector of any two of its outer tangents, its distance to every such bisector is zero. Because of noise, we searched the intersections of multiple bisectors for the point with the minimum distance to the bisectors and took this point as a point on the central axis of the cylinder.
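The cylinder-case steps can be sketched as follows, with one deliberate substitution: instead of searching over angle bisectors, this sketch uses the equivalent noise-free property that consistently oriented tangent lines all lie at the same signed distance r from the circle center, which gives a linear least-squares problem m_i·c + r = c_i. It also assumes (not stated in the text) that the planes are ordered by encoder angle with steps well under 90°, so that neighboring normals can be flipped to a consistent orientation. `cylinder_axis_point` is a hypothetical name, and this is not the authors' implementation.

```python
import numpy as np


def cylinder_axis_point(normals, points):
    """Point on the cylinder axis, axis direction and radius from tangent laser planes.

    normals, points: (m,3) planes n_i . (x - p_i) = 0, ordered by encoder angle.
    """
    n = normals.astype(float).copy()
    # Consistent orientation: neighbouring planes (small angle steps) get similar normals.
    for i in range(1, len(n)):
        if n[i] @ n[i - 1] < 0:
            n[i] = -n[i]
    # PCA: the normals span (approximately) the 2D plane perpendicular to the axis.
    _, _, Vt = np.linalg.svd(n - n.mean(axis=0), full_matrices=False)
    u1, u2, omega = Vt
    # Intersect each laser plane with the cross-section spanned by u1, u2: 2D line m_i . c = c_i.
    m = np.column_stack([n @ u1, n @ u2])
    c = np.einsum("ij,ij->i", n, points)
    scale = np.linalg.norm(m, axis=1)
    m, c = m / scale[:, None], c / scale
    # Tangent lines with consistent orientation share the signed distance r to the centre.
    A = np.column_stack([m, np.ones(len(m))])
    (cx, cy, r), *_ = np.linalg.lstsq(A, c, rcond=None)
    return cx * u1 + cy * u2, omega, abs(r)
```

Flipping a normal does not change the plane it describes, so the orientation step only makes the signed distances comparable; the recovered radius is reported as a magnitude because the common orientation may point either toward or away from the axis.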

Figure 10.

A different shape of the set of planes, (a) Cone shape, (b) Cylinder shape.

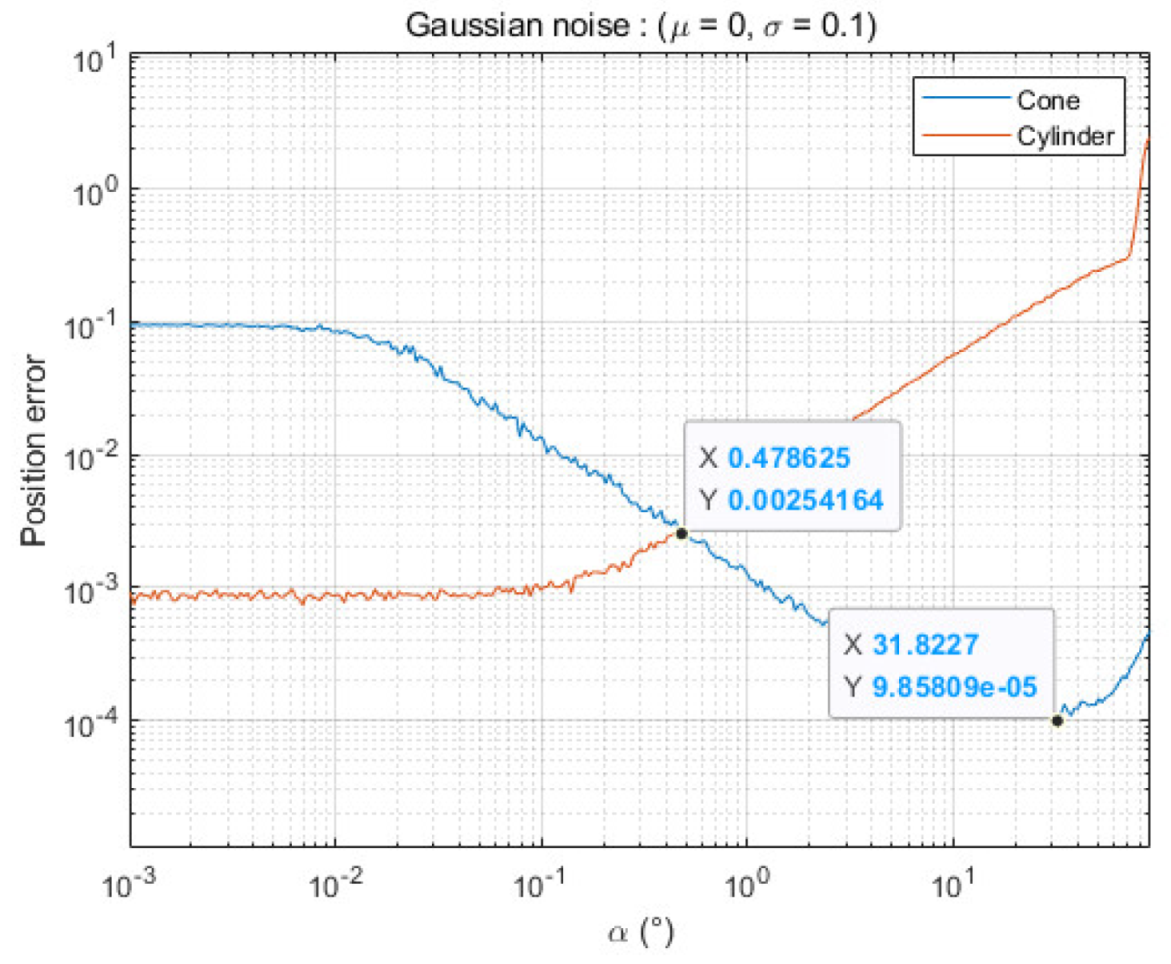

Projection onto 2D by PCA is exact only when the normal vectors of the tangent planes lie in a 2D subspace, that is, only in the special case α = 0°. The method can still be applied when α ≠ 0°, but the deviation from this assumption then becomes an error in the estimated rotation axis. Consequently, the estimation error of the cylinder model increases as α increases, as shown in Figure 11.

Figure 11.

Simulation results as a function of the angle α.

Although the points on the laser plane in camera coordinates are estimated from detections of the checkerboard and the line laser in the real experiment, we assumed in the simulation that these points were given. In addition, we added Gaussian noise with a standard deviation of 0.1 mm to emulate the noise of the real environment.

Because the ground truth is known in simulation, unlike in the actual experiments, the calibration error can be computed exactly. To verify the calibration accuracy as a function of α, we calculated the distance between the estimated point (the cone apex) and the true rotation axis of the line laser for different values of α.
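To make the simulation protocol concrete, the sketch below runs one trial: it rotates a reference laser plane, tilted by α from a hypothetical axis, to m encoder angles, samples points on each plane, adds Gaussian noise with σ = 0.1 mm, refits each plane, estimates the apex as the least-squares common point, and returns the apex's perpendicular distance to the true axis. Only the noise level and the error metric come from the text; the axis position, patch size and offset, and the numbers of planes and points are invented for illustration and are not the paper's simulation setup.

```python
import numpy as np


def rodrigues(omega, theta):
    """Rotation matrix for angle theta (rad) about the unit axis omega."""
    kx, ky, kz = omega
    K = np.array([[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def fit_plane(pts):
    """Total-least-squares plane through (N,3) points: unit normal and centroid."""
    c = pts.mean(axis=0)
    return np.linalg.svd(pts - c, full_matrices=False)[2][-1], c


def apex_error(alpha_deg, sigma=0.1, m=12, n_pts=200, seed=0):
    """One noisy trial: distance (mm) from the least-squares apex to the true axis."""
    rng = np.random.default_rng(seed)
    omega = np.array([0.0, 0.0, 1.0])               # hypothetical true axis direction
    a = np.array([20.0, -10.0, 300.0])              # hypothetical point on the axis (mm)
    al = np.radians(alpha_deg)
    n0 = np.array([np.cos(al), 0.0, -np.sin(al)])   # reference plane through a, tilted alpha from the axis
    e1 = np.array([0.0, 1.0, 0.0])                  # in-plane basis of the reference plane
    e2 = np.cross(n0, e1)
    normals, centroids = [], []
    for theta in np.linspace(0.0, 2.0 * np.pi, m, endpoint=False):
        R = rodrigues(omega, theta)
        u, v = R @ e1, R @ e2
        st = rng.uniform(-50.0, 50.0, size=(n_pts, 2))
        pts = a + 100.0 * v + st[:, :1] * u + st[:, 1:] * v   # 100 mm patch, offset from the apex
        pts = pts + rng.normal(0.0, sigma, pts.shape)         # isotropic Gaussian noise
        n_hat, c_hat = fit_plane(pts)
        normals.append(n_hat)
        centroids.append(c_hat)
    N, C = np.array(normals), np.array(centroids)
    # Least-squares common point of all planes: n_i . x = n_i . c_i
    apex, *_ = np.linalg.lstsq(N, np.einsum("ij,ij->i", N, C), rcond=None)
    d = apex - a
    return float(np.linalg.norm(d - (d @ omega) * omega))   # perpendicular distance to the axis
```

Sweeping `alpha_deg` and averaging over seeds would reproduce the kind of curve reported in Figure 11 for this invented geometry only; the numbers are not expected to match the paper's.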

Simulation results show that if the angle α is greater than 0.47°, the cone-based method outperforms the cylinder-based method (Figure 11). Hence, the cylinder model is adequate if the angle between the rotation axis and the laser plane can be adjusted precisely; however, if the misalignment exceeds about 1°, our cone model gives better results. Furthermore, the results show that, under our noise model, the estimation error is lowest at a particular value of α (Figure 11). For this reason, we set α to approximately 30° in our scanning system for the real experiments.

4.2. Real Experiments

In this experiment, we compared our system with the SR305 camera, whose depth maps are relatively precise compared with those of other RGB-D cameras. Two experiments were performed, with the two scanning systems fixed together on the same plate. First, we evaluated the planarity of the checkerboard plane estimated after calibration between the line laser and the camera. Second, we compared the quality of scans of various objects and their surroundings.

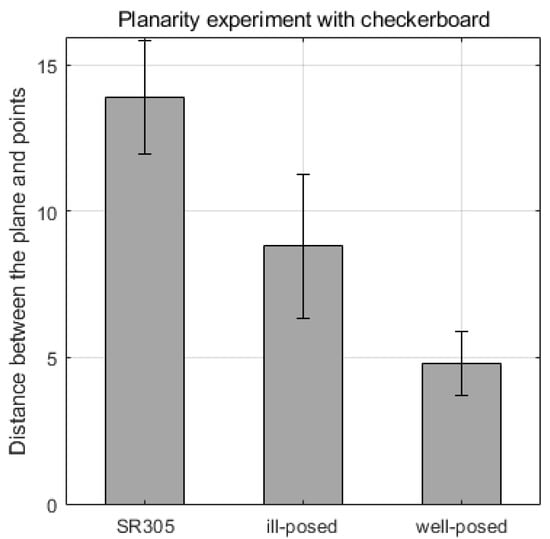

4.2.1. Planarity of the Plane

Because it is flat, the checkerboard is suitable for evaluating the performance of depth estimation. Moreover, the checkerboard pattern makes it easy to define a reference coordinate frame with respect to the camera coordinates. Because the origin of each scanning system is also its camera coordinate frame, the planarity error is easy to calculate. We therefore scanned the checkerboard plane with both scanning systems. Because it is difficult to have only the checkerboard in the field of view, we removed outlier points from the results using a point cloud tool such as MeshLab. To evaluate planarity, each camera estimated the board pose in world coordinates using its intrinsic parameters. We calculated the distance between the reference plane and each estimated point on the checkerboard using Equation (9):

$$\delta_i = \left|\mathbf{n}_b^\top(\mathbf{x}_i - \mathbf{p}_b)\right| \tag{9}$$

where the unit normal $\mathbf{n}_b$ and the point $\mathbf{p}_b$ of the checkerboard plane are obtained with the perspective-n-point (PnP) algorithm, and the points $\mathbf{x}_i$ are obtained by depth estimation with each scanning system.
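The planarity statistic can be sketched directly from a board pose and a scanned point cloud. This assumes, for illustration, that the PnP pose (R, t) maps board coordinates to camera coordinates and that the board lies in its own z = 0 plane, so the board normal in camera coordinates is the third column of R and t is a point on the board; `planarity_error` is a hypothetical name.

```python
import numpy as np


def planarity_error(points_cam, R, t):
    """Mean and standard deviation of point-to-plane distances to the checkerboard.

    points_cam: (N,3) scanned points in camera coordinates (outliers already removed).
    R, t: board-to-camera pose from PnP, with the board assumed to lie in its z = 0 plane.
    """
    n_b = R[:, 2]                          # board normal in camera coordinates
    dist = np.abs((points_cam - t) @ n_b)  # |n_b . (x_i - p_b)| with p_b = t
    return dist.mean(), dist.std()
```

The mean and (population) standard deviation returned here are the two statistics plotted in Figure 14; whether the paper used the population or sample standard deviation is not stated.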

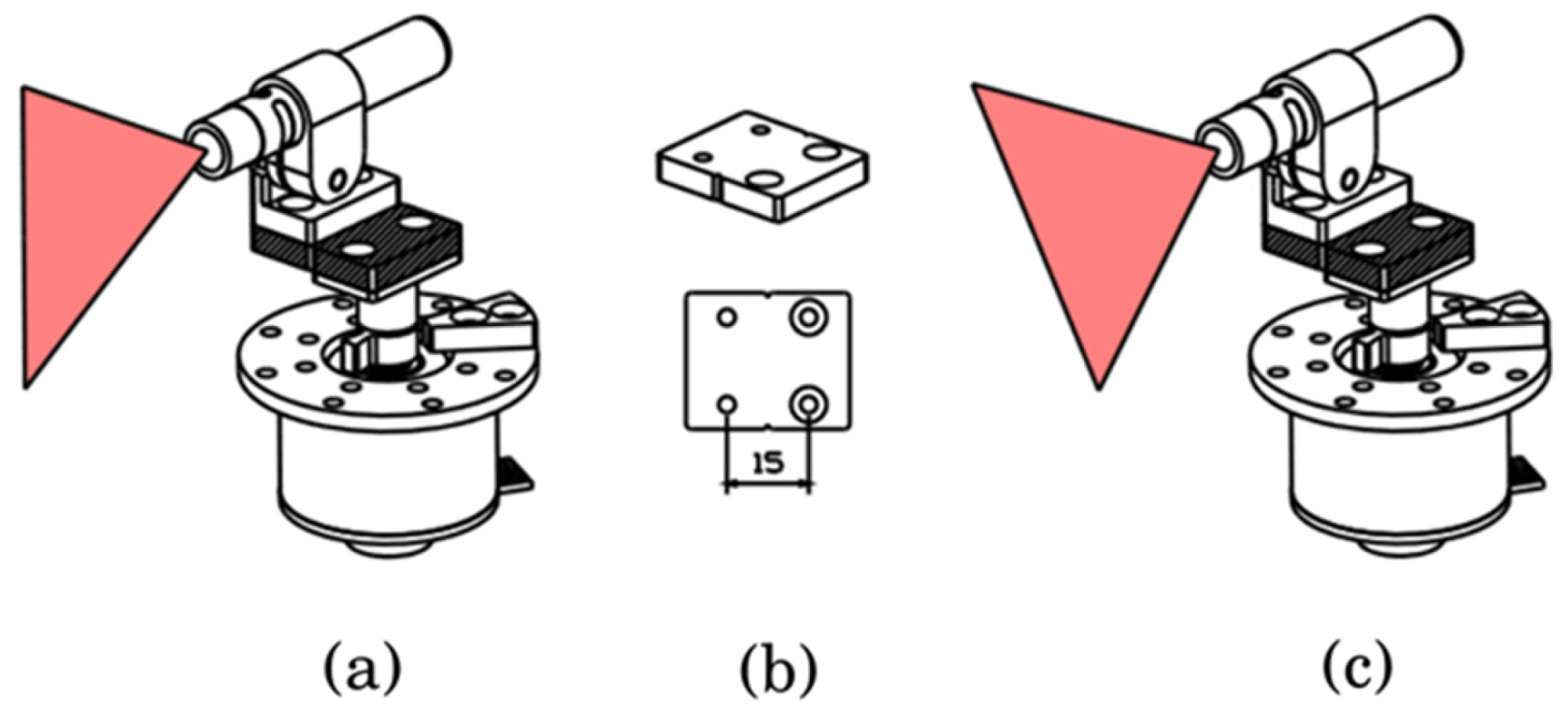

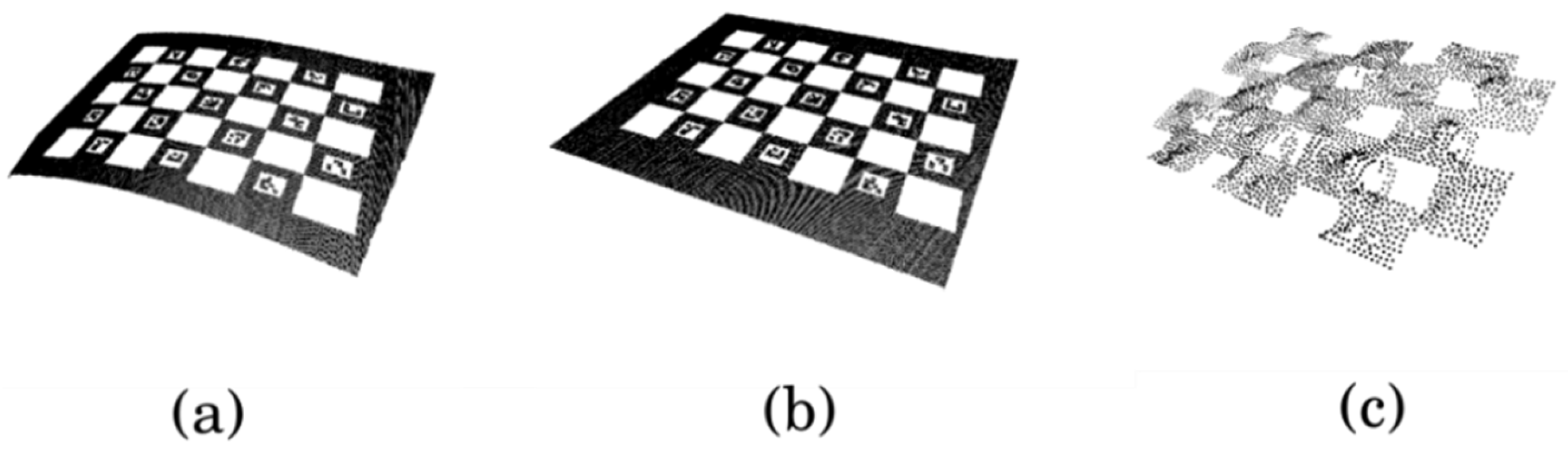

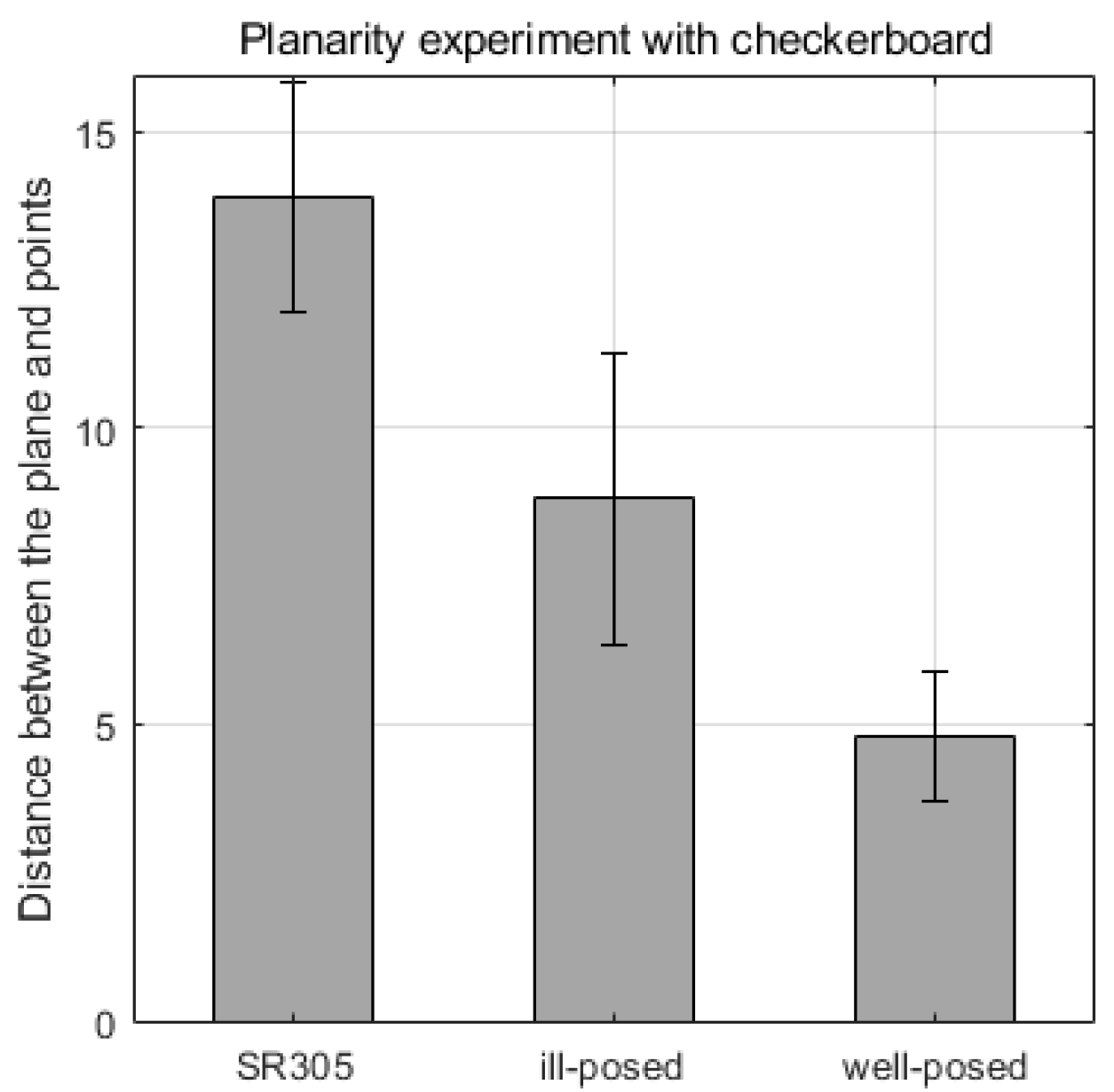

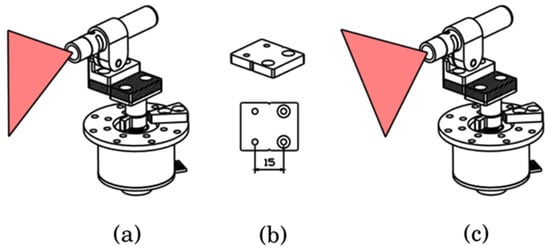

Meanwhile, to verify the effect of the angle α between the rotation axis and the line laser observed in the simulation, we experimented with two values of α, an ill-posed and a well-posed condition, as shown in Figure 12a,c. As shown in Figure 12b, the line laser was displaced 15 mm from the axis of rotation, to make the difference in accuracy with respect to α easy to observe and to confirm the robustness of the calibration to design errors. Figure 13 shows the 3D reconstructions of the checkerboard obtained with each method, and Figure 14 shows the mean and standard deviation of the distances between the points and the plane. Table 1 compares the 3D reconstruction performance of our system and the SR305. Because our system can measure relatively large distances, its scan volume is far larger than that of the SR305.

Figure 12.

Experiment setup: (a) ill-posed condition, (b) displacement part, and (c) well-posed condition.

Figure 13.

Results of 3D reconstruction: (a, b) our system at the two tested values of α, and (c) SR305.

Figure 14.

Distance errors from the checkerboard plane to every point.

Table 1.

Comparison between our system and the commercial sensor (SR305).

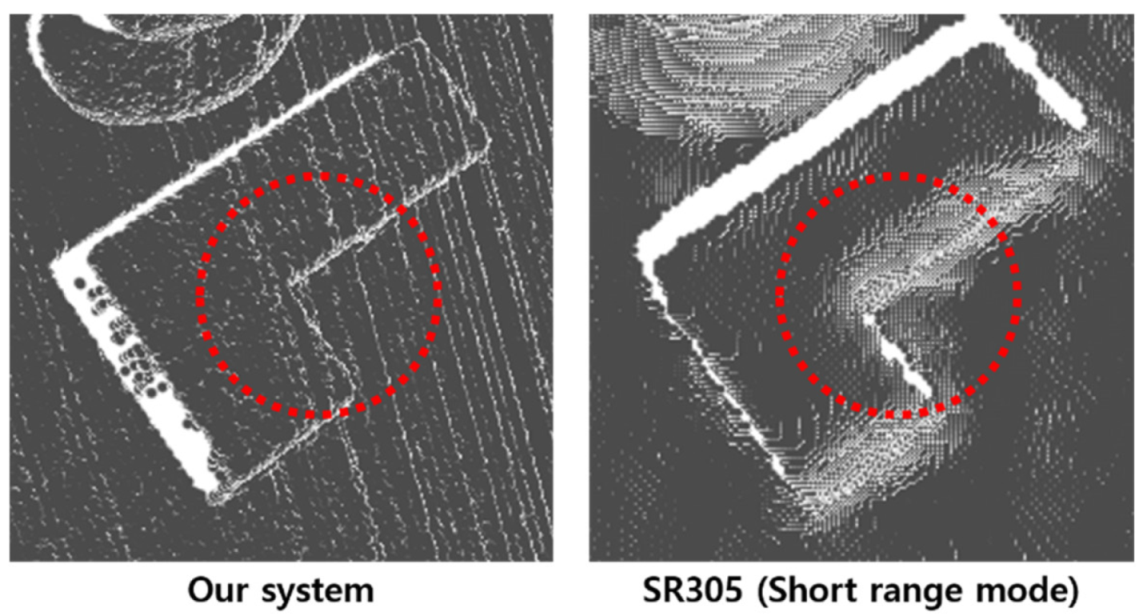

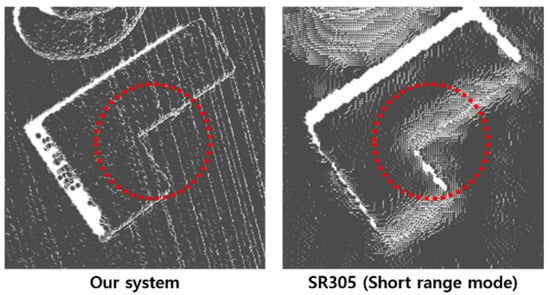

4.2.2. Scene Reconstruction

In addition to the quantitative assessment above, we compared qualitative reconstruction results for objects of various shapes using the two scanning systems. As shown in Figure 15, we scanned multiple hand-held items, such as a tennis ball and a teddy bear. Among the scanned objects, the results for the orange cube with sharp edges differed significantly between the two sensors, as depicted in Figure 16.

Figure 15.

Three-dimensional reconstruction of multiple objects with the background.

Figure 16.

Comparison of the scans of a cube object obtained with the two devices.

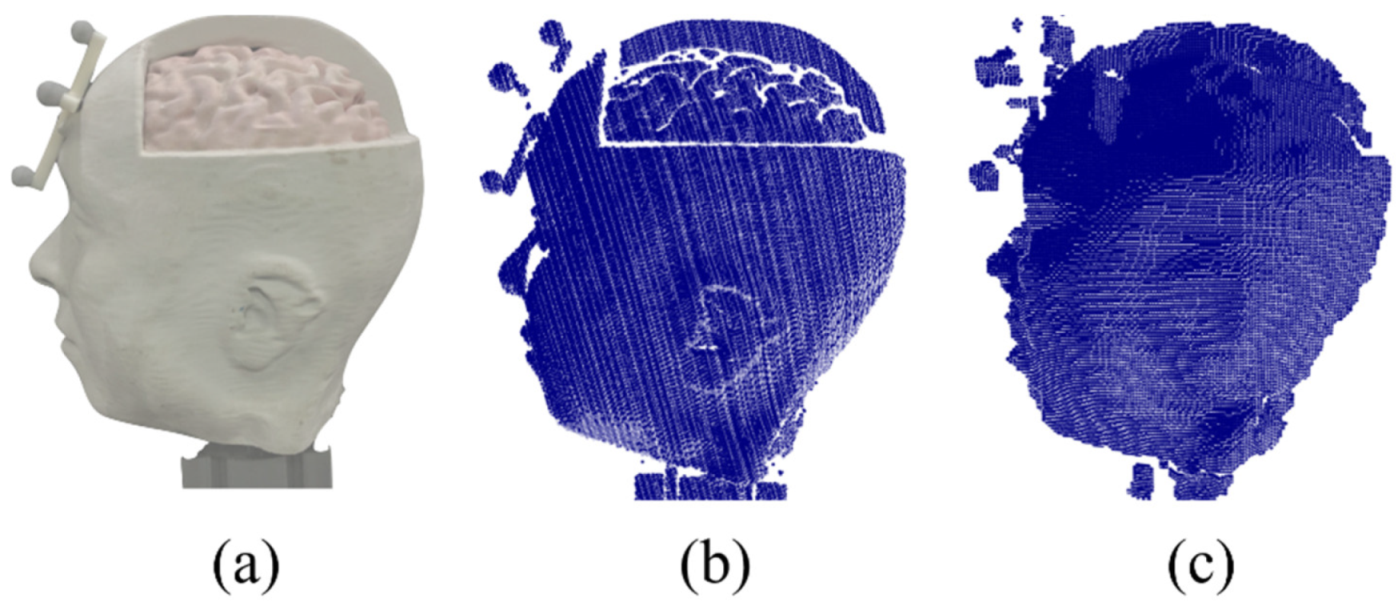

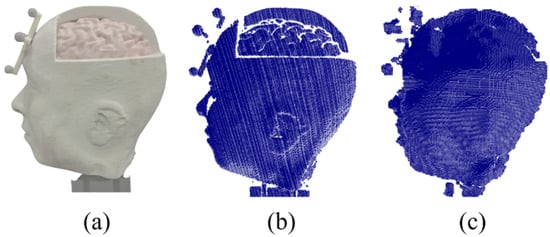

As depicted in Figure 17a, a human plaster figure with complicated features is appropriate for a qualitative comparison; its size was similar to that of a human head. As Figure 17b,c show, the surface regions reconstructed by the two scanners differ. Unlike the SR305, our system captured the curvature of the object relatively well.

Figure 17.

Comparison of the 3D reconstruction of a human plaster figure: (a) original, (b) our system, and (c) SR305.

5. Conclusions

We developed a 3D scanning system for wide-area scanning using a rotating line laser and a camera. To reconstruct an object, the transformation between the camera coordinates and the plane of the line laser must be acquired, and the plane of the line laser with respect to the camera coordinates must be determined at every rotation angle. In this study, we proposed a novel calibration method to determine the relationship between the rotation axis of the line laser and the camera, in which the center of the cone or cylinder shape generated while the line laser rotates is calculated. In the simulation study, we evaluated the accuracy of the proposed calibration using the two models. The simulation shows that the proposed cone model is superior to the simple cylinder model if the angular alignment error is greater than approximately 0.5°. This means that, in most cases, the proposed cone model gives better results, because the misalignment between the laser plane and the rotation axis is larger than this value in practice. Finally, we evaluated the 3D reconstruction performance of our system through real experiments and compared the results with those of a commercial RGB-D camera. The reconstruction quality of our system was far superior to that of the RGB-D camera, and our system can acquire depth maps of objects at greater distances than the RGB-D camera. Our future work will include the calibration of multiple line lasers to improve the scanning speed and 3D object recognition using this improved depth information.

Author Contributions

Conceptualization, J.L. and S.L.; methodology, J.L.; software, J.L.; validation, J.L. and H.S.; investigation, H.S. and J.L.; writing—original draft preparation, H.S. and J.L.; writing—review and editing, S.L.; supervision, S.L.; project administration, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the Technology Innovation Program (20001856) funded by the Ministry of Trade, Industry & Energy, and also supported in part by the grant (21NSIP-B135746-05) from the National Spatial Information Research Program (NSIP) funded by the Ministry of Land, Infrastructure and Transport of the Korean government.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Blais, F. Review of 20 years of range sensor development. J. Electron. Imaging 2004, 13, 231–243.

- Brown, G.M.; Chen, F.; Song, M. Overview of three-dimensional shape measurement using optical methods. Opt. Eng. 2000, 39, 10–22.

- Godin, G.; Beraldin, J.-A.; Taylor, J.; Cournoyer, L.; Rioux, M.; El-Hakim, S.; Baribeau, R.; Blais, F.; Boulanger, P.; Domey, J.; et al. Active optical 3D imaging for heritage applications. IEEE Comput. Graph. Appl. 2002, 22, 24–36.

- Rambach, J.; Pagani, A.; Schneider, M.; Artemenko, O.; Stricker, D. 6DoF Object Tracking based on 3D Scans for Augmented Reality Remote Live Support. Computers 2018, 7, 6.

- Siekański, P.; Magda, K.; Malowany, K.; Rutkiewicz, J.; Styk, A.; Krzesłowski, J.; Kowaluk, T.; Zagórski, A. On-Line Laser Triangulation Scanner for Wood Logs Surface Geometry Measurement. Sensors 2019, 19, 1074.

- Tang, Y.; Li, L.; Wang, C.; Chen, M.; Feng, W.; Zou, X.; Huang, K. Real-time detection of surface deformation and strain in recycled aggregate concrete-filled steel tubular columns via four-ocular vision. Robot. Comput. Manuf. 2019, 59, 36–46.

- Tang, Y.; Chen, M.; Wang, C.; Luo, L.; Li, J.; Lian, G.; Zou, X. Recognition and Localization Methods for Vision-Based Fruit Picking Robots: A Review. Front. Plant Sci. 2020, 11, 510.

- Kjaer, K.H.; Ottosen, C.-O. 3D Laser Triangulation for Plant Phenotyping in Challenging Environments. Sensors 2015, 15, 13533–13547.

- Khoshelham, K.; Elberink, S.O. Accuracy and Resolution of Kinect Depth Data for Indoor Mapping Applications. Sensors 2012, 12, 1437–1454.

- Schlarp, J.; Csencsics, E.; Schitter, G. Optical Scanning of a Laser Triangulation Sensor for 3-D Imaging. IEEE Trans. Instrum. Meas. 2020, 69, 3606–3613.

- Zeng, Y.; Yu, H.; Dai, H.; Song, S.; Lin, M.; Sun, B.; Jiang, W.; Meng, M.Q.-H. An Improved Calibration Method for a Rotating 2D LIDAR System. Sensors 2018, 18, 497.

- Li, G.; Liu, Y.; Dong, L.; Cai, X.; Zhou, D. An Algorithm for Extrinsic Parameters Calibration of a Camera and a Laser Range Finder Using Line Features. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 3854–3859.

- Vilaça, J.L.; Fonseca, J.C.; Pinho, A.M. Calibration procedure for 3D measurement systems using two cameras and a laser line. Opt. Laser Technol. 2009, 41, 112–119.

- Naroditsky, O.; Patterson, A.; Daniilidis, K. Automatic alignment of a camera with a line scan LIDAR system. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3429–3434.

- Kwak, K.; Huber, D.F.; Badino, H.; Kanade, T.; Hand, A.; Friedl, W.; Chalon, M.; Reinecke, J.; Grebenstein, M. Extrinsic calibration of a single line scanning lidar and a camera. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1366–1372.

- Geiger, A.; Moosmann, F.; Car, O.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

- Vasconcelos, F.; Barreto, J.P.; Nunes, U.J.C. A Minimal Solution for the Extrinsic Calibration of a Camera and a Laser-Rangefinder. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2097–2107.

- Moghadam, P.; Bosse, M.; Zlot, R. Line-based extrinsic calibration of range and image sensors. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3685–3691.

- Park, Y.; Yun, S.; Won, C.S.; Cho, K.; Um, K.; Sim, S. Calibration between Color Camera and 3D LIDAR Instruments with a Polygonal Planar Board. Sensors 2014, 14, 5333–5353.

- Levinson, J.; Thrun, S. Automatic Online Calibration of Cameras and Lasers. Robot. Sci. Syst. 2016, 9.

- Kang, J.; Doh, N.L. Full-DOF Calibration of a Rotating 2-D LIDAR with a Simple Plane Measurement. IEEE Trans. Robot. 2016, 32, 1245–1263.

- Dong, W.; Isler, V. A Novel Method for the Extrinsic Calibration of a 2D Laser Rangefinder and a Camera. IEEE Sens. J. 2018, 18, 4200–4211.

- Lanman, D.; Taubin, G. Build your own 3D scanner. In ACM SIGGRAPH 2009 Courses (SIGGRAPH ’09); ACM: New York, NY, USA, 2009; pp. 1–94.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).